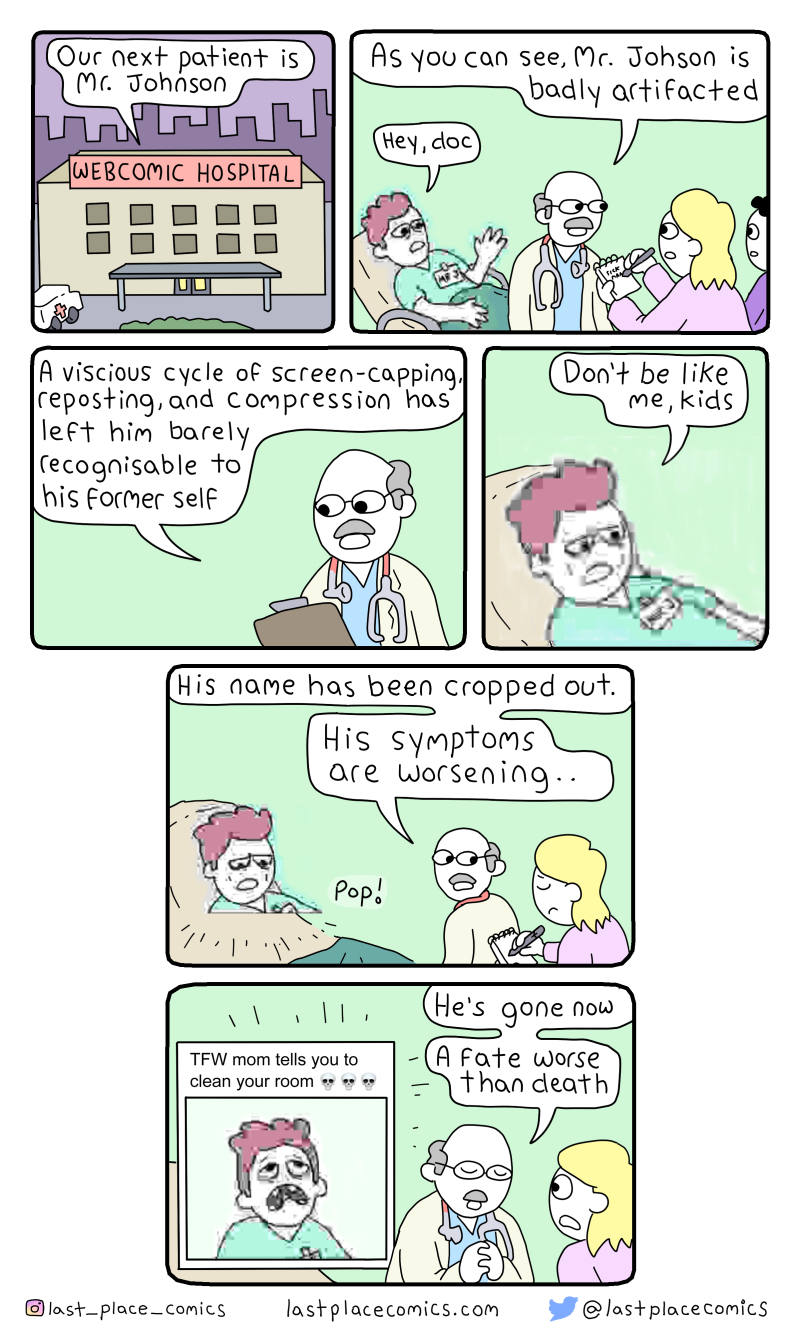

The post Artifacting Man appeared first on Last Place Comics.

The post Artifacting Man appeared first on Last Place Comics.

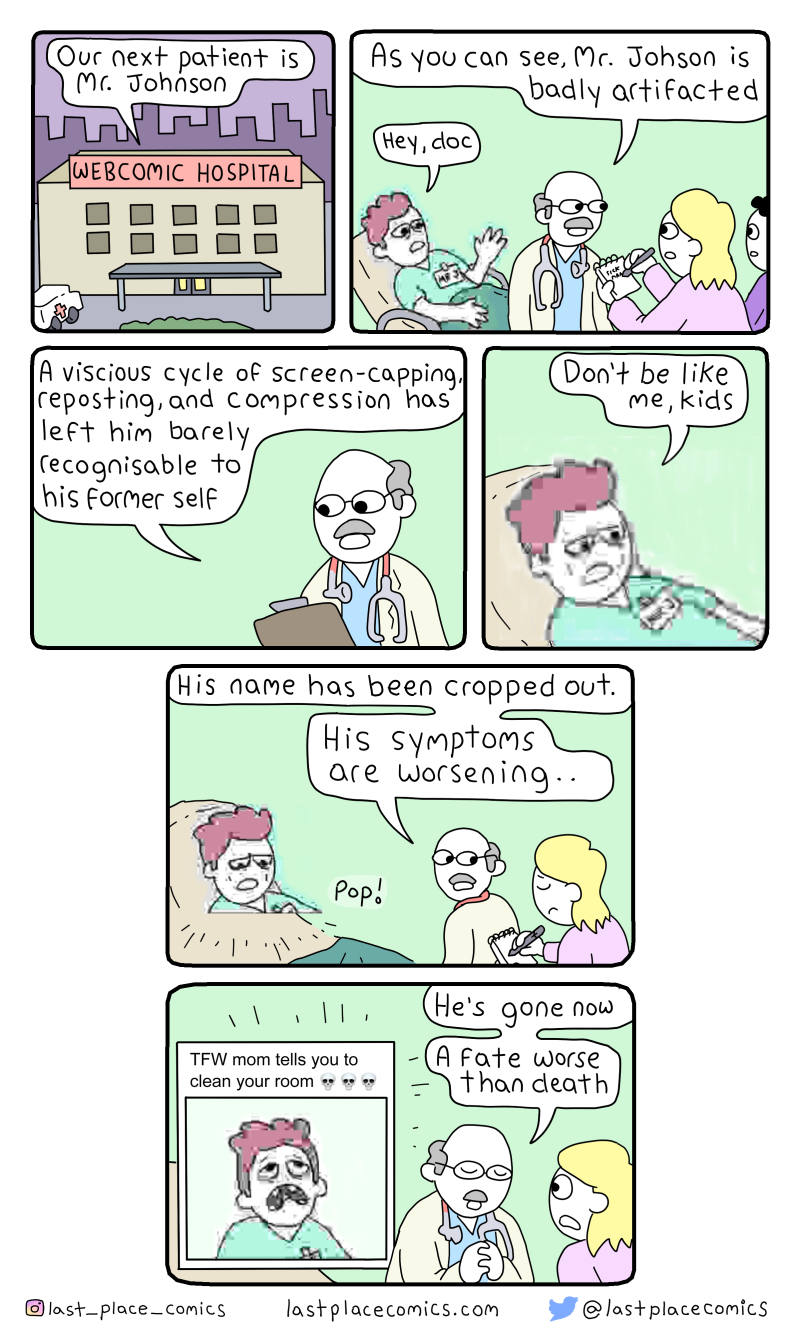

The post Main Character appeared first on Last Place Comics.

Adam Victor BrandizziI guess eventually you'll get to ask "What the heck are protos?"

When Martin Gusinde was ordained as a priest in Germany in 1911, he hoped to travel to New Guinea to work as a missionary among exotic tribes. Instead, his superiors sent him to Chile to teach at the German school in Santiago. Within a few years, however, he found his calling at Chile’s Museum of Ethnology and Anthropology, carrying out expeditions to Tierra del Fuego in the far south of Chile and Argentina. Gusinde’s haunting photographs of the Selk’nam, Yamana, and Kawésqar peoples—now collected and published in The Lost Tribes of Tierra del Fuego—present a way of life that was already on the brink of extinction when he visited the region in 1918–1924 and that has since ceased to exist.

Gusinde first went to Isla Grande de Tierra del Fuego in December of 1918, filled with (in his own words) “indescribable enthusiasm” and “youthful dreams” of an encounter with archaic tribes. The young Charles Darwin, on his famous voyage to South America in 1832, was shocked by the primitive state of Isla Grande natives: “One can hardly make oneself believe that they are fellow-creatures, and inhabitants of the same world,” he wrote.

Yet when Gusinde arrived in the mission settlement of La Candelária, his illusions were “shattered” and “demolished.” By that time, the once populous Selk’nam (some 4,000 inhabited the region in the 1880s) had been reduced to a few hundred living in poverty around the mission. In addition to the fatal scourge of measles and smallpox that decimated other Amerindian groups, the Selk’nam were singled out in the 1890s for a campaign of genocide: Romanian engineer Julius Popper paid bounties for Selk’nam heads and ears and organized hunting parties to clear them from the territory to make way for miners and ranchers.

Photography was an important aspect of Gusinde’s scientific and humanistic endeavor, and The Lost Tribes of Tierra del Fuego is the first book to address this aspect of his work in its own right. Comparing his field notes with the 1,200 images preserved in mission archives and digitized by the book’s editors, it became clear that many of his photographs did not so much record daily life and ritual, but rather, reconstructed, recreated, and reenacted traditions that had already been abandoned. “As these people disappear, so does their uniqueness,” he wrote. “The most urgent thing, right now, is to save what is left.”

There is something bewitchingly surreal about his photographs of the Hain initiation ceremony, in which young Selk’nam men are hazed by a pantheon of spirits that are revealed, in the final moments (forbidden to women), to be kinsmen in elaborate masks. Several photos show naked male figures standing barefoot in the snow, their bodies painted in bold white stripes on dark ochre and wearing eerie, phallic headdresses. An image of a snowy field strewn with corpse-like forms—according to the caption, initiates enacting a passage through the underworld—evokes uncanny echoes of the actual Selk’nam genocide. White bone-dust covering the skin and conical masks of Kawésqar initiates gives them a spectral, hallucinatory quality.

Another series of photographs depicts traditional Selk’nam clothing, archery, dwellings, and wrestling. Despite their staged, documentary nature, these images stand out nonetheless as intensely intimate and personal, each individual identified by full name and kinship in Gusinde’s detailed field notes, which inform the book’s captions. His portraits especially reveal a tension between Gusinde’s ethnographic training and his humanistic (and artistic) instincts. A few sad photos demonstrate the poverty of these peoples’ contemporary lives in shacks around missions.

Gusinde was one of the first twentieth-century ethnographers to break with the tradition of detached observation and identify personally with the societies he studied: he himself was initiated into the secretive Selk’nam and Yamana rites of manhood. In 1923 he photographed the last Hain ritual before the Selk’nam were decimated by a final wave of measles and forced to assimilate.

The last fluent Selk’nam speakers died in the 1980s, and Herminia Vera, who spoke the language as a child, lived until 2014: at ninety-one, she was born the same year Gusinde photographed the final Hain ceremony documented in this book. But Joubert Yanten, a linguistically talented mestizo man, has sought to encourage a cultural revival. When he discovered as a teenager that he was descended from the Selk’nam, he set out to learn the language. Adopting the tribal name Keyuk, he studied a 1915 lexicon and listened to recordings made by anthropologist Anne Chapman in the 1970s. Now Keyuk is a media star in Chile, composing lyrics in Selk’nam and performing with an “ethno-electronic” band.

In a recent interview with The New Yorker Keyuk explains the etymology of the group’s name: “The word ‘Selk’nam’ can mean ‘We are equal,’… though it can also mean ‘we are separate.’” Gusinde’s camera captures the essence of this fundamental enigma of the ethnographic encounter.

The Lost Tribes of Tierra Del Fuego is published in English by Thames & Hudson, and in French and Spanish by Editions Xavier Barral.

Adam Victor BrandizziA great peace from back then. In hindsight, I just wonder if it would be better becasue there are people actively against any restriction. But that's the best approach.

For about two months now, Americans have been told the same thing over and over again by public-health officials and influencers everywhere: Stay home to stop the spread of the coronavirus.

The message is true. If we all stay hermetically sealed in our homes for long enough, the virus will die out; if we don’t, it will linger. But framing the message in such a stark way may inadvertently encourage people to make worse choices. A less extreme, more nuanced, set of recommendations may get more traction—perhaps a public-health equivalent of “Reduce, reuse, recycle.” Something like “Stay close to home, keep your distance, wash your hands,” but catchier.

I think a lot about this public-health balancing act in other contexts, mostly pregnancy and parenting. For example, I’ve written about safe-sleep messaging. Doctors tell parents to put babies to sleep alone in a crib, on their back. This is indeed optimal. But the messaging doesn’t allow for parents to understand—if this is simply impossible—what the next-best alternative might be. Are all other sleeping situations equally risky? It turns out they are not.

One of the most dangerous sleeping arrangements for infants is on a sofa with a parent. This is far more hazardous than in bed with a parent, especially if the bed contains no pillows or blankets. If health professionals communicate “Everything other than alone-in-crib is extremely dangerous,” parents may end up on the sofa, possibly because they’re trying to avoid falling asleep in the bed. This can have tragic consequences. It would be better to communicate that while the safest sleeping arrangement is alone-in-crib, bed-sharing is much safer than sofa-sharing.

COVID-19 messaging has sometimes had a similar flavor, whether it comes from civic leaders or friends and family. Just stay home! Everything else is risky. One mother wrote me to say that she’d mentioned on Facebook that she had taken her child out for a walk—and people shamed her. Some people are getting paranoid. They’re afraid to go to the park, because they can’t stop thinking about all the what-ifs. What if a sick person breathes on a plant and then a few seconds later you happen to touch it and then scratch your nose under your mask and then you get sick? This is not completely impossible, but it is extremely unlikely.

When people are advised that one very difficult behavior is safe, and (implicitly or not) that everything else is risky, they may crack under the pressure, or throw up their hands. That is, if people think all activities (other than staying home) are equally risky, they figure they might as well do those that are more fun. If taking a walk at a six-foot distance from a friend puts me at very high risk, why not just have that friend and a bunch of others over for a barbecue? It’s more fun. This is an exaggeration, of course, but different activities carry very different risks, and conscientious civic leaders should actively help people choose among them.

Stark messaging may also discourage people from taking reasonable precautions. Public-health officials tell people to wash their hands and wear masks. But because the above-the-fold message is “Just stay home,” people may struggle to understand the purpose of these other pieces of advice. If the only truly safe thing to do is stay home, then how should I think about the mask suggestion? Is it a futile gesture, like putting a Band-Aid on a gunshot wound? (In fact, mask wearing and hand-washing are both important steps people can take to lower their risk of getting and transmitting the virus. Neither of these lowers your risk to zero, but they affect it a lot.)

As the economy reopens, it makes sense to communicate a range of risks, and to speak in terms of probabilities rather than in binaries. Staying home and not seeing anyone is the safest way to avoid the virus. But seeing people at a distance with masks on is safer than a spring-break party. Grocery shopping can be made safer with a mask, and with appropriate hand-washing protocols. If you see your older parents, don’t hug them, and consider choosing an outdoor venue.

One way the pandemic differs from the sleep example is externalities—the fact that our actions affect other people. If I go out and risk a chance of infection, I may then hurt others. But if we as a society keep the bar for correct behavior very high (“Just stay home”), and then suggest that not reaching the bar is shameful (“How dare you take a walk with your daughter?”), we may create a situation where people who suspect that they have the virus fail to act responsibly (by telling contacts they have it and then isolating themselves) in order to avoid stigma. The message ought to be that people may get infected even if they take precautions, and that having the coronavirus isn’t some kind of moral failing.

Ultimately, the public needs to better understand the virus. People need to see, for example, why hand-washing matters—because even if you get a virus particle on your hand, if you wash it off before you touch your face, you will not become infected. People need to be less afraid but more careful. Stark messaging can’t bring that about, but smart messaging can.

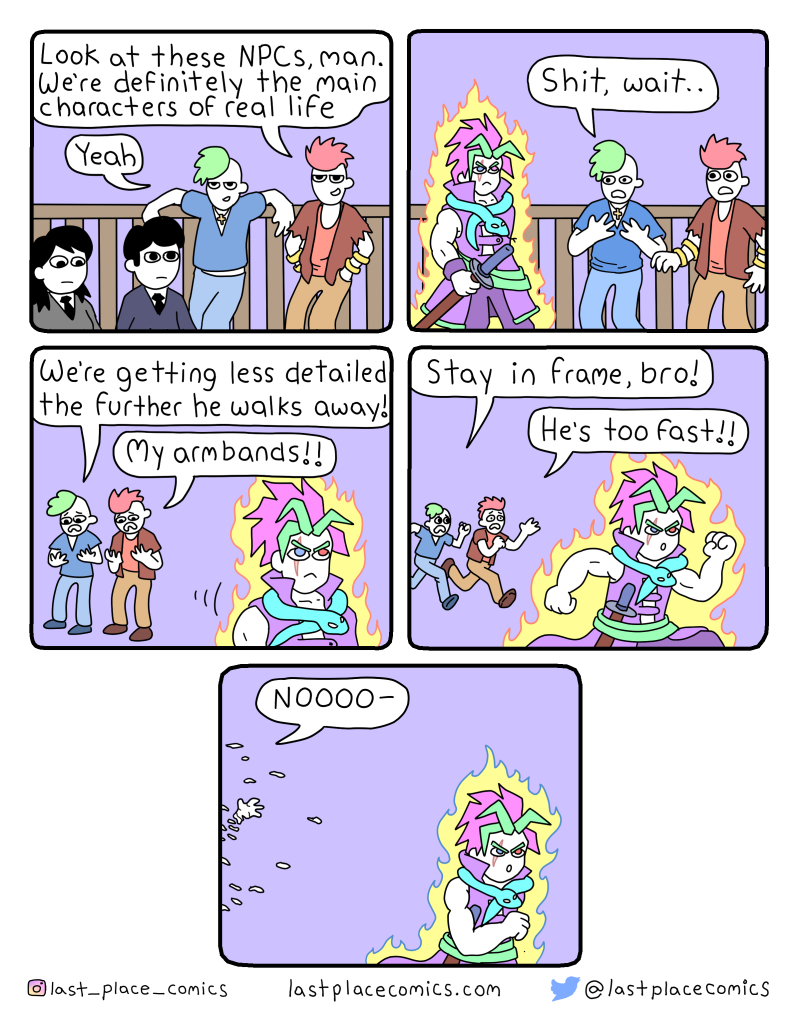

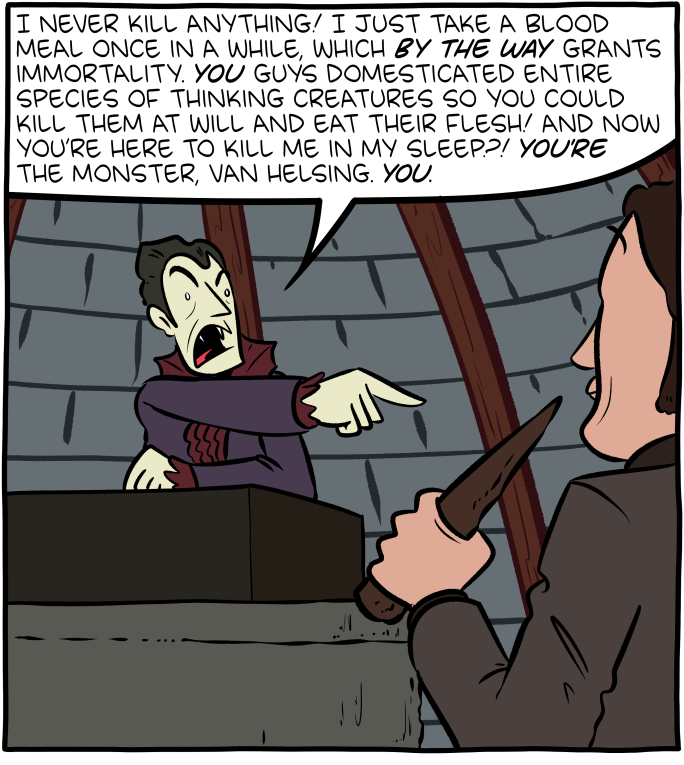

Hovertext:

Come to think of it, probably any mythological creature has some legitimate grievances with humanity.

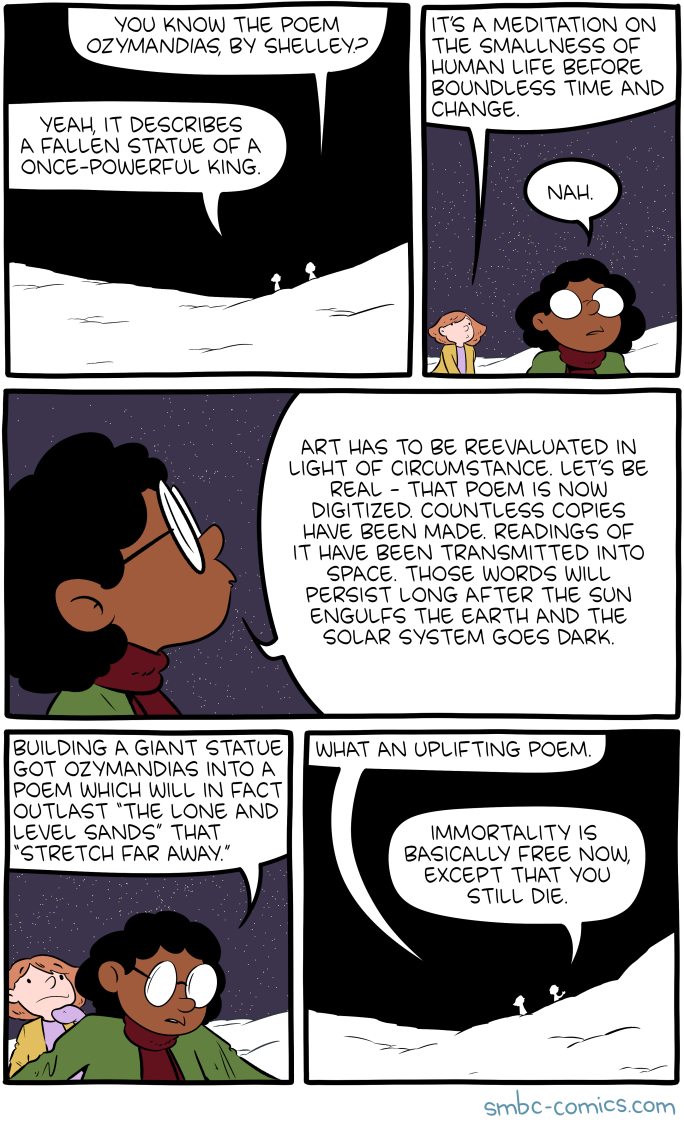

Hovertext:

I keep trying to think of a joke, but I just can't improve on the profundity of the red button comic.

Aliás, caso você ainda não tenha o livro da velha chata, tem a venda na lojinha.

Um meteoro não cairia (literalmente falando) mal =)

Um meteoro não cairia (literalmente falando) mal =)

tag: buraco negro

The post Cientistas nos anos 90 e agora appeared first on DrPepper.com.br.

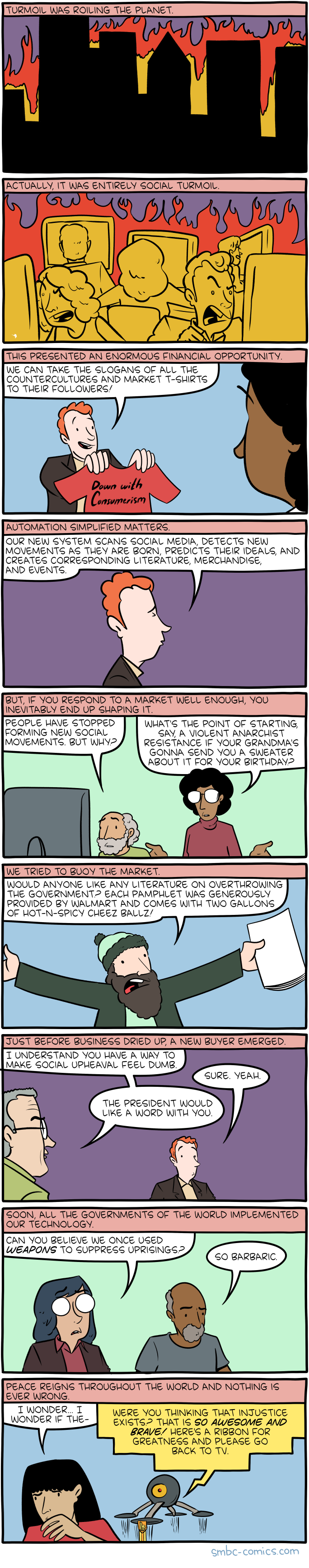

Hovertext:

At first, the Encouragement Drones seemed so innocent...

It’s eight years later, and men’s deodorant scents continue to be nonsense. I currently favor “Cool Wave,” myself, because who doesn’t want to smell like a cool wave.

Note: they don’t specify what it’s a cool wave of.

Note from Missy: At least guys get scents other than flowers, even if they’re mysterious waves. If you’re a woman who thinks floral scents are disgusting, there are very few options. If someone made a deodorant that gently smelled of fresh-baked chocolate chip cookies, or Froot Loops, or pepperoni pizza, I’d stock up on that shizz immediately.

As always, thanks for using my Amazon Affiliate links (US, UK, Canada).