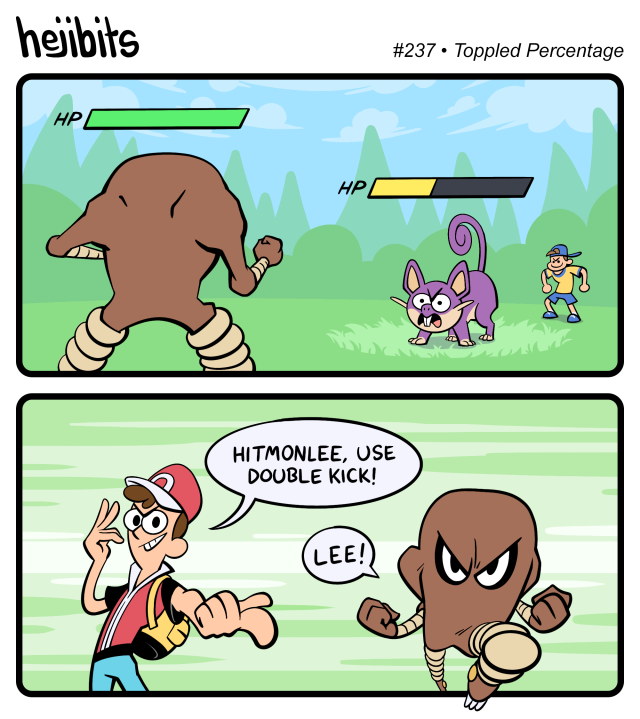

Hejibits #237 - Toppled Percentage

they say every artist has a dark period

O post Mentirinhas #1605 apareceu primeiro em Mentirinhas.

O post Mentirinhas #1581 apareceu primeiro em Mentirinhas.

O post Mentirinhas #1577 apareceu primeiro em Mentirinhas.

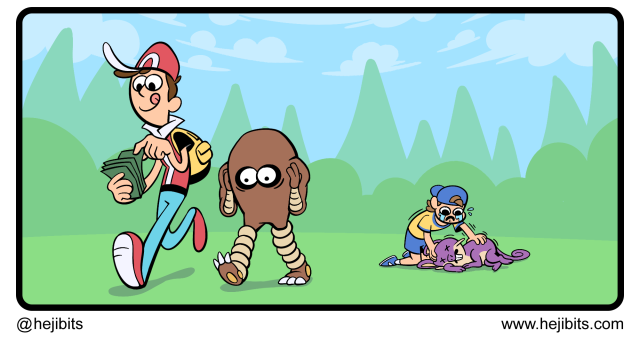

Hovertext:

This will featured in 2025's all-theodicy smbc collection.

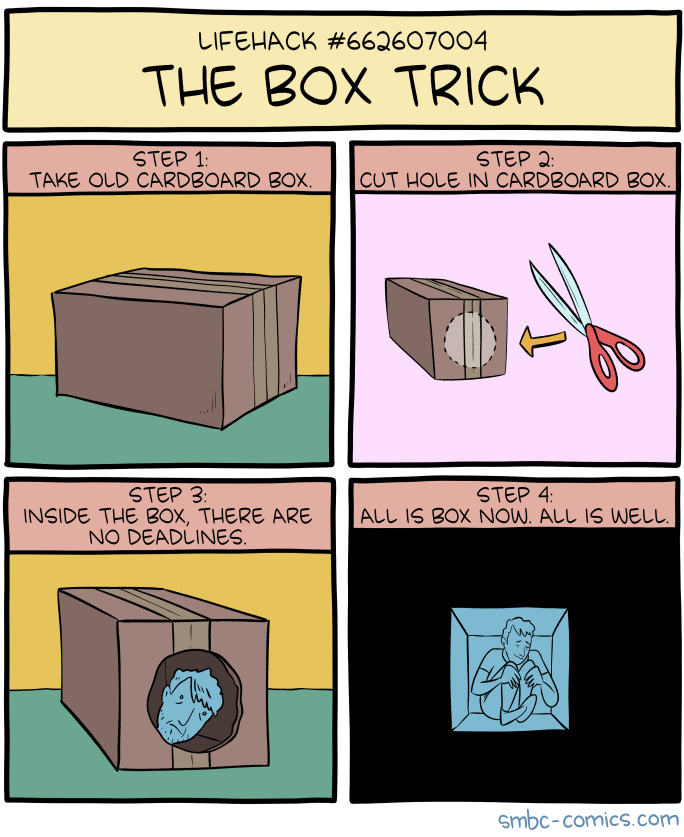

Hovertext:

Oddly enough, this comic inspired by TH White's 'The Goshawk,' which I recommend to anyone interested in goshawks.

A recent documentary from PBS includes a fascinating clip of an octopus changing colors while sleeping. The marine biologist involved in Octopus: Making Contact thinks that the sea creature was dreaming about hunting, which sparked the color shift to a camouflaged shade. Dr. David Scheel describes his theory in the documentary:

So here she’s asleep, she sees a crab and her color starts to change a little bit. Then she turns all dark. Octopuses will do that when they leave the bottom. This is a camouflage, like she’s just subdued a crab and now she’s going to sit there and eat it and she doesn’t want anyone to notice her. …This really is fascinating. But yeah, if she’s dreaming that’s the dream.

If you’re wondering how it was possible to document this occurrence, the octopus in question is being kept in captivity and closely studied by Dr. Scheel, an Alaska-based professor. Stay tuned for the full documentary, which premieres October 2, 2019, on PBS. (via the sleeping octopus’s enthusiastic cousin, Laughing Squid)

O post Mundo Avesso – Extintos apareceu primeiro em Um Sábado Qualquer.

.png)

Hovertext:

On the plus side, he never punishes you because the only noticeable way to do that would be to flip your bit to dead.

Hovertext:

Why do they call them deadlines if they move around all the time?

At last, you can buy all of Shakespeare's Sonnets, reduced to convenient short stupid couplets:

Enjoy the final episode everyone! I’m sure it will prove… interesting ;p

Um meteoro não cairia (literalmente falando) mal =)

Um meteoro não cairia (literalmente falando) mal =)

tag: buraco negro

The post Cientistas nos anos 90 e agora appeared first on DrPepper.com.br.