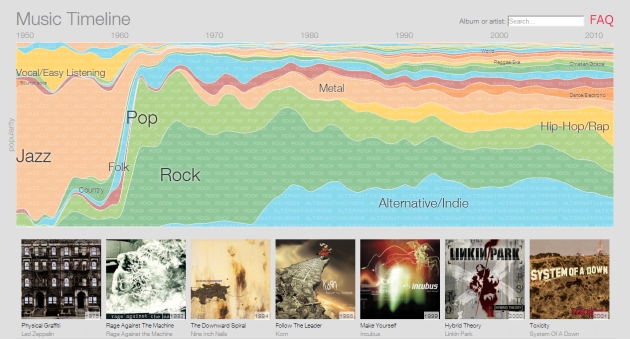

Cooper Griggs

Shared posts

Google is mapping the history of modern music

It's no surprise that Google has been tracking music uploads, but what's unexpected is exactly what the search giant is doing with all of that info. Interactive maps of music's ongoing journey are charted through Play Music's users' libraries, found ...

Production Methods: An Articulated Bandsaw!

A bandsaw is the go-to shop fixture if you're cutting an intricate shape out of wood. But there's a size limit as to what you can get up onto the bed and maneuver with your hands, in a manner that's safe for both you and the machine. Imagine if you had to cut a 16-foot beam or a log for a log cabin, for instance.

To get around this, Italian production-tool manufacturer MD Dario has come up with an ingenious solution: Mount an entire bandsaw on a two-section arm with ball-bearing joints at all three connection points.

By taking this mountain-comes-to-Mohammed approach, a single operator can quickly and accurately move the saw around while the workpiece remains stationary. In the video below, fast-forward to 1:07 to get to the good part:

The Totally-Unverifiable but Awesome Tale of Next-Level Laziness

Submitted by: Unknown

tumblr_m3cqn56arF1qdhxyeo1_500.gif (499×477)

dakotamcfadzean: The secret dies with me.

The evolution of the modern gym. [doghousediaries]

Hooray for Piracy!

Cooper Griggs: Important distinction.

The End Of Anonymity

Detective Jim McClelland clicks a button and the grainy close-up of a suspect—bearded, expressionless, and looking away from the camera—disappears from his computer monitor. In place of the two-dimensional video still, a disembodied virtual head materializes, rendered clearly in three dimensions. McClelland rotates and tilts the head until the suspect is facing forward and his eyes stare straight out from the screen.

It’s the face of a thief, a man who had been casually walking the aisles of a convenience store in suburban Philadelphia and shopping with a stolen credit card. Police tracked the illicit sale and pulled the image from the store’s surveillance camera. The first time McClelland ran it through facial-recognition software, the results were useless. Algorithms running on distant servers produced hundreds of candidates, drawn from the state’s catalog of known criminals. But none resembled the suspect’s profile closely enough to warrant further investigation.

It wasn’t altogether surprising. Since 2007, when McClelland and the Cheltenham Township Police Department first gained access to Pennsylvania’s face-matching system, facial-recognition software has routinely failed to produce actionable results. While mug shots face forward, subjects photographed “in the wild,” whether on the street or from a ceiling-mounted surveillance camera, rarely look directly into the lens. The detective had grown accustomed to dead ends.

But starting in 2012, the state overhauled the system and added pose-correction software, which gave McClelland and other trained officers the ability to turn a subject’s head to face the camera. While I watch over the detective’s shoulder, he finishes adjusting the thief’s face and resubmits the image. Rows of thumbnail mug shots fill the screen. McClelland points out the rank-one candidate—the image mathematically considered most similar to the one submitted.

It’s a match. The detective knows this for a fact because the suspect in question was arrested and convicted of credit card fraud last year. McClelland chose this demonstration to show me the power of new facial-recognition software, along with its potential: Armed with only a crappy screen grab, his suburban police department can now pluck a perpetrator from a combined database of 3.5 million faces.

This summer, the reach of facial-recognition software will grow further still. As part of its Next-Generation Identification (NGI) program, the FBI will roll out nationwide access to more than 16 million mug shots, and local and state police departments will contribute millions more. It’s the largest, most comprehensive database of its kind, and it will turn a relatively exclusive investigative tool into a broad capacity for law enforcement. Officers with no in-house face-matching software—the overwhelming majority—will be able to submit an image to the FBI’s servers in Clarksburg, West Virginia, where algorithms will return a ranked list of between 2 and 50 candidates.

The $1.2-billion NGI program already collects more than faces. Its repositories include fingerprints and palm prints; other biometric markers such as iris scans and vocal patterns may also be incorporated. But faces are different from most markers; they can be collected without consent or specialized equipment—any cameraphone will do the trick. And that makes them particularly ripe for abuse. If there’s any lesson to be drawn from the National Security Agency’s (NSA) PRISM scandal, in which the agency monitored millions of e-mail accounts for years, it’s that the line between protecting citizens and violating their privacy is easily blurred.

So as the FBI prepares to expand NGI across the United States, the rational response is a question: Can facial recognition create a safer, more secure world with fewer cold cases, fewer missing children, and more criminals behind bars? And can it do so without ending anonymity for all of us?

***

The FBI’s Identification Division has been collecting data on criminals since it formed in 1924, starting with the earliest-used biometric markers—fingerprints. Gathered piecemeal at first, on endless stacks of ink-stained index cards, the bureau now maintains some 135 million digitized prints. Early forensic experts had to work by eye, matching the unique whorls and arcs of prints lifted from crime scenes to those already on file. Once computers began automating fingerprint analysis in the 1980s, the potentially months-long process was reduced to hours. Experts now call most print matching a “lights-out” operation, a job that computer algorithms can grind through while humans head home for the night.

Matching algorithms soon evolved to make DNA testing, facial recognition, and other biometric analysis possible. And as it did with fingerprints, the FBI often led the collection of new biometric markers (establishing the first national DNA database, for example, in 1994). Confidence in DNA analysis, which involves comparing 13 different chromosomal locations, is extremely high—99.99 percent of all matches are correct. Fingerprint analysis for anything short of a perfect print can be less certain. The FBI says there’s an 86 percent chance of correctly matching a latent print—the typically faint or partial impression left at a crime scene—to one in its database, assuming the owner’s print is on file. That does not mean that there’s a 14 percent chance of identifying the wrong person: Both DNA and fingerprint analysis are admissible in court because they produce so few false positives.
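The gap between an 86 percent hit rate and a 14 percent error rate is easier to see with numbers. The sketch below is illustrative only: the counts are invented to reproduce the 86 percent figure and are not FBI data, and the function name is hypothetical. It assumes every search is for a print that is on file, so each search ends in a correct hit, no match at all, or a wrong hit.

```python
def search_outcomes(correct, no_match, wrong):
    """Summarize latent-print searches where the owner's print is on file."""
    total = correct + no_match + wrong
    return {
        "hit_rate": correct / total,          # right person returned
        "miss_rate": no_match / total,        # nobody returned
        "false_positive_rate": wrong / total, # wrong person returned
    }

# Hypothetical counts, chosen only so the hit rate comes out at 86 percent.
rates = search_outcomes(correct=860, no_match=139, wrong=1)
for name, value in rates.items():
    print(f"{name}: {value:.1%}")
```

With these made-up counts, nearly all of the 14 percent that are not hits are searches that return nobody, which is a different failure from naming the wrong person.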

Facial recognition, on the other hand, never identifies a subject—at best, it suggests prospects for further investigation. In part, that’s because faces are mutable. Fingerprints don’t grow mustaches, and DNA can’t throw on a pair of sunglasses. But faces can sprout hair and sag with time and circumstance. People can also resemble one another, either because they have similar features or because a low-resolution image tricks the algorithm into thinking they do.

As a result of such limitations, no system, NGI included, serves up a single, confirmed candidate but rather a range of potential matches. Face-matching software nearly always produces some sort of answer, even if it’s completely wrong. Kevin Reid, the NGI program manager, estimates that a high-quality probe—the technical term for a submitted photo—will return a correct rank-one candidate about 80 percent of the time. But that accuracy rating is deceptive. It assumes the kind of image that officers like McClelland seldom have at their disposal.

During my visit to the Cheltenham P.D., another detective stops by McClelland’s cubicle with a printout. “Can you use this?” he asks. McClelland barely glances at the video still—a mottled, expressionistic jumble of pixels—before shaking his head. “I’ve gotten to the point where I’ve used the system so much, I pretty much know whether I should try it or not,” he says. Of the dozens of photos funneled his way every week, he might run one or two. When he does get a solid hit, it’s rarely for an armed robbery or assault and never for a murder.

Violent criminals tend to obscure their faces, and they don’t generally carry out their crimes in public. If a camera does happen to catch the action, McClelland says, “they’re running or walking fast, as opposed to somebody who’s just, la-di-da, shoplifting.” At this point, facial recognition is best suited to catching small-time crooks. When your credit card is stolen and used to buy gift cards and baby formula—both popular choices, McClelland says, because of their high resale value—matching software may come to the rescue.

Improving the technology’s accuracy is, in some ways, out of the FBI’s hands. Law-enforcement agencies don’t build their own algorithms—they pay to use the proprietary code written by private companies, and they fund academics developing novel approaches. It’s up to the biometrics research community to turn facial recognition into a genuinely powerful tool, one worthy of the debate surrounding it.

***

In August 2011, riots broke out across London. What started as a protest of a fatal shooting by police quickly escalated, and for five days, arson and looting were rampant. In the immediate aftermath of the riots, the authorities deployed facial-recognition technology reportedly in development for the 2012 Summer Olympics. “There were 6,000 images taken of suspects,” says Elke Oberg, marketing manager at Cognitec, a German firm whose algorithms are used in systems worldwide. “Of those, one had an angle and quality good enough to run.”

Facial recognition can be thwarted by any number of factors, from the dirt caked on a camera lens to a baseball hat pulled low. But the technology’s biggest analytical challenges are generally summed up in a single acronym: APIER, or aging, pose, illumination, expression, and resolution.

A forward-facing mug shot provides a two-dimensional map of a person’s facial features, enabling algorithms to measure and compare the unique combination of distances separating them. But the topography of the human face changes with age: The chin, jawline, and other landmarks that make up the borders of a specific likeness expand and contract. A shift in pose or expression also throws off those measurements: A tilt of the head can decrease the perceived distance between the eyes, while a smile can warp the mouth and alter the face’s overall shape. Finally, poor illumination and a camera with low resolution both tend to obscure facial features.
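A minimal sketch of that distance-based idea follows. It is not how Cognitec, NEC, or any other vendor's algorithm works; the five landmark names, the coordinates, and the function names are all assumptions made for illustration. Each face is reduced to the distances between pairs of landmarks, scaled by the eye-to-eye distance so that image size does not matter, and gallery faces are then ranked by how close their measurements are to the probe's.

```python
import math
from itertools import combinations

# Hypothetical landmark set; real systems use many more points.
LANDMARKS = ("left_eye", "right_eye", "nose_tip", "mouth_center", "chin")

def feature_vector(points):
    """Pairwise landmark distances, scaled by the eye-to-eye distance."""
    eye_gap = math.dist(points["left_eye"], points["right_eye"])
    return [math.dist(points[a], points[b]) / eye_gap
            for a, b in combinations(LANDMARKS, 2)]

def rank_candidates(probe, gallery):
    """Return gallery names ordered from most to least similar to the probe."""
    probe_vec = feature_vector(probe)
    scored = [(math.dist(probe_vec, feature_vector(points)), name)
              for name, points in gallery.items()]
    return [name for _, name in sorted(scored)]

# Made-up (x, y) pixel coordinates for two gallery faces.
gallery = {
    "face_a": {"left_eye": (30, 40), "right_eye": (70, 40),
               "nose_tip": (50, 60), "mouth_center": (50, 80), "chin": (50, 100)},
    "face_b": {"left_eye": (25, 40), "right_eye": (75, 40),
               "nose_tip": (50, 70), "mouth_center": (50, 85), "chin": (50, 110)},
}
# The probe is face_a photographed at twice the size: same proportions.
probe = {name: (2 * x, 2 * y) for name, (x, y) in gallery["face_a"].items()}

ranking = rank_candidates(probe, gallery)
print(ranking)
```

Because the sketch always returns a full ranking, it also shows why matching software nearly always produces some answer: the first entry is only the closest face in the gallery, not a confirmed identity.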

For the most part, biometrics researchers have responded to these challenges by training their software, running each algorithm through an endless series of searches. And companies such as Cognitec and NEC, based in Japan, teach programs to account for exceptions that arise from suboptimal image quality, severe angles, or other flaws. Those upgrades have made real progress. A decade ago, matching a subject to a five-year-old reference photo meant overcoming a 25 percent drop in accuracy, or 5 percent per year. Today, the accuracy loss is as low as 1 percent per year.

Computer scientists are now complementing those gains with companion software that mitigates the impact of bad photos and opens up a huge new pool of images. The best currently deployed example is the 3-D–pose correction software, called ForensicaGPS, that McClelland showed me in Pennsylvania. New Hampshire–based Animetrics released the software in 2012, and though the company won’t share exact numbers, it’s used by law-enforcement organizations globally, including the NYPD and Pennsylvania’s statewide network.

Before converting 2-D images to 3-D avatars, McClelland adjusts various crosshairs, tweaking their positions to better line up with the subject’s eyes, mouth, chin, and other features. Then the software creates a detailed mathematical model of a face, capturing data that standard 2-D–centric algorithms miss or ignore, such as the length and angle of the nose and cheekbones. Animetrics CEO Paul Schuepp says it “boosts anyone’s face-matching system by a huge percentage.”

Anil Jain, one of the world’s foremost biometrics experts, has been developing software at Michigan State University (MSU) that could eliminate the need for probe photos entirely. Called FaceSketchID, its most obvious function is to match forensic sketches—the kind generated by police sketch artists—to mug shots. It could also make inferior video footage usable, Jain says. “If you have poor quality frames or only a profile, you could make a sketch of the frontal view of the subject and then feed it into our system.”

A sketch artist, in other words, could create a portrait based not on an eyewitness account of a murder but on one or more grainy, off-angle, or partially obscured video stills of the alleged killer. Think of it as the hand-drawn equivalent of Hollywood-style image enhancement, which teases detail from a darkened pixelated face. A trained artist could perform image rehabilitation, recasting the face with the proper angle and lighting and accentuating distinctive features—close-set eyebrows or a hawkish, telltale nose. That drawing can then be used as a probe, and the automatic sketch ID algorithms will try to find photographs with corresponding features. Mirroring the artist’s attention to detail, the code focuses less on finding similar comprehensive facial maps and more on the standout features, digging up similar eyebrows or noses.

At press time, the system, which had been in development since 2011, had just been finished, and Jain expected it to be licensed within months. Another project he’s leading involves algorithms that can extract facial profiles from infrared video—the kind used by surveillance teams or high-end CCTV systems. Liquor-store bandits aren’t in danger of being caught on infrared video, but for more targeted operations, such as tracking suspected terrorists or watching for them at border crossings, Jain’s algorithms could mean the difference between capturing a high-profile target and simply recording another anonymous traveler. The FBI has helped support that research.

Individually, none of these systems will solve facial recognition’s analytical problems. Solutions tend to arrive trailing caveats and disclaimers. A technique called super-resolution, for example, can double the effective number of pixels in an image but only after comparing images snapped in extremely rapid succession. A new video analytics system from Animetrics, called Vinyl, automatically extracts faces from footage and sorts them into folders, turning a full day’s work by an analyst into a 20-minute automated task. But analysts still have to submit those faces to matching algorithms one at a time. Other research, which stitches multiple frames of video into a more useful composite profile, requires access to massive computational resources.

But taken together, these various systems will dramatically improve the technology’s accuracy. Some biometrics experts liken facial recognition today to fingerprint analysis decades ago. It will be years before a set of standards is developed that will lift it to evidentiary status, if that happens at all. But as scattered breakthroughs contribute to better across-the-board matching performance, the prospect of true lights-out facial recognition draws nearer. Whether that’s a promise or a threat depends on whose faces are fair game.

***

The best shot that Detective McClelland runs during my visit, by far, was pulled from social media. For the purposes of facial recognition, it couldn’t be more perfect—a tight, dead-on close-up with bright, evenly distributed lighting. There’s no expression, either, which makes sense. He grabbed the image from the profile of a man who had allegedly threatened an acquaintance with a gun.

This time, there’s no need for Animetrics’ 3-D wizardry. The photo goes in, and the system responds with first-, second-, and third-ranked candidates that all have the same identity (the images were taken during three separate arrests). The case involved a witness who didn’t have the suspect’s last name but was connected to him via social media. The profile didn’t provide a last name either, but with a previous offender as a strong match, the detective could start building his case.

The pictures we post of ourselves, in which we literally put our best face forward, are a face matcher’s dream. Animetrics says it can search effectively against an image with as few as 65 pixels between the eyes. In video-surveillance stills, eye-to-eye pixel counts of 10 or 20 are routine, but even low-resolution cameraphone photos contain millions of pixels.
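The eye-to-eye figure can be turned into a simple pre-check, sketched below. The 65-pixel threshold is the number Animetrics quotes above; the function names and the sample eye coordinates are assumptions for illustration, not part of any vendor's software.

```python
import math

MIN_EYE_PIXELS = 65  # threshold quoted from Animetrics in the text above

def eye_distance_px(left_eye, right_eye):
    """Distance in pixels between the two eye centers."""
    return math.dist(left_eye, right_eye)

def is_searchable(left_eye, right_eye, minimum=MIN_EYE_PIXELS):
    """True if the face has enough pixels between the eyes to be worth running."""
    return eye_distance_px(left_eye, right_eye) >= minimum

# Made-up eye positions: a profile photo and a surveillance still.
profile_photo = is_searchable((100, 200), (180, 200))  # 80 pixels apart
surveillance = is_searchable((40, 50), (55, 50))       # 15 pixels apart
print(profile_photo, surveillance)
```

A check like this mirrors the judgment McClelland describes making by eye when he decides whether a still is worth submitting.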

Social media, then, has precisely what facial recognition needs: billions of high-quality, camera-facing head shots, many of them directly tied to identities. Google and Facebook have already become incubators for the technology. In 2011, Google acquired PittPatt (short for Pittsburgh Pattern Recognition), a facial-recognition start-up spun out of Carnegie Mellon University. A year later, Facebook acquired Israel-based Face.com and redirected its facial-recognition work for internal applications. That meant shutting down Face.com’s KLIK app, which could scan digital photos and automatically tag them with the names of corresponding Facebook friends. Facebook later unveiled an almost identical feature, called Tag Suggestions. Privacy concerns have led the social network to shut down that feature throughout the European Union.

Google, meanwhile, has largely steered clear of controversy. Former CEO Eric Schmidt has publicly acknowledged that the company has the technical capability to provide facial-recognition searches. It chooses not to because of the obvious privacy risks. Google has also banned the development of face-matching apps for its Google Glass wearable computing hardware.

Facebook didn’t respond to interview requests for this story, and Google declined. But using images stored by the social media giants for facial recognition isn’t an imaginary threat. In 2011, shortly after PittPatt was absorbed by Google, Carnegie Mellon privacy economist Alessandro Acquisti demonstrated a proof-of-concept app that used PittPatt’s algorithms to identify subjects by matching them to Facebook images. Mining demographic information freely accessible online, Acquisti could even assign social security numbers to some.

Deploying a national or global equivalent, which could match a probe against a trillion or more images (as opposed to a few hundred thousand, in Acquisti’s case), would require an immense amount of processing power—something within the realm of possibility for Silicon Valley’s reigning data companies but currently off the table. “That doesn’t mean it will not happen,” says Acquisti. “I think it’s inevitable, because computational power keeps getting better over time. And the accuracy of face recognition is getting better. And the availability of data keeps increasing.”

So that’s one nightmare scenario: Social media will intentionally betray us and call it a feature. Acquisti predicts it will happen within 20 years, at most. But there’s another, less distant path for accessing Facebook and Google’s billions of faces: Authorities could simply ask. “Anything collected by a corporation, that law enforcement knows they’ve collected, will eventually get subpoenaed for some purpose,” says Kevin Bowyer, a computer-science professor and biometrics and data-mining expert at the University of Notre Dame.

The question isn’t whether Facebook will turn over data to law enforcement. The company has a history of providing access to specific accounts in order to assist in active investigations. It has also been sucked into the vortex of the NSA’s PRISM program, forced along with other companies to allow the widespread monitoring of its users’ data. “What we’ve seen with NSA surveillance and how the FBI gets access to records is that a lot of it comes from private companies,” says Jennifer Lynch, senior staff attorney at the Electronic Frontier Foundation, a nonprofit digital-rights group. “The data, the pictures, it becomes a honeypot for the government.”

The FBI, it should be noted, is not the NSA. Tempting as it is to assign guilt by association or in advance, there’s no record of overreach or abuse of biometrics data by the agency. If NGI’s facial database exists as the FBI has repeatedly described it, as a literal rogue’s gallery consisting solely of mug shots, it carries relatively few privacy risks.

And yet “feature creep”—or the stealthy, unannounced manner in which facial-recognition systems incorporate new data—has already occurred. When I asked McClelland whether he could search against DMV photos, I thought it would be a throwaway question. Drivers are not criminals. McClelland looked at me squarely. “In Pennsylvania? Yes.”

Police have had access to DMV photos for years; they could search the database by name, location, or other parameters. But in mid-2013, they gained the ability to search using other images. Now, every time they run a probe it’s also checked against the more than 30 million license and ID photos in the Pennsylvania Department of Transportation’s (PennDOT) database. McClelland tells me he doesn’t get many hits, and the reason for that is the system’s underlying algorithm. PennDOT’s priority isn’t to track criminals but to prevent the creation of duplicate IDs. Its system effectively ignores probes that aren’t of the perfect forward-facing variety captured in a DMV office. As a result, one of the most potentially unsettling examples of widespread face collection, comprising the majority of adults in Pennsylvania—those who didn’t surrender their privacy by committing a crime but simply applied for state-issued identification—is hobbled by a simple choice of math. “I kind of like it,” says McClelland. “You’re not getting a lot of false responses.”

The benefit those PennDOT photos could have on criminal investigations is anyone’s guess. But this much is certain: Attempts are being made, thousands of times a year, to cross the exact line that the FBI promises it won’t—from searching mug shots to searching everyone.

***

It would be easy to end there, on the cusp of facial recognition’s many potential nightmare scenarios. The inexorable merger of reliable pattern analysis with a comprehensive pool of data can play out with all the slithering dread of a cyber thriller. Or, just maybe, it can save the day.

Last May, a month after the Boston Marathon bombings killed three people and injured more than 250, MSU’s Anil Jain published a study showing what could have been. Jain ran the faces of both suspects through NEC’s NeoFace algorithm using surveillance images collected at the scene of the detonations. Although the older Tsarnaev brother, Tamerlan, actually had a mug shot on file, as a result of a 2009 arrest for assault and battery, it failed to show up within the top 200 candidates. He was wearing sunglasses and a cap, and the algorithm was unable to match the surveillance image to his booking photo.

Dzhokhar Tsarnaev was another matter. Jain placed a photo of the younger brother, taken on his graduation day, in a million-image data set composed primarily of mug shots. In a blind search—meaning no demographic data, such as age and gender, narrowed the list of potential candidates—NeoFace paired a shot from the bombing with Tsarnaev’s graduation-day photo as a rank-one result. Facial recognition would have produced the best, and essentially only, lead in the investigation.

There’s a catch. The reference photo was originally posted on Facebook. In order to have pulled off that match, law-enforcement officers would have needed unprecedented access to the social network’s database of faces, spread throughout a trillion or more images. More than three days after the bombings, the Tsarnaevs brought the investigation to a close by murdering an MIT police officer and fighting a running gun battle through the streets of Cambridge and nearby Watertown. Time for facial analysis would have been short, and the technical hurdles might have been insurmountable.

Still, it could have worked.

Maybe it’s too early to debate the boundaries of facial recognition. After all, its biggest victories and worst violations are yet to come. Or maybe it’s simply too difficult, since it means wrestling with ugly calculations, such as weighing the cost of collective privacy against the price of a single life. But perhaps this is precisely when and why transformative technology should be debated—before it’s too late.

Four Ways Your Body Can Betray You

1) Finger/Palm: The latent prints collected at crime scenes include finger and palm prints. Both can identify an individual, but latent impressions tend to be smudged and incomplete. Last April, the FBI revolutionized print analysis, rolling out the first national palm-print database and updating algorithms to triple the accuracy of fingerprint searches.

2) DNA: Matching a suspect’s DNA to a crime-scene sample used to mean waiting up to 60 days for lab results. IntegenX recently released RapidHIT technology, which compares DNA in 90 minutes—fast enough to nail a suspect during interrogation. The two-by-two-foot scanner packs a lab’s worth of chemical analyses onto a single disposable microfluidic cartridge.

3) Iris: An iris scan requires suspects to stare directly into a nearby camera, making it all but useless for criminal investigations. But it’s a foolproof approach to authentication, and nearly any consumer-grade camera can capture the unique patterns in the eye. Schools, prisons, and companies (including Google) already use iris scans for security.

4) Voice: Though voice recognition is largely a commercial tool—banks such as Barclays use it to verify money transfers—vocal-pattern matching also catches crooks. Within 30 seconds of phone conversation, the system created by Nuance Communications can build a unique voice print and then run it against a database of prints from confirmed fraudsters.

The Five Biggest Challenges To Facial Recognition

1) Age: Years take a toll on the face. The more time that has passed between two photos of the same subject, the more likely the jawline will have changed or the nose bloomed. Any number of other features can also lose their tell-tale similarities with age.

2) Pose: Most matching algorithms compare the distance between various features—the space separating the eyes, for example. But a subject turned away from the camera can appear to have wildly different relative measurements.

3) Illumination: Dim lighting, heavy shadows, or even excessive brightness can have the same adverse effect, robbing algorithms of the visual detail needed to spot and compare multiple features.

4) Expression: Whether it’s an open-mouthed yell, a grin, or a pressed-lip menace, if a subject’s expression doesn’t match the one in a reference shot, key landmarks (such as mouth size and position) may not line up.

5) Resolution: Most facial-recognition algorithms are only as good as the number of pixels in a photograph. That can be a function of everything from camera quality to the subject’s distance from the lens (which dictates how much zooming is needed to isolate the face).

This article originally appeared in the 2014 issue of Popular Science.

Video: A Marine With A Prosthetic Hand Controlled By His Own Muscles

Out on a routine reconnaissance mission in Afghanistan’s Helmand province, Marine Staff Sergeant James Sides reached out his right hand to grab the bomb. It was the ordnance disposal tech’s fifth deployment overseas, and his second to Afghanistan. But this time, July 15, 2012, the improvised explosive device detonated. Sides was blinded in his left eye and lost his right arm below the elbow.

After a long recovery at Walter Reed National Military Medical Center, Sides learned to use a prosthetic hand. Then 11 months later, he went back to the hospital — this time, for a surgical implant that could represent the future of prosthetics.

The implanted myoelectric sensors (IMES) in Sides’ right arm can read his muscles and bypass his mind, translating would-be movement into real movement. The IMES System, as its developers are calling it, could be the first implanted multi-channel controller for prosthetics. Sides is the first patient in an investigational device trial approved by the U.S. Food and Drug Administration.

“I have another hand now,” he says.

Inspired in large measure by veterans like Sides, many groups are working on smarter bionic limbs; you can read about some of them here. Touch Bionics makes a line of bionic hands and fingers that respond to a user’s muscle feedback, but they attach over the skin. DARPA’s Reliable Neural-Interface Technology (RE-NET) program bridges the gaps among nerves, muscles and the brain, allowing users to move prosthetics with their thoughts alone. This is promising technology, but it requires carefully re-innervating (re-wiring nerves) the residual muscles in a patient’s limb. The implant Sides received is simpler, and potentially easier for doctors and patients to adopt. The project is funded by the Alfred Mann Foundation for Scientific Research.

It uses the residual muscles in an amputee’s arm — which would normally control and command muscle movement down the hand — and picks up their signals with a half-dozen electrodes. The tiny platinum/iridium electrodes, about 0.66 inches long and a tenth of an inch wide, are embedded directly into the patient’s muscle. They are powered by magnetic induction, so there would be no need to swap batteries or plug them in — a crucial development in making them user-friendly, according to Dr. Paul Pasquina, principal investigator on the IMES system and former chief of orthopedics and rehabilitation at Walter Reed.

It translates muscle signals into hand action in less than 100 milliseconds. To Sides, it’s instantaneous: “I still close what I think is my hand,” he says. “I open my hand, and rotate it up and down; I close my fingers and the hand closes. It’s exactly as if I still had a hand. It’s pretty gnarly.”

In the video at the bottom, watch him take a swig of Red Bull, lift a heavy Dutch oven lid and sort tiny blocks into separate bins.

While skin-connected smart prosthetics are more sophisticated than body-controlled, analog ones, they have two key drawbacks, according to Pasquina: Their limited range of motion and the skin barrier between the device and the muscle. Because myoelectric devices connect over the skin, the connection is inexact, which means patients have to learn to flex a different suite of muscles to properly activate the electrodes. “You have to reprogram your mind,” as Pasquina puts it. “We’re going after the muscles that are doing things they’ve been programmed to be able to do.”

What’s more, an external connection is easy to interrupt — reaching overhead, or getting wet, can dislodge the electrodes and render the prosthetic useless. Sides recalls sweating profusely one day while using his original on-the-skin myo-device.

“I would lose connectivity, and the hand would go berserk,” he says.

Maybe most importantly, external myoelectric devices can’t think seamlessly in three dimensions. For patients like Sides, that meant moving the hand only up or down or side to side; when he wanted to move his thumb, he had to nudge it into the proper position using his left hand. Now, the device responds as if he were moving it himself in one fluid motion, he says. “It makes day-to-day life a lot easier.”

Right now, the system is designed for up to three simultaneous degrees of movement. Future systems will include up to 13 angles of motion and pre-programmed patterns, much like Touch Bionics’ i-Limb myoelectric hand. Pasquina said natural movement has been a major challenge for the DARPA project as well as his own research.

“We can create these arms, but how will the user control the arm, and integrate it as part of themselves?” he says. “It’s not just controlling an arm, it’s controlling my arm; ‘I want to control this and make it feel like it’s a part of me.’”

Someday, the sensors could be implanted immediately after a traumatic injury — right after an amputation and before a patient’s injury is even closed up. Further research will prove it can work in multiple people, Pasquina says. But that’s after several years of testing and trials monitored by the FDA.

“This is not a science experiment. This is something we want to influence the lives of our service members in a positive way,” he says.

Cooper Griggs: awesome

Robot Super Hero on a Rocket Surfboard.

a request from the first graders I talked to today.

tumblr_lm4jm9qTOI1qz6f9yo1_500.jpg (472×699)

friend: you really need to go outside

German city to ban cars by 2034

Architectural Renderings of Life Drawn with Pencil and Pen by Rafael Araujo

Cooper Griggs: beautiful

Nautilus

Caracol

Double Conic Spiral, process

Double Conic Spiral. Ink, acrylic/canvas.

Morpho

Calculation (Sequence) #2. Acrylic, china ink/canvas.

In the midst of our daily binge of emailing, Tweeting, Facebooking, app downloading and photoshopping, it’s almost hard to imagine how anything was done without the help of a computer. For Venezuelan artist Rafael Araujo, that pre-computer era is a time he relishes. At a technology-free drafting table he deftly renders the motion and subtle mathematical brilliance of nature with a pencil, ruler and protractor. Araujo creates complex fields of three-dimensional space where butterflies take flight and the logarithmic spirals of shells swirl into existence. He calls the series of work Calculation, and many of his drawings seem to channel the look and feel of illustrations found in Da Vinci’s sketchbooks. In an age when 3D programs can render a digital version of something like this in just minutes, it makes you appreciate Araujo’s remarkable skill. You can see much more here. (via ArchitectureAtlas)

Google announces contact lens that monitors diabetes

Imaginative photographer lands world-tour job at Coke

Growing up, Canadian photographer Joel Robison, known on Flickr as Boy_Wonder, was always a bit of a daydreamer. Blame it on the movies he watched or the stories he read as a kid, but it caused him to look at everyday objects differently. It’s a creative direction that blended both fantasy and reality. And years later, it would lead him to a dream job in photography and make him a Flickr icon!

Joel’s foray into photography began after college graduation. It was after he had established a teaching career that he felt something was missing.

“I didn’t really feel connected to that part of my mind that was creative,” Joel recalls. “I didn’t feel inspired. One day, I came across Flickr and saw photos by people that were so creative, entertaining and engaging! It was like a light bulb went off in my mind that maybe you need to try a camera.”

Joel went on eBay and bought a cheap, used DSLR camera for about $120. He began to play with it — learning different camera settings, shooting every single day, and he quickly came to realize that he wasn’t going to put the camera down for a long time.

“I started taking self-portraits and using that as a chance to tell my story through my eyes, with me as the person that’s telling that story and that changed everything for me,” Joel admits. “On Flickr, I was inspired by connecting with other artists whom I felt have a similar style as me. It was this collaborative friendship that helped keep my inspiration flowing.”

Joel downloaded Photoshop and began to see the potential it had to create stories he’d seen in movies as a kid. He was finally able to tap into his imagination and express it to others.

“When I first started learning Photoshop, it would take a few hours for me to finish an image,” Joel says. “But the more I practiced and played with it… you know, trying to make these ideas in my head come to life, it started to happen. I started to see myself as being small, things around me being big or have a different purpose than they actually do. And the more I recognized that the pictures in front of me were the same as the ones that were in my mind, it encouraged me to keep going, keep trying new things.”

A few years into his photography, Joel took a picture of a few Coke bottles in the snow and posted it on Flickr. A friend of his suggested he send the photo to Coca-Cola in hopes they’d use it. At the time, Joel laughed it off and didn’t think anything of it.

“It was about a year later that I got this message from someone that works inside Coke saying, ‘Hey we’d like to take your picture and share it on Twitter. Would you be OK with that?’” Joel says, “I was very excited, and it made my day.”

“About three weeks later, I got this phone call from them saying they had developed a project for me to moderate their Flickr community,” Joel recalls. “They [Coca-Cola] wanted me to take their Flickr page, help it grow and to use my photos to encourage other people to submit their photos based around their positive themes.”

Joel moderated Coca-Cola’s Flickr community for about a year and a half. When it was all over, Coca-Cola presented Joel with an incredible opportunity.

“In 2013, they offered me a role on the FIFA World Cup Trophy Tour,” Joel says. “It’s a worldwide tour, led by Coca-Cola and FIFA, taking the FIFA World Cup Trophy on a world tour of 90 countries. And they offered me the role of photographer and voice of social media. I was shocked.”

“It totally changed the course of my life,” Joel admits. “I was able to quit my job and accept photography as my full-time career, and I haven’t looked back since. It’s been non-stop, I feel more passionate about it every day, and I feel like I am allowed to share with the world what I see, and I feel very supported in that.”

Today, many Flickr members marvel at Joel’s work, and he has inspired others to follow his lead. When asked about his success, Joel says he’s extremely lucky and admits he has a dream job.

“I’m 29 years old and I never would have dreamed that my life would include photography, travel or the experiences that I’ve been able to have,” Joel says. “To be able to create what I do, be accepted as I am and have people enjoy what I do is amazing.”

Visit Joel’s photostream to see more of his photography.

Check out the story of Zev Hoover, a 14-year-old photographer who’s been inspired by Joel’s work.

Do you want to be featured on The Weekly Flickr? We are looking for your photos that amaze, excite, delight and inspire. Share them with us in The Weekly Flickr Group, or tweet us at @TheWeeklyFlickr.

Germany's second largest city, Hamburg, has made plans to banish cars from the city centre in the next 20 years.
