Renato Cerqueira

Shared posts

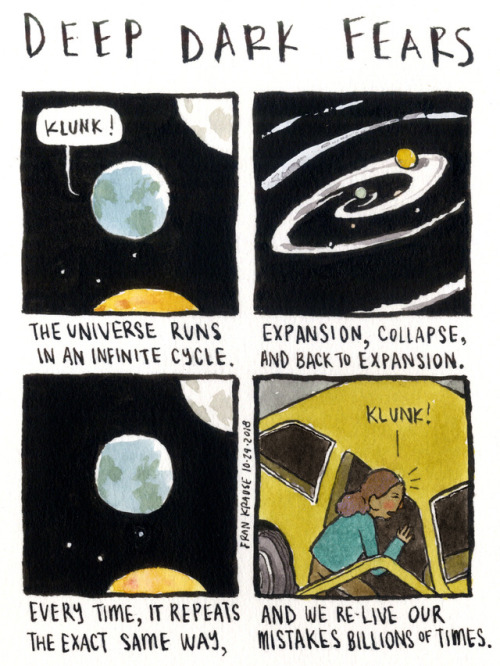

:/ A fear submitted by Gladia to Deep Dark Fears - thanks! You...

:/ A fear submitted by Gladia to Deep Dark Fears - thanks!

You can pick up signed books and original artwork in my Etsy store - check it out!

Come out wherever you are! An anonymous fear submitted to Deep...

What was that? An anonymous fear submitted to Deep Dark Fears -...

What was that? An anonymous fear submitted to Deep Dark Fears - thanks!

Looking for a gift for that weirdo you hang out with? You can find signed copies of my Deep Dark Fears books in my Etsy store -> CLICK HERE!

#comics #deepdarkfears

Fun in the sun! A fear submitted by Brooke to Deep Dark Fears -...

Fun in the sun! A fear submitted by Brooke to Deep Dark Fears - thanks!

I have an Etsy store where you can find signed copies of my books. I make drawings in them and then I send them to you, that’s the deal. Click here for more info!

What it’s like to be part of a cooperative supermarket

Anyone who knows me knows I’ve been talking about this cooperative for a while. Sit down with me at a bar and you will probably hear, or have already heard, about how wonderful BEES is and so on. I noticed I hadn’t written anything about it yet, and figured a post was a good way to spread the idea further.

I grew up in a big city. There was a little corner shop right by my house, with those glass jars full of candy that I’d ask my mom for when we passed by on the way to school, but in general, for me, a supermarket was always something with “super” in the name. You know the story: a huge space, a thousand aisles and an endless stock of every kind of thing.

Most cooperative supermarkets are based on the idea of the Park Slope Food Coop in New York. The Park Slope Food Coop was founded in 1973 and runs on a system where, to shop at the supermarket, you need to be a cooperator. To become one, you pay a one-time amount (buying a share in the cooperative), and to keep your status active you work a 2h45 shift at the supermarket once a month, doing the tasks that keep it running (stocking, maintenance, administration, etc.).

I have never visited Park Slope; the first cooperative supermarket I visited was La Cagette, in Montpellier, in early 2019, and I was immediately charmed by the idea. The atmosphere of the place was quite different, closer to the corner shop I remembered from my childhood. Instead of a space full of advertising, with some products lit up as if they were sacred, it was much calmer; the people coming and going knew each other and chatted at the checkout or while stocking a shelf.

A traditional supermarket has all the elements of modern capitalism: aggressive marketing; laughable discounts in exchange for handing over your information and identifying yourself every time you shop, so that they can build a profile of you; and underpaid employees doing repetitive work for hours on end (often more than one job at a time). On top of that, supermarkets tend to apply a variable profit margin to their products: vegetables or pasta are often very cheap and carry a very small margin, while something like a pen, a battery or an organic product carries a gigantic one.

When I got back to Brussels after visiting La Cagette, I went looking to see whether something like it existed here. And I found BEES coop.

BEES coop started as a buying group: the people in the group decided together what to buy and bought collectively to get lower prices for the group. But the idea kept evolving until, also inspired by Park Slope, it became the cooperative supermarket that has been running since 2017.

The store resembles a traditional supermarket: it has baskets and carts and a range of products that goes from rice and meat to hygiene products and bicycle parts. The big difference lies in choosing what goes on the shelves: that choice is also made in a participatory way, and it prioritizes organic, local or cooperative-made products, often favoring the small producer, even one without the “xyz” label, over the big distributor. There is also a lot available in bulk: pasta, rice, lentils, liquid soap, wine and even olive oil. You can bring your own bag, jar or bottle and fill it right there.

Besides the cooperators, there are six salaried people who work part time. They also work in the store, but a large part of their job is placing product orders, deciding where products go in the store, receiving deliveries and telling the cooperators at the start of each shift which tasks are pending.

Every cooperator has a card that identifies them at the store entrance and lets them shop. They are also entitled to name two other people over 18 who live under the same roof; these people don’t need to work, they are “eaters”, and they get their own cards for shopping.

As a cooperator, I work 2h45 in the store per month. Sometimes I’m at the checkout, other times restocking shelves, at the store entrance or tidying the stockroom. There are also people who work in the office, welcoming new members, updating the status of current members and similar tasks; people who work cutting cheese; people who clean the store and put the vegetables in the cold room at the end of the day; and people who work in specific committees, such as the one that organizes the general assembly or the one that selects the products.

A shift normally has a “supercooperator”, who is trained by the salaried staff to know more about how the store works and who is the point of contact for the other cooperators in case of unexpected absences. The supercooperator is also the person to whom the staff explain which tasks have the highest priority in the current shift. Besides the usual jobs, like checkout and the store entrance, sometimes deliveries need to be organized in the stockroom, new products need to be registered in the store’s system, or the beer shelves are almost empty and need special attention ;)

Each shift in the store starts with the members gathering, getting the shift’s tasks from the supercooperator and then splitting up to carry them out. A few hours later everyone says goodbye, and the next shift only happens four weeks later.

Working and shopping at BEES is a pleasant experience. Not only do you get to know the faces that share your shifts, you also start running into the same people who shop at similar times, and later you meet them at the general assemblies. The wine committee sometimes puts up a warm note saying they found a delicious new wine that you should try. At the checkout it is normal to see people chatting about which products are new and why they are so good. And in the aisles it is common to see people recognizing each other and striking up a conversation.

One important thing people often ask me is: “OK, all very nice, Mr. Lond, but what about the prices?” And the truth is that it depends. As I said above, a traditional supermarket works with a variable profit margin: each product has a different margin. At BEES the margin is constant: every product has a 20% margin. The store is a non-profit; the margin is there to cover the store’s upkeep and the employees’ salaries.

Our experience is that the average price of a cart is very close to that of a traditional supermarket. We also started buying more organic products, because their price is very close to the non-organic price at a regular supermarket. Some products are more expensive, and some mainstream brands simply aren’t sold there.

And the amount of plastic we bring home has dropped spectacularly. My impression is that supermarket products here tend to have much more plastic packaging than in Brazil. BEES actively encourages reuse, so we started using washable cloth bags for vegetables and bulk goods, we prefer glass packaging that can be returned (and even when it can’t, there are special glass bins here, and the glass gets recycled), and for things like cooking oil and olive oil we fill up jugs in bulk and reuse the same glass bottles to keep olive oil on hand in the kitchen.

The project isn’t perfect, of course, but it is a pleasant demonstration that it is possible to organize ourselves differently within capitalism while we haven’t left it. That it is possible to find alternative ways of doing essential things like your monthly shopping that work collectively, with concern for the environment and for the human side, for the worker on the other end of production, while avoiding the big conglomerates that infect daily life and hold so much power.

I searched a bit and couldn’t find similar projects in Brazil. I know there are stores, such as the MST’s Armazém do Campo, that try to bring producers and consumers closer and sell products from settlements and small farmers. But I didn’t find anything with the same participatory model as BEES or Park Slope.

In any case, here are some suggestions for you reading this: pay attention to how much of what you buy at the supermarket you buy because of advertising. Think about buying more things in bulk; in Rio I know there are traditional bulk stores, and while they are no BEES, you will notice how much it cuts down on plastic. And finally, look for projects that connect you to small producers: while we can’t take power away from agribusiness by direct means, we can chip away at it by going to the small producer whenever possible.

Mastodon 2.7

2019 - The year to leave Instagram

At the beginning of 2018 I finally deleted my Facebook account.

I had already complained about Facebook in 2015; back then my concern was more about how I consumed information on the internet. I looked for alternatives to Facebook, which had become my way of reading the news and passing it along.

The privacy concerns started almost at the same time. Posts like this one from Splinter in 2016 or this one from Gizmodo in 2017 showed that Facebook’s friend-recommendation algorithms had far more information than the company let on.

Then the whole Cambridge Analytica scandal gained momentum, followed shortly by a mountain of even worse revelations, and the #DeleteFacebook campaign started to take off. A New York Times article describes how Facebook fought the scandals through lobbying and by smearing other companies.

Still, one thing that always bears remembering is that Facebook owns Instagram and WhatsApp. These days some people already say that Facebook’s purchase of Instagram was the biggest regulatory failure of the last decade.

Leaving Facebook was an important step, but to keep in touch with other people it is always very hard to escape WhatsApp and Instagram. The truth is that the ideal would be for Facebook (and Google, while we’re on the subject) to go through a process similar to the antitrust case Microsoft faced in the late 90s. The court-ordered breakup of the company was overturned on appeal, but the case still gave breathing room to other browsers such as Firefox and, later, Chrome, which could finally bring change to a web that until then was basically built for Internet Explorer.

However, since we’re talking about applications that are social by nature, individual actions like #DeleteFacebook can indeed take power away from the network as a whole. The proof is how Facebook has already started shifting its attention to Instagram in various ways, such as testing notifications inside Instagram telling you that people you follow had posted photos on Facebook. Meanwhile, WhatsApp is expected to get ads soon.

All this shows how Facebook intends to keep extending its influence to its other apps, in an attempt to keep control over its users’ data.

That is why one of my resolutions for 2019 is to try to abandon Instagram. My plan is to move more and more to the Fediverse, now that Pixelfed, an Instagram alternative, is starting to take shape.

It remains to be seen whether the move will be possible. How about joining me on the Fediverse? 😉

Why does decentralization matter?

The Depressing Phenomenon of Men Who Ask Their Dates No Questions

They do, however, talk a lot about snowboarding, ‘Mad Men,’ Socrates, their own penises, Amnesty International, mushrooms, foot fetishes, monogamy, war and trash bags

Nikki, a 22-year-old journalism student from Minneapolis, is telling me about the worst date she’s ever been on, with a man called Athens she met at college. “He talked about his goals, his week, his career, his meditation, his favorite books, his respect for ‘real’ musicians and how most people pronounce ‘namaste’ wrong,” she says. Nikki waited the entire date, in vain, to be asked a single question about herself while Athens raved about philosophy, monogamy, wanting to live in a van and how acid could lead to a higher sense of self. “He texted me the next day about how much fun the date was,” she continues, “and he spelled my name wrong in the text.”

Nikki’s experience is bleakly funny, but it’s far from an anomaly. In the past week, I’ve heard from more than 250 women, men and non-binary people about their experiences with men asking them zero questions on dates. For example, Diana, a 25-year-old New Zealander currently based in Indiana, recently went on a date with the man who fixed her dishwasher. Assuming she was from Australia, he monologued about snakes, Steve Irwin and prison colonies while ordering pork nachos for the two of them (Diana is a vegetarian). After several hours of unidirectional conversation, Diana hadn’t been asked to share a single personal detail. “He didn’t ask me anything,” she tells me. “Like, not one thing. To this day, I’m not sure if he knows my name.”

Some of these men went into excruciating detail about dull topics while their dates sat across from them uninterrogated about their own jobs, dreams, values, favorite TV shows and best jokes. Vanessa, a 49-year-old consultant in Wellington, tells me about a date who treated her to a speech about his new office layout without learning a single detail about her. “He talked about how Bryan at work had got a desk next to the window, which was obviously a travesty,” she says. “Then he explained at length how his phone charger wouldn’t fit the electrical plug on his desk.” I heard from people whose dates — all men — Chromecasted their haircut pictures, performed feeble magic tricks, sang songs, broadcast the date on Instagram, adopted the downward dog position, watched the bar TV or pulled out their phones and began texting; anything but ask a solitary question of their dates in return, most of whom had been sitting like free therapists for hours.

To add insult to injury, many of the women who shared these stories with me said that the men told them later that they felt the dates had gone swimmingly, often asking for a second. This makes sense: being able to speak about oneself freely and without interruption to a patient, attentive audience is a service that usually costs upwards of $150 a session. If some smart, attractive social media editor from Ohio is willing to act as a free therapist for a few hours — and as a semi-relevant aside, almost all of these men refused to pick up the check for dinner — it’s no wonder the same men were lining up for more. As Anna, another woman I spoke to about her zero-question date, puts it: “Of course he thought the date went well. He’d been able to talk about himself uninterrupted for hours, while I looked on bored.”

Most of the people I spoke to about this phenomenon were women, but several gay men and non-binary people had near-identical experiences with romantic prospects who asked them no questions. “That happens so frequently dating other queer or gay men,” Kyle Turner, a 24-year-old freelance writer based in Brooklyn, tells me. “I spend a majority of the time asking them questions and they rarely return the favor, so at a certain point, I either try to slip in things about myself in response or give up.” Several women told me that, at a certain point, they began to treat the lack of reciprocity like a game, waiting in amusement to see how long it might take to be asked something about themselves. “I invented a bad first date drinking game,” Allie, a 27-year-old organizer from the Bay Area, tells me. “See how many sips and songs you can get through before he stops talking.” The date typically ends with Allie drunk, bemused and still a stranger to her date, despite her being treated to pretty much his entire inner world.

When I asked these ignored daters to hazard a guess as to the cause of this self-absorption, I got a variety of responses. Some thought it may have been nerves, while others felt men in general were more likely to view dates as a personal marketing exercise (“Here’s why you should find me attractive”) rather than an opportunity to get to know a romantic prospect. For a professional opinion, I spoke to Elise Franklin, a psychotherapist based in L.A., who tells me that the nerves hypothesis has limited applicability. “Sure, it can definitely be nerves for some,” she says. “I know I ramble when I’m nervous, and that’s common.” However, she says that a more significant explanation for the phenomenon is narcissism; a personality trait more common in men than women. “Narcissists can’t tolerate being told, ‘Your feelings don’t match my feelings,’” she explains. “To them, their feelings are everyone’s feelings — if I feel this way, then you feel this way, and if I’m interested in this, you are too.”

“Narcissism is encouraged in men,” Franklin continues. “Men are discouraged from mirroring their parents, and other members of society in general.” Because of this, she says, men are more likely to end up in the position of the oblivious, raving dater than women are. “Women are, in general, expected to be people pleasers,” she says. “We’ve learned our worth through social currency, and we’ve been the understanding ear for centuries.” She points out that her own listening profession, therapy, is dominated by women — the American Psychological Association found that there were 2.1 female psychologists for every male, and in less professionalized roles such as counseling, the gender gap is even larger.

Is this really such a gendered phenomenon, though? Aren’t women just as capable of being bloviating, self-absorbed bores? Yes, but with the significant proviso that social attitudes to gender mean that narcissism is tolerated in men but punished in women — an argument made by Jeffrey Kluger in his 2014 book The Narcissist Next Door, and confirmed in part by studies that show men interrupt women more than the reverse and that listener bias means even when men and women are speaking equal amounts, women are perceived as speaking 55 percent more and men 45 percent less.

As far as I’m aware, there’s no statistically significant data on this topic, and it’s a phenomenon that receives little media attention or academic inquiry. But my Twitter DMs and Gmail inbox are swollen with hundreds of anecdotes, all of which make one thing clear: There’s no shortage of men more willing to wax lyrical about snowboarding, Mad Men, Socrates, their own penises, Amnesty International, mushrooms, foot fetishes, monogamy and war (and to sing songs, strike yoga poses, share the contents of their entire camera rolls and perform magic tricks) than to ask the flesh-and-blood women and men they’re presently on a date with a single question about themselves.

The kicker? Most of them walk away thinking they nailed it.

The post The Depressing Phenomenon of Men Who Ask Their Dates No Questions appeared first on MEL Magazine.

Round and round. A fear submitted by Alec to Deep Dark Fears -...

Round and round. A fear submitted by Alec to Deep Dark Fears - thanks!

My two Deep Dark Fears books are available now from your local bookstore, Amazon, Barnes & Noble, Book Depository, iBooks, IndieBound, and wherever books are sold. You can find more information here!

Mastodon 2.6 released

Mastodon quick start guide

Setting up the development environment for Mastodon on Arch Linux

Well, in the last post I described how to run a Mastodon instance using Arch Linux. But what if you also wanted to contribute to Mastodon?

I still plan to write maybe a small demo on how to get your hands dirty on Mastodon’s codebase, maybe fixing a small bug, but before that we need to have the development environment up and working!

Now, as is the case with the guide on how to run your instance, this guide is very similar to the official guide, and when in doubt you should double-check the official guide because it’s more likely to be up to date. This guide is also very similar to the one on running an instance; I mean, it’s the same software, right?

There is also an official guide to setting up your environment using Vagrant, which might be easier if you have enough resources to run a VM side by side with your environment and/or you don’t run Linux.

This guide is focused on Mastodon, but most of the setup done here will work for other Ruby on Rails projects you might want to contribute to.

This was last updated on 31st of January, 2019.

Note on the choices made in this guide

The official guide recommends rbenv, but I’m more used to rvm. rbenv is likely to be more lightweight, so if you don’t have a preference, you might want to stick to rbenv and ruby-build when installing ruby.

Since this is a development setup, I’m not mentioning any security concerns.

⚠️ Do not use this guide for running a production instance. ⚠️

Refer to how to run a mastodon instance using Arch Linux instead.

Questions are super welcome, you can contact me using any of the methods listed in the about page. Also if you notice that something doesn’t seem right, don’t hesitate to hit me up.

As with the other guide, I tested the steps in this guide on a virtual machine and they should work if you copy-paste them. Things might not work well if your computer has less than 2 GB of RAM.

On this page

- General Mastodon development tips

- Dependencies

- PostgreSQL configuration

- Redis

- Setting up ruby and node

- Cloning the repo and installing dependencies

- Working on master

- Tests

- Other useful commands

- Troubleshooting

General Mastodon development tips

From the official guide:

You can use a localhost->world tunneling service like ngrok if you want to test federation, however that should not be your primary mode of operation. If you want to have a permanently federating server, set up a proper instance on a VPS with a domain name, and simply keep it up to date with your own fork of the project while doing development on localhost.

Ngrok and similar services give you a random domain on each start up. This is good enough to test how the code you’re working on handles real-world situations. But as soon as your domain changes, for everybody else concerned you’re a different instance than before.

Generally, federation bits are tricky to work on for exactly this reason - it’s hard to test. And when you are testing with a disposable instance you are polluting the databases of the real servers you’re testing against, usually not a big deal but can be annoying. The way I have handled this so far was thus: I have used ngrok for one session, and recorded the exchanges from its web interface to create fixtures and test suites. From then on I’ve been working with those rather than live servers.

I advise to study the existing code and the RFCs before trying to implement any federation-related changes. It’s not that difficult, but I think “here be dragons” applies because it’s easy to break.

If your development environment is running remotely (e.g. on a VPS or virtual machine), setting the REMOTE_DEV environment variable will swap your instance from using “letter opener” (which launches a local browser) to “letter opener web” (which collects emails and displays them at /letter_opener).

When trying to fix a bug or implement a new feature, it is a good idea to create a new branch off master and then submit your pull request from that branch.

A good way to see that your environment is working as it should is to check out the latest stable release (for instance, at the time of writing the latest stable release is v2.7.1) and then run the tests as suggested in the Tests section. They should all pass because the tests in stable releases should always be working.
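As a rough sketch of that check, the helper below picks the highest plain vX.Y.Z tag from a list of tags. The function name latest_stable_tag is made up for this example, and it assumes that release candidates carry a suffix (something like v2.7.0rc1) while stable releases do not, and that your sort supports -V (GNU coreutils does).

```shell
# Hypothetical helper: read tag names on stdin and print the highest
# plain vX.Y.Z tag, skipping anything with a suffix such as rc1.
latest_stable_tag() {
  grep -E '^v[0-9]+\.[0-9]+\.[0-9]+$' | sort -V | tail -n 1
}

# Usage, inside your Mastodon checkout:
#   git fetch --tags
#   git checkout "$(git tag | latest_stable_tag)"
#   rspec
```

Remember to go back to your own branch afterwards, and to run bundle install or the migrations if the release asks for them.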

Dependencies

Since we’re trying to run the same software as in the production guide, we’ll need mostly the same dependencies. This is what we’ll need:

- postgresql: The SQL database used by Mastodon

- redis: Used by Mastodon as an in-memory data store

- ffmpeg: Used by Mastodon for conversion of GIFs to MP4s

- imagemagick: Used by Mastodon for image-related operations

- protobuf: Used by Mastodon for language detection

- git: Used for version control

- python2: Used by gyp, a node tool that builds native addon modules for node.js

Besides those, it’s a good idea to install the base-devel group, since it comes with gcc and some ruby modules need to compile native extensions.

Now, you can install those with:

sudo pacman -S postgresql redis ffmpeg imagemagick protobuf git python2 base-devel

PostgreSQL configuration

Take a look at the Arch Linux wiki page about PostgreSQL. The first thing to do is to initialize the database cluster. This is done with:

sudo -u postgres initdb --locale en_US.UTF-8 -E UTF8 -D '/var/lib/postgres/data'

If you want to use a different locale, there’s no problem; just replace en_US.UTF-8 in the command above.

After this completes, you can then do

sudo systemctl start postgresql # will start postgresql

You will need to start postgresql every time you want to use it for development. You could also enable it so it starts with your system if you prefer, but it will be running and using resources even when you don’t need it.

Now that postgresql is running, we can create your user in postgresql:

# Launch psql as the postgres user

sudo -u postgres psql

In the prompt that opens, run the command below, replacing the placeholder with the username you use on your system.

-- Creates user with SUPERUSER permission level

CREATE USER <your username here> SUPERUSER;

The SUPERUSER level will let you do anything without having to change users. With great powers…
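If you would rather skip the interactive prompt, PostgreSQL also ships a createuser command that does the same thing. The wrapper below is only a sketch: the function name create_dev_db_user is made up for this example, and it defaults to the name reported by id -un when no argument is given.

```shell
# Hypothetical helper: create a PostgreSQL superuser role without opening psql.
# With no argument it falls back to the current system user name.
create_dev_db_user() {
  local name="${1:-$(id -un)}"
  sudo -u postgres createuser --superuser "$name"
}

# Usage: create_dev_db_user
```

As with the SQL version, this is only appropriate on a development machine.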

Redis

We also need to start redis. Same as postgresql:

sudo systemctl start redis # will start redis

As with postgres, you can enable it too to make it start with the system, but personally I prefer to start on demand.
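If you start things on demand like I do, a small helper can save some typing. This is just a sketch: the function name start_dev_services is made up, and it relies only on systemctl is-active and systemctl start.

```shell
# Hypothetical helper: start each named systemd unit only if it is not
# already active.
start_dev_services() {
  local unit
  for unit in "$@"; do
    if systemctl is-active --quiet "$unit"; then
      echo "$unit is already running"
    else
      sudo systemctl start "$unit"
    fi
  done
}

# Usage: start_dev_services postgresql redis
```

You could put the function in your ~/.bash_profile and call it before each coding session.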

Setting up ruby and node

This part is very similar to the production guide, so I’ll copy and paste a bit:

The first step is to install rvm, which will be used for configuring ruby. For that we’ll follow the instructions at rvm.io. Before running the following command, visit rvm.io and check which keys need to be added with gpg --keyserver hkp://keys.gnupg.net --recv-keys.

\curl -sSL https://get.rvm.io | bash -s stable

After that, we’ll have rvm. You will see that to use rvm in the same session you need to execute additional commands:

source $HOME/.rvm/scripts/rvm

With rvm installed, we can then install the ruby version that Mastodon uses:

rvm install 2.6.1

Now, this will take some time, drink some water, stretch and come back.

Similarly, we will install nvm for managing which node version we’ll use.

curl -o- https://raw.githubusercontent.com/creationix/nvm/v0.33.11/install.sh | bash

Refer to the nvm GitHub page for the latest version.

You will also need to run

export NVM_DIR="$HOME/.nvm"

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh" # This loads nvm

[ -s "$NVM_DIR/bash_completion" ] && \. "$NVM_DIR/bash_completion" # This loads nvm bash_completion

And add these same lines to ~/.bash_profile

And then to install the node version we’re using:

nvm install 8.11.3

And to install yarn:

npm install -g yarn

While we’re at it, we also need to install bundler:

gem install bundler

And with that we have ruby and npm dependencies ready to go.

Cloning the repo and installing dependencies

You need to clone the repo somewhere on your computer. I usually clone my projects in a source folder in my home directory; if you do it differently, change the following instructions accordingly.

cd ~/source

git clone https://github.com/tootsuite/mastodon.git

And then, cd ~/source/mastodon and we will install the dependencies of the project:

bundle install # install ruby dependencies

yarn install --pure-lockfile # install node dependencies

This will also take a while, try to relax a bit, have you listened to your favorite song today? 🎶

Since we created the postgres user before, we can set up the development database using:

bundle exec rails db:setup

This will use the default development configuration to set up the database, which means: no password, same user as your username, and a database named mastodon_development on localhost.

In development mode the database is set up with an admin account for you to test with. The email address will be admin@YOURDOMAIN (e.g. admin@localhost:3000) and the password will be mastodonadmin.

Now, you have two options.

Run each service separately

If you checked out the guide to run an instance, you probably noticed that mastodon has three parts: A web service, a sidekiq service to run background jobs and a streaming service. In development you need those three components too, plus the webpack development server, which will compile assets (javascript, css) as needed. In production we don’t need webpack running all the time because we compile the assets only once after we update Mastodon.

To run those separately, you will need one window for each, since each of those holds your terminal while it’s running.

To run the web server:

bundle exec puma -C config/puma.rb

To run sidekiq:

bundle exec sidekiq

To run the streaming service:

PORT=4000 yarn run start

And to run webpack:

./bin/webpack-dev-server --listen-host 0.0.0.0

All of those should start immediately, except for the webpack server, which compiles the assets before starting.

To check that everything is working as expected, open your browser at http://localhost:3000 and you should see the Mastodon landing page!
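Since webpack takes a while on first start, it can be handy to wait for the web server from a terminal instead of reloading the browser. The function below is only a sketch; wait_for_url is a made-up name, and it assumes curl is installed and that the page answers with a successful status once it is ready.

```shell
# Hypothetical helper: poll a URL once per second until it answers
# successfully or the attempts (default 30) run out.
wait_for_url() {
  local url="$1" tries="${2:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    if curl -fsS -o /dev/null "$url" 2>/dev/null; then
      echo "$url is up"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "$url did not answer after $tries attempts" >&2
  return 1
}

# Usage: wait_for_url http://localhost:3000
```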

Run everything using Foreman

Now, most of the time this method is more practical. Running each service by itself is useful when one of them is not starting and you want to see what the error is, but most of the time you’ll want to start everything at once so that you can start coding away. In which case, first you’ll want to install foreman:

gem install foreman

And then, when you need to start your dev environment you can do:

foreman start -f Procfile.dev

Working on master

When working on master, the steps are similar to when updating an instance, but they happen much more frequently since master changes much more frequently.

This means, every time you pull changes into your computer (for instance, when you do git pull origin master), you might need to:

# Update any gems that were changed

bundle install

# Update any node packages that were changed

yarn install --pure-lockfile

# Update the database to the latest version

bin/rails db:migrate RAILS_ENV=development

Now, you don’t need to run all of them every time; you will notice when one of them is needed. How?

Bundler complains like this:

Could not find proper version of railties (5.2.0) in any of the sources

Run `bundle install` to install missing gems.

The name of the gem and version will change, but this means that one of your dependencies is not up to date and you need to run bundle install again.

If the database is missing a migration, rails will complain with:

ActiveRecord::PendingMigrationError - Migrations are pending. To resolve this issue, run:

bin/rails db:migrate RAILS_ENV=development

This will appear on your console, but also on your browser.

If the ruby being used in the project is updated, you will also see some complaints from rvm (in this example, with a hypothetical ruby 2.6.2):

$ cd .

Required ruby-2.6.2 is not installed.

To install do: 'rvm install "ruby-2.6.2"'

In that case we need to do the same as we did to install it the first time, that is:

rvm install 2.6.2

And since rvm manages gems by ruby version, you’ll need to install the dependencies again using bundle install.
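Instead of waiting for those complaints, you can also guess which steps are needed from the files a pull changed. The function below is a sketch under a few assumptions: steps_for_changes is a made-up name, gems are tracked in Gemfile and Gemfile.lock, node packages in package.json and yarn.lock, and migrations live under db/migrate/. It only prints the commands, it does not run them.

```shell
# Hypothetical helper: read changed file paths on stdin and print the
# update commands they call for.
steps_for_changes() {
  local files
  files="$(cat)"
  if printf '%s\n' "$files" | grep -qE '^(Gemfile|Gemfile\.lock)$'; then
    echo "bundle install"
  fi
  if printf '%s\n' "$files" | grep -qE '^(package\.json|yarn\.lock)$'; then
    echo "yarn install --pure-lockfile"
  fi
  if printf '%s\n' "$files" | grep -q '^db/migrate/'; then
    echo "bin/rails db:migrate RAILS_ENV=development"
  fi
}

# Usage, right after a pull that brought in new commits:
#   git diff --name-only ORIG_HEAD HEAD | steps_for_changes
```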

Tests

Tests in the mastodon project live in the spec folder. Tests also use migrations, so if the database was updated since you last ran tests, you will need to run something like this:

bin/rails db:migrate RAILS_ENV=test

But when you try to run tests with a database missing migrations, you’ll get an error from Rails that will explain exactly that.

To run all the tests, you need to do:

rspec

To run only one test, you can run it like so:

rspec spec/validators/status_length_validator_spec.rb
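When you are editing a single file, it helps to jump straight to its spec. The sketch below assumes the usual Rails layout where app/some_dir/name.rb is tested by spec/some_dir/name_spec.rb, which matches the example above but is not guaranteed for every file; spec_path_for is a made-up name.

```shell
# Hypothetical helper: map app/<dir>/<name>.rb to spec/<dir>/<name>_spec.rb.
spec_path_for() {
  local path="${1#app/}"
  echo "spec/${path%.rb}_spec.rb"
}

# Usage:
#   rspec "$(spec_path_for app/validators/status_length_validator.rb)"
```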

Other useful commands

If you add a new string that needs to be translated, you can run

yarn manage:translations

to update the localization files. This is needed so that Weblate can inform translators that there are new strings to be translated in other languages.

You can check code quality using

rubocop

Keep in mind that it might complain about code violations that you did not introduce, but you should always try not to introduce new violations.

Troubleshooting

RVM says it’s not a function

Follow the recommended instructions at https://rvm.io/integration/gnome-terminal

Mastodon has no css

If mastodon has no css and you see something like #<Errno::ECONNREFUSED: Failed to open TCP connection to localhost:3035 (Connection refused - connect(2) for "::1" port 3035)> in your console, the issue is where webpacker is trying to connect. You can fix it by changing config/webpacker.yml.

Instead of

dev_server:

host: localhost

Use

dev_server:

host: 127.0.0.1
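If you prefer not to edit the file by hand, the same change can be sketched with sed. The function name use_ipv4_dev_server is made up for this example, and it assumes the host line looks exactly like the one shown above; it keeps a .bak copy so you can compare or revert.

```shell
# Hypothetical helper: replace "host: localhost" with "host: 127.0.0.1"
# in the given file, leaving the original next to it as <file>.bak.
use_ipv4_dev_server() {
  sed -i.bak 's/^\([[:space:]]*host:\) localhost[[:space:]]*$/\1 127.0.0.1/' "$1"
}

# Usage: use_ipv4_dev_server config/webpacker.yml
```

Keep in mind that this file is tracked by git, so avoid committing the change by accident.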