Adam Victor Brandizzi

Shared posts

How the Ballpoint Pen Changed Handwriting - The Atlantic

Adam Victor Brandizzi: Via Slate Star Codex.

Recently, Bic launched a campaign to “save handwriting.” Named “Fight for Your Write,” it includes a pledge to “encourage the act of handwriting” in the pledge-taker’s home and community, and emphasizes putting more of the company’s ballpoints into classrooms.

As a teacher, I couldn’t help but wonder how anyone could think there’s a shortage. I find ballpoint pens all over the place: on classroom floors, behind desks. Dozens of castaways collect in cups on every teacher’s desk. They’re so ubiquitous that the word “ballpoint” is rarely used; they’re just “pens.” But despite its popularity, the ballpoint pen is relatively new in the history of handwriting, and its influence on popular handwriting is more complicated than the Bic campaign would imply.

The creation story of the ballpoint pen tends to highlight a few key individuals, most notably the Hungarian journalist László Bíró, who is credited with inventing it. But as with most stories of individual genius, this take obscures a much longer history of iterative engineering and marketing successes. In fact, Bíró wasn’t the first to develop the idea: The ballpoint pen was originally patented in 1888 by an American leather tanner named John Loud, but his idea never went any further. Over the next few decades, dozens of other patents were issued for pens that used a ballpoint tip of some kind, but none of them made it to market.

These early pens failed not in their mechanical design, but in their choice of ink. The ink used in a fountain pen, the ballpoint’s predecessor, is thinner to facilitate better flow through the nib—but put that thinner ink inside a ballpoint pen, and you’ll end up with a leaky mess. Ink is where László Bíró, working with his chemist brother György, made the crucial changes: They experimented with thicker, quick-drying inks, starting with the ink used in newsprint presses. Eventually, they refined both the ink and the ball-tip design to create a pen that didn’t leak badly. (This was an era in which a pen could be a huge hit because it only leaked ink sometimes.)

The Bírós lived in a troubled time, however. The Hungarian author György Moldova writes in his book Ballpoint about László’s flight from Europe to Argentina to avoid Nazi persecution. While his business deals in Europe were in disarray, he patented the design in Argentina in 1943 and began production. His big break came later that year, when the British Air Force, in search of a pen that would work at high altitudes, purchased 30,000 of them. Soon, patents were filed and sold to various companies in Europe and North America, and the ballpoint pen began to spread across the world.

Businessmen made significant fortunes by purchasing the rights to manufacture the ballpoint pen in their country, but one is especially noteworthy: Marcel Bich, the man who bought the patent rights in France. Bich didn’t just profit from the ballpoint; he won the race to make it cheap. When it first hit the market in 1946, a ballpoint pen sold for around $10, roughly equivalent to $100 today. Competition brought that price steadily down, but Bich’s design drove it into the ground. When the Bic Cristal hit American markets in 1959, the price was down to 19 cents a pen. Today the Cristal sells for about the same amount, despite inflation.

The ballpoint’s universal success has changed how most people experience ink. Its thicker ink was less likely to leak than that of its predecessors. For most purposes, this was a win—no more ink-stained shirts, no need for those stereotypically geeky pocket protectors. However, thicker ink also changes the physical experience of writing, not necessarily all for the better.

I wouldn’t have noticed the difference if it weren’t for my affection for unusual pens, which brought me to my first good fountain pen. A lifetime writing with the ballpoint and minor variations on the concept (gel pens, rollerballs) left me unprepared for how completely different a fountain pen would feel. Its thin ink immediately leaves a mark on paper with even the slightest, pressure-free touch to the surface. My writing suddenly grew extra lines, appearing between what used to be separate pen strokes. My hand, trained by the ballpoint, expected that lessening the pressure from the pen was enough to stop writing, but I found I had to lift it clear off the paper entirely. Once I started to adjust to this change, however, it felt like a godsend; a less-firm press on the page also meant less strain on my hand.

My fountain pen is a modern one, and probably not a great representation of the typical pens of the 1940s—but it still has some of the troubles that plagued the fountain pens and quills of old. I have to be careful where I rest my hand on the paper, or risk smudging my last still-wet line into an illegible blur. And since the thin ink flows more quickly, I have to refill the pen frequently. The ballpoint solved these problems, giving writers a long-lasting pen and a smudge-free paper for the low cost of some extra hand pressure.

As a teacher whose kids are usually working with numbers and computers, handwriting isn’t as immediate a concern to me as it is to many of my colleagues. But every so often I come across another story about the decline of handwriting. Inevitably, these articles focus on how writing has been supplanted by newer, digital forms of communication—typing, texting, Facebook, Snapchat. They discuss the loss of class time for handwriting practice that is instead devoted to typing lessons. Last year, a New York Times article—one that’s since been highlighted by Bic’s “Fight for Your Write” campaign—brought up an fMRI study suggesting that writing by hand may be better for kids’ learning than using a computer.

I can’t recall the last time I saw students passing actual paper notes in class, but I clearly remember students checking their phones (recently and often). In his history of handwriting, The Missing Ink, the author Philip Hensher recalls the moment he realized that he had no idea what his good friend’s handwriting looked like. “It never struck me as strange before… We could have gone on like this forever, hardly noticing that we had no need of handwriting anymore.”

No need of handwriting? Surely there must be some reason I keep finding pens everywhere.

Of course, the meaning of “handwriting” can vary. Handwriting romantics aren’t usually referring to any crude letterform created from pen and ink. They’re picturing the fluid, joined-up letters of the Palmer method, which dominated first- and second-grade pedagogy for much of the 20th century. (Or perhaps they’re longing for a past they never actually experienced, envisioning the sharply angled Spencerian script of the 1800s.) Despite the proliferation of handwriting eulogies, it seems that no one is really arguing against the fact that everyone still writes—we just tend to use unjoined print rather than a fluid Palmerian style, and we use it less often.

I have mixed feelings about this state of affairs. It pained me when I came across a student who was unable to read script handwriting at all. But my own writing morphed from Palmerian script into mostly print shortly after starting college. Like most gradual changes of habit, I can’t recall exactly why this happened, although I remember the change occurred at a time when I regularly had to copy down reams of notes for mathematics and engineering lectures.

In her book Teach Yourself Better Handwriting, the handwriting expert and type designer Rosemary Sassoon notes that “most of us need a flexible way of writing—fast, almost a scribble for ourselves to read, and progressively slower and more legible for other purposes.” Comparing unjoined print to joined writing, she points out that “separate letters can seldom be as fast as joined ones.” So if joined handwriting is supposed to be faster, why would I switch away from it at a time when I most needed to write quickly? Given the amount of time I spend on computers, it would be easy for an opinionated observer to count my handwriting as another victim of computer technology. But I knew script, I used it throughout high school, and I shifted away from it during the time when I was writing most.

My experience with fountain pens suggests a new answer. Perhaps it’s not digital technology that hindered my handwriting, but the technology that I was holding as I put pen to paper. Fountain pens want to connect letters. Ballpoint pens need to be convinced to write, need to be pushed into the paper rather than merely touch it. The No.2 pencils I used for math notes weren’t much of a break either, requiring pressure similar to that of a ballpoint pen.

Moreover, digital technology didn’t really take off until the fountain pen had already begun its decline, and the ballpoint its rise. The ballpoint became popular at roughly the same time as mainframe computers. Articles about the decline of handwriting date back to at least the 1960s—long after the typewriter, but a full decade before the rise of the home computer.

Sassoon’s analysis of how we’re taught to hold pens makes a much stronger case for the role of the ballpoint in the decline of cursive. She explains that the type of pen grip taught in contemporary grade school is the same grip that’s been used for generations, long before everyone wrote with ballpoints. However, writing with ballpoints and other modern pens requires that they be placed at a greater, more upright angle to the paper—a position that’s generally uncomfortable with a traditional pen hold. Even before computer keyboards turned so many people into carpal-tunnel sufferers, the ballpoint pen was already straining hands and wrists. Here’s Sassoon:

We must find ways of holding modern pens that will enable us to write without pain. …We also need to encourage efficient letters suited to modern pens. Unless we begin to do something sensible about both letters and penholds we will contribute more to the demise of handwriting than the coming of the computer has done.

I wonder how many other mundane skills, shaped to accommodate outmoded objects, persist beyond their utility. It’s not news to anyone that students used to write with fountain pens, but knowing this isn’t the same as the tactile experience of writing with one. Without that experience, it’s easy to continue past practice without stopping to notice that the action no longer fits the tool. Perhaps “saving handwriting” is less a matter of invoking blind nostalgia and more a process of examining the historical use of ordinary technologies as a way to understand contemporary ones. Otherwise we may not realize which habits are worth passing on, and which are vestiges of circumstances long since past.

In Defense of Mobile Homes

Consider the trailer park.

Or, better yet, reconsider the trailer park, whose stereotypical association with peeling paint and unemployed seniors is outdated.

The quiet story of trailer parks over the last two decades is their reinvention as “mobile home communities” by investors who saw a lucrative opportunity in providing housing to low-income Americans.

The billionaire investor and real estate mogul Sam Zell recently said of his investment fund that owns mobile home communities—some of which advertise amenities like pools and tennis clubs—that he doesn’t “know of any stock or property I’m involved in that has a better prospect.”

Since 2003, Warren Buffett has owned Clayton Homes, which builds houses destined for trailer parks across the country. At 1400 square feet, many of the homes don’t look like they were delivered on the back of a truck.

Frank Rolfe, a Stanford graduate who teaches people how to profit in the mobile home industry, buys dilapidated trailer parks, cleans them up, and rents mobile homes to the working poor. A 2014 New York Times Magazine article reported that he and a partner earned a 25% return on their investment.

Trailer parks’ appeal to these investors is simple. Millions of Americans struggle with rent payments, but still want a lawn. For them, mobile homes are the cheapest form of housing available. At the same time, it’s rare for someone to build a new mobile home park, because no homeowner wants a trailer park nearby. An industry with healthy demand but a fixed supply attracts the country’s capitalists.

These capitalists realized that trailer parks are an undervalued asset. But maximizing profits at a mobile home park isn’t pretty. It means taking advantage of the lack of supply and the expense of moving a mobile home to raise rents every year. These investors avoid states with rent control, and they’re attuned to just how much a family can pay without becoming insolvent.

But in their pursuit of profit, investors also dramatically increased the stock of well-managed, affordable housing. And they’d create a lot more—at better prices—if America’s homeowners weren’t dead set against trailer parks.

Building the Affordable House

During his presidency, Bill Clinton championed efforts to “steadily expand the dream of homeownership to all Americans.” George W. Bush, too, promoted an “ownership society.” Helping Americans to own their homes, he said, would “put light where there’s darkness.”

Neither president could change the reality that many Americans cannot afford a house. The percentage of homeowners increased from 64% to a peak of 69% during their tenures. But the bump relied on no-money-down mortgages and irresponsible lending—the phenomenon satirized in The Big Short when the character played by Steve Carell tells a stripper in Florida that she really shouldn’t have five home loans. (In real life, it happened in Vegas.) Since the housing bubble burst, the homeownership rate has returned to 64%.

Why can’t we build affordable homes? America is full of gargantuan houses that are customized like each owner is a reality TV contestant. It sure seems like more people could afford homes if we built them smaller and more efficiently.

But according to Witold Rybczynski, an emeritus professor of architecture at the University of Pennsylvania, that is not the case.

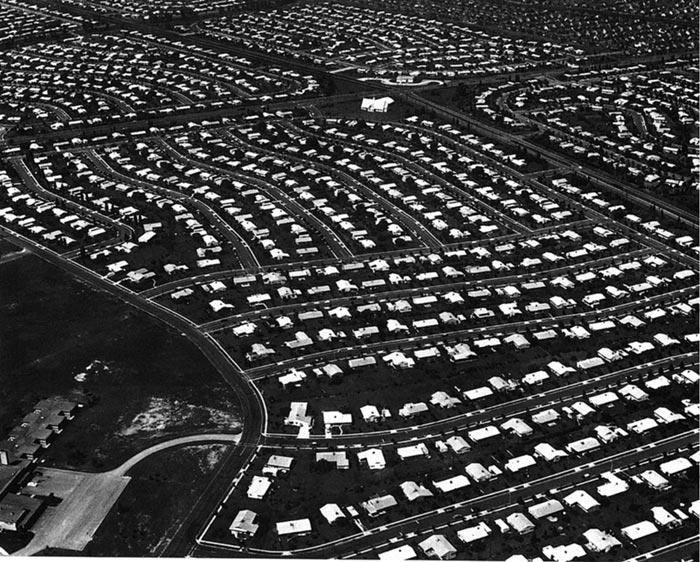

An old picture of Levittown, Pennsylvania, which features Levitt and Sons’ standardized, one-story homes.

Writing in The Wilson Quarterly, Rybczynski cites the famous case of the “Levittowns” built for newly married GIs after World War II. Levitt and Sons constructed houses like Henry Ford built cars. “Teams of workers performed repetitive tasks,” Rybczynski writes, and every house was a one-story with the kitchen and bathroom facing the street to reduce the length of the pipes.

Each “Levittowner” was identical except for its paint job, and thanks to the cold efficiency of the process, they cost $9,900 in the 1950s. That’s around $90,000 in 2016 dollars. A new home today is more than twice as large and sells for $300,000.

So if we made ‘em like we did in the old days, could we slash the price? Rybczynski says no.

The modern McMansion seems like an expensive, bespoke product. But its construction is actually a triumph of industrial efficiency. Windows, doors, and other parts arrive prefabricated, Rybczynski writes, so labor costs have actually halved since 1949. Levitt and Sons spent $4 to $5 per square foot building Levittowners, and, adjusted for inflation, builders today spend the same amount.

Instead the problem is almost wholly that land is too expensive. Reduce the size of a new, modern house by 50%, Rybczynski notes, and houses in metropolitan areas will still cost over $200,000.

That’s the secret to the extreme affordability of a mobile home—take land out of the equation.

The Extreme Profitability of Mobile Homes

Frank Rolfe is the landlord for tens of thousands of people. Along with a business partner and his backers in private equity, he co-owns mobile home communities in 16 states.

But, he explains, “a mobile home park is by definition a parking lot. Legally, our parks are no different from a parking lot by an airport.”

This is why used mobile homes only cost $10,000-$20,000. They make it possible for someone to buy a home but not the earth it’s parked on. As a Times profile of Rolfe reported, his average tenant pays $250 to $300 in monthly rent. If the tenant doesn’t own her home, she might pay another $200 or $300, with the option to apply half of that toward purchasing a mobile home.

“We’re the cheapest form of detached housing there is,” says Rolfe. “You can’t do cheaper.”

Harvard’s Joint Center for Housing Studies recently reported that one in five renters earns less than $15,000 a year. For this group, paying more than $400 a month in rent is unaffordable. Especially for a family, finding an apartment is tough, and renting a typical house may be impossible—the Harvard report notes that “single-family homes have among the highest median rents of any type of rental housing.”

Buying a mobile home also compares favorably with a mortgage. A tenant paying lot rent plus a rent-to-own payment spends roughly $450 to $600 a month, while according to a 2015 U.S. Census survey, only 3.4% of Americans with a mortgage paid less than $600 in monthly housing costs.

The economics of mobile homes has attracted new residents. When Times reporter Gary Rivlin attended Rolfe’s class on the trailer park industry, which Rolfe calls Mobile Home University, a manager explained that his tenants were once all retirees. But the park was now full of two-income families “making minimum wage at Taco Bell.”

So why do investors like Rolfe and Warren Buffett see gold in these cash-strapped Americans?

When a business makes boatloads of money from poor customers, the answer can be unseemly if not illegal. In the subprime auto loan industry, executives offer low-income Americans car loans with such high fees that 1 in 4 customers default. Hidden fees are common, and when a customer fails to pay, companies still profit by repossessing and re-lending the car. The LA Times found that one Kia Optima was lent out and repossessed eight times in under three years.

Warren Buffett’s mobile home construction business, Clayton Homes, has been accused of using a similar, exploitative business model. According to reporting by the Seattle Times and the Center for Public Integrity, Clayton Homes lent customers money to buy their mobile homes at above-average rates and unexpectedly added fees or changed the terms of the loan. When low income buyers fell behind on their mobile home payments, Clayton Homes profited by repossessing the house and re-selling it.

The situation at mobile home parks like Rolfe’s, however, looks more like the poor’s experience with financial services. Since their meager savings aren’t profitable for banks, they pay for services wealthier Americans get for free: a few dollars to cash a check, a few dollars to send money to an aunt. And since they can’t afford a mortgage, they pay more dearly for the land under their mobile home.

As Rivlin recounts of his time at Rolfe’s Mobile Home University, he learned that “one of the best things about investing in trailer parks is that ambitious landlords can raise the rent year after year without losing tenants. The typical resident is more likely to endure the increase than pay a trucking company the $3,000 it can easily cost to move even a single-wide trailer to another park.” A 30% increase in rent “might sound steep,” but Rolfe says residents “can always pick up extra hours.”

This realization has earned Rolfe, his partners, and his fellow mobile home moguls handsome returns: poor Americans could afford to pay much more for a taste of homeownership.

American Dream; American Reality

Rolfe bought his first trailer park in 1996 from an owner who, he told the Times, “would open the door just wearing his underwear, totally hungover.”

You’ll find more Stanford graduates like Rolfe selling software than mobile homes. But Rolfe saw that the park had unrealized financial potential. His business partner, Dave Reynolds, had the same epiphany in 1993. This has now become a formula they teach at Mobile Home University: identify promising trailer parks and buy them from their mom-and-pop owners.

But when savvy investors like Rolfe and Reynolds buy a mobile home park, they do not just passively collect rent payments. “The parks they take over tend to be in lousy shape,” Rivlin writes, “and they spend hundreds of thousands of dollars fixing them up.” They also hire tough managers who pay equal attention to keeping the grass mowed and kicking out tenants who break the law.

“There’s more money in decent than slumlording,” Rivlin quotes Rolfe telling a Mobile Home U class. He wants responsible tenants who will see the value in paying increasing rent payments.

A mobile home park in the UK. Copyright Clive Perrin and licensed for reuse.

The result is that their tenants have a safe place to live (and a lawn) at a low price point. Some parks are easily identified by the occasional beat-up trailer, but others are easy to mistake for a cookie-cutter neighborhood of small to mid-size houses. In his visits to Rolfe’s trailer parks, Rivlin was struck by how many tenants said they were satisfied with the landlord and pleased with their home.

One resident said her only complaint was “the redneck jokes I’ve been hearing since the day I moved in.”

***

The phrase affordable housing evokes New York City’s projects and government subsidies. But as emeritus architecture professor Witold Rybczynski likes to point out, affordable housing “once meant commercially built houses that ordinary working people could afford.”

A profit motive is pushing Frank Rolfe and Dave Reynolds and Sam Zell and private equity partners to invest in turning rundown trailer parks into safe, decent, affordable housing.

It’s not pretty, but it gets results. In the Times, Rivlin noted that as of 2014, Reynolds and Rolfe’s 10,000 mobile home lots made them “about equivalent in size to a public-housing agency in a midsize city.” Sam Zell’s Equity Lifestyle Properties owns around 140,000 lots.

They are responding to an unmet need. Limited government funding for affordable housing means that only one in four eligible households receive assistance. California’s governor has noted that it takes a $5 billion subsidy to provide 100 people with affordable housing in San Francisco, where units are distributed by lottery. And according to a Harvard housing report, public, affordable housing is (on average) in the worst shape of all of the country’s rental properties.

But the market mechanism that drives investors to turn trailer parks into decent, affordable housing is half broken.

One reason Rolfe, Zell, and others invest in mobile home parks is that it’s nearly impossible to build a new one. “Cities fight tooth and nail,” says Rolfe. “In Louisiana, we drew a standing room only crowd [at a public hearing] to fight an expansion of twenty lots to our trailer park.” With residents and city officials blocking construction, investors like Rolfe can raise their tenants’ rents without worrying about a competitor popping up nearby.

Rolfe is the first to say that residents’ concerns about mobile home parks are “valid.” Trailer parks drag down property values, often due to the expectation that they’ll bring crime. (“It would be nice if television showed something positive happening in a mobile home park,” Rolfe says.) Since mobile homes are cheap and taxed like cars, their owners don’t pay much in taxes even as they send their children to the local school.

“From a financial perspective,” says Rolfe, “it’s simple to see why cities don’t want mobile home parks.”

But just because it’s understandable, that doesn’t make it a good idea.

Few Americans would cop to wanting to live in a town without poor people, but that’s the effect of their actions when they oppose a trailer park or dense or affordable housing. And while residents may not want to subsidize newcomers, our tax code is progressive because rich people benefit when their taxes fund the school attended by a poor but brilliant young girl. Saying “not in my backyard” is also the exact reason that land and single-family homes are so expensive in the first place.

California governor Jerry Brown recently proposed legislation that would limit the ability of locals to block the development of new (and denser) housing. But it’s unlikely someone will champion trailer parks—even though they’ll be immediately affordable.

Politicians have good reasons to keep their distance. Unlike a typical house, mobile homes decrease in value over time, so many are worth little once families pay off their loans. Nor does a home offer much security if you can be evicted from the land it sits on. It’s also not good politics to suggest Americans settle for housing that is synonymous with “trailer trash.”

That’s too bad. Clinton and Bush’s dream of every American owning a home ended in tragedy. But driven by profits, trailer park moguls are meeting America’s fast food workers and low-income retirees where they live.

Editing Wikipedia for a decade: Gareth Owen – Wikimedia Blog

Adam Victor Brandizzi: "The problem with Wikipedia is that it only works in practice; in theory it’s a total disaster." Good one :)

Gareth Owen is one of the earliest contributors to Wikipedia—his user ID is 151, and his first edit was to create the “Hobbits” article in March 2001.

In his own words, he has seen Wikipedia grow “in just a couple of years from a sparse website to something where you could look something up with a reasonable chance of getting a non-terrible response.”

Owen, a native of North West England who now lives just outside Manchester in the United Kingdom, has been using the internet since he was a student in the early 90s. He discovered Wikipedia in its earliest days, when the site was just a “silly little spin-off” from a less-collaborative wiki called Nupedia. Owen was most active during Wikipedia’s first few years. Collaborating with people from different places on providing information to the public about topics of interest to him was his motivating force.

“I enjoyed doing research on my favourite topics,” Owen explains. “I enjoyed the collaborative process and watching people devote their time to something really worthwhile—essentially altruistically—and expecting little in return.”

So far, Owen has edited Wikipedia over 6,000 times and has created 113 new articles. He has been most interested in editing articles about music, sports and mathematics, his field of study. He has started some important articles about music, covering bands like The Beatles and The Velvet Underground, and he has rewritten articles about Bob Dylan, Miles Davis, and The Rolling Stones.

The sports category on Wikipedia includes many articles first written by Owen, such as Manchester United F.C. and Rugby World Cup. He also expanded the existing article on the Summer Olympic Games to cover the history of the Games from the beginning until Sydney 2000. These are just a few examples.

“Everything was up for grabs back then and there was so much to be done,” Owen elaborated. “If you started working on an area, you could expand an entry with a few token sentences to something with a larger overview of a big subject. And if you did a good job, Larry Sanger or Jimmy Wales would add your article to the ‘Brilliant Prose’ list, which was a pretty good feeling.”

The Wikipedia quality standards have changed over the years. The “brilliant prose” selection system has since been replaced with new criteria for selecting Wikipedia’s best articles, which are now called “featured articles.” Some of Owen’s articles that were cited in the “brilliant prose” list in the early 2000s now appear as featured articles, thanks to the efforts of other Wikipedians. Some examples include The Beatles, Sandy Koufax and Babe Ruth.

During this time, Wikipedia was very quiet with a minimal rate of spam and edit wars. Owen remembers that “the rate of editing was slow enough that only a few people would keep an eye on anon edits and correct the most egregious damage manually. Jimmy Wales would arbitrate anything that ran on too long. Obviously that didn’t scale very well, and extra layers of administrative oversight came in by the mid-2000s.”

“The George W. Bush article was a battleground even then, but I shudder to think how much admin time has been devoted to trying to impose a neutral point of view on articles about the Clinton/Trump presidential race,” he adds.

A couple of years ago, Owen and his family were surprised by a show host who quoted Owen on BBC Radio 4. The show had been discussing Wikipedia when the host John Lloyd closed by saying, “In the words of an original Wikipedian, Gareth Owen, ‘The problem with Wikipedia is that it only works in practice; in theory it’s a total disaster.’” The quote has been used several times, including once by the New York Times.

“This remark has cropped up in a number of articles and features (sometimes credited to me, sometimes others). I wonder if in 100 years it’ll be the only trace of me left on the internet.”

Samir Elsharbaty, Digital Content Intern

Wikimedia Foundation

“The decade” is a new blog series that profiles Wikipedians who have spent ten years or more editing Wikipedia. If you know of a long-time editor who we should talk to, send us an email at blogteam[at]wikimedia.org.

The Massacre at Monkey Hill

Photo from Malcolm Peaker

***

In 1932, Solly Zuckerman sat down to write a book about a baboon massacre at the London Zoo.

The carnage in question had started seven years earlier when the Zoological Society of London opened a new baboon exhibit. The enclosure, called “Monkey Hill,” was state-of-the-art: an open-air rock cliff with simian-friendly amenities designed to keep the resident primates happy and healthy.

But something had gone terribly wrong.

As soon as the first batch of hamadryas baboons was ushered onto the artificial rock face, war broke out. By the end of the decade, two-thirds had been killed.

The seven-year bloodbath was a hit with the viewing public, and the misdeeds of these murderous monkeys made a splash in papers on both sides of the Atlantic.

For Zuckerman, who dissected and studied animal carcasses at the zoo, the violence provided an important insight into primate behavior. Ape and monkey society is built on violence and sexual dominance, he theorized. Humanity’s closest relatives were creatures of chaos, lust, and slaughter. This insight launched Zuckerman’s career and defined the early scientific field of primatology.

For many, the theory also offered an appealing explanation for human behavior. In political science, psychiatry, and the popular press, the slaughter at Monkey Hill was taken as evidence of the inherent depravity of humanity.

There was just one problem: the science was bunk. Baboons do not routinely butcher one another in the wild, nor do all primates behave like hamadryas baboons.

Primatologists have long since dismissed Zuckerman’s theory of primate behavior. But for decades, the mistaken lessons of Monkey Hill defined primatology, permeated popular culture, and contributed to a widespread view of humanity as a species on the brink of mayhem.

The Benefits of Outdoor Living

Monkey Hill was supposed to save the baboons.

Until the 1920s, the London Zoo housed most of its animals indoors, in the dark, and behind bars. But the sickly, sad-looking creatures depressed the zoo visitors and dropped dead of preventable illnesses at alarming rates. The primates were of particular concern. Pneumonia, tuberculosis, and vitamin-D deficiency were the scourge of the Monkey House.

Taking a cue from German zookeepers, the London Zoological Society designed an outdoor enclosure called Monkey Hill in 1924—a place where primates could roam about and live as if they were in their native habitat. Well, nearly. The zookeepers used shelters, heaters, and UV-lights to combat the perennial gloom of fog-swaddled London.

As science writer Jonathan Burt explains, the open-air exhibit was “intended to showcase the benefits of outdoor living for animal health.” Plus, he adds, from behind the 12-foot outer wall that surrounded the enclosure, zoo visitors were afforded a much better show.

They got one.

In 1925, a shipment of ninety-seven hamadryas baboons arrived by boat from the Horn of Africa. They were all supposed to be male. As Jane Goodall’s biographer Dale Peterson writes, the zoo’s gender preference was “based on the idea that the males—big and dramatically fanged and caped, with pink buttocks—would appeal to a zoo-going public more than the smaller and less gaudy females.” But whether by neglect or indifference, six females were included in the shipment. The result was a bloodbath.

Once the exhibit opened, the males immediately went to war over access to the females. With the former outnumbering the latter 15-to-1, the competition was ferocious. Caught in a constant tug-of-war between dozens of brawny, sharp-fanged males, most of the females were killed over the coming months. Even so, the males fought over their bodies. The violence was sometimes so fierce that zookeepers had to wait days before they could scale Monkey Hill and retrieve a carcass. Within two years, nearly half of the baboons were dead.

A male hamadryas baboon. Photo by Sonja Pauen.

But rather than remove the remaining females from the enclosure, the Zoological Society of London thought that they could quell the violence by adding more. Just as the fighting began to simmer down in 1927, the zoo introduced 30 additional females and five adolescent males. The violence exploded anew.

By 1931, 64% of all the males and 92% of the females that had been brought to Monkey Hill had died. While some of the males had died of disease, virtually all of the females died violent deaths. Of the 15 infants born in the enclosure, only one managed to survive.

This all came as a shock to the zookeepers. Instead of a sanctuary, they had inadvertently created a fighting pit—a gladiator arena with sex. And there was a lot of sex. In 1929, one member of the Zoological Society worried that the constant baboon copulation—violent, polygamous, and occasionally necrophilic—would have a “demoralizing” effect on the public.

Just the opposite was true. The sex and violence on Monkey Hill was a draw for zoo visitors. The tabloid press in England took a prurient interest in the sorry state of the females on the hill, with one describing “meek, subdued, tractable creatures” who “live on the leavings and take life sadly.” In 1930, even Time magazine profiled the “wife-stealer” primates at the London Zoo.

The same year, the zoo superintendent finally removed the surviving females. It was a moral choice, but not a shrewd business decision: the violence dropped along with attendance at the exhibit. To at least one tabloid, the conclusion was obvious: “men are a peaceable race, once you eliminate the women.”

This was only the first of many flawed conclusions to be drawn from Monkey Hill.

Red in Claw and Fang

When Solly Zuckerman was hired by the London Zoo to dissect baboon carcasses, he came with experience.

Born in South Africa, where baboons were regarded as a pest, he had spent his teenage years shooting the animals for bounty and dissecting their bodies for fun. This odd adolescent hobby notwithstanding, Zuckerman had very little experience observing how baboon troops behaved in the wild. He wasn’t alone. Very little was known about the social lives of nonhuman primates.

The exhibit at Monkey Hill gave Zuckerman an opportunity to watch a group of monkeys being monkeys. What he saw, both on the hill and the autopsy table, was a revelation. After a few years of observation, lab work, and a short stint in the field back in South Africa, Zuckerman published his grand theory on primate society: The Social Life of Monkeys and Apes.

According to Zuckerman, life in the jungle is nasty, brutish, and short. All “sub-human” primate relationships fit into strict, male-dominated hierarchies that are maintained through coercion, intimidation, and bloodshed. The only thing that prevents the chest-thumping testosterone fest from descending into absolute chaos is the prospect of copulation. Whenever conflict arises within a troop, females offer sex to paper things over. When that fails, the alpha males resort to murder. Such is the way not only of baboons, wrote Zuckerman, but all non-human primates.

Male hamadryas baboons are considerably larger than the females. Photo by Ruben Undheim.

This conclusion was based on his observations at Monkey Hill, but it was also, according to Zuckerman, an inescapable result of primate biological characteristics. Males dominate females because they are bigger, and they keep females as permanent members of their troops because they are fertile year-round. “Reproductive physiology is the fundamental mechanism of society,” he wrote. Violent behavior is baked in.

The Social Life of Monkeys and Apes was published in 1932 (over the objections of the zoo management, who found Zuckerman’s lengthy descriptions of baboon sex unseemly). This Clockwork Orange-view of ape and monkey behavior dominated the field of primatology for the next three decades.

To his credit, Zuckerman was careful to say that his theory only explained non-human primate behavior. But not everyone got the memo. Social theorists, journalists, and the public at large took Monkey Hill and Zuckerman’s grand theory as a description of humanity unencumbered by law and social mores.

In their political tract, Personal Aggressiveness and War, the English politician Evan Durbin and psychologist John Bowlby used the story of Monkey Hill as evidence that human beings had a natural propensity towards war. “Fighting is infectious in the highest degree,” they wrote in 1938. “It is one of the most dangerous parts of our animal inheritance.”

This must have been a compelling conclusion at the time. This was the same year that Germany annexed Austria, laying the groundwork for the Second World War.

In the following decades, scientists came to doubt Zuckerman’s theory. But in popular culture, the idea stuck around.

In 1957, the popular science magazine New Scientist wrote of the “sadistic society of the totalitarian monkeys” on Monkey Hill and drew a parallel to the mutiny on the Bounty. This was the infamous maritime rebellion in which men, adrift upon the lawless sea, seized the HMS Bounty and settled as outlaws in the South Pacific. Some 30 men took up residence on Pitcairn Island in particular.

“In 10 years all the men had been killed except one,” the article explained. “The principal difference between Pitcairn Island and Monkey Hill seemed to be that the abnormal social conditions resulted more lethally for the males in the human colony and for females in the sub-human colony.”

The public’s infatuation with this theory of the violent primate came at an odd time. As the primatologist Shirley Strum and William Mitchell wrote in the 1980s, “just as specialists were abandoning [Zuckerman’s] baboon model, the popular press and nonspecialists interested in interpreting human evolution adopted and championed that view of primate society.”

These nonspecialists included writers like Robert Ardrey, who began championing the so-called “killer ape theory”—the idea that the evolutionary success of homo sapiens was the result of our inherent aggression. Thus, 2001: A Space Odyssey depicts the “dawn of man” as an early hominid learning how to use a weapon and beat a cow skeleton to bits in a fit of rage.

The idea that the violence at Monkey Hill reflected our natural state contributed not only to a grim view of human nature, but of the natural world as a whole.

“The hamadryas colony at the London Zoo reinforced a widespread belief in an unconstrained Darwinian struggle for existence,” write Carl Sagan and Ann Druyan in their 1993 book, Shadows of Forgotten Ancestors. “Many people felt that they had now glimpsed Nature in the raw, a brutal Nature, red in claw and fang, a Nature from which we humans are insulated and protected by our civilized institutions and sensibilities.”

There but for the grace of God, law, and authority go we to Monkey Hill.

Lab versus Field

But, of course, this was all based on bad science.

As anyone who has watched nature documentaries on bonobos can tell you, not all primates are patriarchal killing machines. And even the male-centric hamadryas baboons only live up to Zuckerman’s hyper-violent description in certain, artificial circumstances.

Circumstances like Monkey Hill.

First, there was the issue of space. A typical troop of 100 baboons in the scrublands of Ethiopia will range over an area of roughly 50,000 square meters. The enclosure at the London Zoo was a little over 500 square meters, nearly one-hundredth the size.

Beyond the crowding, the exhibit’s extreme gender lopsidedness was far from anything that might be observed in the wild. Hamadryas baboons are the only primate species besides gorillas that maintain harems: family units that consist of a single male and up to ten females and infants. These harems form together into clans. Over time, these clans establish a clear hierarchy of dominance. If that hierarchy is violated, the culprit will be severely punished by the various males.

But the London Zoo had penned nearly one hundred males with no prior social ties together with half a dozen unfortunate females.

In short, trying to generalize about primate behavior based on Monkey Hill would be like trying to learn about human nature by watching a prison riot.

Still, Zuckerman’s grand theory of primate behavior had staying power because it tapped into a key bias within the biological sciences: work in the lab outranked research in the field.

When other primatologists, like Clarence Ray Carpenter, offered contradictory accounts of monkeys in Panama notably not murdering one another with abandon, much of the research community looked down upon these observations as second-class science. How could observations made by a sweaty, exhausted, mosquito-bitten field worker compare to the conclusions of a sober-minded scientist in the lab?

Solly Zuckerman, 1943.

It wasn’t until the early 1960s that Solly Zuckerman’s theoretical superstructure finally came toppling down.

By then, Zuckerman had been knighted, had served as chief scientific adviser to the Ministry of Defense (alongside primate anatomy, he took an interest in ballistics), and was serving as Secretary of the London Zoological Society.

As an elder zoologist, he was particularly dismissive of a young “amateur” scientist named Jane Goodall. With few credentials to her name, Goodall had spent months observing chimpanzees at Gombe National Park in Tanzania. This was an “act of radical immersion” that was unheard of in the primatology community, writes Goodall’s biographer, Dale Peterson. Returning from East Africa, she published findings in which she observed that chimpanzees, unlike their baboon cousins, did not form harems. More generally, they didn’t seem to behave as the violent patriarchs Zuckerman insisted they were.

Though Zuckerman never admitted that he was wrong, in the words of Dale Peterson, this showed that primate life was not solely a “masculine melodrama on the themes of sex and violence.” Through field observations, Goodall and a new generation of primatologists showed that primate behavior is complex, varied, and heavily influenced by environment. It was, in other words, immune to the sweeping generalizations put forward by Zuckerman.

The end of the Monkey Hill era, writes Peterson, marked “the debut of primatology as a modern science.”

The Moral of Monkey Hill

Humans are suckers for a good animal allegory.

When Ivan Pavlov learned that he could trick his dog into salivating at the sound of a bell, “Pavlov’s dog” became cultural shorthand for the mechanical naiveté of human behavior.

When B.F. Skinner discovered that birds could be taught to bob their heads in a certain way to receive food, “Skinner’s pigeons” likewise became a reference for the universality of human superstition.

And when animal behaviorist John Calhoun created an overpopulated mouse enclosure at the National Institute of Mental Health—and watched as the rodents descended into a “behavioral sink” of asexualism, cannibalism, and violence—John Calhoun’s “rats of NIMH” became a modern parable about overcrowding in urban centers.

As Eric Michael Johnson writes in Scientific American, Monkey Hill is now its own kind of a parable.

The case of Zuckerman and the baboons of London, he writes, is “a zoological case study that reveals the danger of embracing a faulty assumption about ‘natural’ behavior.” The baboons on Monkey Hill were not emblematic of all apes and monkeys. They certainly weren’t emblematic of humans. Trapped, overcrowded, and placed within an unnatural social environment, they weren’t even emblematic of their own species.

If there is a lesson to be learned from the story of Monkey Hill, it is that we are too eager to learn lessons from the animal kingdom.

Campaigns on the cheap

Adam Victor Brandizzi: The changes in spending were quite curious, I'd say.

The end of corporate financing worked. The total price of the election is not yet known, because the final campaign finance reports are still to come, but campaigns got cheaper. They may have cost less than half, perhaps even a third, of those of 2012. And is that good? The smaller the need to ask companies for money, the fewer the opportunities for corruption.

Apart from the partners of one or another local construction firm, no contractors were seen among the donors of 2016, for example. The share of public financing increased, that is a fact. Small contributions from individuals over the internet bordered on the negligible, that is also true. But the weight, and therefore the influence, of corporate capital over the ballot box fell sharply.

On the expense side, there was a general cheapening of electoral marketing. Campaign strategists had to work on more campaigns to earn a fraction of what they used to receive. Services and equipment were also trimmed down.

In 2012, campaigns boasted of using the RED (used in Hollywood to shoot feature films) at a cost of R$ 7,500 per day of camera rental. In 2016, the star was the DJI Osmo, a handheld camera with image stabilization for moving shots that uses the photographer's phone as a screen to view the images. Price: R$ 2,500. Not to rent it, but to buy it.

The professionals changed too. Until 2012, directors of commercial advertising films were the most sought after to film candidates in the most populous cities for their appearances in the electoral broadcast and in TV spots. In 2016, their price became too high for the campaigns. Wedding video directors, who charge a tenth as much, came on the scene. It made no visible difference, not even at the ballot box. Several “grooms” ended up elected.

On the revenue side, the end of corporate donations was even more important. There was nothing like the electoral barbecue thrown by JBS in 2014. The meatpacker donated more than R$ 350 million in that election and helped elect more than 180 federal deputies. It was, by far, the largest caucus elected to the Chamber. No universal donor stood out in 2016. Only the parties.

Party leaderships exerted greater influence over the fate of the election by deciding which candidates would be favored with more resources and which would be left on their own. Strategies varied from party to party.

The PRB, chaired by a bishop on leave from the Igreja Universal do Reino de Deus, concentrated the release of funds in its national directorate. The total was almost R$ 50 million, half of it from the Fundo Partidário, that is, public money. Marcelo Crivella, who leads the polls in the runoff in Rio de Janeiro, received the most. Right behind him came Celso Russomanno, who finished third in the race for mayor of São Paulo.

The PMDB, by contrast, dispersed its resources among hundreds of local directorates, as expected. Unlike the centralized PRB, the PMDB is a confederation of state parties that works like a condominium, where decisions, including financial ones, depend on agreement among its various chieftains.

Self-financed candidates, like João Doria (PSDB), had an advantage, because the legislation is still flawed in many respects. Rich candidates can take as much as they want out of their own pockets and put it into the campaign. It is still a form of corporate financing. The difference is that the company belongs to the candidate.

Another flaw is that there is no absolute limit on individual donations, only a proportion of the donor's income. The richer the donor, the more he can donate and influence the campaign.

Even so, it was better than the free-for-all of corporate donations. That is why it is worrying when politicians start talking about electoral reform. Who knows what contraband will be smuggled through it.

Operations for software developers for beginners

I work as a software developer. A few years ago I had no idea what “operations” was. I had never met anybody whose job it was to operate software. What does that even mean? Now I know a tiny bit more about it so I want to write down what I’ve figured out.

operations: what even is it?

I made up these 3 stages of operating software. These are stages of understanding about operations I am going through.

Stage 1: your software just works. It’s fine.

You’re a software developer. You are running software on computers. When you write your software, it generally works okay – you write tests, you make sure it works on localhost, you push it to production, everything is fine. You’re a good programmer!

Sometimes you push code with bugs to production. Someone tells you about the bugs, you fix them, it’s not a big deal.

I used to work on projects which hardly anyone used. It wasn’t a big deal if there was a small bug! I had no idea what operations was and it didn’t matter too much.

Stage 2: omg anything can break at any time this is impossible

You’re running a site with a lot of traffic. One day, you decide to upgrade your database over the weekend. You have a bad weekend. Charity writes a blog post saying you should have spent more than 3 days on a database upgrade.

I think if in my “what even is operations” state somebody had told me “julia!! your site needs to be up 99.95% of the time” I would have just hidden under the bed.

Like, how can you make sure your site is up 99.95% of the time? ANYTHING CAN HAPPEN. You could have spikes in traffic, or some random piece of critical software you use could just stop working one day, or your database could catch fire. And what if I need to upgrade my database? How do I even do that safely? HELP.
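To make a number like 99.95% a bit more concrete, here is a small sketch that turns an availability target into a downtime budget. The function name `allowed_downtime_minutes` and the 365-day and 30-day periods are illustrative assumptions, not something from the original post.

```python
def allowed_downtime_minutes(availability_percent, period_days=365):
    """Minutes of downtime permitted over a period at a given availability target."""
    total_minutes = period_days * 24 * 60
    # The downtime budget is whatever fraction of the period is NOT covered
    # by the availability target.
    return total_minutes * (1 - availability_percent / 100)


if __name__ == "__main__":
    # 99.95% over a year leaves roughly 262.8 minutes, a bit under 4.5 hours.
    print(round(allowed_downtime_minutes(99.95), 1))
    # The same target over a 30-day month leaves roughly 21.6 minutes.
    print(round(allowed_downtime_minutes(99.95, period_days=30), 1))
```

Seen this way, a single bad database upgrade over a weekend can use up the whole year's budget.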

I definitely went from “operations is trivial, whatever, how hard can keeping a site up be?” to “OMG THIS IS IMPOSSIBLE HOW DOES ANYONE EVER DO THIS”.

Stage 2.5: learn to be scared

I think learning to be scared is a really important skill – you should be worried about upgrading a database safely, or about upgrading the version of Ruby you’re using in production. These are dangerous changes!

But you can’t just stop at being scared – you need to learn to have a healthy concern about complex parts of your system, and then learn how to take the appropriate precautionary steps and then confidently make the upgrade or deploy the big change or whatever the thing you are appropriately scared of is.

If you stop here then you just end up using a super-old Ruby version for 4 years because you were too scared to upgrade it. That is no good either!

Stage 3: keeping your site up is possible

So, it turns out that there is a huge body of knowledge about keeping your site up!

There are people who, when you show them a large complicated software system running on thousands or tens of thousands of computers, and tell them “hey, this needs to be up 99.9% of the time”, they’re like “yep, that is a normal problem I have worked on! Here’s the first step we can take!”

These people sometimes have the job title “operations engineer” or “SRE” or “devops engineer” or “software engineer” or “system administrator”. Like all things, it’s a skillset that you can learn, not a magical innate quality.

Charity is one of these people! That blog post (“The Accidental DBA”) I linked to before has a bunch of extremely practical advice about how to upgrade a database safely. If you’re running a database and you’re scared – you’re right! But you can learn about how to upgrade it from someone like Charity and then it will go a lot better.

getting started with operations

So, we’ve convinced ourselves that operations is important.

Last year I was on a team that had some software. It mostly ran okay, but infrequently it would stop working or get super slow. There were a bunch of different reasons it had problems! And it wasn’t a disaster, but it also wasn’t as awesome as we wanted it to be.

For me this was a really cool way to get a little bit better at operations! I worked on making the service faster and more reliable. And it worked! I made a couple of good improvements, and I was happy.

Some stuff that helped:

- work on a dashboard for the service that clearly shows its current state (this is surprisingly hard!)

- move some complicated code that did a lot of database operations into a separate webservice so we could easily time it out if something went wrong

- do some profiling and remove some unnecessarily slow code
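The "time it out if something goes wrong" idea from the list above can be sketched in a few lines. This is not the setup described in the post (which moved the code into a separate webservice). It is a stand-in that runs a slow call in a worker thread and gives up after a deadline, and the name `call_with_timeout` is hypothetical.

```python
import concurrent.futures


def call_with_timeout(func, timeout_seconds, fallback):
    """Run func in a worker thread; return fallback if it takes too long."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(func)
    try:
        return future.result(timeout=timeout_seconds)
    except concurrent.futures.TimeoutError:
        # The caller stops waiting, but the worker thread itself keeps
        # running until func returns; Python cannot kill it.
        return fallback
    finally:
        # Do not block here waiting for a slow call to finish.
        pool.shutdown(wait=False)
```

The comment in the `except` branch hints at why a separate service can be the nicer design: when the slow work lives behind a network call, the caller can drop the connection and move on, instead of leaving a stuck thread inside its own process.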

The coolest part of this, though, is that a much more experienced SRE later came in to work with the team on making the same service operate better, and I got to see what he did and what his process for improving things looked like!

It’s really helped me to realize that you don’t turn into a Magical Operations Person overnight. Instead, I can take whatever I’m working on right now, and make small improvements to make it operate better! That makes me a better programmer.

you can make operations part of your job

As an industry, we used to have “software development” teams who wrote code and threw it over the wall to “operations teams” who ran that code. I feel like we’ve collectively decided that we want a different model (“devops”) – that we should have teams who both write code and know how to operate it. And there are a lot of details of how that works exactly (do you have “SRE”s?)

But as an individual software engineer, what does that mean for you? I thiiink it means that you get to LEARN COOL STUFF. You can learn about how to deploy changes safely, and observe what your code is doing. And then when something has gone wrong in production, you’ll both understand what the code is doing (because you wrote it!!) and you’ll have the skills to figure it out and systematically prevent it in the future (because you are better at operations!).

I have a lot more to say about this (how I really love being a generalist, how doing some operations work has been an awesome way to improve my debugging skills and my ability to reason about complex systems and plan how to build complicated software). And I need to write in the future about super useful Ideas For Operating Software Safely I’ve learned about (like dark reads and circuit breakers). But I’m going to stop here for now. If you want more reading, The Ops Identity Crisis is a good post about software developers doing operations, from the point of view of an ops person.
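For readers who haven't met the term, here is a minimal sketch of what a circuit breaker can look like. It is one common shape of the idea, not the author's implementation, and the names `CircuitBreaker`, `CircuitOpenError`, `max_failures`, and `reset_after` are all illustrative assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so tests can fake time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Still cooling down: fail fast without touching the dependency.
                raise CircuitOpenError("circuit open, skipping call")
            # Cool-down elapsed: let one trial call through ("half-open").
            self.opened_at = None
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self.failures = 0
        return result
```

The point of the pattern is that after a few failures in a row, callers stop hammering a dependency that is already struggling and get a fast error instead of a slow one.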

This is my favorite paragraph from Charity’s “WTF is operations?” blog post (which you should just go read instead of reading me):

The best software engineers I know are the ones who consistently value the impact and lifecycle of the code they ship, and value deployment and instrumentation and observability. In other words, they rock at ops stuff.

Programming book list

There are a lot of “12 CS books every programmer must read” lists floating around out there. That's nonsense. The field is too broad for almost any topic to be required reading for all programmers, and even if a topic is that important, people's learning preferences differ too much for any book on that topic to be the best book on the topic for all people.

This is a list of topics and books where I've read the book, am familiar enough with the topic to say what you might get out of learning more about the topic, and have read other books and can say why you'd want to read one book over another.

Algorithms / Data Structures / Complexity

Why should you care? Well, there's the pragmatic argument: even if you never use this stuff in your job, most of the best paying companies will quiz you on this stuff in interviews. On the non-bullshit side of things, I find algorithms to be useful in the same way I find math to be useful. The probability of any particular algorithm being useful for any particular problem is low, but having a general picture of what kinds of problems are solved problems, what kinds of problems are intractable, and when approximations will be effective, is often useful.

McDowell; Cracking the Coding Interview

Some problems and solutions, with explanations, matching the level of questions you see in entry-level interviews at Google, Facebook, Microsoft, etc. I usually recommend this book to people who want to pass interviews but not really learn about algorithms. It has just enough to get by, but doesn't really teach you the why behind anything. If you want to actually learn about algorithms and data structures, see below.

Dasgupta, Papadimitriou, and Vazirani; Algorithms

Everything about this book seems perfect to me. It breaks up algorithms into classes (e.g., divide and conquer or greedy), and teaches you how to recognize what kind of algorithm should be used to solve a particular problem. It has a good selection of topics for an intro book, it's the right length to read over a few weekends, and it has exercises that are appropriate for an intro book. Additionally, it has sub-questions in the middle of chapters to make you reflect on non-obvious ideas to make sure you don't miss anything.

I know some folks don't like it because it's relatively math-y/proof focused. If that's you, you'll probably prefer Skiena.

Skiena; The Algorithm Design Manual

The longer, more comprehensive, more practical, less math-y version of Dasgupta. It's similar in that it attempts to teach you how to identify problems, use the correct algorithm, and understand the algorithm through a clear explanation. The book is well motivated with “war stories” that show the impact of algorithms in real world programming.

CLRS; Introduction to Algorithms

This book somehow manages to make it into half of these “N books all programmers must read” lists despite being so comprehensive and rigorous that almost no practitioners actually read the entire thing. It's great as a textbook for an algorithms class, where you get a selection of topics. As a class textbook, it's a nice bonus that it has exercises that are hard enough that they can be used for graduate level classes (about half the exercises from my grad level algorithms class were pulled from CLRS, and the other half were from Kleinberg & Tardos), but this is wildly impractical as a standalone introduction for most people.

Just for example, there's an entire chapter on Van Emde Boas trees. They're really neat -- it's a little surprising that a balanced-tree-like structure with O(lg lg n) insert, delete, as well as find, successor, and predecessor is possible, but a first introduction to algorithms shouldn't include Van Emde Boas trees.

Kleinberg & Tardos; Algorithm Design

Same comments as for CLRS -- it's widely recommended as an introductory book even though it doesn't make sense as an introductory book. Personally, I found the exposition in Kleinberg to be much easier to follow than in CLRS, but plenty of people find the opposite.

Demaine; Advanced Data Structures

This is a set of lectures and notes and not a book, but if you want a coherent (but not intractably comprehensive) set of material on data structures that you're unlikely to see in most undergraduate courses, this is great. The notes aren't designed to be standalone, so you'll want to watch the videos if you haven't already seen this material.

Okasaki; Purely Functional Data Structures

Fun to work through, but, unlike the other algorithms and data structures books, I've yet to be able to apply anything from this book to a problem domain where performance really matters.

For a couple years after I read this, when someone would tell me that it's not that hard to reason about the performance of purely functional lazy data structures, I'd ask them about part of a proof that stumped me in this book. I'm not talking about some obscure super hard exercise, either. I'm talking about something that's in the main body of the text that was considered too obvious to the author to explain. No one could explain it. Reasoning about this kind of thing is harder than people often claim.

Dominus; Higher Order Perl

A gentle introduction to functional programming that happens to use Perl. You could probably work through this book just as easily in Python or Ruby.

If you keep up with what's trendy, this book might seem a bit dated today, but only because so many of the ideas have become mainstream. If you're wondering why you should care about this "functional programming" thing people keep talking about, and some of the slogans you hear don't speak to you or are even off-putting (types are propositions, it's great because it's math, etc.), give this book a chance.

Levitin; Algorithms

I ordered this off amazon after seeing these two blurbs: “Other learning-enhancement features include chapter summaries, hints to the exercises, and a detailed solution manual.” and “Student learning is further supported by exercise hints and chapter summaries.” One of these blurbs is even printed on the book itself, but after getting the book, the only self-study resources I could find were some yahoo answers posts asking where you could find hints or solutions.

I ended up picking up Dasgupta instead, which was available off an author's website for free.

Mitzenmacher & Upfal; Probability and Computing: Randomized Algorithms and Probabilistic Analysis

I've probably gotten more mileage out of this than out of any other algorithms book. A lot of randomized algorithms are trivial to port to other applications and can simplify things a lot.
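As one illustration of how small and portable a randomized algorithm can be, here is a sketch of reservoir sampling, which picks `k` items uniformly at random from a stream whose length isn't known in advance. It is a well-known technique chosen here as an example, not something attributed to the book, and the function name and `rng` parameter are illustrative.

```python
import random


def reservoir_sample(stream, k, rng=None):
    """Return k items chosen uniformly at random from an iterable of unknown length."""
    rng = rng or random.Random()
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k items.
            sample.append(item)
        else:
            # Item i (0-based) is kept with probability k / (i + 1),
            # replacing a uniformly chosen slot in the reservoir.
            j = rng.randint(0, i)
            if j < k:
                sample[j] = item
    return sample
```

If the stream has fewer than `k` items, the function simply returns all of them.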

The text has enough of an intro to probability that you don't need to have any probability background. Also, the material on tail bounds (e.g., Chernoff bounds) is useful for a lot of CS theory proofs and isn't covered in the intro probability texts I've seen.
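For reference, one commonly quoted multiplicative form of the Chernoff bound is given below. It assumes that X is a sum of independent 0/1 random variables with mean μ = E[X]; the symbols are introduced here only for illustration.

```latex
% Assumption: X = X_1 + ... + X_n with independent X_i in {0, 1}, and \mu = E[X].
\Pr\bigl[X \ge (1+\delta)\mu\bigr] \le e^{-\mu\delta^{2}/3}
\quad \text{for } 0 < \delta \le 1,
\qquad
\Pr\bigl[X \le (1-\delta)\mu\bigr] \le e^{-\mu\delta^{2}/2}
\quad \text{for } 0 < \delta < 1.
```

Both tails fall off exponentially in μ, which is why bounds of this kind show up so often when analyzing randomized algorithms.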

Sipser; Introduction to the Theory of Computation

Classic intro to theory of computation. Turing machines, etc. Proofs are often given at an intuitive, “proof sketch”, level of detail. A lot of important results (e.g., Rice's Theorem) are pushed into the exercises, so you really have to do the key exercises. Unfortunately, most of the key exercises don't have solutions, so you can't check your work.

For something with a more modern topic selection, maybe see Arora & Barak.

Bernhardt; Computation

Covers a few theory of computation highlights. The explanations are delightful and I've watched some of the videos more than once just to watch Bernhardt explain things. Targeted at a general programmer audience with no background in CS.

Kearns & Vazirani; An Introduction to Computational Learning Theory

Classic, but dated and riddled with errors, with no errata available. When I wanted to learn this material, I ended up cobbling together notes from a couple of courses, one by Klivans and one by Blum.

Operating Systems

Why should you care? Having a bit of knowledge about operating systems can save days or weeks of debugging time. This is a regular theme on Julia Evans's blog, and I've found the same thing to be true of my experience. I'm hard pressed to think of anyone who builds practical systems and knows a bit about operating systems who hasn't found their operating systems knowledge to be a time saver. However, there's a bias in who reads operating systems books -- it tends to be people who do related work! It's possible you won't get the same thing out of reading these if you do really high-level stuff.

Silberschatz, Galvin, and Gagne; Operating System Concepts

This was what we used at Wisconsin before the comet book became standard. I guess it's ok. It covers concepts at a high level and hits the major points, but it's lacking in technical depth, details on how things work, advanced topics, and clear exposition.

Cox, Kaashoek, and Morris; xv6

This book is great! It explains how you can actually implement things in a real system, and it comes with its own implementation of an OS that you can play with. By design, the authors favor simple implementations over optimized ones, so the algorithms and data structures used are often quite different than what you see in production systems.

This book goes well when paired with a book that talks about how more modern operating systems work, like Love's Linux Kernel Development or Russinovich's Windows Internals.

Arpaci-Dusseau and Arpaci-Dusseau; Operating Systems: Three Easy Pieces

Nice explanation of a variety of OS topics. Goes into much more detail than any other intro OS book I know of. For example, the chapters on file systems describe the details of multiple, real, filesystems, and discuss the major implementation features of ext4. If I have one criticism about the book, it's that it's very *nix focused. Many things that are described are simply how things are done in *nix and not inherent, but the text mostly doesn't say when something is inherent vs. when it's a *nix implementation detail.

Love; Linux Kernel Development

The title can be a bit misleading -- this is basically a book about how the Linux kernel works: how things fit together, what algorithms and data structures are used, etc. I read the 2nd edition, which is now quite dated. The 3rd edition has some updates, but introduced some errors and inconsistencies, and is still dated (it was published in 2010, and covers 2.6.34). Even so, it's a nice introduction into how a relatively modern operating system works.

The other downside of this book is that the author loses all objectivity any time Linux and Windows are compared. Basically every time they're compared, the author says that Linux has clearly and incontrovertibly made the right choice and that Windows is doing something stupid. On balance, I prefer Linux to Windows, but there are a number of areas where Windows is superior, as well as areas where there's parity but Windows was ahead for years. You'll never find out what they are from this book, though.

Russinovich, Solomon, and Ionescu; Windows Internals

The most comprehensive book about how a modern operating system works. It just happens to be about Windows. Coming from a *nix background, I found this interesting to read just to see the differences.

This is definitely not an intro book, and you should have some knowledge of operating systems before reading this. If you're going to buy a physical copy of this book, you might want to wait until the 7th edition is released (early in 2017).

Downey; The Little Book of Semaphores

Takes a topic that's normally one or two sections in an operating systems textbook and turns it into its own 300-page book. The book is a series of exercises, a bit like The Little Schemer, but with more exposition. It starts by explaining what a semaphore is, and then has a series of exercises that builds up higher level concurrency primitives.
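As a rough illustration of what "building up higher level primitives" from semaphores looks like, here is a minimal sketch of a one-shot barrier made from two semaphores, in the mutex-plus-turnstile style the book uses. The class name OneShotBarrier and the demo harness are hypothetical, and this version is not reusable across multiple rounds.

```python
import threading


class OneShotBarrier:
    """Barrier for n threads built only from semaphores (single use)."""

    def __init__(self, n):
        self.n = n
        self.count = 0
        self.mutex = threading.Semaphore(1)      # protects self.count
        self.turnstile = threading.Semaphore(0)  # closed until all arrive

    def wait(self):
        self.mutex.acquire()
        self.count += 1
        is_last = self.count == self.n
        self.mutex.release()

        if is_last:
            self.turnstile.release()  # last arrival opens the turnstile

        # Each thread passes through and immediately lets the next one in.
        self.turnstile.acquire()
        self.turnstile.release()


def run_demo(n=4):
    """Start n threads that log 'before', meet at the barrier, then log 'after'."""
    barrier = OneShotBarrier(n)
    events = []
    events_lock = threading.Lock()  # only guards the demo's log

    def worker():
        with events_lock:
            events.append("before")
        barrier.wait()
        with events_lock:
            events.append("after")

    threads = [threading.Thread(target=worker) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return events


if __name__ == "__main__":
    print(run_demo())
```

No thread logs "after" until every thread has logged "before", because the turnstile only opens once the counter reaches n. Making the barrier reusable takes a second turnstile, which is the kind of follow-up exercise the book walks through.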

This book was very helpful when I first started to write threading/concurrency code. I subscribe to the Butler Lampson school of concurrency, which is to say that I prefer to have all the concurrency-related code stuffed into a black box that someone else writes. But sometimes you're stuck writing the black box, and if so, this book has a nice introduction to the style of thinking required to write maybe possibly not totally wrong concurrent code.

I wish someone would write a book in this style, but both lower level and higher level. I'd love to see exercises like this, but starting with instruction-level primitives for a couple different architectures with different memory models (say, x86 and Alpha) instead of semaphores. If I'm writing grungy low-level threading code today, I'm overwhelmingly likely to be using C++11 threading primitives, so I'd like something that uses those instead of semaphores, which I might have used if I was writing threading code against the Win32 API. But since that book doesn't exist, this seems like the next best thing.

I've heard that Doug Lea's Concurrent Programming in Java is also quite good, but I've only taken a quick look at it.

Computer architecture

Why should you care? The specific facts and trivia you'll learn will be useful when you're doing low-level performance optimizations, but the real value is learning how to reason about tradeoffs between performance and other factors, whether that's power, cost, size, weight, or something else.

In theory, that kind of reasoning should be taught regardless of specialization, but my experience is that comp arch folks are much more likely to “get” that kind of reasoning and do back of the envelope calculations that will save them from throwing away a 2x or 10x (or 100x) factor in performance for no reason. This sounds obvious, but I can think of multiple production systems at large companies that are giving up 10x to 100x in performance which are operating at a scale where even a 2x difference in performance could pay a VP's salary -- all because people didn't think through the performance implications of their design.

Hennessy & Patterson; Computer Architecture: A Quantitative Approach

This book teaches you how to do systems design with multiple constraints (e.g., performance, TCO, and power) and how to reason about tradeoffs. It happens to mostly do so using microprocessors and supercomputers as examples.

New editions of this book have substantive additions and you really want the latest version. For example, the latest version added, among other things, a chapter on data center design, and it answers questions like, how much opex/capex is spent on power, power distribution, and cooling, and how much is spent on support staff and machines, what's the effect of using lower power machines on tail latency and result quality (Bing search results are used as an example), and what other factors should you consider when designing a data center.

Assumes some background, but that background is presented in the appendices (which are available online for free).

Shen & Lipasti; Modern Processor Design

Presents most of what you need to know to architect a high performance Pentium Pro (1995) era microprocessor. That's no mean feat, considering the complexity involved in such a processor. Additionally, presents some more advanced ideas and bounds on how much parallelism can be extracted from various workloads (and how you might go about doing such a calculation). Has an unusually large section on value prediction, because the authors invented the concept and it was still hot when the first edition was published.

For pure CPU architecture, this is probably the best book available.

Hill, Jouppi, and Sohi; Readings in Computer Architecture

Read for historical reasons and to see how much better we've gotten at explaining things. For example, compare Amdahl's paper on Amdahl's law (two pages, with a single non-obvious graph presented, and no formulas), vs. the presentation in a modern textbook (one paragraph, one formula, and maybe one graph to clarify, although it's usually clear enough that no extra graph is needed).

This seems to be worse the further back you go; since comp arch is a relatively young field, nothing here is really hard to understand. If you want to see a dramatic example of how we've gotten better at explaining things, compare Maxwell's original paper on Maxwell's equations to a modern treatment of the same material. Fun if you like history, but a bit of a slog if you're just trying to learn something.
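For reference, the "one formula" version of Amdahl's law that modern textbooks give is roughly: if a fraction p of the work is sped up by a factor s, the overall speedup is 1 / ((1 - p) + p / s). A minimal sketch, with hypothetical function and parameter names:

```python
def amdahl_speedup(improved_fraction, speedup_of_part):
    """Overall speedup when improved_fraction of the original running time
    is made speedup_of_part times faster and the rest is unchanged."""
    if not 0 <= improved_fraction <= 1:
        raise ValueError("improved_fraction must be between 0 and 1")
    if speedup_of_part < 1:
        raise ValueError("speedup_of_part must be at least 1")
    return 1.0 / ((1.0 - improved_fraction) + improved_fraction / speedup_of_part)


if __name__ == "__main__":
    # Parallelizing 95% of a program across more and more cores.
    for cores in (2, 8, 64, 1024):
        print(cores, round(amdahl_speedup(0.95, cores), 2))
```

The loop shows the usual takeaway: with 5% of the work left serial, the speedup can never exceed 20x no matter how many cores are added.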

Algorithmic game theory / auction theory / mechanism design

Why should you care? Some of the world's biggest tech companies run on ad revenue, and those ads are sold through auctions. This field explains how and why they work. Additionally, this material is useful any time you're trying to figure out how to design systems that allocate resources effectively.1

In particular, incentive compatible mechanism design (roughly, how to create systems that provide globally optimal outcomes when people behave in their own selfish best interest) should be required reading for anyone who designs internal incentive systems at companies. If you've ever worked at a large company that "gets" this and one that doesn't, you'll see that the company that doesn't get it has giant piles of money that are basically being lit on fire because the people who set up incentives created systems that are hugely wasteful. This field gives you the background to understand what sorts of mechanisms give you what sorts of outcomes; reading case studies gives you a very long (and entertaining) list of mistakes that can cost millions or even billions of dollars.

Krishna; Auction Theory

The last time I looked, this was the only game in town for a comprehensive, modern, introduction to auction theory. Covers the classic second price auction result in the first chapter, and then moves on to cover risk aversion, bidding rings, interdependent values, multiple auctions, asymmetrical information, and other real-world issues.

Relatively dry. Unlikely to be motivating unless you're already interested in the topic. Requires an understanding of basic probability and calculus.
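The classic second price auction result is that, in a sealed-bid auction where the winner pays the second-highest bid, bidding your true value is never worse than any other bid. A minimal sketch that checks this by brute force on a small grid of integer values and bids; the function names, the single-rival setup, and the rule that ties go against us are all simplifying assumptions made for illustration:

```python
def second_price_utility(my_bid, my_value, other_bids):
    """Payoff to one bidder in a sealed-bid second-price auction.
    Assumption: ties are resolved against this bidder."""
    highest_other = max(other_bids)
    if my_bid > highest_other:
        return my_value - highest_other  # win, pay the second-highest bid
    return 0  # lose, pay nothing


def truthful_is_weakly_dominant(values, bid_grid):
    """Check that no bid on the grid ever beats bidding one's true value,
    against every possible highest rival bid on the grid."""
    for value in values:
        for rival_bid in bid_grid:
            truthful = second_price_utility(value, value, [rival_bid])
            for bid in bid_grid:
                if second_price_utility(bid, value, [rival_bid]) > truthful:
                    return False
    return True


if __name__ == "__main__":
    grid = range(0, 11)
    print(truthful_is_weakly_dominant(grid, grid))
    # Overbidding can win the item at a loss: value 5, bid 9, rival bids 8.
    print(second_price_utility(9, 5, [8]))
```

Only the highest rival bid matters to a given bidder, so checking against a single rival covers the general case on this grid. The book's treatment proves the result for continuous values rather than checking a grid.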

Steiglitz; Snipers, Shills, and Sharks: eBay and Human Behavior

Seems designed as an entertaining introduction to auction theory for the layperson. Requires no mathematical background and relegates math to the small print. Covers maybe 1/10th of the material of Krishna, if that. Fun read.

Cramton, Shoham, and Steinberg; Combinatorial Auctions

Discusses things like how FCC spectrum auctions got to be the way they are and how “bugs” in mechanism design can leave hundreds of millions or billions of dollars on the table. This is one of those books where each chapter is by a different author. Despite that, it still manages to be coherent and I didn't mind reading it straight through. It's self-contained enough that you could probably read this without reading Krishna first, but I wouldn't recommend it.

Shoham and Leyton-Brown; Multiagent Systems: Algorithmic, Game-Theoretic, and Logical Foundations

The title is the worst thing about this book. Otherwise, it's a nice introduction to algorithmic game theory. The book covers basic game theory, auction theory, and other classic topics that CS folks might not already know, and then covers the intersection of CS with these topics. Assumes no particular background in the topic.

Nisan, Roughgarden, Tardos, and Vazirani; Algorithmic Game Theory

A survey of various results in algorithmic game theory. Requires a fair amount of background (consider reading Shoham and Leyton-Brown first). For example, chapter five is basically Devanur, Papadimitriou, Saberi, and Vazirani's JACM paper, Market Equilibrium via a Primal-Dual Algorithm for a Convex Program, with a bit more motivation and some related problems thrown in. The exposition is good and the result is interesting (if you're into that kind of thing), but it's not necessarily what you want if you want to read a book straight through and get an introduction to the field.

Misc

Beyer, Jones, Petoff, and Murphy; Site Reliability Engineering

A description of how Google handles operations. Has the typical Google tone, which is off-putting to a lot of folks with a “traditional” ops background, and assumes that many things can only be done with the SRE model when they can, in fact, be done without going full SRE.

For a much longer description, see this 22-page set of notes on Google's SRE book.

Fowler, Beck, Brant, Opdyke, and Roberts; Refactoring

At the time I read it, it was worth the price of admission for the section on code smells alone. But this book has been so successful that the ideas of refactoring and code smells have become mainstream.

Steve Yegge has a great pitch for this book:

When I read this book for the first time, in October 2003, I felt this horrid cold feeling, the way you might feel if you just realized you've been coming to work for 5 years with your pants down around your ankles. I asked around casually the next day: "Yeah, uh, you've read that, um, Refactoring book, of course, right? Ha, ha, I only ask because I read it a very long time ago, not just now, of course." Only 1 person of 20 I surveyed had read it. Thank goodness all of us had our pants down, not just me.

...