Thomas Rushton

Shared posts

HPE's Hybrid IT group gets shiny new permanent head

Meanwhile, Pointnext chief Ana Pinczuk goes for a long walk

HPE has appointed a permanent president to its new hybrid IT business group, in the form of Phil Davis, who is currently the firm's global sales chief.…

SQL: Concatenating Column Values as Strings in T-SQL (Using CONCAT and CONCAT_WS)

There were a number of new T-SQL functions introduced in SQL Server 2012. As I've mentioned before, I get excited when there are new T-SQL functions.

Some, I'm not so excited about. EOMONTH was in that category, not because of the functionality, but because of the name (I wish it were ENDOFMONTH) and the lack of symmetry (there's no STARTOFMONTH or BEGINNINGOFMONTH).

One that I thought was curious was CONCAT. I thought, "Why on earth do we need a function to concatenate strings? I can already do that." But when I got into using it, I realized how wonderful it was.

The problem with concatenating values is that you first need to convert them to strings. CONCAT does that automatically with all parameters that you pass to it. (It takes a list of values as parameters, and you must supply at least two.) You can see here how I can mix data types in the parameter list:

All the values are implicitly cast to a string. OK, so that's a little bit useful, but still no big deal?

The really powerful aspect is that it ignores NULL parameters in the list. (The documentation says that it implicitly casts them to an empty string, but based on discussions I've had with the product group lately, my guess is that it simply ignores any parameter that's NULL.)

Now that's something that's much messier with normal T-SQL. The problem is that when you concatenate anything that's NULL with the + operator in T-SQL, the answer is NULL, no matter what the other values are:

But this handles it nicely:

But notice that we're still not quite there. It's a pain to have to specify the separator each time (I've used N' ' as a single Unicode space). More of a pain, though, is that I still have two separators between Tim and Taylor in the example above.

CONCAT_WS in SQL Server 2017 comes to the rescue for that. It lets you specify the separator once, ignores NULLs in the parameter list and, importantly, doesn't add the separator when a value is NULL. That's a pain if you want to use the function to build output such as a CSV line (I wish that behavior had been controlled by a parameter to the function), but for this use case, it's perfect.
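The NULL-handling rules above can be modeled in a few lines of Python. This is a minimal sketch, not SQL Server code: Python's None stands in for NULL, and the helper names plus_concat, concat and concat_ws are hypothetical. The + model assumes the default CONCAT_NULL_YIELDS_NULL ON setting and accepts strings only, because with T-SQL's + you have to CAST non-string values yourself. The CONCAT model uses Python's str() for conversion, which won't match T-SQL's formatting for every data type (dates and floats in particular).

```python
from typing import Optional, Union

# Python's None stands in for a SQL NULL.
SqlValue = Optional[Union[str, int]]


def plus_concat(*values: Optional[str]) -> Optional[str]:
    """Models 'a' + 'b' + ... with CONCAT_NULL_YIELDS_NULL ON (the default):
    a single NULL makes the whole result NULL. Strings only, because
    T-SQL's + won't turn numbers into text for you."""
    if any(v is None for v in values):
        return None
    return "".join(values)


def concat(*values: SqlValue) -> str:
    """Models CONCAT: at least two arguments, each converted to text,
    with NULLs contributing nothing."""
    if len(values) < 2:
        raise ValueError("CONCAT needs at least two arguments")
    return "".join(str(v) for v in values if v is not None)


def concat_ws(separator: str, *values: SqlValue) -> str:
    """Models CONCAT_WS: NULL values are skipped, so no separator is added for them."""
    if len(values) < 2:
        raise ValueError("CONCAT_WS needs a separator plus at least two values")
    return separator.join(str(v) for v in values if v is not None)


if __name__ == "__main__":
    # A hypothetical person with no middle name (NULL).
    first, middle, last = "Tim", None, "Taylor"
    print(plus_concat(first, " ", middle, " ", last))    # None, i.e. NULL
    print(repr(concat(first, " ", middle, " ", last)))   # 'Tim  Taylor' (two spaces)
    print(concat_ws(" ", first, middle, last))           # Tim Taylor
    print(concat("Order ", 42, " shipped"))              # Order 42 shipped
```

The two-space result from concat is exactly the double-separator problem described above; concat_ws avoids it because the separator is only placed between non-NULL values.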

The post SQL: Concatenating Column Values as Strings in T-SQL (Using CONCAT and CONCAT_WS) appeared first on The Bit Bucket.

03/24/17 PHD comic: 'The Four Stages'

Piled Higher & Deeper by Jorge Cham (www.phdcomics.com)

"The Four Stages", originally published 3/24/2017

Eventually you reach a critical mass of LEGO pieces where you can build most things from what you already have

My kids are fascinated by camper vans and recreational vehicles. (They keep asking to go camping, but not for the purpose of experiencing nature. It's because they want to travel in a camper.) They wanted a camper van LEGO set, but instead of buying one, we went online and found the instructions for an existing camper van set and built it from the pieces we already have.

And then, of course, the kids modified the result to suit their needs. The resulting camper van looks like a lot of fun, but driving it around may be a bit difficult seeing as it has a height clearance of 30 feet.

Useful sites:

- BrickInstructions.com has instructions for LEGO sets, in case you lost yours, or you want to see instructions for other sets.

- Rebrickable contains user-contributed instructions. You can tell it what LEGO sets you own, and it will tell you what you can build from them.

- BrickSet has, among other things, a part finder. One thing I use it for is recovering the name of the set by searching for pieces that appear to be unique to that set.
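The idea behind Rebrickable's build suggestions can be sketched as a multiset comparison: pool the parts from every set you own, then check whether each part a model needs is available in sufficient quantity. This is a minimal Python sketch under that assumption, not Rebrickable's actual algorithm; the part names and quantities are made up, and it ignores color substitutions and spare parts.

```python
from collections import Counter

# Parts are identified here by hypothetical (description, color) pairs;
# a real catalogue would use part numbers and color IDs.


def missing_parts(owned: Counter, required: Counter) -> Counter:
    """Parts (with quantities) that the owned pile can't cover.
    Counter subtraction drops zero and negative counts."""
    return required - owned


def can_build(owned: Counter, required: Counter) -> bool:
    return not missing_parts(owned, required)


# Pool the parts of two hypothetical sets we already own.
set_a = Counter({("brick 2x4", "white"): 6, ("wheel", "black"): 4})
set_b = Counter({("brick 2x4", "white"): 4, ("windscreen", "clear"): 1})
owned = set_a + set_b

camper = Counter({("brick 2x4", "white"): 8, ("wheel", "black"): 4, ("windscreen", "clear"): 1})
print(can_build(owned, camper))  # True

bigger_camper = camper + Counter({("wheel", "black"): 2})
print(missing_parts(owned, bigger_camper))  # Counter({('wheel', 'black'): 2})
```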

Fred Dibnah knocking down Sauron’s Tower

As requested by smit1977z

PASS Summit Announcements: DMA/DEA

Microsoft usually has some interesting announcements at the PASS Summit, and this year was no exception. My next few blogs will cover the major announcements. This first one is about the general availability of the Data Migration Assistant (DMA) tool v2.0 and the technical preview of the Database Experimentation Assistant (DEA).

In short, customers will be able to assess and upgrade their databases using DMA, and validate the target database’s performance using DEA, which should give them more confidence in these upgrades.

More details on DMA:

DMA enables you to upgrade to a modern data platform on-premises or in an Azure VM by detecting compatibility issues that can impact database functionality on your new version of SQL Server. It recommends performance and reliability improvements for your target environment. It also allows you to move not only your schema and data but also uncontained objects from your source server to your target server.

DMA replaces all previous versions of SQL Server Upgrade Advisor and should be used for upgrades for most SQL Server versions. Note that the SQL Server Upgrade Advisor allowed for migrations to SQL Database, but DMA does not yet support this (it only performs the assessment).

DMA helps you assess and migrate the following components, and it recommends performance, security and storage features of the target SQL Server platform that the database can benefit from after the upgrade:

- Schema of databases

- Data

- Server roles

- SQL and Windows logins

After the successful upgrade, applications will be able to connect to the target SQL Server databases with the same credentials.

Microsoft Download Center links: Microsoft® Data Migration Assistant v2.0

More details on DEA:

The Technical Preview of DEA is the new A/B testing solution for SQL Server upgrades. It enables customers to conduct experiments on database workloads across two versions of SQL Server. Customers who are upgrading from older SQL Server versions (SQL Server 2005 and above) to any newer version of SQL Server will be able to use key performance insights, captured from a real-world workload, to help build confidence in the upgrade among database administrators, IT management and application owners by minimizing upgrade risks. This enables truly risk-free migrations.

The tool offers the following capabilities for workload comparison analysis and reporting:

- An automated script to set up workload capture and replay of a production database (using existing SQL Server functionality: Distributed Replay and SQL tracing)

- A statistical analysis model applied to the traces collected from both the old and new instances

- Data visualization through an analysis report with a rich user experience
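DEA's own statistical model isn't described in this announcement, but the basic A/B comparison idea can be illustrated. The following is a hypothetical Python sketch, not DEA's method: it assumes you already have per-query durations from replaying the same workload against the old (baseline) and new (target) instances, compares median durations, and flags queries whose median moved by more than a chosen threshold (20% here, an arbitrary choice).

```python
from statistics import median
from typing import Dict, List, Tuple

Durations = Dict[str, List[float]]  # query id -> durations in ms from one replay


def compare_workloads(
    baseline: Durations, target: Durations, threshold: float = 0.2
) -> Dict[str, Tuple[float, float, str]]:
    """Classify each query seen in both replays as improved, regressed or unchanged."""
    report = {}
    for query_id in sorted(baseline.keys() & target.keys()):
        before = median(baseline[query_id])
        after = median(target[query_id])
        if before <= 0:
            continue  # no meaningful ratio for a zero-duration baseline
        ratio = after / before
        if ratio > 1 + threshold:
            verdict = "regressed"
        elif ratio < 1 - threshold:
            verdict = "improved"
        else:
            verdict = "unchanged"
        report[query_id] = (before, after, verdict)
    return report


baseline = {"q1": [10, 12, 11], "q2": [50, 55, 52], "q3": [5, 5, 6]}
target = {"q1": [20, 22, 21], "q2": [30, 31, 29], "q3": [5, 6, 5]}
for query_id, (before, after, verdict) in compare_workloads(baseline, target).items():
    print(f"{query_id}: {before} ms -> {after} ms ({verdict})")
```

A median is used rather than a mean so that one slow outlier doesn't dominate the comparison; a real analysis would also want to check whether a difference is statistically significant before calling it a regression.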

Supported versions:

Source: SQL Server 2005, SQL Server 2008, SQL Server 2008 R2, SQL Server 2012, SQL Server 2014, and SQL Server 2016

Target: SQL Server 2012, SQL Server 2014, and SQL Server 2016

Microsoft Download Center links: Microsoft® Database Experimentation Assistant Technical Preview

5 bold changes to support the scale problem of SQL Saturdays (and other data platform conferences)

I love SQL Saturdays. I love SQL Relay. I love user groups. Long may they continue, grow and thrive.

I’ve attended as a speaker, as a sponsor, as a helper, as an attendee and as an organiser. I’ve personally benefitted significantly from my involvement in these events in each role. But in their current form they are unsustainable. They need to evolve. We need these events to work for all five groups. As we grow we need to change.

Hey, look, a #DevOps!

Come to the @DLM_Consultants booth to talk about #database lifecycle management #DLM at lunch.#SQLRelay Nottingham. pic.twitter.com/3VfBDyMuXY

— DLM Consultants (@DLM_Consultants) October 6, 2016

One trend that many of us in the community have noticed is that the number of free events, in particular SQL Saturdays, has grown rapidly in recent years. As a consequence, many sponsors have seen sharply rising costs and some have been forced to cut back. It follows that organisers have found it harder to secure the sponsorship they need to run a growing number of events. Funding free community events is getting more and more challenging.

I’m not the first person to speak about this. Many have already shared their views about how to deal with the problems associated with scaling SQL Saturdays. A few months ago PASS caused a bit of a stir when they made some changes.

As a sponsor I have seen the number of events grow. I can’t afford to sponsor them all with DLM Consultants, but that’s not a surprise because I’m not very big. However, I used to work for Redgate and even they can’t sponsor everywhere. For the larger sponsors, it isn’t because organisers are charging too much – it’s because of all the additional costs and time.

Lost count of confs I represented @Redgate at. Today’s the last. Amazing times.Thanks #SQLfamily #Redgate #SSGAS2016 pic.twitter.com/Le38Jrj0HV

— Alex Yates (@_AlexYates_) August 13, 2016

The cost of a decent-sized booth at an event might range from three to four figures and that’s fine for the big, established sponsors. As an organiser you might agonise over how much you charge, but it probably doesn’t actually make as big a difference as you think it does.

I just sponsored my first event with DLM Consultants (out of my own pocket). It cost me several times more to pay for the hotels, swag, banner, polo shirts and to take the time out of the office. I’ve spoken to other (much larger) sponsors this week at SQL Relay who really feel the pain of sending their best technical, sales and marketing people out of the office for so long. Most of the folk you see on sponsor booths have a full time job besides manning trade show booths – that’s what makes them interesting to talk to.

How would your team manage if you sent three or four of your best team members to an event for the best part of a week? Several times every month? (And if they are going to all the events then they have to deal with a fair amount of fatigue, which affects them personally, and by extension, their businesses.) As the number of events has grown, these people are being pulled out of the office more often.

Of course, SQL Saturdays aren’t during the week, which does make things easier – but add a day either side for travel etc. and you still have three days of hard work, including unsociable hours. I’ve known people who have had to fly around Europe or the US every weekend for an entire month to get to all the SQL Saturdays. The people that work the booths will need to be compensated somehow. And their employers, the sponsors, will pick up the bill.

There are a lot of free events now – and we should celebrate that. There are lots of opportunities for our community to meet, learn and prosper. But the sponsors who provide the cash don’t have bottomless pockets of money or resources. There is a limit – and they are beginning to feel the pinch. (I use the word ‘beginning’ for a reason. It’s going to get harder.)

We need to evolve our free events in order to keep growing. And growth isn’t just an aspiration, it’s a requirement. I’ll come back to that point later.

Bold statement number one – cut costs… goodbye free lunch

There is no such thing as a free lunch.

Dear organisers, you do not need to provide lunch.

I’ll say it again. Free lunch for attendees is not required. It’s a burden that is crippling the economics of free events. It doesn’t work. It is holding your event back. Stop it.

One of the biggest costs and logistical pains for organisers is the catering. Food normally costs more than venues. And given that dropout rates for attendees at free events are notoriously hard to judge, organisers normally have to over-spend on food to ensure no-one goes hungry.

I’m calling it.

It’s time we started asking people to bring a packed lunch. Or we can give attendees the option to pay a few coins at morning registration for basic sandwiches that can be ordered during the keynote from the local supermarket (so organisers don’t have to guess numbers). Of course, food must not be consumed in any room on-site other than the sponsor hall or in a sponsor talk.

To be clear, the event is free. SQL Saturdays are still free for attendees. The venue is still paid for by sponsors. The sessions are still free. People just have to pay for their own food or bring a packed lunch. I don’t think many would say that’s unreasonable.

@_AlexYates_ breaking down the ci learning curve like a boss pic.twitter.com/c6W3XSR7k2 — Terry McCann (@SQLShark) October 4, 2016

Right there you have saved a bunch of cash. Now fewer sponsors can support larger events with more attendees.

Bold statement number two – attract new sponsors

Dear organisers, your events are cheaper now, you can afford more space and you can support more people, but you still can’t expect the usual sponsors to attend all the Saturdays indefinitely. Start looking elsewhere.

Who are the local recruiters? Who are the local employers that are hiring? Who are the local single-person consulting companies who can’t afford a large booth? Many will only want a single pull-up banner and to be allowed to stand near it and perhaps to give a good technical session and/or pre-con/workshop.

You can’t necessarily expect all these folk to bring a big booth or to pay as much money, but you might fit twice as many of them in the venue. Think about new sponsorship options that are suitable for the businesses in your area.

Bold statement number three – attract new attendees

One of the biggest problems for the regular sponsors is that they see the same people year after year at free community events. It might take several interactions with a brand before someone decides to purchase, but at the same time many of the long running sponsors find themselves talking to the same people year after year who have either already bought their products/services or who never will.

Growth, reaching new attendees, is essential for SQL Saturdays in order for the established sponsors to get value out of them. Curbing their growth is a bad idea. These events need to work for everyone. Without growth, the money will dry up.

Dear organisers, how much do you know about the registrants for your next event? Do you know how many of them are first time attendees either at your event or community events in general? Can you tell me at each of your historical events what the ratio is of regular attendees to new attendees?

Organisers need to focus on finding new audiences and they need to be able to demonstrate to sponsors that there will be a large number of newbies at each event. (Finding this data should be a manageable task. The organisers are data professionals after all!)
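As one example of how manageable this is, here is a minimal Python sketch that computes the share of first-time registrants by comparing an event's registration list with attendee lists from earlier events. It assumes attendees can be matched by email address (normalised to lower case), which is a simplification; the function names and addresses are hypothetical.

```python
from typing import Iterable


def normalise(email: str) -> str:
    return email.strip().lower()


def first_timer_share(registrants: Iterable[str], past_events: Iterable[Iterable[str]]) -> float:
    """Fraction of this event's registrants who attended none of the past events."""
    current = {normalise(e) for e in registrants}
    if not current:
        return 0.0
    seen_before = set()
    for attendees in past_events:
        seen_before.update(normalise(e) for e in attendees)
    return len(current - seen_before) / len(current)


past_events = [
    ["ann@example.com", "bob@example.com"],  # e.g. last year's event
    ["cat@example.com"],                     # e.g. a user group meeting
]
registrants = ["Ann@example.com", "dee@example.com", "eve@example.com", "cat@example.com"]
print(f"{first_timer_share(registrants, past_events):.0%} first-timers")  # 50% first-timers
```

Run against each historical event in turn, the same function gives the ratio of regular to new attendees over time.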

Bold statement number four – we need more event diversity

In future, the big regular sponsors will typically sponsor some events each year, but not all of them. This means they need to know which events will be most suitable. If several hundred SQL Saturdays are all competing for the same sponsorship from the same sponsors in the same way (for example, with simple attendee numbers), everyone suffers.

If organisers want to attract sponsors, they need to look at the data again to establish the demographic of their crowd.

Dear organisers, can you tell me the sorts of people you are attracting, compared to the other SQL Saturdays/Relays/User groups etc? Is your audience a highly experienced bunch or a young grad crowd? Are they in engineering or managerial roles? Are they on-prem SQL users or Azure or BI fans? Is there anything else unique about them that may be valuable to specific sponsors?

Once you know what the attendee demographic looks like you can be more strategic about which sponsors you go after. If you have grads you want appropriate training providers and tools sponsors. If you have an experienced crowd you want good quality recruiters and different sorts of training providers. If you have a BI crowd, an Azure crowd or a traditional SQL Server crowd you’ll want to go after appropriate tools sponsors. If you have managers you want consultants and enterprise tools sponsors instead of download-try-buy tools sponsors. Of course, this is a massive generalisation, but you get the idea.

Once you know this information (and can prove it) it puts you in a better position to target appropriate sponsors and it makes it easier for sponsors to understand which events will be the most likely to give them the biggest bang for their buck. It also means you are less likely to compete for the same sponsors with the other events in your area that have different demographics.

(And by the way, once you understand your demographic you will be better at selecting/attracting relevant speakers too!)

We’ll, something happened. Never had 40 notifications after a session before! Not sure if it was good or bad!#sqlRelay #speakerSelfie pic.twitter.com/wUm89j09KK

— Alex Yates (@_AlexYates_) October 4, 2016

Bold statement number five – attendees have a duty to talk to sponsors

Dear attendees, that’s right… you heard me.

I’m fully aware that as a regular sponsor I might be coming on a bit strong here – but hear me out. I think it’s justified. As I mentioned before, I regularly attend these events as an attendee, speaker, helper and organiser too, so I’m not coming from an entirely one-sided perspective.

Without the sponsors these events can’t happen for free. As an attendee, if you want training, there are free and paid options available. If you want to go to a free event then someone else is paying for you to receive your training/networking opportunities. If you want to attend events without having to speak to sponsors, go to paid events, or make an anonymous donation to your chosen free event and ignore the sponsors to your heart’s content.

#SQLRelay Reading in full flow Can you spot the @DLM_Consultants ?

pic.twitter.com/qReHyqKC8R

— Rob Sewell (@sqldbawithbeard) October 5, 2016

Organisers will normally politely ask attendees to go and speak to the sponsors. It is seen as a nice thing to do. An optional thing that attendees can do to show their gratitude or to learn about the products and services the sponsors have to offer.

I don’t think organisers should need to say any more than that. Attendees should not feel strongly pressured by the organisers or sponsors to do the rounds. That’s not nice. However, attendees do need to play their part. It’s not just a nice way to show gratitude – it is one of the crucial dependencies between the different components of these events. Attendees need to speak to sponsors for the entire system to work. In an era where free events are feeling the growing pains and where sponsorship is harder and harder to secure it is increasingly important for attendees to do their bit.

This sense of duty should not have to be stated, it should come from within. We should create a culture where speaking to the sponsors is seen instinctively as the right thing to do, rather than something that attendees need to be reminded about. We all have a role to play in that if we either attend events ourselves or we know people who do.

If you have attended a free SQL event and you have not taken fifteen minutes out of one of your lunch or coffee breaks to go and say hi to the sponsors I’m afraid you should feel a bit guilty. You are taking advantage of the system. If you are the sort of person who tries to get your raffle ticket into a sponsors’ raffle draw while they are distracted to avoid speaking to them – well, that’s really not cool. Everyone – attendees, organisers, sponsors, speakers and helpers – will lose out if attendees don’t give sponsors a chance.

Next time you go to a free event as an attendee, during a break, make an effort to go up to each sponsor and ask a simple question: “Hi, can you tell me what you do?” You don’t need to have a long conversation. If their stuff isn’t appropriate for you, tell them so politely and move on. Believe me, once you have told them that you aren’t the right person for them to be speaking to, they’ll let you walk away as quickly as you want. On the other hand, there is a chance they’ll have something to share that is actually interesting or helpful – and you might benefit from it.

Most of the sponsors are friendly and polite. If you feel any of them are too pushy, report it to the event organisers. Those sorts of sponsors damage the ecology of SQL events in their own way, and if you tell the organisers, they should deal with it.

Tell me why I’m wrong

I believe we can become more efficient by cutting our catering costs.

I believe we can fund more events by looking for new and different sponsors.

I believe we can keep SQL Saturdays attractive to sponsors by attracting new attendees. We should not curb their growth – we need to continue to grow. We should make an effort to accommodate extra attendees through our efficiency and funding changes.

I believe that by helping sponsors to understand the different demographics of different events they will be able to spend their sponsorship resources more wisely to get a better return, allowing them to justify supporting more events.

I believe all attendees should feel a duty to at least say hi to each sponsor. That’s their part of the deal and if they shirk that responsibility they should feel a bit bad.

Let me know what you think. What changes would you make to the way we run free community events to ensure they continue to grow and thrive, so that more people can benefit like I have, whatever role they are playing?

Looking back through the photos…#SQLRelay was awesome.

pic.twitter.com/G8YaBg68CU

— Alex Yates (@_AlexYates_) October 10, 2016

Questions You Should Be Asking About Your Backups

#basicbackup

So you’re taking backups! That’s great. Lots of people aren’t, and that’s not so great. Lots of people think they are, but haven’t bothered to see if the jobs are succeeding, or if their backups are even valid. That’s not great, either; it’s about the same as not taking them to begin with. If you want an easy way to check on that, sp_Blitz is your white knight. Once you’ve got it sorted out that you’re taking backups, and they’re not failing, here are some questions you should ask yourself. Or your boss. Or random passersby on the street. They’re usually nice, and quite knowledgeable about backups.

These questions don’t cover more advanced scenarios, like if you’re using Log Shipping or Mirroring, where you have to make sure that your jobs pick up correctly after you fail over, and they certainly don’t address where you should be taking backups from if you’re using Availability Groups.

How often am I taking them?

If someone expects you to only lose a certain amount of data, make sure that your backup schedules closely mimic that expectation. For example, when your boss says “hey, we can’t lose more than 15 minutes of data or we’ll go out of business”, that’s a pretty good sign that you shouldn’t be using the simple recovery model and taking daily full backups. Right? Plot this out with the people who pay the bills.

I’m not knocking daily full backups, but there’s no magic stream-of-database-consciousness that happens with them so that you can get hours of your data back at any point. For that, you’ll need to mix in transaction log backups, and maybe even differentials, if you’re feeling ambitious.
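Put into numbers: if the server dies just before the next backup runs, the worst-case data loss is roughly the interval between your most frequent restorable backups. The Python sketch below captures that simplification (it ignores how long backups take to run and any tail-log backup you might still be able to take after a failure); the function names are hypothetical.

```python
from datetime import timedelta
from typing import Optional


def worst_case_loss(
    full: timedelta,
    diff: Optional[timedelta] = None,
    log: Optional[timedelta] = None,
) -> timedelta:
    """Approximate worst-case data loss: the shortest interval between restore points.
    Log backups are only possible in the full or bulk-logged recovery model."""
    intervals = [i for i in (full, diff, log) if i is not None]
    return min(intervals)


def meets_rpo(
    rpo: timedelta,
    full: timedelta,
    diff: Optional[timedelta] = None,
    log: Optional[timedelta] = None,
) -> bool:
    return worst_case_loss(full, diff, log) <= rpo


rpo = timedelta(minutes=15)
print(meets_rpo(rpo, full=timedelta(days=1)))                            # False
print(meets_rpo(rpo, full=timedelta(days=1), log=timedelta(minutes=15)))  # True
```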

Am I validating my backups?

Most people see their backups completing without error as victory. It’s not bad, but making sure they can be restored is important, too. Sometimes things go wrong!

It’s also helpful for you to practice restore commands. A little bit of familiarity goes a long way, especially when it comes to troubleshooting common errors. Do I need to rename files? Restore to a different path? Change the database name? Do I have permissions to the folder? This is all stuff that can come up when doing an actual restore, and having seen the error messages before is super helpful.

Where am I backing up to?

If you’re backing up to a local drive, how often are you copying the files off somewhere else? I don’t mean to be the bearer of paranoia, but if anything happens to that drive or your server, you lose your backups and your data. And your server. This puts you in the same boat as people not taking backups.

How many copies do I have?

If you lose a backup in one place, does a copy exist for you to fall back on? Are both paths on the same SAN? That could be an issue, now that I think about it.

How long do I keep them?

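Do you have data retention policies? How often are you running DBCC CHECKDB? These are important questions, and they’re related: if CHECKDB only runs weekly, say, but you keep just a few days of backups, corruption could already be present in every backup you still have. If you have a long retention policy, make sure you’re using backup compression to minimize how much disk space is taken up.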

How do I restore them?

Here’s a great question! You have a folder full of Full, Differential, and Log Backups. What do you do to put them together in a usable sequence? Paul Brewer has a great script for that. If you use a 3rd party tool, this is probably taken care of. If you use Maintenance Plans or Ola’s scripts, you might find that a bit more difficult, especially if the server you took the backups on isn’t around. You lose the msdb history, as well as any commands logged via Ola’s scripts. That means building up the history to restore them is kaput.

Way kaput.

Totes kaput.
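The core sequencing rule that such restore scripts handle can be sketched in Python: take the newest full backup before the point you want to restore to, then the newest differential taken after that full, then every log backup after that, stopping at the first log that reaches the target time. This is a simplified sketch under stated assumptions: it orders backups by finish time, whereas SQL Server actually chains backups by log sequence number (LSN), and it assumes the log chain is unbroken. The file names and the restore_chain helper are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Backup:
    path: str          # hypothetical file name
    kind: str          # "FULL", "DIFF" or "LOG"
    finished: datetime


def restore_chain(backups: List[Backup], stop_at: datetime) -> List[Backup]:
    """Pick the backups to restore, in order, to reach stop_at.
    Simplification: orders by finish time instead of LSN and assumes an unbroken log chain."""
    ordered = sorted(backups, key=lambda b: b.finished)
    fulls = [b for b in ordered if b.kind == "FULL" and b.finished <= stop_at]
    if not fulls:
        raise ValueError("no full backup finished before the target time")
    chain = [fulls[-1]]

    # The newest differential after that full lets us skip the logs taken before it.
    diffs = [b for b in ordered
             if b.kind == "DIFF" and chain[0].finished < b.finished <= stop_at]
    if diffs:
        chain.append(diffs[-1])

    # Then every log backup after that, up to the first one that reaches stop_at.
    start = chain[-1].finished
    for b in ordered:
        if b.kind == "LOG" and b.finished > start:
            chain.append(b)
            if b.finished >= stop_at:
                break
    return chain


backups = [
    Backup("full_sun.bak", "FULL", datetime(2016, 10, 2, 0, 0)),
    Backup("full_mon.bak", "FULL", datetime(2016, 10, 3, 0, 0)),
    Backup("log_mon_1145.trn", "LOG", datetime(2016, 10, 3, 11, 45)),
    Backup("diff_mon_1200.bak", "DIFF", datetime(2016, 10, 3, 12, 0)),
    Backup("log_mon_1215.trn", "LOG", datetime(2016, 10, 3, 12, 15)),
    Backup("log_mon_1230.trn", "LOG", datetime(2016, 10, 3, 12, 30)),
    Backup("log_mon_1245.trn", "LOG", datetime(2016, 10, 3, 12, 45)),
]
for b in restore_chain(backups, stop_at=datetime(2016, 10, 3, 12, 20)):
    print(b.path)
```

The last log in the chain would be restored with STOPAT set to the target time; every earlier step would be restored WITH NORECOVERY.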

Thanks for reading!

T-SQL Tuesday #83 – We’re still terrible at delivering databases

This is my first contribution to the T-SQL Tuesday blog party. This month it’s hosted by the impatient DBA. The theme for this month is that we’re still dealing with the same problems.

I hope you don’t all want to throw me out of the party after you finish reading…

Here goes. *GULP*

When a graduate sales guy, within a few months of starting his first sales role, (sales!), with no background in IT, (none!), is already regularly, (regularly!), advising experienced data professionals about how to improve their delivery process – there’s a problem. An endemic problem.

And some data professionals still don’t care.

Now granted, this precocious know-it-all sales guy didn’t know the first thing about maintaining or developing databases. If you had asked him to write even a simple SELECT statement he would have struggled to open Management Studio and connect to the right SQL instance, but he understood a few basic problems and, in principle, how they might be solved. He was able to share this advice with database professionals and those professionals (sometimes) were able to use the advice to solve real problems.

This aggravating sales guy worked for a software tools vendor. That tools vendor sold a database comparison tool. DBAs and developers could use said comparison tool to compare (for example) a development database to a production database, reveal all the differences and script out a deployment script. Over and over this (frankly annoying) sales person would answer the phone to someone who said they had a problem with a deployment, please can they just buy a licence of said comparison tool to help them figure it out.

Rather than politely processing the order, this spotty sales kid had the audacity to suggest to the highly qualified and experienced data professional that actually this comparison tool would only fix the symptoms, not the root cause. Every now and then a DBA or developer (or manager, eek!) would become outraged that this glorified call centre operator would have the balls to second-guess them and act like he knew more than they did. Sales people, as everyone knows, are just parasites extracting their commission cheques from the already stretched budgets of software businesses. Just imagine how many servers you could buy if your organisation didn’t have to pay sales commission. It doesn’t bear thinking about.

If this smart Alec hadn’t already annoyed the data professional (they were a DBA on this occasion, but not always), what he was about to say would more than likely do the trick. “I’m sorry Mr or Mrs DBA, but buying [said third party comparison product] won’t fix your problem. The real problem is that you don’t know what you need to deploy.”

Boy this kid was in for it now. Claiming that an experienced professional didn’t know what (s)he was deploying? That’s as good as saying they didn’t know what they were doing! Who does this audacious drop-out think he is? Clearly this attitude is why he never got a *real* job.

It got worse.

“I’m sorry Mr or Mrs DBA, but buying [said third party comparison product] won’t fix your problem. The real problem is that you don’t know what you need to deploy. As a consequence you haven’t tested it properly. And by the way, you know you already have potentially dangerous code running in production because you just told me that sometimes you make undocumented ‘hot-fix’ changes there. Basically, you need to re-think your whole process.”

It’s at this point the contemptuous ‘too good for a real job’ youngster even started to raise the eyebrows of his own colleagues. His own manager would stand up from her desk and start to walk over, ready to intervene. She had sales targets to hit and couldn’t afford for this newbie to screw up even the simplest transactions. This should have been money in the bank!

“The real problem is that you don’t have any sort of change control. You aren’t source controlling your SQL. You also need to look at unit testing. Finally, frankly, executing these deployments manually is a bad idea – but we’ll get to that. Let’s start by getting your manager on the phone and discussing all the problems you currently have.”

…

I’m not going to lie – this sales approach didn’t always go well for me.

However, fast forward six years and I’m still having the same conversations, but now I’m doing it as a consultant (and I’m getting paid much more for the trouble :-P). I would still make a terrible DBA, or a terrible developer, (although I can just about type SELECT * FROM nowadays), but after six years solving the same problems again and again and again I’ve earned a certain reputation for being pretty good at a very niche job.

Hey, look, a #DevOps!

Come to the @DLM_Consultants booth to talk about #database lifecycle management #DLM at lunch.#SQLRelay Nottingham. pic.twitter.com/3VfBDyMuXY

— DLM Consultants (@DLM_Consultants) October 6, 2016

The problem for me is that mine is still seen as a niche role. I find the fact that there is even a market for Database Lifecycle Management (DLM) consultants in the first place pretty sad. It’s 2016 and there are still many SQL folk who don’t version control their databases. The vast majority of the SQL community aren’t doing any automated testing – yet they wonder why they end up with horrible legacy databases that break in unpredictable ways. And for some reason, many SQL folk still love their slow, manual and error-prone deployment processes, which by 2016 they must (surely!) understand are simply embarrassing.

It’s about time we took database change management, testing and deployment seriously. After all, isn’t delivering our businesses’ data requirements in a timely fashion (without breaking stuff) the essence of our job roles? Let’s start doing it properly.

…

Sorry if I just ruined the party. You all seem like nice folk, honestly. And the punch was delicious.

I’ll see myself out.

Editor's Soapbox: Programming is Hard

A bit ago, I popped into an “Explain Like I’m 5” thread to give my version of the differences between C, C++, Objective-C and C#. In true Reddit fashion, I had the requisite “no five year old could understand this” comments and similar noise. One thing that leapt out at me was that a few commenters objected to this statement: “Programming is hard.”

The most thorough objection read like this:

Programming is not hard(such a discouraging label). If you learned a written language, you can learn a computer language. The only thing difficult about programming is mastering the art of learning itself, which is what separates the soon-to-be obsoletes from the ever-evolving masters of the trade. Good programming, at its heart, is all about keeping up with the technologies of the industry. The fundamentals themselves are easy peasy.

– /u/possiblywithdynamite

Now, I don’t want to pick on /u/possiblywithdynamite specifically, although I do want to point out that “keeping up with the technologies of the industry” is the exact opposite of good programming. I’d also like to add that a positive attitude towards “life-long learning” is necessary to be successful in general, not just in programming. But I don’t want to get sidetracked by those points.

Instead, I want to focus on a few other related things:

- Programming is hard

- Skill has value

- Programming should be hard

- That doesn’t mean we can’t make programming accessible to non-programmers

Now, what do we mean by “hard”? There’s the obvious definition: you have to do a lot of work to succeed. Time and energy have to be invested. Or, it’s a task that requires great skill and talent. These are our common ideas of “hard”, and they’re more what I meant in my original comment, but I want to highlight an alternate definition of “hard”.

A task is hard when there are more ways to screw it up than to do it correctly. If you’re blundering through the world of programming and software development, there are a lot more ways to be wrong than there are to be right. Most of what we do on this site is inventory all the ways in which people can mangle basic tasks. Think about how many CodeSODs work but are also terrible.

When we talk about learning a skill (be it programming, a musical instrument, writing, improvised comedy, etc.), what we’re really talking about is building habits, patterns, and practices that help us do things correctly more often than we do them wrong. And how do we do that? Usually by screwing up a bunch of times. Improvisers call this “getting your reps”, musicians call it “going to the woodshed” (because your playing is so awful you need to be away from the house so as not to disturb your family). As the old saying goes, “An expert is someone who has failed more times than a novice has even tried.” The important thing is that you can read up on best practices and listen to experts, but if you want to get good at something hard, you’re going to need to screw up a bunch. We learn best from failures.

Okay, so programming is hard, and we can deal with its inherent difficulty by building skill. But should programming be hard? After all, haven’t we spent the past few decades finding ways to make programming easier?

Well, I don’t want to imply that we should make programming hard just for the sake of making it hard. I don’t want to give the impression that we should all throw out our garbage collectors and our application frameworks and start making hand-crafted artisanal Assembly. Keep in mind how I defined hard: lots of ways to screw up, but only a few ways to succeed. That means difficulty and degrees of freedom are related. The more options and flexibility you have, the harder everything has to be.

Let’s shift gears. I didn’t learn to ride a bike until relatively late compared to my peers. As was standard at the time, my parents bought me a small bike and put training wheels on it. I was just awful at it. I’d fall over even with the training wheels on. I hated it, but for a kid too young to drive in the “yes, you can go out by yourself” era of the 80s, being able to ride a bike was a freedom I desperately wanted.

At some point, my parents decided I was too old for training wheels, so they took them off. “Sink or swim,” my dad said. And it was amazing, because I almost instantly got better. Within a few days, I was riding like I was born to it. Cheerfully running errands down to the corner shop, riding back and forth to school, it was everything I wanted.

Little did I know that training wheels are considered harmful.

To learn to bike, you must solve two problems: the pedaling problem and the balance problem. Training wheels only solve the pedaling problem, which is the easy one. Learning to balance on a bike is much more difficult, and a “training” tool that eliminates the need to balance is worse than beside the point.

Which brings us to languages like Scratch and Blockly. Now, these are educational “toy” languages with syntax training wheels. You cannot write a syntactically incorrect program in these languages. You simply aren’t permitted by the editor. I am not a child educator, so I can’t speak to how effective they are as educational tools. But these sorts of visual languages aren’t limited to educational languages. There’s SSIS and Windows Workflow. MacOS has Automator. I still see Pointy-Haired Bosses looking for UML diagrams before anybody writes a line of code.

It’s not just “visual” languages. Think about “simple” languages like JavaScript, PHP, VB. Think about the worst sin Microsoft ever committed: putting a full-featured IDE in their Office suite. In all these cases, the languages attempt to constrain your options, provide faster feedback, and make a “best guess” about what you’re trying to do. They try to steer you around some of the common mistakes by eschewing types, simplifying syntax, and cutting back on language features.

I am not trying to say that these languages are bad. I’m not trying to say that they don’t have a place. I definitely don’t want this to sound like Ivory Tower Elitism. That’s not the point I want to make. What I am saying is that simplifying languages by helping the users solve the easy problems and not the hard ones is a mistake.

Beyond that point, programming needs to be hard. Let’s focus on syntax. I remember in my first C++ class, I spent days (literal days) hunting for a semicolon. That’s a huge hurdle for a beginner, struggling with no progress and unhelpful feedback. Languages that simplify their syntax, that forgive missing semicolons, seem like a pretty natural way to bypass that difficult hump.

Here’s the problem, though: once you understand how to think syntactically, syntax itself isn’t really a bother. There’s a hump, but the line flattens off quickly. Learning a new language syntax ceases to be a challenge. By taking the training wheels off, you’re going to fail more often, and failing is how you learn. You can find more things to do, and more ways to do them, without having to start over, because you built strong habits. More than that, you can’t avoid the importance of syntax. At some point, your application will need to interact with data, and that data will need to be structured. We’re often going to wrap that structure in some kind of syntax.

This is just an illustration of the general problem. Making a hard task easier means giving up flexibility and freedom. Our tools become less expressive, and less powerful, as a result. The users of those tools become less skilled. They’re less able to navigate past the bad solutions because the bad solutions aren’t even presented to them.

Now, if this ended here, it’d be in danger of being a grognard-screed. “My powerful-but-opaque-and-incomprehensible-tooling isn’t the problem, you just need to ‘git gud’.” I emphatically do not want this to be read that way, because I strongly believe that programming and software development need to be accessible. In the wilderness of incorrect solutions, someone with little or no experience should be able to muddle through and see progress towards a goal. I don’t want the IT field to be a priesthood of experts who require initiates to undergo arcane rituals before they can be considered “worthy”.

Hard means it’s easy to screw up. Accessible means that there are some signposts that will help keep you on the path. These are not mutually exclusive. Off the top of my head, here are a few things that we can keep in mind when designing languages, tools, and tutorials:

- Incremental Complexity: Each option a user has is another option to screw up. You’ve made their lives harder. That doesn’t mean you should take options away, but it does mean that you should present the options gradually. Concepts in your language, tool, or tutorial should stand alone or build on simpler concepts. There are so many designs that miss this.

- Glider Bikes, not Training Wheels: If you’re constraining degrees of freedom, if you’re nerfing your tool to avoid certain kinds of mistakes, you need to understand why. What’s the benefit? What habits do you want your users to build? This goes from things like building a visual syntax to even things like using garbage-collected memory. I’d argue that, by and large, garbage collection is a good thing.

- Fail or Succeed Fast and Obviously: In terms of making programming accessible, one of the greatest enhancements that ever happened was live syntax-checking in our editors and IDEs.

- Visualizations are Good: Right now, I’m trying to wrap my head around TensorFlow, Google’s Neural Network API. I’d be lost without TensorBoard, which takes your dataflow and creates a diagram of it. In general, providing an understanding of a program in multiple ways is good. Code is always truth, but UML diagrams aren’t terrible (until they’re used as a specification instead of a visualization).

- Signpost All The Things!: Signposts aren’t just warnings of danger, they’re also guides. If you want to get from here to there, go this way, not that way. These take the form of clear error messages, excellent tutorials, and useful libraries and functions. An implementation of the Builder pattern isn’t just a great way to design an API, it’s a great way to show someone how to design their own APIs. This also encapsulates the idea of “affordances”: the design of a thing tells you instantly how to use the thing.

- Be Unsurprising: If I’m in the unknown, I don’t know if I’m doing the right or the wrong thing. And if there are surprises in store, I’m going to get even more confused. A surprise is anything that’s different, and I don’t like things that are different.

Also, don’t lie. Programming is hard. That might be a “discouraging” label, but it’s an accurate label. What’s more discouraging, going: “This is hard, but here’s how you can get started,” or “This is easy (but it’s actually hard and you’re going to fail more often than you succeed)”?

Phew. This turned into a much longer piece than I imagined it would be. As developers, we’re constantly building tools for others to use: other developers, our end users, and maybe even aspiring developers. Understanding what makes something hard, and why it’s sometimes good for things to be hard, is important. Recognizing that we can deal with difficult problems by building skills, by practicing and honing our craft, means that we also have to recognize the value of being skilled.

[Advertisement] Release!

is a light card game about software and the people who make it. Play with 2-5 people, or up to 10 with two copies - only $9.95 shipped!


Cumulative Update #13 for SQL Server 2012 SP2

Dear Customers,

The 13th cumulative update release for SQL Server 2012 SP2 is now available for download at the Microsoft Support site.

To learn more about the release or servicing model, please visit:

- CU#13 KB Article: https://support.microsoft.com/en-us/kb/3165266

- Understanding Incremental Servicing Model for SQL Server

- SQL Server Support Information: http://support.microsoft.com/ph/2855

- Update Center for Microsoft SQL Server: http://technet.microsoft.com/en-US/sqlserver/ff803383.aspx

01/29/16 PHD comic: 'Special folder'

Piled Higher & Deeper by Jorge Cham (www.phdcomics.com)

Title: "Special folder", originally published 1/29/2016

Why aren’t SAN snapshots a good backup solution for SQL Server?

First rule of backups: Backup can’t depend on the production data.


SAN snapshots, and I don’t care who your vendor is, by definition depend on the production LUN. Well, that’s the production data.

That’s it. That’s all I’ve got. If that production LUN fails for some reason, or becomes corrupt (which sort of happens a lot) then the snapshot is also corrupt. And if the snapshot is corrupt, then your backup is corrupt. Then it’s game over.

Second rule of backups: Backups must be moved to another device.

With SAN snapshots, the snapshot lives on the same device as the production data. Say the production array fails (which happens), or gets decommissioned by accident (it’s happened), or tips over because the raised floor collapsed (it’s happened), or someone pulls the wrong disk from the array (it’s happened), or someone showing off how good the array’s RAID protection is pulls the wrong two disks (it’s happened), or two disks in the same RAID set fail at the same time, or close enough to each other that the volume rebuild doesn’t finish between the two failures (yep, that’s happened as well), and so on. If any of these happen to you, it’s game over. You’ve just lost the production system, and the backups.

I’ve seen two of those happen in my career. The others that I’ve listed are all things which I’ve heard about happening at sites. Anything can happen. If it can happen it will (see item above about the GOD DAMN RAISED FLOOR collapsing under the array), so we hope for the best, but we plan for the worst.

Third rule of backups: OLTP systems need to be able to be restored to any point in time.

See my entire post on the SAN vendors’ version of “point in time” vs. the DBA’s version of “point in time”.

If I can’t restore the database to whatever point in time I need to, and my SLA with the business says that I need to, then it’s game over.
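To make the point-in-time requirement concrete, here is a minimal Python sketch of the reasoning behind a restore chain: starting from a full backup, you need an unbroken sequence of transaction log backups that reaches the target time. This is a simplified model, not SQL Server’s actual logic: it tracks coverage with timestamps, whereas SQL Server chains log backups by LSN, and the `LogBackup` and `logs_needed` names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class LogBackup:
    # Simplification: SQL Server chains log backups by LSN, not by wall-clock time.
    start: datetime
    end: datetime


def logs_needed(full_backup_end: datetime, logs: List[LogBackup],
                target: datetime) -> List[LogBackup]:
    """Return the log backups to replay after the full backup to reach `target`.

    Raises ValueError if the target predates the full backup, if the log
    chain has a gap, or if the chain stops before the target.
    """
    if target < full_backup_end:
        raise ValueError("target is earlier than the full backup")
    chain: List[LogBackup] = []
    covered_until = full_backup_end
    if covered_until >= target:
        return chain  # the full backup alone is enough
    for log in sorted(logs, key=lambda b: b.start):
        if log.end <= covered_until:
            continue  # already covered by the full backup or an earlier log
        if log.start > covered_until:
            raise ValueError(f"gap in the log chain after {covered_until}")
        chain.append(log)
        covered_until = log.end
        if covered_until >= target:
            return chain
    raise ValueError(f"log chain stops at {covered_until}, before {target}")
```

In this model, a snapshot-only strategy is the degenerate case: with no log backups at all, the only reachable points in time are the backups themselves.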

Fourth rule of backups: Whoever’s butt is on the line when the backups can’t be restored gets to decide how the data is backed up.

If you’re the systems team and you’ve sold management on this great snapshot-based backup solution so that the DBAs don’t need to worry about it, guess what conversation I’m having with management? It’s going to be the “I’m no longer responsible for data being restored in the event of a failure” conversation. If you handle the backups and restores, then you are responsible for doing them, and it’s your butt on the line when your process isn’t up to the job. I don’t want to hear about it being my database all of a sudden.

Just keep in mind that when you can’t restore the database to the correct point in time, it’s probably game over for the company. You just lost a day’s worth of production data? Awesome. How are you planning on getting that back into the system? This isn’t a file server or the home directory server where everything that was just lost can be easily replaced or rebuilt. This is the system of record that is used to repopulate all those other systems, and if you break rules number one and two above, you’ve just lost all the company’s data. Odds are we just lost all our jobs, as did everyone else at the company. So why don’t we leave the database backups to the database professionals?

Now I don’t care what magic the SAN vendor has told you they have in their array. It isn’t as good as transaction log backups. There’s a reason that we’ve been doing them since long before your SAN vendor was formed, and there’s a reason that we’ll be doing them long after they go out of business.

If you are going to break any of these rules, be prepared for the bad things that happen afterwards. Breaking most of these rules will eventually lead to what I like to call an “RGE”. An RGE is a Resume Generating Event, because when these things happen people get fired (or just laid off, if they are lucky).

So don’t cause an RGE for yourself or anyone else, and use normal SQL backups.

Denny

The post Why aren’t SAN snapshots a good backup solution for SQL Server? appeared first on SQL Server with Mr. Denny.

Compromising Yourself with WinRM’s “AllowUnencrypted = True”

One thing that’s a mixed blessing in the world of automation is how often people freely share snippets of code that you can copy and paste to make things work.

Sometimes, this is a snippet of code / functionality that would have been hard or impossible to write yourself, and saves the day. Sometimes, this is a snippet that changes some configuration settings to finally make something work.

For both types of code, you should really understand what’s happening before you run it. Configuration snippets are particularly important in this regard, as they permanently change the posture of the system.

One disappointing example is the number of posts out there that show you how to enable CredSSP without ever discussing the dangers. They don’t tend to warn you that the CredSSP authentication mechanism essentially donates your username and password to the remote system – the reason we disable it by default.

So let’s talk about another example, where folks demonstrate how to easily connect to WinRM over SOAP directly.

winrm set winrm/config/client/auth @{Basic="true"}

winrm set winrm/config/service/auth @{Basic="true"}

winrm set winrm/config/service @{AllowUnencrypted="true"}

Hmm. That’s configuring a lot of non-default settings. And without any sort of security guidance. But whatever.

I can use pretty much any HTTP-aware tool to make calls now. Take an example of using a client that requires these settings, enumerating the ‘WinRM’ service from a remote computer. Here’s a network capture of that event:

The tool is using ‘Authorization: Basic’, as you can see from the top. The rest of the red is the content of the WinRM SOAP request.

The first thing you’ll notice is that this is a lot of unencrypted content. In fact, all of it. This command and response were sent over plain HTTP. If I was retrieving sensitive information from that remote computer, it is now public knowledge. This message also could have been tampered with in transit, either going there or coming back. If an attacker intercepted this communication, they could have rewritten my innocent service request to instead add themselves to that machine’s local Administrators group.

There’s one particularly sensitive bit of information you may have noticed. The Authorization header:

Authorization: Basic RnJpc2t5TWNSaXNreTpTb21lIVN1cDNyU3RyMG5nUGFzc3coKXJk

If we research what that complicated string of text is, we’ll see that it’s just a Base64 encoding of the username and password, separated by a colon:

PS [C:\temp]
>> [System.Text.Encoding]::Ascii.GetString([Convert]::FromBase64String("RnJpc2t5TWNSaXNreTpTb21lIVN1cDNyU3RyMG5nUGFzc3coKXJk"))
FriskyMcRisky:Some!Sup3rStr0ngPassw()rd

Hope you didn’t need those credentials, because you just donated them!
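The decoding step is not specific to PowerShell. As a minimal Python sketch (the `decode_basic_auth` name is hypothetical), this is all an eavesdropper on the plain-HTTP connection needs to do with the captured header value; no key or secret is involved:

```python
import base64
from typing import Tuple


def decode_basic_auth(header_value: str) -> Tuple[str, str]:
    """Recover (username, password) from an HTTP 'Authorization: Basic ...' value.

    Basic authentication is Base64 encoding, not encryption.
    """
    scheme, _, token = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic Authorization header value")
    decoded = base64.b64decode(token).decode("utf-8")
    # The user-id may not contain a colon (RFC 7617), so split on the first one;
    # the password itself may contain colons.
    username, _, password = decoded.partition(":")
    return username, password


# prints ('FriskyMcRisky', 'Some!Sup3rStr0ngPassw()rd')
print(decode_basic_auth("Basic RnJpc2t5TWNSaXNreTpTb21lIVN1cDNyU3RyMG5nUGFzc3coKXJk"))
```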

Basic Authentication isn’t always the devil, as it can be done over a secure authenticated channel (like HTTPS). And HTTP isn’t always the devil, as it can be done over a secure authenticated channel (like Kerberos). But combine them (and disable all kinds of WinRM security safeguards), and you’re in for a bad day.

So please – if you are using code from others, make sure you understand what it does. Understanding code is much easier than writing it, so you’re still benefiting.

And blog / sample authors? Don’t think you’re getting away so easy :) If you’re providing code samples that might have an unintended side effect (i.e.: complete system and credential compromise), please make those risks drastically clear. Saying “for testing purposes only” doesn’t count.

Lee Holmes [MSFT]

Principal Software Engineer

How to enable TDE Encryption on a database in an Availability Group

By default, the Add Database Wizard and New Availability Group Wizard for AlwaysOn Availability Groups do not support databases that are already encrypted: see Encrypted Databases with AlwaysOn Availability Groups (SQL Server).

If you have a database that is already encrypted, it can be added to an existing Availability Group – just not through the wizard. You’ll need to follow the procedures outlined in Manually Prepare a Secondary Database for an Availability Group.

This article discusses how TDE encryption can be enabled for a database that already belongs to an Availability Group. After a database is already a member of an Availability Group, the database can be configured for TDE encryption but there are some key steps to do in order to avoid errors.

To follow the procedures outlined in this article you need:

- An AlwaysOn Availability Group with at least one Primary and one Secondary replica defined.

- At least one database in the Availability Group.

- A Database Master Key on all replica servers (primary and secondary servers)

- A Server Certificate installed on all replica instances (primary and all secondary replicas).

For this configuration, there are two servers:

SQL1 – the primary replica instance, and

SQL2 – the secondary replica instance.

Step One: Verify each replica instance has a Database Master Key (DMK) in Master – if not, create one.

To determine if an instance has a DMK, issue the following query:

USE master;
GO
SELECT * FROM sys.symmetric_keys WHERE name = '##MS_DatabaseMasterKey##';

If a record is returned, then a DMK exists and you do not need to create one, but if not, then one will need to be created. To create a DMK, issue the following TSQL on each replica instance that does not have a DMK already:

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'Mhl(9Iy^4jn8hYx#e9%ThXWo*9k6o@';

Notes:

- If you query the sys.symmetric_keys without a filter, you will notice there may also exist a “Service Master Key” named: ##MS_ServiceMasterKey##. The Service Master Key is the root of the SQL Server encryption hierarchy. It is generated automatically the first time it is needed to encrypt another key. By default, the Service Master Key is encrypted using the Windows data protection API and using the local machine key. The Service Master Key can only be opened by the Windows service account under which it was created or by a principal with access to both the service account name and its password. For more information regarding the Service Master Key (SMK), please refer to the following article: Service Master Key. We will not need to concern ourselves with the SMK in this article.

- If the DMK already exists and you do not know the password, that is okay as long as the service account that runs SQL Server has SA permissions and can open the key when it needs it (default behavior). For more information refer to the reference articles at the end of this post.

- You do not need to have the exact same database master key on each SQL instance. In other words, you do not need to back up the DMK from the primary and restore it onto the secondary. As long as each secondary has a DMK then that instance is prepared for the server certificate(s).

- If your instances do not have DMKs and you are creating them, you do not need to have the same password on each instance. The TSQL command, CREATE MASTER KEY, can be used on each instance independently with a separate password. The same password can be used, but the key itself will still be different due to how our key generation is done.

- The DMK itself is not used to encrypt databases – it is used simply to encrypt certificates and other keys in order to keep them protected. Having different DMKs on each instance will not cause any encryption / decryption problems as a result of being different keys.

Step Two: Create a Server Certificate on the primary replica instance.

The Database Encryption Key (DEK) used to enable TDE on a given database must be protected by a Server Certificate. To create a Server Certificate, issue the following TSQL command on the primary replica instance (SQL1):

USE master;
GO
CREATE CERTIFICATE TDE_DB_EncryptionCert
WITH SUBJECT = 'TDE Certificate for the TDE_DB database';

To validate that the certificate was created, you can issue the following query:

SELECT name, pvt_key_encryption_type_desc, thumbprint FROM sys.certificates

The result set lists each certificate’s name, how its private key is encrypted, and its thumbprint.

The thumbprint will be useful because when a database is encrypted, it will indicate the thumbprint of the certificate used to encrypt the Database Encryption Key. A single certificate can be used to encrypt more than one Database Encryption Key, but there can also be many certificates on a server, so the thumbprint will identify which server certificate is needed.

Step Three: Back up the Server Certificate on the primary replica instance.

Once the server certificate has been created, it should be backed up using the BACKUP CERTIFICATE TSQL command (on SQL1):

USE master;
GO
BACKUP CERTIFICATE TDE_DB_EncryptionCert
TO FILE = 'TDE_DB_EncryptionCert'
WITH PRIVATE KEY (
    FILE = 'TDE_DB_PrivateFile',
    ENCRYPTION BY PASSWORD = 't2OU4M01&iO0748q*m$4qpZi184WV487'
);

The BACKUP CERTIFICATE command will create two files. The first file is the server certificate itself. The second file is a “private key” file, protected by a password. Both files and the password will be used to restore the certificate onto other instances.

When specifying the filenames for both the server certificate and the private key file, a path can be specified along with the filename. If a path is not specified with the files, the file location where Microsoft SQL Server will save the two files is the default “data” location for databases defined for the instance. For example, on the instance used in this example, the default data path for databases is “C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA”.

Note:

If the server certificate has been previously backed up and the password for the private key file is not known, there is no need to panic. Simply create a new backup by issuing the BACKUP CERTIFICATE command and specify a new password. The new password will work with the newly created files (the server certificate file and the private key file).

Step Four: Create the Server Certificate on each secondary replica instance using the files created in Step 3.

The previous TSQL command created two files: the server certificate (in this example: “TDE_DB_EncryptionCert”) and the private key file (in this example: “TDE_DB_PrivateFile”), the second of which is protected by a password.

These two files, along with the password, should then be used to create the same server certificate on each secondary replica instance.

After copying the files to SQL2, connect to a query window on SQL2 and issue the following TSQL command:

USE master;
GO
CREATE CERTIFICATE TDE_DB_EncryptionCert
FROM FILE = '<path_where_copied>\TDE_DB_EncryptionCert'
WITH PRIVATE KEY (
    FILE = '<path_where_copied>\TDE_DB_PrivateFile',
    DECRYPTION BY PASSWORD = 't2OU4M01&iO0748q*m$4qpZi184WV487'
);

This installs the server certificate on SQL2. Once the server certificate is installed on all secondary replica instances, then we are ready to proceed with encrypting the database on the primary replica instance (SQL1).

Step Five: Create the Database Encryption Key on the Primary Replica Instance.

On the primary replica instance (SQL1) issue the following TSQL command to create the Database Encryption Key.

USE TDE_DB2;
GO
CREATE DATABASE ENCRYPTION KEY
WITH ALGORITHM = AES_256
ENCRYPTION BY SERVER CERTIFICATE TDE_DB_EncryptionCert;

The DEK is the actual key that does the encryption and decryption of the database. When this key is not in use, it is protected by the server certificate (above). That is why the server certificate must be installed on each of the instances. Because this is done inside the database itself, it will be replicated to all of the secondary replicas and the TSQL does not need to be executed again on each of the secondary replicas.

At this point the database is NOT YET encrypted, but the thumbprint identifying the server certificate used to create the DEK has been associated with this database. If you run the following query on the primary or any of the secondary replicas, you will see each database’s encryption state and the thumbprint of the certificate that protects its DEK:

SELECT db_name(database_id), encryption_state, encryptor_thumbprint, encryptor_type FROM sys.dm_database_encryption_keys

Notice that TempDB is encrypted and that the same thumbprint (i.e. Server Certificate) was used to protect the DEK for two different databases. The encryption state of TDE_DB2 is 1, meaning that it is not encrypted yet.

Step Six: Turn on Database Encryption on the Primary Replica Instance (SQL1)

We are now ready to turn on encryption. The database itself has a database encryption key (DEK) that is protected by the Server Certificate. The server certificate has been installed on all replica instances. The server certificate itself is protected by the Database Master Key (DMK), which has been created on all of the replica instances. At this point each of the secondary instances is capable of decrypting (or encrypting) the database, so as soon as we turn on encryption on the primary, the secondary replica copies will begin encrypting too.
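The dependency chain described in this paragraph can be turned into a simple pre-flight check before turning encryption on. This is a hypothetical Python model, not a SQL Server API: the `Replica` inventory and the `replicas_not_ready` function are illustrations, and you would fill in the inventory yourself (for example, from sys.symmetric_keys and sys.certificates on each replica). It only checks that each replica has some DMK, since the DMKs do not need to match, and that the certificate whose thumbprint protects the DEK is present.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Replica:
    """Hypothetical inventory of one replica instance."""
    name: str
    has_dmk: bool  # a Database Master Key exists in master
    cert_thumbprints: Set[str] = field(default_factory=set)  # server certificates in master


def replicas_not_ready(replicas: List[Replica], dek_thumbprint: str) -> Dict[str, List[str]]:
    """Map each replica name to what it is missing before it can decrypt the database.

    Replicas that are ready do not appear in the result.
    """
    wanted = dek_thumbprint.lower()
    problems: Dict[str, List[str]] = {}
    for replica in replicas:
        missing: List[str] = []
        if not replica.has_dmk:
            missing.append("Database Master Key")
        # DMKs may differ per instance; only the server certificate must be the same one.
        if wanted not in {t.lower() for t in replica.cert_thumbprints}:
            missing.append(f"server certificate {dek_thumbprint}")
        if missing:
            problems[replica.name] = missing
    return problems


tp = "0x48CE37CDA7C99E7A13A9B0ED86BB12AED0448209"  # example thumbprint
print(replicas_not_ready([Replica("SQL1", True, {tp}), Replica("SQL2", True)], tp))
```

An empty result means every replica listed has what it needs; here SQL2 would be reported as missing the certificate.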

To turn on TDE database encryption, issue the following TSQL command on the primary replica instance (SQL1):

ALTER DATABASE TDE_DB2 SET ENCRYPTION ON

To determine the status of the encryption process, query sys.dm_database_encryption_keys again:

SELECT db_name(database_id), encryption_state,
       encryptor_thumbprint, encryptor_type, percent_complete
FROM sys.dm_database_encryption_keys;

When the encryption_state = 3, then the database is encrypted. It will show a status of 2 while the encryption is still taking place, and the percent_complete will show the progress while it is still encrypting. If the encryption is already completed, the percent_complete will be 0.
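If you want to script the wait rather than rerun the query by hand, the sketch below polls until encryption_state reaches 3. It is a hypothetical Python sketch: `fetch` stands in for whatever code you use to run the query above for TDE_DB2 and return `(encryption_state, percent_complete)`, and only the states described in this post (1, 2 and 3) are given names.

```python
import time
from typing import Callable, Tuple


def describe_tde_state(encryption_state: int, percent_complete: float) -> str:
    """Describe the encryption_state values discussed in this post (1, 2 and 3)."""
    if encryption_state == 1:
        return "not encrypted"
    if encryption_state == 2:
        return f"encryption in progress ({percent_complete}% complete)"
    if encryption_state == 3:
        return "encrypted"  # percent_complete reads 0 once encryption has finished
    return f"state {encryption_state} (see the sys.dm_database_encryption_keys documentation)"


def wait_for_encryption(fetch: Callable[[], Tuple[int, float]],
                        poll_seconds: float = 30.0, max_polls: int = 1000) -> int:
    """Poll `fetch` until encryption_state is 3; return how many polls it took."""
    for attempt in range(1, max_polls + 1):
        state, pct = fetch()
        print(describe_tde_state(state, pct))
        if state == 3:
            return attempt
        time.sleep(poll_seconds)
    raise TimeoutError("database never reached encryption_state 3")
```

The `max_polls` limit keeps the loop from running forever if encryption was never actually turned on.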

At this point, you should be able to fail over the Availability Group to any secondary replica and be able to access the database without issue.

What happens if I turn on encryption on the primary replica but the server certificate is not on the secondary replica instance?

The database will quit synchronizing and possibly report “suspect” on the secondary. This is because when the SQL engine opens the files and begins to read the file, the pages inside the file are still encrypted. It does not have the decryption key to decrypt the pages. The SQL engine will think the pages are corrupted and report the database as suspect. You can confirm this is the case by looking in the error log on the secondary. You will see error messages similar to the following:

2014-01-28 16:09:51.42 spid39s Error: 33111, Severity: 16, State: 3.

2014-01-28 16:09:51.42 spid39s Cannot find server certificate with thumbprint '0x48CE37CDA7C99E7A13A9B0ED86BB12AED0448209'.

2014-01-28 16:09:51.45 spid39s AlwaysOn Availability Groups data movement for database 'TDE_DB2' has been suspended for the following reason: "system" (Source ID 2; Source string: 'SUSPEND_FROM_REDO'). To resume data movement on the database, you will need to resume the database manually. For information about how to resume an availability database, see SQL Server Books Online.

2014-01-28 16:09:51.56 spid39s Error: 3313, Severity: 21, State: 2.

2014-01-28 16:09:51.56 spid39s During redoing of a logged operation in database 'TDE_DB2', an error occurred at log record ID (31:291:1). Typically, the specific failure is previously logged as an error in the Windows Event Log service. Restore the database from a full backup, or repair the database.

The error messages are quite clear that the SQL engine is missing a certificate – and it’s looking for a specific certificate – as identified by the thumbprint. If there is more than one server certificate on the primary, then the one that needs to be installed on the secondary is the one whose thumbprint matches the thumbprint in the error message.
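Picking the right certificate by thumbprint can be scripted. In this minimal Python sketch, `certificate_to_copy` and the `primary_certs` mapping are hypothetical: the mapping is assumed to hold certificate names and thumbprints as `0x...` hex strings (for example, copied from the name and thumbprint columns of sys.certificates on the primary), and the regular expression assumes the error 33111 text format shown above.

```python
import re
from typing import Dict, Optional

# Matches the thumbprint in text such as:
# "Cannot find server certificate with thumbprint '0x48CE...'."
THUMBPRINT_RE = re.compile(r"thumbprint '(0x[0-9A-Fa-f]+)'")


def certificate_to_copy(error_line: str, primary_certs: Dict[str, str]) -> Optional[str]:
    """Return the name of the primary's certificate whose thumbprint matches the error.

    Returns None if the line has no thumbprint or no certificate matches it.
    """
    match = THUMBPRINT_RE.search(error_line)
    if match is None:
        return None
    wanted = match.group(1).lower()
    for name, thumbprint in primary_certs.items():
        if thumbprint.lower() == wanted:
            return name
    return None
```

Comparing in lower case avoids false mismatches between how different tools print the same hex value.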

To resolve this situation, go back to step three above and back up the certificate from SQL1 (the one whose thumbprint matches), then create the server certificate on SQL2 as outlined in step four. Once the server certificate exists on the secondary replica instance (SQL2), you can issue the following T-SQL command on the secondary (SQL2) to resume synchronization:

ALTER DATABASE TDE_DB2 SET HADR RESUME

References

- Encrypted Databases with AlwaysOn Availability Groups (SQL Server)

- Manually Prepare a Secondary Database for an Availability Group

- Transparent Data Encryption (TDE)

- Move a TDE Protected Database to Another SQL Server

- SQL Server and Database Encryption Keys (Database Engine)

- DMV - sys.dm_database_encryption_keys (Transact-SQL)

- Catalog view - sys.symmetric_keys (Transact-SQL)

- Catalog view - sys.certificates (Transact-SQL)

- Service Master Key

- SQL Server Certificates and Asymmetric Keys

6 Things You Need to Stop Doing on Facebook

Maybe making lists should be on this list. But they’re actually a good way to communicate. And that level of ironic self-reference has been done before so I won’t repeat it here. And there also won’t be any berating people for posting pics of their kids or their pets – it’s Facebook, why act surprised when people post things about their personal lives that have nothing to do with you? That’s what Facebook is for.

This is about things people do that aren’t about themselves. They’re not genuinely sharing something; they’re reposting some bullshit they’ve found online. And they need to stop. I should stress that the people who do this are not necessarily stupid; almost everyone on my friends list has done at least one of them. So this is an intervention. For pretty much everyone. Get your fucking act together.

In ascending order (more annoying to me as we go along) they are:

6: Which meaningless personality test are you?

These things go in waves. One of them tickles the fancy of a few people then dozens of increasingly vapid imitations pop up. Before you know it, people are eagerly posting “Guess which Golden Girls health issue I am?” These things are fucking stupid – don’t perpetuate them. Oh, and if you feel compelled to defend them, the answer is you are Blanche’s genital warts.

5: Posting “news” or images without verifying them

You’re on the internet, for fuck’s sake. If someone posts something that outrages you, BEFORE YOU SHARE IT plug it into a search engine. It takes barely any longer than mindlessly spreading the bullshit further. And you know what? If you don’t find multiple REPUTABLE news outlets confirming the story, it’s probably bullshit. Finding it on partisan blogs is meaningless. It isn’t a conspiracy of the mainstream media; they’re the first to spread awful, sensationalist stories. If you can’t get objective backup, it’s bullshit, pure and simple.

4: Not posting links to the source/original creator

There’s been a surge in the last year of third-party sites that scrape someone else’s content and repost it without adding anything meaningful of their own. You know what you do if you see one of these and you like the original content they’re using? YOU POINT TO THE ORIGINAL FUCKING CONTENT! It isn’t hard. Look at this example of some piece of shit loser site appropriating someone’s YouTube video:

By simply clicking on the link to show the video on YouTube YOU CAN LINK DIRECTLY TO THE PERSON WHO CREATED IT! Seriously, one more fucking click and you can give credit to the person who actually created it and not the worthless leech site that creates NOTHING of value. It isn’t difficult.

3: You shared a stupid linkbait teaser headline... you won’t believe what happens next!

What will happen next is I will cave your fucking skull in. Seriously, I despise this obnoxious trend of using headlines that don’t tell you what the fucking story is. If you don’t trust your content enough to use a descriptive headline, then your content is shit, or you think your audience is shit, or, more likely, you are shit. Every time you share one of these idiotic “teaser” descriptions you encourage the fuckwits to keep doing it. It’s a stupid fashion and, like all stupid fashions, it will die out, but it will be gone SOONER if you stop encouraging them.

2: Linking to sites/pages that force you to register/follow/like them

These people are worse than Hitler. There, I said it. People didn’t stand up to Hitler soon enough and bad things happened. If you link to people who force a viewer to do something before they can view the content you are rewarding someone who is worse than Hitler. Which makes you far worse than Hitler. I hope you’re ashamed now.

1: 99% of you won’t have the guts to share this post

This is the WORST! Every time I think this fucked trend has died, some fucking idiot does it again and I want to punch my screen when I see it! And I don’t punch my screen because that would be stupid. So I save up that frustration so that when I meet someone in person who has done this I can punch them. Hard. Repeatedly. In the head. With a bus. These posts are always about some “worthy” cause, which isn’t a bad thing in itself, but they are always worded in this condescending way that suggests the poster is better than everyone who doesn’t feel as strongly as them. It’s made worse by the fact that these are rarely original; they’re another bit of copypasta, which makes me doubly sick, because if you repost these you’re worse than a condescending prick, you’re an unoriginal condescending prick. And to top it all off there’s this stupid “99% of people won’t have the guts to repost this” bullshit. What is this, primary school? You’ve got to double dog dare me to do something? Even when these are done for a cause I support, they end up making me hate the poster and everyone involved with the cause.

Almost everyone I know has been guilty of at least one of these offences, which will make the list contentious, as most honest people will see themselves here. 99% of people won’t have the guts to admit their shortcomings and share this post. Will you?

01/08/14 PHD comic: 'Happy New Year'

Piled Higher & Deeper by Jorge Cham (www.phdcomics.com)

Title: "Happy New Year", originally published 1/8/2014

When I’m not on call during the Black Friday weekend

Thomas Rushton: Oh, if only...


Using high-spec Azure SQL Server for short term intensive data processing

So I had this plan: I was going to download the (very unfortunate) Adobe data breach, suck it into SQL Server, do the usual post-import data clean-up, then try to draw some insightful conclusions from what I saw. Good in theory, and something I’ve done many times before after other breaches; the problem is it’s abso-freaking-lutely massive. The data itself is only 2.9GB zipped and 9.3GB once extracted, but we’re talking over 153 million records here. This could well be the largest password dump we’ve seen to date.

Anyway, I downloaded it, extracted it, then imported it into a SQL Server table using the bcp utility. That process alone took a good hour and a half (just the import), which isn’t too bad as a one-off, but what came next made the process unbearable. Even on my six-core, hyper-threaded desktop with a fast SSD and 12GB of RAM, I simply couldn’t start transforming the data without things running for a hell of a long time (an hour plus for a simple query), and I also ended up with the transaction log chewing up every single spare byte of disk. Oh sure, I could have moved that over to a spare mechanical drive with heaps of capacity, but that’s not very creative now, is it?

I needed grunt. Lots of grunt. Short-term grunt. Once the transformations were done and the data queried for an upcoming post, I could bring back a local copy of the DB for posterity and ditch the whole VM thing, but I had to get there in the first place. I needed… “The Cloud” (cue dramatic music)

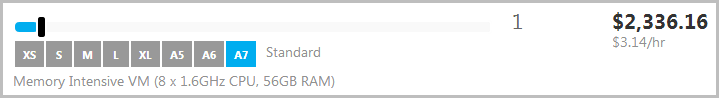

I didn’t want to muck around here, so I looked for the chunkiest SQL Server virtual machine I could find: 8 CPUs with 56GB of RAM, thank you very much. That’s the top-spec SQL Server Standard VM on offer at the moment, according to the calculator:

Now, this isn’t cheap: run it for a year and you’ll pay $28k for it, which is a lot of coin just to muck around with a password dump. But this is the cloud era, a time of on-demand commoditised resources available for as little or as long as you like. What’s more, these days Azure bills by the minute, so if I only want to spend, say, $10, I can run this rather chunky machine for just over 3 hours, then ditch it and never pay another cent. Frankly, my time is pretty damn valuable to me, so I’m very happy to pay 10 bucks to save hours of mucking around trying to analyse a huge volume of data on a home PC.
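The arithmetic behind "just over 3 hours" can be sketched in a few lines of Python. This assumes the $28k figure is a rounded annual cost for running the VM around the clock, and that per-minute billing means a budget buys only whole minutes of runtime; actual Azure rates will differ.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours of continuous running


def hourly_rate(annual_cost: float) -> float:
    """Convert a cost for a year of 24/7 running into an hourly rate."""
    return annual_cost / HOURS_PER_YEAR


def runtime_for_budget(budget: float, annual_cost: float) -> tuple:
    """Return (hours, minutes) of runtime a budget buys under per-minute billing."""
    per_minute = hourly_rate(annual_cost) / 60
    minutes = int(budget // per_minute)  # only whole minutes are affordable
    return divmod(minutes, 60)


if __name__ == "__main__":
    print(round(hourly_rate(28_000), 2))   # 3.2, i.e. about $3.20 an hour
    print(runtime_for_budget(10, 28_000))  # (3, 7): just over 3 hours for $10
```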

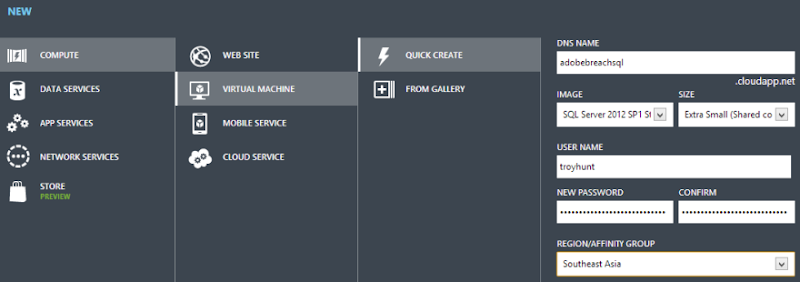

So let’s jump into it; first up, we’ll need a new SQL Server VM. Now, I could do this with just a SQL Server DB and not a whole virtual machine (indeed, for longer-term purposes I really don’t like the idea of managing an OS), but I needed to download the dump and import it using SQL Server’s magic tools, so I’m going to create an entire VM:

Wait a minute: this is an “Extra Small” VM and not the full-fat A7 scale we saw earlier. You see, I need to download several GB of data first, and I only really need the chunky processing power once the transformations start. Doing it this way saves me $2.57 an hour! OK, the money is incidental (although all this did take several hours); I’m doing it this way simply as an exercise to show how easily scale can be changed to meet compute demand.

You’ll also see I’ve provisioned the VM in Southeast Asia, which is as close as I can get to Sydney until the Australia farm comes online sometime next year. When all this is done I want to get gigabytes of database backup down to my local machine, so proximity is important.

With the machine now provisioned I can remote into a fully-fledged VM with SQL Server running in the cloud:

And just as a quick sanity check, here’s how she’s specced:

1 socket, 1 virtual processor and 768MB of RAM. Lightweight!

I’ll skip the boring bits of downloading the dump and installing 7-Zip. With that done, it’s time to turn it off. Huh? No, really: it’s bedtime and I don’t want to play with this again until tomorrow. That’s one of the other key lessons I wanted to instil here: when compute resources aren’t needed, you simply turn them off and no longer pay for them. It’s that simple. Now, of course, I’m still using storage and paying for it whilst the virtual machine isn’t running, but storage is cheap (and don’t let anyone tell you otherwise). It’s $7 a month for 100GB of locally redundant storage, and my virtual machine has a total of 126GB by default. Call it just over one cent an hour for all that.
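As a quick check of the "just over one cent an hour" figure, here is a small Python sketch. It assumes storage is charged linearly at the quoted $7 per 100GB per month, that the full 126GB is billed (as the post assumes), and an average month of 730 hours.

```python
HOURS_PER_MONTH = 365 * 24 / 12  # 730 hours in an average month


def storage_cost_per_hour(size_gb: float, price_per_100gb_month: float = 7.0) -> float:
    """Hourly cost of storage billed linearly at a monthly per-100GB rate."""
    monthly_cost = size_gb / 100 * price_per_100gb_month  # 126GB -> $8.82 a month
    return monthly_cost / HOURS_PER_MONTH


if __name__ == "__main__":
    cost = storage_cost_per_hour(126)
    print(f"${cost:.4f} per hour")  # $0.0121 per hour
```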

Nigh nigh virtual machine:


The next day…

Did somebody say “performance”? It’s time to crank it up to 11 so it’s back over to the Azure portal and into the VM settings:

Save that and, as expected, the machine may need a restart:


Of course it’s already shut down so we’re not too worried about that. This all takes about a minute to do:

Let’s fire it back up:

This is significant if you come from the pre-cloud world: one minute. That’s how long it takes to deploy another 7 cores and increase the RAM 75 times over. Let’s see how the specs look inside now:
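The "another 7 cores" and "75 times" figures follow from the two specs quoted in the post (1 core and 768MB for Extra Small, 8 cores and 56GB for A7). Here is a tiny sketch of that arithmetic, assuming 1GB = 1024MB:

```python
def scale_up(cores_before: int, ram_mb_before: int, cores_after: int, ram_gb_after: int):
    """Return (extra cores, RAM multiplier) when resizing a VM."""
    extra_cores = cores_after - cores_before
    ram_factor = ram_gb_after * 1024 / ram_mb_before  # 1GB treated as 1024MB
    return extra_cores, ram_factor


if __name__ == "__main__":
    extra, factor = scale_up(1, 768, 8, 56)
    print(extra, round(factor, 1))  # 7 74.7, which rounds to "75 times"
```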

Yep, that oughta do it! Now that I’ve got some seriously chunky processing power, I can begin the intensive processes of extracting the data from the zip, then importing it into SQL Server. I won’t go into the detail of what that entails here; suffice to say it’s both boring and laborious (yes, even in the cloud!).

Right, time for queries. Frankly, remotely managing a VM is painful at the best of times, so I’ll be running SSMS locally and orchestrating it all from my end. All the processing is obviously still done within the remote machine, and there’s just a little bit of network use to send the query and return the results, so there’ll be no noticeable performance hit doing this. There’s a neat little tutorial on Provisioning a SQL Server Virtual Machine on Windows Azure, which effectively boils down to this:

- Create a TCP endpoint accessible over port 1433 using the Azure portal

- Remote into the machine and allow incoming TCP connections over 1433 for the public firewall profile

- Enable mixed mode auth on the SQL Server

- Create a local SQL login in the sysadmin role (obviously don’t use these rights for production websites connecting to the DB!)

- Register the remote server using SSMS from my local machine
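Before registering the server in SSMS, it can help to confirm that the endpoint and firewall rule are actually letting traffic through on port 1433. The following is a minimal Python sketch using only the standard library. A successful connection only proves TCP reachability; it does not show that mixed mode auth or the SQL login are set up correctly.

```python
import socket


def can_reach_sql_port(host: str, port: int = 1433, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS lookup failures.
        return False
```

Usage, with a hypothetical cloud service name standing in for your own VM's DNS name: `can_reach_sql_port("your-vm-name.cloudapp.net")`. A `False` result points at the Azure endpoint or the Windows firewall rule rather than at SQL Server authentication.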

Now I’ve got the entire dump running in the cloud on a high-spec machine with a remote connection. Time to start the heavy work! I won’t bore you with the nature of the queries (although I will share the results in the coming days); the point I wanted to illustrate here was the ease of provisioning and scaling resources: I got exactly what I wanted, when I wanted it, and didn’t pay for anything I didn’t want. Then, when I didn’t want it any more, I just copied the database backup down to my local machine and deleted the VM. That was it.