Whig Zhou

Shared posts

Friday Ephemera

Bloody Harvest—How Everyone Ignored the Crime of the Century

In June of this year the China Tribunal delivered its Final Judgement and Summary Report.1 An independent committee composed of lawyers, human rights experts, and a transplant surgeon, the Tribunal was established to investigate forced organ harvesting on the Chinese mainland. These rumours have haunted the country for years—lurid tales of the fate suffered by members of the banned Falun Gong religion after being taken into police custody. Their organs, so the rumours go, are cut from their bodies while they are still alive, and then transplanted into waiting patients.

The Tribunal examined these claims, extending the group of victims to include Uyghur Muslims (among others), and its findings were unambiguous. “On the basis of all direct and indirect evidence, the Tribunal concludes with certainty that forced organ harvesting has happened in multiple places in the PRC [People’s Republic of China] and on multiple occasions for a period of at least twenty years and continues to this day.”2 Further to this, “the PRC and its leaders actively incited the persecution, the imprisonment, murder, torture, and the humiliation of Falun Gong practitioners with the sole purpose of eliminating the practice of, and belief in, the value of Falun Gong.”3 The Tribunal was also able to conclude, “with certainty,” that the Communist Party has been responsible for acts of torture inflicted on Uyghurs.4 These acts were found to constitute crimes against humanity.5

The Falun Gong religious group was outlawed in China twenty years ago, with President Jiang Zemin apparently deciding that the group’s expansion was a potential threat to his power—a competitor for the loyalties of the Chinese people. He branded the group an ‘evil cult’. The ensuing imprisonment and disappearance of large numbers of practitioners coincided with an enormous, unexplained provision of transplant hospitals, and a flood of new laboratories. Research into immunosuppressant drugs suddenly accelerated.6 China did not actually have a formal organ donation scheme until 2013, but this has presented no obstacle to the country’s transplant surgeons. They have been charging ahead with an estimated 69,300 transplants per year.7 Even the formal voluntary donors that now exist cannot hope to match this number: in 2017 the total number of eligible donors in the country was a paltry 5,146.8

Throughout most of the world the disparity between donor numbers and patient numbers leads to long waiting lists, but in China it is possible to get a heart transplant within a matter of days,9 and some individuals have been told that they can travel to the mainland on a specific date and immediately receive their transplant.10 In other words, the Chinese authorities know exactly when a particular person is due to die, and they can guarantee that a healthy heart will be found in the to-be-deceased. As stated in the Final Judgement, this “could only occur if there was an available bank of potential living donors who could be sacrificed to order.”11

The Tribunal heard that both Uyghur Muslims and Falun Gong practitioners received regular blood tests in detention. According to the testimony of former prisoner Gulbahar Jelilova, injections were given once every ten days, along with regular ultrasound tests.12 The blood cannot have been taken for the purpose of transfusion, because the quantities were too small. The purpose cannot have been infection control, because blood was only taken from the Falun Gong and Uyghur prisoners, rather than the entire population of each prison. There is, however, another reason that the authorities might need to take blood in this way. Blood testing is essential for organ transplantation, because the procedure involves a danger that the beneficiary’s antibodies will interact with antigens in the donor organs, prompting the body to reject the new organs. As for the ultrasound tests, these were surely carried out to establish the structural appearance and condition of internal organs, and this too is consistent with planned organ transplantation.13

It turns out that the Communist Party is hardly bothering to hide the identity of its human sacrifices. The Tribunal heard recordings of telephone calls made to Chinese hospitals by investigators from the World Organisation to Investigate the Persecution of Falun Gong (WOIPFG). Requests were made, in Mandarin, for organ transplants. When the callers enquired about the sources, most hospital staff were happy to reveal that the organs would be coming from Falun Gong prisoners (all that clean living and qigong exercise is thought to guarantee healthy body parts).14 In their arrogance, the Chinese authorities do not expect serious condemnation. In fact, they are now expanding the project. Human rights investigator Ethan Gutmann provided evidence to the Tribunal in December 2018, stating that “over the last 18 months, literally every Uyghur man, woman, and child – about 15 million people – have been blood and DNA tested, and that blood testing is compatible with tissue matching.”

All Falun Gong practitioners appearing as witnesses before the Tribunal were also able to describe the torture they suffered in detention. While none of these testimonies could be independently verified (for obvious reasons), the level of detail was striking, as were the similarities in the accounts. Prisoners were stripped, beaten, and kept awake for as much as 20 days at a time. Electric batons were used as a matter of course.15 The Final Judgement and Summary Report includes a vivid description of the ordeal of practitioner Jintao Liu: “They shoved faeces into his mouth. They forced a toilet brush handle into his anus. They pushed the handle so hard that he couldn’t defecate… They woke him at night by pouring cold water on him, or by piercing his skin with needles.”16 Women were given pills that stopped their menstrual cycles and caused disorientation, and many of them suffered mental breakdowns.17 Rape was routine: the prisoner Yin Liping told the Tribunal that she was locked in a room with more than forty men of unknown identity in the Masanjia Labour Camp on 19 April 2001, and raped by all of them.18

Incredibly, the Final Judgement has received minimal press coverage, despite the magnitude of the crimes described and the prestige of the Tribunal’s panel. The chair was Sir Geoffrey Nice QC, a barrister for forty-eight years and a judge for thirty-four. This was the man who led the prosecution of Serbian president Slobodan Milošević at the United Nations’ International Criminal Tribunal for the Former Yugoslavia. The panel also included human rights lawyers from the United States, Iran, and Malaysia, and a thoracic transplant specialist of several decades’ standing.

The findings may have been dramatic, but the Tribunal’s approach was every bit as measured and sober as we might expect from a panel of such repute. Members were “alive to the risk of group enthusiasm operating on the minds of witnesses who are Falun Gong supporters.”19 They took care to avoid bias against the CCP, adopting the practice of examining each category of evidence in isolation, with the relevant evidence treated as if it related to an imaginary state with an excellent human rights record.20 Invitations to attend proceedings or to comment or provide evidence were sent out to China’s Ambassador to London, and also to various Chinese transplant physicians, and even Western doctors who have spoken in support of the Chinese regime (none took up the Tribunal’s offer).21 All of this seems like the kind of professionalism we would hope for. Why, then, has the China Tribunal been effectively ignored?

One reason could be that the international community has already made up its mind about this issue. The Transplantation Society and the World Health Organisation (WHO) have both stated that criticism of the Chinese human transplant system is unwarranted,22 and as the Tribunal’s Judgement admits, many separate governments and international organisations have also expressed their doubts concerning the allegations.23 There are exceptions—the governments of Israel, Spain, Italy, and Taiwan have now banned citizens from travelling to China for transplant surgery—but it has been far more common to raise a sceptical eyebrow at the reports.

This doubt may result in part from the movement’s alien ring to Western ears. Falun Gong? What the hell is that? Is it some kind of religion? Practitioners have often tried to insist that they are not a religion, not political, and not an organisation of any kind, but this has simply left open the question of what they are, exactly. The temptation has been to swallow the mainland propaganda, dismissing the group as a cult. Indeed, Gutmann observed the same Western suspicions in the wake of the Communist Party’s original crackdown in 1999: “Congress avoided using Falun Gong practitioners’ testimony in hearings, while the administration concentrated on the human rights of ‘traditional’ Chinese dissidents and the occasional House Christian. Hollywood stuck to the Dalai Lama.”24 If these people have questionable beliefs about the nature of reality, the West seemed to be asking, then why should we trust them about anything at all? But this attitude, says Ethan Gutmann, is like devaluing the currency to zero simply because there are counterfeit bills in circulation.

It is also worth noting that Uyghur Muslims were mentioned in the Tribunal’s Final Judgement. Falun Gong may be a mystery, but Islam should be familiar enough to Western governments. Sir Geoffrey Nice and his colleagues were quite clear that Uyghurs have been the victims of a crime against humanity. Why was the latter detail not picked up in the press? Perhaps it was simply lost in this year’s rush of coverage relating to the Xinjiang concentration camps.

The doubts of the international community may not result solely from a distaste for the Falun Gong. The British government has stated on several occasions that the evidence is insufficient to prove that forced organ harvesting has taken place. These statements might give the impression that the government has already carried out a careful examination of the available material. Indeed, Baroness Goldie and MP Mark Field have both made reference in Parliament to certain ‘analysis’ and ‘assessment’. However, the Tribunal’s requests to the Foreign Office to provide details of this analysis and assessment were always met with silence. It is difficult to escape the suspicion that no such analysis ever took place. This should lead us to ask what reasons the UK government might have to avoid investigating reports of egregious human rights violations.25 As for the WHO, it “operates in a multilateral stakeholder environment and may well be susceptible to political realities,” in the cutting observation of the Tribunal.26

Of course, we could give these governments and organisations the benefit of the doubt, attributing to them nothing more malign than a misguided scepticism. This would still be no excuse. The horror unveiled by the Tribunal was, if anything, a conservative estimate of the scale of the tragedy. The conclusions about organ harvesting related only to the Falun Gong—the Tribunal reached no similar conclusions about the Uyghurs (or House Christians, or Tibetan Buddhists, or Eastern Lightning).27 But testimonies abound, if we care to look for them. A defecting policeman has told Ethan Gutmann that when Uyghur prisoners were taken to be executed, they went with doctors in “special vans for harvesting organs.” Afterwards the bodies were encased in cement and buried in secrecy.28

Gutmann spoke to such doctors—men who had carried out blood tests on Uyghurs just as described in the Tribunal’s Final Judgement and Summary Report. They were able to provide him with the missing details. First, news would arrive that Communist Party officials had checked into a hospital with various organ problems. Staff would begin taking blood from Uyghurs at the prison, and when a corresponding blood type was found, they would move to tissue matching. The chosen prisoners would be shot in the right side of the chest so that death did not occur instantly. Blood types would be matched at the execution site, and soon enough “the officials would get their organs, rise from their beds, and check out.”29

No figures are available for the scale of Uyghur harvesting, but it should be clear that the China Tribunal presented only a small piece of the full tragedy. Indeed, it may never be possible to calculate any of the Chinese harvesting figures with real accuracy. The Falun Gong numbered 70 million when their own crackdown began30 —a small nation—and these millions were scattered in every direction. Some fled overseas in search of asylum, some went underground on the mainland, some renounced their former beliefs, some died in agony on cell floors or in the Party’s many specially-designed torture chambers. And some were harvested. Gutmann puts the latter figure at 65,000 during the early years (2000 to 2008), but arrest records—or records of any kind – are minimal.31 From the very beginning, practitioners were being wheeled into operating theatres in nameless droves.

Throughout 2006 the Falun Gong-run newspaper Epoch Times recorded a series of anecdotes from a single hospital in Sujiatun during those early years. One of these came from an accounting department employee who had become concerned about her husband, a surgeon at the hospital. He had been working strange hours, earning higher wages than normal, and displaying signs of mental breakdown. After nearly a year of this her husband came clean. He told her that there were extra patients hidden away in the subterranean depths of the hospital. The doctors were summoned whenever these special patients arrived, and they were expected to apply anaesthetic before removing the kidneys, skin tissue, corneas, and other organs. Some patients were still alive at the end while others were not, but all of them were quickly sent to the incinerator, after which the hospital staff would pocket rings and watches. Her husband told her that the patients were Falun Gong practitioners, and he said that there was never any need for paperwork.32

The Sujiatun accounts were dismissed by many because US officials from the regional consular office went to have a look for themselves. They found “no evidence that the site is being used for any function than as a normal public hospital.” But as Gutmann points out, “three weeks had elapsed between the publication of the first story in the Epoch Times and the consular visit – an eternity by Chinese construction standards.”33

There is too much of this to ignore. It is not possible, in good conscience, to simply dismiss the allegations. The Tribunal posed a thought experiment to demonstrate this: “Supposing it were said of either the UK or the USA that Muslims were being tortured to death in a prison in Leeds or Philadelphia… (and) that the allegations were entirely untrue although (they had been) made by a perfectly respectable organisation and had attracted attention in government committees in various countries. Would the simple denial be all that the UK or the USA would do on grounds that their word should be enough, and that it would be to honour an impertinence by doing more? Or might they do a great deal more, including… seeking redress from whoever made the totally false but believable allegation, and… throwing open the gates of the prison and offering sight of all records to an appropriate neutral team of observers?”34

The organ harvesting allegations have continued for the best part of two decades, and they show no sign of stopping. A major report was published as early as 2006 by two Canadian human rights attorneys, David Kilgour and David Matas (later expanded into a book, Bloody Harvest: Organ Harvesting of Falun Gong Practitioners in China). The evidence has continued to mount over the years, culminating in the investigations of the China Tribunal, and yet still the doubts persist. In the context of the sheer gravity of the allegations and the extended period over which they have been made, many international organisations and governments now stand condemned along with the Chinese Communist Party.

The evidence points to the crime of the century thus far, and a crime that bears comparison with the worst of the last century. “Victim for victim and death for death, the gassing of the Jews by the Nazis, the massacre by the Khmer Rouge, or the butchery to death of the Rwanda Tutsis may not be worse,” in the Tribunal’s blunt assessment.35 One of the chief culprits for this crime must surely be China’s leader at the time of the Falun Gong crackdown—the psychopathic Jiang Zemin. “Beating them to death is nothing,” Jiang is reported to have said. “If they are disabled from the beating, it counts as them injuring themselves. If they die, it counts as suicide!”36 Equally culpable are his most enthusiastic lieutenants: Bo Xilai, Wang Lijun, Zhou Yongkang.

However, the guilt is also shared by many ordinary individuals: surgeons, officials, prison guards, police. And they know it. “We are all going to hell,” said a Chinese medical director to a policeman who was working with him at the execution grounds, according to the latter’s testimony to Ethan Gutmann.37 Judgement has been delayed for the time being. But these crimes have been well documented by many brave individuals now, and the condemnation of history is inevitable. Eventually children across the world will read in their school textbooks about the Falun Gong Holocaust of the early twenty-first century, and everyone will know the names of the main perpetrators.

Aaron Sarin is a freelance writer living in Sheffield and currently working on a book about the nation-state system, cultural universals, and global governance. He regularly contributes to seceder.co.uk and you can follow him on Twitter @aaron_sarin

Feature photo: Hundreds of supporters of the Chinese Falun Gong movement marched through the Prague centre on September 28, 2018, celebrating the Chinese Mid-Autumn Festival, but also warning of the persecution of the movement in China. Ondrej Deml/CTK Photo/Alamy Live News

References:

1 Independent Tribunal into Forced Organ Harvesting from Prisoners of Conscience in China – Final Judgement and Summary Report, 17 June 2019

2 Ibid., p19

3 Ibid., p35

4 Ibid., p25

5 Ibid., p53

6 Ibid., pp. 14-5

7 Ibid., pp. 30-1

8 Ibid., p45

9 Ibid., p32

10 Ibid., p18

11 Ibid., pp. 32-3

12 Ibid., pp. 24-5

13 Ibid., pp. 19-1

14 Ibid., p27

15 Ibid., pp. 26-7

16 Ibid., p22

17 Ibid., pp. 24-5

18 Ibid., p26

19 Ibid., p7

20 Ibid., p9

21 Ibid., p6

22 Ibid., p37

23 Ibid., p1

24 Ethan Gutmann – The Slaughter: Mass Killings, Organ Harvesting, and China’s Secret Solution to its Dissident Problem (Prometheus Books, New York, 2014), pp. 103-4

25 Final Judgement and Summary Report, op. cit., p38

26 Ibid., p37

27 Ibid., p47

28 Gutmann, op. cit., p23

29 Ibid., p26

30 Ibid., p70

31 Ibid., p279

32 Ibid., p222

33 Ibid., pp. 222-3

34 Final Judgement and Summary Report, op. cit., p41

35 Final Judgement and Summary Report, op. cit., p1

36 Ibid., p13

37 Gutmann, op. cit., p17

The post Bloody Harvest—How Everyone Ignored the Crime of the Century appeared first on Quillette.

Wokeademia spreads

All applicants for tenure track and tenured faculty positions are asked to submit a Statement of Contribution to Diversity, Equity and Inclusion. ...

Examples:

...Explaining how the candidate's research, scholarship or creative activities contribute to understanding the barriers experienced by marginalized groups;

...Committing to public engagement with organizations or community groups serving marginalized populations or extending opportunities to disadvantaged people

In general, strong statements share common attributes; the statement:

...Demonstrates a track record on diversity, equity and inclusion matters throughout candidate's career as a student and educator...

Provides clear and concrete examples of how the candidate might approach the issue articulated at Cornell University.

Awareness/Understanding of Diversity, Equity and Inclusion

Weak

...No indication of efforts to educate self about diversity topics in higher education.

Discounts the importance of diversity.

...Unaware of demographic data about diversity in specific disciplines or in higher education.

Strong

...Sophisticated understanding of differences stemming from ethnic, socioeconomic, racial, gender, disability, sexual orientation, and cultural backgrounds and the obstacles people from these backgrounds face in higher education.

... Provides examples of programs to address climate or underrepresentation.

...Addresses why it’s important for faculty to contribute to meeting the above challenges.

Experience Promoting Diversity, Equity, Inclusion

Weak

May have attended a workshop or read books, but no interest in participating ....You have to be on the team.

Strong

Significant direct experience advancing diversity, equity and inclusion through research, service and teaching. Examples may include advising an organization supporting underrepresented individuals; addressing attendees at a workshop promoting diversity, equity, inclusion; creating and implementing strategies and/or pedagogy to encourage a respectful class environment for underrepresented students; serving on relevant university committee on diversity, equity and inclusion; research on underrepresented communities; active involvement in professional or scientific organization aimed at addressing needs of underrepresented students.

Plans to Advance Diversity, Equity, Inclusion at Cornell

Weak

... Merely says they would do what is asked, if hired.

Strong

Details plans to promote diversity, equity and inclusion through research, service and teaching...

References ongoing efforts at Cornell and ways to improve and modify them to advance diversity, equity and inclusion.

Physiognomy: a field ready for scientific revival

People keep asking me about the state of the art re. evidence for physiognomy, so here’s a brief review.

Phrenology used to be considered legit, and then eventually people realized it was all bogus. Since then, it is usually brought up as an example of how science goes wrong in terms of stereotyping, and references to it are used to attack people who don’t agree with Aristotle that the brain is mainly used to cool blood, which is to say, to attack people who study brain size, shape etc. and relate this to differences in human psychology, chiefly intelligence. Some examples of such attacks can be seen here, here and here.

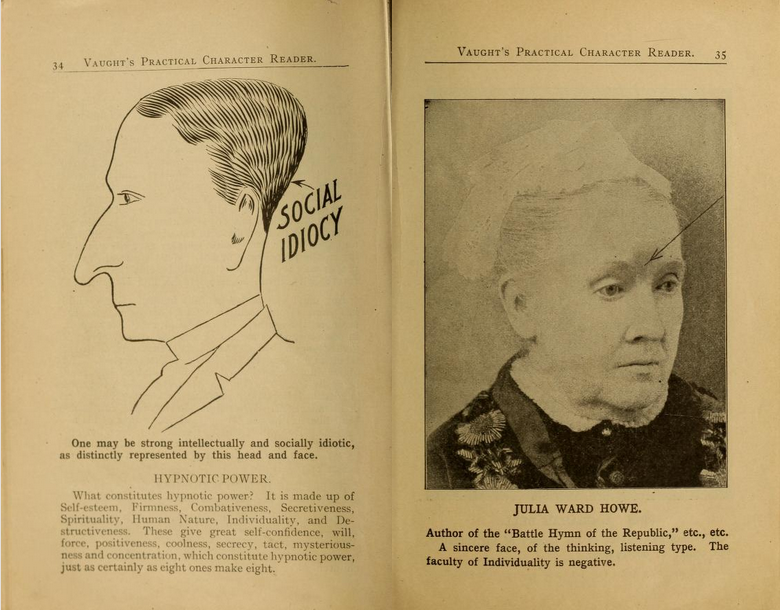

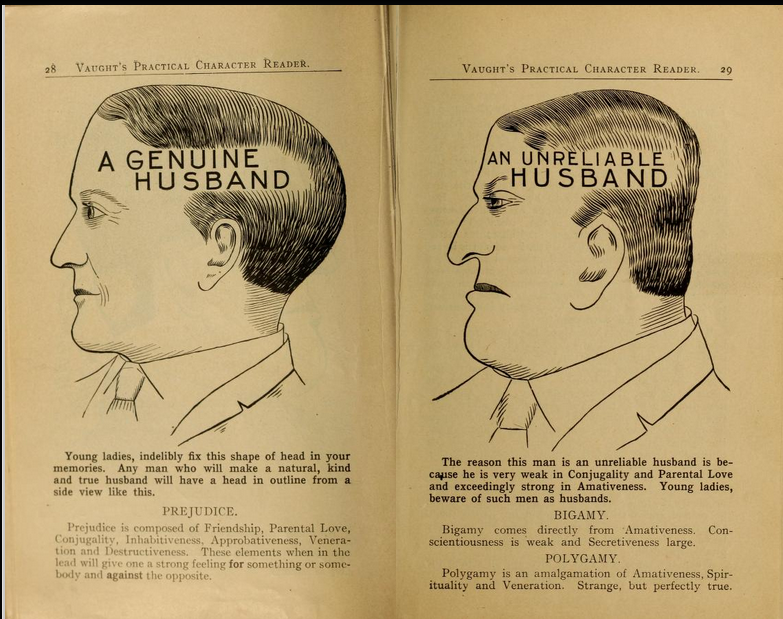

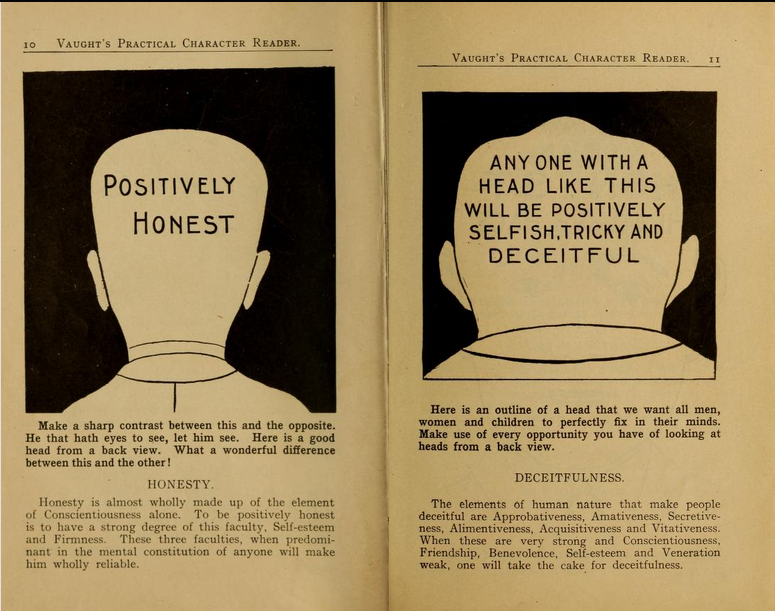

Aside from the political attacks, the skeptical reader might wonder, what does real phrenology look like? Actual phrenology, not a strawman. Well, I came across a 1907 book that I shall take as illustrative and perhaps representative of popular phrenology.

- L. A. Vaught, Vaught’s Practical Character Reader, 1902 (1907 revised), Chicago

Some screenshots of pages in the book.

I find these to be hilarious, and it strains the mind to think the author was serious. Perhaps he was selling a bullshit book. But maybe? The past was a different place. Bloodletting was popular for hundreds if not thousands of years but doesn’t work for much of anything (in fact it is detrimental). Whatever the case, this is what an actual early 1900s phrenology-physiognomy book looks like.

Modern tests

One can generally split the science into two parts. The first relates features of the brain to psychological differences. The second relates visible features to psychological differences. The first is now mainstream science, and one can probably find thousands of papers on it in mainstream journals. This development happened in spite of attempts by social justice scholars and Marxists, especially Stephen Jay Gould, to mislead the public (reviews of his main attack book are very informative, see here and here). The second is still controversial, but there is growing evidence for it and I expect it to be quite mainstream 20 years from now. The general hypothesis, that facial characteristics relate to character, is quite sensible because we all make judgments of persons based on quite limited information, including pictures (Tinder, politicians on TV, people in bars and so on), making it a reasonable hypothesis that this practice has evolutionary origins because of adaptive value, which is to say, it is useful because it has some accuracy.

Some recent studies include:

- Wu, X., & Zhang, X. (2016). Automated inference on criminality using face images. arXiv preprint arXiv:1611.04135, 4038-4052. Original paper.

We study, for the first time, automated inference on criminality based solely on still face images, which is free of any biases of subjective judgments of human observers. Via supervised machine learning, we build four classifiers (logistic regression, KNN, SVM, CNN) using facial images of 1856 real persons controlled for race, gender, age and facial expressions, nearly half of whom were convicted criminals, for discriminating between criminals and non-criminals. All four classifiers perform consistently well and empirically establish the validity of automated face-induced inference on criminality, despite the historical controversy surrounding this line of enquiry. Also, some discriminating structural features for predicting criminality have been found by machine learning. Above all, the most important discovery of this research is that criminal and non-criminal face images populate two quite distinctive manifolds. The variation among criminal faces is significantly greater than that of the non-criminal faces. The two manifolds consisting of criminal and non-criminal faces appear to be concentric, with the non-criminal manifold lying in the kernel with a smaller span, exhibiting a law of “normality” for faces of non-criminals. In other words, the faces of general law-biding public have a greater degree of resemblance compared with the faces of criminals, or criminals have a higher degree of dissimilarity in facial appearance than non-criminals.

This paper went viral, and the authors were shamed into publishing an apology of sorts. Still, their introduction is informative:

In all cultures and all periods of recorded human history, people share the belief that the face alone suffices to reveal innate traits of a person. Aristotle in his famous work Prior Analytics asserted, “It is possible to infer character from features, if it is granted that the body and the soul are changed together by the natural affections”. Psychologists have known, for as long as a millennium, the human tendency of inferring innate traits and social attributes (e.g., the trustworthiness, dominance) of a person from his/her facial appearance, and a robust consensus of individuals’ inferences. These are the facts found through numerous studies [3, 39, 5, 6, 10, 26, 27, 34, 32].

Some of the studies cited above are:

- Willis, J., & Todorov, A. (2006). First impressions: Making up your mind after a 100-ms exposure to a face. Psychological science, 17(7), 592-598.

- Todorov, A., Pakrashi, M., & Oosterhof, N. N. (2009). Evaluating faces on trustworthiness after minimal time exposure. Social Cognition, 27(6), 813-833.

- Borkenau, P., Brecke, S., Möttig, C., & Paelecke, M. (2009). Extraversion is accurately perceived after a 50-ms exposure to a face. Journal of Research in Personality, 43(4), 703-706.

- Todorov, A., Loehr, V., & Oosterhof, N. N. (2010). The obligatory nature of holistic processing of faces in social judgments. Perception, 39(4), 514-532.

These are all pre-replication crisis papers by psychologists looking at evidence for humans being able to determine traits from faces. I didn’t read them closely but they seem to be the typical low power, multi-sample studies, so they are probably not very informative aside from establishing that one can get this kind of thing published and cited in mainstream journals. Of course, we know that anything humans can do by intuitive judgment can be done better by a machine given sufficient training data and the right algorithm. So, are there more recent computer studies that provide strong evidence?

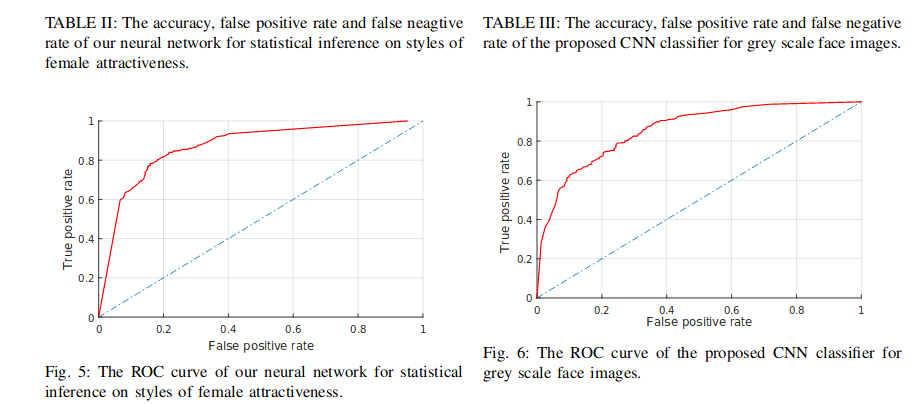

The authors from before have a follow-up paper (this time being a bit less blunt!):

- Wu, X., Zhang, X., & Liu, C. (2016). Automated inference on sociopsychological impressions of attractive female faces. arXiv preprint arXiv:1612.04158.

This article is a sequel to our earlier work [25]. The main objective of our research is to explore the potential of supervised machine learning in face-induced social computing and cognition, riding on the momentum of much heralded successes of face processing, analysis and recognition on the tasks of biometric-based identification. We present a case study of automated statistical inference on sociopsychological perceptions of female faces controlled for race, attractiveness, age and nationality. Our empirical evidences point to the possibility of training machine learning algorithms, using example face images characterized by internet users, to predict perceptions of personality traits and demeanors.

Does it work?

But this study was just predicting rated attractiveness of women, so not really a psychological trait. It could however be quite useful for automating dating app usage.

What about sexual orientation? This one has obvious evolutionary relevance for mating purposes, so humans should be somewhat adept at it. There are several studies.

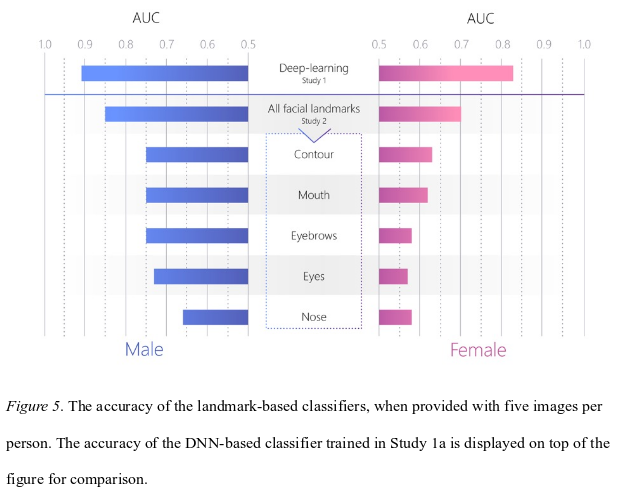

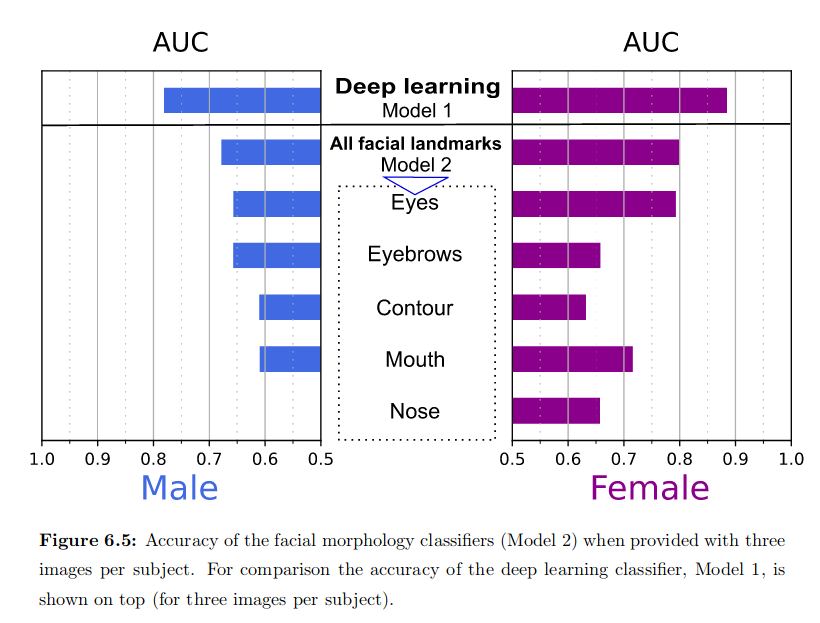

- Wang, Y., & Kosinski, M. (2018). Deep neural networks are more accurate than humans at detecting sexual orientation from facial images. Journal of personality and social psychology, 114(2), 246.

We show that faces contain much more information about sexual orientation than can be perceived or interpreted by the human brain. We used deep neural networks to extract features from 35,326 facial images. These features were entered into a logistic regression aimed at classifying sexual orientation. Given a single facial image, a classifier could correctly distinguish between gay and heterosexual men in 81% of cases, and in 71% of cases for women. Human judges achieved much lower accuracy: 61% for men and 54% for women. The accuracy of the algorithm increased to 91% and 83%, respectively, given five facial images per person. Facial features employed by the classifier included both fixed (e.g., nose shape) and transient facial features (e.g., grooming style). Consistent with the prenatal hormone theory of sexual orientation, gay men and women tended to have gender-atypical facial morphology, expression, and grooming styles. Prediction models aimed at gender alone allowed for detecting gay males with 57% accuracy and gay females with 58% accuracy. Those findings advance our understanding of the origins of sexual orientation and the limits of human perception. Additionally, given that companies and governments are increasingly using computer vision algorithms to detect people’s intimate traits, our findings expose a threat to the privacy and safety of gay men and women.

And there is a pretty close replication.

- Leuner, J. (2019). A Replication Study: Machine Learning Models Are Capable of Predicting Sexual Orientation From Facial Images. arXiv preprint arXiv:1902.10739.

Recent research used machine learning methods to predict a person’s sexual orientation from their photograph (Wang and Kosinski, 2017). To verify this result, two of these models are replicated, one based on a deep neural network (DNN) and one on facial morphology (FM). Using a new dataset of 20,910 photographs from dating websites, the ability to predict sexual orientation is confirmed (DNN accuracy male 68%, female 77%, FM male 62%, female 72%). To investigate whether facial features such as brightness or predominant colours are predictive of sexual orientation, a new model based on highly blurred facial images was created. This model was also able to predict sexual orientation (male 63%, female 72%). The tested models are invariant to intentional changes to a subject’s makeup, eyewear, facial hair and head pose (angle that the photograph is taken at). It is shown that the head pose is not correlated with sexual orientation. While demonstrating that dating profile images carry rich information about sexual orientation these results leave open the question of how much is determined by facial morphology and how much by differences in grooming, presentation and lifestyle. The advent of new technology that is able to detect sexual orientation in this way may have serious implications for the privacy and safety of gay men and women.

So, like human observers, machines can predict sexual orientation from images.

Moving on to other traits, what about autism?

-

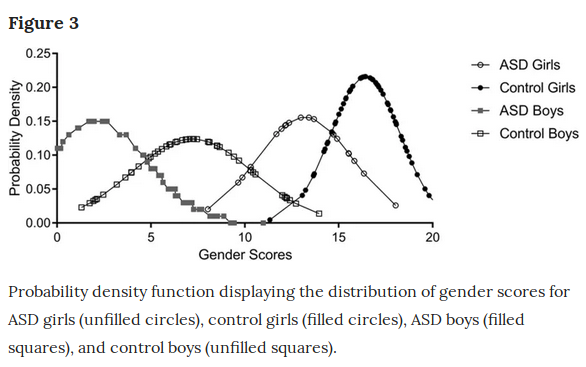

Tan, D. W., Gilani, S. Z., Maybery, M. T., Mian, A., Hunt, A., Walters, M., & Whitehouse, A. J. (2017). Hypermasculinised facial morphology in boys and girls with autism spectrum disorder and its association with symptomatology. Scientific reports, 7(1), 9348.

Elevated prenatal testosterone exposure has been associated with Autism Spectrum Disorder (ASD) and facial masculinity. By employing three-dimensional (3D) photogrammetry, the current study investigated whether prepubescent boys and girls with ASD present increased facial masculinity compared to typically-developing controls. There were two phases to this research. 3D facial images were obtained from a normative sample of 48 boys and 53 girls (3.01–12.44 years old) to determine typical facial masculinity/femininity. The sexually dimorphic features were used to create a continuous ‘gender score’, indexing degree of facial masculinity. Gender scores based on 3D facial images were then compared for 54 autistic and 54 control boys (3.01–12.52 years old), and also for 20 autistic and 60 control girls (4.24–11.78 years). For each sex, increased facial masculinity was observed in the ASD group relative to control group. Further analyses revealed that increased facial masculinity in the ASD group correlated with more social-communication difficulties based on the Social Affect score derived from the Autism Diagnostic Observation Scale-Generic (ADOS-G). There was no association between facial masculinity and the derived Restricted and Repetitive Behaviours score. This is the first study demonstrating facial hypermasculinisation in ASD and its relationship to social-communication difficulties in prepubescent children.

So in plain English: they took photos of non-autistic kids, and trained an algorithm to classify male and female faces. Then they applied this to another sample of autistic kids, and the results are what you see above: the autistic kids are masculinized compared to their sex norms. The autistic girls are almost halfway towards the normal male distribution! Having dated a number of autistic girls, I was not at all surprised by these results (they also have noticeably more arm hair and deeper voices).

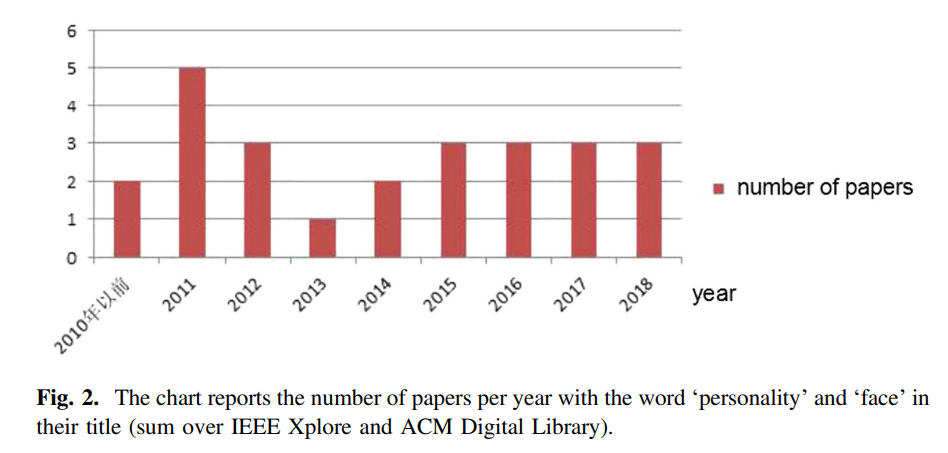

There is even a recent review of face-to-trait studies:

-

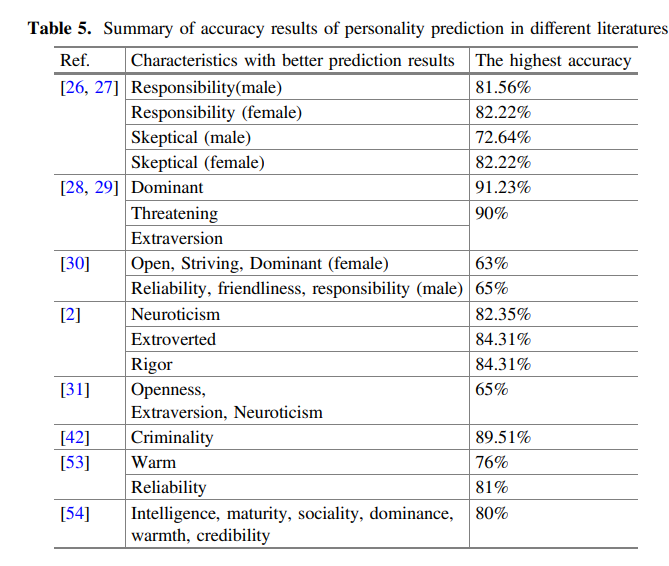

Jia, X., Tian, W., & Fan, Y. (2018, November). Physiognomy in New Era: A Survey of Automatic Personality Prediction Based on Facial Image. In International Conference on Internet of Things as a Service (pp. 12-29). Springer, Cham.

At present, personality computing technology facilitates the understanding, prediction, and management of human behavior. With the increasing importance of faces in personal daily assessments, establishing a relationship between facial morphological features and personality traits is a major breakthrough in personality computing technology. This paper is a survey of such technology of automatic personality prediction based on face and it aims at providing not only a solid knowledge base about the state-of-the-art in automatic personality prediction, but also to provide a conceptual model of automatic personality prediction, based on the literature. In addition, the analysis of the prediction results of the existing researches is emphasized, and there are still problems in the field, such as lack of information on research data, single age group of the sample population, incomplete design characteristics of the artificial design etc., and the potential applications and development directions are determined.

There is also newer research using human subjects. For instance, someone wrote a dissertation on it, at Cornell University no less:

- Royer, C. E. (2018). Convictable Faces: Attributions of Future Criminality from Facial Appearance. Dissertation at Cornell University.

Can participants accurately determine whether someone will later become a criminal based only on the person’s high school yearbook photo? This project builds on previous research which has found participants are capable of accurately and reliably assessing personality characteristics—like trustworthiness and dominance—based only on a photograph. This paper discusses a series of studies which examine whether participants are also capable of making accurate predictions of criminality by utilizing high school yearbook photographs of men with later criminal records. In Study 1, participants were able to make accurate predictions of future criminality from high school yearbook photographs. In Study 2, the results from the previous study were replicated and confidence in criminality attributions was found to predict accuracy. In Study 3, participants were less accurate when judging photographs of Black students compared to White students, suggesting cross-race bias. Altogether, these studies demonstrated that participants have accurate stereotypes about what a person with a criminal record looks like. These stereotypes may create a self-fulfilling prophecy in which people who look criminal are treated like criminals and thus end up with criminal records. This theory was tested in Study 4 in which participants were asked to judge guilt based on mugshots of exonerated men and true criminals. Overall, this series of studies demonstrated that participants can make accurate and consistent predictions of future criminality based only on facial appearance.

- Sučić, I., Wertag, A., & Juničić, N. (2019). Better the Devil you know than the Devil you don’t: Dark traits and appearance-based inferences. Psihološka istraživanja, 22(1), 89-114.

Every day, people make quick, spontaneous and automatic appearance-based inferences of others. This is particularly true for social attributes, such as intelligence or attractiveness, but also aggression and criminality. There are also indications that certain personality traits, such as the dark traits (i.e. Machiavellianism, narcissism, psychopathy, sadism), influence the degree of accuracy of appearance-based inferences, even though not all authors agree to this. Therefore, this study aims to investigate whether there are interpersonal advantages related to the dark traits when assessing someone’s criminality. For that purpose, an on-line study was conducted on a convenience sample of 676 adult females, whose task was to assess whether a certain person was a criminal or not based on their photograph. The results have shown that narcissism and Machiavellianism were associated with a greater tendency of indicating that someone is a criminal, reflecting an underlying negative bias that the individuals high on these traits hold about people in general.

What about the weird cranium bumps stuff?

There is already a modern study of this.

- Jones, O. P., Alfaro-Almagro, F., & Jbabdi, S. (2018). An empirical, 21st century evaluation of phrenology. Cortex, 106, 26-35.

Phrenology was a nineteenth century endeavour to link personality traits with scalp morphology, which has been both influential and fiercely criticised, not least because of the assumption that scalp morphology can be informative of underlying brain function. Here we test the idea empirically rather than dismissing it out of hand. Whereas nineteenth century phrenologists had access to coarse measurement tools (digital technology referring then to fingers), we were able to re-examine phrenology using 21st century methods and thousands of subjects drawn from the largest neuroimaging study to date. High-quality structural MRI was used to quantify local scalp curvature. The resulting curvature statistics were compared against lifestyle measures acquired from the same cohort of subjects, being careful to match a subset of lifestyle measures to phrenological ideas of brain organisation, in an effort to evoke the character of Victorian times. The results represent the most rigorous evaluation of phrenological claims to date. [sample size is 5.7k people from UKBB]

So, while this is only a single study, we can probably be confident that bumps on the scalp aren’t terribly informative about personality, except in gross cases of brain injury, which sometimes causes personality changes.

So, all in all, modern science confirms that human psychological differences relate to visual appearance, including variation in facial features. Humans pick up on these cues automatically and use them to increase the accuracy of their social judgments, in the same way they incorporate group averages (stereotypes). No scientist should be very surprised by these findings.

On a personal note: I’ve been meaning to do some of my own research on this using data scraped from various dating sites and applications. OKCupid data is especially good for this given the rich personality data, but the site is quite bad and not very popular anymore. A big shame! Instead, one will have to rely on data from Tinder, Hinge, Coffee Meets Bagel etc. These datasets don’t generally provide information on the more interesting traits such as sexual paraphilias/kinks (who likes anal sex? what about foot fetish?), criminality (aside from the ancestry and sex link), and detailed political beliefs (what does the typical libertarian look like? Aside from the coffee salon demographics). I haven’t had the time to do this research due to being busy doing work on the genomics of race differences. Get in contact with me if you want to collaborate. I have a lot of data but little experience in these kinds of algorithms.

The PNSE Paper

I’ve mentioned this a few times, but it’s worth going over in detail. The full title is Clusters Of Individual Experiences Form A Continuum Of Persistent Non-Symbolic Experiences In Adults by Jeffery Martin, with “persistent non-symbolic experience” (PNSE) as a scientific-sounding culturally-neutral code word for “enlightenment”. Martin is a Reiki practitioner associated with the “Center for the Study of Non-Symbolic Consciousness”, so we’re not getting this from the most sober of skeptics, but I still find the project interesting enough to deserve a look.

Martin searched various religious and spiritual groups for people who both self-reported enlightenment and were affiliated with “a community that provided validity to their claims”. He says he eventually found 1200 such people who were willing to participate in the study, but that “the data reported here comes primarily from the first 50 participants who sat for in-depth interviews…based on the overall research effort these 50 were felt to be a sufficient sample to represent what has been learned from the larger population”. Although Martin says he tried to get as much diversity as possible, the group was mostly white male Americans.

Martin’s research was mostly qualitative, based on in-depth interviews, so we’re mostly going with his impressions. But his impression was that most people who self-described as enlightened had similar experiences, which could be plotted on:

…a continuum that seemed to progress from ‘normal’ waking consciousness toward a distant location where participants reported no individualized sense of self, no self-related thoughts, no emotion, and no apparent sense of agency or ability to make a choice. Locations prior to this seemed to involve consistent changes toward this direction.

He describes this distant form of consciousness as involving changes in sense-of-self, cognition, emotion, memory, and perception.

Starting with sense-of-self, he says:

Perhaps the most universal change in what PNSE participants reported related to their sense of self. They experienced a fundamental change from a highly individualized sense of self, which is common among the ‘normal’ population, to something else. How that ‘something else’ was reported often related to their religious or spiritual tradition(s), or lack thereof. For example, Buddhists often referred to a sense of spaciousness while Christians frequently spoke of experiencing a union with God, Jesus, or the Holy Spirit depending on their sect. However, each experienced a transformation into a sense of self that seemed ‘larger’ and less individuated than the one that was experienced previously. Often participants talked about feeling that they extended beyond their body, sometimes very far beyond it…

This change was dramatic and most participants noticed it immediately, even if initially they could not pinpoint exactly what had occurred. Sense of self changed immediately in approximately 70% of participants. In the other 30% it unfolded gradually, with the unfolding period reported as varying from a few days to four months.

Those who were not involved in a religious or spiritual tradition that contextualized the experience often felt that they might have acquired a mental disorder. This analysis was not based on emotional or mental distress. It was typically arrived at rationally because the way they were experiencing reality was suddenly remarkably different than they had previously, and as far as they could tell different from everyone they knew. Many of these participants sought professional mental health care, which no participant viewed as having been beneficial. Clinicians often told them their descriptions showed similarities to depersonalization and derealization, except for the positive nature of the experience.

There were nuances within how sense of self was experienced at different locations along the continuum. In the earliest locations, the sense of self felt expanded, and often seemed more connected to everything. In the farthest locations on the continuum, an even more pronounced change occurred in sense of self; all aspects of having an individualized sense of self had vanished for these participants. Prior to this location some aspects of an individualized sense of self remained, and participants could occasionally be drawn into them.

On cognition:

Another consistent report is a shift in the nature and quantity of thoughts. Virtually all of the participants discussed this as one of the first things they noticed upon entering PNSE. The nature and degree of the change related to a participant’s location on the continuum. On the early part of the continuum, nearly all participants reported a significant reduction in, or even complete absence of, thoughts. Around 5% reported that their thoughts actually increased. Those who reported thoughts, including increased thoughts, stated that they were far less influenced by them. Participants reported that for the most part thoughts just came and went, and were generally either devoid of or contained greatly reduced emotional content.

Almost immediately it became clear that participants were not referring to the disappearance of all thoughts. They remained fully able to use thought for problem solving and living what appeared outwardly to be a ‘normal’ life. The reduction seemed limited to self-related thoughts. Nevertheless, participants were experiencing a reduction in quantity of thoughts that was so significant that when they were asked to quantify the reduction, those who could answered within the 80-95% range. This high percentage may suggest why someone would say all thought had fallen away.

There do not appear to be negative cognitive consequences to this reduction in thought. When asked, none said they wanted their self-referential thoughts to return to previous levels or to have the emotional charge returned to them. Participants generally reported that their problem solving abilities, mental capacity, and mental capability in general had increased because it was not being crowded out or influenced by the missing thoughts. They would often express the notion that thinking was now a much more finely tuned tool that had taken its appropriate place within their psychological architecture.

On perception:

Participants in the later part of the middle range of the PNSE continuum often reported seeing the unfolding layers of these perceptual processes in detail. They reported being able to begin to detect the difference between the orientation response and the physical, cognitive, and emotional processes that arose after it. They reported reaching a point where some events were reacted to by one or more of these layers while others were not. This was in contrast to participants on the early end of the continuum who perceived all of these layers as one during an event, or at least as a greatly reduced number of discrete processes.

You can read more, plus the sections on emotion and memory, yourself; they mostly fit with the stereotypes you would expect of enlightened people; a lot of tranquility, joy, and focus on the present moment.

What I like about this paper is the parts where it departs from these stereotypes. It makes clear that most of these people’s external characteristics didn’t change at all. In many cases, their friends and family didn’t even notice anything was different, and could not be convinced that anything about them was different:

Despite an overwhelming change in how it felt to experience both themselves and the world after the onset of PNSE, the outward appearance of the participants changed very little. Generally speaking they retained their previous mannerisms, hobbies, political ideology, food and clothing preferences, and so forth. If someone were an environmentalist prior to PNSE, typically they remained so after it. If they weren’t, they still are not.

Many participants discussed the thought, just after their transition to PNSE, that they would have to go to work and explain the difference in themselves to co-workers. They went on to describe a puzzled drive home after a full day of work when no one seemed to notice anything different about them. Quite a few chose to never discuss the change that had occurred in them with their families and friends and stated that no one seemed to notice much of a difference. In short, although they had experienced radical internal transformation, externally people didn’t seem to take much notice of it, if any.

Similarly, despite people saying that they no longer had any sense of agency, they were behaving as agentically as anyone else:

On the far end of the continuum, participants reported no sense of agency. They reported that they did not feel they could take any action of their own, nor make any decisions. Reality was perceived as just unfolding, with ‘doing’ and ‘deciding’ simply happening. Nevertheless, many of these participants were functioning in a range of demanding environments and performing well. One, for example, was a doctoral level student at a major university. Another was a young college professor who was building a strong career. Still another was a seasoned public and private sector executive who served as a high-level consultant and on various institutional-level boards.

Can you imagine investing in a company whose executive believes he cannot take any action and is just watching reality unfold? But it seems to work out.

Other times the PNSE participants are just outright wrong about their experience. When asked if they were stressed, they would say of course not, they were experiencing inner peace. But their friends and family said they were totally stressed. For example:

Over the course of a week, [one participant’s] father died, followed very rapidly by his sister. He was also going through a significant issue with one of his children. Over dinner I asked him about his internal state, which he reported as deeply peaceful and positive despite everything that was happening. Having known that the participant was bringing his longtime girlfriend, I’d taken an associate researcher with me to the meeting to independently collect the observations from her. My fellow researcher isolated the participant’s girlfriend at the bar and interviewed her about any signs of stress that the participant might be exhibiting. I casually asked the same questions to the participant as we continued our dinner conversation. Their answers couldn’t have been more different. While the participant reported no stress, his partner had been observing many telltale signs: he wasn’t sleeping well, his appetite was off, his mood was noticeably different, his muscles were much tenser than normal, his sex drive was reduced, his health was suffering, and so forth.

Or:

It was not uncommon for participants to state that they had gained increased bodily awareness upon their transition into PNSE. I arranged and observed private yoga sessions with a series of participants as part of a larger inquiry into their bodily awareness. During these sessions it became clear that participants believed they were far more aware of their body than they actually were. For example, the instructor would often put her hand on part of the body asking the participant to relax the tense muscles there, only to have the participant insist that s/he was totally relaxed in that area and did not feel any muscle tension.

Or even:

During some interviews participants expressed that they no longer felt it was possible for them to be racist or sexist. I asked these participants to take Harvard University’s Project Implicit tests online. All of these participants were white males and each showed a degree of sexism and/or racism, including participants who were in the later no emotion and agency locations on the continuum. Project Implicit uses physiology to test these responses.

It’s tempting to say these people are just making it up. But I think about some of the people I know with very severe psychiatric issues, people who are constantly miserable – and are similarly externally unaffected. These people are holding down stressful jobs, keeping difficult relationships together, etc – and often the people they haven’t “opened up to” don’t have any inkling of what they’re going through. They may tell me it must seem obvious to everybody that they’re completely falling apart – whereas in fact they are speaking fluently, they’re well-dressed, and they haven’t made a single social misstep during the whole time I’ve known them. If unusually negative mental states don’t affect behavior as strongly as people believe, why not unusually positive mental states?

Also, other times these people under-estimate themselves:

As participants neared the further reaches of the continuum, they frequently reported significant difficulty with recalling memories that related to their life history. They did not feel this way about facts, but rather about the details of the biographical moments surrounding the learning of those facts. They also reported that encoding for these types of memories seemed greatly reduced. Although this was their perception it did not appear to be the case when talking to them. They were typically rich sources of personal history information and their degree of recall seemed indistinguishable from participants who were in earlier locations on the continuum.

But:

There was a noticeable exception that seemed to be a genuine deficit. As they neared and entered the farther reaches of the continuum, participants routinely reported that they were increasingly unable to remember things such as scheduled appointments, while still being able to remember events that were part of a routine. For example, they might consistently remember to pick their child up at school each day, but forget other types of appointments such as doctor visits. Often they had adapted their routines to adjust for this change. Many would immediately write down scheduled events, items they needed to get at the store, and so forth on prominently displayed lists. When visiting their homes I noticed that these lists could be found on: televisions, computer monitors, near toilets, on and next to doors, and so forth. It was clear that the lists were being placed in locations that the participants would look with at least some degree of regularity. Participants consistently stated that they would prefer to remain in PNSE even if going back to ‘normal’ experience meant that they would no longer have this type of deficit.

Finally, Martin is impressed with the certainty that accompanies all of these experiences. People describe their PNSE as obviously more real and better than past states. They tend to be very effusive about this, saying that having the experience shattered everything they had previously believed in the most obvious and final way. But here too, there are signs that the participants are not well-attuned to what is going on in their own heads. Martin says that participants who moved from one level of his continuum to another (whether forward or back) would always say that the level they were currently at was the most fundamental and obviously real (even if they had said the opposite before). When he would tell participants about the experiences of other participants who were at different points of the continuum or just describing their experiences a slightly different way, both participants would confidently pronounce that the other wasn’t really enlightened.

I like this paper because it provides the basis for a minimalist account of enlightenment, similar to Daniel Ingram’s. Enlightenment hasn’t transformed these people’s personalities. It hasn’t given them infinite willpower or productivity or the ability to shoot qi bolts from their third eyes. It hasn’t even given them that much self-understanding. It’s just given them a different kind of internal experience.

The experience itself is hard to describe, but seems marked by drawing the self-other boundary in a different place. Participants don’t see themselves as making decisions; the decisions get made “under the hood” in a way where the person just feels like their path is laid out before them. They don’t see themselves as having thoughts; computations obviously get done, but they are not in awareness. They don’t feel like they have stress, even if the stress is physiologically present and obvious from their actions. On the other hand, they are more aware of certain low-level perceptual processes that are usually unconscious. The whole experience seems to be accompanied by total certainty that this is correct and revelatory and new (…much like the altered states people sometimes get on drugs).

None of this seems wildly outside the realm of possibility. It seems about as surprising as the existence of some new mental disorder. If 50 (or 1200, depending on how you count it) people with no history of lying said they had some kind of weird new mental disorder, I’d be willing to credit that they were describing their experience correctly, and able to give some useful information on the sorts of things that caused this disorder. It just sounds like information processing in the brain switching to some new attractor state if you force it hard enough.

Good Men Aren’t Getting Harder to Find

In a recent editorial, Wall Street Journal editor at large Gerard Baker noted that the share of female college graduates has risen to 57 percent, and posited that the disproportionate number of college-educated women is affecting the dating market. Since there are now four female college graduates in their 20s or 30s for every three college-educated males of the same age, and since women prefer not to date men whose status is lower than theirs, there must not be enough men to go around.
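The four-to-three figure is easy to check. Here is a minimal Python sketch that turns the 57 percent share into a women-to-men ratio; the function name is made up for illustration, and it assumes (as the editorial seems to) that the share among new graduates carries over to all graduates in their 20s and 30s.

```python
from fractions import Fraction

def female_to_male_ratio(female_share_pct: int) -> Fraction:
    """Exact women:men ratio implied by a female share given in whole percent."""
    return Fraction(female_share_pct, 100 - female_share_pct)

ratio = female_to_male_ratio(57)       # 57 women for every 43 men
simple = ratio.limit_denominator(3)    # closest fraction with denominator <= 3

print(f"exact ratio: {ratio} (about {float(ratio):.2f} women per man)")
print(f"rounded: {simple.numerator} women for every {simple.denominator} men")
```

So "four for every three" is a fair rounding of 57 to 43.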

This hypothesis fits conveniently with a number of narratives, promulgated across the political spectrum from Bernie Sanders to Jordan Peterson, about boys and men falling behind or being abandoned by society. However, on closer examination, the story is a bit more nuanced. Baker makes a mistake common in trend pieces on higher education: He takes a statistic about “college graduates” and draws a conclusion that fails to consider the differences among the huge range of degree-granting institutions in the United States.

Every year in the US, nearly 2 million students enroll in one of the nearly 4,300 degree-granting colleges and universities. Of these schools, a few dozen at most would be considered elite, and maybe a few dozen more would be considered highly selective. A hugely disproportionate share of writers at national media outlets attended a handful of elite private universities, and nearly everyone in mainstream media, and probably almost everyone they know, attended elite or selective private universities or selective state flagships. But these universities collectively educate only a small fraction of the total number of US college students.

US News and World Report ranks 400 universities and 225 liberal arts colleges, which pretty much covers every institution you’ve heard of and many you haven’t. But even this seemingly exhaustive list still includes only 15 percent of degree-granting institutions. The traditional college experience of enrolling at the age of 18 in a four-year residential program at an academically selective college or university is not the most common way in which Americans experience college. Millions of American students attend commuter campuses that serve the needs of training workers for local businesses and institutions.
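For anyone who wants to verify the 15 percent figure, a short Python sketch follows. It uses only the numbers quoted in this piece and treats "nearly 4,300" as exactly 4,300, which is an assumption made for the sake of the arithmetic.

```python
# Figures quoted in the text; 4,300 is a rounded count ("nearly 4,300").
ranked_universities = 400
ranked_liberal_arts = 225
total_institutions = 4300

ranked = ranked_universities + ranked_liberal_arts
share = ranked / total_institutions

print(f"{ranked} ranked out of {total_institutions} institutions = {share:.1%}")
```

That works out to roughly one institution in seven, which rounds to the 15 percent cited.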

When you take a statistic like the one that shows that 57 percent of all bachelor’s degrees are awarded to women, you’re drawing a generalization about the full set of 4,300 colleges that may not be true at specific schools, or subsets like the set of elite private universities. And, in fact, the disparity between men and women earning degrees at selective and elite universities seems to be much smaller than the disparity among overall college graduates.

At Harvard, Princeton, Columbia and the University of Chicago, recent classes skewed slightly male. At Yale, Stanford, and Duke, men and women are at parity.

Further down the rankings list, there were some significant disparities at schools like UNC-Chapel Hill, which is 62 percent female, NYU, which is 58 percent female, and UCLA and the University of Georgia, which are 57 percent female.

However, genders were at parity or skewed slightly male at schools like Ohio State University, Binghamton University, Indiana University-Bloomington, University of Wisconsin-Madison, University of Michigan-Ann Arbor, and University of Tennessee-Knoxville. My undergraduate alma mater, the University of Maryland at College Park, is 53 percent male. At schools focused on science and engineering, the proportions skew more heavily male, as at MIT, which is 54 percent male, and Georgia Tech, which is about 60 percent male.

A spot-check of a few dozen elite and selective schools suggests that there is near gender parity at the most elite private universities, and perhaps a slight tilt toward women among selective private schools and public flagships, but not one nearly as dramatic as the nationwide numbers would lead you to believe.

And there is no evidence that women are outnumbering or outperforming men in elite fields. Women who hold bachelor’s degrees earn significantly less, on average, than men who hold bachelor’s degrees, which indicates that the median female college graduate is working in a lower-status job than the median male college graduate. About two-thirds of lawyers are men, while nearly nine out of ten paralegals are women. Two-thirds of financial advisers are men, and while women earn more master’s degrees overall, men earn two out of three MBAs. Men report most of the news at top print, television and online outlets. Five out of six engineers and three out of four computer scientists are men. Reports of a generation of lost incel dudes living in basements and anesthetizing themselves with Fortnite and Doritos are wildly overstated.

In fact, it is the least selective schools that are driving the national gender gap in bachelor’s degrees. For example, at for-profit colleges, most of which have very low admissions standards, 63 percent of students are female.

The elite schools and, to a lesser extent, the selective schools, train America’s professionals, its media and business elites, and its academics and thought leaders. Graduating from these schools denotes class and status, and women who graduate from these schools might be hesitant to date men who attended less prestigious institutions or did not attend college at all.

Less-selective schools, however, don’t signify the same kind of status. Schools where the median student scores below 1100 on the SAT train students for middle-class careers, and female graduates of these institutions are unlikely to perceive a status gap between themselves and men who work in skilled, middle-class jobs that do not require a college degree. It seems that the larger share of female college graduates is a function of the fact that middle-class jobs that skew heavily female are more likely to require a college credential, while male-dominated jobs of similar status do not.

Over 90 percent of nurses are women. To become a registered nurse, one needs at least an associate’s degree, and most newly-minted nurses have a bachelor’s degree. There were 101,000 bachelor’s degrees in nursing awarded in the 2012-2013 academic year, which means nurses earn about 6 percent of all bachelor’s degrees in the United States.

Three-quarters of American schoolteachers are women, and all teachers must earn at least a bachelor’s degree. About 11 percent of all female college students major in education.

Jobs that confer a comparable status and skew male often do not require academic credentials. To become a plumber or an electrician, for example, one must complete an apprenticeship that often lasts for several years and pass a state certification exam, but these jobs do not require college degrees. The skilled trades are about 98 percent male. About 87 percent of US police officers are men, and only a third of cops have a four-year degree. In order to become a firefighter or a paramedic, you need a state certification, but not a degree. More than 90 percent of firefighters and more than two-thirds of paramedics are men.

So, even though more women earn degrees than men, there is virtually no gender gap at elite schools, and gender gaps in elite fields favor men. What the data actually tell us is that there are significantly more women than men going to lower-ranked colleges and universities to earn credentials that qualify them to become teachers, nurses, paralegals, clerks and office administrators. The fact that nursing and teaching require degrees while law enforcement, emergency medical services, and skilled trades do not seems to largely explain why more women than men earn college degrees.

That means that the dating apocalypse Gerard Baker fears, in which a surfeit of educated, credentialed women can’t find any men of comparable status to date, will not happen unless teachers and nurses are unwilling to date police officers, firefighters, paramedics and tradesmen.

Daniel Friedman is the Edgar Award-nominated author of Don’t Ever Get Old, Don’t Ever Look Back and Riot Most Uncouth. Follow him on Twitter @DanFriedman81.

The post Good Men Aren’t Getting Harder to Find appeared first on Quillette.

Some Income Tax Data on the Top Incomes

The first table shows a breakdown for taxpayers from the top .001% to the top 5%. Focusing on the top .001% for a moment, there were 1,433 such taxpayers in 2017. (You'll notice that the number of taxpayers in the top .01%, .1% and 1% rises by multiples of 10, as one would expect.)

The "Adjusted Gross Income Floor" tells you that to be in the top .001% in 2017, you had to have income of $63.4 million in that year. If you had income of more than $208,000, you were in the top 5%.

The total income for the top .001% was $256 billion. Of that amount, the total federal income tax paid was $61.7 billion. Thus, the average federal income tax rate paid was 24.1% for this group. The top .001% received 2.34% of all gross income, and paid 3.86% of all income taxes.
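The average-rate arithmetic above can be reproduced in a few lines. This is only a minimal sketch using the rounded figures quoted in the text; the function name `average_tax_rate` is a hypothetical helper, not something taken from the underlying tables.

```python
def average_tax_rate(tax_paid, total_income):
    """Average rate: tax paid divided by total income (same units for both)."""
    return tax_paid / total_income


# Rounded figures for the top .001% in 2017, as quoted in the text ($ billions).
top_income_bn = 256.0
top_tax_bn = 61.7

rate = average_tax_rate(top_tax_bn, top_income_bn)
print(f"Average federal income tax rate: {rate:.1%}")
```

Because the inputs are rounded to the nearest tenth of a billion, the result matches the 24.1% in the table only to about one decimal place.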

Of course, it's worth remembering that this table is federal income taxes only. It doesn't include state taxes on income, property, or sales. It doesn't include the share of corporate income taxes that end up being paid indirectly (in the form of lower returns) by those who own corporate stock.

Here's a follow-up table showing the same information, but for groups ranging from the top 1% to the top 50%.

Of course, readers can search through these tables for what is of most interest to them. But here are a few quick thoughts of my own.

1) Those at the very tip-top of the income distribution, like the top .001% or the top .01%, pay a slightly lower share of income in federal income taxes than say, the top 1%. Why? I think it's because those at the very top are often receiving a large share of their annual income in the form of capital gains, which are taxed at a lower rate than regular income.

2) It's useful to remember that many of those at the very tip-top are not there every year. It's not like they fall into poverty the next year, of course. But they are often making a decision about when to turn capital gains into taxable income, and they are people who, along with their well-paid tax lawyers, have some control over the timing of that decision and how the income will be received.

3) The average tax rate shown here is not the marginal tax bracket. The top federal tax bracket is 37% (setting aside issues of payroll taxes for Medicare and how certain phase-outs work as income rises). But that marginal tax rate applies only to an additional dollar of regular income earned. With deductions, credits, exemptions, and capital gains taken into account, the average rate of income tax as a share of total income is lower.

4) The top 50% pays almost all the federal income tax. The last row on the second table shows that the top 50% pays 96.89% of all federal income taxes. The top 1% pays 38.47% of all federal income taxes. Of course, anyone who earns income also owes federal payroll taxes that fund Social Security and Medicare, as well as paying federal excise taxes on gasoline, alcohol, and tobacco, and these taxes aren't included here.

5) This data is about income in 2017. It's not about wealth, which is accumulated over time. Thus, this data is relevant for discussions of changing income tax rates, but not especially relevant for talking about a wealth tax.

6) There's a certain mindset which looks at, say, the $2.3 trillion in total income for the top 1%, and notes that the group is "only" paying $615 billion in federal income taxes, and immediately starts thinking about how the federal government could collect a few hundred billion dollars more from that group, and planning how to spend that money. Or one might focus further up, like the 14,330 in the top .01% who had more than $12.8 million in income in 2017. Total income for this group was $565 billion, and they "only" paid about 25% of it in federal income taxes. Surely they could chip in another $100 billion or so? On average, that's only about $7 million apiece in additional taxes for those in the top .01%. No big deal. Raising taxes on other people is so easy.
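The back-of-the-envelope numbers in point 6 can be checked with a short sketch. It assumes the rounded figures quoted in the text, and treats "about 25%" as exactly 0.25, so the implied rate is only approximate; all variable names are illustrative.

```python
# Figures quoted in the text for the top .01% in 2017.
taxpayers = 14_330
total_income = 565e9          # dollars
extra_tax = 100e9             # the hypothetical "another $100 billion"
approx_current_rate = 0.25    # "about 25%" in the text; rounded, so results are approximate

# Additional tax per taxpayer if the extra amount were spread evenly.
extra_per_taxpayer = extra_tax / taxpayers

# Average federal income tax rate the group would face after the increase.
implied_rate = (approx_current_rate * total_income + extra_tax) / total_income

print(f"Extra tax per taxpayer: ${extra_per_taxpayer / 1e6:.2f} million")
print(f"Implied average federal rate: {implied_rate:.1%}")
```

Under these assumptions the extra bill comes to a little under $7 million per taxpayer, and the group's average federal income tax rate would rise from about 25% to roughly 43%, before any change in how or when the income is reported.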

I'm not someone who spends much time weeping about the financial plight of the rich, and I'm not going to start now. It's worth remembering (again) that the numbers here are only for federal income tax, so if you are in a state or city with its own income tax, as well as paying property taxes and the other taxes at various levels of government, the tax bill paid by those with high incomes is probably edging north of 40% of total income in a number of jurisdictions.

But let's set aside the question of whether the very rich can afford somewhat higher federal income taxes (spoiler alert: they can), and focus instead on the total amounts of money available. The numbers here suggest that somewhat higher income taxes at the very top could conceivably bring in a few hundred billion dollars, even after accounting for the ability of those with very high income to alter the timing and form of the income they receive. To put this amount in perspective, the federal budget deficit is now running at about $800 billion per year. To put it another way, it seems implausible to me that plausibly higher taxes limited to those with the highest incomes would raise enough to get the budget deficit down to zero, much less to bridge the existing long-term funding gaps for Social Security or Medicare, or to support grandiose spending programs in the trillions of dollars for other purposes. Raising federal income taxes at the very top may be a useful step, but it's not a magic wand that can pay for every wish list.

Dictators: The Great Performers

The paradox of the modern dictator is that he must create the illusion of mass support while turning the population into a nation of terrorised prisoners endlessly condemned to faking enthusiasm for their oppressor. Frank Dikötter, a brilliant historian with a prize-winning trilogy on Mao’s China behind him, takes eight of the most successful 20th-century dictators: Mussolini, Hitler, Stalin, Mao Zedong, Kim Il-sung, Nicolae Ceausescu, Papa Doc Duvalier and Mengistu, and shows with chilling brevity and clarity how this is done.

The road...

The Real Agents of S.H.I.E.L.D.

This article does not reflect the views of the Transportation Security Administration.

It is most living Americans’ “Where Were You When” moment, the day we all watched looped film of airliners crashing into the Twin Towers, watched victims trapped by raging flames forced to choose between being burned alive and jumping to their deaths. Readers not old enough to remember the horror of that day can get a sense from audio of 9/11 released by the Transportation Security Administration (TSA) in 2018. The TSA is an agency of the U.S. Department of Homeland Security that was created as a response to the 9/11 attacks to make sure nothing like that ever happens again.

As that collective trauma fades into history, the TSA, where I work, enjoys about the same level of public support as a measles outbreak.

The Threat and Why We Do What We Do

If you worked for the federal government on 9/11 in any sort of national security capacity, you knew fear of further attacks was pervasive, particularly after the anthrax mailings sharpened the impression of being under attack by unknown assailants on multiple fronts. (I worked in a building that got one of the letters.) Fear is hardly conducive to good policymaking, yet it was in this environment that the Department of Homeland Security, and its red-headed stepchild, the Transportation Security Administration, were born. The TSA’s mission: to avoid a repeat of the airport security failure that allowed 19 Al Qaeda terrorists to hijack four jetliners using smuggled box-cutters.

For whatever reason, militant Islamists have long been fixated on attacking commercial aircraft. 9/11 carried the highest body count, but other equally ambitious attacks have been foiled by bad terrorist planning, good intelligence work, the intervention of brave passengers, and sheer luck.

Most Americans’ first acquaintance with Al Qaeda was 9/11, but that was not their first attempted attack on commercial aviation. In 1995, 9/11 mastermind Khalid Sheik Mohammed put together the “Bojinka Plot,” which was to start with the assassination of Pope John Paul II when he visited the Philippines, and conclude by placing bombs on 11 US-bound planes. Luckily, members of the terrorist cell accidentally started a fire at their safehouse apartment and were subsequently arrested.

Few now remember that just three months after 9/11, observant passengers stopped would-be suicide bomber Richard Reid from igniting the explosive packed into his shoes on an American Airlines flight from Paris to Miami. You can thank Reid for having to take your shoes off and get them x-rayed when you fly.

In 2006, another massive Al Qaeda bombing plot was disrupted. Seven US-bound airliners were to be taken down with bombs assembled mid-flight from the liquid explosive TATP smuggled in sports-drink bottles. You can thank the perpetrators of that plot for the limit on the amount of liquid you can carry on board. (As an aside, if you want to carry a liquid on board, freeze it solid. No quantity restrictions.)

Then came the attempt by the “Underwear Bomber,” Umar Farouk Abdulmutallab, to detonate PETN explosive powder sewn into his underwear to take down a Northwest Airlines flight over Detroit. Again, an observant passenger intervened. In response, the TSA rapidly deployed full body scanners to all major US airports.

In 2010, intelligence was passed to the US warning that three US-bound cargo planes had bombs on board. They were stopped and searched before reaching the US.

Outside the U.S., Islamist terrorists have been more successful.

In 2015, a chartered jet bound for Russia, Metrojet Flight 9268, was blown from the sky by a bomb planted by ISIS, killing 224.

In 2016, the Somali affiliate of Al Qaeda, al-Shabaab, smuggled a bomb on board Daallo Airlines Flight 159, which detonated and blew a hole in the aircraft, sucking out the suicide bomber. The bomb was likely concealed in a laptop, which is one reason passengers are now required to take their laptops and other large electronics out of their bags.

The onerous but performative aspect of the TSA’s job is designed to show bad guys watching us that everyone, even grandmothers and war vets, is subject to thorough screening. Of course we know it is extremely unlikely that a grandmother managed to pack plastic explosives in her oversize tube of toothpaste. But until some security genius comes up with a reliable way to read hostile intent, we have to react as if she might have. Which gives bad guys less motivation to enlist grannies, through bribery, trickery, or compulsion, as smugglers.