Is spacetime really a continuum? That is, can points of spacetime really be described, at least locally, by lists of four real numbers $(t,x,y,z)$? Or is this description, though immensely successful so far, just an approximation that breaks down at short distances?

Rather than trying to answer this hard question, let’s look back at the struggles with the continuum that mathematicians and physicists have had so far.

The worries go back at least to Zeno. Among other things, he argued that an arrow can never reach its target:

That which is in locomotion must arrive at the half-way stage before it arrives at the goal.—Aristotle summarizing Zeno

and Achilles can never catch up with a tortoise:

In a race, the quickest runner can never overtake the slowest, since the pursuer must first reach the point whence the pursued started, so that the slower must always hold a lead.—Aristotle summarizing Zeno

These paradoxes can now be dismissed using our theory of real numbers. An interval of finite length can contain infinitely many points. In particular, a sum of infinitely many terms can still converge to a finite answer.
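As a worked example of the last point, suppose (an illustrative normalization, not from Zeno) that the arrow's target is at distance 1. The successive "half-way stages" have lengths 1/2, 1/4, 1/8, and so on, and their partial sums can be computed explicitly:

```latex
\sum_{n=1}^{N} \frac{1}{2^n} = 1 - \frac{1}{2^N},
\qquad\text{so}\qquad
\sum_{n=1}^{\infty} \frac{1}{2^n}
  = \lim_{N \to \infty} \left( 1 - \frac{1}{2^N} \right) = 1 .
```

Infinitely many stages add up to a finite distance, and at constant speed they take a finite time.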

But the theory of real numbers is far from trivial. It became fully rigorous only considerably after the rise of Newtonian physics. At first, the practical tools of calculus seemed to require infinitesimals, which seemed logically suspect. Thanks to the work of Dedekind, Cauchy, Weierstrass, Cantor and others, a beautiful formalism was developed to handle real numbers, limits, and the concept of infinity in a precise axiomatic manner.

However, the logical problems are not gone. Gödel’s theorems hang like a dark cloud over the axioms of mathematics, assuring us that any consistent theory as strong as Peano arithmetic, or stronger, cannot prove itself consistent. Worse, it will leave some questions unsettled.

For example: how many real numbers are there? The continuum hypothesis proposes a conservative answer, but the usual axioms of set theory leave this question open: there could be vastly more real numbers than most people think. And the superficially plausible axiom of choice, which amounts to saying that the product of any collection of nonempty sets is nonempty, has scary consequences, like the existence of non-measurable subsets of the real line. This in turn leads to results like that of Banach and Tarski: one can partition a ball of unit radius into six disjoint subsets, and by rigid motions reassemble these subsets into two disjoint balls of unit radius. (Later it was shown that one can do the job with five, but no fewer.)

However, most mathematicians and physicists are inured to these logical problems. Few of us bother to learn about attempts to tackle them head-on, such as:

• nonstandard analysis and synthetic differential geometry, which let us work consistently with infinitesimals,

• constructivism, which avoids proof by contradiction: for example, one must ‘construct’ a mathematical object to prove that it exists,

• finitism, which avoids infinities altogether,

• ultrafinitism, which even denies the existence of very large numbers.

This sort of foundational work proceeds slowly, and is now deeply unfashionable. One reason is that it rarely seems to intrude in ‘real life’ (whatever that is). For example, it seems that no question about the experimental consequences of physical theories has an answer that depends on whether or not we assume the continuum hypothesis or the axiom of choice.

But even if we take a hard-headed practical attitude and leave logic to the logicians, our struggles with the continuum are not over. In fact, the infinitely divisible nature of the real line—the existence of arbitrarily small real numbers—is a serious challenge to almost all of the most widely used theories of physics.

Indeed, we have been unable to rigorously prove that most of these theories make sensible predictions in all circumstances, thanks to problems involving the continuum.

One might hope that a radical approach to the foundations of mathematics, such as those listed above, would allow us to avoid some of the problems I’ll be discussing. However, I know of no progress along these lines that would interest most physicists. Some of the ideas of constructivism have been embraced by topos theory, which also provides a foundation for calculus with infinitesimals using synthetic differential geometry. Topos theory and especially higher topos theory are becoming important in mathematical physics. They’re great! But as far as I know, they have not been used to solve the problems I want to discuss here.

Today I’ll talk about one of the first theories to use calculus: Newton’s theory of gravity.

Newtonian Gravity

In its simplest form, Newtonian gravity describes ideal point particles attracting each other with a force inversely proportional to the square of their distance. It is one of the early triumphs of modern physics. But what happens when these particles collide? Apparently the force between them becomes infinite. What does Newtonian gravity predict then?
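To get a feel for how the inverse-square force blows up as two point particles approach each other, here is a minimal Python sketch. The function name `gravitational_force`, the unit masses and the particular list of separations are illustrative assumptions, not anything from Newton or from the discussion above.

```python
G = 6.674e-11  # gravitational constant in SI units (m^3 kg^-1 s^-2)


def gravitational_force(m1, m2, r):
    """Magnitude of the Newtonian attraction between two point masses.

    m1, m2 are masses in kg and r is their separation in metres.
    """
    if r <= 0:
        # The inverse-square law gives no finite answer at zero separation.
        raise ValueError("force is undefined at zero separation")
    return G * m1 * m2 / r**2


# Illustrative separations: 1 m, 1/2 m, 1/4 m, ...
separations = [1.0 / 2**k for k in range(6)]
forces = [gravitational_force(1.0, 1.0, r) for r in separations]

# Each halving of the separation multiplies the force by four.
ratios = [forces[k + 1] / forces[k] for k in range(len(forces) - 1)]
```

Since every halving of the distance quadruples the force, no finite bound on the force survives as the separation shrinks to zero, which is why the sketch refuses to evaluate `r = 0` at all.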

Of course real planets are not points: when two planets come too close together, this idealization breaks down. Yet if we wish to study Newtonian gravity as a mathematical theory, we should consider this case. Part of working with a continuum is successfully dealing with such issues.

In fact, there is a well-defined ‘best way’ to continue the motion of two point masses through a collision. Their velocity becomes infinite at the moment of collision but is finite before and after. The total energy, momentum and angular momentum are unchanged by this event. So, a 2-body collision is not a serious problem. But what about a simultaneous collision of 3 or more bodies? This seems more difficult.

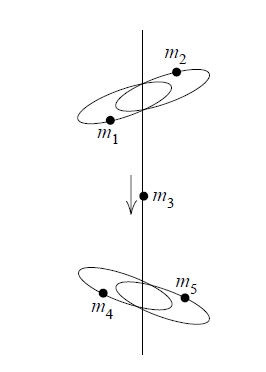

Worse than that, Xia proved in 1992 that with 5 or more particles, there are solutions where particles shoot off to infinity in a finite amount of time!

This sounds crazy at first, but it works like this: a pair of heavy particles orbit each other, another pair of heavy particles orbit each other, and these pairs toss a lighter particle back and forth. Xia and Saari’s nice expository article has a picture of the setup:

Each time the lighter particle gets thrown back and forth, the pairs move further apart from each other, while the two particles within each pair get closer together. And each time they toss the lighter particle back and forth, the two pairs move away from each other faster!

As the time $t$ approaches a certain value $t_0$, the speed of these pairs approaches infinity, so they shoot off to infinity in opposite directions in a finite amount of time, and the lighter particle bounces back and forth an infinite number of times!

Of course this crazy behavior isn’t possible in the real world, but Newtonian physics has no ‘speed limit’, and we’re idealizing the particles as points. So, if two or more of them get arbitrarily close to each other, the potential energy they liberate can give some particles enough kinetic energy to zip off to infinity in a finite amount of time! After that time, the solution is undefined.

You can think of this as a modern reincarnation of Zeno’s paradox. Suppose you take a coin and put it heads up. Flip it over after 1/2 a second, then flip it over again after another 1/4 of a second, and so on. After one second, which side will be up? There is no well-defined answer. That may not bother us, since this is a contrived scenario that seems physically impossible. It’s a bit more bothersome that Newtonian gravity doesn’t tell us what happens to our particles when the time $t$ reaches the critical value $t_0$.
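The coin-flipping scenario can be sketched in a few lines of Python. This is only an illustration: the function name `coin_after` is hypothetical, and the sketch assumes the k-th flip happens a further 1/2^k seconds after the previous one, starting from heads.

```python
from fractions import Fraction


def coin_after(n_flips):
    """Return (elapsed time in seconds, face showing) after n_flips flips.

    Assumes the coin starts heads up and that flip k happens a further
    1/2**k seconds after the previous one.
    """
    elapsed = sum(Fraction(1, 2**k) for k in range(1, n_flips + 1))
    face = "heads" if n_flips % 2 == 0 else "tails"
    return elapsed, face


# The elapsed time creeps up towards 1 second, but the face keeps alternating.
for n in (1, 2, 3, 10, 11):
    elapsed, face = coin_after(n)
    print(n, float(elapsed), face)
```

The elapsed times converge to one second, but the sequence of faces alternates forever and so has no limit. That is the sense in which there is no well-defined answer at one second.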

You might argue that collisions and these more exotic ‘noncollision singularities’ occur with probability zero, because they require finely tuned initial conditions. If so, perhaps we can safely ignore them!

This is a nice fallback position. But to a mathematician, this argument demands proof.

A bit more precisely, we would like to prove that the set of initial conditions for which two or more particles come arbitrarily close to each other within a finite time has ‘measure zero’. This would mean that ‘almost all’ solutions are well-defined for all times, in a very precise sense.

In 1977, Saari proved that this is true for 4 or fewer particles. However, to the best of my knowledge, the problem remains open for 5 or more particles. Thanks to previous work by Saari, we know that the set of initial conditions that lead to collisions has measure zero, regardless of the number of particles. So, the remaining problem is to prove that noncollision singularities occur with probability zero.

It is remarkable that even Newtonian gravity, often considered a prime example of determinism in physics, has not been proved to make definite predictions, not even ‘almost always’! In 1814, Laplace wrote:

We ought to regard the present state of the universe as the effect of its antecedent state and as the cause of the state that is to follow. An intelligence knowing all the forces acting in nature at a given instant, as well as the momentary positions of all things in the universe, would be able to comprehend in one single formula the motions of the largest bodies as well as the lightest atoms in the world, provided that its intellect were sufficiently powerful to subject all data to analysis; to it nothing would be uncertain, the future as well as the past would be present to its eyes. The perfection that the human mind has been able to give to astronomy affords but a feeble outline of such an intelligence.—Laplace

However, this dream has not yet been realized for Newtonian gravity.

I expect that noncollision singularities will be proved to occur with probability zero. If so, the remaining question would be why it takes so much work to prove this, and thus prove that Newtonian gravity makes definite predictions in almost all cases. Is this a weakness in the theory, or just the way things go? Clearly it has something to do with three idealizations:

• point particles whose distance can be arbitrarily small,

• potential energies that can be arbitrarily large and negative,

• velocities that can be arbitrarily large.

These are connected: as the distance between point particles approaches zero, their potential energy approaches $-\infty$, and conservation of energy dictates that some velocities approach $+\infty$.
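For two bodies, the link between these idealizations can be written out explicitly. The symbols here are assumptions introduced for illustration: masses $m_1, m_2$, separation $r$, relative speed $v$, reduced mass $\mu = m_1 m_2/(m_1 + m_2)$, and conserved energy $E$ in the center-of-mass frame.

```latex
E = \tfrac{1}{2}\,\mu v^2 - \frac{G m_1 m_2}{r}
\quad\Longrightarrow\quad
v^2 = \frac{2}{\mu}\left( E + \frac{G m_1 m_2}{r} \right)
\;\longrightarrow\; +\infty
\quad\text{as } r \to 0 .
```

Because $E$ stays fixed, an unboundedly negative potential energy has to be paid for by an unboundedly large kinetic energy.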

Does the situation improve when we go to more sophisticated theories? For example, does the ‘speed limit’ imposed by special relativity help the situation? Or might quantum mechanics help, since it describes particles as ‘probability clouds’, and puts limits on how accurately we can simultaneously know both their position and momentum?

Next time I’ll talk about quantum mechanics, which indeed does help.
