Shared posts

Van de Ven: Some basics on CPU P states on Intel processors

3D Sugar Printer

The Sugar Lab 3D sugar printer started as a cool project and has now exploded into a finely tuned sugar printer.

“The Sugar Lab is a micro-design firm for custom 3D printed sugar. With our background in architecture and our penchant for complex geometry, we’re bringing 3D printing technology to the genre of mega-cool cakes. 3D printing represents a paradigm shift for confections, transforming sugar into a dimensional, structural medium. It makes it possible to design, digitally model and print an utterly original sugar sculpture on top of a cake. All of our projects are custom. The design process begins from scratch, when we hear from you.”

July 2013 GNU Toolchain Update

It has been a strangely quiet month in the GNU Toolchain world. There have however been a few new features added, and here they are:

* The ARM AArch64 toolchain now supports a 32-bit ABI as well as the previous 64-bit ABI. The abi can be selected by the new: -mabi=<name> command line option. -mabi=ilp32 selects the 32-bit ABI where the int, long and pointer types are all 32-bits long. The -mabi-lp64 selects the 64-bit ABI where the int type is 32-bits but the long and pointer types are 64-bits. The two ABIs are incompatible. The default depends upon how the toolchain was configured.

* The PowerPC toolchain now has support for the Power8 architecture, including support for the ISA 2.07 specification for transactional memory. This is enabled via the new -mhtm command line option.

* Support for the Andes NDS32 part has been added to NEWLIB.

* The Microblaze target now supports Big Endian as well as little endian code generation.

* Linker scripts normally ensure that the alignment of an output section is the maximum of the alignment requirements of all of its input sections. In cases where the output section has different load (LMA) and run-time addresses (VMA) however, the load address was not forced to the meet the alignment requirement. Using the new linker script directive ALIGN_WITH_INPUT will make sure that the load address also meets the alignment requirements.

* The MIPS assembler and linker now support a new command line option: --insn32. This controls the choice of microMIPS instructions used in code generated by the either of the tools - for example when generating PLT entries or lazy binding stubs, or in relaxation. If -insn32 is specified, then only 32-bit instruction encodings with be used. By default or if --no-insn32 is specified, all instruction encodings are used, including 16-bit ones where possible.

* The MIPS assembler also supports a new command line option: -mnan=<encoding>

This select between the IEEE 754-2008 (-mnan=2008) or the legacy (-mnan=legacy) NaN encoding format. The latter is the default.

* All support for MIPS ECOFF based targets has been removed from the toolchain.

Cheers

Nick

Awesome Silhouettes of Superheroes Reveal Their Past and Present

California-based artist Khoa Ho's poster series titled Superheroes - Past/Present features iconic superheroes like Batman, Superman, and Spiderman as creatively designed silhouettes revealing their former struggles and current strength. The series draws inspiration form the Batman Begins quote: "It's not who you are underneath. It's what you do that defines you."

The graphic designer's simple silhouettes are both wonderful to look at and a real treat for comic book lovers. Anyone who knows the stories behind each of these heroic characters will be able to identify the lower half of the image that alludes to past trials and tribulations that have helped form the brave vigilantes they are in the present day.

Khoa Ho website

via [silent giant]

Comparing ASM.js and NaCl

Whats the problem with the web in its current form?

Since the mid 2000's or so there has been this big push to take all those legacy applications and shove them into an HTML form tag with some slick CSS. Great idea in theory except it doesn't really work for all those millions of lines of existing C/C++ applications. And then theres applications like games and office/productivity suites that require a greater level of user interactivity and of course performance. We aren't arguing you can't do amazing things with Javascript. We are simply trying to say that the heavy lifting almost always comes down to native code and theres already piles of existing C/C++ sitting around. Unfortunately the only available scripting environment in your browser is JavaScript. And although JIT engines have made it very fast in recent years going from the power of C++ to JavaScript is still like programming with a hand tied behind your back. JavaScript lacks the typing system necessary to do low level bitwise operations. No sorry, charCodeAt doesn't begin to cut it. Perhaps the most hilarious solution to this problem is the JavaScript typed array. Which not only allows you to boot the Linux kernel in your browser but also craft precise heap feng-shui payloads which were rather ugly with regular strings. Unfortunately typed arrays only take you so far because in the end you're still writing JavaScript, and thats no fun. There has to be a better way to do this...

What is ASM.js?

It's a virtual machine that lets you compile C/C++ programs to JavaScript and then run them in a browser. This process is done by using LLVM to turn C/C++ code into LLVM bitcode which is then fed to Mozilla's Emscripten, which turns it into JavaScript. If a browser follows the asm.js specification (a strict subset of JavaScript) it can benefit from some AOT compilation that will optimize the generated native code. Compiling one language to another is not new, its been done many times before. Because many different languages can be compiled into LLVM bitcode the future holds a lot potential use cases for ASM.js.

Each ASM.js application lives within its own virtual machine whose entire virtual memory layout is contained within a JavaScript typed array. The ASM.js specification is technically a subset of JavaScript which means not-JavaScript since you have to support it explicitly to get the benefits (see above). ASM.js has a syntax you can read/write as a human, its not a bytecode, and it is pretty close to JavaScript. The benefit of ASM.js is that fast JavaScript interpreters already exist. It 'just works' today, but will only benefit from the AOT compilation as long as your JavaScript interpreter supports parsing the ASM.js specific syntax... which only Firefox nightly supports right now...

One of the key things this subset of JavaScript does for you is eliminate the JavaScript JIT's requirement of generating code that operates on data that could change types at any moment. When your browsers JavaScript JIT compiler looks at a piece of JavaScript code like this...

function A(var b, var c) {

return b + c;

}

A('wat', 'ok');

A(1,2);

You may have guessed by now that programs such as games are complex and have visual components that normally render on a GPU. For this we have WebGL. But then theres audio, networking, file access and threads. It sounds like tackling this problem is going to require some heavy lifting and a very rich API...

What is Native Client (NaCl)?

We have talked about NaCl a lot on this blog but thats because its a novel approach to a very hard problem, namely executing native code in a memory safe way on architectures like x86. Its not another reinvention of malicious code detection, its a unique approaching to sandboxing all code while still giving it access to the raw CPU. NaCl does not transform existing code, it takes straight x86/x86_64/ARM instructions from the internet, validates them and executes them. This is a fundamental difference between ASM.js and NaCl. The former takes C/C++ and turns it into JavaScript which gets put through a JIT engine, the latter executes the native instructions as you provide them to the browser.

There are of course some trade offs such as a somewhat complicated build process, but Google has gone out of their way to ease this pain. Portable Native Client (PNaCl) takes this a step further and allows developers to ship a bitcode for their application which PNaCl will compile on the fly for your particular architecture. In this sense PNaCl seems a bit closer to the ASM.js model on the surface, but underneath its an entirely different architecture. The code PNaCl produces exists within a sandbox and must adhere to the strict rules NaCl imposes.

NaCl also provides an extensive and rich API through PPAPI for doing heavy lifting like graphics, audio and threads. We aren't going to cover the NaCl architecture too deeply here, see our older blog posts for that. Instead we'll move onto our security comparison of NaCl and ASM.js

What security benefits/tradeoffs do they introduce?

Every new line of code adds attack surface, these two components are no different. Because ASM.js is a strict subset of JavaScript there will be new parser code to look at. The exploitation properties of ASM.js remain to be seen as its only in Firefox nightly right now. My first guess is it makes JIT page allocation caps in Firefox impossible. I'm also confident you can use it to degrade, if not completely defeat, ASLR. In our 2011 JIT attacks paper Yan Ivnitskiy and I discussed using JavaScript to influence the output of JIT engines in order to introduce specific ROP code sequences into executable memory. I suspect the faster JIT pipelines used to transform ASM.js code into native code will make this process easier at some point but I have no data to prove that right now.

The approach of taking an unmanaged language like C/C++ and converting it to a managed and memory safe one solves the memory corruption issues in that particular piece of code. So if some C++ code originally contained a buffer overflow, once converted and running in ASM.js you would be accessing a JavaScript type which comes with builtin runtime checks to prevent such issues. Instead of overwriting some critical data you would trigger an exception and the browser would continue on as if nothing ever happened. As a side point, it may be fun to write an ASAN-like tool from ASM.js that tests and fuzzes C/C++ code in the browser using the JavaScript runtime as the instrumentation.

NaCl offers more than just bringing existing C/C++ code to your browser. NaCl has a unique sandbox implementation that can be used in a lot other places. NaCl statically validates all code before executing it. This validation ensures that the module can only execute code that adheres to a strict set of rules. This is a requirement because NaCl modules have full access to the CPU and can do a lot more than JavaScript programs currently can by utilizing the PPAPI interfaces in Chrome. This validation and code flow requirements have performance overhead but not enough that it makes large applications unusable.

I still think we will see NaCl on Android one day as a way to compete with iOS code signing. NaCl is such a game changer for browser plugins that I believe it will influence sandbox designs going forward. If you're designing a sandbox for your application today you should have read its design documents yesterday.

ASM.js does not provide a sandbox, or any additional layer of security for that matter. It's just a toolchain that compiles a source language into a subset of JavaScript. It relies on the memory safe virtual machine provided by the existing JavaScript interpreter to contain code. We all know how well that has worked out. Of course I'm not picking on Mozilla here, any browser without a sandbox is an easy target. But this is where the main difference between ASM.js and NaCl becomes apparent. ASM.js never intended to increase the security of Firefox, only bring existing C/C++ code to a larger web platform. NaCl intends to do this as well but also to increase the security of Chrome by containing all plugins in two sandboxes. I suppose if one were to take a plugin like Adobe Flash Player and port it to ASM.js this is a security benefit to Firefox users but in the end they are still without a sandbox around their JavaScript engine and probably paid a performance penalty for it too.

Conclusion

ASM.js is still under heavy development, so is NaCl/PNaCl, but the latter is a bit more mature at the moment given its end goal. For ASM.js to run existing C/C++ programs and libraries it will need to provide an extensive API that does a lot more than current JavaScript interpreters provide. NaCl already currently offers this along with better performance and a stronger sandbox design. If Mozilla wants to get serious about running all untrusted code from the internet in its JavaScript interpreter then it needs a real sandbox ASAP.

Finally, if the future is recompiling everything to JavaScript then the future is ugly.

Update 6/3/13: Fixed some ambiguous text that implied asm.js only worked in Firefox. See above for details.

Pedais de Josh Homme (Qotsa) 2013

Josh Homme

Pedalboard: Junho 2013 … Like Clockwork “Ultra Fucking Baddass Tour”

STONE DEAF PDF-1 Parametric Distortion Filter

BOSS GE-7 Equalizer

FULLTONE Ultimate Octave

DUNLOP Rotovibe

HARMAN DIGITECH Whammy 4

EHX Blade Swith+ (Seleciona o amplificador ou soma os dois) Compre aqui

DUNLOP Q Zone CryBaby

DUNLOP VLP1 Volume

SIB! Echodrive

MORLEY Power Wah Vintage (1970)

BOSS RE20 SpaceEcho

FUZZROCIOUS The Demon (Mod: Oitava acima permanente + Gate/Boost)

EHX Polyphonic Octava Generator POG ( ligado a um switch)

KORG Pithblack tuner

Guitarduino show and tell

[Igor Stolarsky] plays in a band called 3′s & Sevens. We’d say he is the Guitarist but since he’s playing this hacked axe we probably should call him the band’s Guitarduinist. Scroll down and listen to the quick demo clip of what he can do with the hardware add-ons, then check out his video explanation of the hardware.

There are several added inputs attached to the guitar itself. The most obvious is the set of colored buttons which are a shield riding on the Arduino board itself. This attaches to his computer via a USB cable where it is controlling his MaxMSP patches. They’re out of the way and act as something of a sample looper which he can then play along with. But look at the guitar body under his strumming hand and you’ll also see a few grey patches. These, along with one long strip on the back of the neck, are pressure sensors which he actuates while playing. The result is a level of seamless integration we don’t remember seeing before. Now he just needs to move the prototype to a wireless system and he’ll be set.

If you don’t have the skills to shred like [Igor] perhaps an automatic chording device will give you a leg up.

[via GeekBoy]

Filed under: Arduino Hacks, musical hacks

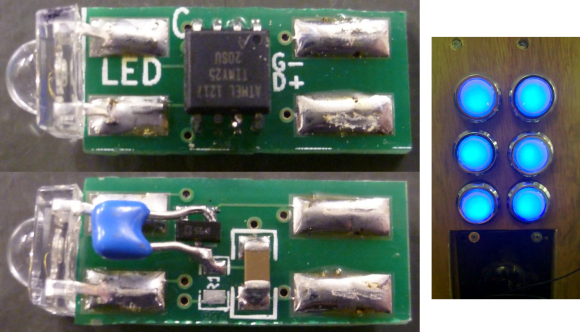

Adding RGB backlight to arcade buttons

These arcade buttons started out as illuminated buttons. But they were bulb-based which only allowed for one color. [Jon] and his friends at the Leeds Hackspace wanted to find a way to retro fit them with RGB LEDs, without changing the buttons themselves. The hack lets them replace the bulb with an addressable circuit board. The really interesting thing about it is that there is no separate interface for addressing. The communications happen on the voltage bus itself.

After deciding to include a microcontroller inside the button they built a test version using some protoboard to see if it would fit. Indeed there was enough room and the proof-of-concept led to the factory spun board which you see above. It has pads for two of the four LED module feet on either side, with the opposite end of the board fitting into the bulb receptacle. The voltage line is pulsed to send commands to the microcontroller. We’re interested in finding out exactly how that works but we’ll have to dig through the code before unlocking the secret.

Filed under: led hacks

June 2013 GNU Toolchain Update

There are lots of developments to report on this month:

* NEWLIB has a new configure option: --enable-newlib-nano-malloc

This enables the use of a small footprint memory allocator which can be useful on targets with a restricted amount of RAM available.

* NEWLIB has a new configure option: --disable-newlib-unbuf-stream-opt

This disables the unbuffered stream optimizations in newlib, which makes functions like fprintf() smaller and use less stack, but it also means that functions like ungetc() are slower and more complicated.

* The BFD library in the BINUTILS now has support for debug sections placed into alternative files via the use of the DWZ compression program.

* The MIPS port of the GAS assembler supports a new command line option: --relax-branch

This enables the automatic replacement of out of range branch instructions with longer alternatives. This feature is not enabled by default because the expander will not always choose an optimal branch instruction. If the assembler source code has been created by GCC then there is no need for this feature as GCC will have already selected the correct size branch instructions to use.

* GCC and G++ support a new command line option: -fcilkplus

This enables the use of Cilk Plus language extension features for C and C++. This was created by Intel as a way of adding support for data and code parallelism to C and C++ programs. It does this by adding new keywords and functions to the language that are designed to allow the compiler to take advantage of any parallel processing features available in the target architecture.

Detailed information about Cilk Plus can be found at http://www.cilkplus.org. The present implementation follows ABI version 0.9.

* The ARM port of GCC supports a new command line option: -mrestrict-it

This restricts the generation of IT blocks to conform to the rules of ARMv8. IT blocks can only contain a single 16-bit instruction from a select set of instructions. This option is on by default for ARMv8 Thumb mode.

* The I386 port of GCC supports two new variants for the -march, -mcpu and -mtune options: core-avx2 and slm

* The MIPS port of GCC supports a new command line option: -meva

This enables the use of the MIPS Enhanced Virtual Addressing instructions.

* The PowerPC port of GCC supports a whole set of new command line options:

-mcrypto

Enables the use of the built-in functions that allow direct access to the cryptographic instructions that were added in version 2.07 of the PowerPC ISA.

-mdirect-move

Generates code that uses the instructions to move data between the general purpose registers and the vector/scalar registers that were added in version 2.07 of the PowerPC ISA.

-mpower8-fusion

Generates code that keeps some integer operations adjacent so that the instructions can be fused together on power8 and later processors.

-mpower8-vector

Generates code that uses the vector and scalar instructions that were added in version 2.07 of the PowerPC ISA. Also enables the use of built-in functions that allow more direct access to the vector instructions.

-mquad-memory

Generates code that uses the quad word memory instructions. This option requires use of 64-bit mode.

* The SPARC port of GCC supports a new command line option: -mfix-ut699

Enables the documented workarounds for the floating-point errata of the UT699 processor.

Cheers

Nick

LLVM 3.3 Released!

LLVM 3.3 is a big release: it adds new targets for the AArch64 and AMD R600 GPU architectures, adds support for IBM's z/Architecture S390 systems, and major enhancements for the PowerPC backend (including support for PowerPC 2.04/2.05/2.06 instructions, and an integrated assembler) and MIPS targets.

Performance of code generated by LLVM 3.3 is substantially improved: the auto-vectorizer produces much better code in many cases and is on by default at -O3, a new SLP vectorizer is available, and many general improvements landed in this release. Independent evaluations show that LLVM 3.3's performance exceeds that of LLVM 3.2 and of its primary competition on many benchmarks.

3.3 is also a major milestone for the Clang frontend: it is now fully C++'11 feature complete. At this point, Clang is the only compiler to support the full C++'11 standard, including important C++'11 library features like std::regex. Clang now supports Unicode characters in identifiers, the Clang Static Analyzer supports several new checkers and can perform interprocedural analysis across C++ constructor/destructor boundaries, and Clang even has a nice "C++'11 Migrator" tool to help upgrade code to use C++'11 features and a "Clang Format" tool that plugs into vim and emacs (among others) to auto-format your code.

LLVM 3.3 is the result of an incredible number of people working together over the last six months, but this release would not be possible without our volunteer release team! Thanks to Bill Wendling for shepherding the release, and to Ben Pope, Dimitry Andric, Nikola Smiljanic, Renato Golin, Duncan Sands, Arnaud A. de Grandmaison, Sebastian Dreßler, Sylvestre Ledru, Pawel Worach, Tom Stellard, Kevin Kim, and Erik Verbruggen for all of their contributions pulling the release together.

If you have questions or comments about this release, please contact the LLVMdev mailing list! Onward to LLVM 3.4!

Sanders: A picture is worth a thousand words

Van den Oever: Is that really the source code for this software?

ISA Aging: A X86 case study

Bruno Cardoso LopesTalking about the last paper I've presented and a quick review about ISCA'13

Last sunday (23 Jun) in Tel Aviv, Israel, we presented the paper ISA Aging: A X86 case study in the WIVOSCA 2013, the Seventh Annual Workshop on the Interaction amongst Virtualization, Operating Systems and Computer Architecture, inside ISCA’13 - 40th International Symposium on Computer Architecture. The workshop was great, thanks to the organization held by Girish Venkatasubramanian and James Poe. Bellow the abstract:

Microprocessor designers such as Intel and AMD implement old instruction sets at their modern processors to ensure backward compatibility with legacy code. In addition to old back-ward compatibility instructions, new extensions are constantly introduced to add functionalities. In this way, the size of the IA-32 ISA is growing at a fast pace, reaching almost 1300 different instructions in 2013 with the introduction of AVX2 and FMA3 by Haswell. Increasing the size of the ISA impacts both hardware and software: it costs a complex microprocessor front- end design, which requires more silicon area, consumes more energy and demands more hardware debugging efforts; it also hinders software performance, since in IA-32 newer instructions are bigger and take up more space in the instruction cache. In this work, after analyzing x86 code from 3 different Windows versions and its respective contemporary applications plus 3 Linux distributions, from 1995 to 2012, we found that up to 30 classes of instructions get unused with time in these software. Should modern x86 processors sacrifice efficiency to provide strict conformance with old software from 30 years ago? Our results show that many old instructions may be truly retired.

Slides for the presentation can be downloaded here. Besides WIVOSCA, the AMAS-BT workshop on Binary Translation also had great talks. Moving from the workshops to the ISCA conference itself, there was an amazing talk entitled DNA-based Molecular Architecture with Spatially Localized Components, which totally twisted my mind regarding the emerging technologies topic. The conference isn’t over yet and I hope to attend more break-through research talks in the next few days.

Vibrant Emotionally Charged Paintings of Expressive Women

Polish artist Anna Bocek's figurative paintings feature vibrant and energetic portraits of women. The painter's subjects are filled with emotion, communicated through facial expressions, body postures, and Bocek's brilliant color palette. Each portrait features a rich array of color, both in the background and on the subject, that adds to its evocative liveliness.

Strokes of color parade through every piece by the artist. The range of vibrant hues can be found in unexpected areas, adding an artistically dramatic element to her work. Bocek says, “My inspiration for painting is the theater, both as artist and viewer. I am drawn to the humanity of the characters living on stage, rather than the physical space of the theater itself. I am fascinated by the gestures, grimaces and emotions that convey the depth of our human spirit.”

Anna Bocek website

via [Empty Kingdom]

FPGA plays Mario like a champ

This isn’t an FPGA emulating Mario Bros., it’s an FPGA playing the game by analyzing the video and sending controller commands. It’s a final project for an engineering course. The ECE5760 Advanced FPGA course over at Cornell University that always provides entertainment for us every time the final projects are due.

Developed by team members [Jeremy Blum], [Jason Wright], and [Sima Mitra], the video parsing is a hack. To get things working they converted the NES’s 240p video signal to VGA. This resulted in a rolling frame show in the demo video. It also messes with the aspect ratio and causes a few other headaches but the FPGA still manages to interpret the image correctly.

Look closely at the screen capture above and you’ll see some stuff that shouldn’t be there. The team developed a set of tests used to determine obstacles in Mario’s way. The red lines signify blocks he will have to jump over. This also works for pits that he needs to avoid, with a different set of tests to detect moving enemies. Once it knows what to do the FPGA emulates the controller signals necessary, pushing them to the vintage gaming console to see him safely to the end of the first level.

We think this is more hard-core than some other autonomous Mario playing hacks just because it patches into the original console hardware instead of using an emulator.

Filed under: FPGA

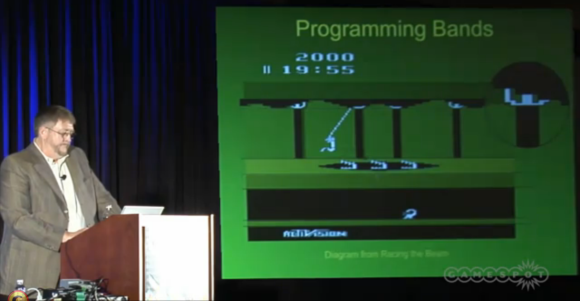

Retrotechtacular: How I wrote Pitfall for the Atari 2600

This week we’re taking another departure from the ordinarily campy videos featured in the Retrotechtacular section. This time around the video is only two years old, but the subject matter is from the early 1980′s. [David Crane], designer of Pitfall for the Atari 2600 gave a talk at the 2011 Game Developer’s Conference. His 38-minute presentation rounds up to a full hour with the Q&A afterwards. It’s a bit dry to start, but he hits his stride about half way through and it’s chock-full of juicy morsels about the way things used to be.

[David] wrote the game for Activision, a company that was started after game designers left Atari having been told they were no more important than assembly line workers that assembled the actual cartridges. We wonder if any heads rolled at Atari once Pitfall had spent 64-weeks as the number one worldwide selling game?

This was a developer’s panel so you can bet the video below digs deep into coding challenges. Frame buffer? No way! The 2600 could only pump out 160 pixels at once; a single TV scan line. The programs were hopelessly synced with the TV refresh rate, and were even limited on how many things could be drawn within a single scan line. For us the most interesting part is near the end when [David] describes how the set of game screens are nothing more than a pseudo-random number generator with a carefully chosen seed. But then again, the recollection of hand optimizating the code to fit a 6k game on a 4k ROM is equally compelling.

If you like this you should take a look at an effort to fix coding glitches in Atari games.

[via Reddit]

Filed under: Software Development, software hacks

Terra: A low-level counterpart to Lua

A very interesting project developed by Zachary DeVito et al at Stanford University:

Terra is a new low-level system programming language that is designed to interoperate seamlessly with the Lua programming language:

-- This top-level code is plain Lua code. print("Hello, Lua!") -- Terra is backwards compatible with C -- we'll use C's io library in our example. C = terralib.includec("stdio.h") -- The keyword 'terra' introduces -- a new Terra function. terra hello(argc : int, argv : &rawstring) -- Here we call a C function from Terra C.printf("Hello, Terra!\n") return 0 end -- You can call Terra functions directly from Lua hello(0,nil) -- Or, you can save them to disk as executables or .o -- files and link them into existing programs terralib.saveobj("helloterra",{ main = hello })Like C, Terra is a simple, statically-typed, compiled language with manual memory management. But unlike C, it is designed from the beginning to interoperate with Lua. Terra functions are first-class Lua values created using the terra keyword. When needed they are JIT-compiled to machine code.

Seems as if the target use case is high-performance computing. The team has also released a related paper, titled Terra: A Multi-Stage Language for High-Performance Computing:

High-performance computing applications, such as auto-tuners and domain-specific languages, rely on generative programming techniques to achieve high performance and portability. However, these systems are often implemented in multiple disparate languages and perform code generation in a separate process from program execution, making certain optimizations difficult to engineer. We leverage a popular scripting language, Lua, to stage the execution of a novel low-level language, Terra. Users can implement optimizations in the high-level language, and use built-in constructs to generate and execute high-performance Terra code. To simplify meta-programming, Lua and Terra share the same lexical environment, but, to ensure performance, Terra code can execute independently of Lua’s runtime. We evaluate our design by reimplementing existing multi-language systems entirely in Terra. Our Terra-based auto-tuner for BLAS routines performs within 20% of ATLAS, and our DSL for stencil computations runs 2.3x faster than hand-written C.

Python will have enums in 3.4!

After months of intensive discussion (more than a 1000 emails in dozens of threads spread over two mailing lists, and a couple of hundred additional private emails), PEP 435 has been accepted and Python will finally have an enumeration type in 3.4!

The discussion and decision process has been long and arduous, but eventually very positive. A collective brain is certainly better than any single one; the final proposal is better in a number of ways than the initial one, and the vast majority of Python core developers now feel good about it (give or take a couple of very minor issues).

I’ve been told enums have been debated on Python development lists for years. There’s at least one earlier PEP (354) that’s been discussed and rejected in 2005.

I think part of the success of the current attempt can be attributed to the advances in metaclasses that has been made in the past few releases (3.x). These advances allow very nice syntax of enum definitions that provides a lot of convenient features for free. I tried to find interesting examples of metaclasses in the standard library in 2011; Once the enum gets pushed I’ll have a much better example ![]()

Cognitive Architectures: A Way Forward for the Psychology of Programming

Michael Hansen presents the ACT-R cognitive architecture, a simulation framework for psychological models, showing how it could be used to measure the impact of various programming paradigms.

This describes an interesting attempt to formalize comparison of programming languages from point of view of cognitive psychology. There are also some some interesting references from the presentation.

Deloget: The SoC GPU driver interview

Hijacking airplanes with an Android phone (Help Net Security)

Troll Booth

Testing libc++ with Address Sanitizer

I've been running the libc++ tests off and on for a while. It's a quite extensive test suite, but I wondered if there were any bugs that the test suite was not uncovering. In the upcoming clang 3.3, there is a new feature named Address Sanitizer which inserts a bunch of runtime checks into your executable to see if there are any "out of bounds" reads and writes to memory.

In the back of my head, I've always thought that it would be nice to be able to say that libc++ was "ASan clean" (i.e, passed all of the test suite when running with Address Sanitizer).

So I decided to do that.

[ All of this work was done on Mac OS X 10.8.2/3 ]

How to run the tests:

There's a script for running the tests. It's calledtestit. $ cd $LLVM/libcxx/test ; ./testit

Running the tests with Address Sanitizer

$ cd $LLVM/libcxx/test ; CC=/path/to/tot/clang++ OPTIONS= "-std=c++11 -stdlib=libc++ -fsanitize=address" ./testit

What are the failures?

- In 11 tests, Address Sanitizer detected a one-byte write outside a heap block. All of these involve iostreams. I created a small test program that ASan also fires on, and sent it to Howard Hinnant (who wrote most of libc++), and he found a place where he was allocating a zero-byte buffer by mistake. One bug, multiple failures. He fixed this in revision 177452.

- 2 tests for std::random were failing. This turned out to be an off-by-one error in the test code, not in libc++. I fixed these in revisions 177355 and 177464.

- Address Sanitizer detected memory allocations failing in 4 cases. This is expected, since some of the tests are testing the memory allocation system of libc++. However, it appears that ASan does not call the user-supplied

new_handlerwhen memory allocation fails (and may not throwstd::bad_alloc, ether). I have filed PR15544 to track this issue. - 25 cases are failing where the program is failing to load, due to a missing symbol. This is most commonly

std::__1::__get_sp_mut(void const *), but there are a couple others. Howard says that this was added to libc++ after 10.8 shipped, so it's not in the dylib in /usr/lib. If the tests are run with a copy of libc++ built from source, they pass. - There are the 12 cases that were failing before enabling Address Sanitizer.

$ cd $LLVM/libcxx/test ; DYLD_LIBRARY_PATH=$LLVM/libcxx/lib CC=/path/to/tot/clang++ OPTIONS= "-std=c++11 -stdlib=libc++ -fsanitize=address" ./testit

- The 4 failures that have to do with memory allocation failures.

- The 12 failures that we started with.

Conclusion

I'm glad to see that there were so few problems in the libc++ code. It's a fundamental building block for applications on Mac OS X (and, as llvm becomes more popular, other systems). And now it's better than it was when we started this exercise.However, we did find a couple bugs in the test suite, and one heap-smashing bug in libc++. We also found a limitation in Address Sanitizer, too - which the developers are working on addressing.Instruction Relationship Framework in LLVM

Motivation:

The motivation for this feature stemmed from the Hexagon backend. Much like other processors, Hexagon provides multiple variations for many instructions. It is a common requirement in machine instruction passes to switch between various formats of the same instruction. For example, consider an Add instruction with predicated true (Add_pt) and predicated false (Add_pf) forms. Let's assume that a non-predicated Add instruction is selected during target lowering. However, during if-conversion, the optimization pass might decide to change the non-predicated Add into the predicated true Add_pt form. These transformations require a framework to relate non-predicated forms to the respective predicated forms. In the absence of such a framework, this transformation is typically achieved using large switch cases. There are many deficiencies in using a switch case based approach. The manual implementation of switch case clauses requires a very high maintenance cost. It also results in lost optimization opportunities due to incomplete implementation of switch cases. The lack of a relationship model resulted in around 15% of Hexagon backend code dedicated to switch cases with several of those functions growing to over thousands of lines of code.This problem inspired us to explore some alternatives. We started to look for a framework that was easy to maintain, flexible, scalable, and less error prone. After some initial discussions and brainstorming in the Hexagon group, we decided to modify TableGen to express instruction relations. The initial design was submitted to the LLVM-dev mailing list for review. Jakob Stoklund gave valuable suggestions and helped with the design of the relationship framework. The idea was to add a query language that can be used to define different kind of relationships between instructions. TableGen was extended to parse relationship models. It uses the information to construct tables which are queried to determine new opcodes corresponding to a relationship. The Hexagon backend relies heavily on the relationship framework and it has significantly improved the code quality of our target.

Before getting into the implementation details, let's consider an API that takes an instruction opcode as input and returns its predicated true/false form. We'll first look at the switch-case based solution and then compare it with the relationship based implementation.

short getPredicatedTrue(short opcode) {

switch (opcode) {

default:

return -1;

case Hexagon::Add:

return Hexagon::Add_pt;

case Hexagon::Sub:

return Hexagon::Sub_pt;

case Hexagon::And:

return Hexagon::And_pt;

case Hexagon::Or:

return Hexagon::Or_pt;

case ... :

return ...

}

short getPredicatedFalse(short opcode) {

switch (opcode) {

default:

return -1;

case Hexagon::Add:

return Hexagon::Add_pf;

case Hexagon::Sub:

return Hexagon::Sub_pf;

case Hexagon::And:

return Hexagon::And_pf;

case Hexagon::Or:

return Hexagon::Or_pf;

case ... :

return ...

}

short getPredicatedOpcode(short opcode, bool predSense) {

return predSense ? getPredicatedTrue(opcode)

: getPredicatedFlase(opcode);

}

The relationship framework offers a very systematic solution to this problem. It requires instructions to model their attributes and categorize their groups. For instance, a field called 'PredSense' can be used to record whether an instruction is predicated or not and its sense of predication. Each instruction in the group has to be unique such that no two instructions can share all the same attributes. There must be at least one field with different value. The instruction groups are modeled by assigning a common base name to all the instructions in the group. One of the biggest advantages of this approach is that, by modeling these attributes and groups once, we can define multiple relationships with very little effort.

With the relationship framework, the getPredicatedOpcode API can be implemented as below:

short getPredicatedOpcode(short opcode, bool predSense) {

return predSense ? getPredicated(opcode, PredSenseTrue)

: getPredicated(opcode, PredSenseFalse);

}

Architecture:

The entire framework is driven by a class called InstrMapping. The TableGen back-end has been extended to include a new parser for the relationship models implemented using InstrMapping class. Any relationship model must derive from this class and assign all the class members to the appropriate values. This is how the class looks like:

class InstrMapping {

// Used to reduce search space only to the instructions using this relation model

string FilterClass;

// List of fields/attributes that should be same for all the instructions in

// a row of the relation table. Think of this as a set of properties shared

// by all the instructions related by this relationship.

list RowFields = [];

// List of fields/attributes that are same for all the instructions

// in a column of the relation table.

list ColFields = [];

// Values for the fields/attributes listed in 'ColFields' corresponding to

// the key instruction. This is the instruction that will be transformed

// using this relation model.

list KeyCol = [];

// List of values for the fields/attributes listed in 'ColFields', one for

// each column in the relation table. These are the instructions a key

// instruction will be transformed into.

list > ValueCols = [];

}

def getPredicated : InstrMapping {

// Choose a FilterClass that is used as a base class for all the instructions modeling

// this relationship. This is done to reduce the search space only to these set of instructions.

let FilterClass = "PredRel";

// Instructions with same values for all the fields in RowFields form a row in the resulting

// relation table.

// For example, if we want to relate 'Add' (non-predicated) with 'Add_pt'

// (predicated true) and 'Add_pf' (predicated false), then all 3

// instructions need to have a common base name, i.e., same value for BaseOpcode here. It can be

// any unique value (Ex: XYZ) and should not be shared with any other instruction not related to 'Add'.

let RowFields = ["BaseOpcode"];

// List of attributes that can be used to define key and column instructions for a relation.

// Here, key instruction is passed as an argument to the function used for querying relation tables.

// Column instructions are the instructions they (key) can transform into.

//

// Here, we choose 'PredSense' as ColFields since this is the unique attribute of the key

// (non-predicated) and column (true/false) instructions involved in this relationship model.

let ColFields = ["PredSense"];

// The key column contains non-predicated instructions.

let KeyCol = ["none"];

// Two value columns - first column contains instructions with PredSense=true while the second

// column has instructions with PredSense=false.

let ValueCols = [["true"], ["false"]];

}

multiclass ALU32_Pred {

let PredSense = !if(PredNot, "false", "true"), isPredicated = 1 in

def NAME : ALU32_rr<(outs IntRegs:$dst),

(ins PredRegs:$src1, IntRegs:$src2, IntRegs: $src3),

!if(PredNot, "if (!$src1)", "if ($src1)")#

" $dst = "#mnemonic#"($src2, $src3)",

[]>;

}

multiclass ALU32_base {

let BaseOpcode = BaseOp in {

let isPredicable = 1 in

def NAME : ALU32_rr<(outs IntRegs:$dst),

(ins IntRegs:$src1, IntRegs:$src2),

"$dst = "#mnemonic#"($src1, $src2)",

[(set (i32 IntRegs:$dst), (OpNode (i32 IntRegs:$src1),

(i32 IntRegs:$src2)))]>;

defm pt : ALU32_Pred; // Predicate true

defm pf : ALU32_Pred; // Predicate false

}

}

let isCommutable = 1 in {

defm Add : ALU32_base<"add", "ADD", add>, PredRel;

defm And : ALU32_base<"and", "AND", and>, PredRel;

defm Xor: ALU32_base<"xor", "XOR", xor>, PredRel;

defm Or : ALU32_base<"or", "OR", or>, PredRel;

}

defm Sub : ALU32_base<"sub", "SUB", sub>, PredRel;

With the help of this extra information, TableGen is able to construct the following API. It offers the same functionality as switch-case based approach and significantly reduces the maintenance overhead:

int getPredicated(uint16_t Opcode, enum PredSense inPredSense) {

static const uint16_t getPredicatedTable[][3] = {

{ Hexagon::Add, Hexagon::Add_pt, Hexagon::Add_pf },

{ Hexagon::And, Hexagon::And_pt, Hexagon::And_pf },

{ Hexagon::Or, Hexagon::Or_pt, Hexagon::Or_pf },

{ Hexagon::Sub, Hexagon::Sub_pt, Hexagon::Sub_pf },

{ Hexagon::Xor, Hexagon::Xor_pt, Hexagon::Xor_pf },

}; // End of getPredicatedTable

unsigned mid;

unsigned start = 0;

unsigned end = 5;

while (start < end) {

mid = start + (end - start)/2;

if (Opcode == getPredicatedTable[mid][0]) {

break;

}

if (Opcode < getPredicatedTable[mid][0])

end = mid;

else

start = mid + 1;

}

if (start == end)

return -1; // Instruction doesn't exist in this table.

if (inPredSense == PredSense_true)

return getPredOpcodeTable[mid][1];

if (inPredSense == PredSense_false)

return getPredicatedTable[mid][2];

return -1;

}

//===------------------------------------------------------------------===//

// Generate mapping table to relate predicate-true instructions with their

// predicate-false forms

//

def getFalsePredOpcode : InstrMapping {

let FilterClass = "PredRel";

let RowFields = ["BaseOpcode"];

let ColFields = ["PredSense"];

let KeyCol = ["true"];

let ValueCols = [["false"]];

}

Conclusion:

I hope this article succeeds in providing some useful information about the framework. The Hexagon backend makes extensive use of this feature and can be used as a reference for getting started on relationship framework.Dissecando timbres: Little Sister – Queens Of The Stone Age

A primeira vez que me apaixonei em um timbre foi em 2008, enquanto jogava Midnight Club 3: DUB. O rock era um pouco imaturo na minha cabeça, mas mesmo assim caí na tentação de buscar pela OST do jogo por um “sonzinho” desconhecido. O timbre em questão estava no solo de “Little Sister”, dos americanos do Queens Of The Stone Age. ( Veja lista de seus pedais)

Não era minha primeira vez com o som de Palm Desert. Na verdade o QOTSA havia me prendido a atenção com seu premiado clipe “Go With The Flow”, em 2007, no “MTV Lab Toca Aí” (durante a boa safra da MTV Brasil).

Em 2012 me interessei ainda mais pela banda, e me aproximei mais da história de seu líder, Josh Homme. Aprendi o significado da palavra “timbrar” ouvindo a discografia do Queens, os mistérios envolvendo seus equipamentos, guitarras, etc. Todo este segredo envolvendo seu pedalboard me instigou ainda mais pelo estudo dos pedais/timbres.

Vamos aos trabalhos…

A Guitarra

(http://www.motorave.com/belaire/) Custa US$ 4650 e demora 18 meses para ficar pronta

A canção faz parte do álbum Lullabies To Paralyze, particularmente, este álbum foi gravado quase totalmente por guitarras semi-acústicas afinadas em E. Em algumas apresentações, Josh também utiliza uma Epiphone Dot e uma raríssima Teisco ’68 V-2. No clipe oficial, que pode ser visto abaixo, Homme utiliza uma MotorAve BelAir.

Para os versos e refrão, use o captador da ponte com o tone zerado.

Para o solo, use o captador do braço com o tone no máximo.

O Amplificador

Em uma recente entrevista ao programa Guitarings, no Youtube, ele comenta que seu amplificador favorito e usado é o Ampeg VT40, que infelizmente saiu de linha há algumas décadas.

Para quem ainda não o conhece, o Ampeg VT40 é um amplificador de baixo valvulado, que é regulado da seguinte maneira:

Bass: 3 (o’clock) | Middle: máximo | Treeble: 11 (o’clock)

Pedais

Após anos de pesquisa e um bom dinheiro investido, Josh tenta manter segredo sobre seu equipamento, mais ainda no que toca ao seu pedalboard. Nas apresentações do QOTSA podemos perceber 2 pedalboards bem completos

Porém, no clipe oficial da música, apenas 2 pedais avulsos estão “estacionados” á frente de seu frontman.

O pedal à direita de Homme é o descontinuado Matchless Hotbox, um pre-amp com duas válvulas 12AX7 e 5 controles. A configuração dele, para esta música, é baseada em pouco ganho, muito volume e pouquíssimo treeble. Do lado direito, as settings originais do pedal.

Já do lado esquerdo de Josh, temos um pequeno pedal com 3 knobs arredondados. Analisando o histórico de Josh, temos 2 pedais que possuem estas características:

O primeiro suspeito é o Fulltone Ultimate Octave, a distorção/fuzz principal de Josh, com volume médio, tone no 0 e fuzz em 11 o’clock. O segundo é o famoso Big Muff, um dos pedais de distorção mais usados no mundo, o qual geralmente é associado ao timbre de Josh Homme. Minhas apostas estão no Fulltone, porém, o Muff bem regulado também pode alcançar um timbre similar.

Em breve o QOTSA vai estrear novo disco e com ele um novo pedalboard deva estar surgindo para a gente poder postar aqui para vocês

Após tanto tempo de pesquisa, sabemos muito, mas não tudo sobre os misteriosos timbres do Ginger Elvis. O que nos mostra o quão importante é a autenticidade do músico, o modo com que devemos conhecer nosso equipamento, e a importância da dedicação à nossa paixão pela música. Até a próxima.

March 2013 GNU Toolchain Update

It has been a very quiet month this month. The GCC sources are still closed to new features, pending the creation of the 4.8 branch.

The binutils sources now have support for a 64-bit cygwin target called x86_64-cygwin. Patches for GCC are currently under development and a full gcc port should be ready soon.

There is a new function attribute for GCC called no_sanitize_address which tells the compiler that it should not instrument memory accesses in that function when compiling with the -fsanitize=address option enabled.

One new, target specific, feature that has made it into GCC is support for x86 hardware transactional memory. This is done via intrinsics for Restricted Transactional Memory (RTM) and extensions to the memory model for Hardware Lock Elision (HLE).

For HLE two new flags can be used to mark a lock as using hardware elision:

__ATOMIC_HLE_ACQUIRE

Starts lock elision on a lock variable.

The memory model in use must be __ATOMIC_ACQUIRE or stronger.

__ATOMIC_HLE_RELEASE

Ends lock elision on a lock variable.

The memory model must be __ATOMIC_RELEASE or stronger.

So for example:

while (__atomic_exchange_n (& lockvar, 1, __ATOMIC_ACQUIRE | __ATOMIC_HLE_ACQUIRE))

_mm_pause ();

[do stuff with the lock acquired]

__atomic_clear (& lockvar, __ATOMIC_RELEASE | __ATOMIC_HLE_RELEASE);

The new intrinsics that support Restricted Transactional Memory are:

unsigned _xbegin (void)

This tries to start a transaction. It returns _XBEGIN_STARTED if

it succeeds, or a status value indicating why the transaction

could not be started if it fails.

void _xend (void)

Commits the current transaction. When no transaction is active

this will cause a fault. All memory side effects of the

transactions will become visible to other threads in an atomic

manner.

int _xtest (void)

Returns a non-zero value when a transaction is currently active,

and otherwise zero.

void _xabort (unsigned char status)

Aborts the current transaction. When no transaction is active

this is a no-op. The parameter "status" is included in the return

value of any _xbegin() call that is aborted by this function.

Here is a small example:

if ((status = _xbegin ()) == _XBEGIN_STARTED)

{

[do stuff]

_xend ();

}

else

{

[examine status to see why the transaction failed and possibly retry].

}

Cheers

Nick

Four Books on Debugging

It often seems like the ability to debug complex problems is not distributed very evenly among programmers. No doubt there’s some truth to this, but also people can become better over time. Although the main way to improve is through experience, it’s also useful to keep the bigger picture in mind by listening to what other people have to say about the subject. This post discusses four books about debugging that treat it as a generic process, as opposed to being about Visual Studio or something. I read them in order of increasing size.

Debugging, by David Agans

Debugging, by David Agans

At 175 pages and less than $15, this is a book everyone ought to own. For example, Alex says:

Agans actually sort of says everything I believe about debugging, just tersely and without much nod in the direction of tools.

This is exactly right.

As the subtitle says, this book is structured around nine rules. I wouldn’t exactly say that following these rules makes debugging easy, but it’s definitely the case that ignoring them will make debugging a lot harder. Each rule is explained using real-world examples and is broken down into sub-rules. This is great material and reading the whole thing only takes a few hours. I particularly liked the chapter containing some longer debugging stories with specific notes about where each rule came into play (or should have). Another nice chapter covers debugging remotely—for example from the help desk. Us teachers end up doing quite a bit of this kind of debugging, often late at night before an assignment is due. It truly is a difficult skill that is distinct from hands-on debugging.

This material is not specifically about computer programming, but rather covers debugging as a generic process for identifying the root cause of a broad category of engineering problems including stalled car engines and leaking roofs. However, most of his examples come from embedded computer systems. Unlike the other three books, there’s no specific advice here for dealing with specific tools, programming languages, or even programming-specific problems such as concurrency errors.

Debug It! by Paul Butcher

Butcher’s book contains a large amount of great material not found in Agans, such as specific advice about version control systems, logging frameworks, mocks and stubs, and release engineering. On the other hand, I felt that Butcher did not present the actual process of debugging as cleanly or clearly, possibly due to the lack of a clear separation between the core intellectual activity and the incidental features of dealing with particular software systems.

Why Programs Fail, by Andreas Zeller

Agans and Butcher approach debugging from the point of view of the practitioner. Zeller, on the other hand, approaches the topic both as a practitioner and as a researcher. This book, like the previous two, contains a solid explanation of the core debugging concepts and skills. In addition, we get an extensive list of tools and techniques surrounding the actual practice of debugging. A fair bit of this builds upon the kind of knowledge that we’d expect someone with a CS degree to have, especially from a compilers course. Absorbing this book requires a lot more time than Agans or Butcher does.

Debugging by Thinking, by Robert Metzger

Debugging by Thinking, by Robert Metzger

I have to admit, after reading three books about debugging I was about ready to quit, and at 567 pages Metzger is the longest of the lot. The subtitle “a multidisciplinary approach” refers to six styles of thinking around which the book is structured:

- the way of the detective is about deducing where the culprit is by following a trail of clues

- the way of the mathematician observes that useful parallels can be drawn between methods for debugging and methods for creating mathematical proofs

- the way of the safety expert treats bugs as serious failures and attempts to backtrack the sequence of events that made the bug possible, in order to prevent the same problem from occurring again

- the way of the psychologist analyzes the kinds of flawed thinking that leads people to implement bugs: Was the developer unaware of a key fact? Did she get interrupted while implementing a critical function? Etc.

- the way of the computer scientist embraces software tools and also mathematical tools such as formal grammars

- the way of the engineer is mainly concerned with the process of creating bug-free software: specification, testing, etc.

I was amused that this seemingly exhaustive list omits my favorite debugging analogy: the way of the scientist, where we formulate hypotheses and test them with experiments (Metzger does discuss hypothesis testing, but the material is split across the detective and mathematician). Some of these chapters—the ways of the mathematician, detective, and psychologist in particular—are very strong, while others are weaker. Interleaved with the “six ways” are a couple of lengthy case studies where buggy pieces of code are iteratively improved.

Summary

All of these books are good. Each contains useful information and each of them is worth owning. On the other hand, you don’t need all four. Here are the tradeoffs:

- Agans’ book is The Prince or The Art of War for debugging: short and sweet and sometimes profound, but not usually containing the most specific advice for your particular situation. It is most directly targeted toward people who work at the hardware/software boundary.

- Butcher’s book is not too long and contains considerably more information that is specific to software debugging than Agans.

- Zeller’s book has a strong computer science focus and it is most helpful for understanding debugging methods and tools, instead of just building solid debugging skills.

- Metzger’s book is the most detailed and specific about the various mental tools that one can use to attack software debugging problems.

I’m interested to hear feedback. Also, let me know if you have a favorite book that I left out (again, I’m most interested in language- and system-independent debugging).

Undefined Behavior Executed by Coq

I built a version of OCaml with some instrumentation for reporting errors in using the C language’s integers. Then I used that OCaml to build the Coq proof assistant. Here’s what happens when we start Coq:

[regehr@gamow ~]$ ~/z/coq/bin/coqtop

intern.c:617:10: runtime error: left shift of 255 by 56 places cannot be represented in type 'intnat' (aka 'long')

intern.c:617:10: runtime error: left shift of negative value -1

intern.c:167:13: runtime error: left shift of 255 by 56 places cannot be represented in type 'intnat' (aka 'long')

intern.c:167:13: runtime error: left shift of negative value -1

intern.c:173:13: runtime error: left shift of 234 by 56 places cannot be represented in type 'intnat' (aka 'long')

intern.c:173:13: runtime error: left shift of negative value -22

intern.c:173:13: runtime error: left shift of negative value -363571240

interp.c:978:43: runtime error: left shift of 2 by 62 places cannot be represented in type 'long'

interp.c:1016:19: runtime error: left shift of negative value -1

interp.c:1016:12: runtime error: signed integer overflow: -9223372036854775807 + -2 cannot be represented in type 'long'

interp.c:936:14: runtime error: left shift of negative value -1

ints.c:721:48: runtime error: left shift of 1 by 63 places cannot be represented in type 'intnat' (aka 'long')

ints.c:674:48: runtime error: signed integer overflow: -9223372036854775808 - 1 cannot be represented in type 'long'

compare.c:307:10: runtime error: left shift of 1 by 63 places cannot be represented in type 'intnat' (aka 'long')

str.c:96:23: runtime error: left shift of negative value -1

compare.c:275:12: runtime error: left shift of negative value -1

interp.c:967:14: runtime error: left shift of negative value -427387904

interp.c:957:14: runtime error: left shift of negative value -4611686018

interp.c:949:14: runtime error: signed integer overflow: 31898978766825602 * 65599 cannot be represented in type 'long'

interp.c:949:14: runtime error: left shift of 8059027795813332990 by 1 places cannot be represented in type 'intnat' (aka 'long')

Welcome to Coq 8.4pl1 (February 2013)

unixsupport.c:257:20: runtime error: left shift of negative value -1

Coq

This output means that Coq---via OCaml---is executing a number of C's undefined behaviors before it even asks for any input from the user. The problem with undefined behaviors is that, according to the standard, they destroy the meaning of the program that executes them. We do not wish for Coq's meaning to be destroyed because a proof in Coq is widely considered to be a reliable indicator that the result is correct. (Also, see the update at the bottom of this post: the standalone verifier coqchk has the same problems.)

In principle all undefined behaviors are equally bad. In practice, some of them might only land us in purgatory ("Pointers that do not point into, or just beyond, the same array object are subtracted") whereas others (store to out-of-bounds array element) place us squarely into the ninth circle. To which category do the undefined behaviors above belong? To the best of my knowledge, the left shift problems are, at the moment, benign. What I mean by "benign" is that all compilers that I know of will take a technically undefined construct such as 0xffff (on a machine with 32-bit, two's complement integers) and compile it as if the arguments were unsigned, giving the intuitive result of 0xffff0000. This compilation strategy could change.

If we forget about the shift errors, we are still left with three signed integer overflows:

- -9223372036854775807 + -2

- -9223372036854775808 - 1

- 31898978766825602 * 65599

Modern C compilers are known to exploit the undefinedness of such operations in order to generate more efficient code. Here's an example where two commonly-used C compilers evaluate (INT_MAX+1) > INT_MAX to both 0 and 1 in the same program, at the same optimization level:

#include <stdio.h>

#include <limits.h>

int foo (int x) {

return (x+1) > x;

}

int main (void)

{

printf ("%d\n", (INT_MAX+1) > INT_MAX);

printf ("%d\n", foo(INT_MAX));

return 0;

}

$ gcc -w -O2 overflow.c

$ ./a.out

0

1

$ clang -O2 overflow.c

$ ./a.out

0

1

Here's a longish explanation of the reasoning that goes into this kind of behavior. One tricky thing is that the effects of integer undefined behaviors can even affect statements that precede the undefined behavior.

Realistically, what kinds of consequences can we expect from signed integer overflows that do not map to trapping instructions, using today's compilers? Basically, as the example shows, we can expect inconsistent results from the overflowing operations. In principle a compiler could do something worse than this---such as deleting the entire statement or function which contains the undefined behavior---and I would not be too surprised to see that kind of thing happen in the future. But for now, we might reasonably hope that the effects are limited to returning wrong answers.

Now we come to the important question: Is Coq's validity threatened? The short answer is that this seems unlikely. The long answer requires a bit more work.

What do I mean by "Coq's validity threatened"? Of course I am not referring to its mathematical foundations. Rather, I am asking about the possibility that the instance of Coq that is running on my machine (and similar ones running on similar machines) may produce an incorrect result because a C compiler was given a licence to kill.

Let's look at the chain of events that would be required for Coq to return an invalid result such as claiming that a theorem was proved when in fact it was not. First, it is not necessarily the case that the compiler is bright enough to exploit the undefined integer overflow and return an unexpected result. Second, an incorrect result, once produced, might never escape from the OCaml runtime. For example, maybe the overflow is in a computation that decides whether it's time to run the garbage collector, and the worst that can happen is that the error causes us to spend too much time in the GC. On the other hand, a wrong value may in fact propagate into Coq.

Let's look at the overflows in a bit more detail. The first, at line 1016 of interp.c, is in the implementation of the OFFSETINT instruction which adds a value to the accumulator. The second overflow, at line 674 of ints.c, is in a short function called caml_nativeint_sub(), which I assume performs subtraction of two machine-native integers. The third overflow, at line 949 of interp.c, is in the implementation of the MULINT instruction which, as far as I can tell, pops a value from the stack and multiplies it by the accumulator. All three of these overflows fit into a pattern I've seen many times where a higher-level language implementation uses C's signed math operators with insufficient precondition checks. In general, the intent is not to expose C's undefined semantics to programs in the higher-level language, but of course that is what happens sometimes. If any of these overflows is exploited by the compiler and returns strange results, OCaml will indeed misbehave. Thus, at present the correctness of OCaml programs such as Coq relies on a couple of things. First, we're hoping the compiler is not smart enough to exploit these overflows. Second, if a compiler is smart enough, we're counting on the fact that the resulting errors will be egregious enough that they will be noticed. That is, they won't just break Coq in subtle ways.

If these bugs are in the OCaml implementation, why am I picking on Coq? Because it makes a good example. The Coq developers have gone to significant trouble to create a system with a small trusted computing base, in order to approximate as nearly as possible the ideal of an unassailable system for producing and checking mathematical proofs. This example shows how even such a careful design might go awry if its chain of assumptions contains a weak link.

These results were obtained using OCaml 3.12.1 on a 64-bit Linux machine. The behavior of the latest OCaml from SVN is basically the same. In fact, running OCaml's test suite reveals signed overflows at 17 distinct locations, not just the three shown above; additionally, there is a division by zero and a shift by -1 bit positions. My opinion is that a solid audit of OCaml's integer operations should be performed, followed by some hopefully minor tweaking to avoid these undefined behaviors, followed by aggressive stress testing of the implementation when compiled with Clang's integer overflow checks.

UPDATE: psnively on twitter suggested something that I should have done originally, which is to look at Coqchk, the standalone proof verifier. Here's the output:

[regehr@gamow coq-8.4pl1]$ ./bin/coqchk

intern.c:617:10: runtime error: left shift of 255 by 56 places cannot be represented in type 'intnat' (aka 'long')

intern.c:617:10: runtime error: left shift of negative value -1

intern.c:167:13: runtime error: left shift of 255 by 56 places cannot be represented in type 'intnat' (aka 'long')

intern.c:167:13: runtime error: left shift of negative value -1

intern.c:173:13: runtime error: left shift of 234 by 56 places cannot be represented in type 'intnat' (aka 'long')

intern.c:173:13: runtime error: left shift of negative value -22

intern.c:173:13: runtime error: left shift of negative value -363571240

interp.c:978:43: runtime error: left shift of 2 by 62 places cannot be represented in type 'long'

interp.c:1016:19: runtime error: left shift of negative value -1

interp.c:1016:12: runtime error: signed integer overflow: -9223372036854775807 + -2 cannot be represented in type 'long'

interp.c:936:14: runtime error: left shift of negative value -1

ints.c:721:48: runtime error: left shift of 1 by 63 places cannot be represented in type 'intnat' (aka 'long')

ints.c:674:48: runtime error: signed integer overflow: -9223372036854775808 - 1 cannot be represented in type 'long'

compare.c:307:10: runtime error: left shift of 1 by 63 places cannot be represented in type 'intnat' (aka 'long')

Welcome to Chicken 8.4pl1 (February 2013)

compare.c:275:12: runtime error: left shift of negative value -1

Ordered list:

Modules were successfully checked

[regehr@gamow coq-8.4pl1]$

Game Gear vs. Game Boy

Submitted by: Unknown (via I Raff I Ruse)

Tagged: epic , gifs , game boy , fight , game gear , 90s kids , batteries Share on Facebook