La première partie de ce billet vous a permis de constituer le squelette de votre bot et de l’enregistrer sur le site de Microsoft dédié afin de définir les éléments clés qui permettront aux différents utilisateurs de communiquer avec votre bot.

La seconde partie s’est proposée de vous donner une vue d’ensemble du Bot Framework et a introduit LUIS (Language Understanding Intelligence Service).

Dans cette troisième et dernière partie de ce billet, il est temps de mettre toutes ces informations en perspective en mettant en œuvre l’ensemble des points clés abordés jusqu’à présent sur un petit projet.

Mise en œuvre et exemples : Open Data RATP

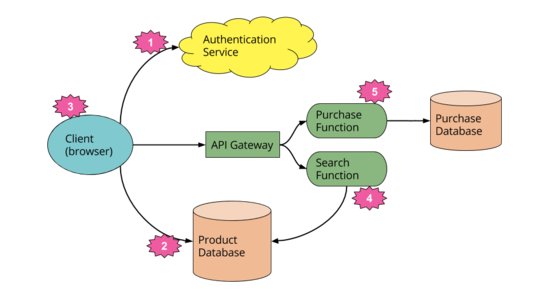

Dans toutes les grandes villes de France, un service de transport en commun est proposé et certains d’entre eux propose une grande variété de données issues de leur réseau en libre accès via l’Open Data. C’est le cas notamment de la RATP.

Nous allons donc combiner ces données « Open Data » mises à disposition par la RATP, le Bot Framework et LUIS, afin de mettre en place un bot simple permettant de demander les horaires de passages d’un type de locomotion, sur une ligne donnée et ce à un arrêt précis.

Cet exemple devrait vous procurer une bonne compréhension de la façon dont les différentes briques logicielles abordées dans les deux parties précédentes s’assemblent, et dans ce contexte, des interactions entre ces dernières.

Les données que nous allons utiliser sont disponibles ici, et plus particulièrement là.

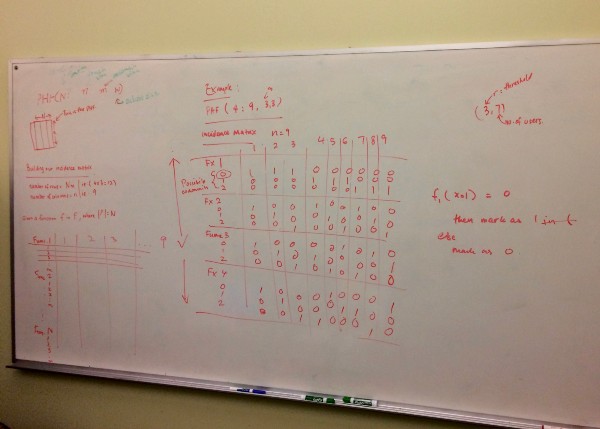

Grace à celles-ci, nous récupérons et disposons donc les informations concernant les lignes, arrêts, trajets et heures de passage pour tous les critères précédents. Les relations entre les différentes entités sont présentées/synthétisées dans le schéma ci-dessous.

Figure 1 : Interactions entre les entités fournies par le STIF ( Stif)

Le but étant de pouvoir interroger notre bot sur le prochain passage d’un moyen de transport sur une ligne et à un arrêt donné.

Mise en place du modèle LUIS

Afin de débuter notre projet, nous allons créer une application sur LUIS, et lui apprendre à reconnaitre les différentes expressions qui nous intéresses ici.

Comme nous l’avons déjà évoqué, LUIS suit un modèle d’entrainement assez simple. Il suffit de lui fournir des phrases, issues du langage naturel, et de le guider dans l’extraction des différentes variables contenues dans la phrase (les Entities).

Tout ceci s’effectue simplement via l’interface web de LUIS. Outre les phrases que vous lui fournirez, LUIS sera en mesure par la suite de vous proposer des phrases qu’il aura rencontré dans votre environnement de production afin d’enrichir son modèle.

Figure 2 : Interface générale de LUIS

Compte tenu de ces éléments, la première étape consiste donc à définir les actions que devra tenter de prédire LUIS en fonction de l’entrée fournie. Ces actions sont appelées comme mentionné précédemment des Intents dans l’interface, et vous pouvez en définir autant que vous le souhaitez !

A chaque fois qu’une phrase sera soumise à votre modèle LUIS, ce dernier émettra une probabilité pour chacune des actions que vous aurez définie au préalable.

Nous allons commencer par créer notre première action, qui définira l’action « Prochain passage ». Nommez cet Intent comme vous le souhaitez, de manière assez explicite (en gardant à l’idée que vous devrez utiliser ce nom dans votre code C#).

Cette action nécessite des paramètres qui sont :

Nous allons donc devoir définir ces paramètres, ou Entities pour LUIS. Encore une fois, vous êtes libre du nom que vous donnez à vos Entities, cependant, elles aussi seront utilisées par la suite dans le code C# du bot.

Un exemple de ce que vous devriez obtenir est présenté dans la figure suivante :

Figure 3 : Exemple de définition des Intents et Entities dans LUIS

-

TRANSPORTATION_TYPE : Le moyen de transport

-

TRANSPORTATION_ROUTE : La ligne

-

TRANSPORTATION_STOP : L’arrêt

-

TRANSPORTATION_DIRECTION : La direction de la ligne (bonus)

Ces informations devront donc être extraites directement par LUIS à partir d’une phrase.

L’étape suivante consiste à entrainer notre modèle pour qu’il commence à reconnaitre les phrases qui doivent déclencher notre action (Intent) et extraire les bonnes parties qui définissent nos paramètres.

Pour cela, vous devez fournir à LUIS des phrases (Utterrances) manuellement, ou via un outil d’importation dans l’interface web.

A la suite de quoi vous devrez définir chaque entité dans chaque phrase, ainsi que l’action correspondante :

Après quelques exemples, vous devriez voir LUIS devenir meilleur et commencer à inférer les paramètres ainsi que l’action associée.

Dans la partie droite de l’interface vous pouvez visualiser les résultats de l’entrainement du modèle, modèle dont vous pouvez forcer l’entrainement via le bouton Train situé en bas à gauche de cette même interface.

Figure 5 : Visualisation des performances du modèle

Une fois notre modèle est entrainé et prêt, nous allons récupérer les informations nécessaires à son intégration dans le Bot Framework.

Pour se faire, il nous faut récupérer l’AppID et le AppName de notre application :

Figure 6 : Récupération des informations d’identification du modèle LUIS

Intégration de LUIS dans notre application

A ce stade, notre bot ne fait que renvoyer la longueur de la chaine de caractère qu’il reçoit. Nous allons commencer à le guider en intégrant le modèle LUIS entrainé afin qu’il puisse interagir avec nous.

Commençons par créer un nouveau dossier Dialogs dans notre projet, lequel contiendra toutes les classes dérivées de Dialog. Puis, créez une classe RatpDialog laquelle aura la charge de traiter les messages entrants et de faire appel à LUIS.

Figure 7 : Architecture actuelle du projet

Votre classe RatpDialog n’est encore qu’une coquille (vide). Afin qu’elle soit compatible avec les Dialog du Bot Framework, il est donc nécessaire qu’elle hérite d’un descendant de IDialog et dans notre cas d’un LuisDialog. Ce dernier encapsule l’appel à l’API de LUIS et fournit directement le résultat de cet appel.

Pour commercer, nous allons ajouter les informations d’identification de notre modèle LUIS afin que le Bot Framework puisse aller récupérer les informations du modèle et traiter les demandes.

Pour cela, il suffit simplement de décorer notre classe dérivée de LuisDialog de la manière suivante :

Figure 8 : Informations d’identification pour l’API LUIS

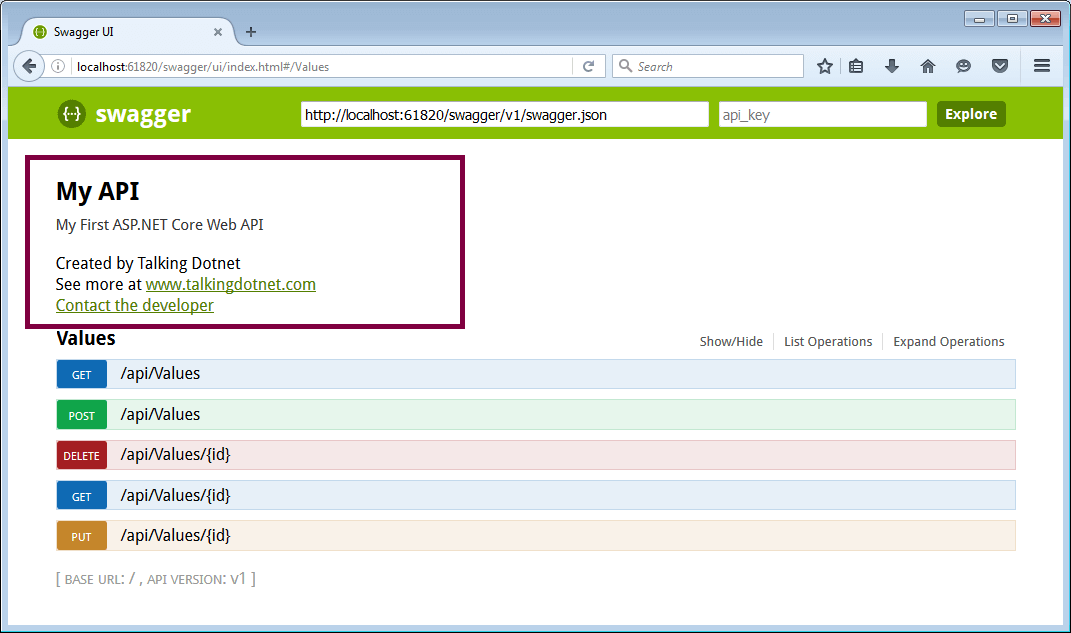

Les informations AppId et Subscription Key sont récupérables depuis l’interface web de LUIS, via le bouton App

Settings. Au cas où vous n’auriez pas de clé d’abonnement (Subscription Key), vous pouvez en ajouter une en vous rendant sur le portail Azure, et en ajoutant un nouveau service cognitif, de type LUIS, gratuitement.

Figure 9 : Création d’un service cognitif

Veillez à bien choisir Language Understanding Intelligent Service (LUIS) comment type d’API. Une fois créé, vous pourrez accéder à la clé de souscription dans l’interface de gestion du service Azure. Vous n’aurez plus qu’à coller cette dernière dans le champ Subscription Key (dans le menu App Settings) sur LUIS, et à l’ajouter.

Dans un Dialog « simple », vous devez surcharger la méthode StartAsync afin de définir la méthode qui traitera les requêtes (Vous trouverez plus d’informations ici).

Pour un LuisDialog, celle-ci est d’ores et déjà implémentée, et intègre donc :

-

L’interrogation de l’API de LUIS,

-

La conversion du résultat

-

Et l’émission de celui-ci vers le point de terminaison (méthode) spécifiée.

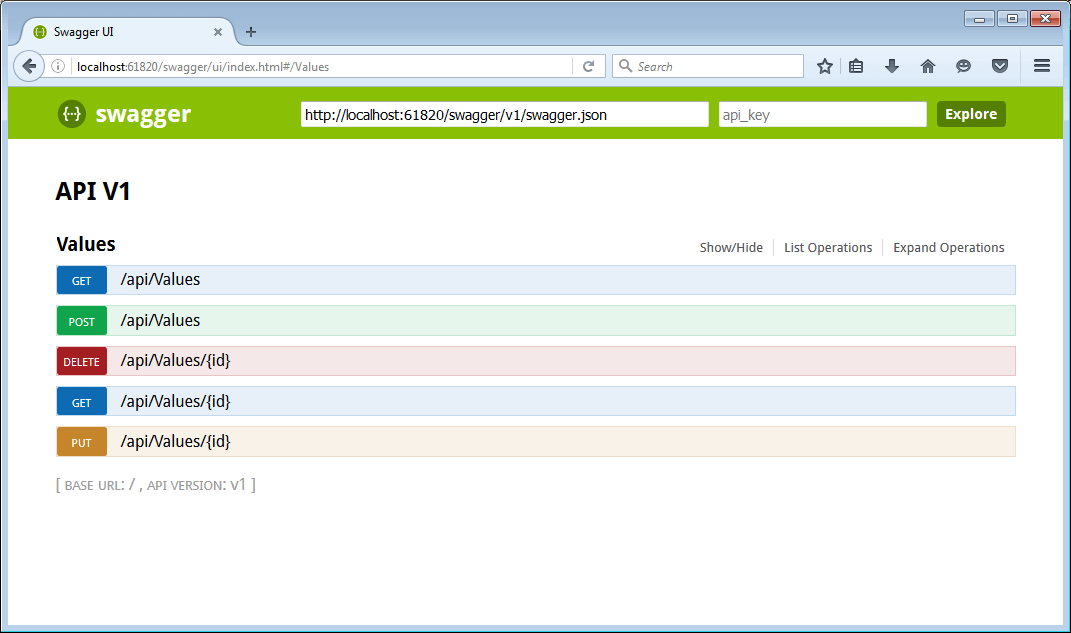

Chaque action (Intent) que vous avez défini dans votre modèle LUIS doit donc posséder un point de terminaison afin de pouvoir être traitée par le bot.

Pour déclarer un point de terminaison, il vous suffit simplement d’annoter la méthode voulue à l’aide d’un LuisIntent, avec en paramètre, le nom de l’Intent associé.

Figure 10 : Exemple de décoration d’une méthode comme point de terminaison

Comme vous pouvez le constater, cette méthode récupère un contexte global, associé à la conversation courante, ainsi qu’un objet contenant le résultat de l’appel au modèle LUIS. Cet objet fournit des accesseurs vers les Entity inférées, les probabilités pour chacun des Intent que vous pourriez avoir défini, etc.

Enfin, vous pouvez extraire les différents paramètres issus des déductions de LUIS directement dans votre méthode, tel qu’en témoigne la capture ci-dessous :

Figure 11 : Exemple d’extraction des Entities inférées par LUIS

Suite à quoi, il ne vous reste plus qu’à vous concentrer sur la partie métier de votre dialogue.

Intégration du modèle de données

Les données mises à disposition par la RATP sont fournies sous la forme de plusieurs fichiers CSV. Nous avons choisi d’importer ces données dans une base de données SQL Azure, afin de faciliter l’interrogation de celles-ci.

Afin de créer notre base de données, nous nous rendons dans le portail de gestion Azure, afin d’ajouter un service de stockage (Rubrique Données + stockage => SQL Database).

Figure 12 : Création de la base de données sur Azure

Il vous suffira alors de rentrer les informations concernant votre serveur de base de données, son nom, le nom d’utilisateur à utiliser pour l’administration, le mot de passe de celui-ci et l’emplacement de votre base.

Enfin, n’oubliez pas de spécifier le nom de la base de données à créer sur le serveur.

Figure 13 : Création d’un nouveau serveur de base de données

Sur la Figure 7 précédente, vous pouvez constater que le dossier App_Data comporte un sous-dossier ratp_data. Ce fichier n’est pas inclus dans l’archive joint à cet article ici. De fait, il s’agit simplement des données mises à disposition par le STIF sur le site de l’Open Data, lesquelles sont assez volumineuses (environ 1Go).

Le script de génération de la structure de la base, au format SQL, est quant à lui, joint à l’archive précédente.

Afin d’importer nos données, nous avons simplement utilisé le logiciel SQL Server 2016 Import/Export. Ce dernier propose la mise en place d’ETL (Extract Transform Load) simple depuis de multiples sources de données. Aucune modification particulière ayant été apportée au projet, nous ne détaillerons pas ce processus.

Il convient de noter que dans le cas où vous utiliserez un autre outil d’importation, les bases de données SQL Azure sont compatibles avec le protocole SQLServer. Vous pouvez donc aisément utiliser ce type de sortie pour votre importation.

Une fois nos données importées, nous pouvons commencer à développer notre modèle côté client. Pour se faire, nous utilisons Entity Framework, lequel va automatiquement gérer les classes nécessaires pour refléter la structure de notre base.

Dans le dossier Models, ajoutez un nouvel élément ADO.NET Entity Data Model et sélectionnez EF Designer from database.

Figure 14 : Création d’un contexte EntityFramework depuis une base de données existante

Choisissez New Connection et spécifiez Microsoft SQL Server en tant que source de données. Remplissez les informations demandées :

Cette étape va permettre à Visual Studio d’ajouter les entrées nécessaires dans le fichier de configuration, et d’initier la création des classes du modèle à partir de la structure de la base.

Une fois réalisé, vous devriez obtenir un fichier .edmx dans votre dossier Models, qui contiendra toutes les informations et classes nécessaires pour utiliser votre base de données dans le projet.

La structure générée devrait être semblable à celle-ci-dessous.

Figure 15 : Structure générée par ADO.NET

Afin de pouvoir utiliser le contexte généré pour la base de données, vous devez instancier un objet, dont le nom est celui que vous avez spécifier lors de l’ajout de la ressource ADO.NET Entity Data Model.

Par exemple, dans notre cas, nous avons choisi de nommer notre modèle « ratpbotdemodbEntities », utilisons alors ce dernier pour instancier un objet de type ratpbotdemodbEntities pour manipuler les données stockées en base.

Par commodité, nous avons décidé d’utiliser une classe outil, RatpHelper encapsulant l’interrogation de la base. Libre à vous de suivre ce modèle, ou d’utiliser directement votre Data Model votre Dialog.

Enfin, pour récupérer l’heure de prochain passage désirée, nous récupérons le moyen de transport voulu, puis les différentes lignes utilisant ce moyen de transport, en essayant de cibler un arrêt spécifique vis-à-vis de son nom. Si l’arrêt en question est trouvé, nous trions les heures de passages à cette heure par rapport à la différence entre l’heure actuelle et l’heure théorique de passage en valeur absolue. Il nous suffit alors de renvoyer la première valeur, celle dont l’heure de passage est la plus proche.

// Récupération du moyen de transport (TRAM, Métro, RER, etc.)

var mean = db.TBL_RATP_MEAN_TYPES.Where(x =>

x.MEAN_TYPE_NAME.Equals(meanName, StringComparison.InvariantCultureIgnoreCase)).First();

// Récupération des lignes utilisant ce moyen de transport

var route = mean.TBL_RATP_ROUTES.Where(r =>

r.ROUTE_NAME.Equals(lineName, StringComparison.InvariantCultureIgnoreCase));

// Recherche d'un arrêt, dont le nom correspond à celui spécifié, et dont le moyen de // transport et la ligne concorde avec les paramètres

var st = db.TBL_RATP_STOPS.Where(x =>

x.STOP_NAME.Contains(stopName)).SelectMany(x => x.TBL_RATP_STOP_TIMES);

// Si l'on trouve un arrêt de transport, on les heures de passes par rapport à la

// différence entre l'heure de passage et l'heure actuelle

// On récupère la plus proche

if (st.Count() > 0)

{

return st.Select(s => s.STOP_TIMES_DEPARTURE)

.ToList()

.Where(t => t.TotalSeconds > DateTime.Now.TimeOfDay.TotalSeconds)

.OrderBy(t => Math.Abs((DateTime.Now.TimeOfDay - t).TotalSeconds)).ToList();

}

else

{

return Enumerable.Empty().ToList();

}

Intégration avec le contrôleur

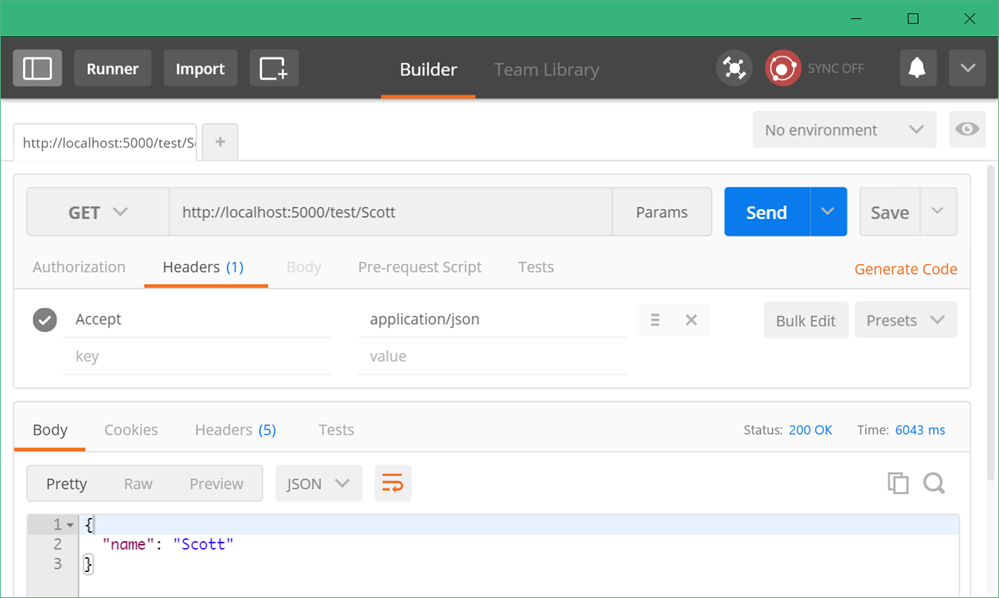

Maintenant que nous avons un dialogue disposé à interagir avec les participants de la conversation, il est nécessaire de connecter celui-ci à la conversation afin qu’il soit en mesure de recevoir les messages.

Cette opération est faite via le contrôleur qui a la charge de rediriger les messages vers le dialogue approprié. Les messages sont toujours émis vers le contrôleur « messages », c’est pourquoi vous avez un MessagesController dans votre dossier Controllers.

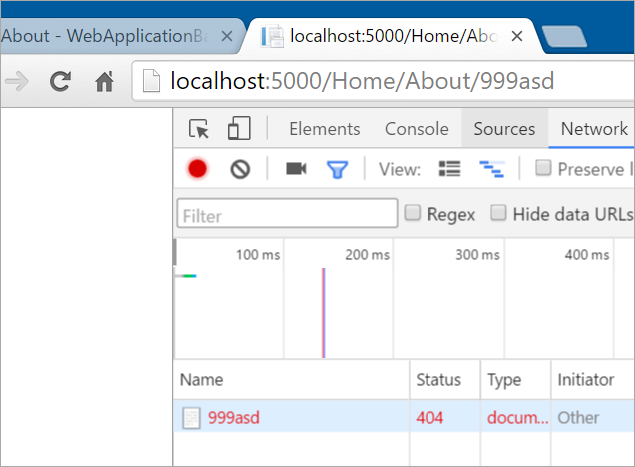

Par défaut, les contrôleurs sont liés à une URL particulière, dont le formalisme est le suivant :

http://masuperurl.fr/<controllerName>/

Les requêtes du bot sont envoyées via la méthode HTTP POST et automatiquement exposées en tant qu’objet de type Message par le Framework.

Un message peut-être de différents types, lesquels indiquent le type d’évènement auquel doit répondre le bot. Dans notre cas, nous nous intéresserons uniquement aux messages de type « Message ».

Enfin, pour rediriger une requête vers un dialogue spécifique, il suffit d’utiliser l’utilitaire de conversation et d’attendre de manière asynchrone le résultat de l’opération dans le dialogue.

Figure 16 : Gestion asynchrone du routage d’une requête vers un dialogue

Ainsi, si le message est de type « Message », le message est redirigé vers un nouveau dialogue.

En guise de conclusion

Toutes ces informations vous ont permis de mettre en œuvre le Bot Framework, LUIS, ASP.NET, entityframework, ce qui, fait un bon tour d’horizon des technologies Microsoft. Afin de tester votre bot, vous pouvez démarrer celui-ci localement, et tester directement ces réactions avec le Bot Emulator, comme présenté sur la capture ci-dessous :

Ceci clôt notre exploration des bots et par la même notre série de billet. Nous espérons que cette dernière nous aura donné l’envie de vous lancer. Que le bot soit avec vous

NavigoBot

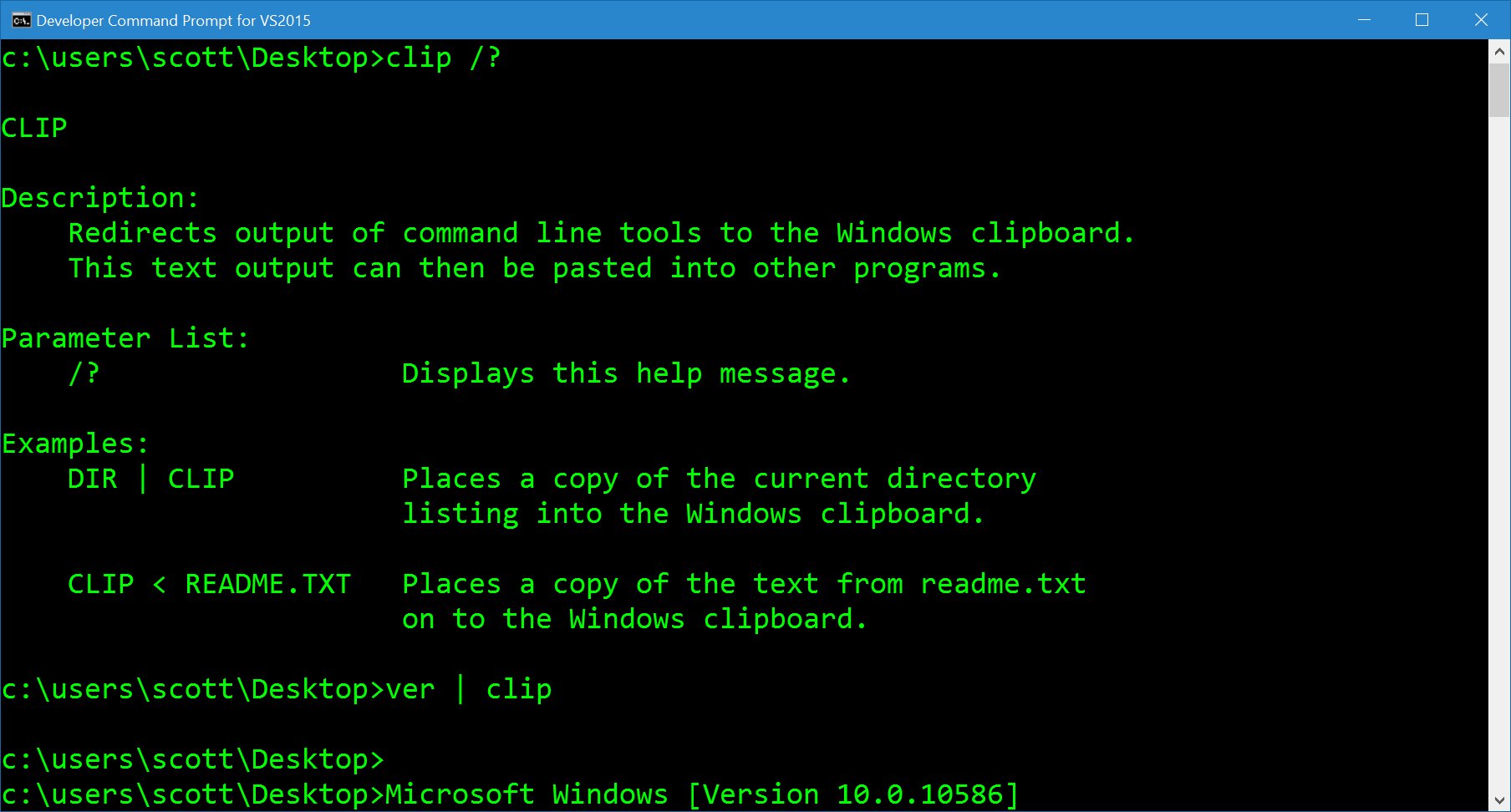

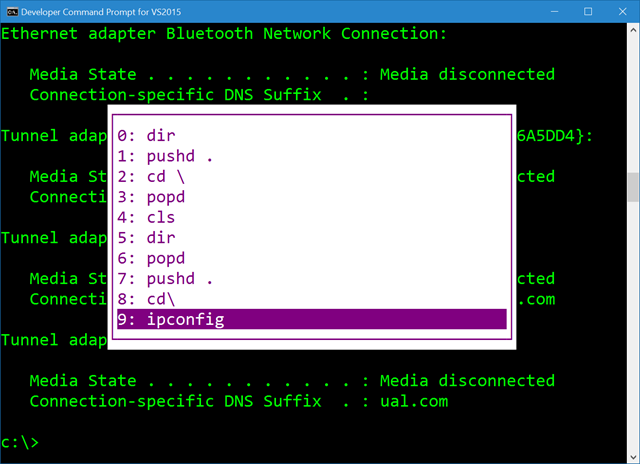

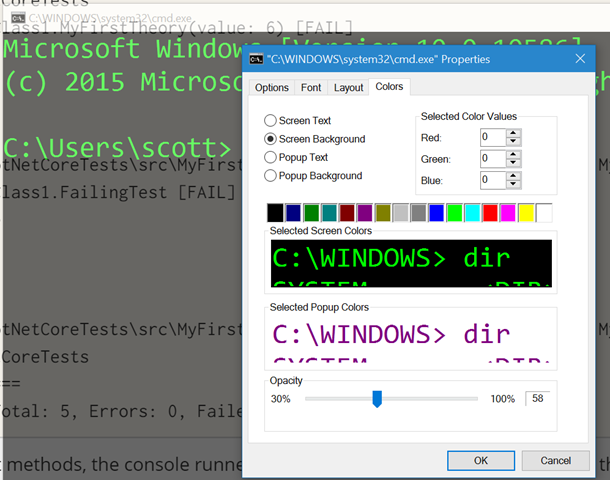

I'm a big fan of keyboard shortcuts.

I'm a big fan of keyboard shortcuts.

I was meeting with a mentee today and she was commenting how stressed out she was. Overwhelmed with work, email, home, life, dinners, the news, finances...you know. LIFE. I am too. You likely are as well.

I was meeting with a mentee today and she was commenting how stressed out she was. Overwhelmed with work, email, home, life, dinners, the news, finances...you know. LIFE. I am too. You likely are as well.