Quaternions are pretty well-known to graphics programmers at this point, but even though we can use them for rotating our objects and cameras, they’re still pretty mysterious. Why are they 4D instead of 3D? Why do the formulas for constructing them have factors like \(\cos(\theta/2)\) instead of just \(\cos\theta\)? And why do they have the “double-cover” property, where there are two different quaternions (negatives of each other) that represent the same 3D rotation?

These properties need not be inexplicable teachings from on high—and you don’t have to go get a degree in group theory to comprehend them, either. There are actually quite understandable reasons for quaternions to be so weird, and I’m going to have a go at explaining them.

Numbers That Rotate

Quaternions are basically complex numbers that have eaten a super mushroom and become twice as big, so it’s helpful to understand complex numbers before going on. The main property of complex numbers that’s important here is how they represent rotations in 2D. Unfortunately, if you grew up in the USA you were probably never introduced to this in school; you probably learned all about how \(i^2 = -1\), but nothing about how complex numbers represent rotations. Steven Wittens’ “How to Fold a Julia Fractal” is a neat article with interactive diagrams that explain complex numbers from the geometric perspective.

The main fact we need here is just this: multiplying by a unit complex number is equivalent to rotating in 2D space: \[ (\cos\theta + i \sin\theta) (x + iy) = (x\cos\theta - y\sin\theta) + i(x\sin\theta + y\cos\theta) \] Here, I’m multiplying two complex numbers, but the one on the right, \(x + iy\), I’m thinking of as just a point in 2D space; the one on the left, \(\cos\theta + i\sin\theta\), I’m thinking of as a transformation, namely a rotation by \(\theta\). And as you can see, when I multiply them, the result is the point \((x, y)\) rotated by \(\theta\).

In particular, if I set \(\theta = \pi/2\), then the number on the left just reduces to \(i\). So multiplying by \(i\) does a 90-degree rotation: \[ (i) (x + iy) = -y + ix \]
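As a quick sanity check of the rotation formula, here is a small sketch using Python’s built-in `complex` type and `cmath.exp`. The helper name `rotate_2d` is our own, not part of any library:

```python
import cmath
import math


def rotate_2d(x, y, theta):
    """Rotate the point (x, y) by theta radians about the origin."""
    point = complex(x, y)            # the point x + iy
    rotor = cmath.exp(1j * theta)    # cos(theta) + i sin(theta), a unit complex number
    rotated = rotor * point          # the multiplication performs the rotation
    return rotated.real, rotated.imag


# Multiplying by i is exactly a 90-degree rotation: (x, y) -> (-y, x)
print(1j * complex(3, 4))            # (-4+3j)

# The general formula agrees, up to floating-point rounding
print(rotate_2d(3, 4, math.pi / 2))  # close to (-4, 3)
```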

Rotate All The Dimensions!

OK, complex numbers are cute and all, but we really want to rotate in 3D. So how can we do that?

First of all, we can’t actually create a complex-number-like algebra that works in 3D. What happens if we try? In 2D, we used real numbers for the x-axis, and imaginary numbers (proportional to \(i\)) for the y-axis. Let’s make up a brand-new imaginary unit, \(j\), which (we declare by fiat) isn’t equal to any of the existing complex numbers. This unit will represent the z-axis, so we can represent a point in 3D like \(x + iy + jz\). So far so good. But now let’s try rotating this point, by multiplying it by \(i\): \[ (i)(x + iy + jz) = -y + ix + (ij)z \] Whoops! What’s that \(ij\) thing? It can’t be reduced to a real number, or to anything proportional to \(i\) or \(j\). So it can’t represent a point in our 3D space. It’s an extra dimension! We tried to make an algebra in 3D, but found ourselves in 4D instead. If we define \(k \equiv ij\), we’ve just invented quaternions. (Well, almost. We also have to declare that \(i\) and \(j\) anticommute, i.e. that \(ij = -ji\).)
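To make the bookkeeping concrete, here is a minimal sketch of quaternion multiplication (the Hamilton product), assuming quaternions are stored as plain `(w, x, y, z)` tuples holding the coefficients of \(1, i, j, k\). The tuple layout and the name `qmul` are illustrative choices, not a library API. The product bakes in \(i^2 = j^2 = k^2 = -1\) and \(ij = k = -ji\), and it reproduces the stray \(ij\) term from the attempted 3D algebra:

```python
def qmul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z) tuples,
    i.e. the coefficients of 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,  # real part
        aw * bx + ax * bw + ay * bz - az * by,  # i part
        aw * by - ax * bz + ay * bw + az * bx,  # j part
        aw * bz + ax * by - ay * bx + az * bw,  # k part
    )


I = (0, 1, 0, 0)
J = (0, 0, 1, 0)
K = (0, 0, 0, 1)

print(qmul(I, J))  # (0, 0, 0, 1)   ij = k
print(qmul(J, I))  # (0, 0, 0, -1)  ji = -k
print(qmul(I, I))  # (-1, 0, 0, 0)  i^2 = -1

# The attempted 3D point x + iy + jz with (x, y, z) = (1, 2, 3)
# has coefficients (real, i, j, k) = (1, 2, 3, 0).
print(qmul(I, (1, 2, 3, 0)))  # (-2, 1, 0, 3): the j part vanished, a k part appeared
```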

The takeaway here is that because products of different imaginary units generate new units, you can only create complex-like algebras in power-of-two-dimensional spaces. But let’s not give up yet. After all, 3D space is embedded in 4D space, so can’t we get 3D rotations by doing 4D rotations that are restricted to the 3D subspace?

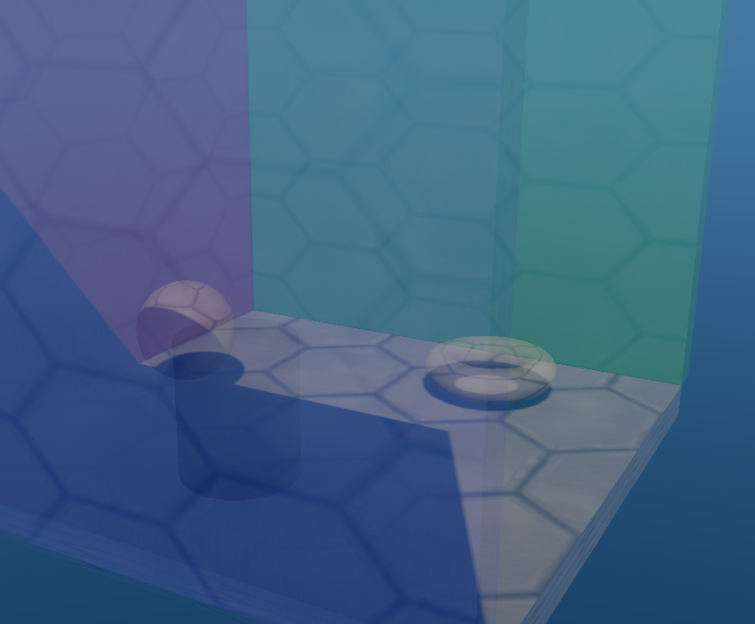

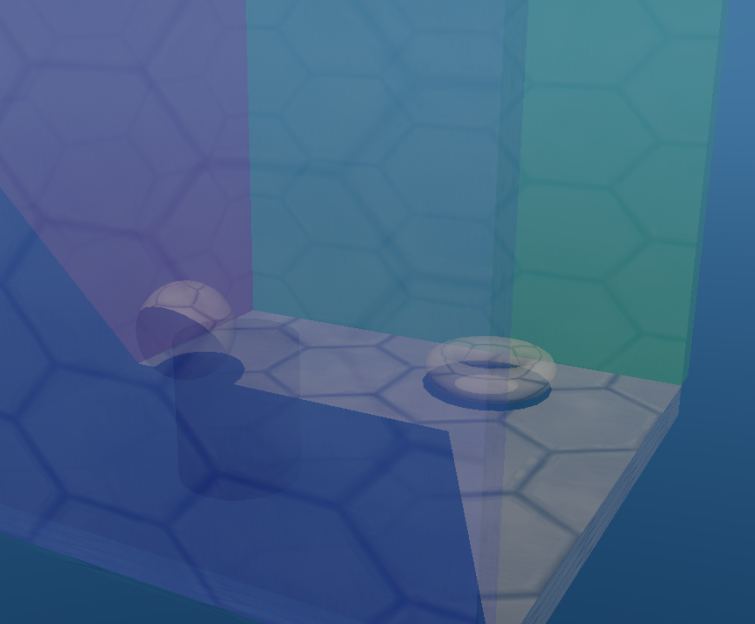

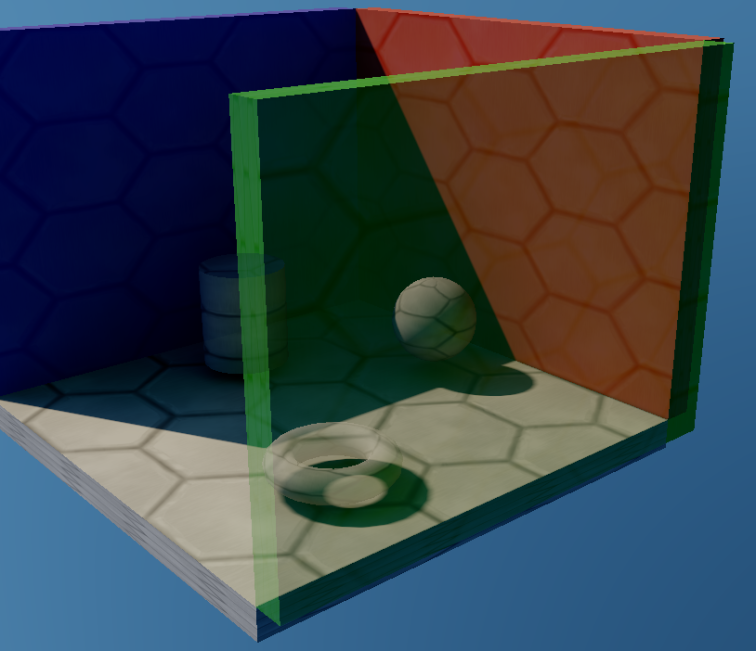

Quaternions do represent rotations in 4D, in a similar manner to how complex numbers represent rotations in 2D. If I think of an arbitrary quaternion as a point in 4D space, and then multiply it (on the left) by \(i\), I get: \[ (i)(w + ix + jy + kz) = -x + iw - jz + ky \] Let’s look at what happened here. The \((w, x)\) components of the point became \((-x, w)\), which is a 90-degree rotation in the \(wx\) plane. Simultaneously, the \((y, z)\) components became \((-z, y)\), which is another 90-degree rotation, in the \(yz\) plane!
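The same Hamilton-product helper (redefined here so the snippet runs on its own; still our own sketch, not a library function) confirms the left-multiplication formula for a sample point \(w + ix + jy + kz\) with \((w, x, y, z) = (1, 2, 3, 4)\):

```python
def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


I = (0, 1, 0, 0)
p = (1, 2, 3, 4)  # w + ix + jy + kz

# (w, x) -> (-x, w) and (y, z) -> (-z, y): two 90-degree turns at once
print(qmul(I, p))  # (-2, 1, -4, 3)

# Four 90-degree turns bring the point back where it started
current = p
for _ in range(4):
    current = qmul(I, current)
print(current)  # (1, 2, 3, 4)
```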

In general, in any number of dimensions, rotation takes place in a 2D plane. In 2D space, there’s just the one plane, so there’s just one dimension of rotation. In 3D, we talk about rotating about an axis, but we really mean rotating in a plane perpendicular to that axis. In 4D, there are enough dimensions that it’s possible to rotate in two independent planes at once: here, the \(wx\) and \(yz\) planes. The planes have no axes in common; they intersect only at a single point, which is the center of rotation, so both rotations can take place without disturbing each other. (That’s not possible in 3D, where any two distinct planes through the center intersect in a line.)

It turns out that multiplying by a unit quaternion always rotates in two independent planes at once, by the same angle:

- \(i\) rotates in the \(wx\) and \(yz\) planes.

- \(j\) rotates in the \(wy\) and \(zx\) planes.

- \(k\) rotates in the \(wz\) and \(xy\) planes.
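The list above can be checked mechanically. This sketch (again with our own `qmul`, plus a hypothetical helper `left_action`) multiplies each basis unit \(1, i, j, k\) on the left by \(i\), \(j\), or \(k\) and reports which signed axis it lands on; reading each row in pairs gives the two rotation planes:

```python
def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


AXES = "wxyz"
UNITS = [(1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)]  # 1, i, j, k


def left_action(q):
    """Map each axis to the signed axis that left-multiplication by q sends it to.
    Only meaningful for q in {i, j, k}, whose images are signed basis vectors."""
    result = {}
    for axis, e in zip(AXES, UNITS):
        image = qmul(q, e)
        idx = next(n for n, c in enumerate(image) if c != 0)
        result[axis] = ("-" if image[idx] < 0 else "") + AXES[idx]
    return result


for name, q in zip("ijk", UNITS[1:]):
    print(name, left_action(q))
# i {'w': 'x', 'x': '-w', 'y': 'z', 'z': '-y'}   -> wx and yz planes
# j {'w': 'y', 'x': '-z', 'y': '-w', 'z': 'x'}   -> wy and zx planes
# k {'w': 'z', 'x': 'y', 'y': '-x', 'z': '-w'}   -> wz and xy planes
```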

Other (non-axis-aligned) quaternions rotate in other (non-axis-aligned) pairs of planes, or by other angles (not 90 degrees). Moreover, one of the two planes always includes the \(w\) axis—i.e., the axis of real numbers. This isn’t what we want for 3D rotation; we need to be able to rotate in just one plane, and we need to be able to choose any plane in the \(xyz\) subspace.
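The “same angle in both planes” claim can also be spot-checked numerically for a non-axis-aligned quaternion. For a unit quaternion \(q = \cos\alpha + \mathbf{u}\sin\alpha\) (with \(\mathbf{u}\) a unit pure-imaginary quaternion), every 4D point \(p\) gets turned through exactly \(\alpha\), because \(p \cdot (qp) = |p|^2 \cos\alpha\). Also \(q \cdot 1 = q\) lies in the plane spanned by \(1\) and \(\mathbf{u}\), which is the plane containing the \(w\) axis. The helpers `dot` and `unit_quaternion`, the angle, and the axis below are arbitrary choices for this sketch:

```python
import math
import random


def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def dot(a, b):
    """Ordinary dot product of two coefficient tuples."""
    return sum(s * t for s, t in zip(a, b))


def unit_quaternion(alpha, axis):
    """cos(alpha) + sin(alpha) * (ux i + uy j + uz k), normalizing the 3D axis."""
    n = math.sqrt(dot(axis, axis))
    s = math.sin(alpha)
    return (math.cos(alpha), s * axis[0] / n, s * axis[1] / n, s * axis[2] / n)


alpha = 0.7
q = unit_quaternion(alpha, (1.0, 2.0, -0.5))
rng = random.Random(0)

for _ in range(5):
    p = tuple(rng.uniform(-1.0, 1.0) for _ in range(4))
    qp = qmul(q, p)
    # |qp| = |p| for unit q, so this ratio is the cosine of the angle p turned through
    cos_turn = dot(p, qp) / dot(p, p)
    print(f"{cos_turn:.12f}  vs  cos(alpha) = {math.cos(alpha):.12f}")

# q * 1 = q: the real axis is carried within the plane spanned by 1 and u
print(qmul(q, (1.0, 0.0, 0.0, 0.0)))
```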

So far, we’ve multiplied a point on the left by \(i\). But quaternion multiplication isn’t commutative, so what happens when we multiply on the right? \[ (w + ix + jy + kz)(i) = -x + iw + jz - ky \] What happened here? The \((w, x)\) components went to \((-x, w)\), which is the same as before. However, the \((y, z)\) components went to \((z, -y)\), which is different. They still rotated 90 degrees, but in the opposite direction! It turns out that in general, swapping the order will reverse the direction of rotation in one of the two planes, namely the one that doesn’t contain the \(w\) axis.
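Comparing left and right multiplication by \(i\) on the same sample point shows the sign flip directly. This is a self-contained sketch with our own `qmul` helper and the arbitrary point \((w, x, y, z) = (1, 2, 3, 4)\):

```python
def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


I = (0, 1, 0, 0)
p = (1, 2, 3, 4)

left = qmul(I, p)   # (y, z) -> (-z, y)
right = qmul(p, I)  # (y, z) -> (z, -y): same plane, opposite direction
print(left)   # (-2, 1, -4, 3)
print(right)  # (-2, 1, 4, -3)
```

The first two entries (the \(wx\) plane) agree; only the \(yz\) entries differ in sign.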

We can see now how to get our rotations to be restricted to just one plane in 4D. If we multiply on both the left and the right, the rotation whose sign changed will cancel out, and the other rotation will be done twice! \[ (i)(w + ix + jy + kz)(i) = -w - ix + jy + kz \] Just as expected, the \((w, x)\) components became \((-w, -x)\), i.e. a 180-degree rotation; the \((y, z)\) components were left unchanged.
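Sandwiching the same sample point between two copies of \(i\) reproduces this: a 180-degree turn in the \(wx\) plane and nothing in the \(yz\) plane. As before, `qmul` is our own sketch:

```python
def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


I = (0, 1, 0, 0)
p = (1, 2, 3, 4)

sandwich = qmul(qmul(I, p), I)  # (i) p (i)
print(sandwich)                 # (-1, -2, 3, 4)

# Quaternion multiplication is associative, so the grouping doesn't matter
print(qmul(I, qmul(p, I)))      # (-1, -2, 3, 4)
```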

We’re almost there. We actually don’t want to rotate the plane that includes the \(w\) axis; we’d rather keep that plane fixed and let the rotation in the other plane survive. We can do this by just inverting the quaternion on the right: \[ (i)(w + ix + jy + kz)(i^{-1}) = (i)(w + ix + jy + kz)(-i) = w + ix - jy - kz \] The inversion, as you’d expect, flips the direction of rotation in both planes, so the end result here is that the \(wx\) rotation is canceled out and the \(yz\) rotation is done twice.
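Here is the same computation with an explicit inverse, sketched under the assumption that the inverse is computed as the conjugate divided by the squared norm (for a unit quaternion like \(i\), that is just the conjugate, \(-i\)). The helpers `qconj` and `qinv` are our own names:

```python
def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def qconj(q):
    """Conjugate: negate the imaginary parts."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def qinv(q):
    """Inverse: conjugate divided by the squared norm."""
    n2 = sum(c * c for c in q)
    return tuple(c / n2 for c in qconj(q))


I = (0, 1, 0, 0)
p = (1, 2, 3, 4)

rotated = qmul(qmul(I, p), qinv(I))  # (i) p (i^-1)
print(rotated)  # (1.0, 2.0, -3.0, -4.0): wx fixed, yz turned 180 degrees

# The real axis (w) is left exactly where it was
print(qmul(qmul(I, (1, 0, 0, 0)), qinv(I)))
```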

So, Why Do Quaternions Double-Cover?

First of all, we can see now why quaternions have half-angles in their formulas, like \(\cos(\theta/2)\). To get a rotation that only affects a single plane in 4D, we have to apply the quaternion twice, multiplying on both the left and (with its inverse) on the right. The rotation we want gets done twice (and the one we don’t want gets canceled out), so we have to halve the angle going in to make up for it.
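The half angle shows up concretely when building a rotation from an axis and an angle. This sketch assumes the usual construction \(q = \cos(\theta/2) + \mathbf{u}\sin(\theta/2)\) for a unit-length 3D axis \(\mathbf{u}\), applied as \(q v q^{-1}\); the helper names `axis_angle` and `rotate` are our own. It also shows what goes wrong if you plug in the full angle instead:

```python
import math


def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def qconj(q):
    """Conjugate; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def axis_angle(axis, theta):
    """Unit quaternion for a rotation of theta radians about a unit-length axis.
    The half angle compensates for q being applied twice in q v q^-1."""
    ux, uy, uz = axis
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, s * ux, s * uy, s * uz)


def rotate(v, q):
    """Rotate 3D vector v by unit quaternion q via q v q^-1."""
    p = (0.0, *v)                              # embed v as a pure-imaginary quaternion
    _, x, y, z = qmul(qmul(q, p), qconj(q))    # real part stays 0 up to rounding
    return (x, y, z)


z_axis = (0.0, 0.0, 1.0)
print(rotate((1.0, 0.0, 0.0), axis_angle(z_axis, math.pi / 2)))  # close to (0, 1, 0)

# Using the full angle instead of the half angle overshoots: 90 becomes 180 degrees
full = (math.cos(math.pi / 2), 0.0, 0.0, math.sin(math.pi / 2))
print(rotate((1.0, 0.0, 0.0), full))  # close to (-1, 0, 0)
```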

Because of that, a quaternion that rotates 180 degrees in 4D ends up rotating 360 degrees, thus doing nothing, when we apply it twice to restrict it to 3D. People sometimes say that quaternions have “720 degrees” of rotation internally. That’s one way to think about it; another way is that quaternions just have the usual 360 degrees of rotation, but you always have to apply them twice.

So if you take a quaternion and rotate it 180 degrees in 4D (i.e. negate it), it’s a different quaternion, but when applied twice, it totals an extra 360 degrees and therefore has the same effect as the original. You can also see it algebraically: \(qpq^{-1} = (-q)p(-q)^{-1}\), since \((-q)^{-1} = -q^{-1}\) and the two minus signs cancel out.
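A numerical check of the double cover, under the same assumptions as the earlier sketches (our own `qmul`, `qconj`, and `rotate` helpers; the axis, angle, and test vector are arbitrary): \(q\) and \(-q\) rotate a vector identically, and a “360-degree” quaternion comes out as \(-1\) rather than \(+1\) yet still acts as the identity on 3D vectors:

```python
import math


def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def qconj(q):
    """Conjugate; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def rotate(v, q):
    """Rotate 3D vector v by unit quaternion q via q v q^-1."""
    _, x, y, z = qmul(qmul(q, (0.0, *v)), qconj(q))
    return (x, y, z)


def neg(q):
    return tuple(-c for c in q)


# Rotation by 1 radian about the normalized axis (1, 1, 1)
theta = 1.0
s = math.sin(theta / 2) / math.sqrt(3)
q = (math.cos(theta / 2), s, s, s)
v = (0.3, -1.2, 2.0)

print(rotate(v, q))
print(rotate(v, neg(q)))  # same vector: the two minus signs cancel

# A full 360-degree turn about z gives w = cos(pi) = -1, not +1 ...
turn = (math.cos(math.pi), 0.0, 0.0, math.sin(math.pi))
print(turn[0])            # -1.0
# ... yet applied on both sides it still leaves vectors unchanged
print(rotate(v, turn))    # v again, up to rounding
```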

Postscript: Arbitrary 4D Rotations

You might be wondering at this point how quaternions can represent arbitrary 4D rotations. As mentioned before, a single quaternion always rotates in two independent planes by the same angle, and one of those planes always includes the \(w\) axis. So how can we rotate in two independent planes by different angles, or in planes that don’t include the \(w\) axis?

It turns out you can do this by applying two distinct quaternions, one on the left and one on the right, like \(apb\) where \(p\) is the point being rotated. For 3D rotations, \(b = a^{-1}\); for arbitrary 4D rotations, \(a\) and \(b\) don’t have any particular relationship. But the idea is generally the same: choose \(a\) and \(b\) so that the two combine to cancel out the planes you don’t want and keep the ones you do want.
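A small illustration of \(apb\) with unrelated \(a\) and \(b\). For simplicity this sketch assumes both come from the \(\cos + i\sin\) family (an arbitrary choice; `rot_i` and `apply` are our own helper names). Left multiplication turns the \(wx\) and \(yz\) planes by \(\alpha\), right multiplication turns them by \(\beta\) and \(-\beta\), so the combination turns \(wx\) by \(\alpha + \beta\) and \(yz\) by \(\alpha - \beta\): two planes, two different angles. Setting \(\beta = -\alpha\) recovers the 3D case:

```python
import math


def qmul(a, b):
    """Hamilton product of (w, x, y, z) coefficient tuples for 1, i, j, k."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rot_i(angle):
    """cos(angle) + i sin(angle): turns the wx and yz planes by `angle`."""
    return (math.cos(angle), math.sin(angle), 0.0, 0.0)


def apply(a, p, b):
    """The two-sided product a p b used for general 4D rotations."""
    return qmul(qmul(a, p), b)


alpha, beta = 0.3, 0.5
a, b = rot_i(alpha), rot_i(beta)

# The wx plane turns by alpha + beta ...
print(apply(a, (1.0, 0.0, 0.0, 0.0), b))  # close to (cos 0.8, sin 0.8, 0, 0)
# ... while the yz plane turns by alpha - beta, a different angle
print(apply(a, (0.0, 0.0, 1.0, 0.0), b))  # close to (0, 0, cos(-0.2), sin(-0.2))

# Choosing b = a^-1 (beta = -alpha) recovers the 3D case: wx stays fixed
print(apply(a, (1.0, 0.0, 0.0, 0.0), rot_i(-alpha)))  # close to (1, 0, 0, 0)
```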

Why do you need this odd double-quaternion construction for arbitrary 4D rotations, while in 2D a single complex number does the job? It comes down to degrees of freedom. In 2D, there’s one dimension of rotation, and one dimension of unit complex numbers. In 4D, there are six dimensions of rotation (count ’em: \(wx, wy, wz, xy, xz, yz\)) but only three dimensions of unit quaternions—so you need to double down on quaternions to have enough degrees of freedom for a 4D rotation.
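The degree-of-freedom count is simple arithmetic. In \(n\) dimensions the independent rotation planes are the unordered pairs of axes, \(n(n-1)/2\) of them. Unit complex numbers have two components tied by one constraint, and unit quaternions have four components tied by one constraint. A tiny sketch (the helper name `rotation_planes` is our own) that enumerates the planes and tallies the counts:

```python
from itertools import combinations
from math import comb


def rotation_planes(axes):
    """Every unordered pair of axes spans one independent rotation plane."""
    return ["".join(pair) for pair in combinations(axes, 2)]


print(rotation_planes("xy"))    # ['xy']: one plane in 2D
print(rotation_planes("wxyz"))  # ['wx', 'wy', 'wz', 'xy', 'xz', 'yz']

unit_complex_dof = 2 - 1        # two components, one constraint |z| = 1
unit_quaternion_dof = 4 - 1     # four components, one constraint |q| = 1

print(comb(2, 2), unit_complex_dof)         # 1 1
print(comb(4, 2), 2 * unit_quaternion_dof)  # 6 6: hence two quaternions, a and b
```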

Further Reading