Tuan.kuranes

Shared posts

Memory Bandwidth and Vertices

The post Memory Bandwidth and Vertices appeared first on OpenGL SuperBible.

Migrating databases with zero downtime

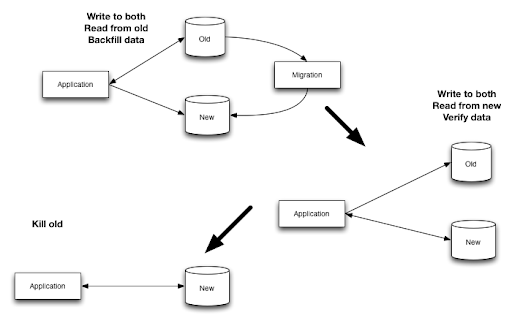

Every article I’ve read and linked to that includes a data migration phase from one database to another tells the same story:

- forklift

- incremental replication

- consistency checking

- shadow writes

- shadow writes and shadow reads for validation

- end of life of the original data store

The same story for Netflix’s migration from SimpleDB to Cassandra and Shift.com’s migration from MongoDB/Titan to Cassandra. And once again, the same appears in FullContact’s migration from MongoDB to Cassandra. This last post also provides a nice diagram of the process:

The key part of these stories is that the migration was performed with zero downtime.

Original title and link: Migrating databases with zero downtime (NoSQL database©myNoSQL)

Path Integral Methods for Light Transport Simulation: Theory & Practice

This post has been generated by Page2RSS

New Release

The new source and converter are available here: potree_2014.01.22.zip

A small demo is included. The application has to be deployed on a webserver in order to run.

Translating a human-readable JSON-like data format into C++ structs

Even though Molecule’s run-time engine exclusively uses binary files without doing any parsing, the asset pipeline uses a human-readable non-binary format for storing pretty much everything except raw asset files like textures or models. This post explains the process behind translating data from such a human-readable format into actual instances of C++ structs with very little setup code required.

Why a human-readable non-binary format? Isn’t that slower to load?

A non-binary format is of course slower to load than a binary one, but it is only used for files that are being used by the editor and the asset pipeline. As such, those files are constantly changed, so they have to be easy to read, diff, and merge – and users should be able to spot errors in the file structure almost immediately.

Why not XML?

Much has been said about XML and XML vs. JSON already. Personally, to me XML is a human-readable language, but not one that can be parsed easily by humans. I find it hard to parse larger pieces of XML just by looking at the contents without any visual aid like e.g. coloring or syntax highlighting. Other formats tend to be much easier to read, and have less overhead and visual clutter.

Why not JSON?

If you take a look at the JSON example on Wikipedia, JSON is still a bit to verbose for my taste (I certainly don’t want to put everything in quotation marks). Hence, I use a slightly altered format that is unambiguous to parse, supports objects as well as arrays, and is easily understood just by looking at an example.

Why not use an existing parser?

We programmers really don’t like re-inventing the wheel, especially in tools code where there is usually a bit more leeway for things like e.g. the number of memory allocations made. Still, I don’t want to make a hundred calls to new and delete for parsing a measly options file.

Sadly, that rules out most parsers already. Most of them tend to have individual classes for objects, arrays, values of different kinds, attributes, and more, calling new all over the place every time they encounter a new value, stuffing it into a big tree-like data-structure like a map.

I would like to have a parser that reads the file exactly once, parses in-place, uses no dynamic string allocations for parsing (the strings are already there, no need to create tons of std::string!), and puts everything into a somewhat generic data structure, holding values of objects and arrays inside a simple array. Additionally, I would like to go from this data structure to any C++ struct I like, and make the translation process as easy as possible.

Molecule’s data format

Let’s start with a simple example file that shows almost everything that can be put into a data file:

AnyObject =

{

# this is a comment

stringValue = “any string”

floatValue = 10.0f

integerValue = -20

boolValue = true

intArray = [ 10, 20, 30 ]

stringArray = [ “str1”, “str2”, “str3” ]

aNestedObject =

{

name = “object1”

someValue = 1.0f

}

}

I would argue that just by looking at the contents of this file you can immediately parse all the information, without the need for any aid like syntax highlighting, color-coded keywords, etc.

Here is a more complete example: an options file specifying shader options with which to build a pixel shader for applying deferred point lights.

Options =

{

# general

warningsAsErrors = false

# debugging

generateDebugInfo = false

# flow control

avoidFlowControl = false

preferFlowControl = false

# optimization

optimizationLevel = 3

partialPrecision = true

skipOptimization = false

}

# array of defines with their names and values

Defines =

[

# toggles between different PCF kernels (2x2, 3x3, 5x5, 7x7). 0 means no shadow mapping.

{

name = "ME_SHADOW_MAP_PCF_KERNEL"

values = [0, 2, 3, 5, 7]

}

]

Parsing

Parsing is quite simple, really.

The general rules are:

- Every time a ‘{‘ is encountered, the definition of a new object starts. ‘}’ closes the definition.

- Every time a ‘[‘ is encountered, the definition of a new array starts. ‘]’ closes the definition.

- Every time a ‘=’ is encountered and there are non-whitespaces to the right, add a new value to the current object or array.

- Ignore everything after a ‘#’.

The parser essentially just parses the whole file line-by-line, and keeps track of its current state. Individual lines and values are parsed by using fixed-size strings on the stack. Disambiguating different value types is also straightforward if you try to identify types in the following order:

- If the value starts with a ‘”’, it must be a string. Else, go to 2.

- If the value ends with an ‘f’ or contains a ‘.’, it must be a float because we ruled out strings already. Else, go to 3.

- If the value starts with either ‘t’ (true) or ‘f’ (false), it must be a bool. Else, go to 4.

- The type is an integer.

Implementing the parser yourself also has the added benefit that error checking can be made a bit more robust with meaningful error messages. For example, telling the user that the parser “encountered an error in line 8” isn’t very helpful, and we can do so much better than that.

How does “Malformed data: Array was opened without preceding assignment in line 8. Did you forget a ‘=’?” sound? Much better.

All in all, the parser weights in at around 300 lines of C++ code, including comments, asserts, and error messages.

Generic data structure

Parsing is one thing, but how do we hold the values in memory? I settled for a straightforward implementation, using the following classes:

- DataBin: holds any number of DataObject and DataArray (both stored in an array)

- DataObject: holds any number of DataArray and DataValue (both stored in an array)

- DataArray: holds values or objects (stored in an array)

- DataValue: can either be a string, a float, an integer, or a boolean value (stored using a union)

Memory for the class instances is simply allocated using a linear allocator, so the data for a whole object or array is always contiguous in memory. This creates no fragmentation, you can allocate a reasonably sized buffer once and use that for all parsing operations (resetting the allocator after parsing a file has finished), and it also helps with the next step: translating the data into C++ structs.

Translating generic data into C++ structs

What do I mean by translation? We don’t want to access individual pieces of data using a name-based lookup as in the following example:

const bool value = dataBin.GetValue(“Options/warningsAsErrors”);

There are several reasons why I try to stay away from such an approach:

- It is error-prone. Every time you want to access a value, you risk misspelling it, and accesses are probably going to happen from several different .cpp files, making it harder to find the culprit.

- Every time somebody wants to access a value, it has to be retrieved from the generic data structure, which is some kind of search operation. That can be sped up by using hashes, binary search, or similar – but why do it on each access operation if we don’t have to?

- We want to be able to pass objects around to other functions. In many cases, we want to throw away the contents of a file and close the file handle in the meantime, and only keep the parts we need.

What we want is something like the following:

struct PixelShaderOptions

{

bool warningsAsErrors;

bool generateDebugInfo;

bool avoidFlowControl;

bool preferFlowControl;

int optimizationLevel;

bool partialPrecision;

bool skipOptimization;

};

// assume dataBin is filled with generic data by the parser

DataBin dataBin;

PixelShaderOptions options;

// translates the object named “Options” from dataBin, putting all data into the given struct according to the translator

TranslateObject("Options", dataBin, translator, &options);

After the data has been translated, we can simply access it via e.g. options.optimizationLevel. We can pass it around to other functions and throw away the file in the meantime. Accessing values is fast, and checked at compile-time.

The remaining question is: how do we build such a generic translator using standard, portable C++? What info does the translator hold? What we need is a list that specifies which value goes into what struct member. In our case, struct members can be a std::string, a std::vector, and other non-POD types, so using offsetof is not an option.

C++ Pointer-to-member

Pointers-to-members is one of those C++ features that get used every once in a blue moon. Personally, this was the second time I used pointers-to-members in the last ten years of C++ programming.

What exactly is a pointer-to-member? In layman terms, a pointer-to-member in C++ allows you to refer to non-static members of class objects in a generic way, which means that you can e.g. store a pointer to a std::vector member, and assign values to members of any class instance using that pointer.

Similar to pointers-to-member-functions, those pointers also exhibit awkward syntax, and in order to access a member’s value of a class instance, you have to use either the .* or the ->* operator.

Using pointers-to-members, we can store a list of the names of values that we want to translate, along with their pointer-to-member. That is, we could do something like the following:

DataTranslator<PixelShaderOptions> translator; translator.Add(“warningsAsErrors”, &PixelShaderOptions::warningsAsErrors); translator.Add(“optimizationLevel”, &PixelShaderOptions::optimizationLevel); // and so on...

The DataTranslator is a simple class template that holds pointers-to-members of a certain type, as shown in the following example:

template <class T>

class DataTranslator

{

// single members

typedef bool T::*BoolMember;

typedef int T::*IntMember;

typedef float T::*FloatMember;

typedef std::string T::*StringMember;

// array members

typedef std::vector<bool> T::*BoolArrayMember;

typedef std::vector<int> T::*IntArrayMember;

typedef std::vector<float> T::*FloatArrayMember;

typedef std::vector<std::string> T::*StringArrayMember;

public:

DataTranslator& Add(const char* name, BoolMember member);

DataTranslator& Add(const char* name, IntMember member);

DataTranslator& Add(const char* name, FloatMember member);

// other overloads omitted

};

For storing the pointer-to-member internally, we can either use one array containing some kind of pointer-to-member-variant (that we have to build ourselves first), or use separate arrays for storing the pointers to different types. In Molecule, I chose the latter approach because it makes data translation faster – it only touches the data it needs for translating a member of a certain type. For example, when translating an integer value, we don’t need to look at all the other members, but only at the pointers-to-int members.

One additional common trick we can use in the DataTranslator interface is to return a reference to ourselves in each Add() method. This allows us to define static, immutable translators like in the following example:

const DataTranslator<PixelShaderOptions> g_pixelShaderOptionsTranslator = DataTranslator<PixelShaderOptions>()

.Add("warningsAsErrors", &PixelShaderOptions::warningsAsErrors)

.Add("generateDebugInfo", &PixelShaderOptions::generateDebugInfo)

.Add("avoidFlowControl", &PixelShaderOptions::avoidFlowControl)

.Add("preferFlowControl", &PixelShaderOptions::preferFlowControl)

.Add("optimizationLevel", &PixelShaderOptions::optimizationLevel)

.Add("partialPrecision", &PixelShaderOptions::partialPrecision)

.Add("skipOptimization", &PixelShaderOptions::skipOptimization);

Translating an object is now as simple as walking the DataObject that we are being given, and matching each DataValue stored in the DataObject with the corresponding pointer-to-member stored in the translator. Using some kind of hashing scheme such as quasi compile-time string hashes for the value names, we only have to search through our array of pointers-to-members corresponding to the type of the DataValue, which is a very fast operation.

Conclusion

The data format introduced in this post is used all over the place in Molecule’s asset pipeline. It is used for asset compiler options, resource packages, components, entities, and schemas. Schemas are an interesting thing when used in conjunction with an entity-component-architecture, and will be the topic of upcoming posts.

Filed under: Asset pipeline, C++

Tips for Writing Accessible Games Analysis

This essay builds off the points I made in the On The Book’s Structure heading in the How to Read This Book section of Game Design Companion: A Critical Analysis of Wario Land 4. You don’t need to have read that section to understand this article.

Games Writing and Levels of Abstraction

Writing is the least ideal means for talking about games. The process involves using an abstract set of symbols to make comment on an abstract system of rules. For the reader, this means burrowing through two layers of abstraction just to understand what you’re saying.

(In saying this, I still believe that the written word is the way to go when it comes to serious games analysis. Video inevitably amounts to entertainment, as the stimuli created from moving images distracts the brain from, and therefore diminishes, the meaning of a text. And with audio, the linear flow of speech doesn’t give the listener the ability to naturally pause and absorb the information being given. It’s a bit like being on a content treadmill).

There’s also the problem of length and details. Because games are complex systems that are defined by their details, a writer must give a significant amount of background on the game in question before they can arrive at any sort of critique. By this point, the reader may have lost interest. So what’s a writer to do? How can we make games writing more accessible without sacrificing integrity?

A Narrow Focus

At some point, preferably before any writing takes place, the writer must decide whether they want their article to have a broad or narrow focus. Most games writers go for the broad option, even though it’s easier to have a narrow focus. Writing just about one particular aspect of a game not only affords the writer more accuracy, but also allows them to cut down on the preamble and jump straight to the chase. On the other hand, without a generous word limit, writing about an entire game can be a troubling task. Games are monolithic structures that, more often than not, cannot be critiqued within the confines of a 800-word review, so while game reviewers no doubt have plenty of opinions, the format offers minimal space for the writer to explain how they came to their conclusions. So unless you’re prepared to put in a few thousand words, it’s best to have a narrow focus.

Cutting Down on Words

Less is more. I often use writing as a means to get to what I want to say, but once I know what that is exactly, I cut everything else and just say it. Here are some techniques that I use to say more with less:

- Heavily edit the parts of the article that give context to the analysis/criticism. There should be little to no fat here.

- Use video or images in place of words. There are plenty of game reviews and Let’s Plays on YouTube which already do a good job of introducing games. Why write about it yourself when someone else can do the hard work for you and the reader gets to see the game in context?

- Use diagrams to explain ideas too complex or fiddly for words, or to reinforce a worded explanation.

- Use metaphoric language. This is something that I’m not so good at, but many games criticism bloggers are adept in. Analogies and metaphor are a great way to convey a lot by saying very little. This technique suits certain topics better than others (like game feel, for instance).

- Find a creative way to present the content. I’m working on this with Adventures in Game Analysis.

Chunk it Out

By chunking your writing out, you give the reader more room to breathe. Here are some more techniques:

- Use dot points where possible, especially to break up long sentences. Here’s a good example.

- Break articles up into a series. When I write about a game, I usually identify several key discussion points and then, given that I can write about them at length, I’ll write the articles individually. Game Design Companion is a great example of this: it’s just a bunch of individual essays.

Write a Story Instead

We’ve been sharing stories since the dawn of time and so the brain has developed quite a fondness for narrative. Stories allow us to ground abstract ideas in relatable situations. Writing story-based criticism, though, can be quite a challenge as critique doesn’t necessarily lend itself well to storytelling and you have to do more than double the work (write a good analysis piece, a good story, and have them seamlessly connect together). Here’s an example of a games analysis story done well

Other Tips

- Bold key sentences. I rarely do this, but it’s a good technique.

- If you’re interested in giving this writing thing a go, then write something and send it in to me. Like everyone else, I’m pretty busy, but I’d be happy to help out too. Writing about games is hard, so us writers need all the encouragement we can get.

Computing oriented minimum bounding boxes in 2D

Meet Stéphane, creator of SculptGL

Stéphane, who made SculptGL (the web sculpting tool we based Sculptfab on), just joined our team in Paris!We asked him to sit down for a quick interview, so the community could learn a bit more about him and our team! (past team interviews here).

> How long have you been with Sketchfab? How did you hear about it? What’s your role here?

I’ve been at Sketchfab since December 1st. I first heard about it when I was looking for an internship (around February 2013), but I didn’t apply at that time. Then, I met @trigrou when Sketchfab did Sculptfab, and a few months later I finally joined Sketchfab. I do 3D stuffs on the frontend.

me3D from stephomi on Sketchfab.

> How did you get into 3D? What interests you most about 3D?

Well, my love for 3D certainly comes from videogames, it’s as simple as that. As for the technical side, it’s not like I’ve been programming since I was a kid… I actually started at my engineering school pretty late. But when I was given the opportunity to carry out a 3D industrial project with my school, I didn’t hesitate and jump in. I especially liked the modelling/editing aspect of the 3D world, that partially explains why I made SculptGL.

> Do you do 3D modelling yourself? How would you get started?

No I am not an artist. I don’t really believe in the “I lack talent” excuse :). I think it’s (almost) all about experience/hard work, and I simply never tried hard enough.

> What do you do in your spare time?

Well, games, manga, movies and farting dogs on youtube. As for games, just to name a few : Skyrim, Shadow of the Colossus, Journey, Last of Us, LBA, Kingdom Hearts, Final fantasy, Secret of Mana, etc. If I had to name my favourite manga, it would be berserk; but I also like a lot Urasawa Naoki’s work (especially Pluto). I also read a lot of shounen stuffs, but usually, the seinen are those that stays the most in my memory. I am slowly starting to miss sports, just a bit…

> Favorite movie?

It’s honestly impossible to name one.

> What do you listen to while working?

It depends, many soundtracks from movies and games, but also some lyric-less music such as house, electro stuffs in order to focus.

> Favorite model on sketchfab?

I really enjoyed many entries from the VG remix contest, especially this one or this one. I also liked this one a lot.

God_of_War_III_Tactics_Advance from Duy Khanh Nguyen on Sketchfab.

> Where are you from? how old are you?

I am from Lyon, 25 years old.

> What would you have for your last meal on earth?

A “fondue” (melted cheese), should I be feeling stuffed, a trou normand might help. I hate cooking though.

> Craziest thing you’ve ever done?

I remember, one day I went to school, half asleep, and suddenly I realized that…. hem..well that’s more embarrassing than crazy, so I’ll keep it for myself :D.

> What are you most proud of?

Working in the 3D field, especially since I didn’t “land” in this world but really worked for it.

Object-order ray tracing for fully dynamic scenes

This article presents a method for tracing incoherent secondary rays that integrates well with existing rasterization-based real-time rendering engines. In particular, it requires only linear scene access and supports fully dynamic scene geometry. All parts of the method that work with scene geometry are implemented in the standard graphics pipeline. Thus, the ability to generate, transform and animate geometry via shaders is fully retained. Our method does not distinguish between static and dynamic geometry. Moreover, shading can share the same material system that is used in a deferred shading rasterizer. Consequently, our method allows for a unified rendering architecture that supports both rasterization and ray tracing. The more expensive ray tracing can easily be restricted to complex phenomena that require it, such as reflections and refractions on arbitrarily shaped scene geometry. Steps in rendering that do not require the tracing of incoherent rays with arbitrary origins can be dealt with using rasterization as usual.

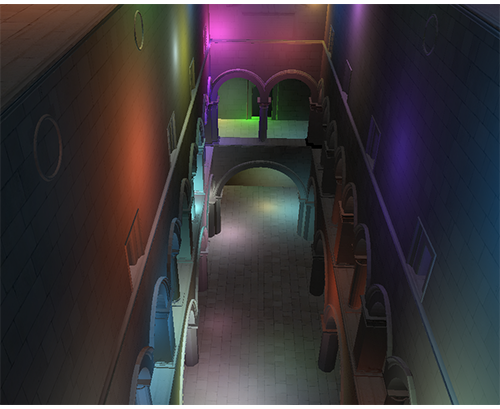

WebGL Deferred Shading

WebGL brings hardware-accelerated 3D graphics to the web. Many features of WebGL 2 are available today as WebGL extensions. In this article, we describe how to use the WEBGL_draw_buffers extension to create a scene with a large number of dynamic lights using a technique called deferred shading, which is popular among top-tier games.

live demo • source code

Today, most WebGL engines use forward shading, where lighting is computed in the same pass that geometry is transformed. This makes it difficult to support a large number of dynamic lights and different light types.

Forward shading can use a pass per light. Rendering a scene looks like:

foreach light {

foreach visible mesh {

if (light volume intersects mesh) {

render using this material/light shader;

accumulate in framebuffer using additive blending;

}

}

} |

This requires a different shader for each material/light-type combination, which adds up. From a performance perspective, each mesh needs to be rendered (vertex transform, rasterization, material part of the fragment shader, etc.) once per light instead of just once. In addition, fragments that ultimately fail the depth test are still shaded, but with early-z and z-cull hardware optimizations and a front-to-back sorting or a z-prepass, this not as bad as the cost for adding lights.

To optimize performance, light sources that have a limited effect are often used. Unlike real-world lights, we allow the light from a point source to travel only a limited distance. However, even if a light’s volume of effect intersects a mesh, it may only affect a small part of the mesh, but the entire mesh is still rendered.

In practice, forward shaders usually try to do as much work as they can in a single pass leading to the need for a complex system of chaining lights together in a single shader. For example:

foreach visible mesh {

find lights affecting mesh;

Render all lights and materials using a single shader;

} |

The biggest drawback is the number of shaders required since a different shader is required for each material/light (not light type) combination. This makes shaders harder to author, increases compile times, usually requires runtime compiling, and increases the number of shaders to sort by. Although meshes are only rendered once, this also has the same performance drawbacks for fragments that fail the depth test as the multi-pass approach.

Deferred Shading

Deferred shading takes a different approach than forward shading by dividing rendering into two passes: the g-buffer pass, which transforms geometry and writes positions, normals, and material properties to textures called the g-buffer, and the light accumulation pass, which performs lighting as a series of screen-space post-processing effects.

// g-buffer pass

foreach visible mesh {

write material properties to g-buffer;

}

// light accumulation pass

foreach light {

compute light by reading g-buffer;

accumulate in framebuffer;

} |

This decouples lighting from scene complexity (number of triangles) and only requires one shader per material and per light type. Since lighting takes place in screen-space, fragments failing the z-test are not shaded, essentially bringing the depth complexity down to one. There are also downsides such as its high memory bandwidth usage and making translucency and anti-aliasing difficult.

Until recently, WebGL had a roadblock for implementing deferred shading. In WebGL, a fragment shader could only write to a single texture/renderbuffer. With deferred shading, the g-buffer is usually composed of several textures, which meant that the scene needed to be rendered multiple times during the g-buffer pass.

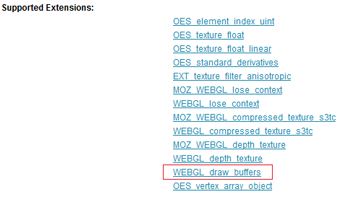

WEBGL_draw_buffers

Now with the WEBGL_draw_buffers extension, a fragment shader can write to several textures. To use this extension in Firefox, browse to about:config and turn on webgl.enable-draft-extensions. Then, to make sure your system supports WEBGL_draw_buffers, browse to webglreport.com and verify it is in the list of extensions at the bottom of the page.

To use the extension, first initialize it:

var ext = gl.getExtension('WEBGL_draw_buffers');

if (!ext) {

// ...

} |

We can now bind multiple textures, tx[] in the example below, to different framebuffer color attachments.

var fb = gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, fb); gl.framebufferTexture2D(gl.FRAMEBUFFER, ext.COLOR_ATTACHMENT0_WEBGL, gl.TEXTURE_2D, tx[0], 0); gl.framebufferTexture2D(gl.FRAMEBUFFER, ext.COLOR_ATTACHMENT1_WEBGL, gl.TEXTURE_2D, tx[1], 0); gl.framebufferTexture2D(gl.FRAMEBUFFER, ext.COLOR_ATTACHMENT2_WEBGL, gl.TEXTURE_2D, tx[2], 0); gl.framebufferTexture2D(gl.FRAMEBUFFER, ext.COLOR_ATTACHMENT3_WEBGL, gl.TEXTURE_2D, tx[3], 0); |

For debugging, we can check to see if the attachments are compatible by calling gl.checkFramebufferStatus. This function is slow and should not be called often in release code.

if (gl.checkFramebufferStatus(gl.FRAMEBUFFER) !== gl.FRAMEBUFFER_COMPLETE) {

// Can't use framebuffer.

// See http://www.khronos.org/opengles/sdk/docs/man/xhtml/glCheckFramebufferStatus.xml

} |

Next, we map the color attachments to draw buffer slots that the fragment shader will write to using gl_FragData.

ext.drawBuffersWEBGL([ ext.COLOR_ATTACHMENT0_WEBGL, // gl_FragData[0] ext.COLOR_ATTACHMENT1_WEBGL, // gl_FragData[1] ext.COLOR_ATTACHMENT2_WEBGL, // gl_FragData[2] ext.COLOR_ATTACHMENT3_WEBGL // gl_FragData[3] ]); |

The maximum size of the array passed to drawBuffersWEBGL depends on the system and can be queried by calling gl.getParameter(gl.MAX_DRAW_BUFFERS_WEBGL). In GLSL, this is also available as gl_MaxDrawBuffers.

In the deferred shading geometry pass, the fragment shader writes to multiple textures. A trivial pass-through fragment shader is:

#extension GL_EXT_draw_buffers : require

precision highp float;

void main(void) {

gl_FragData[0] = vec4(0.25);

gl_FragData[1] = vec4(0.5);

gl_FragData[2] = vec4(0.75);

gl_FragData[3] = vec4(1.0);

} |

Even though we initialized the extension in JavaScript with gl.getExtension, the GLSL code still needs to include #extension GL_EXT_draw_buffers : require to use the extension. With the extension, the output is now the gl_FragData array that maps to framebuffer color attachments, not gl_FragColor, which is traditionally the output.

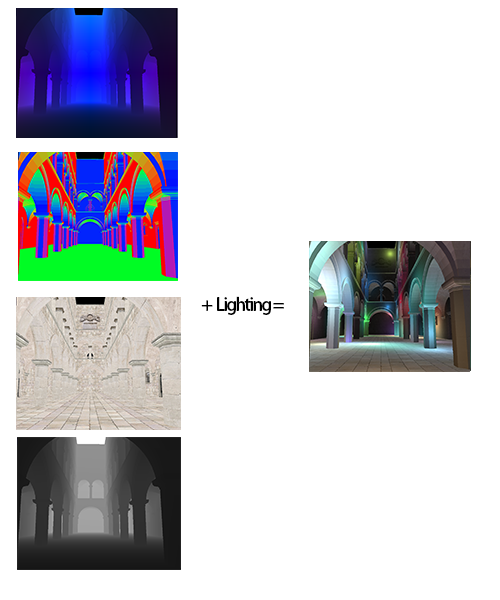

g-buffers

In our deferred shading implementation the g-buffer is composed of four textures: eye-space position, eye-space normal, color, and depth. Position, normal, and color use the floating-point RGBA format via the OES_texture_float extension, and depth uses the unsigned-short DEPTH_COMPONENT format.

Position texture

Normal texture

Color texture

Depth texture

Light accumulation using g-buffers

This g-buffer layout is simple for our testing. Although four textures is common for a full deferred shading engine, an optimized implementation would try to use the least amount of memory by lowering precision, reconstructing position from depth, packing values together, using different distributions, and so on.

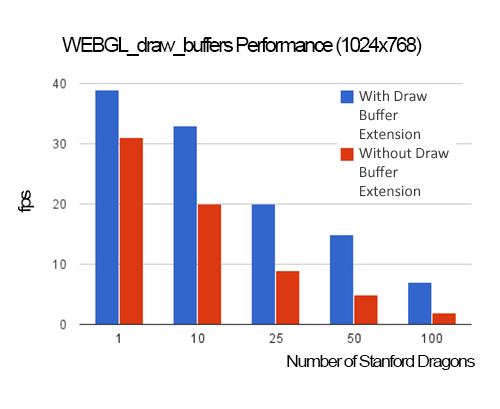

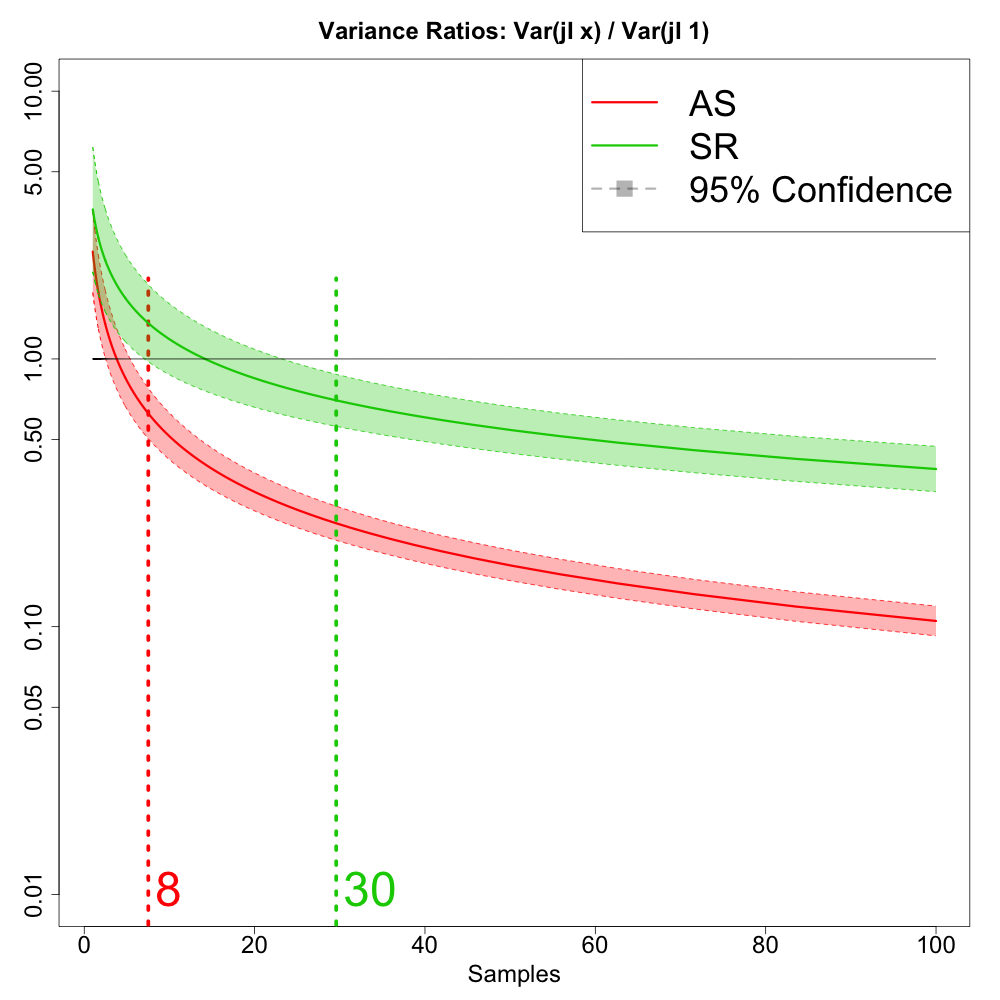

With WEBGL_draw_buffers, we can use a single pass to write each texture in the g-buffer. Compared to using a single pass per texture, this improves performance and reduces the amount of JavaScript code and GLSL shaders. As shown in the graph below, as scene complexity increases so does the benefit of using WEBGL_draw_buffers. Since increasing scene complexity requires more drawElements/drawArrays calls, more JavaScript overhead, and transforms more triangles, WEBGL_draw_buffers provides a benefit by writing the g-buffer in a single pass, not a pass per texture.

All performance numbers were measured using an NVIDIA GT 620M, which is a low-end GPU with 96 cores, in FireFox 26.0 on Window 8. In the above graph, 20 point lights were used. The light intensity decreases proportionally to the square of the distance between the current position and the light position. Each Stanford Dragon is 100,000 triangles and requires five draw calls so, for example, when 25 dragons are rendered, 125 draw calls (and related state changes) are issued, and a total of 2,500,000 triangles are transformed.

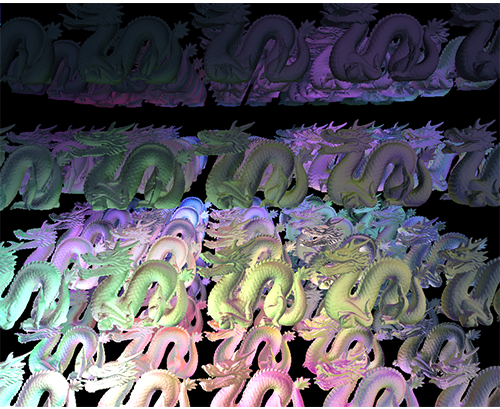

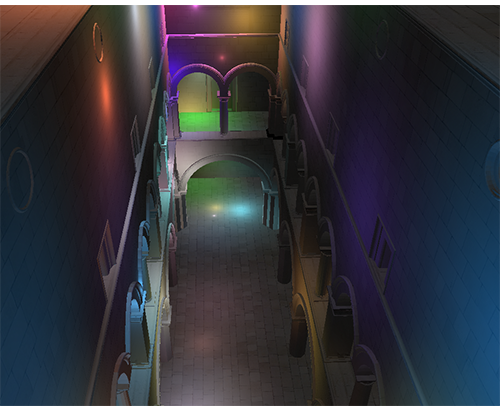

WEBGL_draw_buffers test scene, shown here with 100 Stanford Dragons.

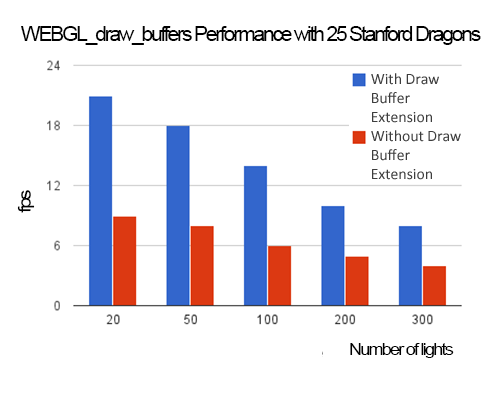

Of course, when scene complexity is very low, like the case of one dragon, the cost of the g-buffer pass is low so the savings from WEBGL_draw_buffers are minimal, especially if there are many lights in the scene, which drives up the cost of the light accumulation pass as shown in the graph below.

Deferred shading requires a lot of GPU memory bandwidth, which can hurt performance and increase power usage. After the g-buffer pass, a naive implementation of the light accumulation pass would render each light as a full-screen quad and read the entirety of each g-buffer. Since most light types, like point and spot lights, attenuate and have a limited volume of effect, the full-screen quad can be replaced with a world-space bounding volume or tight screen-space bounding rectangle. Our implementation renders a full-screen quad per light and uses the scissor test to limit the fragment shader to the light’s volume of effect.

Tile-Based Deferred Shading

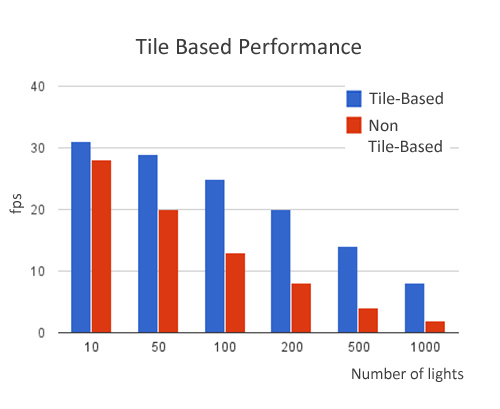

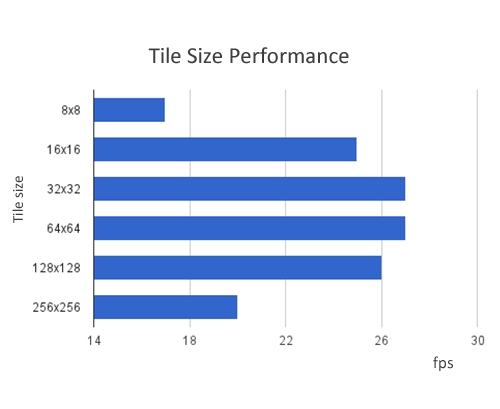

Tile-based deferred shading takes this a step farther and splits the screen into tiles, for example 16×16 pixels, and then determines which lights influence each tile. Light-tile information is then passed to the shader and the g-buffer is only read once for all lights. Since this drastically reduces memory bandwidth, it improves performance. The following graph shows performance for the sponza scene (66,450 triangles and 38 draw calls) at 1024×768 with 32×32 tiles.

Tile size affects performance. Smaller tiles require more JavaScript overhead to create light-tile information, but less computation in the lighting shader. Larger tiles have the opposite tradeoff. Therefore, choosing a suitable tile is important for the performance. The figure below is shown the relationship between tile size and performance with 100 lights.

A visualization of the number of lights in each tile is shown below. Black tiles have no lights intersecting them and white tiles have the most lights.

Shaded version of tile visualization.

Conclusion

WEBGL_draw_buffers is a useful extension for improving the performance of deferred shading in WebGL. Checkout the live demo and our code on github.

Acknowledgements

We implemented this project for the course CIS 565: GPU Programming and Architecture, which is part of the computer graphics program at the University of Pennsylvania. We thank Liam Boone for his support and Eric Haines and Morgan McGuire for reviewing this article.

References

- Deferred Rendering in Killzone 2 by Michal Valient

- Light Pre-Pass by Wolfgang Engel

-

Compact Normal Storage for Small

G-Buffers by Aras Pranckevicius - Tiled Shading by Ola Olsson and Ulf Assarsson

- Deferred Rendering for Current and Future Rendering Pipelines by Andrew Lauritzen

- Z-Prepass Considered Irrelevant by Morgan McGuire

GPU Pro 5 - Object-order Ray Tracing for Fully Dynamic Scenes

Our article presents a method for tracing incoherent secondary rays that integrates well with existing rasterization-based real-time rendering engines. In particular, it requires only linear scene access and supports fully dynamic scene geometry comprised only of triangle soups. All parts of the method that work with scene geometry are implemented in the standard graphics pipeline. Thus, the ability to generate, transform and animate geometry via shaders is fully retained. Moreover, shading can share the same material system that is used in a deferred shading rasterizer.

Consequently, our method allows for a unified rendering architecture that supports both rasterization and ray tracing. The more expensive ray tracing can easily be restricted to complex phenomena that require it, such as reflections and refractions on arbitrarily shaped scene geometry. Other rendering steps can be dealt with using rasterization as usual.

While our method of ray tracing works object-order, we still manage to limit the number of intersection tests that have to be performed: Using a coarse conservative voxel approximation of the scene, we first estimate potential hit points for each ray and subsequently only test triangles with rays that have potential hits nearby. Furthermore, we introduce a multi-pass intersection testing scheme that allows for early termination of rays on first hit (analogous to Z-culling optimizations), which is a recurring problem in methods that deviate from standard (depth-first) traversal of spatial data structures.

Our GPU Pro 5 article provides a detailed description of our ray tracing method as well as a sample implementation that demonstrates the tracing of incoherent secondary rays with reflection rays. Ray tracing is implemented in the context of a traditional rasterization-based deferred shading engine, everything besides reflection rays is rasterized as usual.

Tobias Zirr, Hauke Rehfeld and Carsten Dachsbacher

When a drop falls on a dry surface, our intuition tells us it...

When a drop falls on a dry surface, our intuition tells us it will splash, breaking up into many smaller droplets. Yet this is not always the case. The splashing of a droplet depends on many factors, including surface roughness, viscosity, drop size, and—strangely enough—air pressure. It turns out there is a threshold air pressure below which splashing is suppressed. Instead, a drop will spread and flatten without breaking up, as shown in the video above. For contrast, here is the same fluid splashing at atmospheric pressure. This splash suppression at low pressures is observed for both low and high viscosity fluids. Although the mechanism by which gases affect splashing is still under investigation, measurements show that no significant air layer exists under the spreading droplet except near the very edges. This suggests that the splash mechanism depends on how the spreading liquid encroaches on the surrounding gas. (Video credit: S. Nagel et al.; research credit: M. Driscoll et al.)

Pending Release

Dysis has come a long way since this time last year - and we are nearly ready for it’s public release!

The Dysis engine has been completely re-written in an attempt to make it fully compatible (and controllable) on a much wider variety of computers. The graphics components have been completely stripped down and re-written, all operations are now openGL 3+ compliant (minus some small specific vendor hacks). This means that the base engine runs much faster on every single graphics card. The biggest improvements have been seen on the lower end cards (mostly testing against the Intel HD 3000 here - we saw a jump from 7fps in the base engine to 450fps). This doesn’t reflect the full game fps with all post-processing and units+terrain visible, but it does mean that the game can run quite a bit better on those cards with everything visible.

The shading components in Dysis are completely new - they feature new smooth shadows that scale nicely and are actually more efficient than the old shading method. The biggest news is that Dysis will release with an exciting set of sandbox tools and multiplayer! We are considering this an alpha release - since the main campaign still isn’t in place, and there are many more assets to come (weekly) - however, the sandbox tools, multiplayer and steam interaction should provide hours of playtime!

Let’s take a quick look at the sandbox process flow:

Character Builder

We’ll start here so that we can build some characters first and then place them into a world we build. The character builder gives you access to almost all of the models used in Dysis (anything previous used in any unit, structure or piece of foliage), plus all of the textures associated with them. You can mix and match textures (though you’ll notice that only textures designed to fit a model will look right - but you could tweak a texture and apply that to a model too).

We’ll start out with a robot chassis and add joints from there.

You can add particle effects (flares, fire, smoke, etc.) here, which will make the models look cooler, but are purely aesthetic (users with low end cards may have to turn these effects off during the actual game - so keep this in mind when building your models).

Once you’ve completely built you model, you can designate it’s design to be a structure, unit or piece of foliage. If it’s foliage, it’ll be easily placed on the maps, so I recommend keeping the model simple. Structures are going to be pretty simple too - however you’ll notice they have two animation slots - one for standby, and another for “working”. This is what they will be doing when they are producing units or doing research. Units are the most complicated - they need a whole set of animations, plus they need to designate which structure is capable of building them, and what other unit (if any) sits before them in the tech tree. Previous units and structures you have made will become available to you if you decide to make any.

As Dysis develops, the tech tree and current units and structures may change - so keep in mind that you may need to change your custom characters to keep them working (hopefully we can keep compatibility issues to a minimum though!).

Currently the animation and tech tree features are unfinished in the character builder - they are in progress and need to be finished before release, and then the character builder will be ready.

World Generator

Dysis maps will be available in two forms - randomly generated, and static. The static maps will start from randomly generated (however a user could completely strip down the world and redesign in - there will be a bare generator to be used just for that purpose). The world generator will allow you to design landscapes on a grand scale, determine how mountainous, and what materials you want to see spread throughout the map. You’ll be able to assign foliage to materials, however you won’t be able to choose exactly where it generates.

Everything built in the world generator is random - when you save a generator, the parameters are saved, not the specific map. When you launch a game, you’ll be able to seed the generator (so that you can play the same map again if you want), however it is encouraged to try out new and random seeds. If you design a generator you like a lot, and find a seed that looks really good - that’s what the terrain sculpting tool is for.

The world generator is nearly feature complete, it is missing only a few buttons/graphics to control material density, placement, and restrictions for materials based on height - however these features already exist in code underneath - so I count this as 95% complete.

Terrain Sculpting

This tool lets you tweak randomly generated maps (you will start from a generator, pick a map size, and then start editing), alter the foliage and place your own foliage in specific places. This is effectively a 3D voxel painting tool that also allows for model placing. You will also have control over where the bases go (otherwise placed in the corners based on the number of players in the game), however this feature hasn’t been implemented yet.

There are a bunch of brushes/tools already part of the terrain sculpting, however we would like to finish up some smoothing tools, copy+paste and potentially undo/redo (though we don’t wan’t to get too bogged down making this perfect when there are more pressing matters).

AI Scripting

This feature is currently least complete and doesn’t have an interface yet. The idea here is that each type of unit will have several command protocols - it’s defaults, and it’s set given by you (if you so choose). You’ll be able to set a hierarchy of command protocols, including “finish command”, “evade fire”, “seek and destroy hostiles” and many other things here.

We consider this a very promising detail in Dysis, as this represents what robots may be like in real life. For example, if you have a working robot (gather resources) - in many games, if that unit comes under fire it is likely to be dumb and continue its job and be destroyed. In Dysis, you could set “finish and command” to be the highest command protocol, and the robot would do just that, but you could also tell working bots that “evade fire” is a higher priority, thus telling bots that come into range of hostiles to move away until they are safe (eventually returning to their job).

The base for this code is mostly in the path-planning, which is already integrated into the Dysis code. We believe that micro playing shouldn’t be difficult - you can give your bots a set of priorities and then an instruction, and trust it to carry out your command - hopefully :)

Multiplayer

Dysis has Steam integration! We have lobby creation, searching and matchmaking through Steam - but we are also working on matching that performance with the ability to play over IP/LAN. To keep multiplayer code smooth, we are trying to match (as much as possible) how Steam handles their network traffic and do that same. Dysis contains router hole-punching technology, allowing you to (hopefully) play without fiddling with too many router settings (server will most likely need to open a port to host). Since Dysis contains both RTS and FPS aspects, the multiplayer is definitely tricky, and we haven’t finished coding it yet.

Most (all?) FPS games run UDP network code, which allows for dropped packets, and packets to come in out of order - there are special techniques used to count packets and to ensure that the game plays fair on every computer - but this doesn’t work so well for an RTS game with many units (if a packet is dropped, it changes the entire game state). We therefore are looking to run both TCP and UDP simultaneously, syncing them to allow players to quickly run in with their first person bot, while also ensuring that the game state controlling all of the other units continues to stay the same across every computer playing. Until we are satisfied that this network code is working up to par, we have disabled FPS mode in multiplayer matches (sorry!).

Release

To get to release we would like to get the sandbox tools working really well (or at least work out any current bugs in them), and integrate player content into the game (allowing people to create units/structures/maps and share them with others). We also need to ensure that all sandbox content plays nicely in the game (and we have some in-game work to finish up on the user-interface side of things).

Each component of the sandbox (especially the AI scripting) has a couple days left of work left to be done on it, and the content integration will likely also take a few days. The user interface and some additional network code will likely also take a few days to finish up. At that point, Dysis will be pushed to Steam and I will request evaluation and release on that platform. I will also request keys on Steam, and push the game up to Desura.

Once the game is on Desura, I will issue an announcement, and make sure to send out any keys to people that don’t already have them. I believe I can generate special beta keys for steam (potentially even bypassing the Valve internal approving stage) - giving quick access to the game there (and achievements!). Once I release keys, I will discuss how to gain access to nightly updates, and weekly updates will happen on more secure code (nightly stuff will likely be buggy - but you can opt in/out at will). I also plan on holding polls on the Dysis forums to determine where people want to see the next set of improvements. I will build a quick dev map on the website in the next couple weeks, to show what I’m planning.

All in all, I would like to have a release version on Steam (and of course Desura) before the end of the month. I know that this has been a long time coming, and last year was a slow and hard year - however Dysis has come a huge distance, and I’m excited to say that it is nearly ready for prime time!

A Quarter Century of Tcl

Custom Vector Allocation

(Number 6 in a series of posts about Vectors and Vector based containers.)

A few posts back I talked about the idea of 'rolling your own' STL-style vector class, based on my experiences with this at PathEngine.

In that original post and these two follow-ups I talked about the general approach and also some specific performance tweaks that actually helped in practice for our vector use cases.

I haven't talked about custom memory allocation yet, however. This is something that's been cited in a number of places as a key reason for switching away from std::vector so I'll come back now and look at the approach we took for this (which is pretty simple, but nonstandard, and also pre C++11), and assess some of the implications of using this kind of non-standard approach.

I approach this from the point of view of a custom vector implementation, but I'll be talking about some issues with memory customisation that also apply more generally.

Why custom allocation?

In many situations it's fine for vectors (and other containers) to just use the same default memory allocation method as the rest of your code, and this is definitely the simplest approach.

(The example vector code I posted previously used malloc() and free(), but works equally well with global operator new and delete.)

But vectors can do a lot of memory allocation, and memory allocation can be expensive, and it's not uncommon for memory allocation operations to turn up in profiling as the most significant cost of vector based code. Custom memory allocation approaches can help resolve this.

And some other good reasons for hooking into and customising allocations can be the need to avoid memory fragmentation or to track memory statistics.

For these reasons generalised memory customisation is an important customer requirement for our SDK code in general, and then by extension for the vector containers used by this code.

Custom allocation in std::vector

The STL provides a mechanism for hooking into the container allocation calls (such as vector buffer allocations) through allocators, with vector constructors accepting an allocator argument for this purpose.

I won't attempt a general introduction to STL allocators, but there's a load of material about this on the web. See, for example, this article on Dr Dobbs, which includes some example use cases for allocators. (Bear in mind that this is pre C++11, however. I didn't see any similarly targeted overview posts for using allocators post C++11.)

A non-standard approach

We actually added the possibility to customise memory allocation in our vectors some time after switching to a custom vector implementation. (This was around mid-2012. Before that PathEngine's memory customisation hooks worked by overriding global new and delete, and required dll linkage if you wanted to manage PathEngine memory allocations separately from allocations in the main game code.)

We've generally tried to keep our custom vector as similar as possible to std::vector, in order to avoid issues with unexpected behaviour (since a lot of people know how std::vector works), and to ensure that code can be easily switched between std::vector and our custom vector. When it came to memory allocation, however, we chose a significantly different (and definitely non-standard) approach, because in practice a lot of vector code doesn't actually use allocators (or else just sets allocators in a constructor), because we already had a custom vector class in place, and because I just don't like STL allocators!

Other game developers

A lot of other game developers have a similar opinion of STL allocators, and for many this is actually then also a key factor in a decision to switch to custom container classes.

For example, issues with the design of STL allocators are quoted as one of the main reasons for the creation of the EASTL, a set of STL replacement classes, by Electronic Arts. From the EASTL paper:

Among game developers the most fundamental weakness is the std allocator design, and it is this weakness that was the largest contributing factor to the creation of EASTL.

And I've heard similar things from other developers. For example, in this blog post about the Bitsquid approach to allocators Niklas Frykholm says:

If it weren't for the allocator interface I could almost use STL. Almost.

Let's have a look at some of the reasons for this distaste!

Problems with STL allocators

We'll look at the situation prior to C++11, first of all, and the historical basis for switching to an alternative mechanism.

A lot of problems with STL allocators come out of confusion in the initial design. According to Alexander Stepanov (primary designer and implementer of the STL) the custom allocator mechanism was invented to deal with a specific issue with Intel memory architecture. (Do you remember near and far pointers? If not, consider yourself lucky I guess!) From this interview with Alexander:

Question: How did allocators come into STL? What do you think of them?

Answer: I invented allocators to deal with Intel's memory architecture. They are not such a bad ideas in theory - having a layer that encapsulates all memory stuff: pointers, references, ptrdiff_t, size_t. Unfortunately they cannot work in practice.

And it seems like this original design intention was also only partially executed. From the wikipedia entry for allocators:

They were originally intended as a means to make the library more flexible and independent of the underlying memory model, allowing programmers to utilize custom pointer and reference types with the library. However, in the process of adopting STL into the C++ standard, the C++ standardization committee realized that a complete abstraction of the memory model would incur unacceptable performance penalties. To remedy this, the requirements of allocators were made more restrictive. As a result, the level of customization provided by allocators is more limited than was originally envisioned by Stepanov.

and, further down:

While Stepanov had originally intended allocators to completely encapsulate the memory model, the standards committee realized that this approach would lead to unacceptable efficiency degradations. To remedy this, additional wording was added to the allocator requirements. In particular, container implementations may assume that the allocator's type definitions for pointers and related integral types are equivalent to those provided by the default allocator, and that all instances of a given allocator type always compare equal, effectively contradicting the original design goals for allocators and limiting the usefulness of allocators that carry state.

Some of the key problems with STL allocators (historically) are then:

- Unnecessary complexity, with some boiler plate stuff required for features that are not actually used

- A limitation that allocators cannot have internal state ('all instances of a given allocator type are required to be interchangeable and always compare equal to each other')

- The fact the allocator type is included in container type (with changes to allocator type changing the type of the container)

There are some changes to this situation with C++11, as we'll see below, but this certainly helps explain why a lot of people have chosen to avoid the STL allocator mechanism, historically!

Virtual allocator interface

So we decided to avoid STL allocators, and use a non-standard approach.

The approach we use is based on a virtual allocator interface, and avoids the need to specify allocator type as a template parameter.

This is quite similar to the setup for allocators in the BitSquid engine, as described by Niklas here (as linked above, it's probably worth reading that post if you didn't see this already, as I'll try to avoid repeating the various points he discussed there).

A basic allocator interface can then be defined as follows:

class iAllocator

{

public:

virtual ~iAllocator() {}

virtual void* allocate(tUnsigned32 size) = 0;

virtual void deallocate(void* ptr) = 0;

// helper

template <class T> void

allocate_Array(tUnsigned32 arraySize, T*& result)

{

result = static_cast<T*>(allocate(sizeof(T) * arraySize));

}

};

The allocate_Array() method is for convenience, concrete allocator objects just need to implement allocate() and free().

We can store a pointer to iAllocator in our vector, and replace the direct calls to malloc() and free() with virtual function calls, as follows:

static T*

allocate(size_type size)

{

T* allocated;

_allocator->allocate_Array(size, allocated);

return allocated;

}

void

reallocate(size_type newCapacity)

{

T* newData;

_allocator->allocate_Array(newCapacity, newData);

copyRange(_data, _data + _size, newData);

deleteRange(_data, _data + _size);

_allocator->deallocate(_data);

_data = newData;

_capacity = newCapacity;

}

These virtual function calls potentially add some overhead to allocation and deallocation. It's worth being quite careful about this kind of virtual function call overhead, but in practice it seems that the overhead is not significant here. Virtual function call overhead is often all about cache misses and, perhaps because there are often just a small number of actual allocator instance active, with allocations tending to be grouped by allocator, this just isn't such an issue here.

We use a simple raw pointer for the allocator reference. Maybe a smart pointer type could be used (for better modern C++ style and to increase safety), but we usually want to control allocator lifetime quite explicitly, so we're basically just careful about this.

Allocators can be passed in to each vector constructor, or if omitted will default to a 'global allocator' (which adds a bit of extra linkage to our vector header):

cVector(size_type size, const T& fillWith,

iAllocator& allocator = GlobalAllocator()

)

{

_data = 0;

_allocator = &allocator;

_size = size;

_capacity = size;

if(size)

{

_allocator->allocate_Array(_capacity, _data);

constructRange(_data, _data + size, fillWith);

}

}

Here's an example concrete allocator implementation:

class cMallocAllocator : public iAllocator

{

public:

void*

allocate(tUnsigned32 size)

{

assert(size);

return malloc(static_cast<size_t>(size));

}

void

deallocate(void* ptr)

{

free(ptr);

}

};

(Note that you normally can call malloc() with zero size, but this is something that we disallow for PathEngine allocators.)

And this can be passed in to vector construction as follows:

cMallocAllocator allocator;

cVector<int> v(10, 0, allocator);

Swapping vectors

That's pretty much it, but there's one tricky case to look out for.

Specifically, what should happen in our vector swap() method? Let's take a small diversion to see why there might be a problem.

Consider some code that takes a non-const reference to vector, and 'swaps a vector out' as a way of returning a set of values in the vector without the need to heap allocate the vector object itself:

class cVectorBuilder

{

cVector<int> _v;

public:

//.... construction and other building methods

void takeResult(cVector<int>& result); // swaps _v into result

};

So this code doesn't care about allocators, and just wants to work with a vector of a given type. And maybe there is some other code that uses this, as follows:

void BuildData(/*some input params*/, cVector& result)

{

//.... construct a cVectorBuilder and call a bunch of build methods

builder.takeResult(result);

}

Now there's no indication that there's going to be a swap() involved, but the result vector will end up using the global allocator, and this can potentially cause some surprises in the calling code:

cVector v(someSpecialAllocator); BuildData(/*input params*/, v); // lost our allocator assignment! // v now uses the global allocator

Nobody's really doing anything wrong here (although this isn't really the modern C++ way to do things). This is really a fundamental problem arising from the possibility to swap vectors with different allocators, and there are other situations where this can come up.

You can find some discussion about the possibilities for implementing vector swap with 'unequal allocators' here. We basically choose option 1, which is to simply declare it illegal to call swap with vectors with different allocators. So we just add an assert in our vector swap method that the two allocator pointers are equal.

In our case this works out fine, since this doesn't happen so much in practice, because cases where this does happen are caught directly by the assertion, and because it's generally straightforward to modify the relevant code paths to resolve the issue.

Comparison with std::vector, is this necessary/better??

Ok, so I've outlined the approach we take for custom allocation in our vector class.

This all works out quite nicely for us. It's straightforward to implement and to use, and consistent with the custom allocators we use more generally in PathEngine. And we already had our custom vector in place when we came to implement this, so this wasn't part of the decision about whether or not to switch to a custom vector implementation. But it's interesting, nevertheless, to compare this approach with the standard allocator mechanism provided by std::vector.

My original 'roll-your-own vector' blog post was quite controversial. There were a lot of responses strongly against the idea of implementing a custom vector, but a lot of other responses (often from the game development industry side) saying something like 'yes, we do that, but we do some detail differently', and I know that this kind of customisation is not uncommon in the industry.

These two different viewpoints makes it worthwhile to explore this question in a bit more detail, then, I think.

I already discussed the potential pitfalls of switching to a custom vector implementation in the original 'roll-your-own vector' blog post, so lets look at the potential benefits of switching to a custom allocator mechanism.

Broadly speaking, this comes down to three key points:

- Interface complexity

- Stateful allocator support

- Possibilities for further customisation and memory optimisation

Interface complexity

If we look at an example allocator implementation for each setup we can see that there's a significant difference in the amount of code required. The following code is taken from my previous post, and was used to fill allocated memory with non zero values, to check for zero initialisation:

// STL allocator version

template <class T>

class cNonZeroedAllocator

{

public:

typedef T value_type;

typedef value_type* pointer;

typedef const value_type* const_pointer;

typedef value_type& reference;

typedef const value_type& const_reference;

typedef typename std::size_t size_type;

typedef std::ptrdiff_t difference_type;

template <class tTarget>

struct rebind

{

typedef cNonZeroedAllocator<tTarget> other;

};

cNonZeroedAllocator() {}

~cNonZeroedAllocator() {}

template <class T2>

cNonZeroedAllocator(cNonZeroedAllocator<T2> const&)

{

}

pointer

address(reference ref)

{

return &ref;

}

const_pointer

address(const_reference ref)

{

return &ref;

}

pointer

allocate(size_type count, const void* = 0)

{

size_type byteSize = count * sizeof(T);

void* result = malloc(byteSize);

signed char* asCharPtr;

asCharPtr = reinterpret_cast<signed char*>(result);

for(size_type i = 0; i != byteSize; ++i)

{

asCharPtr[i] = -1;

}

return reinterpret_cast<pointer>(result);

}

void deallocate(pointer ptr, size_type)

{

free(ptr);

}

size_type

max_size() const

{

return 0xffffffffUL / sizeof(T);

}

void

construct(pointer ptr, const T& t)

{

new(ptr) T(t);

}

void

destroy(pointer ptr)

{

ptr->~T();

}

template <class T2> bool

operator==(cNonZeroedAllocator<T2> const&) const

{

return true;

}

template <class T2> bool

operator!=(cNonZeroedAllocator<T2> const&) const

{

return false;

}

};

But with our custom allocator interface this can now be implemented as follows:

// custom allocator version

class cNonZeroedAllocator : public iAllocator

{

public:

void*

allocate(tUnsigned32 size)

{

void* result = malloc(static_cast<size_t>(size));

signed char* asCharPtr;

asCharPtr = reinterpret_cast<signed char*>(result);

for(tUnsigned32 i = 0; i != size; ++i)

{

asCharPtr[i] = -1;

}

return result;

}

void

deallocate(void* ptr)

{

free(ptr);

}

};

As we saw previously a lot of stuff in the STL allocator relates to some obsolete design decisions, and is unlikely to actually be used in practice. The custom allocator interface also completely abstracts out the concept of constructed object type, and works only in terms of actual memory sizes and pointers, which seems more natural and whilst doing everything we need for the allocator use cases in PathEngine.

For me this is one advantage of the custom allocation setup, then, although probably not something that would by itself justify switching to a custom vector.

If you use allocators that depend on customisation of the other parts of the STL allocator interface (other than for data alignment) please let me know in the comments thread. I'm quite interested to hear about this! (There's some discussion about data alignment customisation below.)

Stateful allocator requirement

Stateful allocator support is a specific customer requirement for PathEngine.

Clients need to be able to set custom allocation hooks and have all allocations made by the SDK (including vector buffer allocations) routed to custom client-side allocation code. Furthermore, multiple allocation hooks can be supplied, with the actual allocation strategy selected depending on the actual local execution context.

It's not feasible to supply allocation context to all of our vector based code as a template parameter, and so we need our vector objects to support stateful allocators.

Stateful allocators with the virtual allocator interface

Stateful allocators are straightforward with our custom allocator setup. Vectors can be assigned different concrete allocator implementations and these concrete allocator implementations can include internal state, without code that works on the vectors needing to know anything about these details.

Stateful allocators with the STL

As discussed earlier, internal allocator state is something that was specifically forbidden by the original STL allocator specification. This is something that has been revisited in C++11, however, and stateful allocators are now explicitly supported, but it also looks like it's possible to use stateful allocators in practice with many pre-C++11 compile environments.

The reasons for disallowing stateful allocators relate to two specific problem situations:

- Splicing nodes between linked lists with different allocation strategies

- Swapping vectors with different allocation strategies

C++11 addresses these issues with allocator traits, which specify what to do with allocators in problem cases, with stateful allocators then explicitly supported. This stackoverflow answer discusses what happens, specifically, with C++11, in the vector swap case.

With PathEngine we want to be able to support clients with different compilation environments, and it's an advantage not to require C++11 support. But according to this stackoverflow answer, you can also actually get away with using stateful allocators in most cases, without explicit C++11 support, as long as you avoid these problem cases.

Since we already prohibit the vector problem case (swap with unequal allocators), that means that we probably can actually implement our stateful allocator requirement with std::vector and STL allocators in practice, without requiring C++11 support.

There's just one proviso, with or without C++11 support, due to allowances for legacy compiler behaviour in allocator traits. Specifically, it doesn't look like we can get the same assertion behaviour in vector swap. If propagate_on_container_swap::value is set to false for either allocator then the result is 'undefined behaviour', so this could just swap the allocators silently, and we'd have to be quite careful about these kinds of problem cases!

Building on stateful allocators to address other issues

If you can use stateful allocators with the STL then this changes things a bit. A lot of things become possible just by adding suitable internal state to standard STL allocator implementations. But you can also now use this allocator internal state as a kind of bootstrap to work around other issues with STL allocators.

The trick is wrap up the same kind of virtual allocator interface setup we use in PathEngine in an STL allocator wrapper class. You could do this (for example) by putting a pointer to our iAllocator interface inside an STL allocator class (as internal state), and then forward the actual allocation and deallocation calls as virtual function calls through this pointer.

So, at the cost of another layer of complexity (which can be mostly hidden from the main application code), it should now be possible to:

- remove unnecessary boiler plate from concrete allocator implementations (since these now just implement iAllocator), and

- use different concrete allocator types without changing the actual vector type.

Although I'm still not keen on STL allocators, and prefer the direct simplicity of our custom allocator setup as opposed to covering up the mess of the STL allocator interface in this way, I have to admit that this does effectively remove two of the key benefits of our custom allocator setup. Let's move on to the third point, then!

Refer to the bloomberg allocator model for one example of this kind of setup in practice (and see also this presentation about bloomberg allocators in the context C++11 allocator changes).

Memory optimisation

The other potential benefit of custom allocation over STL allocators is basically the possibility to mess around with the allocation interface.

With STL allocators we're restricted to using the allocate() and deallocate() methods exactly as defined in the original allocator specification. But with our custom allocator we're basically free to mess with these method definitions (in consultation with our clients!), or to add additional methods, and generally change the interface to better suit our clients needs.

There is some discussion of this issue in this proposal for improving STL allocators, which talks about ways in which the memory allocation interface provided by STL allocators can be sub-optimal.

Some customisations implemented in the Bitsquid allocators are:

- an 'align' parameter for the allocation method, and

- a query for the size of allocated blocks

PathEngine allocators don't include either of these customisations, although this is stuff that we can add quite easily if required by our clients. Our allocator does include the following extra methods:

virtual void*

expand(

void* oldPtr,

tUnsigned32 oldSize,

tUnsigned32 oldSize_Used,

tUnsigned32 newSize

) = 0;

// helper

template <class T> void

expand_Array(

T*& ptr,

tUnsigned32 oldArraySize,

tUnsigned32 oldArraySize_Used,

tUnsigned32 newArraySize

)

{

ptr = static_cast<T*>(expand(

ptr,

sizeof(T) * oldArraySize,

sizeof(T) * oldArraySize_Used,

sizeof(T) * newArraySize

));

}

What this does, essentially, is to provide a way for concrete allocator classes to use the realloc() system call, or similar memory allocation functionality in a custom head, if this is desired.

As before, the expand_Array() method is there for convenience, and concrete classes only need to implement the expand() method. This takes a pointer to an existing memory block, and can either add space to the end of this existing block (if possible), or allocate a larger block somewhere else and move existing data to that new location (based on the oldSize_Used parameter).

Implementing expand()

A couple of example implementations for expand() are as follows:

// in cMallocAllocator, using realloc()

void*

expand(

void* oldPtr,

tUnsigned32 oldSize,

tUnsigned32 oldSize_Used,

tUnsigned32 newSize

)

{

assert(oldPtr);

assert(oldSize);

assert(oldSize_Used <= oldSize);

assert(newSize > oldSize);

return realloc(oldPtr, static_cast<size_t>(newSize));

}

// as allocate and move

void*

expand(

void* oldPtr,

tUnsigned32 oldSize,

tUnsigned32 oldSize_Used,

tUnsigned32 newSize

)

{

assert(oldPtr);

assert(oldSize);

assert(oldSize_Used <= oldSize);

assert(newSize > oldSize);

void* newPtr = allocate(newSize);

memcpy(newPtr, oldPtr, static_cast<size_t>(oldSize_Used));

deallocate(oldPtr);

return newPtr;

}

So this can either call through directly to something like realloc(), or emulate realloc() with a sequence of allocation, memory copy and deallocation operations.

Benchmarking with realloc()

With this expand() method included in our allocator it's pretty straightforward to update our custom vector to use realloc(), and it's easy to see how this can potentially optimise memory use, but does this actually make a difference in practice?

I tried some benchmarking and it turns out that this depends very much on the actual memory heap implementation in use.

I tested this first of all with the following simple benchmark:

template <class tVector> static void

PushBackBenchmark(tVector& target)

{

const int pattern[] = {0,1,2,3,4,5,6,7};

const int patternLength = sizeof(pattern) / sizeof(*pattern);

const int iterations = 10000000;

tSigned32 patternI = 0;

for(tSigned32 i = 0; i != iterations; ++i)

{

target.push_back(pattern[patternI]);

++patternI;

if(patternI == patternLength)

{

patternI = 0;

}

}

}

(Wrapped up in some code for timing over a bunch of iterations, with result checking to avoid the push_back being optimised out.)

This is obviously very far from a real useage situation, but the results were quite interesting:

| OS | container type | time |

|---|---|---|

| Linux | std::vector | 0.0579 seconds |

| Linux | cVector without realloc | 0.0280 seconds |

| Linux | cVector with realloc | 0.0236 seconds |

| Windows | std::vector | 0.0583 seconds |

| Windows | cVector without realloc | 0.0367 seconds |

| Windows | cVector with realloc | 0.0367 seconds |

So the first thing that stands out from these results is that using realloc() doesn't make any significant difference on windows. I double checked this, and while expand() is definitely avoiding memory copies a significant proportion of the time, this is either not significant in the timings, or memory copy savings are being outweighed by some extra costs in the realloc() call. Maybe realloc() is implemented badly on Windows, or maybe the memory heap on Windows is optimised for more common allocation scenarios at the expense of realloc(), I don't know. A quick google search shows that other people have seen similar issues.

Apart from that it looks like realloc() can make a significant performance difference, on some platforms (or depending on the memory heap being used). I did some extra testing, and it looks like we're getting diminishing returns after some of the other performance tweaks we made in our custom vector, specifically the tweaks to increase capacity after the first push_back, and the capacity multiplier tweak. With these tweaks backed out:

| OS | container type | time |

|---|---|---|

| Linux | cVector without realloc, no tweaks | 0.0532 seconds |

| Linux | cVector with realloc, no tweaks | 0.0235 seconds |

So, for this specific benchmark, using realloc() is very significant, and even avoids the need for those other performance tweaks.

Slightly more involved benchmark

The benchmark above is really basic, however, and certainly isn't a good general benchmark for vector memory use. In fact, with realloc(), there is only actually ever one single allocation made, which is then naturally free to expand through the available memory space!

A similar benchmark is discussed in this stackoverflow question, and in that case the benefits seemed to reduce significantly with more than one vector in use. I hacked the benchmark a bit to see what this does for us:

template <class tVector> static void

PushBackBenchmark_TwoVectors(tVector& target1, tVector& target2)

{

const int pattern[] = {0,1,2,3,4,5,6,7};

const int patternLength = sizeof(pattern) / sizeof(*pattern);

const int iterations = 10000000;

tSigned32 patternI = 0;

for(tSigned32 i = 0; i != iterations; ++i)

{

target1.push_back(pattern[patternI]);

target2.push_back(pattern[patternI]);

++patternI;

if(patternI == patternLength)

{

patternI = 0;

}

}

}

template <class tVector> static void

PushBackBenchmark_ThreeVectors(tVector& target1, tVector& target2, tVector& target3)

{

const int pattern[] = {0,1,2,3,4,5,6,7};

const int patternLength = sizeof(pattern) / sizeof(*pattern);

const int iterations = 10000000;

tSigned32 patternI = 0;

for(tSigned32 i = 0; i != iterations; ++i)

{

target1.push_back(pattern[patternI]);

target2.push_back(pattern[patternI]);

target3.push_back(pattern[patternI]);

++patternI;

if(patternI == patternLength)

{

patternI = 0;

}

}

}

With PushBackBenchmark_TwoVectors():

| OS | container type | time |

|---|---|---|

| Linux | std::vector | 0.0860 seconds |

| Linux | cVector without realloc | 0.0721 seconds |

| Linux | cVector with realloc | 0.0495 seconds |

With PushBackBenchmark_ThreeVectors():