(Season of Skulls is the third book in the New Management trilogy, following on from Dead Lies Dreaming and Quantum of Nightmares. It's an ongoing story ...)

It was a bright, cold morning in Hyde Park, and a detachment of

Household Cavalry was riding along North Carriage Drive in parade dress,

escorting a tumbril of condemned prisoners to Marble Arch.

Imp—Jeremy Starkey, also known as the Impresario—paused beside the

Peter Pan statue to watch. A tall, skinny man in his early twenties,

with swept-back hair and a narrow, intense face, Imp might have been a

grown-up Pan himself: a Peter Pan who'd lost his wings and grown up hard

and cynical under the aegis of the New Management. He tugged his scarf

with unease, then checked his counterfeit Mickey Mouse watch. He wasn't

going to be late to the meeting with his sister and her lawyer if he

took an extra ten minutes, he decided. Nevertheless, he drew his

disreputable duster tight and hunched his shoulders. A chill wind was

blowing, as if practicing to set the cartful of fettered felons swinging

once they danced the Tyburn tango. It was 2017, yet some things in

Bloody England never changed.

Albeit not quite everything.

Cavalry soldiers in polished silver cuirasses riding huge animals

through the park were nothing new. But besides their cuirasses and

high-plumed helmets these riders wore polished steel plate that covered

them from head to foot, with wireless headsets, grenade launchers, and

quadrotor observation drones whining overhead. Their faces were blank

behind curves of bulletproof mirror glass. Their horselike steeds had

sickle-bladed claws on either side of their hooves: their heads bore

fanged maws and the front-pointing eyes of predators. Someone was

clearly concerned about rescue attempts.

Imp shuddered and looked away from the dour procession. The distant

noise of the crowd gathering around Marble Arch to watch the execution

hurt his ears. He didn't want to hear the taunts of idiot rubberneckers

who couldn't imagine that one day it might be them.

"Not my circus, not my monkeys," Imp muttered under his breath. Not my

holiday, not my hanging, he meant. He brought his roll-up to his lips

and began to inhale, but the joint had burned out, and besides, it was

down to the roach. He walked across to the dog-waste bin and dumped it,

then continued on his way.

It wouldn't do to keep Eve and her solicitor waiting, even though he

feared the coming meeting almost as much as his own personal execution.

* * *

There's a fine line between love and hate, Eve reflected, as she watched

her brother explain his mistake to the solicitor. Will she testify

against me if I murder him? Eve asked herself. Is provocation a

defense?

Like her brother, Eve was tall and lanky, but there the resemblance

ended. She'd carefully curated her image as a blue-eyed ice queen in a

designer suit. A penchant for sudden-death downsizings and the warm and

friendly disposition of an angry wasp went with the territory. It had

been utterly essential while she'd been Rupert's executive assistant.

But now she wondered if the weight of armor she wore was worth the cost:

even the lawyer seemed leery of her.

The solicitor cleared her throat, glanced at Eve for permission, then

addressed Imp. "Let me get this straight, Mr. Starkey. You didn't ask

your sister to confirm that she was undergoing a security clearance

background check. You did not seek professional advice before initialing

every page of the, um, 'nondisclosure agreement,' and the witness

statement attached to it. You didn't read pages two through twenty-six.

You did not ask for a translation of section thirteen, paragraphs four

through six, even though it was written in medieval Norman French. Nor

did you read section fourteen, the special license, which was drafted in

fourteenth-century Church Latin, or the codicil stating that the

contract—most of which you didn't read—was subject to adjudication

under the laws of Skaro—an island in the English Channel with its own

unique legal code—and that by signing you ceded your right to redress

in any other jurisdiction. At no point did the messenger offer you any

payment or inducement for your signature. Is that right?"

Imp nodded sheepishly. "I was very stoned. We'd just buried Dad."

Eve's cheek twitched, but her expression remained as coldly impassive as

the north face of a glacier.

The solicitor clearly found it a struggle to maintain her facade of

professional sympathy. "Well then. To summarize, you signed an affidavit

certifying that you were the oldest living male relative of your sister,

Evelyn Starkey"—the lawyer sent Eve a tiny nod that might have passed

for feminine sympathy—"and signed a binding agreement to marriage by

proxy, solemnized under special license as permitted by the Barony of

Skaro, where the messenger acted as the representative of the groom,

Lord—"

"Baron," Eve corrected automatically, then bit her tongue.

"Baron Rupert de Montfort Bigge, Lord of Skaro." The solicitor sent Eve

another coded look. "Is that your understanding, too, Ms. Starkey? Or

should that be Mrs. de Montfort Bigge?"

Fuck. A very expensive crunch announced the demise of the Montegrappa

Extra Otto Sapphirus Eve held clenched in her fist. She dropped the

wrecked fountain pen on her blotter and flexed her aching fingers. The

writing instrument, carved by hand from solid lapis lazuli, was one of

the most expensive pens on sale anywhere: it had come from Rupert's

desk. The body might be repairable but the converter, siphon, and nib

were a write-off. Emerald ink bled across the absorbent paper like

green-eyed anger.

"I go by Starkey," she said, ruthlessly strangling a scream of rage in

its crib. "So, what are my prospects for an annulment?"

The solicitor switched off her voice recorder and restacked the papers.

"I'll need to do some research, I'm afraid. Skaroese law is a very

esoteric speciality and I can't offer you a professional opinion without

further work, but this is my supposition: in general a proxy marriage

contracted in a jurisdiction where it is legal—the lex loci

celebrationis—is recognized as binding in England and Wales. Assuming

this contract is properly drafted—and there would have been no point

in obtaining your brother's signature if it was not—an annulment would

have to be carried out under that legal system, which means . . ." she

trailed off, side-eying Eve's brother.

Imp looked up, his expression hangdog. Unlike any canine, he knew exactly

what he'd done wrong.

"Jeremy." Eve pointed at the door. "Scram."

"Aw—" Whatever protest he'd been forming died unvoiced when he looked

at her: Eve's expression was deathly. He uncoiled from his visitor's

chair and slouched doorward, shoulders hunched. "I'll just be in the

staff break room."

Eve waited for the door to shut. "Finally." She congratulated herself

for her restraint in not strangling him as she rubbed her forehead,

heedless of her foundation. The viciously tight bun she'd put her hair

into in anticipation of this confrontation was giving her a headache.

"How bad is it? Really?"

"Well." The solicitor slid her folio sideways, out of Eve's direct line

of sight. "If your hus— if Baron de Montfort Bigge is dead, and if you

can obtain a death certificate, then you're off the hook. Once you

obtain a death certificate it's all done and dusted and you can remarry

if that's what you want to do."

"Assume he's not dead, just missing. No body, but no proof he's alive

either."

"Then you need to either provide proof that he died or run down the

clock. You can apply for a declaration of presumed death after seven

years in the UK, and Skaroese law probably says the same—I will

confirm that later—so, six years and nine months from now. In the

meantime, if he's missing his continued existence is not an impediment

to you unless you wish to marry someone else—" The solicitor raised

her eyebrow. "Or is it?"

Eve's cheek twitched again. "The law takes no account of magic. Or

aliens and time travel, for that matter."

"Magic—" The solicitor was momentarily nonplussed. Eve hoped she

wasn't one of the materialist holdouts. The arrival of the New

Management (not to mention an elven armored brigade rampaging through

the Yorkshire Dales, vampires taking their seats in the House of Lords,

and superheroes breaking the sound barrier as they sped to intercept

airliners) had sent many people into reality-denying madness. They

called the casualties of the Alfär invasion crisis actors and used

elaborate conspiracy theories to explain the Prime Minister's penumbral

darkling and appetite for human souls. It was all a Russian

disinformation scheme, a viral pandemic that induced delirious

hallucinations, or a conspiracy of (((cosmopolitans))). "Seriously?"

"Contracts have implications for ritual magic." Eve let her smile slip,

allowing her feral desperation to shine through. "If he's not dead then

as long as the marriage is legally binding, I'm—" She shook her head.

"Let's not go there."

Rupert had imposed a geas on her—an obedience compulsion—as one of

the initial conditions of her employment. She'd thought it contemptibly

weak at the time, and he'd never tried to use it, so she'd never tried

to break it. He ensured her compliance through traditional

means—gaslighting and blackmail—and she was completely taken in by

his pantomime of sorcerous incompetence. When she finally discovered the

proxy marriage certificate after his disappearance, it became clear that

he'd known exactly what he was doing with the geas: the bumbling was

a malevolent act.

Skaroese law was based on medieval Norman law, but hadn't been updated

much since the fourteenth century. The Reformation had passed it by, and

it still embodied archaic Catholic assumptions. Skaroese marriage

recognized the archaic status of feme covert: a married woman was

legally of one flesh with her husband, a mere appendage with no more

right to own property or express an opinion in court than his dressing

table or his horse. A geas given additional strength by such a

marriage contract was only distinguishable from chattel slavery because

the rights it granted her husband were nontransferable.

Since discovering the document, Eve had awakened in a bath of cold sweat

at least three times a week, stricken by the conviction that her scheme

to rid the world of Rupert had failed.

The solicitor continued to lay out the dimensions of her prison. "As the

marriage was contracted under Skaroese law, you should apply for an

annulment in that jurisdiction." (And I can bill for more hours, Eve

mentally added, a trifle unfairly.) "You'll need to obtain a decree of

nullity from the diocese, essentially a declaration that the marriage

never existed. That'd be the diocese of Skaro, which is the first stop.

Have you spoken to your priest?"

"Ah, there might be a problem with that." Skaro had been taken over by

the Cult of the Mute Poet a generation ago. The worshippers of

Ppilimtec, god of wine and poetry, did not play well with other

religions—and Rupert was its bishop. There had been no other church on

Skaro for decades. "I might be able to get one, though." If I offer to

cover the repair bills, pay for the exorcism and reconsecration, and

fund a stipend for the priest and his bodyguards, surely the Catholic

Church would assign someone? It wasn't as if she was a believer

herself, but her mother had dragged her through baptism and confirmation

in her childhood, so no loophole there. As for the bodyguards, Ppilimtec

was a jealous deity. Annulment was going to be expensive, but as she had

effective control of the Bigge Organization, money was the one thing she

was not short of.

"Then that brings me to another question." The solicitor paused

apprehensively. She tugged at her jacket, brushed imaginary lint from

her lapel, screwed up her courage, and asked, very quickly, "In

strictest confidence, Ms. Starkey, have you ever engaged in sexual

intercourse with Baron Skaro? Either before or after the proxy

marriage?"

Eve shook her head. "No, I never had sex with—" She paused for a

double take. "Does telephone sex count?"

"Tele—" The solicitor gave her a blank look. "Could you clarify

that?"

No, Eve thought, but her mouth answered regardless. "Rupert would

phone me at any hour of the day or night—I was his executive assistant

and PA, both hats at the same time—and treat me like a phone-sex

worker. The only way to get him to shut up was to tell him a

pornographic bedtime story until he finished wanking." She could feel

her cheeks glowing. "It was workplace sexual harassment, but I couldn't

quit the job—"

Eve felt something cold and moist on the back of her left hand. She

glanced down with carefully concealed distaste. The solicitor had laid a

hand on her wrist: the woman wore an expression of heartfelt sympathy.

"I understand completely," she said. Show me where the bad man touched

you. "I'm pretty certain the Catholic Church doesn't count coerced

telephone sex as intercourse for purposes of obtaining an annulment."

She smiled apologetically.

"In general, the three requirements for a valid marriage in canon law

are that the couple married freely and without reservation, that they

love and honor each other, and they accept children lovingly from God.

None of those apply to you. I see absolutely no way that these

papers"—she tapped her folio—"can be construed as anything other

than an attempt to bypass the intent if not the letter of the law, so I

think it ought to be possible to convince a parish priest to petition

the diocese tribunal for an annulment on your behalf. It normally takes

eighteen months or so to obtain one, with the right supporting

documents. But as I said, I need to research the minutiae of Skaroese

law." She rose. "Is that everything you wanted me to cover?"

Eve suppressed a brief impulse to bang her head on the table. Eighteen

months. Eighteen months of maximum vulnerability, eighteen months of

night terrors, eighteen months of uncertainty and paradox. "It'll have

to do," she said tightly. "If there's anything I can do to speed the

process up—anything, whatever the cost—be sure to let me know."

She stood and led the lawyer to the door of her office. "Day or night,"

she emphasized, showing her out.

* * *

Rupert de Montfort Bigge, Baron Skaro, had been missing for nearly three

months, lost in the dream roads that connected other lands, times, and

universes. He'd been trying to retrieve a cursed tome that Eve had

procured on his behalf, and creatively mislaid.

If he was still alive, Rupert would be in his late thirties but appear a

decade older. He'd chosen his grandparents well: born to a birthright of

privilege and wealth, he'd had the freedom to squander everything fate

handed him and to write it off as a learning experience.

After schooling at Eton he'd studied Philosophy, Politics, and Economics

at Oxford. While at university he'd joined the Oxford Union, a debating

society and the usual first step toward a Conservative junior minister's

post. He'd also joined other, less savory clubs, and a particularly

damning video shot at a boisterous underground dining club had surfaced

when his name was put forward for a parliamentary seat. Bestiality was a

crime, necrophilia was a crime . . . bestial necrophilia in white-tie

and tails fell into a Twilight Zone loophole that Parliament had

failed to criminalize, but the candidate selection committee

nevertheless felt it best to err on the side of caution. (Questions

about his vulnerability to blackmail had been raised.) Rejected by

politics, Rupert sulkily slouched off to the City, apparently intent on

dedicating the rest of his life to the pursuit of drugs, depravity, and

wealth beyond the dreams of avarice.

Had Rupert been just another chinless wonder with a penchant for

Bolivian nose candy, high finance, and the English vice, his subsequent

trajectory would have been undistinguished and short. But somewhere

along the way Rupert conceived a plan. The precise details were

obscure—he fully confided in no one after the Dead Pig Affair—but

this much was clear: Rupert still harbored political ambitions, but not

parliamentary ones. His new path to power centered on the secret cult he

had joined before he was sent down.

Strange faiths that practiced unspeakable rites were springing up

everywhere these days, growing in numbers and spreading like clumps of

horrifyingly poisonous toadstools as the power of magic waxed. Rupert

ascended rapidly through the priesthood of the Mute Poet, using their

sacramental rites to obtain investment guidance from his unholy patron.

And he made use of every available edge, from option trades to obsidian

sacrificial axes, to bloat his fortunes and selectively evangelize the

faith.

The Cult of the Mute Poet presented itself to the public as a somewhat

eccentric Christian sect: the Church of Saint David, patron of poets.

Its inner circle, however, worshipped Our Lord the Undying King, Saint

Ppilimtec the Tongueless, who sits at the right hand of Our Lord the

Smoking Mirror. Fifteenth-century conquistadores in the New World had

witnessed the rites performed by Nahua priests and been impressed by the

results: so they had copied their practices and applied them to their

own corrupted saints. When you recited the right phrases and made

sacrifice appropriately, things that dwelt in other realms might

listen and lend their will to your ends—even if they were not the

beings toward which your pleas were directed. And it wasn't as if the

Catholic Church wasn't syncretistic by design—it was right there in

the name—so what, if it required a little human sacrifice to energize

the power of prayer?

The Church of the Mute Poet was not the worst of the sanguinary cults

that festered beneath the aegis of the Inquisition. Most of the most

gruesome offenders were stamped out before the return of magic. In any

case, all were overshadowed by the New Management, the government of the

Black Pharaoh—N'yar Lat-Hotep—the Crawling Chaos Reborn. But the

followers of Ppilimtec the Tongueless survived, alongside certain

others. They thrived under Rupert's Machiavellian leadership, building

congregations and attracting converts, and with every victim sacrificed

and each service of worship conducted, Rupert funneled power to his

Lord.

In return he received the benefits accrued by a high priest.

But to what end?

Eve neither knew nor truly cared. She just wanted to be free of his

demands. She'd risen to a position of power almost absentmindedly,

unaware of the proxy marriage that made her his magically enslaved

minion (and, inadvertently, his heir). Discovering that as his wife or

his widow she controlled his hedge fund and web of offshore investment

vehicles was a pleasant bonus, but in truth, Eve hadn't tried to kill

him for the money.

Eve had ambitions of her own. She was bent on revenge against another

cult, the Golden Promise Ministries, who had taken the life of her

father and the mind of her mother. Unfortunately, cleaning up the mess

Rupert had left behind—his followers had been up to their eyeballs in

foul schemes—was sucking all the air out of the boardroom and leaving

Eve no time for her own plans.

Then, as if that wasn't bad enough, she'd come to the attention of very

important people.

* * *

Two weeks into the new year—four weeks after she led her security team

to Castle Skaro and interrupted her husband's followers, who had been

attempting to sacrifice a family of superpowered children in order to

summon Rupert's shade from wherever he'd been banished to—Eve received

a visit that she had been both expecting and dreading for some time.

Taking control of Rupert's business empire in his absence was one thing

(she'd already deputized for him over a period of years). But his occult

empire was another matter entirely. His followers were numerous and

murderously devoted to their Lord. He'd sent them instructions via an

after-death email delivery service: Eve dared not read her incoming

messages without first subjecting them to three layers of filtering,

just in case he tried to Renfield her from beyond the grave.

At least she'd cleaned house in the security subsidiary. She'd had to,

after a failed assassination attempt. Sergeant Gunderson had proven to

be trustworthy, although she hadn't managed to extract any useful

intelligence from Eve's would-be killer before he choked himself to

death on his own tongue. And Eve's own basement den was safe, although

she used Rupert's ostentatious luxury suite upstairs for receiving

visitors.

She was reviewing the quarterly figures from the Bigge Organization's

defense procurement subsidiary when her earpiece buzzed. "Starkey," she

snapped irritably. "I'm on do not disturb for a reason. Is the building

on fire?"

"Ma'am, you have a visitor waiting in reception," said the receptionist

who'd interrupted her spreadsheet-minded musing. Something in her tone

put Eve on notice that perhaps the building was on fire, figuratively

speaking. "She's from the House of Lords and she's asking for you by

name."

Eve shuddered: this was absolutely a metaphorical house fire. "I'll be

right with you," she said, much more politely, and hung up.

Eve secured her laptop then pulled her heels on and checked her makeup,

clearing the decks for action. Those people—the House of

Lords—didn't exactly pay house calls: they had minions to do that sort

of thing. And a mere minion wouldn't turn up unheralded and expect to

see the boss. So, while it might be something minor—a social call on

Baron Skaro's widow, for instance, an invitation to discuss Rupert's tax

returns, a request for a cup of sugar—Eve was fairly certain that it

wasn't minor. And that meant she had to get her game face on. After

all, the sequel to the curse may you live in interesting times was

may you come to the attention of very important people. And they

didn't come much more important than the New Management of Prime

Minister Fabian Everyman.

Under the New Management, the House of Lords wasn't just a sleepy

debating club for aristocratic coffin dodgers. In 2007 the House had

been reconstituted as a serious, albeit unelected, revising chamber that

handled a lot of lawmaking and committee work on behalf of the

government. Then when the New Management arrived, it had been given

executive responsibilities as well. In particular, matters of

thaumaturgy, necromancy, and demonology now fell within the ambit of

what had once been known as the Invisible College, a secretive body

originally established under the governance of the Star Chamber.

Eve was not one to overindulge in Star Wars references, but as she

entered the ground-floor lobby area she sensed a disturbance in the

Force. It felt as if a thunderstorm was about to break: the doorman, the

receptionist, and the duty security guard had frozen like rabbits before

a hungry fox, all of them paralyzed and unable to look away from a woman

of indeterminate age. Her glossy black hair was as tightly controlled as

her bearing, and her suit was vintage Chanel, Eve guessed. And at her

shoulder stood a man whom she recognized immediately as ex-military,

quite likely ex-special forces.

"You must be the Ms. Starkey I've been hearing so much about." (Eve felt

a treacherous flash of relief at her visitor's use of her real name.

Way to make a good first impression.) The woman smiled as she extended

her gloved hand. "Johnny, introduce me," she told her bodyguard.

"Yes, Du— Your Grace." Despite being a mountain of muscle in a suit of

sufficiently generous cut to conceal a small arsenal, and despite having

a vestigial Scottish burr, the bodyguard showed dangerous signs of

sentience. "Ms. Starkey, please allow me to introduce Her Grace,

Baroness Persephone Hazard. Her Grace is the Deputy Minister for

External Assets."

"Please, call me Seph," said the baroness. "I think it's long past time

we had a little talk, don't you agree?" She met Eve's eyes, smiled, and

gazed deep into her soul.

Eve's brain froze as words failed her. She had an apprehension that she

was in the presence of a great predator: perhaps a triumphant

witch-queen, or the viceroy of Tash the Inexorable in a broken Narnia

where Aslan had been crucified before the sacrificed corpses of the

Pevensie children. But then the ward she wore on a charm bracelet around

her left wrist grew hot, the sense of imminent damnation began to

recede, and she blinked, broke eye contact, and regained self-control. A

hot prickling flush of embarrassment and anger spread across her skin.

Baroness Hazard was not only a practitioner but a really strong one:

stronger than Eve, stronger than her father, stronger than Rupert. And

Persephone had just rolled Eve, spearing through her defenses before she

realized she was at risk.

But Eve was still alive. Which meant the baroness wanted something from

her, not just her skull on a spike. So the situation was probably still

salvageable? The gridlock behind her larynx broke. "Follow me," she said

hoarsely, then turned and retreated downstairs to her office. She heard

footsteps following her: the baroness's heels and Johnny's heavier

tread.

Eve's office was much smaller than Rupert's, but it was less prone to

interruption and Eve had ensured it was secure. She sat and waved at the

seats opposite. The visiting sorceress sat, while her bodyguard stepped

inside and closed the door, then took up position beside it. He stood at

parade rest, his unblinking gaze fixed on a spot a meter behind Eve's

forehead. Eve raised an eyebrow at the baroness. "Is he . . . ?"

Seph nodded. "Johnny has my back," she said with complete assurance.

"Always," he rumbled, a hint of warning coloring his voice.

Eve fought the impulse to hunch her shoulders. "I'm sure your time is

very valuable," she said, smiling but keeping her teeth hidden. "How may

I help you?"

Seph crossed her legs, smirked, and asked, "What do you know about the

Cult of the Mute Poet?"

Eve felt as if her life ought to be flashing across her vision at that

moment. It didn't happen in real life, but if it did this was the

right time for it to happen, wasn't it? The baroness was clearly not

asking her to read back the Wikipedia article on the god Ppilimtec,

Prince of Poetry and Song. There was another game in play.

She took a deep breath, then very carefully said, "I am obedient to His

Majesty the Black Pharaoh, N'yar Lat-Hotep, wearer of the Crown of

Chaos. I will swear any oath required of me to confirm my loyalty. Is

that why you're here?"

The baroness cocked her head to one side. In the distance, Eve felt

rather than heard the rumble of the breaking storm. "That is an

acceptable start, but I fear I was insufficiently precise. Let me amend

my question. What is your connection with the Cult of the Mute Poet?"

Eve licked her desert-dry lips, then began to explain everything: from

her mother's fall into a decaying orbit around the black hole of the

Golden Promise Ministries to her own vow of revenge, to her recruitment

by Rupert, the subsequent degradation and depravity, the proxy marriage

she had only found out about the previous month, and finally her

discovery that Rupert was in fact not only an organized-crime kingpin

but the cult's bishop or high priest and that she was, in the eyes of

Skaroese law, of one flesh with him. Midway through her confession her

phone rang. She muted it and carried on. The baroness nodded when she

enumerated her visits to Skaro and to the church in Chickentown,

described the bloody carnage in the conference room and the underground

chapel, then confessed her own desperate anxiety about the question of

Rupert's existence. It was, she felt, as if she had lost control of her

own tongue—but that was impossible, wasn't it? Rupert's office was

thoroughly warded, she herself was warded, she'd know if—

Finally, the baroness spoke.

"I find it interesting that your former employer"—Eve felt a stab of

gratitude that she said former: it implied a certain distance—"left

werewolves in your security detachment. And even more interesting that

the survivor suicided."

"Werewolves?"

"Not actual shape-shifters, skinwalkers don't exist, you can trust me

on that! I mean undercover loyalists with orders to conduct

assassination or terror operations on behalf of their absent leadership.

It's something you usually only see with State-Level Actors." Seph

paused, then looked thoughtful. "Was Rupert an SLA? In your opinion."

"Not on the same level as His Majesty, but"—she recalled the paperwork

from his office, under the battlements of a Norman fortress—"obviously

he has his own jurisdiction, doesn't he? Held in feu as a vassal of

the Duke of Normandy." Unless somehow one of Her Majesty's ancestors'

law clerks had fucked up and—no, don't go there, Eve thought, don't

even think that thought. "He had a surprising number of heavily armed

goons, connections to shady arms dealers, annual revenue measured in the

billions of euros, and his own cult, so yes, I think you could

reasonably make a case that he was a State-Level Actor. Or at least that

he fancied himself as a kind of occult Ernst Stavro Blofeld." (The kind

of necromantic Bond villain who liked to relax by hunting clones of

himself on a private island, or extorting trillion-dollar ransoms from

the United Nations in return for not repopulating New York with

dinosaurs.) "But then he died"—hopes and prayers—"and his mess

landed in my lap."

"Jolly good, Ms. Starkey."

Persephone's smile flickered like heat lightning, liminal and deadly,

and Eve had a sense of barely constrained magic aching for explosive

release. The baroness was clearly of human origin, but sorcerers of such

power rarely stayed human for long. Either the Metahuman Associated

Dementia cored them from the inside out, or they made a pact with the

deadly v-symbionts that thrived in the darkness. Or they transcended

their humanity in some other arcane manner: it mattered not. What

mattered was that whichever path they chose, they trod the Earth like

human-shaped novae and left blackened footsteps in the molten rock,

until they finally burned out and collapsed into some incomprehensible

stellar remnant of proximate godhood. Persephone was still human for the

time being, but Eve uneasily apprehended that she was sharing her office

with a polite but not entirely tame nuclear weapon.

"Understand that I speak now as the mouthpiece of the New Management.

It pleases His Majesty," Persephone continued, and there was an echo

in her voice as if a god was taking note of her words and nodding along

gravely, "for you to do as you wish in the matter of the Church of the

Mute Poet." Which, Eve interpreted, meant she'd just been handed enough

rope to hang herself with—and not a centimeter more.

Then Persephone continued, in more human tones, "But are you absolutely

certain that Rupert de Montfort Bigge is permanently dead?"

Eve froze. "I sincerely hope so!" she burst out.

"You didn't actually see his corpse, did you?" Persephone had the

effrontery to look sympathetic, damn her eyes.

"I didn't," Eve confessed. "But I don't see any way he could have

survived if he followed my directions. And he had no reason not to." She

flinched as an entire herd of black cats padded across her open grave.

"Well." The distant shining darkness entered Persephone's gaze again.

"Let it be understood by all that Rupert de Montfort Bigge, Baron

Skaro, is hereby de-emphasized by order of the New Management. Should he

set foot on these isles again, he shall find no sanctuary in the House

of the Black Pharaoh. He is outside the law, and though he may live or

die, none shall give him aid and comfort. Furthermore, the New

Management decrees that pursuant to the marriage contract executed by

the outlaw Baron Skaro in her absence, being his lawful wife, Evelyn

Starkey is recognized as his sole heir and assignee."

Persephone paused. It was just as well: Eve was on the verge of

hyperventilating and needed an entire minute to regain control once

more. Eventually the black fuzz at the edges of her vision receded.

"I, uh, I . . ." Eve couldn't continue.

Persephone continued, back to speaking in her own voice, "You are

summoned to attend the Court of the Black Pharaoh within the next three

months—you will receive instructions by post in due course—at which

time you will swear allegiance to His Dread Majesty." Or else was a

given. "At that time His Majesty may choose to leave you be or dispose

of you in accordance with his wishes." Eve found it hard not to flinch

again. "That's not necessarily detrimental to you—the government has

numerous executive posts to fill and a shortage of competent vassals. If

you play your cards right you may prosper, never mind merely surviving.

But. Terms and conditions apply, as they say. Your complete submission

is a nonnegotiable and absolute requirement of the New Management: no

ifs, no buts."

Translation: You're drafted! Eve steeled herself and nodded. "What

else?" she asked.

"As heir to Baron Skaro's properties, rights, and duties, His Majesty

holds you responsible for any future transgressions by the Cult of the

Mute Poet. The buck, as they say, stops here." The baroness pointed a

shapely finger at the desktop in front of Eve. "But the New Management

is not hanging you out to dry. You are welcome to call on me for advice

and guidance, and if at any time you feel you really can't cope, you can

petition to be relieved of your responsibilities. That's a card you can

play only once, but it's better than ending up with your head on a

spike."

Eve translated mentally: We're taking over your operation but we're

keeping you on as Corporate Vice President for Cannibal Club Poetry

Slams, unless you fuck up so badly we decide to execute you. She

nodded, her mouth dry. "Is that all?" she asked.

"Not quite!" Baroness Hazard beamed at her. "There's one last thing. His

Majesty appreciates tribute, as a gesture of submission on the part of

new vassals. In view of the uncertainty surrounding the postulated death

of your husband, and in order to prove beyond reasonable doubt that he

is dead, I would strongly advise you—and I am sure this aligns

perfectly with your own preferences—to gift His Majesty with the head

of the outlaw Rupert de Montfort Bigge when you are presented at court.

It would be a perfect addition to His Majesty's cranial collection, and

it would be one less thing for everyone to worry about—both His

Majesty and yourself, if you follow my drift."

With that, she rose. "Welcome to the team, Evelyn. I look forward to

working with you in future. Goodbye!"

* * *

The year is 2017, and in a few months' time it will be the second

anniversary of the arrival of the New Management.

Welcome to the sunlit uplands of the twenty-first century!

One Britain, One Nation! Juche Britannia!

Long live Prime Minister Fabian Everyman! Long live the Black Pharaoh!

Iä! Iä!

(Now that's the spirit of the age, eh what?)

Magic, in abeyance since the dog days of the Victorian era, has been

gradually slithering back into the realm of the possible since the

1950s. Wartime work on digital computation—the works of Alan Turing

and John von Neumann in particular, the codification of the dark

theorems that allowed direct manipulation of the structure of

reality—turned any suitably configured general-purpose computing device

into a tool of hermetic power. Cold War agencies played deep and

frightening head games with demons at the same time their colleagues in

rocketry and nuclear physics reached for the stars and split the atom.

Old dynasties of sorcerers and ritual magicians—those who practiced

magic as an intuitive art rather than methodical science—have

gradually regained their skills and rediscovered their family secrets.

The onslaught of microelectronics cannot be described as anything less

than catastrophic. For five decades, Moore's Law has driven a relentless

exponential increase in performance per dollar, as circuits grow smaller

and engineers cram more transistors onto each semiconductor wafer.

Minicomputers the size of a chest freezer, costing as much as a light

plane, arrived in the 1960s. They rapidly gave way to microcomputers the

size of a typewriter, costing no more than a family car. Speeding up and

shrinking continuously, they became cheap and ubiquitous. Now everybody

has a smartphone as powerful as a 2004 supercomputer. And while most

people use them to watch cat videos and send each other selfies, a small

minority—mere millions of sorcerous software engineers,

worldwide—use them for occult ends.

More brains in the world, and more computers, mean more magic: and the

more magic there is, the easier the practice of magic becomes. It

threatens us with an exponentially worsening explosion of magic, a

sorcerous singularity. When it was first hypothesized in the 1970s, this

possibility seemed so threatening that the security services gave it a

code name (CASE NIGHTMARE GREEN) and considered it a worse threat than

global climate change. Then the floodgates opened, and in the tumultuous

wake of a major incursion that killed tens of thousands, the security

services made a very explicit pact with a lesser demiurge. They pledged

their support to a strong political leader who understands the nature of

the crisis, and who has promised us that He will save the nation—for

dessert, at least.

Long live Prime Minister Fabian Everyman!

Long may the Black Pharaoh reign!

Iä! Iä! N'yar Lat-Hotep!

H. P. Lovecraft (racist, bigot, and author of numerous fictions of the occult that

are as accurate a guide to the starry wisdom as the recipes for

explosives in The Anarchist Cookbook) misspelled His name and libelously misconstrued His

intentions. Egyptologists questioned His very existence. Nevertheless,

N'yar Lat-Hotep is very real: an ancient being worshipped as a god for

thousands of years who takes a whimsical and deadly interest in human

affairs. For a period of centuries, perhaps millennia, He absented

Himself: but then, not so long ago in cosmic terms, He squeezed one of

His pseudopodia through the walls of our world and installed Himself in

a nameless, faceless human vessel. A cypher, a sorcerer overwhelmed, or

one who made a pact with a force beyond his understanding: it makes no

difference how it began, only how it ends.

Having obtained a toehold, He applied Himself to the challenge of taking

over a small and fractious landmass on the edge of Europe, a nation with

an overinflated regard for itself, a fallen imperial hub not yet

reconciled to its own loss of primacy. Its complacent rulers were easy

prey for this canny and ancient predator. He took over the ruling party

in the summer of 2015 and declared Himself Prime Minister for Life, to

the unanimous acclaim of Parliament, the royal family—for the PM

approves their civil-list payments—and the media—or at least those

editors who value their lives. (Nobody bothered to ask the public their

opinion: the Little People don't count.)

Since 2015, the New Management has made some changes.

His Dread Majesty is nothing if not a traditionalist, and also a

stickler for the proper forms. By appointing His minions to the House of

Lords He has brought the foremost occult practitioners of the land into

His court—those who are willing to swear obeisance to Him, of course:

there are certain followers of rival gods who are unwilling to bend

their necks, and consequently run the risk of bills of attainder and

execution warrants being laid against them. But there is a silver lining

to His rule! He has very limited tolerance for corruption among those He

entrusts with the business of government in His name. He prizes

efficiency over humanity, consistency over mercy, permanence over

progress. It is possible to thrive and grow wealthy in His service, but

only as long as one's loyalty is above reproach and one strives

tirelessly to build the Temple of the Black Pharaoh. The old oafish ways

of bumbling inefficiency and furtive old-school handshakes passing

envelopes stuffed with banknotes are banished forever! And the nation is

healthier for all that.

It should be clear by now that worshippers of the Red Skull Cult,

members of the Church of the Mute Poet, and devotees of mystery cults

devoted to gods and monsters that claim to be His rivals are not, to put

it mildly, likely to flourish under His Dark Majesty's eye. Which is why

Eve's summons to pay court to the Prime Minister is such a big deal. As

a high priest of Ppilimtec—the Mute Poet—Rupert was swimming against

a riptide: but the Prime Minister is nothing if not strategically

generous, and He has granted Eve an opportunity to kiss the ring, bend

her neck, and distance herself from her missing-presumed-dead master.

At a price, of course.

* * *

To continue reading, follow these links to the UK and US publishers' pages, which link to retailers and ebook stores:

[British edition: available Thursday 18th] [US edition: available Tuesday 16th]

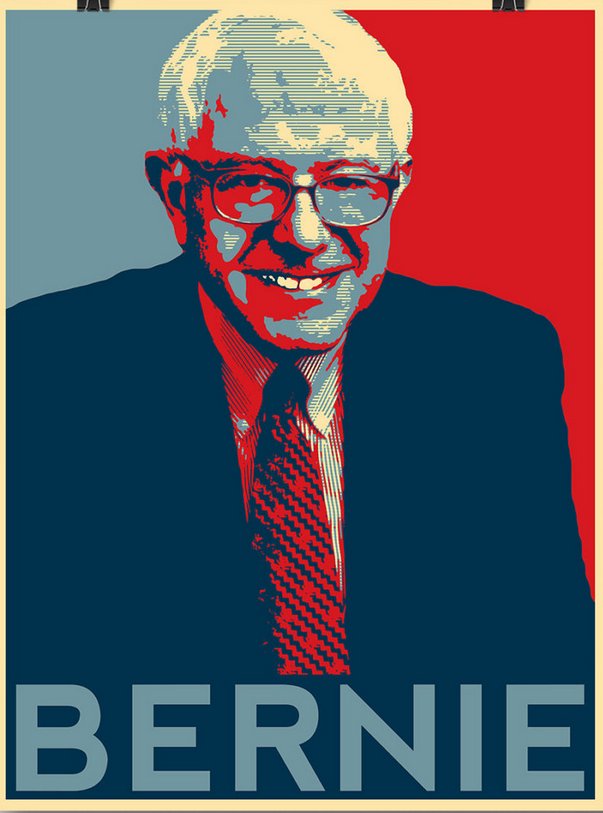

Obama's 2008 run at the presidency was remarkable and game-changing, drawing huge crowds, raising huge sums in small money donations, and mobilizing a massive army of volunteer campaigners. There'd never been a campaign like it, and none had matched it since -- until Bernie Sanders.
