Andrew Hickey

Shared posts

Practically-A-Book Review: Dying To Be Free

I am the last person with a right to complain about Internet articles being too long. But if I did have that right, I think I would exercise it on Dying To Be Free, the Huffington Post’s 20,000-word article on the current state of heroin addiction treatment. I feel like it could have been about a quarter the size without losing much.

It’s too bad that most people will probably shy away from reading it, because it gets a lot of stuff really right.

The article’s thesis is also its subtitle: “There’s a treatment for heroin addiction that actually works; why aren’t we using it?” To save you the obligatory introductory human interest story: that treatment is suboxone. Its active ingredient is the drug buprenorphine, which is kind of like a safer version of methadone. Suboxone is slow-acting, gentle, doesn’t really get people high, and is pretty safe as long as you don’t go mixing it with weird stuff. People on suboxone don’t experience opiate withdrawal and have greatly decreased cravings for heroin. I work at a hospital that’s an area leader in suboxone prescription, I’ve gotten to see it in action, and it’s literally a life-saver.

Conventional heroin treatment is abysmal. Rehab centers aren’t licensed or regulated and most have little interest in being evidence-based. Many are associated with churches or weird quasi-religious groups like Alcoholics Anonymous. They don’t necessarily have doctors or psychologists, and some actively mistrust them. All of this I knew. What I didn’t know until reading the article was that – well, it’s not just that some of them try to brainwash addicts. It’s more that some of them try to cargo cult brainwashing: they do the sorts of things that sound like brainwashing to them, without really knowing how brainwashing works (assuming it’s even a coherent goal to aspire to). Their concept of brainwashing is mostly just creating a really unpleasant environment, yelling at people a lot, enforcing intentionally over-strict rules, and in some cases even having struggle-session-type-things where everyone in the group sits in a circle, screams at the other patients, and tells them they’re terrible and disgusting. There’s a strong culture of accusing anyone who questions or balks at any of it of just being an addict, or “not really wanting to quit”.

I have no problem with “tough love” when it works, but in this case it doesn’t. Rehab programs make every effort to obfuscate their effectiveness statistics – I blogged about this before in Part II here – but the best guess by outside observers is that for a lot of them about 80% to 90% of their graduates relapse within a couple of years. Even this paints too rosy a picture, because it excludes the people who gave up halfway through.

Suboxone treatment isn’t perfect, and relapse is still a big problem, but it’s a heck of a lot better than most rehabs. Suboxone gives people their dose of opiate and mostly removes the biological half of addiction. There’s still the psychological half of addiction – whatever it was that made people want to get high in the first place – but people have a much easier time dealing with that after the biological imperative to get a new dose is gone. Almost all clinical trials have found treatment with methadone or suboxone to be more effective than traditional rehab. Even Cochrane Review, which is notorious for never giving a straight answer to anything besides “more evidence is needed”, agrees that methadone and suboxone are effective treatments.

Some people stay on suboxone forever and do just fine – it has few side effects and doesn’t interfere with functioning. Other people stay on it until they reach a point in their lives when they feel ready to come off, then taper down slowly under medical supervision, often with good success. It’s a good medication, and the growing suspicion it might help treat depression is just icing on the cake.

There are two big roadblocks to wider use of suboxone, and both are enraging.

The first roadblock is the #@$%ing government. They are worried that suboxone, being an opiate, might be addictive, and so doctors might turn into drug pushers. So suboxone is possibly the most highly regulated drug in the United States. If I want to give out OxyContin like candy, I have no limits but the number of pages on my prescription pad. If I want to prescribe you Walter-White-level quantities of methamphetamine for weight loss, nothing is stopping me but common sense. But if I want to give even a single suboxone prescription to a single patient, I have to take a special course on suboxone prescribing, and even then I am limited to only being able to give it to thirty patients a year (eventually rising to one hundred patients when I get more experience with it). The (generally safe) treatment for addiction is more highly regulated than the (very dangerous) addictive drugs it is supposed to replace. Only 3% of doctors bother to jump through all the regulatory hoops, and their hundred-patient limits get saturated almost immediately. As per the laws of supply and demand, this makes suboxone prescriptions very expensive, and guess what social class most heroin addicts come from? Also, heroin addicts often don’t have access to good transportation, which means that if the nearest suboxone provider is thirty miles from their house they’re out of luck. The List Of Reasons To End The Patient Limits On Buprenorphine expands upon and clarifies some of these points.

(in case you think maybe the government just honestly believes the drug is dangerous – nope. You’re allowed to prescribe without restriction for any reason except opiate addiction)

The second roadblock is the @#$%ing rehab industry. They hear that suboxone is an opiate, and their religious or quasi-religious fanaticism goes into high gear. “What these people need is Jesus and/or their Nondenominational Higher Power, not more drugs! You’re just pushing a new addiction on them! Once an addict, always an addict until they complete their spiritual struggle and come clean!” And so a lot of programs bar suboxone users from participating.

This doesn’t sound so bad given the quality of a lot of the programs. Problem is, a lot of these are closely integrated with the social services and legal system. So suppose somebody’s doing well on suboxone treatment, and gets in trouble for a drug offense. Could be that they relapsed on heroin one time, could be that they’re using something entirely different like cocaine. Judge says go to a treatment program or go to jail. Treatment program says they can’t use suboxone. So maybe they go in to deal with their cocaine problem, and by the time they come out they have a cocaine problem and a heroin problem.

And…okay, time for a personal story. One of my patients is a homeless man who used to have a heroin problem. He was put on suboxone and it went pretty well. He came back with an alcohol problem, and we wanted to deal with that and his homelessness at the same time. There are these organizations called three-quarters houses – think “halfway houses” after inflation – that take people with drug problems and give them an insurance-sponsored place to live. But the catch is you can’t be using drugs. And they consider suboxone to be a drug. So of about half a dozen three-quarters houses in the local area, none of them would accept this guy. I called up the one he wanted to go to, said that he really needed a place to stay, said that without this care he was in danger of relapsing into his alcoholism, begged them to accept. They said no drugs. I said I was a doctor, and he had my permission to be on suboxone. They said no drugs. I said that seriously, they were telling me that my DRUG ADDICTED patient who was ADDICTED TO DRUGS couldn’t go to their DRUG ADDICTION center because he was on a medication for treating DRUG ADDICTION? They said that was correct. I hung up in disgust.

So I agree with the pessimistic picture painted by the article. I think we’re ignoring our best treatment option for heroin addiction and I don’t see much sign that this is going to change in the future.

But the health care system not being very good at using medications effectively isn’t news. I also thought this article was interesting because it touches on some of the issues we discuss here a lot:

The value of ritual and community. A lot of the most intelligent conservatives I know base their conservativism on the idea that we can only get good outcomes in “tight communities” that are allowed to violate modern liberal social atomization to build stronger bonds. The Army, which essentially hazes people with boot camp, ritualizes every aspect of their life, then demands strict obedience and ideological conformity, is a good example. I do sometimes have a lot of respect for this position. But modern rehab programs seem like a really damning counterexample. If you read the article, you will see that these rehabs are trying their best to create a tightly-integrated religiously-inspired community of exactly that sort, and they have abilities to control their members and force their conformity – sometimes in ways that approach outright abuse – that most institutions can’t even dream of. But their effectiveness is abysmal. The entire thing is for nothing. I’m not sure whether this represents a basic failure in the idea of tight communities, or whether it just means that you can’t force them to exist ex nihilo over a couple of months. But I find it interesting.

My love-hate relationship with libertarianism. Also about the rehabs. They’re minimally regulated. There’s no credentialing process or anything. There are many different kinds, each privately led, and low entry costs to creating a new one. They can be very profitable – pretty much any rehab will cost thousands of dollars, and the big-name ones cost much more. This should be a perfect setup for a hundred different models blooming, experimenting, and then selecting for excellence as consumers drift towards the most effective centers. Instead, we get rampant abuse, charlatanry, and uselessness.

On the other hand, when the government rode in on a white horse to try to fix things, all they did was take the one effective treatment, regulate it practically out of existence, then ride right back out again. So I would be ashamed to be taking either the market’s or the state’s side here. At this point I think our best option is to ask the paraconsistent logic people to figure out something that’s neither government nor not-government, then put that in charge of everything.

Society is fixed, biology is mutable. People have tried everything to fix drug abuse. Being harsh and sending drug users to jail. Being nice and sending them to nice treatment centers that focus on rehabilitation. Old timey religion where fire-and-brimstone preachers talk about how Jesus wants them to stay off drugs. Flaky New Age religion where counselors tell you about how drug abuse is keeping you from your true self. Government programs. University programs. Private programs. Giving people money. Fining people money. Being unusually nice. Being unusually mean. More social support. Less social support. This school of therapy. That school of therapy. What works is just giving people a chemical to saturate the brain receptor directly. We know it works. The studies show it works. And we’re still collectively beating our heads against the wall of finding a social solution.

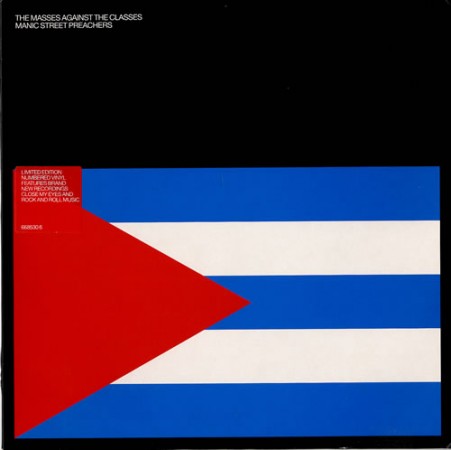

MANIC STREET PREACHERS – “The Masses Against The Classes”

#845, 22nd January 2000

“All over the world I will back the masses against the classes” – William Ewart Gladstone.

Hello, it’s us again. Welcome to Popular. Welcome to 21st century pop music, now fifteen years old and dreadfully teenager-ish in its surly refusal to admit to any pigeonhole you might want to place it in. Putting the pop culture of this century’s first decade into a historical context is an unsatisfying job: it’s wriggly and shapeless. Some would gloomily have it that pop descended into an ahistorical inertia in the 00s, cycling through a tatty parade of old signifiers. Others would point to this tribe or that as keeping its vital spirit alive. From either perspective, trying to grab onto this century’s music through its number one records seems a strange proposition.

Maybe Gladstone can help. His famous placing of bets is no kind of socialist endorsement: he was appealing to his notion of a spirit in “the masses” that transcends factional (class) interest – the surges of support for a noble cause that led, in his eyes, to many of Victorian politics’ grand reforming moments, and overturned any partisan support of particular classes for the status quo. By focusing critically on only the best-selling record of any given moment, I’ve tried to place myself to pick up on as many of pop’s broad-based swells of sentiment as I can. There’s a nagging feeling that those kinds of hits – the ones that stick around and define a summer, a winter, or a year – are more genuine and worthy of note than the mayfly one-week wonders that might surround them. But this is misguided. The pop charts have always also been about the classes – a mess of overlapping factions and specialisms that sometimes, somehow, get their message through. And the format of Popular also forces me to pay attention to this jabber of enthusiasms that a smoother history might overrule.

So number ones are a volatile balance of the masses and the classes, and that’s why I like to write about them. Still, though, 2000 is a shit of a year for doing it.

There are forty-two singles to cover, more than any year before or since. This berserk turnover is no accident: let’s remind ourselves of what getting a number one took at the turn of the 00s. In general, a hundred thousand sales would do the job. Pick the right week and you could hit the top on barely half that. Competition for number ones was planned to a degree, with release dates shifting back and forth to give bands with strong fanbases the best chance of a week’s glory. Those fanbases knew exactly what was coming, because singles were released to radio weeks in advance so they could build or mobilise an audience. On the relevant Monday, multiple formats in the main record shops helped fluff performance and ensure a high entry and peak. It was an unromantic business: marketers and fans united in what amounted to a business planning exercise, with all the thrill of a well-ordered Gantt chart.

With hindsight, you notice two things. First, it’s astonishing the charts of the late 90s and early 00s are as representative as they are. There is a ridiculous number of number ones, but no more injustices than usual. Big records have always missed number one, whole styles have been neglected, but this period is no worse for it than any other. The masses remain in full voice.

The second curious thing is that this system, far from being sewn up, was ripe for gaming, vulnerable to the influence of faction. If you had a big enough fanbase, and picked the right week, you could get anything at all to number one. The charts have never been more open to the possibility of pop theatre than in the 00s: it might have been a golden age for wannabe McLarens. But almost nobody took advantage of it. Of course, once bands built the kind of support to make trolling the charts a live possibility, most of them simply couldn’t be arsed any more.

It wasn’t just the innate conservatism of the act with an audience to please, though – the very idea of the charts as something that should be “subverted” seemed to belong to a prior age. One of the things that happened after punk was that the relationship of the underground and the mainstream changed. British psychedelic and prog bands didn’t shun the singles charts quite as much as lazy history might summarise, but there was hardly ever an ideological angle to their occasional visits. Punk, and the Pistols specifically, altered that. The near-miss or spiking of “God Save The Queen” became a feat to emulate, a crime to avenge. By 1980 the charts were highly winnable territory – former or tangential punkers like Adam Ant and Paul Weller going straight in at No.1, then pop itself restaffed by the eager and glorious theorists of the New Pop. A series of peaks – of fondly recalled victories – followed: Paul Morley and Trevor Horn’s tactical conquest with Frankie; 4AD getting M/A/R/R/S to the top; the situationist pop chaos of the KLF; the Battle of Britpop. And all these became rolled up into a general sense of an era when the charts “mattered”. But you got the feeling that to many, they mattered because of this possibility of minor, nose-tweaking shock – the classes winning out against the masses, if only for a week or two – and their day-to-day operation was a mere backdrop to that.

The Manic Street Preachers, on the threshold of the 21st century, are almost the last in this tradition. They had the opportunity – a band with enough fans to do something in that torpid millennial January. They had the motive – a band with a long-standing interest in quixotic pop gestures, and a fan’s love of theatrical subversion: they’d even called one of their videos Leaving The 20th Century, after a Situationist slogan. They also had the method – “Masses Against The Classes” was a limited single release, to be deleted after a week, guaranteeing it a compressed sales burst and a high debut placing.

But did they have the song? And who were they actually aiming it at?

It’s very doubtful that “The Masses Against The Classes” is meant as any kind of coherent statement, even more doubtful that you can parse it as one. The Occam’s Razor interpretation is that they wanted a Number One, saw a way of getting it, and slapped a Chomsky quote at the start as a bit of decorative brand-building and because it tickled Nicky Wire. If you take the perspective that a Cuban flag in HMV was an inherent pop good, and the Manics are fixing the charts to provide an alternative to complacency – then “Masses” works as an unfocused blast of wrath. It’s better – a lot better – than Westlife’s “Seasons In The Sun”. High praise, eh? But let’s offer the band the respect of at least trying to read too much into it all.

The quotes it’s topped and tailed by – Albert Camus bringing up the rear – sit in uneasy relationship. “This country was founded on the principle that the primary role of government is to protect property from the majority, and so it remains.”; “A slave begins by demanding justice, and ends by wanting to wear a crown”. Side by side, Camus’ fatalism makes this a glum pairing: the liberation of property (which a slave, by definition, is) inevitably ends in the re-establishment of government, and the cycle begins again. The title – Gladstone’s invocation of the historical spirit of the masses, magically separated from their economic interests – offers some kind of way out, however mystical. Break the cycle by backing the decent impulses of the masses against the classes. It’s not an analysis I would agree with, but that’s how the salad of sources works together, for me.

That’s the title and the quotes. The actual song, meanwhile, takes a rather different approach: it’s a haters-gonna-hate sneer. “Success is an ugly word / Especially in your tiny world”: A lot of people, it seems, didn’t like the furrowed-brow AOR direction the Manic Street Preachers had taken themselves in for This Is My Truth, Tell Me Yours, and the band, in the grand tradition of successful bands throughout history, interpreted boredom as envy. “The Masses” has them striking a defiant pose. Their grumpy old fans are the petty, factional classes, and their stadium-rockin’ newer ones are the noble masses.

It’s a nasty little record by this reading, but to really get how nasty it is, consider what it sounds like. This angry defense of a change of direction is packaged up in a song that’s a deliberate callback to their very first records. The early Manics single “Masses” reminds me of most is “You Love Us” – snotty, scrappy, and the kind of audience- and critic-baiting statement of belief that feels terribly 1992 but no less electric for that. “Masses” is determinedly uglier, though, janking and grinding along on its basic rock undercarriage like a car dragging a broken exhaust along a road. The early Manics never sounded quite this loud, either – boosted by compression steroids into a very deliberate kind of rawness, though compared to those early records what’s been gained in power has been lost in swagger. So what we’ve got is a song played in a manner designed to excite the band’s old fans, powered to number one at a time and using a gimmick that calls back to the Manics’ early theatrical streak, but which is actually a brutal dismissal as elitists of the very people most likely to get enthused by those things. Now there’s subversion. Oof.

“I guess at heart I remain some kind of a crinkly English situationist who wants to have his MTV and critique it too. I am reminded of the story of how high priest of situationism Guy Debord rushed over to London from Paris in the 1960s when he heard that a trained guerilla combat unit was ready for his inspection in Ladbroke Grove. He was directed to military headquarters on the All Saints Road where he discovered a young guy watching Match Of The Day on his sofa with a can of McEwan’s Special Export in his hand…. Debord, quite naturally, stalked off in a rage.” – Steve Beard, Aftershocks: The End Of Style Culture

Life of Brian's Parody of the Sermon on the Mount in Jesus Films

If you need a refresher, here is the scene from Life of Brian:

This Year's Infallible Super Bowl Prediction

I'm not watching.

I predict that every year and so far, I've never been wrong.

Natalie Bennett should beware the sharp elbows of Caroline Lucas

But her performance on the Sunday Politics the other week has led some to wonder whether the Green Party would do better to field Caroline Lucas in the leaders' debates later this year.

And Natalie should be careful, because Caroline Lucas has sharp elbows and previous form.

Brighton Pavilion was the Greens' best prospect of a gain at the last general election largely because a local activist called Keith Taylor had built it up.

Then in 2007 Lucas descended on the town, professed her "admiration and respect" for Taylor and took the nomination from him.

If she expresses her admiration and respect for the Greens' current leader, Natalie really will be in trouble.

Outside the Government Final: The Five(ish) Doctors Reboot

You don’t need to vote for the Elite Consensus Party, because they’ll win anyway

A couple of snippets from the Sundays to help you understand how British politics works today:

First, the Telegraph is very eager to tell us that Liz Kendall has suddenly emerged as the favourite for a Labour leadership race that may or may not be taking place at some unspecified point in the future. How has she achieved this impressive, yet somewhat nebulous, feat? Some mass mobilisation of Labour members? A series of impressive performances in the House of Commons? Perhaps she’s set out some important new ideas for the future of the Labour Party? Maybe it’s through a long period of helping and campaigning for other MPs?

No, it’s because ‘she gave an interview to House magazine saying that for the NHS “What matters is what works”’. Yes, reusing some old bit of Blairite managerialist pabulum – what matters is ‘what works’, ignoring that ‘what works’ is determined by whoever decides what ‘working’ means – is enough to catapult you into the lead. It’s definitely not that she’s stressed the importance of private healthcare in the NHS while her old boss works for Alliance Boots at the same time its chairman is attacking Ed Miliband. What would the Telegraph have to gain by promoting someone who’d push Labour back towards the managerialist centre?

Meanwhile, over at the Independent, John Rentoul’s remarkable career as a political commentator who doesn’t understand the concept of ideology continues apace. Today, he’s setting out just why the country needs a Labour government, but headed by David Cameron. It’s the sort of centrist managerialist fantasy one would expect from an arch-Blairite, yet again stressing that what is important in a politician isn’t believing in something but being possessed of some nebulous form of ‘competence’. Like ‘what works’, ‘competence’ is purely in the eye of the beholder, and the beholder is normally a well-paid newspaper columnist or editor assessing who they’ve been told good things about.

(Incidentally, although I’ve said I don’t think a grand coalition is likely after the next election, the number of times I’ve seen people in the press suggesting it does make me wonder if the ground is being prepared for one, just in case it turns out to be necessary)

What both these articles represent, though, is the cosy consensuses that dominate British politics. Stick firmly within the lines of what’s acceptable within the elite consensus and you’ll be praised to the skies for your competence and put forward as possible leadership material. Question it, or stray outside the mainstream consensus for just a little bit and you’ll be a maverick on the fringes, and you definitely won’t get the media singing your praises. It’s a lot easier for everyone in the elite consensus if politics is just a matter of deciding between competing managerial visions without letting any of that horrible ideology get in there. It’s why the memory of Blairism lingers so much amongst the commentariat: things were easier then when all you had to worry about was ‘what works’, not what anyone might actually want.

Hope and the politics of the future

The ruling New Democracy party is still wondering how its platform of Endless Suffering For Everyone was defeated by Syriza’s competing message of Maybe Not That.

Yes, it’s from the Mash, but as so often one line of satire gets closer to the truth than thousands of pieces of punditry. When traditional politics and traditional parties neglect to offer a positive vision of the future, there’s a natural appeal to anyone who can offer something that resembles a vision. Even if it’s just ‘Maybe Not That’, it’s much more appealing than offering people nothing more than the status quo, perhaps with slightly better gadgets.

This links to what I was saying yesterday – if all that mainstream politics can offer is a red, blue or yellow-tinged version of the elite consensus, and that consensus doesn’t offer a positive vision of the future, then why are we surprised that people are looking for alternatives?

What we don’t have, and what no one seems to be offering in the upcoming election, is a positive vision of the future. David Cameron has been touting the ultimate uninspiring managerialist vision of ‘Britain living within its means’, while Ed Miliband offers ‘maybe that, but not quite that’ and Nick Clegg promises ‘that or that, but perhaps slightly less of it’. Meanwhile, when confronted with any vision of the future, Nigel Farage runs screaming to the past and while the Greens at least acknowledge the future is likely to be radically different, their vision for it is short of hope.

We used to dream about the future. Yes, there were things in those dreams that were never going to happen like flying cars, jet packs and double-digit Jaws sequels, but there was hope in those visions. We had futures where the whole wealth of world knowledge was at our fingertips, instant worldwide communication was simple and the need to work was reduced or even eliminated by robotics and automation. We’ve got those, but the world we live in now resembles a cyberpunk dystopia rather than the utopian dreams of the future we had.

What we need is to reclaim and reinvent the idea that the future can be better and different than today. Our politics and culture aren’t offering that vision anymore, instead retreating into expecting tomorrow to look much like today and offering purely managerial solutions to try and keep things running much the way they have been. The problem, I think, is that even if people can’t articulate it, they know that vision doesn’t work. It might not be as obvious in Britain as it is in somewhere like Greece or Spain, but keeping things the same equates to keeping everyone at the same level of insecurity they’re already feeling. That’s not a future anyone would want to sign up for. Merely offering people endless workism from now until the end of time isn’t a vision, it’s a punishment for sins we never committed.

This is why I think ideas like basic income are important. As Paul Mason explains here, it’s too often seen through the prism of our current system, not as a transformative idea that would change the way our society works. It’s getting back to those old visions of the future, where technology has made fulfilling our basic needs – food, clothing, shelter, heat, light – such a simple task that they’re available to everyone without question or effort, just something you get by dint of being you. Instead, we often end up discussing it in terms of how we’d implement it through current systems as though they’re the only way of doing things.

The future doesn’t have to be about basic income, but I think there is a yearning out there for someone, be it politician or artist, who can provide a vision of something different and better, a future that we hope will come about, not one that we dread finally coming to pass. We have occasional moments when we recognise the importance of hope – even the audacity of it – but then we forget about it, or think that what people hope for is more management and more targets to regulate their lives. That might be some people’s vision of the future – a human finger, clicking off the items on an assessment checklist, forever – but surely we can come up with something better?

The Greens, Citizens Income and how journalists still don’t understand how political parties work

After it flared up into media prominence over the last week, the Telegraph today eagerly covered the news that the Green Party won’t be including Citizens Income as a policy in their General Election manifesto.

However, there seems to be a problem with that news: it’s not true. Reading an account from a Green Party member, it seems that the party’s conference has insisted that the policy is included in the manifesto, and the Telegraph’s report is merely extrapolating wildly from some comments by Caroline Lucas. The member’s account suggests that she has opposed the inclusion of it in the manifesto, but even with that news, the Telegraph appears to be stretching her words. It reports that she said:

“The citizens’ income is not going to be in the 2015 general election manifesto as something to be introduced on May 8th. It is a longer term aspiration; we are still working on it,”

The key point they’re not factoring into their story is ‘as something to be introduced on May 8th’, instead focusing on the first part of the sentence. Let’s be honest, I don’t think even the most hardened supporter of a basic income scheme thinks it could be introduced quickly, and it helps to show the ignorance of reporters who believe that is the case.

However, I think this comes back to the point I made a couple of weeks ago about how journalists don’t understand how policy making within parties actually works. As someone with experience of seeing similar things in the Lib Dems, it’s almost pleasant to see another party being similarly misunderstood. Journalists like to believe that all political parties are run from the top down, not the bottom up, and of course ‘senior party figures’ are always happy to encourage this impression. So, when Caroline Lucas says something (and it’s misheard) it’s easy for them to leap to ‘the party has changed its policy!’ rather than ‘hmm, better check that for accuracy.’

It does make me think about the Iron Law of Oligarchy – the idea that all political organisations will progress from democracy to oligarchy over time – and whether the media have a role in encouraging and fostering that process. Could one even argue that social pressures and the expectation that an organisation will be run from the top are as much a pressure making it happen as the role of bureaucracy concentrating power in the organisation? Something else to add to the list of things I need to think about and write about some more…

The Parable Of The Talents

[Content note: scrupulosity and self-esteem triggers, IQ, brief discussion of weight and dieting. Not good for growth mindset.]

I.

I sometimes blog about research into IQ and human intelligence. I think most readers of this blog already know IQ is 50% to 80% heritable, and that it’s so important for intellectual pursuits that eminent scientists in some fields have average IQs around 150 to 160. Since IQ this high only appears in 1/10,000 people or so, it beggars coincidence to believe this represents anything but a very strong filter for IQ (or something correlated with it) in reaching that level. If you saw a group of dozens of people who were 7’0 tall on average, you’d assume it was a basketball team or some other group selected for height, not a bunch of botanists who were all very tall by coincidence.

A lot of people find this pretty depressing. Some worry that taking it seriously might damage the “growth mindset” people need to fully actualize their potential. This is important and I want to discuss it eventually, but not now. What I want to discuss now is people who feel personally depressed. For example, a comment from last week:

I’m sorry to leave a self-absorbed comment, but reading this really upset me and I just need to get this off my chest…How is a person supposed to stay sane in a culture that prizes intelligence above everything else – especially if, as Scott suggests, Human Intelligence Really Is the Key to the Future – when they themselves are not particularly intelligent and, apparently, have no potential to ever become intelligent? Right now I basically feel like pond scum.

I hear these kinds of responses every so often, so I should probably learn to expect them. I never do. They seem to me precisely backwards. There’s a moral gulf here, and I want to throw stories and intuitions at it until enough of them pile up at the bottom to make a passable bridge. But first, a comparison:

Some people think body weight is biologically/genetically determined. Other people think it’s based purely on willpower – how strictly you diet, how much you can bring yourself to exercise. These people get into some pretty acrimonious debates.

Overweight people, and especially people who feel unfairly stigmatized for being overweight, tend to cluster on the biologically determined side. And although not all believers in complete voluntary control of weight are mean to fat people, the people who are mean to fat people pretty much all insist that weight is voluntary and easily changeable.

Although there’s a lot of debate over the science here, there seems to be broad agreement on both sides that the more compassionate, sympathetic, progressive position, the position promoted by the kind of people who are really worried about stigma and self-esteem, is that weight is biologically determined.

And the same is true of mental illness. Sometimes I see depressed patients whose families really don’t get it. They say “Sure, my daughter feels down, but she needs to realize that’s no excuse for shirking her responsibilities. She needs to just pick herself up and get on with her life.” On the other hand, most depressed people say that their depression is more fundamental than that, not a thing that can be overcome by willpower, certainly not a thing you can just ‘shake off’.

Once again, the compassionate/sympathetic/progressive side of the debate is that depression is something like biological, and cannot easily be overcome with willpower and hard work.

One more example of this pattern. There are frequent political debates in which conservatives (or straw conservatives) argue that financial success is the result of hard work, so poor people are just too lazy to get out of poverty. Then a liberal (or straw liberal) protests that hard work has nothing to do with it, success is determined by accidents of birth like who your parents are and what your skin color is et cetera, so the poor are blameless in their own predicament.

I’m oversimplifying things, but again the compassionate/sympathetic/progressive side of the debate – and the side endorsed by many of the poor themselves – is supposed to be that success is due to accidents of birth, and the less compassionate side is that success depends on hard work and perseverance and grit and willpower.

The obvious pattern is that attributing outcomes to things like genes, biology, and accidents of birth is kind and sympathetic. Attributing them to who works harder and who’s “really trying” can stigmatize people who end up with bad outcomes and is generally viewed as Not A Nice Thing To Do.

And the weird thing, the thing I’ve never understood, is that intellectual achievement is the one domain that breaks this pattern.

Here it’s would-be hard-headed conservatives arguing that intellectual greatness comes from genetics and the accidents of birth and demanding we “accept” this “unpleasant truth”.

And it’s would-be compassionate progressives who are insisting that no, it depends on who works harder, claiming anybody can be brilliant if they really try, warning us not to “stigmatize” the less intelligent as “genetically inferior”.

I can come up with a few explanations for the sudden switch, but none of them are very principled and none of them, to me, seem to break the fundamental symmetry of the situation. I choose to maintain consistency by preserving the belief that overweight people, depressed people, and poor people aren’t fully to blame for their situation – and neither are unintelligent people. It’s accidents of birth all the way down. Intelligence is mostly genetic and determined at birth – and we’ve already determined in every other sphere that “mostly genetic and determined at birth” means you don’t have to feel bad if you got the short end of the stick.

Consider for a moment Srinivasa Ramanujan, one of the greatest mathematicians of all time. He grew up in poverty in a one-room house in small-town India. He taught himself mathematics by borrowing books from local college students and working through the problems on his own until he reached the end of the solvable ones and had nowhere else to go but inventing ways to solve the unsolvable ones.

There are a lot of poor people in the United States today whose life circumstances prevented their parents from reading books to them as children, prevented them from getting into the best schools, prevented them from attending college, et cetera. And pretty much all of those people still got more educational opportunities than Ramanujan did.

And from there we can go in one of two directions. First, we can say that a lot of intelligence is innate, that Ramanujan was a genius, and that we mortals cannot be expected to replicate his accomplishments.

Or second, we can say those poor people are just not trying hard enough.

Take “innate ability” out of the picture, and if you meet a poor person on the street begging for food, saying he never had a chance, your reply must be “Well, if you’d just borrowed a couple of math textbooks from the local library at age 12, you would have been a Fields Medalist by now. I hear that pays pretty well.”

The best reason not to say that is that we view Ramanujan as intellectually gifted. But the very phrase tells us where we should classify that belief. Ramanujan’s genius is a “gift” in much the same way your parents giving you a trust fund on your eighteenth birthday is a “gift”, and it should be weighted accordingly in the moral calculus.

II.

I shouldn’t pretend I’m worried about this for the sake of the poor. I’m worried for me.

My last IQ-ish test was my SATs in high school. I got a perfect score in Verbal, and a good-but-not-great score in Math.

And in high school English, I got A++s in all my classes, Principal’s Gold Medals, 100%s on tests, first prize in various state-wide essay contests, etc. In Math, I just barely by the skin of my teeth scraped together a pass in Calculus with a C-.

Every time I won some kind of prize in English my parents would praise me and say I was good and should feel good. My teachers would hold me up as an example and say other kids should try to be more like me. Meanwhile, when I would bring home a report card with a C- in math, my parents would have concerned faces and tell me they were disappointed and I wasn’t living up to my potential and I needed to work harder et cetera.

And I don’t know which part bothered me more.

Every time I was held up as an example in English class, I wanted to crawl under a rock and die. I didn’t do it! I didn’t study at all, half the time I did the homework in the car on the way to school, those essays for the statewide competition were thrown together on a lark without a trace of real effort. To praise me for any of it seemed and still seems utterly unjust.

On the other hand, to this day I believe I deserve a fricking statue for getting a C- in Calculus I. It should be in the center of the schoolyard, and have a plaque saying something like “Scott Alexander, who by making a herculean effort managed to pass Calculus I, even though they kept throwing random things after the little curly S sign and pretending it made sense.”

And without some notion of innate ability, I don’t know what to do with this experience. I don’t want to have to accept the blame for being a lazy person who just didn’t try hard enough in Math. But I really don’t want to have to accept the credit for being a virtuous and studious English student who worked harder than his peers. I know there were people who worked harder than I did in English, who poured their heart and soul into that course – and who still got Cs and Ds. To deny innate ability is to devalue their efforts and sacrifice, while simultaneously giving me credit I don’t deserve.

Meanwhile, there were some students who did better than I did in Math with seemingly zero effort. I didn’t begrudge those students. But if they’d started trying to say they had exactly the same level of innate ability as I did, and the only difference was they were trying while I was slacking off, then I sure as hell would have begrudged them. Especially if I knew they were lazing around on the beach while I was poring over a textbook.

I tend to think of social norms as contracts bargained between different groups. In the case of attitudes towards intelligence, those two groups are smart people and dumb people. Since I was both at once, I got to make the bargain with myself, which simplified the bargaining process immensely. The deal I came up with was that I wasn’t going to beat myself up over the areas I was bad at, but I also didn’t get to become too cocky about the areas I was good at. It was all genetic luck of the draw either way. In the meantime, I would try to press as hard as I could to exploit my strengths and cover up my deficiencies. So far I’ve found this to be a really healthy way of treating myself, and it’s the way I try to treat others as well.

III.

The theme continues to be “Scott Relives His Childhood Inadequacies”. So:

When I was 6 and my brother was 4, our mom decided that as an Overachieving Jewish Mother she was contractually obligated to make both of us learn to play piano. She enrolled me in a Yamaha introductory piano class, and my younger brother in a Yamaha ‘cute little kids bang on the keyboard’ class.

A little while later, I noticed that my brother was now with me in my Introductory Piano class.

A little while later, I noticed that my brother was now by far the best student in my Introductory Piano class, even though he had just started and was two or three years younger than anyone else there.

A little while later, Yamaha USA flew him to Japan to show him off before the Yamaha corporate honchos there.

Well, one thing led to another, and right now if you Google my brother’s name you get a bunch of articles like this one:

The evidence that Jeremy [Alexander] is among the top jazz pianists of his generation is quickly becoming overwhelming: at age 26, Alexander is the winner of the Nottingham International Jazz Piano Competition, a second-place finisher in the Montreux Jazz Festival Solo Piano Competition, a two-time finalist for the American Pianist Association’s Cole Porter Fellowship, and a two-time second-place finisher at the Phillips Jazz Competition. Alexander, who was recently named a Professor of Piano at Western Michigan University’s School of Music, made a sold-out solo debut at Carnegie Hall in 2012, performing Debussy’s Etudes in the first half and jazz improvisations in the second half.

Meanwhile, I was always a mediocre student at Yamaha. When the time came to try an instrument in elementary school, I went with the violin to see if maybe I’d find it more to my tastes than the piano. I was quickly sorted into the remedial class because I couldn’t figure out how to make my instrument stop sounding like a wounded cat. After a year or so of this, I decided to switch to fulfilling my music requirement through a choir, and everyone who’d had to listen to me breathed a sigh of relief.

Every so often I wonder if somewhere deep inside me there is the potential to be “among the top musicians of my generation.” I try to recollect whether my brother practiced harder than I did. My memories are hazy, but I don’t think he practiced much harder until well after his career as a child prodigy had taken off. The cycle seemed to be that every time he practiced, things came fluidly to him and he would produce beautiful music and everyone would be amazed. And this must have felt great, and incentivized him to practice more, and that made him even better, so that the beautiful music came even more fluidly, and the praise became more effusive, until eventually he chose a full-time career in music and became amazing. Meanwhile, when I started practicing it always sounded like wounded cats, and I would get very cautious praise like “Good job, Scott, it sounded like that cat was hurt a little less badly than usual,” and it made me frustrated, and want to practice less, which made me even worse, until eventually I quit in disgust.

On the other hand, I know people who want to get good at writing, and make a mighty resolution to write two hundred words a day every day, and then after the first week they find it’s too annoying and give up. These people think I’m amazing, and why shouldn’t they? I’ve written a few hundred to a few thousand words pretty much every day for the past ten years.

But as I’ve said before, this has taken exactly zero willpower. It’s more that I can’t stop even if I want to. Part of that is probably that when I write, I feel really good about having expressed exactly what it was I meant to say. Lots of people read it, they comment, they praise me, I feel good, I’m encouraged to keep writing, and it’s exactly the same virtuous cycle as my brother got from his piano practice.

And so I think it would be too easy to say something like “There’s no innate component at all. Your brother practiced piano really hard but almost never writes. You write all the time, but wimped out of practicing piano. So what do you expect? You both got what you deserved.”

I tried to practice piano as hard as he did. I really tried. But every moment was a struggle. I could keep it up for a while, and then we’d go on vacation, and there’d be no piano easily available, and I would be breathing a sigh of relief at having a ready-made excuse, and he’d be heading off to look for a piano somewhere to practice on. Meanwhile, I am writing this post in short breaks between running around hospital corridors responding to psychiatric emergencies, and there’s probably someone very impressed with that, someone saying “But you had such a great excuse to get out of your writing practice!”

I dunno. But I don’t think of myself as working hard at any of the things I am good at, in the sense of “exerting vast willpower to force myself kicking and screaming to do them”. It’s possible I do work hard, and that an outside observer would accuse me of eliding how hard I work, but it’s not a conscious elision and I don’t feel that way from the inside.

Ramanujan worked very hard at math. But I don’t think he thought of it as work. He obtained a scholarship to the local college, but dropped out almost immediately because he couldn’t make himself study any subject other than math. Then he got accepted to another college, and dropped out again because they made him study non-mathematical subjects and he failed a physiology class. Then he nearly starved to death because he had no money and no scholarship. To me, this doesn’t sound like a person who just happens to be very hard-working; if he had the ability to study other subjects he would have, for no reason other than that it would have allowed him to stay in college so he could keep studying math. It seems to me that in some sense Ramanujan was incapable of putting hard work into non-math subjects.

I really wanted to learn math and failed, but I did graduate with honors from medical school. Ramanujan really wanted to learn physiology and failed, but he did become one of history’s great mathematicians. So which one of us was the hard worker?

People used to ask me for writing advice. And I, in all earnestness, would say “Just transcribe your thoughts onto paper exactly like they sound in your head.” It turns out that doesn’t work for other people. Maybe it doesn’t work for me either, and it just feels like it does.

But you know what? When asked about one of his discoveries, a method of simplifying a very difficult problem to a continued fraction, Ramanujan described his thought process as: “It is simple. The minute I heard the problem, I knew that the answer was a continued fraction. ‘Which continued fraction?’ I asked myself. Then the answer came to my mind”.

And again, maybe that’s just how it feels to him, and the real answer is “study math so hard that you flunk out of college twice, and eventually you develop so much intuition that you can solve problems without thinking about them.”

(or maybe the real answer is “have dreams where obscure Hindu gods appear to you as drops of blood and reveal mathematical formulae”. Ramanujan was weird).

But I still feel like there’s something going on here where the solution to me being bad at math and piano isn’t just “sweat blood and push through your brain’s aversion to these subjects until you make it stick”. When I read biographies of Ramanujan and other famous mathematicians, there’s no sense that they ever had to do that with math. When I talk to my brother, I never get a sense that he had to do that with piano. And if I am good enough at writing to qualify to have an opinion on being good at things, then I don’t feel like I ever went through that process myself.

So this too is part of my deal with myself. I’ll try to do my best at things, but if there’s something I really hate, something where I have to go uphill every step of the way, then it’s okay to admit mediocrity. I won’t beat myself up for not forcing myself kicking and screaming to practice piano. And in return I won’t become too cocky about practicing writing a lot. It’s probably some kind of luck of the draw either way.

IV.

I said before that this wasn’t just about poor people, it was about me being selfishly worried for my own sake. I think I might have given the mistaken impression that I merely need to justify to myself why I can’t get an A in math or play the piano. But it’s much worse than that.

The rationalist community tends to get a lot of high-scrupulosity people, people who tend to beat themselves up for not doing more than they are. It’s why I push giving 10% to charity, not as some kind of amazing stretch goal that we need to guilt people into doing, but as a crutch, a sort of “don’t worry, you’re still okay if you only give ten percent”. It’s why there’s so much emphasis on “heroic responsibility” and how you, yes you, have to solve all the world’s problems personally. It’s why I see red when anyone accuses us of entitlement, since it goes about as well as calling an anorexic person fat.

And we really aren’t doing ourselves any favors. For example, Nick Bostrom writes:

Searching for a cure for aging is not just a nice thing that we should perhaps one day get around to. It is an urgent, screaming moral imperative. The sooner we start a focused research program, the sooner we will get results. It matters if we get the cure in 25 years rather than in 24 years: a population greater than that of Canada would die as a result.

If that bothers you, you definitely shouldn’t read Astronomical Waste.

Yet here I am, not doing anti-aging research. Why not?

Because I tried doing biology research a few times and it was really hard and made me miserable. You know how in every science class, when the teacher says “Okay, pour the white chemical into the grey chemical, and notice how it turns green and begins to bubble,” there’s always one student who pours the white chemical into the grey chemical, and it just forms a greyish-white mixture and sits there? That was me. I hated it, I didn’t have the dexterity or the precision of mind to do it well, and when I finally finished my required experimental science classes I was happy never to think about it again. Even the abstract intellectual part of it – the one where you go through data about genes and ligands and receptors in supercentenarians and shake it until data comes out – requires exactly the kind of math skills that I don’t have.

Insofar as this is a matter of innate aptitude – some people are cut out for biology research and I’m not one of them – all is well, and my decision to get a job I’m good at instead is entirely justified.

But insofar as there’s no such thing as innate aptitude, just hard work and grit – then by not being gritty enough, I’m a monster who’s complicit in the death of a population greater than that of Canada.

Insofar as there’s no such thing as innate aptitude, I have no excuse for not being Aubrey de Grey. Or if Aubrey de Grey doesn’t impress you much, Norman Borlaug. Or if you don’t know who either of those two people are, Elon Musk.

I once heard a friend, upon their first use of modafinil, wonder aloud if the way they felt on that stimulant was the way Elon Musk felt all the time. That tied a lot of things together for me, gave me an intuitive understanding of what it might “feel like from the inside” to be Elon Musk. And it gave me a good tool to discuss biological variation with. Most of us agree that people on stimulants can perform in ways it’s difficult for people off stimulants to match. Most of us agree that there’s nothing magical about stimulants, just changes to the levels of dopamine, histamine, norepinephrine et cetera in the brain. And most of us agree there’s a lot of natural variation in these chemicals anyway. So “me on stimulants is that guy’s normal” seems like a good way of cutting through some of the philosophical difficulties around this issue.

…which is all kind of a big tangent. The point I want to make is that for me, what’s at stake in talking about natural variations in ability isn’t just whether I have to feel like a failure for not getting an A in high school calculus, or not being as good at music as my brother. It’s whether I’m a failure for not being Elon Musk. Specifically, it’s whether I can say “No, I’m really not cut out to be Elon Musk” and go do something else I’m better at without worrying that I’m killing everyone in Canada.

V.

The proverb says: “Everyone has somebody better off than they are and somebody worse off than they are, with two exceptions.” When we accept that we’re all in the “not Elon Musk” boat together (with one exception) a lot of the status games around innate ability start to seem less important.

Every so often an overly kind commenter here praises my intelligence and says they feel intellectually inadequate compared to me, that they wish they could be at my level. But at my level, I spend my time feeling intellectually inadequate compared to Scott Aaronson. Scott Aaronson describes feeling “in awe” of Terence Tao and frequently struggling to understand him. Terence Tao – well, I don’t know if he’s religious, but maybe he feels intellectually inadequate compared to God. And God feels intellectually inadequate compared to John von Neumann.

So there’s not much point in me feeling inadequate compared to my brother, because even if I was as good at music as my brother, I’d probably just feel inadequate for not being Mozart.

And asking “Well what if you just worked harder?” can elide small distinctions, but not bigger ones. If my only goal is short-term preservation of my self-esteem, I can imagine that if only things had gone a little differently I could have practiced more and ended up as talented as my brother. It’s a lot harder for me to imagine the course of events where I do something different and become Mozart. Only one in a billion people reach a Mozart level of achievement; why would it be me?

If I loved music for its own sake and wanted to be a talented musician so I could express the melodies dancing within my heart, then none of this matters. But insofar as I want to be good at music because I feel bad that other people are better than me at music, that’s a road without an end.

This is also how I feel when some people on this blog complain they feel dumb for not being as smart as some of the other commenters on this blog.

I happen to have all of your IQ scores in a spreadsheet right here (remember that survey you took?). Not a single person is below the population average. The first percentile for IQ here – the one such that 1% of respondents are lower and 99% of respondents are higher – corresponds to the 85th percentile of the general population. So even if you’re in the first percentile here, you’re still pretty high up in the broader scheme of things.

At that point we’re back on the road without end. I am pretty sure we can raise your IQ as much as you want and you will still feel like pond scum. If we raise it twenty points, you’ll try reading Quantum Computing since Democritus and feel like pond scum. If we raise it forty, you’ll just go to Terence Tao’s blog and feel like pond scum there. Maybe if you were literally the highest-IQ person in the entire world you would feel good about yourself, but any system where only one person in the world is allowed to feel good about themselves at a time is a bad system.

People say we should stop talking about ability differences so that stupid people don’t feel bad. I say that there’s more than enough room for everybody to feel bad, smart and stupid alike, and not talking about it won’t help. What will help is fundamentally uncoupling perception of intelligence from perception of self-worth.

I work with psychiatric patients who tend to have cognitive difficulties. Starting out in the Detroit ghetto doesn’t do them any favors, and then they get conditions like bipolar disorder and schizophrenia that actively lower IQ for poorly understood neurological reasons.

The standard psychiatric evaluation includes an assessment of cognitive ability; the one I use is a quick test with three questions. The questions are – “What is 100 minus 7?”, “What do an apple and an orange have in common?”, and “Remember these three words for one minute, then repeat them back to me: house, blue, and tulip”.

There are a lot of people – and I don’t mean floridly psychotic people who don’t know their own name, I mean ordinary reasonable people just like you and me – who can’t answer these questions. And we know why they can’t answer these questions, and it is pretty darned biological.

And if our answer to “I feel dumb and worthless because my IQ isn’t high enough” is “don’t worry, you’re not worthless, I’m sure you can be a great scientist if you just try hard enough”, then we are implicitly throwing under the bus all of these people who are definitely not going to be great scientists no matter how hard they try. Talking about trying harder can obfuscate the little differences, but once we’re talking about the homeless schizophrenic guy from Detroit who can’t tell me 100 minus 7 to save his life, you can’t just magic the problem away with a wave of your hand and say “I’m sure he can be the next Ramanujan if he keeps a positive attitude!” You either need to condemn him as worthless or else stop fricking tying worth to innate intellectual ability.

This is getting pretty close to what I was talking about in my post on burdens. When I get a suicidal patient who thinks they’re a burden on society, it’s nice to be able to point out ten important things they’ve done for society recently and prove them wrong. But sometimes it’s not that easy, and the only thing you can say is “f#@k that s#!t”. Yes, society has organized itself in a way that excludes and impoverishes a bunch of people who could have been perfectly happy in the state of nature picking berries and hunting aurochs. It’s not your fault, and if they’re going to give you compensation you take it. And we had better make this perfectly clear now, so that when everything becomes automated and run by robots and we’re all behind the curve, everybody agrees that us continuing to exist is still okay.

Likewise with intellectual ability. When someone feels sad because they can’t be a great scientist, it is nice to be able to point out all of their intellectual strengths and tell them “Yes you can, if only you put your mind to it!” But this is often not true. At that point you have to say “f@#k it” and tell them to stop tying their self-worth to being a great scientist. And we had better establish that now, before transhumanists succeed in creating superintelligence and we all have to come to terms with our intellectual inferiority.

VI.

But I think the situation can also be somewhat rosier than that.

Ozy once told me that the law of comparative advantage was one of the most inspirational things they had ever read. This was sufficiently strange that I demanded an explanation.

Ozy said that it proves everyone can contribute. Even if you are worse than everyone else at everything, you can still participate in global trade and other people will pay you money. It may not be very much money, but it will be some, and it will be a measure of how your actions are making other people better off and they are grateful for your existence.

(in real life this doesn’t work for a couple of reasons, but who cares about real life when we have a theory?)

After some thought, I was also inspired by this.

I’m never going to be a great mathematician or Elon Musk. But if I pursue my comparative advantage, which right now is medicine, I can still make money. And if I feel like it, I can donate it to mathematics research. Or anti-aging research. Or the same people Elon Musk donates his money to. They will use it to hire smart people with important talents that I lack, and I will be at least partially responsible for those people’s successes.

If I had an IQ of 70, I think I would still want to pursue my comparative advantage – even if that was ditch-digging, or whatever – and donate that money to important causes. It might not be very much money, but it would be some.

Our modern word “talent” comes from the Greek word talenton, a certain amount of precious metal sometimes used as a denomination of money. The etymology passes through a parable of Jesus’. A master calls three servants to him and gives the first five talents, the second two talents, and the third one talent. The first two servants invest the money and double it. The third literally buries it in a hole. The master comes back later and praises the first two servants, but sends the third servant to Hell (metaphor? what metaphor?).

Various people have come up with various interpretations, but the most popular says that God gives all of us different amounts of resources, and He will judge us based on how well we use these resources rather than on how many He gave us. It would be stupid to give your first servant five loads of silver, then your second servant two loads of silver, then immediately start chewing out the second servant for having less silver than the first one. And if both servants invested their silver wisely, it would be silly to chew out the second one for ending up with less profit when he started with less seed capital. The moral seems to be that if you take what God gives you and use it wisely, you’re fine.

The modern word “talent” comes from this parable. It implies “a thing God has given you which you can invest and give back”.

So if I were a ditch-digger, I think I would dig ditches, donate a portion of the small amount I made, and trust that I had done what I could with the talents I was given.

VII.

The Jews also talk about how God judges you for your gifts. Rabbi Zusya once said that when he died, he wasn’t worried that God would ask him “Why weren’t you Moses?” or “Why weren’t you Solomon?” But he did worry that God might ask “Why weren’t you Rabbi Zusya?”

And this is part of why it’s important for me to believe in innate ability, and especially differences in innate ability. If everything comes down to hard work and positive attitude, then God has every right to ask me “Why weren’t you Srinivasa Ramanujan?” or “Why weren’t you Elon Musk?”

If everyone is legitimately a different person with a different brain and different talents and abilities, then all God gets to ask me is whether or not I was Scott Alexander.

This seems like a gratifyingly low bar.

[more to come on this subject later]

Anybody betting on two general elections this year should first read this analysis by Chris Huhne

The political and legal environment makes it very difficult

Nearly a year ago the former LD cabinet minister, Chris Huhne, wrote an excellent piece in the Guardian on how the Fixed-term Parliaments Act would make it difficult to hold a second general election shortly after an indecisive outcome – as looks highly likely in May.

“The Fixed-term Parliaments Act means that the prime minister can no longer call an election at a time of his choosing. … Elections are held every five years, except when two thirds of the Commons votes for one, or a government loses a vote of confidence and there is no further successful vote within 14 days.

True, a minority-government prime minister could engineer the loss of a vote of no confidence, but they would then run the risk that the main opposition party would establish a new administration and delay the election. Since the prime minister would only attempt to force an election if he thought he would win, the opposition would have every incentive to avoid losing. So that stratagem looks flawed.

The fixed-term act introduces a further difficulty for minority governments, because the timing of an election would now be in the hands of the combined opposition majority. Any loss of a vote of confidence would trigger an election if the government could not scrabble together a majority. A minority government would constantly be at risk of an election being called at a time of the opposition’s choosing.

The opposition strategy would then be clear: let the government flounder. Deny or amend ministerial legislation. Maybe even deprive the government of money. None of this would cause it to fall, because the fixed-term act requires a specific vote of no confidence. When the administration was looking truly shambolic, you force and win a vote of no confidence, calling an election at the point of the governing party’s maximum disadvantage.

What if Ed Miliband and David Cameron begin to dislike the fixed term? What if they were jointly keen to re-establish the prime minister’s prerogative to call general elections? They could, of course, combine to do so. But why would the opposition to a minority government want to hand over control of the timing of the next general election to its principal opponent?

All of which tells me that minority governments will be less popular in future, and that coalitions are more likely to be the response to a hung parliament. And as for hung parliaments, we shall see. If Labour and the Tories are closely competitive, and if Scotland stays part of the union, it will be hard for winner to take all.”

I find it hard to argue with Huhne’s logic.

Mike Smithson

For 11 years viewing politics from OUTSIDE the Westminster bubble

Not Watching This Weekend: Mr Benn

The Trailer: Voiceover man begins with ‘some heroes wear many costumes’. The whole trailer is shot through heavy filters, mostly dark and grey just to ensure everyone is clear that this is a Serious Film taking the source material Seriously. As it’s a trailer, we see all the best bits of the film mashed together through hyper-kinetic editing, complete with out of context quotes scattered over them.

We see Mr Benn (Benedict Cumberbatch) in a pinstripe suit and bowler hat, and hear the Shopkeeper (Jim Broadbent) give a garbled explanation of how this is a role handed down from generation to generation to protect history and fantasy. There are flash cuts of him fighting as a knight and as a gladiator, doing complicated things as a spaceman and casting magic as a wizard, all shot in glorious Grimdark-Serious-O-Vision.

‘Protecting them from who?’ he asks, and the trailer shows the designated Bad Guy (Matt Smith), possibly interspersed with occasional shots of the Official Love Interest (Sienna Miller), cropping up in various times and places. Then the trailer slows to show us the Big Dramatic Scene.

Mr Benn, in a cowboy outfit, is celebrating something when a bloodstained fez rolls across the screen and lands against his feet. He picks it up, looks out and sees the Bad Guy wearing a suit and bowler hat.

“You wore a costume and stepped into my world. Didn’t you realise that I could wear one and step into yours too?”

Another blizzard of disconnected images then the screen goes black. Voiceover Guy: ‘This summer, choose your outfit carefully.’ Graphics tell us MR BENN: THE MOVIE is Coming Soon.

Likelihood of director and writer claiming that this was always the intended vision for the character: High

Likelihood of anyone who’s seen the TV series keeping a straight face while watching it: Low

Likelihood of straight-to-streaming sequels with a tiny budget and none of the original cast: High

Slavery and the Creation of a Counterfeit ‘Biblical Civilization’ in America: 1619-1865

You probably weren’t able to get to Oxford University for the 2013 Astor Lecture, delivered by Notre Dame historian Mark Noll. I wasn’t either. But don’t worry — Deane Galbraith has us covered, providing an .mp3 of Noll’s lecture.

“Mark Noll identifies the year 1865 as the year in which the American Bible civilization cracked,” Galbraith writes, teasing Noll’s lecture, titled “Biblical Criticism and the Decline of America’s Biblical Civilisation, 1865-1918.”

Allow me to do a couple of imprudent and dangerous things here. First, I’m going to respond to Noll’s lecture before I’ve had a chance to listen to it. And, second, I’m going to disagree with Mark Noll about the history of Christianity in America. The latter is particularly reckless, since Noll is an incredibly smart and perceptive historian who seems to have read and digested everything about the history of American Christianity and surely knows more about that subject than I ever will.

But, still, this needs to be said: the title of Noll’s lecture is misleading and … well, it’s just wrong. It’s based on a false premise.

Any discussion of “the Decline of America’s Biblical Civilization, 1865-1918” is doomed from the start because it assumes that America was some kind of “biblical civilization” in 1865. It wasn’t. “America’s Biblical Civilization” could not have cracked in 1865 because the possibility of such a thing ever existing had been negated back in 1619.

Perhaps American Christians in 1865 imagined they were living in a “Biblical Civilization.” After all, the majority of white American Christians in the centuries leading up to 1865 regarded themselves as “biblical” people. They said as much quite a bit.

But if we’re going to understand America and American Christianity, we can’t just take their word for it. We have to evaluate what they meant by this claim, whether that meaning is meaningful, and whether it is in any sense accurate.

I think that claim was accurate, but I do not think it was meaningful. Because slavery.

The existence of slavery, and the reliance upon slavery, renders the claim of “America’s Biblical Civilization: 1619-1865” absurd and meaningless, as “any man whose judgment is not blinded by prejudice, or who is not at heart a slaveholder, shall not confess to be right and just.”

That last bit is from Frederick Douglass’ 1852 speech “What to the Slave Is the Fourth of July?” wherein Douglass contends directly with the claim that America was ever, in any meaningful sense, a “biblical civilization”:

The church of this country is not only indifferent to the wrongs of the slave, it actually takes sides with the oppressors. It has made itself the bulwark of American slavery, and the shield of American slave-hunters. Many of its most eloquent Divines, who stand as the very lights of the church, have shamelessly given the sanction of religion and the Bible to the whole slave system. They have taught that man may, properly, be a slave; that the relation of master and slave is ordained of God; that to send back an escaped bondman to his master is clearly the duty of all the followers of the Lord Jesus Christ; and this horrible blasphemy is palmed off upon the world for Christianity.

Yeah, that.

Those same “eloquent Divines” were not just the foremost proponents of American-style “biblical” Christianity, they were the inventors and creators of the thing. And the thing was invented and created and designed in order to give “the sanction of religion and the Bible to the whole slave system.”

Christendom never described itself as “biblical civilization” until the 17th century. For the previous 16 centuries of Christianity, the Bible did not play such a role in the way that Christians and “Christian civilization” identified and imagined themselves. Such an idea just wasn’t available or possible before then. The transformation of Christendom from “Christian civilization” into “biblical civilization” was not a thing that could have happened until after the printing press and the widespread availability of non-Latin translations.

And as soon as such a thing became possible — as soon as the English-speaking colonists who would later become “Americans” first had the opportunity to redefine themselves and begin to identify as “biblical” Christians — it began to be shaped by the nearly concurrent rise of the institution of slavery.

The King James Version of the Bible was completed in 1611. The first African slaves were imported into Jamestown in 1619. “Biblical” Christianity and the idea of “biblical civilization” grew up alongside slavery. The latter shaped the former, and the two things have been inextricably intertwined ever since.

The invention of “biblical” Christianity and of the idea of “biblical civilization” was for the purpose of accommodating slavery. That may not have been its exclusive purpose, but it was an essential function of the thing. It was a concept shaped and designed and tailored so that it could and would defend and perpetuate slavery.

In broad terms, it was a mechanism to allow American Christians to avoid the inconveniently unambiguous implications of the question “Is slavery Christian?” Put that way, Christians can offer only one answer. But what if we change the question? What if we begin to ask, instead, “Is slavery biblical?” Ah, now we have room to work. Now we can introduce technicalities and proof-texts. Now we can shift the debate from the damning, insurmountable obstacles of the greatest commandment and the imperative to “do justice,” and we can begin, instead, debating arcane questions involving the exposition of disparate isolated texts.

It was a dodge. And that’s a feature, not a bug. This is what American-style “Bible Christianity” was designed and intended to do.

That’s why the perpetual antebellum “debate” among American Christians over whether or not slavery was “biblical” was rigged from the get-go. “Biblical” was an adjective primarily designed to describe a form of Christianity that had mutated to approve and defend the institution of slavery. “Biblical” may have also meant other things, but that was always a part of its meaning. That’s why those pre-1865 debates over whether or not “slavery is biblical” were ultimately just a form of disingenuous theater.

Of course slavery was “biblical” and of course opposition to slavery was “unbiblical.” That was what those words meant. That was the whole point of declaring that American Christians should think of themselves as a biblical civilization rather than a Christian one.

All of which is why the dominant narrative in historical and theological discussion of pre-1865 American Christian “debates” about slavery gets the whole thing backwards and upside-down. I love Mark Noll’s The Civil War as Theological Crisis. You should read it. It’s a terrific, incisive, engaging book full of profound questions and insights. But it also gets the core of its argument backwards and upside-down.