Neuronal cells present periodic trains of localized voltage spikes involving a large number of different ionic channels. A relevant question is whether this is a cooperative effect or could also be an intrinsic property of individual channels. Here we use a Langevin formulation for the stochastic dynamics of a pair of Na and K ionic channels. These two channels are simple gated pore models in which a minimal set of degrees of freedom follows standard statistical physics. The whole system is fully autonomous, with no external energy input other than the chemical energy of the different ionic concentrations across the membrane. As a result, we show that a single pair of different ionic channels can sustain periodic spikes in the membrane potential. The spikes are due to the interaction between the membrane potential, the ionic flows, and the dynamics of the internal parts (gates) of each channel's structure. A spike involves a series of dynamical steps, the most relevant of which is the leak of Na ions. Missing spike events are caused by the altered functioning of specific parts of the model. The time-dependent spike structure is comparable to experimental data.

Periodic spiking by a pair of ionic channels. (arXiv:1804.05786v1 [physics.bio-ph])

Review of Bryan Caplan’s The Case Against Education

If ever a book existed that I’d judge harshly by its cover—and for which nothing inside could possibly make me reverse my harsh judgment—Bryan Caplan’s The Case Against Education would seem like it. The title is not a gimmick; the book’s argument is exactly what it says on the tin. Caplan—an economist at George Mason University, home of perhaps the most notoriously libertarian economics department on the planet—holds that most of the benefit of education to students (he estimates around 80%, but certainly more than half) is about signalling the students’ preexisting abilities, rather than teaching or improving the students in any way. He includes the entire educational spectrum in his indictment, from elementary school all the way through college and graduate programs. He does have a soft spot for education that can be shown empirically to improve worker productivity, such as technical and vocational training and apprenticeships. In other words, precisely the kind of education that many readers of this blog may have spent their lives trying to avoid.

I’ve spent almost my whole conscious existence in academia, as a student and postdoc and then as a computer science professor. CS is spared the full wrath that Caplan unleashes on majors like English and history: it does, after all, impart some undeniable real-world skills. Alas, I’m not one of the CS professors who teaches anything obviously useful, like how to code or manage a project. When I teach undergrads headed for industry, my only role is to help them understand concepts that they probably won’t need in their day jobs, such as which problems are impossible or intractable for today’s computers; among those, which might be efficiently solved by quantum computers decades in the future; and which parts of our understanding of all this can be mathematically proven.

Granted, my teaching evaluations have been [clears throat] consistently excellent. And the courses I teach aren’t major requirements, so the students come—presumably?—because they actually want to know the stuff. And my former students who went into industry have emailed me, or cornered me, to tell me how much my courses helped them with their careers. OK, but how? Often, it’s something about my class having helped them land their dream job, by impressing the recruiters with their depth of theoretical understanding. As we’ll see, this is an “application” that would make Caplan smile knowingly.

If Caplan were to get his way, the world I love would be decimated. Indeed, Caplan muses toward the end of the book that the world he loves would be decimated too: in a world where educational investment no longer exceeded what was economically rational, he might no longer get to sit around with other economics professors discussing what he finds interesting. But he consoles himself with the thought that decisionmakers won’t listen to him anyway, so it won’t happen.

It’s tempting to reply to Caplan: “now now, your pessimism about anybody heeding your message seems unwarranted. Have anti-intellectual zealots not just taken control of the United States, with an explicit platform of sticking it to the educated elites, and restoring the primacy of lower-education jobs like coal mining, no matter the long-term costs to the economy or the planet? So cheer up, they might listen to you!”

Indeed, given the current stakes, one might simply say: Caplan has set himself against the values that are the incredibly fragile preconditions for all academic debate—even, ironically, debate about the value of academia, like the one we’re now having. So if we want such debate to continue, then we have no choice but to treat Caplan as an enemy, and frame the discussion around how best to frustrate his goals.

In response to an excerpt of Caplan’s book in The Atlantic, my friend Sean Carroll tweeted:

It makes me deeply sad that a tenured university professor could write something like this about higher education. There is more to learning than the labor market.

Why should anyone with my basic values, or Sean’s, give Caplan’s thesis any further consideration? As far as I can tell, there are only two reasons: (1) common sense, and (2) the data.

In his book, Caplan presents dozens of tables and graphs, but he also repeatedly asks his readers to consult their own memories—exploiting the fact that we all have firsthand experience of school. He asks: if education is about improving students’ “human capital,” then why are students so thrilled when class gets cancelled for a snowstorm? Why aren’t students upset to be cheated out of some of the career-enhancing training that they, or their parents, are paying so much for? Why, more generally, do most students do everything in their power—in some cases, outright cheating—to minimize the work they put in for the grade they receive? Is there any product besides higher education, Caplan asks, that people pay hundreds of thousands of dollars for, and then try to consume as little of as they can get away with? Also, why don’t more students save hundreds of thousands of dollars by simply showing up at a university and sitting in on classes without paying—something that universities make zero effort to stop? (Many professors would be flattered, and would even add you to the course email list, entertain your questions, and give you access to the assignments—though they wouldn’t grade your assignments.)

And: if the value of education comes from what it teaches you, how do we explain the fact that students forget almost everything so soon after the final exam, as attested by both experience and the data? Why are employers satisfied with a years-ago degree; why don’t they test applicants to see how much understanding they’ve retained?

Or if education isn’t about any of the specific facts being imparted, but about “learning how to learn” or “learning how to think creatively”—then how is it that studies find academic coursework has so little effect on students’ general learning and reasoning abilities either? That, when there is an improvement in reasoning ability, it’s tightly concentrated on the subject matter of the course, and even then it quickly fades away after the course is over?

More broadly, if the value of mass education derives from making people more educated, how do we explain the fact that high-school and college graduates, most of them, remain so abysmally ignorant? After 12-16 years in something called “school,” large percentages of Americans still don’t know that the earth orbits the sun; believe that heavier objects fall faster than lighter ones and that only genetically modified organisms contain genes; and can’t locate the US or China on a map. Are we really to believe, asks Caplan, that these apparent dunces have nevertheless become “deeper thinkers” by virtue of their schooling, in some holistic, impossible-to-measure way? Or that they would’ve been even more ignorant without school? But how much more ignorant can you be? They could be illiterate, yes: Caplan grants the utility of teaching reading, writing, and arithmetic. But how much beyond the three R’s (if those) do typical students retain, let alone use?

Caplan also poses the usual questions: if you’re not a scientist, engineer, or academic (or even if you are), how much of your undergraduate education do you use in your day job? How well did the course content match what, in retrospect, you feel someone starting your job really needs to know? Could your professors do your job? If not, then how were they able to teach you to do it better?

Caplan acknowledges the existence of inspiring teachers who transform their students' lives, in ways that need not be reflected in their paychecks: he mentions Robin Williams' character in Dead Poets Society. But he asks: how many such teachers did you have? If the Robin Williamses are vastly outnumbered by the drudges, then wouldn't it make more sense for students to stream the former directly into their homes via the Internet—as they can now do for free?

OK, but if school teaches so little, then how do we explain the fact that, at least for those students who are actually able to complete good degrees, research confirms that (on average) having gone to school really does pay, exactly as advertised? Employers do pay more for a college graduate—yes, even an English or art history major—than for a dropout. More generally, starting salary rises monotonically with level of education completed. Employers aren’t known for a self-sacrificing eagerness to overpay. Are they systematically mistaken about the value of school?

Synthesizing decades of work by other economists, Caplan defends the view that the main economic function of school is to give students a way to signal their preexisting qualities, ones that correlate with being competent workers in a modern economy. I.e., that school is tacitly a huge system for winnowing and certifying young people, which also fulfills various subsidiary functions, like keeping said young people off the street, socializing them, maybe occasionally even teaching them something. Caplan holds that, judged as a certification system, school actually works—well enough to justify graduates’ higher starting salaries, without needing to postulate any altruistic conspiracy on the part of employers.

For Caplan, a smoking gun for the signaling theory is the huge salary premium of an actual degree, compared to the relatively tiny premium for each additional year of schooling other than the degree year—even when we hold everything else constant, like the students’ academic performance. In Caplan’s view, this “sheepskin effect” even lets us quantitatively estimate how much of the salary premium on education reflects actual student learning, as opposed to the students signaling their suitability to be hired in a socially approved way (namely, with a diploma or “sheepskin”).

Caplan knows that the signaling story raises an immediate problem: namely, if employers just want the most capable workers, then knowing everything above, why don’t they eagerly recruit teenagers who score highly on the SAT or IQ tests? (Or why don’t they make job offers to high-school seniors with Harvard acceptance letters, skipping the part where the seniors have to actually go to Harvard?)

Some people think the answer is that employers fear getting sued: in the 1971 Griggs v. Duke Power Co. case, the US Supreme Court placed restrictions on the use of intelligence tests in hiring, because of disparate impact on minorities. Caplan, however, rejects this explanation, pointing out that it would be child's play for employers to design interview processes that functioned as proxy IQ tests, were that what the employers wanted.

Caplan's theory is instead that employers don't value intelligence alone. Rather, they care about the conjunction of intelligence with two other traits: conscientiousness and conformity. They want smart workers who will also show up on time, reliably turn in the work they're supposed to, and jump through whatever hoops authorities put in front of them. The main purpose of school, over and above certifying intelligence, is to serve as a hugely costly and time-consuming—and therefore reliable—signal that the graduates are indeed conscientious conformists. The sheer game-theoretic wastefulness of the whole enterprise rivals the peacock's tail or the bowerbird's ornate bower.

But if true, this raises yet another question. In the signaling story, graduating students (and their parents) are happy that the students’ degrees land them good jobs. Employers are happy that the education system supplies them with valuable workers, pre-screened for intelligence, conscientiousness, and conformity. Even professors are happy that they get paid to do research and teach about topics that interest them, however irrelevant those topics might be to the workplace. So if so many people are happy, who cares if, from an economic standpoint, it’s all a big signaling charade, with very little learning taking place?

For Caplan, the problem is this: because we've all labored under the mistaken theory that education imparts vital skills for a modern economy, there are trillions of dollars of government funding for every level of education—and that, in turn, removes the only obstacle to a credentialing arms race. The equilibrium keeps moving over the decades, with more and more years of mostly-pointless schooling required to prove the same level of conscientiousness and conformity as before. Jobs that used to require only a high-school diploma now require a bachelor's; jobs that used to require only a bachelor's now require a master's, and so on—despite the fact that the jobs themselves don't seem to have changed appreciably.

For Caplan, a thoroughgoing libertarian, the solution is as obvious as it is radical: abolish government funding for education. (Yes, he explicitly advocates a complete “separation of school and state.”) Or if some state role in education must be retained, then let it concentrate on the three R’s and on practical job skills. But what should teenagers do, if we’re no longer urging them to finish high school? Apparently worried that he hasn’t yet outraged liberals enough, Caplan helpfully suggests that we relax the laws around child labor. After all, he says, if we’ve decided anyway that teenagers who aren’t academically inclined should suffer through years of drudgery, then instead of warming a classroom seat, why shouldn’t they apprentice themselves to a carpenter or a roofer? That way they could contribute to the economy, and gain the independence from their parents that most of them covet, and learn skills that they’d be much more likely to remember and use than the dissection of owl pellets. Even if working a real job involved drudgery, at least it wouldn’t be as pointless as the drudgery of school.

Given his conclusions, and the way he arrives at them, Caplan realizes that he’ll come across to many as a cartoon stereotype of a narrow-minded economist, who “knows the price of everything but the value of nothing.” So he includes some final chapters in which, setting aside the charts and graphs, he explains how he really feels about education. This is the context for what I found to be the most striking passages in the book:

I am an economist and a cynic, but I'm not a typical cynical economist. I'm a cynical idealist. I embrace the ideal of transformative education. I believe wholeheartedly in the life of the mind. What I'm cynical about is people … I don't hate education. Rather I love education too much to accept our Orwellian substitute. What's Orwellian about the status quo? Most fundamentally, the idea of compulsory enlightenment … Many idealists object that the Internet provides enlightenment only for those who seek it. They're right, but petulant to ask for more. Enlightenment is a state of mind, not a skill—and state of mind, unlike skill, is easily faked. When schools require enlightenment, students predictably respond by feigning interest in ideas and culture, giving educators a false sense of accomplishment. (pp. 259-261)

OK, but if one embraces the ideal, then rather than dynamiting the education system, why not work to improve it? According to Caplan, the answer is that we don’t know whether it’s even possible to build a mass education system that actually works (by his lights). He says that, if we discover that we’re wasting trillions of dollars on some sector, the first order of business is simply to stop the waste. Only later should we entertain arguments about whether we should restart the spending in some new, better way, and we shouldn’t presuppose that the sector in question will win out over others.

Above, I took pains to set out Caplan’s argument as faithfully as I could, before trying to pass judgment on it. At some point in a review, though, the hour of judgment arrives.

I think Caplan gets many things right—even unpopular things that are difficult for academics to admit. It’s true that a large fraction of what passes for education doesn’t deserve the name—even if, as a practical matter, it’s far from obvious how to cut that fraction without also destroying what’s precious and irreplaceable. He’s right that there’s no sense in badgering weak students to go to college if those students are just going to struggle and drop out and then be saddled with debt. He’s right that we should support vocational education and other non-traditional options to serve the needs of all students. Nor am I scandalized by the thought of teenagers apprenticing themselves to craftspeople, learning skills that they’ll actually value while gaining independence and starting to contribute to society. This, it seems to me, is a system that worked for most of human history, and it would have to fail pretty badly in order to do worse than, let’s say, the average American high school. And in the wake of the disastrous political upheavals of the last few years, I guess the entire world now knows that, when people complain that the economy isn’t working well enough for non-college-graduates, we “technocratic elites” had better have a better answer ready than “well then go to college, like we did.”

Yes, probably the state has a compelling interest in trying to make sure nearly everyone is literate, and probably most 8-year-olds have no clue what’s best for themselves. But at least from adolescence onward, I think that enormous deference ought to be given to students’ choices. The idea that “free will” (in the practical rather than metaphysical sense) descends on us like a halo on our 18th birthdays, having been absent beforehand, is an obvious fiction. And we all know it’s fiction—but it strikes me as often a destructive fiction, when law and tradition force us to pretend that we believe it.

Some of Caplan’s ideas dovetail with the thoughts I’ve had myself since childhood on how to make the school experience less horrible—though I never framed my own thoughts as “against education.” Make middle and high schools more like universities, with freedom of movement and a wide range of offerings for students to choose from. Abolish hall passes and detentions for lateness: just like in college, the teacher is offering a resource to students, not imprisoning them in a dungeon. Don’t segregate by age; just offer a course or activity, and let kids of any age who are interested show up. And let kids learn at their own pace. Don’t force them to learn things they aren’t ready for: let them love Shakespeare because they came to him out of interest, rather than loathing him because he was forced down their throats. Never, ever try to prevent kids from learning material they are ready for: instead of telling an 11-year-old teaching herself calculus to go back to long division until she’s the right age (does that happen? ask how I know…), say: “OK hotshot, so you can differentiate a few functions, but can you handle these here books on linear algebra and group theory, like Terry Tao could have when he was your age?”

Caplan mentions preschool as the one part of the educational system that strikes him as least broken. Not because it has any long-term effects on kids’ mental development (it might not), just because the tots enjoy it at the time. They get introduced to a wide range of fun activities. They’re given ample free time, whether for playing with friends or for building or drawing by themselves. They’re usually happy to be dropped off. And we could add: no one normally minds if parents drop their kids off late, or pick them up early, or take them out for a few days. The preschool is just a resource for the kids’ benefit, not a never-ending conformity test. As a father who’s now seen his daughter in three preschools, this matches my experience.

Having said all this, I’m not sure I want to live in the world of Caplan’s “complete separation of school and state.” And I’m not using “I’m not sure” only as a euphemism for “I don’t.” Caplan is proposing a radical change that would take civilization into uncharted territory: as he himself notes, there’s not a single advanced country on earth that’s done what he advocates. The trend has everywhere been in the opposite direction, to invest more in education as countries get richer and more technology-based. Where there have been massive cutbacks to education, the causes have usually been things like famine or war.

So I have the same skepticism of Caplan’s project that I’d have (ironically) of Bolshevism or any other revolutionary project. I say to him: don’t just persuade me, show me. Show me a case where this has worked. In the social world, unlike the mathematical world, I put little stock in long chains of reasoning unchecked by experience.

Caplan explicitly invites his readers to test his assertions against their own lives. When I do so, I come back with a mixed verdict. Before college, as you may have gathered, I find much to be said for Caplan’s thesis that the majority of school is makework, the main purposes of which are to keep the students out of trouble and on the premises, and to certify their conscientiousness and conformity. There are inspiring teachers here and there, but they’re usually swimming against the tide. I still feel lucky that I was able to finagle my way out by age 15, and enter Clarkson University and then Cornell with only a G.E.D.

In undergrad, on the other hand, and later in grad school at Berkeley, my experience was nothing like what Caplan describes. The professors were actual experts: people who I looked up to or even idolized. I wanted to learn what they wanted to teach. (And if that ever wasn’t the case, I could switch to a different class, excepting some major requirements.) But was it useful?

As I look back, many of my math and CS classes were grueling bootcamps on how to prove theorems, how to design algorithms, how to code. Most of the learning took place not in the classroom but alone, in my dorm, as I struggled with the assignments—having signed up for the most advanced classes that would allow me in, and thereby left myself no escape except to prove to the professor that I belonged there. In principle, perhaps, I could have learned the material on my own, but in reality I wouldn’t have. I don’t still use all of the specific tools I acquired, though I do still use a great many of them, from the Gram-Schmidt procedure to Gaussian integrals to finding my way around a finite group or field. Even if I didn’t use any of the tools, though, this gauntlet is what upgraded me from another math-competition punk to someone who could actually write research papers with long proofs. For better or worse, it made me what I am.

Just as useful as the math and CS courses were the writing seminars—places where I had to write, and where my every word got critiqued by the professor and my fellow students, so I had to do a passable job. Again: intensive forced practice in what I now do every day. And the fact that it was forced was now fine, because, like some leather-bound masochist, I’d asked to be forced.

On hearing my story, Caplan would be unfazed. Of course college is immensely useful, he’d say … for those who go on to become professors, like me or him. He “merely” questions the value of higher education for almost everyone else.

OK, but if professors are at least good at producing more people like themselves, able to teach and do research, isn’t that something, a base we can build on that isn’t all about signaling? And more pointedly: if this system is how the basic research enterprise perpetuates itself, then shouldn’t we be really damned careful with it, lest we slaughter the golden goose?

Except that Caplan is skeptical of the entire enterprise of basic research. He writes:

Researchers who specifically test whether education accelerates progress have little to show for their efforts. One could reply that, given all the flaws of long-run macroeconomic data, we should ignore academic research in favor of common sense. But what does common sense really say? … True, ivory tower self-indulgence occasionally revolutionizes an industry. Yet common sense insists the best way to discover useful ideas is to search for useful ideas—not to search for whatever fascinates you and pray it turns out to be useful (p. 175).

I don’t know if common sense insists that, but if it does, then I feel on firm ground to say that common sense is woefully inadequate. It’s easy to look at most basic research, and say: this will probably never be useful for anything. But then if you survey the inventions that did change the world over the past century—the transistor, the laser, the Web, Google—you find that almost none would have happened without what Caplan calls “ivory tower self-indulgence.” What didn’t come directly from universities came from entities (Bell Labs, DARPA, CERN) that wouldn’t have been thinkable without universities, and that themselves were largely freed from short-term market pressures by governments, like universities are.

Caplan’s skepticism of basic research reminded me of a comment in Nick Bostrom’s book Superintelligence:

A colleague of mine likes to point out that a Fields Medal (the highest honor in mathematics) indicates two things about the recipient: that he was capable of accomplishing something important, and that he didn’t. Though harsh, the remark hints at a truth. (p. 314)

I work in theoretical computer science: a field that doesn't itself win Fields Medals (at least not yet), but that has occasion to use parts of math that have won Fields Medals. Of course, the stuff we use cutting-edge math for might itself be dismissed as "ivory tower self-indulgence." Except then the cryptographers building the successors to Bitcoin, or the big-data or machine-learning people, turn out to want the stuff we were talking about at conferences 15 years ago—and we discover to our surprise that, just as the mathematicians gave us a higher platform to stand on, so we seem to have built a higher platform for the practitioners. The long road from Hilbert to Gödel to Turing and von Neumann to Eckert and Mauchly to Gates and Jobs is still open for traffic today.

Yes, there’s plenty of math that strikes even me as boutique scholasticism: a way to signal the brilliance of the people doing it, by solving problems that require years just to understand their statements, and whose “motivations” are about 5,000 steps removed from anything Caplan or Bostrom would recognize as motivation. But where I part ways is that there’s also math that looked to me like boutique scholasticism, until Greg Kuperberg or Ketan Mulmuley or someone else finally managed to explain it to me, and I said: “ah, so that’s why Mumford or Connes or Witten cared so much about this. It seems … almost like an ordinary applied engineering question, albeit one from the year 2130 or something, being impatiently studied by people a few moves ahead of everyone else in humanity’s chess game against reality. It will be pretty sweet once the rest of the world catches up to this.”

I have a more prosaic worry about Caplan’s program. If the world he advocates were actually brought into being, I suspect the people responsible wouldn’t be nerdy economics professors like himself, who have principled objections to “forced enlightenment” and to signalling charades, yet still maintain warm fuzzies for the ideals of learning. Rather, the “reformers” would be more on the model of, say, Steve Bannon or Scott Pruitt or Alex Jones: people who’d gleefully take a torch to the universities, fortresses of the despised intellectual elite, not in the conviction that this wouldn’t plunge humanity back into the Dark Ages, but in the hope that it would.

When the US Congress was debating whether to cancel the Superconducting Supercollider, a few condensed-matter physicists famously testified against the project. They thought that $10-$20 billion for a single experiment was excessive, and that they could provide way more societal value with that kind of money were it reallocated to them. We all know what happened: the SSC was cancelled, and of the money that was freed up, 0%—absolutely none of it—went to any of the other research favored by the SSC’s opponents.

If Caplan were to get his way, I fear that the story would be similar. Caplan talks about all the other priorities—from feeding the world’s poor to curing diseases to fixing crumbling infrastructure—that could be funded using the trillions currently wasted on runaway credential signaling. But in any future I can plausibly imagine where the government actually axes education, the savings go to things like enriching the leaders’ cronies and launching vanity wars.

My preferences for American politics have two tiers. In the first tier, I simply want the Democrats to vanquish the Republicans, in every office from president down to dogcatcher, in order to prevent further spiraling into nihilistic quasi-fascism, and to restore the baseline non-horribleness that we know is possible for rich liberal democracies. Then, in the second tier, I want the libertarians and rationalists and nerdy economists and Slate Star Codex readers to be able to experiment—that’s a key word here—with whether they can use futarchy and prediction markets and pricing-in-lieu-of-regulation and other nifty ideas to improve dramatically over the baseline liberal order. I don’t expect that I’ll ever get what I want; I’ll be extremely lucky even to get the first half of it. But I find that my desires regarding Caplan’s program fit into the same mold. First and foremost, save education from those who’d destroy it because they hate the life of the mind. Then and only then, let people experiment with taking a surgical scalpel to education, removing from it the tumor of forced enlightenment, because they love the life of the mind.

Administrators at CUNY and Duke Aren’t Going to Do Anything About Students Who Disrupted Events

In the wake of shutdown attempts led by student-activists, administrators at Duke University and the City University of New York have finally made clear what their battle plan is for deterring such behavior in the future: do nothing.

Activists at Duke recently hijacked an alumni event and shouted down their own president, Vincent Price. The students were then shocked and outraged to learn that the administration was considering punishing them—even the mere suggestion of discipline was triggering and would exacerbate their "pre-existing mental health conditions," they claimed.

And so the administration folded. All student conduct investigations have been closed, according to The Daily Tar Heel.

At CUNY, student-protesters crashed a planned speech by South Texas College of Law Professor Josh Blackman. They talked over him for the first ten minutes of the event before leaving, which prevented Blackman from delivering his full remarks and may have intimidated would-be attendees. CUNY Law Dean Mary Lu Bilek essentially said that this was fine: Blackman was able to speak for some of the time, so no college policy had been violated. "This non-violent, limited protest was a reasonable exercise of protected free speech, and it did not violate any university policy," she said.

Several CUNY professors (Martin Burke, David Gordon, K.C. Johnson, and David Seidermann) have now written a letter to CUNY Chancellor James Milliken asking him to "reaffirm CUNY's support for the rights of invited speakers to speak and the rights of students in their audience to hear their remarks." According to their letter:

Dean Bilek cited no provision of the student handbook to sustain her claim that "limited" disruptions of an invited speaker's talk do not violate CUNY policy. The handbook, we should note, implies the reverse, holding that "a member of the academic community shall not intentionally obstruct and/or forcibly prevent others from the exercise of their rights. Nor shall she/he interfere with the institution's educational process or facilities, or the rights of those who wish to avail themselves of any of the institution's instructional, personal, administrative, recreational, and community services."

It is noteworthy that, according to Blackman, the disruptors were intimidating enough to discourage some students from entering the room while the protesters were there. He was, he has stated, "not able to give the presentation I wanted—both in terms of duration and content—because of the hecklers. The Dean is simply incorrect when said the protest was only 'limited.'" (Blackman had planned a 45-minute address, to be followed by a question-and-answer session, thereby planning to allow time for CUNY Law students, including his critics, to ask him questions about his arguments.) Photographs of the event show the disruptors not only preventing him from delivering a portion of his planned remarks but also obstructing the audience's view of his PowerPoint presentation.

It's appropriate for university officials to exercise some caution when contemplating disciplinary action against students. But at some point, letting students face absolutely no consequences for such behavior is putting the free speech rights of everyone else at risk.

Lab-Grown Meat Is Coming to Your Supermarket. Ranchers Are Fighting Back: New at Reason

Would you eat a hamburger or a chicken nugget made of meat grown in a laboratory?

Joshua Tetrick, co-founder and CEO of JUST, is betting that you will. The San Francisco-based company has been producing and selling non-animal versions of food, like mayonnaise, since 2013, and it's raised more than $310 million in venture capital.

Tetrick and his team have created products like Just Mayo by identifying plant-based alternatives to common animal products, like eggs, using a combination of lab experiments and machine learning.

JUST is one of a handful of tech companies working to disrupt the meat production industry.

While many of its competitors are pursuing better plant-based meat substitutes, JUST is pushing ahead with so-called "clean meat," or lab-grown animal tissue that requires no farming, no feeding of livestock, and no slaughterhouses, only a single sample from a single animal that's duplicated endlessly.

JUST and companies like it are poised to disrupt the livestock industry. So established players are turning to the government to help protect their turf.

The United States Cattlemen's Association, which declined to participate in this story, submitted a petition still under consideration by the United States Department of Agriculture asking that the words "meat" and "beef" exclude any products that "are neither derived from animals, nor slaughtered in the traditional manner."

Tetrick says accurate labeling will be essential when marketing his lab-grown "clean meat," which he hopes will transcend the vegan vs. carnivore paradigm.

"We don't allow the term 'vegan' to be used in our company," says Tetrick. "That word ends up turning off ninety-nine percent of people."

This isn't Tetrick's first fight with entrenched food interests.

When the company's first product, Just Mayo, appeared on the shelves of major retailers, the American Egg Board went on the offensive.

According to internal emails obtained by MIT researchers through the Freedom of Information Act, Egg Board members tried and failed to get Whole Foods to pull the product from its shelves and hired a network of writers to trash the product on food review sites.

Target stopped selling Just Mayo after receiving an anonymous letter about food safety, but a Food and Drug Administration investigation later found that the product was safe. Investigators failed to identify the author of the letter.

At one point, Egg Board members even discussed putting out a "hit" on Tetrick, with one member writing that he should have his "old buddies from Brooklyn pay him a visit." The officials later told investigators that they were joking.

Whether consumers are ready for lab-grown meat remains to be seen. The company also landed in hot water with the SEC in 2016 after being accused of buying its own products off the shelves to boost sales figures with the goal of raising more venture capital; the company claims it was a quality control measure. No charges resulted from the investigation.

With JUST products in more than 20,000 stores, and plans to release lab-grown clean meat onto the market by the end of the year at a retail price within 30 percent of that of traditional meat, Tetrick is optimistic about the future of the company and the global food system.

"In tomorrow's world, you can eat more meat, hopefully safer meat, even better tasting meat, without eating the animal," says Tetrick.

Produced by Zach Weissmueller. Camera by Alex Manning.

"Scuba" by Metre is licensed under an Attribution-NonCommercial-ShareAlike License (https://creativecommons.org/licenses/by-nc-sa/4.0/) Source: http://freemusicarchive.org/music/Metre/Circuit_1731/Scuba_1957

Artist: http://freemusicarchive.org/music/Metre/

"Space Probe" by Metre is licensed under an Attribution-NonCommercial-ShareAlike License (https://creativecommons.org/licenses/by-nc-sa/4.0/) Source: http://freemusicarchive.org/music/Metre/Circuit_1731/Space_Probe

Artist: http://freemusicarchive.org/music/Metre/

"Deluge" by Cellophane Sam is licensed under an Attribution-NonCommercial 3.0 License (https://creativecommons.org/licenses/by-nc/3.0/us/) Source: http://freemusicarchive.org/music/Cellophane_Sam/Sea_Change/01_Deluge

Artist: http://freemusicarchive.org/music/Cellophane_Sam/

Photo Credits: Sasha Gusov / Axiom Photographic/Newscom

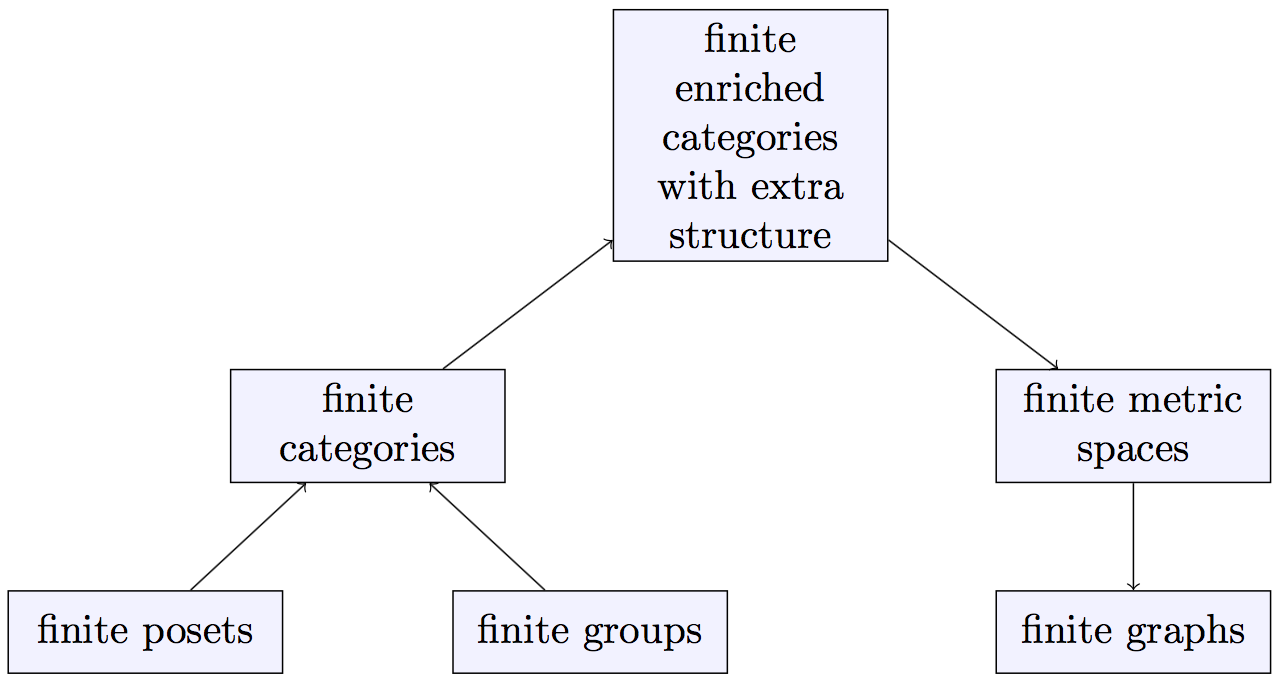

Applied Category Theory Course: Ordered Sets

My applied category theory course based on Fong and Spivak’s book Seven Sketches is going well. Over 250 people have registered for the course, which allows them to ask questions and discuss things. But even if you don’t register you can read my “lectures”.

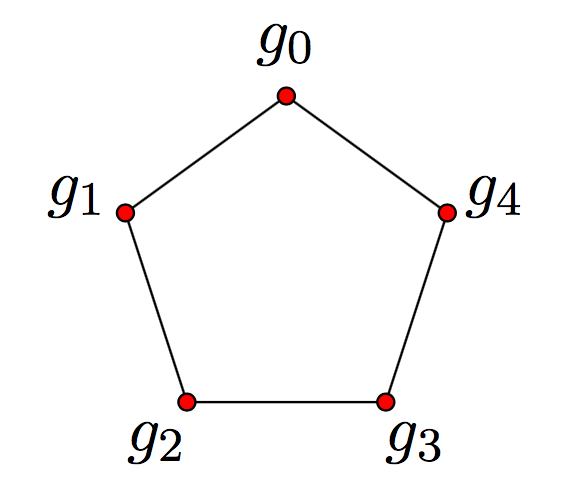

Here are all the lectures on Chapter 1, which is about adjoint functors between posets, and how they interact with meets and joins. We study the applications to logic – both classical logic based on subsets, and the nonstandard version of logic based on partitions. And we show how this math can be used to understand “generative effects”: situations where the whole is more than the sum of its parts!

• Lecture 1 – Introduction

• Lecture 2 – What is Applied Category Theory?

• Lecture 3 – Chapter 1: Preorders

• Lecture 4 – Chapter 1: Galois Connections

• Lecture 5 – Chapter 1: Galois Connections

• Lecture 6 – Chapter 1: Computing Adjoints

• Lecture 7 – Chapter 1: Logic

• Lecture 8 – Chapter 1: The Logic of Subsets

• Lecture 9 – Chapter 1: Adjoints and the Logic of Subsets

• Lecture 10 – Chapter 1: The Logic of Partitions

• Lecture 11 – Chapter 1: The Poset of Partitions

• Lecture 12 – Chapter 1: Generative Effects

• Lecture 13 – Chapter 1: Pulling Back Partitions

• Lecture 14 – Chapter 1: Adjoints, Joins and Meets

• Lecture 15 – Chapter 1: Preserving Joins and Meets

• Lecture 16 – Chapter 1: The Adjoint Functor Theorem for Posets

• Lecture 17 – Chapter 1: The Grand Synthesis
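To make the Galois connections of Chapter 1 concrete, here is a small Python sketch that is not taken from the lectures or the book: it uses the illustrative monotone map f(n) = 3n on the integers ordered by ≤. Its right adjoint should be g(m) = ⌊m/3⌋ and its left adjoint h(m) = ⌈m/3⌉, and the code checks the defining condition f(a) ≤ b ⇔ a ≤ g(b) by brute force on a finite window of integers (the window is an arbitrary assumption). It also recovers g(b) as the largest a with f(a) ≤ b, the join-based formula behind the adjoint functor theorem for posets.

```python
# Galois connections on (Z, <=), checked by brute force on a finite window.
# Assumption: f(n) = 3n is an illustrative monotone map, not taken from the lectures.

WINDOW = range(-30, 31)  # arbitrary finite window of integers to test on


def f(n: int) -> int:
    return 3 * n


def right_adjoint(m: int) -> int:
    # floor(m / 3); Python's // rounds toward negative infinity
    return m // 3


def left_adjoint(m: int) -> int:
    # ceil(m / 3), computed with integer arithmetic only
    return -((-m) // 3)


def brute_right_adjoint(b: int, domain: range = WINDOW) -> int:
    # Largest a with f(a) <= b: the join of {a : f(a) <= b} within the window
    return max(a for a in domain if f(a) <= b)


def is_right_galois() -> bool:
    # f is left adjoint to g  iff  (f(a) <= b  <=>  a <= g(b)) for all a, b
    return all((f(a) <= b) == (a <= right_adjoint(b)) for a in WINDOW for b in WINDOW)


def is_left_galois() -> bool:
    # h is left adjoint to f  iff  (h(b) <= a  <=>  b <= f(a)) for all a, b
    return all((left_adjoint(b) <= a) == (b <= f(a)) for a in WINDOW for b in WINDOW)


if __name__ == "__main__":
    print(is_right_galois(), is_left_galois())               # True True
    print([right_adjoint(m) for m in (-4, -3, 0, 2, 3, 7)])  # [-2, -1, 0, 0, 1, 2]
```

The brute-force search only works because the window is finite; on all of ℤ the same role is played by the join (supremum) of {a : f(a) ≤ b}.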

If you want to discuss these things, please visit the Azimuth Forum and register! Use your full real name as your username, with no spaces, and use a real working email address. If you don’t, I won’t be able to register you. Your email address will be kept confidential.

I’m finding this course a great excuse to put my thoughts about category theory into a more organized form, and it’s displaced most of the time I used to spend on Google+ and Twitter. That’s what I wanted: the conversations in the course are more interesting!

'Qui ouvre une école, ferme une prison.'

The title is a quote from Victor Hugo: "Each time you open a new school, you shut down a prison."

Photo cropped for size and emphasis and brightened from the original here.

A concise history of hookworm in the American south

For more than three centuries, a plague of unshakable lethargy blanketed the American South. The rest of the story, with a video, is at PBS.

It began with “ground itch,” a prickly tingling in the tender webs between the toes, which was soon followed by a dry cough. Weeks later, victims succumbed to an insatiable exhaustion and an impenetrable haziness of the mind that some called stupidity. Adults neglected their fields and children grew pale and listless. Victims developed grossly distended bellies and “angel wings”—emaciated shoulder blades accentuated by hunching. All gazed out dully from sunken sockets with a telltale “fish-eye” stare.

The culprit behind “the germ of laziness,” as the South’s affliction was sometimes called, was Necator americanus—the American murderer. Better known today as the hookworm, millions of those bloodsucking parasites lived, fed, multiplied, and died within the guts of up to 40% of populations stretching from southeastern Texas to West Virginia. Hookworms stymied development throughout the region and bred stereotypes about lazy, moronic Southerners...

“You had an entire class of Southern society—including whites, blacks, and Native Americans—that was looked upon as shiftless, lazy good-for-nothings who can’t do a day’s work,” my mom explained to me. “Hookworms tainted the nation’s picture of what a Southerner looked and acted like.”

How to upper-bound the probability of something bad

Scott Alexander has a new post decrying how rarely experts encode their knowledge in the form of detailed guidelines with conditional statements and loops—or what one could also call flowcharts or expert systems—rather than just blanket recommendations. He gives, as an illustration of what he’s looking for, an algorithm that a psychiatrist might use to figure out which antidepressants or other treatments will work for a specific patient—with the huge proviso that you shouldn’t try his algorithm at home, or (most importantly) sue him if it doesn’t work.

Compared to a psychiatrist, I have the huge advantage that if my professional advice fails, normally no one gets hurt or gets sued for malpractice or commits suicide or anything like that. OK, but what do I actually know that can be encoded in if-thens?

Well, one of the commonest tasks in the day-to-day life of any theoretical computer scientist, or mathematician of the Erdős flavor, is to upper bound the probability that something bad will happen: for example, that your randomized algorithm or protocol will fail, or that your randomly constructed graph or code or whatever it is won’t have the properties needed for your proof.

So without further ado, here are my secrets revealed, my ten-step plan to probability-bounding and computer-science-theorizing success.

Step 1. “1” is definitely an upper bound on the probability of your bad event happening. Check whether that upper bound is good enough. (Sometimes, as when this is an inner step in a larger summation over probabilities, the answer will actually be yes.)

Step 2. Try using Markov’s inequality (a nonnegative random variable exceeds its mean by a factor of k at most a 1/k fraction of the time), combined with its close cousin in indispensable obviousness, the union bound (the probability that any of several bad events will happen is at most the sum of the probabilities of each bad event individually). About half the time, you can stop right here.
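As a rough illustration of Step 2 (the numbers are arbitrary choices, not from the post), the sketch below compares Markov’s bound with the exact tail of a binomial variable, and compares the union bound on hash collisions, C(n, 2)/m for n keys thrown uniformly into m buckets, with the exact birthday-problem probability. Exact rational arithmetic via `fractions.Fraction` sidesteps rounding questions.

```python
from fractions import Fraction
from math import comb


def markov_bound(mean: float, threshold: float) -> float:
    # Markov: for X >= 0 and t > 0, Pr[X >= t] <= E[X] / t
    return mean / threshold


def binomial_tail(n: int, p: Fraction, t: int) -> Fraction:
    # Exact Pr[X >= t] for X ~ Binomial(n, p)
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(t, n + 1))


def union_bound_collision(n_keys: int, m_buckets: int) -> Fraction:
    # Each of the C(n, 2) pairs of keys collides with probability 1/m;
    # the union bound simply adds these probabilities up.
    return Fraction(comb(n_keys, 2), m_buckets)


def exact_collision(n_keys: int, m_buckets: int) -> Fraction:
    # Birthday problem: Pr[some collision] = 1 - prod_{k < n} (m - k) / m
    no_collision = Fraction(1)
    for k in range(n_keys):
        no_collision *= Fraction(m_buckets - k, m_buckets)
    return 1 - no_collision


if __name__ == "__main__":
    tail = binomial_tail(100, Fraction(1, 2), 75)
    print(float(tail), markov_bound(50, 75))
    print(float(exact_collision(10, 1000)), float(union_bound_collision(10, 1000)))
```

For 100 fair coin flips, Markov only gives Pr[X ≥ 75] ≤ 2/3, which is valid but weak; for 10 keys in 1000 buckets the union bound gives 0.045, slightly above the exact collision probability.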

Step 3. See if the bad event you’re worried about involves a sum of independent random variables exceeding some threshold. If it does, hit that sucker with a Chernoff or Hoeffding bound.
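For Step 3, here is a minimal sketch, assuming fair coins, of the Hoeffding form of the bound: for n independent variables in [0, 1] with sum S, Pr[S − E[S] ≥ t] ≤ exp(−2t²/n). The code compares this with the exact binomial tail; the parameters are illustrative only.

```python
import math
from fractions import Fraction


def hoeffding_upper_tail(n: int, t: float) -> float:
    # Hoeffding: for independent X_i in [0, 1] with S = X_1 + ... + X_n,
    # Pr[S - E[S] >= t] <= exp(-2 t^2 / n)
    return math.exp(-2.0 * t * t / n)


def exact_binomial_upper_tail(n: int, p: Fraction, threshold: int) -> float:
    # Exact Pr[S >= threshold] for S ~ Binomial(n, p), computed with fractions
    tail = sum(math.comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(threshold, n + 1))
    return float(tail)


def compare(n: int = 100, deviation: int = 25) -> tuple[float, float]:
    # Fair coins; assumes n is even so that E[S] = n / 2 is an integer
    mean = n // 2
    exact = exact_binomial_upper_tail(n, Fraction(1, 2), mean + deviation)
    return exact, hoeffding_upper_tail(n, deviation)
```

With n = 100 and t = 25 the bound is exp(−12.5), roughly 3.7 × 10⁻⁶, which sits above the exact tail Pr[S ≥ 75] while being vastly smaller than anything Markov could give.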

Step 4. If your random variables aren’t independent, see if they at least form a martingale: a fancy word for a sum of terms, each of which has a mean of 0 conditioned on all the earlier terms, even though it might depend on the earlier terms in subtler ways. If so, Azuma your problem into submission.
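To illustrate Step 4, the sketch below uses a hypothetical martingale whose increments depend on the past: each step is +size or −size with equal probability, where the size is 1 while the walk is at or above 0 and 0.5 otherwise. The increments are not independent, but each has conditional mean 0 and absolute value at most 1, so Azuma’s inequality Pr[X_n − X_0 ≥ t] ≤ exp(−t²/(2Σc_k²)) applies with every c_k = 1. A seeded Monte Carlo estimate gives a rough comparison.

```python
import math
import random


def azuma_bound(t: float, step_bounds: list[float]) -> float:
    # Azuma: if each increment has conditional mean 0 and |X_k - X_{k-1}| <= c_k,
    # then Pr[X_n - X_0 >= t] <= exp(-t^2 / (2 * sum c_k^2))
    return math.exp(-t * t / (2.0 * sum(c * c for c in step_bounds)))


def history_dependent_walk(n: int, rng: random.Random) -> float:
    # Hypothetical martingale: the step size depends on the current position,
    # but the sign is a fair coin, so every increment has conditional mean 0.
    x = 0.0
    for _ in range(n):
        size = 1.0 if x >= 0 else 0.5
        x += size if rng.random() < 0.5 else -size
    return x


def empirical_tail(n: int, t: float, trials: int, seed: int = 0) -> float:
    # Fraction of simulated walks that end at or above t
    rng = random.Random(seed)
    hits = sum(history_dependent_walk(n, rng) >= t for _ in range(trials))
    return hits / trials
```

For n = 100 and t = 30 the bound is exp(−4.5), about 0.011; the simulated frequency should come out well below it.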

Step 5. If you don’t have a martingale, but you still feel like your random variables are only weakly correlated, try calculating the variance of whatever combination of variables you care about, and then using Chebyshev’s inequality: the probability that a random variable differs from its mean by at least k times the standard deviation (i.e., the square root of the variance) is at most 1/k². If the variance doesn’t work, you can try calculating some higher moments too; just beware that, around the 6th or 8th moment, you and your notebook paper will likely both be exhausted.
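For Step 5, a classic weakly correlated example (chosen here for illustration, not from the post): draw r uniform random bits and, for every nonempty subset S of them, take the XOR of the bits in S. These 2^r − 1 fair bits are pairwise independent but far from mutually independent, so Chernoff does not apply, yet the variance of their sum Y is still the sum of the individual variances, (2^r − 1)/4. The sketch enumerates all outcomes exactly and checks Chebyshev’s bound Pr[|Y − μ| ≥ kσ] ≤ 1/k².

```python
import math
from fractions import Fraction
from itertools import product


def pairwise_independent_sum(r: int) -> list[int]:
    """All equally likely outcomes of Y = sum over nonempty S of XOR_{i in S} b_i,
    where b is uniform on {0,1}^r. The 2^r - 1 summands are pairwise independent
    fair bits, though far from fully independent."""
    outcomes = []
    for b in product((0, 1), repeat=r):
        y = 0
        for mask in range(1, 2 ** r):
            # parity of the bits of b selected by the subset encoded in mask
            y += sum(b[i] for i in range(r) if mask >> i & 1) % 2
        outcomes.append(y)
    return outcomes


def mean_and_variance(outcomes: list[int]) -> tuple[Fraction, Fraction]:
    n = len(outcomes)
    mu = Fraction(sum(outcomes), n)
    var = sum((Fraction(y) - mu) ** 2 for y in outcomes) / n
    return mu, var


def chebyshev_check(r: int, k: float) -> tuple[float, float]:
    # Returns (exact Pr[|Y - mu| >= k * sigma], Chebyshev bound 1 / k^2)
    outcomes = pairwise_independent_sum(r)
    mu, var = mean_and_variance(outcomes)
    sigma = math.sqrt(var)
    exact = sum(abs(y - mu) >= k * sigma for y in outcomes) / len(outcomes)
    return exact, 1.0 / k ** 2
```

For r = 4, Y equals 8 unless all bits are 0, in which case it is 0; at k = 3.8 the exact probability 1/16 is close to the Chebyshev bound 1/14.44, so the inequality is nearly tight here.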

Step 6. OK, umm … see if you can upper-bound the variation distance between your probability distribution and a different distribution for which it’s already known (or is easy to see) that it’s unlikely that anything bad happens. A good example of a tool you can use to upper-bound variation distance is Pinsker’s inequality.
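A minimal sketch of Step 6, under illustrative assumptions: let P be n flips of a slightly biased coin (heads probability p) and Q be n fair flips, where bad events are easy to analyze. Pinsker’s inequality bounds the total variation distance by √(KL(P‖Q)/2), with KL measured in nats and additive over independent coordinates, and any bad event E then satisfies Pr_P[E] ≤ Pr_Q[E] + TV(P, Q). The code computes the exact TV through the binomial head count, which is a sufficient statistic here.

```python
import math


def kl_bernoulli(p: float, q: float) -> float:
    # KL(Ber(p) || Ber(q)) in nats; assumes 0 < p, q < 1
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def pinsker_tv_bound(n: int, p: float, q: float) -> float:
    # Pinsker: TV(P, Q) <= sqrt(KL(P || Q) / 2); KL adds up over n i.i.d. flips
    return math.sqrt(n * kl_bernoulli(p, q) / 2)


def binom_pmf(n: int, k: int, p: float) -> float:
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)


def exact_tv(n: int, p: float, q: float) -> float:
    # The likelihood ratio depends only on the head count, so TV between the
    # product measures equals TV between the two binomial distributions.
    return 0.5 * sum(abs(binom_pmf(n, k, p) - binom_pmf(n, k, q)) for k in range(n + 1))


def bad_event_prob(n: int, p: float, threshold: int) -> float:
    # Pr[at least `threshold` heads in n flips]
    return sum(binom_pmf(n, k, p) for k in range(threshold, n + 1))
```

With n = 50, p = 0.52 and q = 0.5 the Pinsker bound is about 0.14, so any bad event is at most that much likelier under the biased coin than under the fair one.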

Step 7. Now is the time when you start ransacking Google and Wikipedia for things like the Lovász Local Lemma, and concentration bounds for low-degree polynomials, and Hölder’s inequality, and Talagrand’s inequality, and other isoperimetric-type inequalities, and hypercontractive inequalities, and other stuff that you’ve heard your friends rave about, and have even seen successfully used at least twice, but there’s no way you’d remember off the top of your head under what conditions any of this stuff applies, or whether any of it is good enough for your application. (Just between you and me: you may have already visited Wikipedia to refresh your memory about the earlier items in this list, like the Chernoff bound.) “Try a hypercontractive inequality” is surely the analogue of the psychiatrist’s “try electroconvulsive therapy,” for a patient on whom all milder treatments have failed.

Step 8. So, these bad events … how bad are they, anyway? Any chance you can live with them? (See also: Step 1.)

Step 9. You can’t live with them? Then back up in your proof search tree, and look for a whole different approach or algorithm, which would make the bad events less likely or even kill them off altogether.

Step 10. Consider the possibility that the statement you’re trying to prove is false—or if true, is far beyond any existing tools. (This might be the analogue of the psychiatrist’s: consider the possibility that evil conspirators really are out to get your patient.)

Poem of the Day: In a Word, a World

Source: The Poet, the Lion, Talking Pictures, El Farolito, a Wedding in St. Roch, the Big Box Store, the Warp in the Mirror, Spring, Midnights, Fire & All (Copper Canyon Press, 2016)

C. D. Wright

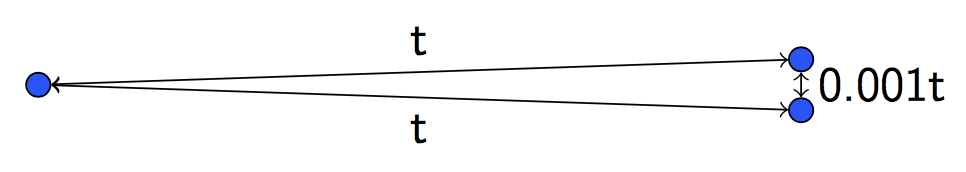

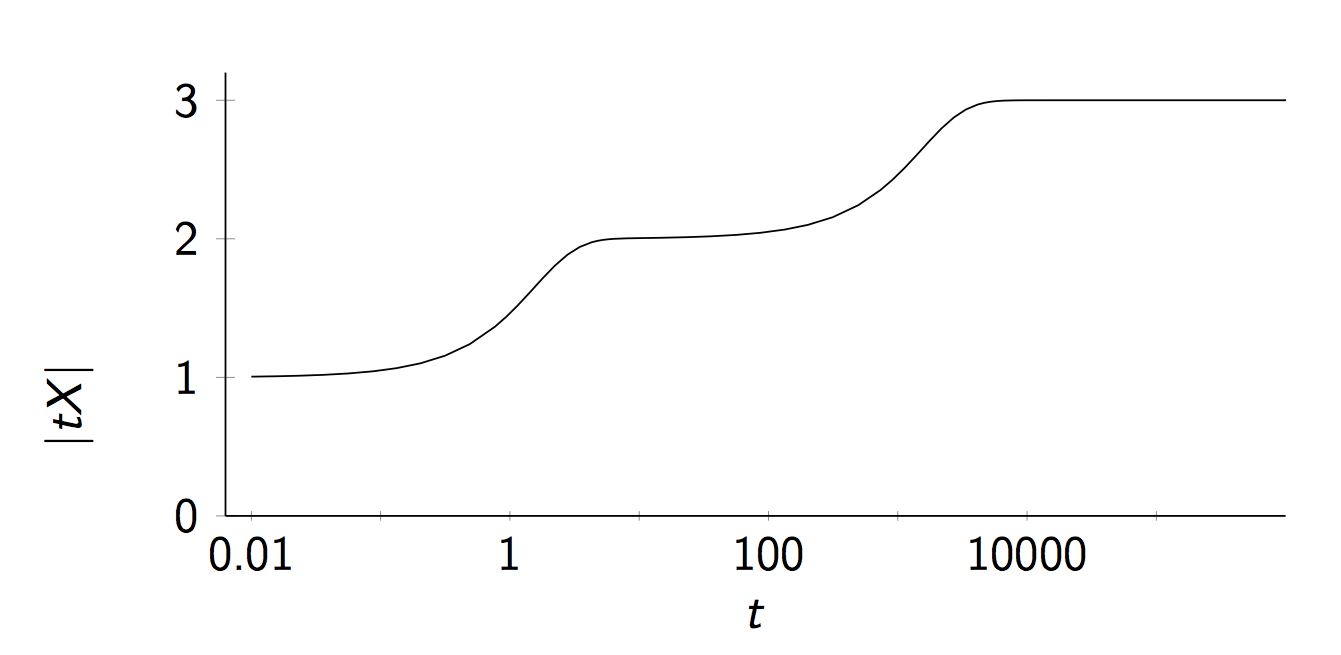

Dynamical Systems and Their Steady States

guest post by Maru Sarazola

Now that we know how to use decorated cospans to represent open networks, the Applied Category Theory Seminar has turned its attention to open reaction networks (aka Petri nets) and the dynamical systems associated to them.

In A Compositional Framework for Reaction Networks (summarized in this very blog by John Baez not too long ago), authors John Baez and Blake Pollard put Fong’s results to good use and define cospan categories $\mathbf{RxNet}$ and $\mathbf{Dynam}$ of (open) reaction networks and (open) dynamical systems. Once this is done, the main goal of the paper is to show that the mapping that associates to an open reaction network its corresponding dynamical system is compositional, as is the mapping that takes an open dynamical system to the relation that holds between its constituents in steady state. In other words, they show that the study of the whole can be done through the study of the parts.

I would like to place the focus on dynamical systems and the study of their steady states, taking a closer look at this correspondence called “black-boxing”, and comparing it to previous related work done by David Spivak.

Baez–Pollard’s approach

The category $\mathbf{Dynam}$ of open dynamical systems

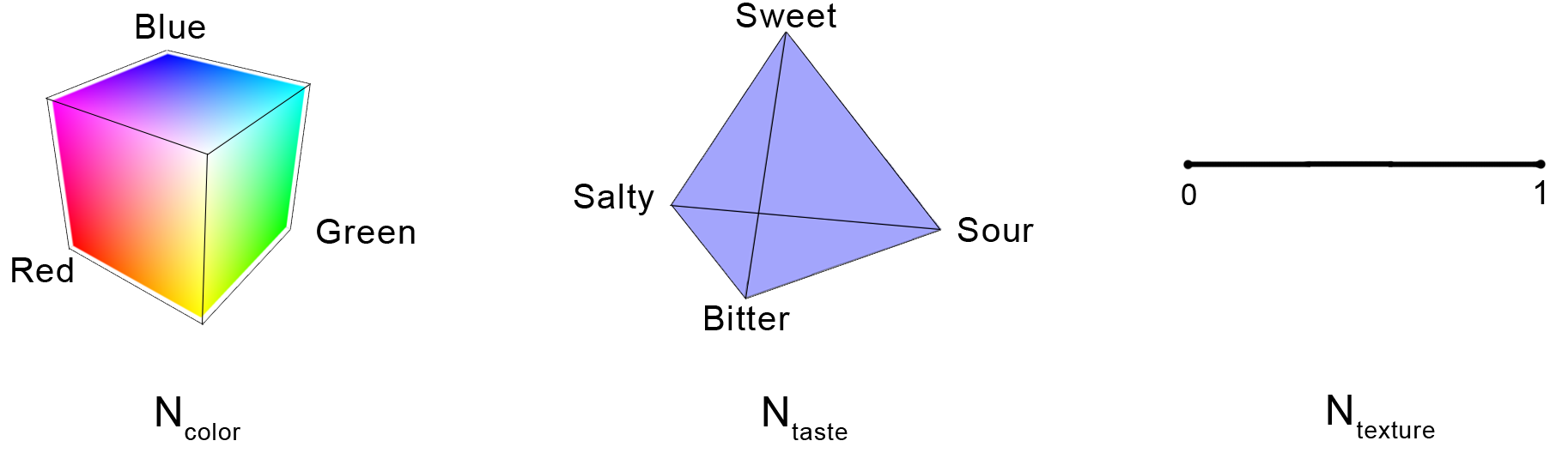

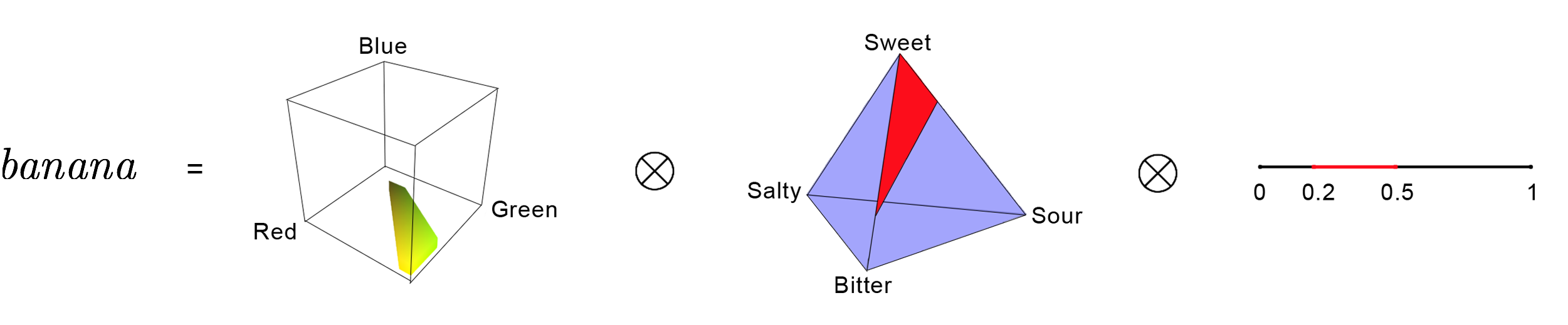

Let’s start by introducing the main players. A dynamical system is usually defined as a manifold $M$ whose points are “states”, together with a smooth vector field on $M$ saying how these states evolve in time. Since the motivation in this paper comes from chemistry, our manifolds will be Euclidean spaces $\mathbb{R}^S$, where $S$ should be thought of as the finite set of species involved, and a vector $c\in\mathbb{R}^S$ gives the concentration of each species. Then, the dynamical system is a differential equation

$$\frac{dc(t)}{dt}=v(c(t))$$

where $c:\mathbb{R}\to\mathbb{R}^S$ gives the concentrations as a function of time, and $v$ is a vector field on $\mathbb{R}^S$.
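To make the differential equation concrete, here is a minimal forward-Euler sketch for a hypothetical closed system with two species and the reversible reaction A ⇌ B under mass-action kinetics (the rate constants 1 and 2, the step size and the step count are arbitrary choices). The vector field is v(c) = (−(k₁c_A − k₂c_B), k₁c_A − k₂c_B); starting from (3, 0), the solution should settle at the fixed point where v(c) = 0, namely c = (2, 1). Forward Euler is only a simple numerical stand-in, not a method from the paper.

```python
def v(c: tuple[float, float], k_forward: float = 1.0,
      k_backward: float = 2.0) -> tuple[float, float]:
    # Mass-action vector field for the hypothetical reaction A <-> B
    a, b = c
    net = k_forward * a - k_backward * b  # net rate of A -> B
    return (-net, net)


def euler(c0: tuple[float, float], dt: float = 0.01,
          steps: int = 5000) -> tuple[float, float]:
    # Forward Euler for dc/dt = v(c): repeatedly set c <- c + dt * v(c)
    a, b = c0
    for _ in range(steps):
        da, db = v((a, b))
        a, b = a + dt * da, b + dt * db
    return a, b
```

Because the two components of v always sum to zero, the total concentration c_A + c_B is conserved, which is why the limit is pinned down by the starting point.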

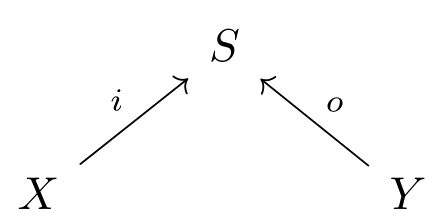

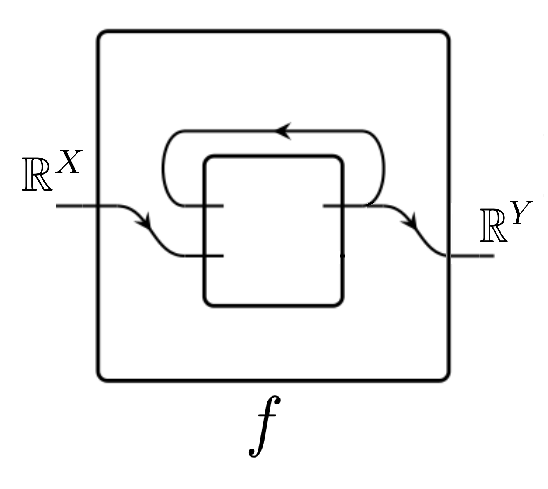

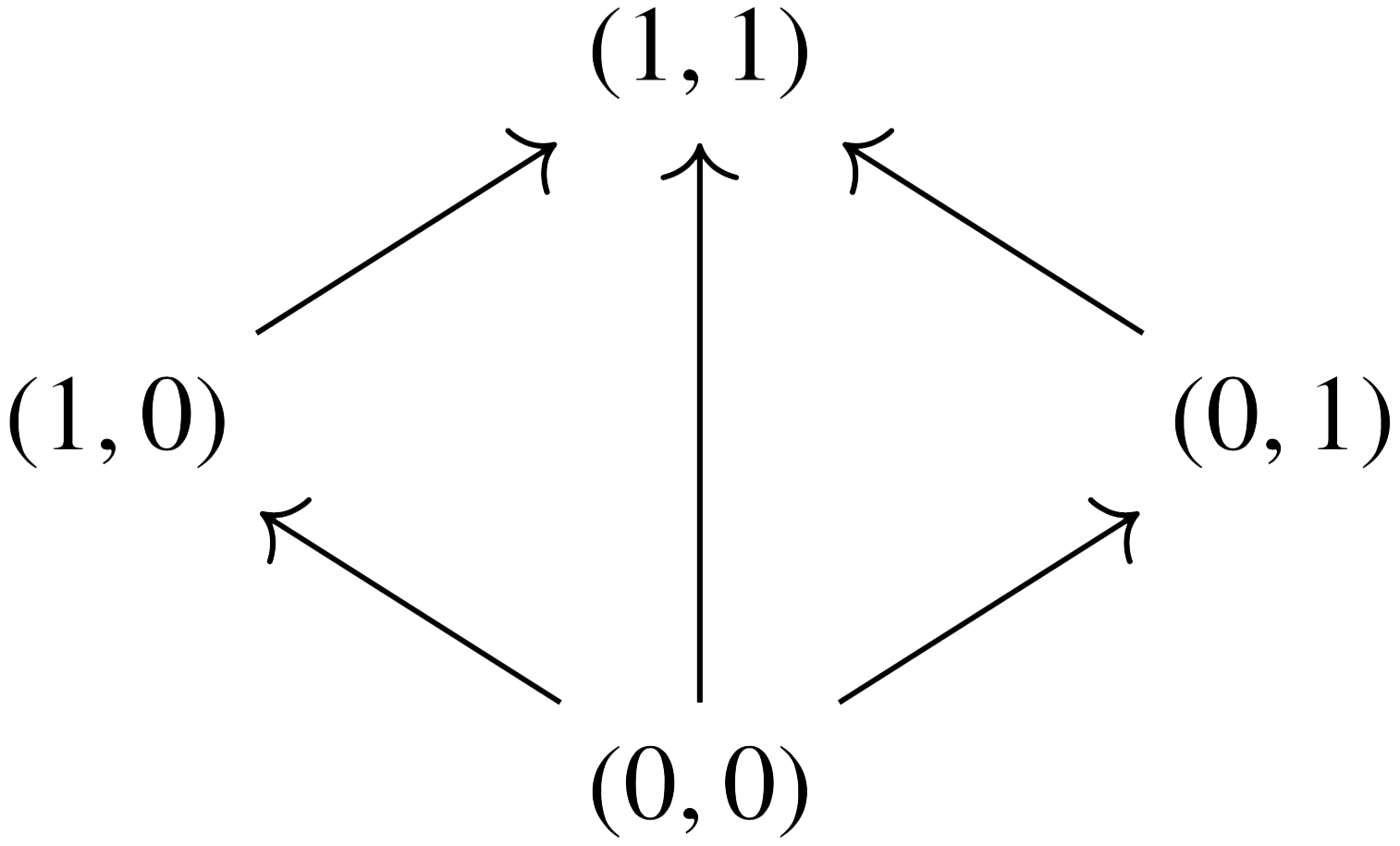

Now imagine our motivating chemical system is open; that is, we are allowed to inject molecules of some chosen species, and remove some others. An open dynamical system is a cospan of finite sets

$$X\xrightarrow{i} S \xleftarrow{o} Y$$

together with a vector field $v$ on $\mathbb{R}^S$. Here the legs of the cospan mark the species that we’re allowed to inject and remove, labeled $i$ ($o$) for input (output).

So, how can we build a category from this? Loosely citing a result of Fong, if the decorations of the cospan (in this case, the vector fields) can be given through a functor $F:(\mathbf{FinSet},+)\to(\mathbf{Set},\times)$ that is lax monoidal, then we can form a category whose objects are finite sets, and whose morphisms are (iso classes of) decorated cospans.

Indeed, this can be done in a very natural way, and therefore gives rise to the category $\mathbf{Dynam}$, whose morphisms are open dynamical systems.

The black-boxing functor $\blacksquare:\mathbf{Dynam}\to\mathbf{Rel}$

Given a dynamical system, one of the first things we might like to do is to study its fixed points; in our case, study the concentration vectors that remain constant in time. When working with an open dynamical system, it’s clear that the amounts that we choose to inject and remove will alter the change in concentration of our species, and hence it makes sense to consider the following.

For an open dynamical system $(X\xrightarrow{i} S \xleftarrow{o} Y, v)$, together with a constant inflow $I\in\mathbb{R}^X$ and constant outflow $O\in\mathbb{R}^Y$, a steady state (with inflows $I$ and outflows $O$) is a constant vector of concentrations $c\in\mathbb{R}^S$ such that

$$v(c)+i_{\ast}(I)-o_{\ast}(O)=0$$

Here $i_{\ast}(I)$ is the vector in $\mathbb{R}^S$ given by $i_{\ast}(I)(s)=\sum_{x\in X:\, i(x)=s} I(x)$; that is, the total inflow into each species, as marked by the input leg of the cospan ($o_{\ast}(O)$ is defined in the same way using the output leg). As the authors concisely put it, “in a steady state, the inflows and outflows conspire to exactly compensate for the reaction velocities”.

Note that the inflow and outflow functions $I$ and $O$ won’t affect any species not marked by the legs of the cospan, and so any steady state $c$ must be such that $v(c)=0$ when restricted to these inner species that we can’t reach.
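The steady-state condition is easy to check numerically. The sketch below is an illustration under assumptions not in the paper: a hypothetical open network with the single reaction A → B at mass-action rate K·c(A), K = 2, one input port x attached to A and one output port y attached to B. It implements the pushforward i_* as a sum over the fibres of the leg and returns the residual v(c) + i_*(I) − o_*(O), which vanishes exactly at steady states.

```python
from typing import Callable

Vector = dict[str, float]  # a vector in R^S (or R^X, R^Y), indexed by names


def pushforward(leg: dict[str, str], flow: Vector, species: list[str]) -> Vector:
    # i_*(I)(s) = sum of I(x) over all ports x with i(x) = s
    out = {s: 0.0 for s in species}
    for port, value in flow.items():
        out[leg[port]] += value
    return out


def steady_state_residual(v: Callable[[Vector], Vector], c: Vector,
                          i_leg: dict[str, str], I: Vector,
                          o_leg: dict[str, str], O: Vector,
                          species: list[str]) -> Vector:
    # v(c) + i_*(I) - o_*(O); c is a steady state iff every entry is 0
    vc = v(c)
    pushed_in = pushforward(i_leg, I, species)
    pushed_out = pushforward(o_leg, O, species)
    return {s: vc[s] + pushed_in[s] - pushed_out[s] for s in species}


# Hypothetical example network: A -> B with mass-action rate K * c(A)
SPECIES = ["A", "B"]
K = 2.0


def v(c: Vector) -> Vector:
    rate = K * c["A"]
    return {"A": -rate, "B": rate}
```

Here the steady states are the c with c(A) = I/K and O = I, while c(B) is unconstrained because v does not depend on it.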

What we want to do next is build a functor that, given an open dynamical system, records all possible combinations of input concentrations, output concentrations, inflows and outflows that hold in steady state. This process will be called black-boxing, since it discards information that can’t be seen at the inputs and outputs.

The black-boxing functor $\blacksquare:\mathbf{Dynam}\to\mathbf{Rel}$ takes a finite set $X$ to the vector space $\mathbb{R}^X\oplus\mathbb{R}^X$, and a morphism, that is, an open dynamical system $f=(X\xrightarrow{i} S \xleftarrow{o} Y, v)$, to the subset

$$\blacksquare(f)\subseteq\mathbb{R}^X\oplus\mathbb{R}^X\oplus\mathbb{R}^Y\oplus\mathbb{R}^Y$$

$$\blacksquare(f)=\{(i^{\ast}(c),I,o^{\ast}(c),O): c \text{ is a steady state with inflows } I \text{ and outflows } O\}$$

where $i^{\ast}(c)$ is the vector in $\mathbb{R}^X$ defined by $i^{\ast}(c)(x)=c(i(x))$; that is, the concentration of the input species.
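Since ▪(f) is usually an infinite relation, code can only test membership or produce points of it. The sketch below uses a hypothetical open network, none of which comes from the paper: the single reaction A → B with mass-action rate K·c(A) (K = 2), input port x on A and output port y on B. It returns the tuple (i*(c), I, o*(c), O) when c is a steady state and `None` otherwise.

```python
# Black-boxing a hypothetical open network: A -> B at rate K * c(A),
# with input port x attached to A and output port y attached to B.
K = 2.0
I_LEG = {"x": "A"}
O_LEG = {"y": "B"}
SPECIES = ("A", "B")


def v(c):
    rate = K * c["A"]
    return {"A": -rate, "B": rate}


def pushforward(leg, flow):
    # i_*(I)(s) = sum of I(x) over ports x with i(x) = s
    out = {s: 0.0 for s in SPECIES}
    for port, value in flow.items():
        out[leg[port]] += value
    return out


def pullback(leg, c):
    # i^*(c)(x) = c(i(x)): the concentration seen at each port
    return {port: c[s] for port, s in leg.items()}


def is_steady(c, I, O, tol=1e-12):
    vc, pushed_in, pushed_out = v(c), pushforward(I_LEG, I), pushforward(O_LEG, O)
    return all(abs(vc[s] + pushed_in[s] - pushed_out[s]) <= tol for s in SPECIES)


def black_box_point(c, I, O):
    # The element (i^*(c), I, o^*(c), O) of the relation, or None if c is not steady
    if not is_steady(c, I, O):
        return None
    return (pullback(I_LEG, c), I, pullback(O_LEG, c), O)
```

Solving the two steady-state equations by hand gives −K·a + I = 0 and K·a − O = 0, so for this network ▪(f) = {(a, I, b, O) : I = O = K·a}, with the output concentration b unconstrained.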

The authors prove that black-boxing is indeed a functor, which implies that if we want to study the steady states of a complex open dynamical system, we can break it up into smaller, simpler pieces and study their steady states. In other words, studying the steady states of a big system, which is given by the composition of smaller systems (as morphisms in the category $\mathbf{Dynam}$), amounts to studying the steady states of each of the smaller systems, and composing them (as morphisms in $\mathbf{Rel}$).

Spivak’s approach

The category $\mathcal{W}$ of wiring diagrams

Instead of dealing with dynamical systems from the start, Spivak takes a step back and develops a syntax for boxes, which are things that admit inputs and outputs.

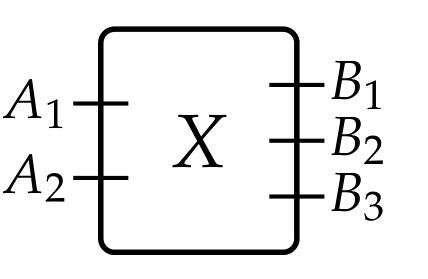

Let’s define the category $\mathcal{W}_\mathcal{C}$ of $\mathcal{C}$-boxes and wiring diagrams, for a category $\mathcal{C}$ with finite products. Its objects are pairs

$$X=(X^\text{in},X^\text{out})$$

where each of these coordinates is a finite product of objects of $\mathcal{C}$. For example, we interpret the pair $(A_1\times A_2, B_1\times B_2\times B_3)$ as a box with input ports $(a_1,a_2)\in A_1\times A_2$ and output ports $(b_1,b_2,b_3)\in B_1\times B_2\times B_3$.

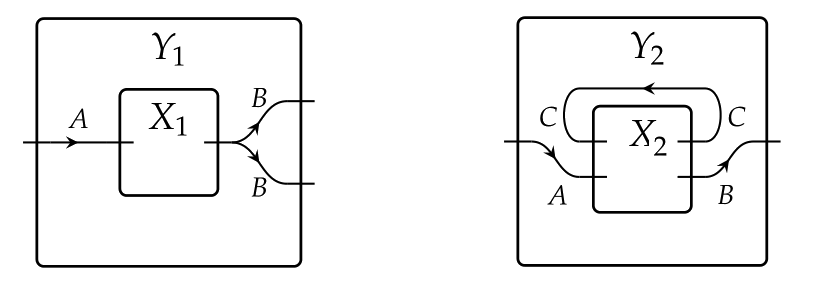

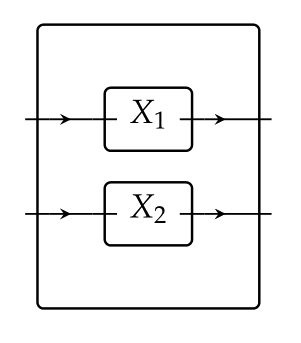

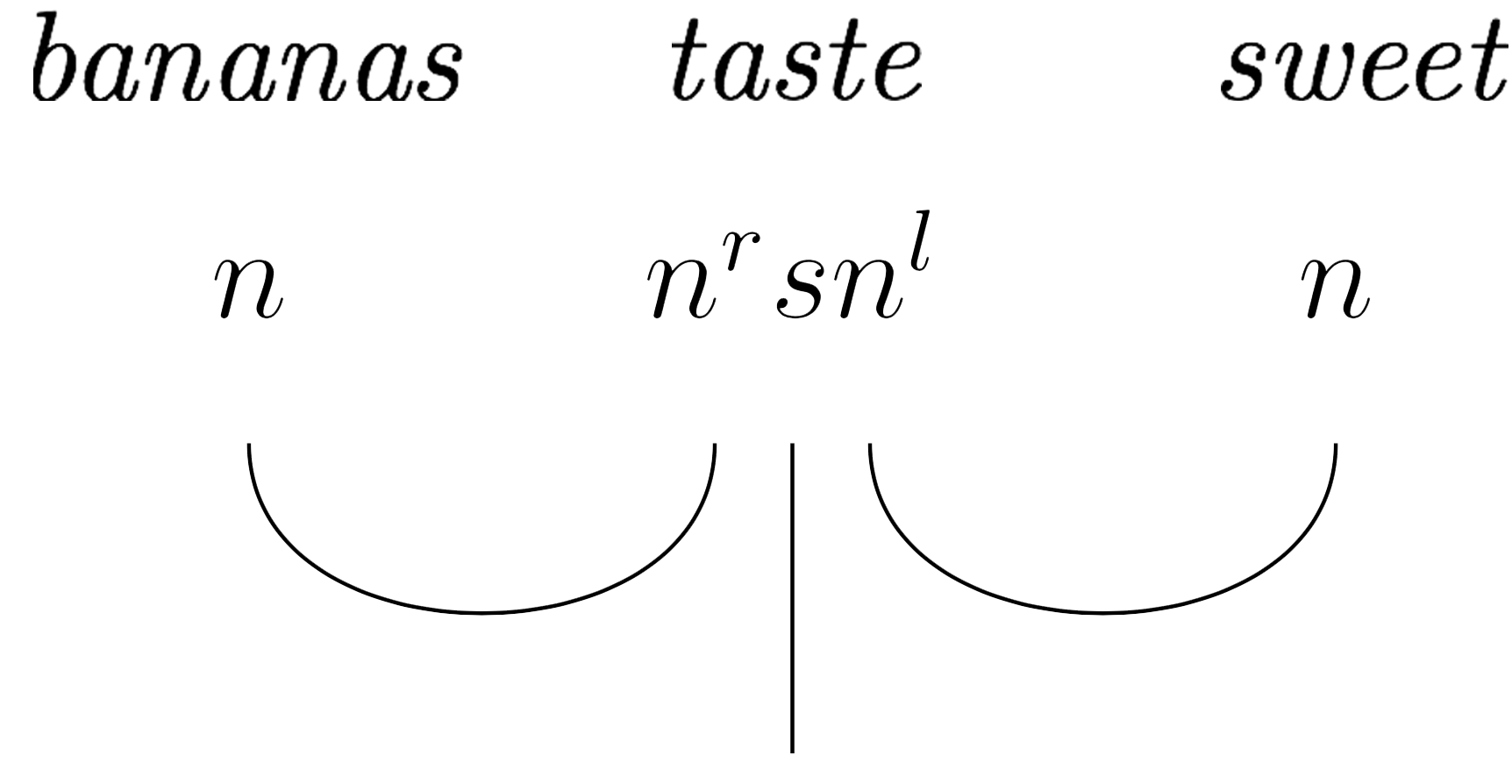

Its morphisms are wiring diagrams $\varphi:X\to Y$, that is, pairs of maps $(\varphi^\text{in},\varphi^\text{out})$ which we interpret as a rewiring of the box $X$ inside of the box $Y$. The function $\varphi^\text{in}$ indicates whether an input port of $X$ should be attached to an input of $Y$ or to an output of $X$ itself; the function $\varphi^\text{out}$ indicates how the outputs of $X$ feed the outputs of $Y$. Examples of wirings are

Composition is given by a nesting of wirings.

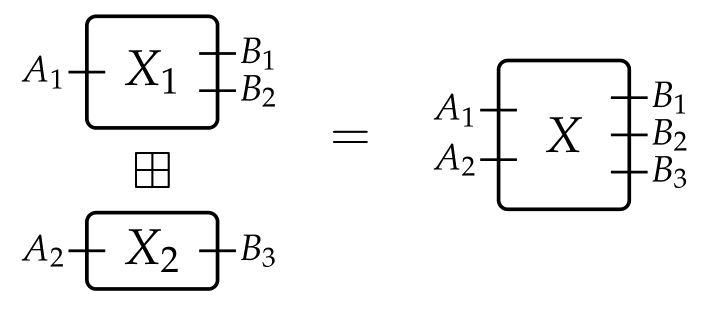

Given boxes $X$ and $Y$, we define their parallel composition by

$$X\boxtimes Y=(X^\text{in}\times Y^\text{in},X^\text{out}\times Y^\text{out})$$

This gives a monoidal structure to the category $\mathcal{W}_\mathcal{C}$. Parallel composition is true to its name, as illustrated by

The huge advantage of this approach is that one can now fill the boxes with suitable “inhabitants”, and model many different situations that look like wirings at their core. These inhabitants will be given through functors $\mathcal{W}_\mathcal{C}\to\mathbf{Set}$, taking a box to the set of its desired interpretations, and giving a meaning to the wiring of boxes.

The functor $ODS:\mathcal{W}_{\mathbf{Euc}}\to\mathbf{Set}$ of open dynamical systems

The first of our inhabitants will be, as you probably guessed by now, open dynamical systems. Here $\mathcal{C}=\mathbf{Euc}$ is the category of Euclidean spaces $\mathbb{R}^n$ and smooth maps.

From the perspective of Spivak’s paper, an $(\mathbb{R}^X,\mathbb{R}^Y)$-open dynamical system is a 3-tuple $(\mathbb{R}^S,f^\text{dyn},f^\text{rdt})$ where

• $\mathbb{R}^S$ is the state space

• $f^\text{dyn}:\mathbb{R}^X\times\mathbb{R}^S\to\mathbb{R}^S$ is a vector field parametrized by the inputs $\mathbb{R}^X$, giving the differential equation of the system

• $f^\text{rdt}:\mathbb{R}^S\to\mathbb{R}^Y$ is the readout function at the outputs $\mathbb{R}^Y$.

One should notice the similarity with our previously defined dynamical systems, although it’s clear that the two definitions are not equivalent.

The functor $ODS:\mathcal{W}_{\mathbf{Euc}}\to\mathbf{Set}$ exhibiting dynamical systems as inhabitants of input-output boxes takes a box $X=(X^\text{in},X^\text{out})$ to the set of all $(\mathbb{R}^{X^\text{in}},\mathbb{R}^{X^\text{out}})$-dynamical systems

$$ODS(X)=\{(\mathbb{R}^S,f^\text{dyn}:\mathbb{R}^{X^\text{in}}\times\mathbb{R}^S\to\mathbb{R}^S,f^\text{rdt}:\mathbb{R}^S\to\mathbb{R}^{X^\text{out}})\}$$

You can surely figure out how $ODS$ acts on wirings by drawing a picture and doing a bit of careful bookkeeping.

Note that there’s a natural notion of parallel composition of two dynamical systems, which amounts to carrying out the processes indicated by the two dynamical systems in parallel. Spivak shows that $ODS$ is a functor, and, furthermore, that

$$ODS(X\boxtimes Y)\simeq ODS(X)\boxtimes ODS(Y)$$

The functor $Mat:\mathcal{W}_{\mathcal{C}}\to\mathbf{Set}$ of $\mathbf{Set}$-matrices

Our second inhabitants will be given by matrices of sets. For objects $X,Y$, an $(X,Y)$-matrix of sets is a function $M$ that assigns to each pair $(x,y)$ a set $M_{x,y}$. In other words, it is a matrix indexed by $X\times Y$ that, instead of coefficients, has sets in each position.

The functor $Mat:\mathcal{W}_{\mathcal{C}}\to\mathbf{Set}$ exhibiting $\mathbf{Set}$-matrices as inhabitants of input-output boxes takes a box $X=(X^\text{in},X^\text{out})$ to the set of all $(X^\text{in},X^\text{out})$-matrices of sets

$$Mat(X)=\{\{M_{i,j}\}_{X^\text{in}\times X^\text{out}} : M_{i,j} \text{ is a set}\}$$

Once again, it’s not too hard to figure out how $Mat$ should act on wirings.

Like before, there’s a notion of parallel composition of two matrices of sets, and the author shows that $Mat$ is a functor such that

$$Mat(X\boxtimes Y)\simeq Mat(X)\boxtimes Mat(Y)$$

The steady-state natural transformation $Stst:ODS\to Mat$

Finally, we explain how to use all this to study steady states of dynamical systems.

Given an $(\mathbb{R}^X,\mathbb{R}^Y)$-dynamical system $f=(\mathbb{R}^S,f^\text{dyn},f^\text{rdt})$ and an element $(I,O)\in\mathbb{R}^X\times\mathbb{R}^Y$, an $(I,O)$-steady state is a state $c\in\mathbb{R}^S$ such that

$$f^\text{dyn}(I,c)=0 \text{ and } f^\text{rdt}(c)=O$$

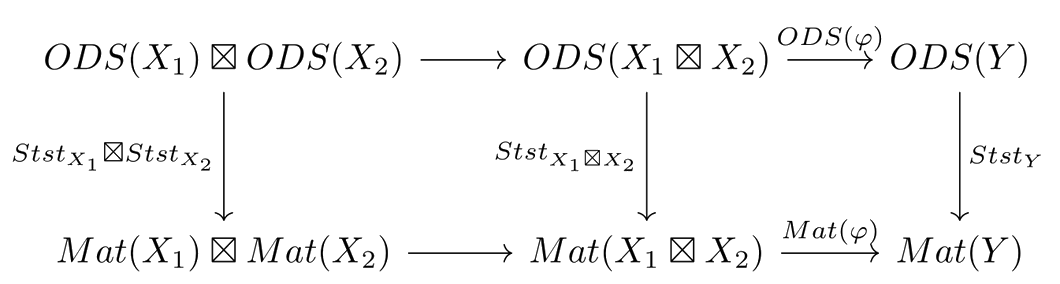

Since dynamical systems are encoded by the functor $ODS$, it makes sense to study steady states through a natural transformation out of $ODS$. We define $Stst:ODS\to Mat$ as the transformation that assigns to each box $X$, the function

$$Stst_X:ODS(X)\longrightarrow Mat(X)$$

taking a dynamical system $(\mathbb{R}^S,f^\text{dyn},f^\text{rdt})$ to its matrix of steady states

$$M_{I,O}=\{c\in\mathbb{R}^S : f^\text{dyn}(I,c)=0,\ f^\text{rdt}(c)=O\}$$

where $(I,O)\in \mathbb{R}^{X^\text{in}}\times \mathbb{R}^{X^\text{out}}$. The author proceeds to show that $Stst$ is a monoidal natural transformation.
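Here is a minimal Python sketch of Spivak-style box systems and their steady states, with vectors represented as tuples of exact fractions. The two-compartment system is hypothetical: the input u feeds c₁, c₁ decays at rate 1 into c₂, c₂ decays at rate 2, and the readout is c₂. Solving f^dyn(I, c) = 0 by hand gives c = (u, u/2), so each entry M_{I,O} is either that single state (when O = u/2) or empty.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Callable

Vec = tuple  # vectors in R^n are represented as tuples of Fractions


@dataclass(frozen=True)
class OpenDynamicalSystem:
    # (R^S, f_dyn : R^X x R^S -> R^S, f_rdt : R^S -> R^Y)
    state_dim: int
    dyn: Callable[[Vec, Vec], Vec]
    rdt: Callable[[Vec], Vec]

    def is_steady(self, I: Vec, O: Vec, c: Vec) -> bool:
        # c lies in M_{I,O} iff f_dyn(I, c) = 0 and f_rdt(c) = O
        return all(x == 0 for x in self.dyn(I, c)) and tuple(self.rdt(c)) == tuple(O)


def two_compartment() -> OpenDynamicalSystem:
    # Hypothetical example: dc1/dt = u - c1, dc2/dt = c1 - 2*c2, readout c2
    return OpenDynamicalSystem(
        state_dim=2,
        dyn=lambda I, c: (I[0] - c[0], c[0] - 2 * c[1]),
        rdt=lambda c: (c[1],),
    )


def steady_states_two_compartment(I: Vec, O: Vec) -> set:
    # f_dyn(I, c) = 0 forces c = (u, u/2), so M_{I,O} has at most one element
    u = Fraction(I[0])
    c = (u, u / 2)
    return {c} if (c[1],) == tuple(Fraction(o) for o in O) else set()
```

A general f^dyn has no closed-form solution set, which is why the generic class only tests membership; the explicit enumeration is specific to this linear example.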

Is it possible to use this machinery to draw the same conclusion as before, that is, that the steady states of a composition of systems come from the composition of the steady states of the parts?

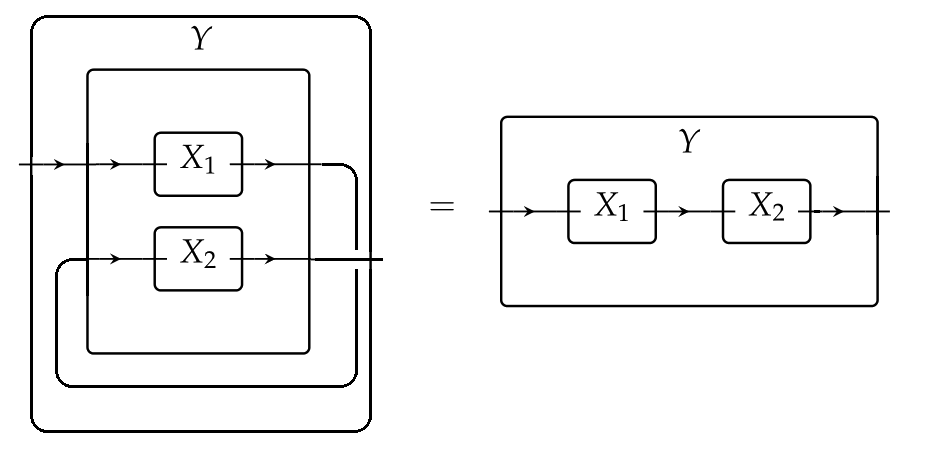

Indeed, it is! Given two boxes $X_1$ and $X_2$, we recover the usual notion of (serial) composition by first setting them in parallel, $X_1 \boxtimes X_2$,

and wiring this by $\varphi:X_1 \boxtimes X_2\to Y$ as follows:

The fact that $Stst$ is a monoidal natural transformation, combined with the facts that the functors $ODS$ and $Mat$ respect parallel composition, allows us to write the following diagram, where both squares are commutative

Then, chasing the diagram along the top and left sides gives the steady states of the serial composition of the dynamical systems $X_1$ and $X_2$, while chasing it along the right and bottom sides gives the composition of the steady states of $X_1$ and of $X_2$, and the two must agree.

The two approaches, side by side

So how are these two perspectives related? Looking at the definitions we can immediately see that Spivak’s approach has a broader scope than Baez and Pollard’s, so it’s apparent that his results won’t be implied by theirs.

For the converse direction, recall that in the first paper, a dynamical system is given by a decorated cospan $f=(X\xrightarrow{i} S \xleftarrow{o} Y, v)$, and a steady state with inflows $I$ and outflows $O$ is a constant vector of concentrations $c\in\mathbb{R}^S$ such that

$$v(c)+i_{\ast}(I)-o_{\ast}(O)=0$$

Thus, studying the steady states for this cospan system corresponds to studying the box system

$$f=(\mathbb{R}^S, f^\text{dyn}:\mathbb{R}^X\times\mathbb{R}^S\to\mathbb{R}^S, f^\text{rdt}:\mathbb{R}^S\to\mathbb{R}^Y)$$

with dynamics given by $f^\text{dyn}(I,c)=v(c)+i_{\ast}(I)-o_{\ast}(f^\text{rdt}(c))$, since its $(I,O)$-steady states are vectors $c\in\mathbb{R}^S$ such that

$$f^\text{dyn}(I,c)=0 \text{ and } f^\text{rdt}(c)=O$$

Thus, the study of the steady states of a given cospan dynamical system can be done just as well by looking at it as a box dynamical system and running it through Spivak’s machinery. However, setting two such box systems in serial composition will not yield the box system representing the composition of the cospan systems as one would (naively?) hope, so it doesn’t seem that Spivak’s compositional results will imply those of Baez and Pollard.
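The single-system translation from a cospan system to a box system can be checked on a small example. In the sketch below every specific choice is a hypothetical assumption: the network is the single reaction A → B with mass-action rate K·c(A), the input port x sits on A, the output port y on B, and the readout is an outflow law f^rdt(c)(y) = μ·c(o(y)), since the construction leaves f^rdt unspecified. The code builds f^dyn(I, c) = v(c) + i_*(I) − o_*(f^rdt(c)) and confirms it coincides with the cospan residual evaluated at O = f^rdt(c).

```python
K = 2.0    # hypothetical rate constant for A -> B
MU = 0.5   # hypothetical outflow law: O(y) = MU * c(o(y))
SPECIES = ("A", "B")
I_LEG = {"x": "A"}
O_LEG = {"y": "B"}


def v(c):
    rate = K * c["A"]
    return {"A": -rate, "B": rate}


def pushforward(leg, flow):
    # i_*(I)(s) = sum of I(x) over ports x with i(x) = s
    out = {s: 0.0 for s in SPECIES}
    for port, value in flow.items():
        out[leg[port]] += value
    return out


def f_rdt(c):
    # Hypothetical readout: the outflow at each output port
    return {port: MU * c[s] for port, s in O_LEG.items()}


def f_dyn(I, c):
    # Box dynamics built from the cospan system: v(c) + i_*(I) - o_*(f_rdt(c))
    vc, pushed_in, pushed_out = v(c), pushforward(I_LEG, I), pushforward(O_LEG, f_rdt(c))
    return {s: vc[s] + pushed_in[s] - pushed_out[s] for s in SPECIES}


def cospan_residual(c, I, O):
    # Baez-Pollard steady-state residual v(c) + i_*(I) - o_*(O)
    vc, pushed_in, pushed_out = v(c), pushforward(I_LEG, I), pushforward(O_LEG, O)
    return {s: vc[s] + pushed_in[s] - pushed_out[s] for s in SPECIES}
```

With K = 2, μ = 0.5 and inflow I = 3, the state c = (1.5, 6) is a box steady state with readout O = 3, so it is also a cospan steady state for the pair (I, O) = (3, 3).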

This is a bit disconcerting, but instead of it being discouraging, I believe it should be seen as an invitation to delve into the semantics of open dynamical systems and find the right perspective, which manages to subsume both of the approaches presented here.

A molecule that manufactures asymmetry

A molecule that manufactures asymmetry, Published online: 10 April 2018; doi:10.1038/d41586-018-04256-4

Compound’s variations could spawn catalysts that favour certain chiral forms.

What is computational neuroscience? (XXIX) The free energy principle

"Surprise me."

The free energy principle is the theory that the brain manipulates a probabilistic generative model of its sensory inputs, which it tries to optimize by either changing the model (learning) or changing the inputs (action) (Friston 2009; Friston 2010). The “free energy” is related to the error between predictions and actual inputs, or “surprise”, which the organism wants to minimize. It has a more precise mathematical formulation, but the conceptual issues I want to discuss here do not depend on it.

Thus, it can be seen as an extension of the Bayesian brain hypothesis that accounts for action in addition to perception. It shares the conceptual problems of the Bayesian brain hypothesis, namely that it focuses on statistical uncertainty, inferring variables of a model (called “causes”), when the challenge is to build and manipulate the structure of the model. It also shares issues with the predictive coding concept, namely that there is a conflation between a technical sense of “prediction” (expectation of the future signal) and a broader sense that is more ecologically relevant (if I do X, then Y will happen). In my view, these are the main issues with the free energy principle. Here I will focus on an additional issue that is specific to the free energy principle.

The specific interest of the free energy principle lies in its formulation of action. It resonates with a very important psychological theory called cognitive dissonance theory. That theory says that you try to avoid dissonance between facts and your system of beliefs, either by changing the beliefs in a small way or by avoiding the facts. When there is a dissonant fact, you generally don’t throw out your entire system of beliefs: rather, you alter the interpretation of the fact (think of political discourse or, in fact, scientific discourse). Another strategy is to avoid the dissonant facts: for example, to read newspapers that tend to have the same opinions as yours. So there is some support in psychology for the idea that you act so as to minimize surprise.

Thus, the free energy principle acknowledges the circularity of action and perception. However, it is quite difficult to make it account for a large part of behavior. A large part of behavior is directed towards goals; for example, to get food and sex. The theory anticipates this criticism and proposes that goals are ingrained in priors. For example, you expect to have food. So, for your state to match your expectations, you need to seek food. This is the theory’s solution to the so-called “dark room problem” (Friston et al., 2012): if you want to minimize surprise, why not shut off stimulation altogether and go to the closest dark room? Solution: you are not expecting a dark room, so you are not going there in the first place.

Let us consider a concrete example to show that this solution does not work. There are two kinds of stimuli: food, and no food. I have two possible actions: to seek food, or to sit and do nothing. If I do nothing, then with 100% probability, I will see no food. If I seek food, then with, say, 20% probability, I will see food.

Let’s say this is the world in which I live. What does the free energy principle tell us? To minimize surprise, it seems clear that I should sit: I am certain not to see food. No surprise at all. The proposed solution is that you have a prior expectation to see food. So to minimize surprise, you should put yourself into a situation where you might see food, i.e., seek food. This seems to work. However, if there is any learning at all, then you will quickly observe that the probability of seeing food is actually 20%, and your expectations should be adjusted accordingly. I will also observe that between two food expeditions, the probability of seeing food is 0%. Once this has been observed, surprise is minimal when I do not seek food. So, I die of hunger. It follows that the free energy principle does not survive Darwinian competition.
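
The food example can be checked numerically. The Python sketch below uses plain surprise ($-\log p$, in nats) as a stand-in for free energy, as the argument above does, and scores each action by its average surprise in the stated world (seeking finds food with probability 0.2, sitting never does). The fixed prior of 0.9 on "food" is a hypothetical value chosen for illustration; the point is only how the preferred action flips once beliefs are learned.

```python
import math

# The world from the example: probability of seeing food for each action.
P_FOOD = {"seek": 0.2, "sit": 0.0}

def surprise(p):
    """Surprise (self-information) of an outcome believed to have probability p."""
    return math.inf if p == 0 else -math.log(p)

def expected_surprise(action, believed_p_food):
    """Average surprise under the true world, given believed P(food)."""
    true_p = P_FOOD[action]
    total = 0.0
    for outcome_p, believed in ((true_p, believed_p_food),
                                (1 - true_p, 1 - believed_p_food)):
        if outcome_p > 0:  # outcomes that never occur contribute nothing
            total += outcome_p * surprise(believed)
    return total

# (a) No learning: a fixed prior "I expect food" (hypothetical value 0.9).
fixed = {a: expected_surprise(a, 0.9) for a in P_FOOD}
# (b) Learning: beliefs match the true action-conditional probabilities.
learned = {a: expected_surprise(a, P_FOOD[a]) for a in P_FOOD}

print(min(fixed, key=fixed.get))      # seek
print(min(learned, key=learned.get))  # sit
```

With the fixed prior, seeking scores about 1.86 nats against 2.30 for sitting; after learning, sitting scores 0 against about 0.50 for seeking, which is the starvation outcome described above.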

Thus, either there is no learning at all and the free energy principle is just a way of calling predefined actions “priors”; or there is learning, but then it doesn’t account for goal-directed behavior.

The idea to act so as to minimize surprise resonates with some aspects of psychology, like cognitive dissonance theory, but that does not constitute a complete theory of mind, except possibly of the depressed mind. See for example the experience of flow (as in surfing): you seek a situation that is controllable but sufficiently challenging that it engages your entire attention; in other words, you voluntarily expose yourself to a (moderate amount of) surprise; in any case certainly not a minimum amount of surprise.

Role of the 5-HT2A Receptor in Self- and Other-Initiated Social Interaction in Lysergic Acid Diethylamide-Induced States: A Pharmacological fMRI Study

Nosimpler: I can't wait for more of these studies.

Distortions of self-experience are critical symptoms of psychiatric disorders and have detrimental effects on social interactions. In light of the immense need for improved and targeted interventions for social impairments, it is important to better understand the neurochemical substrates of social interaction abilities. We therefore investigated the pharmacological and neural correlates of self- and other-initiated social interaction. In a double-blind, randomized, counterbalanced, crossover study 24 healthy human participants (18 males and 6 females) received either (1) placebo + placebo, (2) placebo + lysergic acid diethylamide (LSD; 100 μg, p.o.), or (3) ketanserin (40 mg, p.o.) + LSD (100 μg, p.o.) on three different occasions. Participants took part in an interactive task using eye-tracking and functional magnetic resonance imaging completing trials of self- and other-initiated joint and non-joint attention. Results demonstrate, first, that LSD reduced activity in brain areas important for self-processing, but also social cognition; second, that change in brain activity was linked to subjective experience; and third, that LSD decreased the efficiency of establishing joint attention. Furthermore, LSD-induced effects were blocked by the serotonin 2A receptor (5-HT2AR) antagonist ketanserin, indicating that effects of LSD are attributable to 5-HT2AR stimulation. The current results demonstrate that activity in areas of the "social brain" can be modulated via the 5-HT2AR thereby pointing toward this system as a potential target for the treatment of social impairments associated with psychiatric disorders.

SIGNIFICANCE STATEMENT Distortions of self-representation and, potentially related to this, dysfunctional social cognition are central hallmarks of various psychiatric disorders and critically impact disease development, progression, treatment, as well as real-world functioning. However, these deficits are insufficiently targeted by current treatment approaches. The administration of lysergic acid diethylamide (LSD) in combination with functional magnetic resonance imaging and real-time eye-tracking offers the unique opportunity to study alterations in self-experience, their relation to social cognition, and the underlying neuropharmacology. Results demonstrate that LSD alters self-experience as well as basic social cognition processing in areas of the "social brain". Furthermore, these alterations are attributable to 5-HT2A receptor stimulation, thereby pointing toward this receptor system in the development of pharmacotherapies for sociocognitive deficits in psychiatric disorders.

What is computational neuroscience? (XXVIII) The Bayesian brain

Our sensors give us incomplete, noisy, and indirect information about the world. For example, estimating the location of a sound source is difficult because in natural contexts, the sound of interest is corrupted by other sound sources, reflections, etc. Thus it is not possible to know the position of the source with certainty. The ‘Bayesian coding hypothesis’ (Knill & Pouget, 2004) postulates that the brain represents not the most likely position, but the entire probability distribution of the position. It then uses those distributions to do Bayesian inference, for example when combining different sources of information (say, auditory and visual). This would allow the brain to optimally infer the most likely position. There is indeed some evidence for optimal inference in psychophysical experiments, although there is also some contradicting evidence (Rahnev & Denison, 2018).
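
The cue-combination case is the textbook example of this kind of inference. The Python sketch below assumes each cue gives an independent Gaussian likelihood for the source position and the prior is flat; under those assumptions the posterior is Gaussian, with a mean that weights each cue by its inverse variance and a variance smaller than either cue's. The function name and the numbers are hypothetical.

```python
def combine_cues(mu_a, var_a, mu_v, var_v):
    """Posterior mean and variance of a position estimate fused from two
    independent Gaussian cues (flat prior): inverse-variance weighting."""
    w_a, w_v = 1.0 / var_a, 1.0 / var_v
    mean = (w_a * mu_a + w_v * mu_v) / (w_a + w_v)
    var = 1.0 / (w_a + w_v)
    return mean, var

# Hypothetical cues: a noisy auditory estimate at 10 deg (variance 16)
# and a sharper visual estimate at 4 deg (variance 4).
mean, var = combine_cues(10.0, 16.0, 4.0, 4.0)
print(mean, var)  # 5.2 3.2
```

The fused estimate lies closer to the more reliable visual cue, and its variance (3.2) is below that of either cue alone, which is what "optimal" means in the psychophysical studies mentioned above.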

The idea has some appeal. The problem is that, by framing perception as a statistical inference problem, it focuses on the most trivial type of uncertainty, statistical uncertainty. This is illustrated by the following quote: “The fundamental concept behind the Bayesian approach to perceptual computations is that the information provided by a set of sensory data about the world is represented by a conditional probability density function over the set of unknown variables”. Implicit in this representation is a particular model, for which variables are defined. Typically, one model describes a particular experimental situation. For example, the model would describe the distribution of auditory cues associated with the position of the sound source. Another situation would be described by a different model; for example, a situation with two sound sources would require a model with two variables. Or if the listening environment is a room and the size of that room might vary, then we would need a model with the dimensions of the room as variables. In any of these cases, where we have identified and fixed parametric sources of variation, the Bayesian approach works fine, because we are indeed facing a problem of statistical inference. But that framework doesn’t fit any real-life situation. In real life, perceptual scenes have variable structure, which corresponds to the model in statistical inference (there is one source, or two sources, we are in a room, the second source comes from the window, etc.). The perceptual problem is therefore not just to infer the parameters of the model (dimensions of the room, etc.), but also the model itself, its structure. Thus, it is not possible in general to represent an auditory scene by a probability distribution on a set of parameters, because the very notion of a parameter already assumes that the structure of the scene is known and fixed.
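
A small numerical sketch shows what goes wrong when the structure is assumed rather than inferred. The Python code below assumes a one-source model (each cue is the source position plus Gaussian noise of known spread, flat prior), whose posterior is Gaussian around the sample mean. The hypothetical cues are actually produced by two sources, at -10 and +10; the one-source model still returns a tight, confident posterior, centered where there is no source at all, because "two sources" is not a value its parameter can take.

```python
import math

def one_source_posterior(xs, noise_sd):
    """Posterior over a single source position theta, assuming
    x_i ~ N(theta, noise_sd**2) and a flat prior: N(mean(xs), noise_sd**2 / n).
    Returns (posterior mean, posterior standard deviation)."""
    n = len(xs)
    return sum(xs) / n, noise_sd / math.sqrt(n)

# Hypothetical cues generated by TWO sources, at -10 and +10.
xs = [-10.0] * 10 + [10.0] * 10
mean, sd = one_source_posterior(xs, noise_sd=2.0)
print(mean, round(sd, 3))  # 0.0 0.447
```

Nothing inside this posterior signals that the model is wrong; detecting that requires reasoning about the model's structure, which is the epistemic problem discussed next.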

Inferring parameters for a known statistical model is relatively easy. What is really difficult, and still challenging for machine learning algorithms today, is to identify the structure of a perceptual scene: what constitutes an object (object formation) and how objects are related to each other (scene analysis). These fundamental perceptual processes do not exist in the Bayesian brain. This touches on two very different types of uncertainty: statistical uncertainty, i.e., variations that can be interpreted and expected within the framework of a model; and epistemic uncertainty, where the model itself is unknown (the difference has been famously explained by Donald Rumsfeld).

Thus, the “Bayesian brain” idea addresses an interesting problem (statistical inference), but it trivializes the problem of perception, by missing the fact that the real challenge is epistemic uncertainty (building a perceptual model), not statistical uncertainty (tuning the parameters): the world is not noisy, it is complex.

Duality, Fundamentality, and Emergence. (arXiv:1803.09443v1 [physics.hist-ph])

We argue that dualities offer new possibilities for relating fundamentality, levels, and emergence. Namely, dualities often relate two theories whose hierarchies of levels are inverted relative to each other, and so allow for new fundamentality relations, as well as for epistemic emergence. We find that the direction of emergence typically found in these cases is opposite to the direction of emergence followed in the standard accounts. Namely, the standard emergence direction is that of decreasing fundamentality: there is emergence of less fundamental, high-level entities, out of more fundamental, low-level entities. But in cases of duality, a more fundamental entity can emerge out of a less fundamental one. This possibility can be traced back to the existence of different classical limits in quantum field theories and string theories.

Oopsie

After a fairly seamless, high-profile launch in Pittsburgh, the rollout in San Francisco was bumpy right from the beginning. First, the DMV issued a warning to Uber that it had not obtained the proper testing permits for its pilot program. Then, a few hours after the trial began, The Verge reported that one of Uber’s cars ran a red light, nearly hitting a (human-driven) Lyft car.

Uber reviewed the case and determined it was actually the fault of the human driver sitting in the car—remember, Uber still has human drivers who can “take over” from the self-driving system as needed.

Then there was the bike lane problem. Uber’s vehicles had a nasty habit of driving into San Francisco’s bike lanes without warning. This was not the fault of humans but a software error, claimed Uber, noting that the problem had not come up in Pittsburgh, which also has a robust cycling network. Uber pledged to fix it.

Wasn't that long ago:

Arizona has since built upon the governor’s action to become a favored partner for the tech industry, turning itself into a live laboratory for self-driving vehicles. Over the past two years, Arizona deliberately cultivated a rules-free environment for driverless cars, unlike dozens of other states that have enacted autonomous vehicle regulations over safety, taxes and insurance.

...

Mr. Ducey, a native of Ohio who came to Arizona for college and then stayed, was elected governor in 2014 on a pro-business and innovation platform. He quickly lifted restrictions on medical testing for companies like Theranos, a Silicon Valley company that later faced scrutiny for its business practices. He also touted Apple’s decision to build a $2 billion data center in the state.

“We can beat California in every metric; lower taxes, less regulations, cost of living, quality of life,” he said several months after he became governor.

Oh well.

PHOENIX, Ariz., March 26 (Reuters) - The governor of Arizona on Monday suspended Uber’s ability to test self-driving cars on public roads in the state following a fatal crash last week that killed a 49-year-old woman pedestrian.

In a letter sent to Uber Chief Executive Dara Khosrowshahi and shared with the media, Governor Doug Ducey said he found a video released by police of the crash “disturbing and alarming, and it raises many questions about the ability of Uber to continue testing in Arizona.”

Good calls, bro. All of them.

...I wrote this last night, but the local fishwrap is on it, also, too.

Derivation of the Boltzmann Equation for Financial Brownian Motion: Direct Observation of the Collective Motion of High-Frequency Traders

Author(s): Kiyoshi Kanazawa, Takumi Sueshige, Hideki Takayasu, and Misako Takayasu