Since I was unable to travel for a couple of years during the pandemic, I decided to take my new-found time and really lean into Rust. After writing over 100k lines of Rust code, I think I am starting to get a feel for the language and like every cranky engineer I have developed opinions and because this is the Internet I’m going to share them.

The reason I learned Rust was to flesh out parts of the Xous OS written by Xobs. Xous is a microkernel message-passing OS written in pure Rust. Its closest relative is probably QNX. Xous is written for lightweight (IoT/embedded scale) security-first platforms like Precursor that support an MMU for hardware-enforced, page-level memory protection.

In the past year, we’ve managed to add a lot of features to the OS: networking (TCP/UDP/DNS), middleware graphics abstractions for modals and multi-lingual text, storage (in the form of an encrypted, plausibly deniable database called the PDDB), trusted boot, and a key management library with self-provisioning and sealing properties.

One of the reasons why we decided to write our own OS instead of using an existing implementation such as SeL4, Tock, QNX, or Linux, was we wanted to really understand what every line of code was doing in our device. For Linux in particular, its source code base is so huge and so dynamic that even though it is open source, you can’t possibly audit every line in the kernel. Code changes are happening at a pace faster than any individual can audit. Thus, in addition to being home-grown, Xous is also very narrowly scoped to support just our platform, to keep as much unnecessary complexity out of the kernel as possible.

Being narrowly scoped means we could also take full advantage of having our CPU run in an FPGA. Thus, Xous targets an unusual RV32-IMAC configuration: one with an MMU + AES extensions. It’s 2022 after all, and transistors are cheap: why don’t all our microcontrollers feature page-level memory protection like their desktop counterparts? Being an FPGA also means we have the ability to fix API bugs at the hardware level, leaving the kernel more streamlined and simplified. This was especially relevant in working through abstraction-busting processes like suspend and resume from RAM. But that’s all for another post: this one is about Rust itself, and how it served as a systems programming language for Xous.

Rust: What Was Sold To Me

Back when we started Xous, we had a look at a broad number of systems programming languages and Rust stood out. Even though its `no-std` support was then-nascent, it was a strongly-typed, memory-safe language with good tooling and a burgeoning ecosystem. I’m personally a huge fan of strongly typed languages, and memory safety is good not just for systems programming, it enables optimizers to do a better job of generating code, plus it makes concurrency less scary. I actually wished for Precursor to have a CPU that had hardware support for tagged pointers and memory capabilities, similar to what was done on CHERI, but after some discussions with the team doing CHERI it was apparent they were very focused on making C better and didn’t have the bandwidth to support Rust (although that may be changing). In the grand scheme of things, C needed CHERI much more than Rust needed CHERI, so that’s a fair prioritization of resources. However, I’m a fan of belt-and-suspenders for security, so I’m still hopeful that someday hardware-enforced fat pointers will make their way into Rust.

That being said, I wasn’t going to go back to the C camp simply to kick the tires on a hardware retrofit that backfills just one poor aspect of C. The glossy brochure for Rust also advertised its ability to prevent bugs before they happened through its strict “borrow checker”. Furthermore, its release philosophy is supposed to avoid what I call “the problem with Python”: your code stops working if you don’t actively keep up with the latest version of the language. Also unlike Python, Rust is not inherently unhygienic, in that the advertised way to install packages is not also the wrong way to install packages. Contrast to Python, where the official docs on packages lead you to add them to system environment, only to be scolded by Python elders with a “but of course you should be using a venv/virtualenv/conda/pipenv/…, everyone knows that”. My experience with Python would have been so much better if this detail was not relegated to Chapter 12 of 16 in the official tutorial. Rust is also supposed to be better than e.g. Node at avoiding the “oops I deleted the Internet” problem when someone unpublishes a popular package, at least if you use fully specified semantic versions for your packages.

In the long term, the philosophy behind Xous is that eventually it should “get good enough”, at which point we should stop futzing with it. I believe it is the mission of engineers to eventually engineer themselves out of a job: systems should get stable and solid enough that it “just works”, with no caveats. Any additional engineering beyond that point only adds bugs or bloat. Rust’s philosophy of “stable is forever” and promising to never break backward-compatibility is very well-aligned from the point of view of getting Xous so polished that I’m no longer needed as an engineer, thus enabling me to spend more of my time and focus supporting users and their applications.

The Rough Edges of Rust

There’s already a plethora of love letters to Rust on the Internet, so I’m going to start by enumerating some of the shortcomings I’ve encountered.

“Line Noise” Syntax

This is a superficial complaint, but I found Rust syntax to be dense, heavy, and difficult to read, like trying to read the output of a UART with line noise:

Trying::to_read::<&'a heavy>(syntax, |like| { this. can_be( maddening ) }).map(|_| ())?;

In more plain terms, the line above does something like invoke a method called “to_read” on the object (actually `struct`) “Trying” with a type annotation of “&heavy” and a lifetime of ‘a with the parameters of “syntax” and a closure taking a generic argument of “like” calling the can_be() method on another instance of a structure named “this” with the parameter “maddening” with any non-error return values mapped to the Rust unit type “()” and errors unwrapped and kicked back up to the caller’s scope.

Deep breath. Surely, I got some of this wrong, but you get the idea of how dense this syntax can be.

And then on top of that you can layer macros and directives which don’t have to follow other Rust syntax rules. For example, if you want to have conditionally compiled code, you use a directive like

#[cfg(all(not(baremetal), any(feature = “hazmat”, feature = “debug_print”)))]

Which says if either the feature “hazmat” or “debug_print” is enabled and you’re not running on bare metal, use the block of code below (and I surely got this wrong too). The most confusing part of about this syntax to me is the use of a single “=” to denote equivalence and not assignment, because, stuff in config directives aren’t Rust code. It’s like a whole separate meta-language with a dictionary of key/value pairs that you query.

I’m not even going to get into the unreadability of Rust macros – even after having written a few Rust macros myself, I have to admit that I feel like they “just barely work” and probably thar be dragons somewhere in them. This isn’t how you’re supposed to feel in a language that bills itself to be reliable. Yes, it is my fault for not being smart enough to parse the language’s syntax, but also, I do have other things to do with my life, like build hardware.

Anyways, this is a superficial complaint. As time passed I eventually got over the learning curve and became more comfortable with it, but it was a hard, steep curve to climb. This is in part because all the Rust documentation is either written in eli5 style (good luck figuring out “feature”s from that example), or you’re greeted with a formal syntax definition (technically, everything you need to know to define a “feature” is in there, but nowhere is it summarized in plain English), and nothing in between.

To be clear, I have a lot of sympathy for how hard it is to write good documentation, so this is not a dig at the people who worked so hard to write so much excellent documentation on the language. I genuinely appreciate the general quality and fecundity of the documentation ecosystem.

Rust just has a steep learning curve in terms of syntax (at least for me).

Rust Is Powerful, but It Is Not Simple

Rust is powerful. I appreciate that it has a standard library which features HashMaps, Vecs, and Threads. These data structures are delicious and addictive. Once we got `std` support in Xous, there was no going back. Coming from a background of C and assembly, Rust’s standard library feels rich and usable — I have read some criticisms that it lacks features, but for my purposes it really hits a sweet spot.

That being said, my addiction to the Rust `std` library has not done any favors in terms of building an auditable code base. One of the criticisms I used to leverage at Linux is like “holy cow, the kernel source includes things like an implementation for red black trees, how is anyone going to audit that”.

Now, having written an OS, I have a deep appreciation for how essential these rich, dynamic data structures are. However, the fact that Xous doesn’t include an implementation of HashMap within its repository doesn’t mean that we are any simpler than Linux: indeed, we have just swept a huge pile of code under the rug; just the `collection`s portion of the standard library represents about 10k+ SLOC at a very high complexity.

So, while Rust’s `std` library allows the Xous code base to focus on being a kernel and not also be its own standard library, from the standpoint of building a minimum attack-surface, “fully-auditable by one human” codebase, I think our reliance on Rust’s `std` library means we fail on that objective, especially so long as we continue to track the latest release of Rust (and I’ll get into why we have to in the next section).

Ideally, at some point, things “settle down” enough that we can stick a fork in it and call it done by well, forking the Rust repo, and saying “this is our attack surface, and we’re not going to change it”. Even then, the Rust `std` repo dwarfs the Xous repo by several multiples in size, and that’s not counting the complexity of the compiler itself.

Rust Isn’t Finished

This next point dovetails into why Rust is not yet suitable for a fully auditable kernel: the language isn’t finished. For example, while we were coding Xous, a thing called `const generic` was introduced. Before this, Rust had no native ability to deal with arrays bigger than 32 elements! This limitation is a bit maddening, and even today there are shortcomings such as the `Default` trait being unable to initialize arrays larger than 32 elements. This friction led us to put limits on many things at 32 elements: for example, when we pass the results of an SSID scan between processes, the structure only reserves space for up to 32 results, because the friction of going to a larger, more generic structure just isn’t worth it. That’s a language-level limitation directly driving a user-facing feature.

Also over the course of writing Xous, things like in-line assembly and workspaces finally reached maturity, which means we need to go back a revisit some unholy things we did to make those critical few lines of initial boot code, written in assembly, integrated into our build system.

I often ask myself “when is the point we’ll get off the Rust release train”, and the answer I think is when they finally make “alloc” no longer a nightly API. At the moment, `no-std` targets have no access to the heap, unless they hop on the “nightly” train, in which case you’re back into the Python-esque nightmare of your code routinely breaking with language releases.

We definitely gave writing an OS in `no-std` + stable a fair shake. The first year of Xous development was all done using `no-std`, at a cost in memory space and complexity. It’s possible to write an OS with nothing but pre-allocated, statically sized data structures, but we had to accommodate the worst-case number of elements in all situations, leading to bloat. Plus, we had to roll a lot of our own core data structures.

About a year ago, that all changed when Xobs ported Rust’s `std` library to Xous. This means we are able to access the heap in stable Rust, but it comes at a price: now Xous is tied to a particular version of Rust, because each version of Rust has its own unique version of `std` packaged with it. This version tie is for a good reason: `std` is where the sausage gets made of turning fundamentally `unsafe` hardware constructions such as memory allocation and thread creation into “safe” Rust structures. (Also fun fact I recently learned: Rust doesn’t have a native allocater for most targets – it simply punts to the native libc `malloc()` and `free()` functions!) In other words, Rust is able to make a strong guarantee about the stable release train not breaking old features in part because of all the loose ends swept into `std`.

I have to keep reminding myself that having `std` doesn’t eliminate the risk of severe security bugs in critical code – it merely shuffles a lot of critical code out of sight, into a standard library. Yes, it is maintained by a talented group of dedicated programmers who are smarter than me, but in the end, we are all only human, and we are all fair targets for software supply chain exploits.

Rust has a clockwork release schedule – every six weeks, it pushes a new version. And because our fork of `std` is tied to a particular version of Rust, it means every six weeks, Xobs has the thankless task of updating our fork and building a new `std` release for it (we’re not a first-class platform in Rust, which means we have to maintain our own `std` library). This means we likewise force all Xous developers to run `rustup update` on their toolchains so we can retain compatibility with the language.

This probably isn’t sustainable. Eventually, we need to lock down the code base, but I don’t have a clear exit strategy for this. Maybe the next point at which we can consider going back to `nostd` is when we can get the stable `alloc` feature, which allows us to have access to the heap again. We could then decouple Xous from the Rust release train, but we’d still need to backfill features such as Vec, HashMap, Thread, and Arc/Mutex/Rc/RefCell/Box constructs that enable Xous to be efficiently coded.

Unfortunately, the `alloc` crate is very hard, and has been in development for many years now. That being said, I really appreciate the transparency of Rust behind the development of this feature, and the hard work and thoughtfulness that is being put into stabilizing this feature.

Rust Has A Limited View of Supply Chain Security

I think this position is summarized well by the installation method recommended by the rustup.rs installation page:

`curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh`

“Hi, run this shell script from a random server on your machine.”

To be fair, you can download the script and inspect it before you run it, which is much better than e.g. the Windows .MSI installers for vscode. However, this practice pervades the entire build ecosystem: a stub of code called `build.rs` is potentially compiled and executed whenever you pull in a new crate from crates.io. This, along with “loose” version pinning (you can specify a version to be, for example, simply “2” which means you’ll grab whatever the latest version published is with a major rev of 2), makes me uneasy about the possibility of software supply chain attacks launched through the crates.io ecosystem.

Crates.io is also subject to a kind of typo-squatting, where it’s hard to determine which crates are “good” or “bad”; some crates that are named exactly what you want turn out to just be old or abandoned early attempts at giving you the functionality you wanted, and the more popular, actively-maintained crates have to take on less intuitive names, sometimes differing by just a character or two from others (to be fair, this is not a problem unique to Rust’s package management system).

There’s also the fact that dependencies are chained – when you pull in one thing from crates.io, you also pull in all of that crate’s subordinate dependencies, along with all their build.rs scripts that will eventually get run on your machine. Thus, it is not sufficient to simply audit the crates explicitly specified within your Cargo.toml file — you must also audit all of the dependent crates for potential supply chain attacks as well.

Fortunately, Rust does allow you to pin a crate at a particular version using the `Cargo.lock` file, and you can fully specify a dependent crate down to the minor revision. We try to mitigate this in Xous by having a policy of publishing our Cargo.lock file and specifying all of our first-order dependent crates to the minor revision. We have also vendored in or forked certain crates that would otherwise grow our dependency tree without much benefit.

That being said, much of our debug and test framework relies on some rather fancy and complicated crates that pull in a huge number of dependencies, and much to my chagrin even when I try to run a build just for our target hardware, the dependent crates for running simulations on the host computer are still pulled in and the build.rs scripts are at least built, if not run.

In response to this, I wrote a small tool called `crate-scraper` which downloads the source package for every source specified in our Cargo.toml file, and stores them locally so we can have a snapshot of the code used to build a Xous release. It also runs a quick “analysis” in that it searches for files called build.rs and collates them into a single file so I can more quickly grep through to look for obvious problems. Of course, manual review isn’t a practical way to detect cleverly disguised malware embedded within the build.rs files, but it at least gives me a sense of the scale of the attack surface we’re dealing with — and it is breathtaking, about 5700 lines of code from various third parties that manipulates files, directories, and environment variables, and runs other programs on my machine every time I do a build.

I’m not sure if there is even a good solution to this problem, but, if you are super-paranoid and your goal is to be able to build trustable firmware, be wary of Rust’s expansive software supply chain attack surface!

You Can’t Reproduce Someone Else’s Rust Build

A final nit I have about Rust is that builds are not reproducible between different computers (they are at least reproducible between builds on the same machine if we disable the embedded timestamp that I put into Xous for $reasons).

I think this is primarily because Rust pulls in the full path to the source code as part of the panic and debug strings that are built into the binary. This has lead to uncomfortable situations where we have had builds that worked on Windows, but failed under Linux, because our path names are very different lengths on the two and it would cause some memory objects to be shifted around in target memory. To be fair, those failures were all due to bugs we had in Xous, which have since been fixed. But, it just doesn’t feel good to know that we’re eventually going to have users who report bugs to us that we can’t reproduce because they have a different path on their build system compared to ours. It’s also a problem for users who want to audit our releases by building their own version and comparing the hashes against ours.

There’s some bugs open with the Rust maintainers to address reproducible builds, but with the number of issues they have to deal with in the language, I am not optimistic that this problem will be resolved anytime soon. Assuming the only driver of the unreproducibility is the inclusion of OS paths in the binary, one fix to this would be to re-configure our build system to run in some sort of a chroot environment or a virtual machine that fixes the paths in a way that almost anyone else could reproduce. I say “almost anyone else” because this fix would be OS-dependent, so we’d be able to get reproducible builds under, for example, Linux, but it would not help Windows users where chroot environments are not a thing.

Where Rust Exceeded Expectations

Despite all the gripes laid out here, I think if I had to do it all over again, Rust would still be a very strong contender for the language I’d use for Xous. I’ve done major projects in C, Python, and Java, and all of them eventually suffer from “creeping technical debt” (there’s probably a software engineer term for this, I just don’t know it). The problem often starts with some data structure that I couldn’t quite get right on the first pass, because I didn’t yet know how the system would come together; so in order to figure out how the system comes together, I’d cobble together some code using a half-baked data structure.

Thus begins the descent into chaos: once I get an idea of how things work, I go back and revise the data structure, but now something breaks elsewhere that was unsuspected and subtle. Maybe it’s an off-by-one problem, or the polarity of a sign seems reversed. Maybe it’s a slight race condition that’s hard to tease out. Nevermind, I can patch over this by changing a <= to a <, or fixing the sign, or adding a lock: I’m still fleshing out the system and getting an idea of the entire structure. Eventually, these little hacks tend to metastasize into a cancer that reaches into every dependent module because the whole reason things even worked was because of the “cheat”; when I go back to excise the hack, I eventually conclude it’s not worth the effort and so the next best option is to burn the whole thing down and rewrite it…but unfortunately, we’re already behind schedule and over budget so the re-write never happens, and the hack lives on.

Rust is a difficult language for authoring code because it makes these “cheats” hard – as long as you have the discipline of not using “unsafe” constructions to make cheats easy. However, really hard does not mean impossible – there were definitely some cheats that got swept under the rug during the construction of Xous.

This is where Rust really exceeded expectations for me. The language’s structure and tooling was very good at hunting down these cheats and refactoring the code base, thus curing the cancer without killing the patient, so to speak. This is the point at which Rust’s very strict typing and borrow checker converts from a productivity liability into a productivity asset.

I liken it to replacing a cable in a complicated bundle of cables that runs across a building. In Rust, it’s guaranteed that every strand of wire in a cable chase, no matter how complicated and awful the bundle becomes, is separable and clearly labeled on both ends. Thus, you can always “pull on one end” and see where the other ends are by changing the type of an element in a structure, or the return type of a method. In less strictly typed languages, you don’t get this property; the cables are allowed to merge and affect each other somewhere inside the cable chase, so you’re left “buzzing out” each cable with manual tests after making a change. Even then, you’re never quite sure if the thing you replaced is going to lead to the coffee maker switching off when someone turns on the bathroom lights.

Here’s a direct example of Rust’s refactoring abilities in action in the context of Xous. I had a problem in the way trust levels are handled inside our graphics subsystem, which I call the GAM (Graphical Abstraction Manager). Each Canvas in the system gets a `u8` assigned to it that is a trust level. When I started writing the GAM, I just knew that I wanted some notion of trustability of a Canvas, so I added the variable, but wasn’t quite sure exactly how it would be used. Months later, the system grew the notion of Contexts with Layouts, which are multi-Canvas constructions that define a particular type of interaction. Now, you can have multiple trust levels associated with a single Context, but I had forgotten about the trust variable I had previously put in the Canvas structure – and added another trust level number to the Context structure as well. You can see where this is going: everything kind of worked as long as I had simple test cases, but as we started to get modals popping up over applications and then menus on top of modals and so forth, crazy behavior started manifesting, because I had confused myself over where the trust values were being stored. Sometimes I was updating the value in the Context, sometimes I was updating the one in the Canvas. It would manifest itself sometimes as an off-by-one bug, other times as a concurrency error.

This was always a skeleton in the closet that bothered me while the GAM grew into a 5k-line monstrosity of code with many moving parts. Finally, I decided something had to be done about it, and I was really not looking forward to it. I was assuming that I messed up something terribly, and this investigation was going to conclude with a rewrite of the whole module.

Fortunately, Rust left me a tiny string to pull on. Clippy, the cheerfully named “linter” built into Rust, was throwing a warning that the trust level variable was not being used at a point where I thought it should be – I was storing it in the Context after it was created, but nobody every referred to it after then. That’s strange – it should be necessary for every redraw of the Context! So, I started by removing the variable, and seeing what broke. This rapidly led me to recall that I was also storing the trust level inside the Canvases within the Context when they were being created, which is why I had this dangling reference. Once I had that clue, I was able to refactor the trust computations to refer only to that one source of ground truth. This also led me to discover other bugs that had been lurking because in fact I was never exercising some code paths that I thought I was using on a routine basis. After just a couple hours of poking around, I had a clear-headed view of how this was all working, and I had refactored the trust computation system with tidy APIs that were simple and easier to understand, without having to toss the entire code base.

This is just one of many positive experiences I’ve had with Rust in maintaining the Xous code base. It’s one of the first times I’ve walked into a big release with my head up and a positive attitude, because for the first time ever, I feel like maybe I have a chance of being able deal with hard bugs in an honest fashion. I’m spending less time making excuses in my head to justify why things were done this way and why we can’t take that pull request, and more time thinking about all the ways things can get better, because I know Clippy has my back.

Caveat Coder

Anyways, that’s a lot of ranting about software for a hardware guy. Software people are quick to remind me that first and foremost, I make circuits and aluminum cases, not code, therefore I have no place ranting about software. They’re right – I actually have no “formal” training to write code “the right way”. When I was in college, I learned Maxwell’s equations, not algorithms. I could never be a professional programmer, because I couldn’t pass even the simplest coding interview. Don’t ask me to write a linked list: I already know that I don’t know how to do it correctly; you don’t need to prove that to me. This is because whenever I find myself writing a linked list (or any other foundational data structure for that matter), I immediately stop myself and question all the life choices that brought me to that point: isn’t this what libraries are for? Do I really need to be re-inventing the wheel? If there is any correlation between doing well in a coding interview and actual coding ability, then you should definitely take my opinions with the grain of salt.

Still, after spending a couple years in the foxhole with Rust and reading countless glowing articles about the language, I felt like maybe a post that shared some critical perspectives about the language would be a refreshing change of pace.

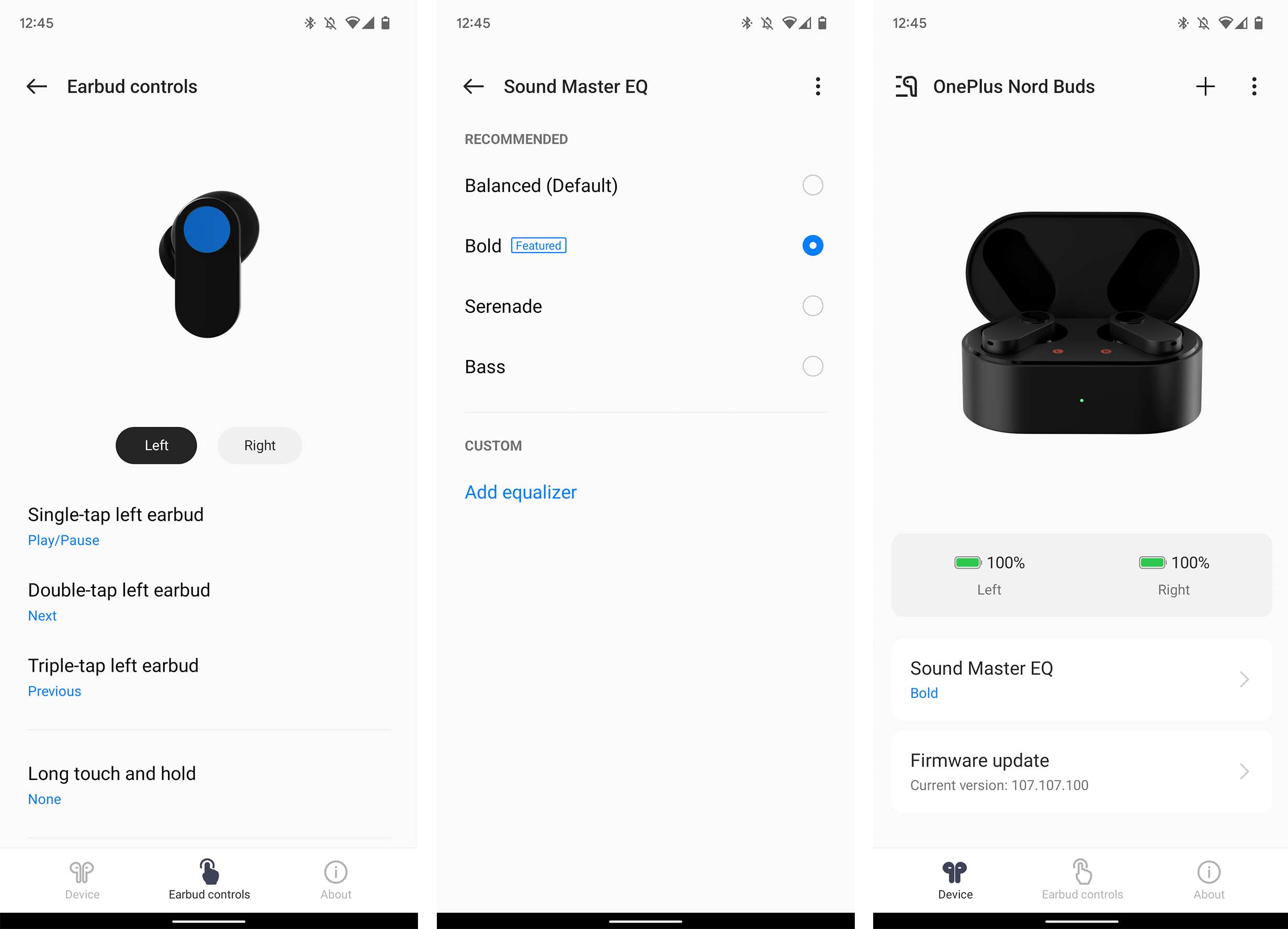

OnePlus actually managed to jam in a 12.4mm speaker driver into each earbud. Even if it's not the biggest earbud speaker around, it's still loud enough and larger than the 11mm option inside the Nothing Ear 1s.

OnePlus actually managed to jam in a 12.4mm speaker driver into each earbud. Even if it's not the biggest earbud speaker around, it's still loud enough and larger than the 11mm option inside the Nothing Ear 1s. As I alluded to above, one of my favourite things about the Nord Buds is how light and comfortable they are. They have a small stemmed design that looks pretty cool and keeps the earbud's centre of gravity close to my head, so they always feel secure.

As I alluded to above, one of my favourite things about the Nord Buds is how light and comfortable they are. They have a small stemmed design that looks pretty cool and keeps the earbud's centre of gravity close to my head, so they always feel secure.

Google recently complied with the US government sanctions against Russia because of the ongoing war with Ukraine. The Russian authorities have retaliated with actions that could force Google’s Russian subsidiary to file for bankruptcy. A Reuters report says the Kremlin has stopped just short of blocking access to the search giant’s services.

Google recently complied with the US government sanctions against Russia because of the ongoing war with Ukraine. The Russian authorities have retaliated with actions that could force Google’s Russian subsidiary to file for bankruptcy. A Reuters report says the Kremlin has stopped just short of blocking access to the search giant’s services.