As with most everything else in our lives, the past few months have utterly transformed the symbolic significance and economic status of masks. For decades, many nations (including Germany, France, Austria, Belgium, Ukraine, Russia, Canada, and the U.S.) and municipalities had been attempting to enforce laws against face covering in public spaces, motivated generally by institutionalized Islamophobia and the imperative to securitize urban environments. As recently as last October, Hong Kong sought to outlaw public masking in an effort to clamp down on protests. Now, in a matter of weeks, such policies have been reversed. Authorities are increasingly mandating the public use of masks, scrambling the social semiotics of face covering. Once, to walk the streets with a hidden face was to make oneself an outlaw; now it is becoming a sign of civic responsibility (if not cosmopolitan elitism).


The connotation of masks is shifting, but the rate and extent of that shift have been uneven both within and between countries. East Asian nations, marked by recent experience of SARS and H1N1, have typically been quick to adopt mass mask usage. In most of Europe and North America, with no equivalent memories to draw on, the situation is more conflicted. People with East Asian heritage, who have tended to be early adopters in the U.S. and the U.K., have been the target of racist attacks, a trend aggravated by the Sinophobic rhetoric of some politicians. Meanwhile, for certain demographics (black men, for example), the risks of appearing masked in public can be as grave as catching the virus itself. The unequally distributed consequences of mask wearing are another demonstration of the shallowness of the “all in this together” tropes that have captioned government responses to the crisis.

After initial confusion, a scientific consensus appears to have developed around the recommendation that healthy people wear masks in public — not because it meaningfully reduces their chances of contracting Covid-19 but because (alongside established hygiene and distancing measures) it mitigates the danger of asymptomatic carriers transmitting it to others. This pandemic has exposed us all to a strange, inverted form of paranoia, whereby our every action becomes a possible source of contagion and a prospective vector for another’s suffering. Covering my face seemingly allows me to opt out of this global cycle of misery, dispossession, and death by partitioning my body from the pattern of viral circulation — a case of clean hands allowing for a clear conscience.

However, the question of who gets to wear a mask, and of what kind, is far from an issue of simple personal responsibility. The sudden visibility (or visible absence) of masks on the faces of the people around us reveals our common implication in the transnational political economy of protective equipment, which is currently working to distribute the harms of this crisis according to the prerogatives of class power and the profit motive.

Media coverage of the mask issue has been haunted by a recurring set of questions. If “the science” increasingly supports masking as potentially mitigating the spread of Covid-19, why are there still so many contradictory policies about them? Why hasn’t there been a consistent message across the board, backed up by the necessary distribution of resources? The fact is, “the science” is only one factor bearing on state decision-making, and a relatively weak one at that. The stances that governments take on masks have as much to do with masks’ increasing economic and symbolic value as with their medical benefits.

The saga around Trump and Pence’s refusal to mask up is one particularly obvious example. But more broadly, the pandemic has transformed masks from just another cheap, low-margin bulk good shipped around the world by the ton to a desperately prized resource in a savagely competitive market.

Before the outbreak began, China was the world’s primary medical mask manufacturer, producing half the global yearly stock. But this didn’t mean the country was ready to equip its own population in the event of a crisis. When Covid-19 hit, the Chinese government was forced to restrict exports and even had to import protective equipment from Japan, India, the U.S., and Tanzania. This rapidly drained supply from the global market, hampering the efforts of soon-to-be affected countries to build their own stockpiles.

China is once more in a position to export (in part because it has repurposed factories that had been making toys, clothes, or electronics), but in the interim, the global mask market has completely changed. Buyers must now navigate a volatile landscape in which nation-states, medical-supply companies, and independent brokers compete over a limited stock, all the while contending with surging demand, distribution bottlenecks, and widespread quality-control issues. The bidding has become increasingly acrimonious, with governments prepared to go to remarkable lengths to secure their own supply. In March, Germany impounded a shipment of masks headed for Switzerland; a month later, officials in Berlin accused the U.S. of “piracy” when it allegedly swiped 200,000 masks earmarked for the municipal police department. Private buyers have repeatedly outbid state procurers. The New York Times, for instance, has reported on a group of French officials who were supposedly “outbid at the last minute by unknown American buyers for a stock of masks on the tarmac of a Chinese airport.”


Under these circumstances, any governmental prevarication can be catastrophic. Both the U.S. and the U.K. have failed miserably at providing health-care staff and other at-risk workers with adequate protective equipment. There have been widespread reports of medical staff treating patients while wearing substandard or improvised gear: nurses wearing trash bags, doctors performing tracheotomies in ski goggles. These procurement failures have undoubtedly impacted policy announcements, with the U.K. government’s reluctance to issue more concrete advice on mask wearing likely motivated in part by fears that citizens would buy up supplies needed for health workers.

The U.S.’s domestic medical supplies market has become a bewildering microcosm of the global mask economy: Hospital buyers are obliged to compete with other health-care providers, federal officials, and medical-supply companies, frequently attempting to source stock direct from China or through unknown and uncredentialed “entrepreneurs.” There are reports of shipments of masks being flipped multiple times by brokers before arriving at the point of use — if they arrive at all. So dire is the situation that on April 1, the Wall Street Journal felt obliged to explain to its readers “Why the Richest Country on Earth Can’t Get You a Face Mask.”

In a sense, the attitude of spoiled incredulity in that headline cuts to the heart of the matter. Until now, it might have seemed that the elaborate tangle of capital’s supply chains was organized precisely to service the desires of the American consumer, but the underlying logic of this system is to maximize profit, pure and simple.

The inability of global supply chains to deliver masks to those who need them the most marks not their failure but proof of their continuing functionality. By concentrating production in the areas with the cheapest labor, mandating lean inventories and “just in time” logistics, and insisting that, even during a crisis, distribution is channeled primarily through markets, the infrastructure of global capitalism is configured to protect profit-seeking above all other considerations. As Ingrid Burrington wrote for Data and Society, “the decisions that tend to make supply chains fragile … are decisions that tend to be rewarded by markets and governments … You’ve destroyed all of your competitors and now we can only get testing swabs from one part of Italy? That’s not a weak supply chain, it’s proof that the market rewards the best products.”

For national governments desperate to retain authority and legitimacy in the face of this crisis, masks have become a powerful symbol of state efficacy. Just as mask wearing is becoming a sign of individual civic responsibility, so has consistent supply of medical masks and other personal protective equipment become an index of a state’s ability to protect its citizens. The efforts of governments to cover up their failings indicate how seriously they are taking the optics of this issue. In February, Western media was horrified by China’s attempts to censor whistleblowing doctors; now in the U.K., National Health Service management are threatening to discipline staff who speak out about working conditions, while ministers bluster that the country is now the “international buyer of choice for personal protective equipment.”

Under these circumstances, masks have acquired special geopolitical importance. Western political commentators have coined the term “mask diplomacy” to describe the efforts of certain nations (i.e., China) to build global hegemony through strategic displays of beneficence. E.U. foreign policy chief Josep Borrell has spoken of a “global battle of narratives” in which “China is aggressively pushing the message that, unlike the U.S., it is a responsible and reliable partner.” The U.S., meanwhile, has helpfully demonstrated what the opposite of “responsible” and “reliable” looks like. Trump’s attempts at mask diplomacy have included an increasingly impotent stream of anti-China rhetoric, squabbling with American medical supplies producer 3M over mask exports, and defunding the WHO, one of the few multilateral institutions that could, at least theoretically, supervise the distribution of masks and other supplies on the basis of need.

How many people will die because of the deranged international political economy of masks? On April 17, the WHO warned that Africa could become the next epicenter of the pandemic. How will the nations of the Global South fare in the increasingly brutal world market for medical supplies? The WHO, the UN, and the Africa Centres for Disease Control and Prevention are beginning to disseminate masks, gowns, and other necessities to under-resourced health-care systems across the continent, but African nations may still be forced to rely on scraps from the table of China, Europe, and the U.S. The inability of global capitalism to support the equitable distribution of basic, universal human needs will only become more transparent in the coming months.

The current international mask market is, to borrow a term from Achille Mbembe, a global “necropolitics”: a world system for the unequal allocation of risk, harm, and death. This encapsulates everything from the U.S.-China trade war to equipment shortages at our local hospitals to ourselves, eyeing up that 10-pack of disposable surgical masks on the Amazon marketplace, finger hovering over the “Buy Now” button. At every point in this chain there is a structural tension between the necessity of finding collective means to mitigate risk and provide care for everyone, and a politico-economic model which ceaselessly channels us toward individualized, transactional responses designed to distance each of us from the suffering of others.

In other words, if the question “should I wear a mask?” feels fraught with implications of craven self-preservation and the unjust distribution of affliction, this is the structural effect of factors that extend far beyond the scope of personal choice and responsibility.

In an article for the Guardian, Samanth Subramanian writes of the “various tribes of mask-wearers”: shoppers wearing medical-grade N95 masks in the pasta aisle; a “finance bro” jogging in a designer “Vogmask”; “the extreme worrier in the GVS Elipse P100.” The capacity to express one’s response to the crisis in terms of boutique consumer choices is, however, available only to certain social strata. While the affluently anxious mull over the ethics of buying airtight respirators for themselves and their family, delivery drivers and grocery clerks are lucky if they get a disposable plastic mask. Others make do with improvised homemade coverings.

This is how global politico-economic dynamics, mediated by national and regional formations of race and class, are currently playing out — on the streets, in shops and depots, and over our faces. Covid-19 presents a fundamentally collective hazard, against which we are only as safe as our most vulnerable neighbor. Structures of geopolitical and economic competition have thus far prevented us from developing an appropriately cooperative response at the international level; at the local scale, this results in a situation where the personal management of individuated risk starts to seem like the only available recourse.


It does not have to be this way, however. One of the most emphatic effects of the pandemic has been to illustrate the condition of mutual exposure and vulnerability imposed on us all by the vicissitudes of globalized society. Masking, both the practice of wearing masks and the struggle to obtain them, knits us more tightly to this reality, implicating us directly in the economic and geopolitical processes currently driving the crisis response. What forms of political subjectivity might this moment disclose? What forms of solidarity can sustain local and transnational systems of care, while resisting capture by nations and markets? What coalition can be built of the sickly, the frightened, the outraged, and the faceless?

All over the world, workers are fighting for safer working conditions, proper protective equipment and better pay. Amazon, for instance, rakes in record profits and grabs market share while firing and attempting to smear warehouse worker Christian Smalls for speaking out about unsafe working conditions. As with all forms of disaster capitalism, this is an opportunistic extension of business as usual, stealing a march in the struggle between workers and bosses, collective action and self-interested greed. Yet it is profoundly encouraging that even under the atomizing conditions of lockdown, the actions of Amazon and other exploitative corporations do not go unresisted.

Masks are playing an increasingly symbolic role in these struggles. In the U.K., the state’s failure to provide NHS staff with the equipment necessary to work without putting their lives at undue risk (at least 100 health-care workers have now died from Covid-19) has become a focal point for outrage at the government’s handling of the crisis. The acronyms PPE (personal protective equipment) and FFP3 (the U.K. designation for the airtight masks required by medics) are now shorthand for the incompetence and venality of Boris Johnson’s administration. Meanwhile, in the U.S., May Day saw a wave of coordinated strikes by workers at Amazon, Whole Foods, Instacart, Target, and Shipt, all demanding adequate protective equipment, improved safety standards, and proper hazard and sick pay. For these workers, generally among the most precarious and poorly remunerated and now suddenly understood to be “essential,” the mask is both an emblem of their solidarity and part of the physical substance of their demands.

In this context, masking begins to demonstrate how “personal protection” can be conceived of as a genuinely collective project. When a group of warehouse workers form a picket line, sporting a motley array of careworn bandanas, sliced-up pillowcases and mutilated T-shirts, it is evidence that, refusing to be crushed by desperate circumstances, they have found the courage necessary to care for one another at a time when both their boss and their government have proved willing to leave them for dead. More than just an indictment of corporate brutality, this act of group masking affirms the ability of people to develop forms of cooperative security against the intertwined threats of Covid-19 and capitalist necropolitics. Here, the mask acquires a fresh double significance: a necessary condition of survival and the common basis on which vital structures of communal solidarity might be reaffirmed.

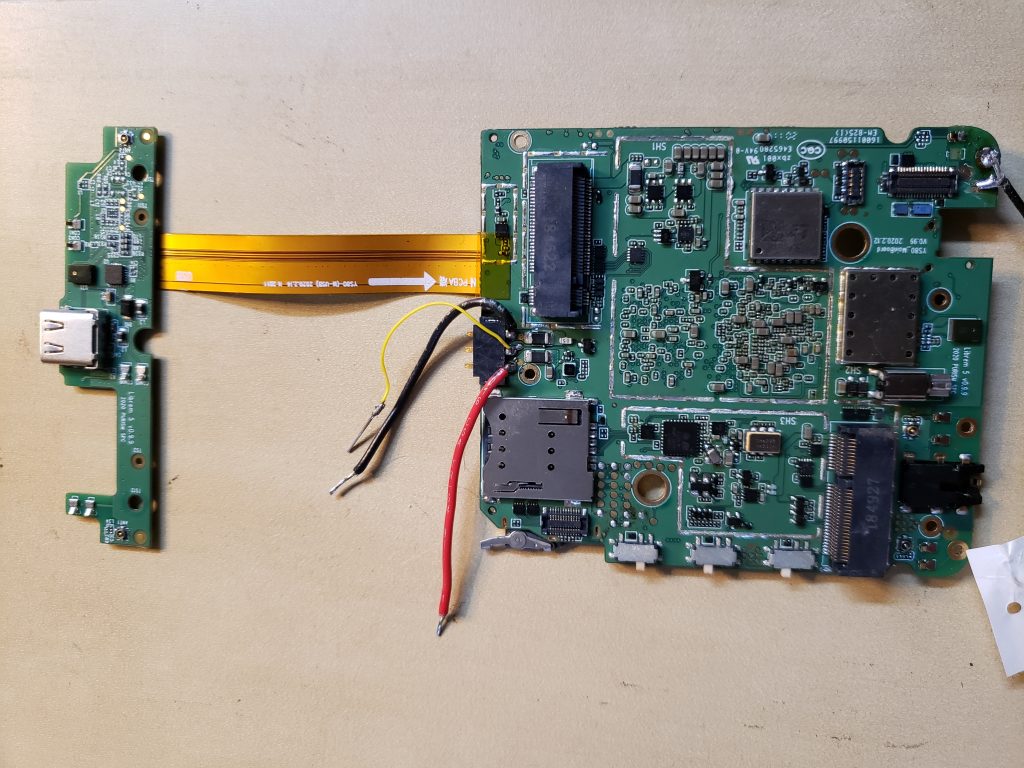

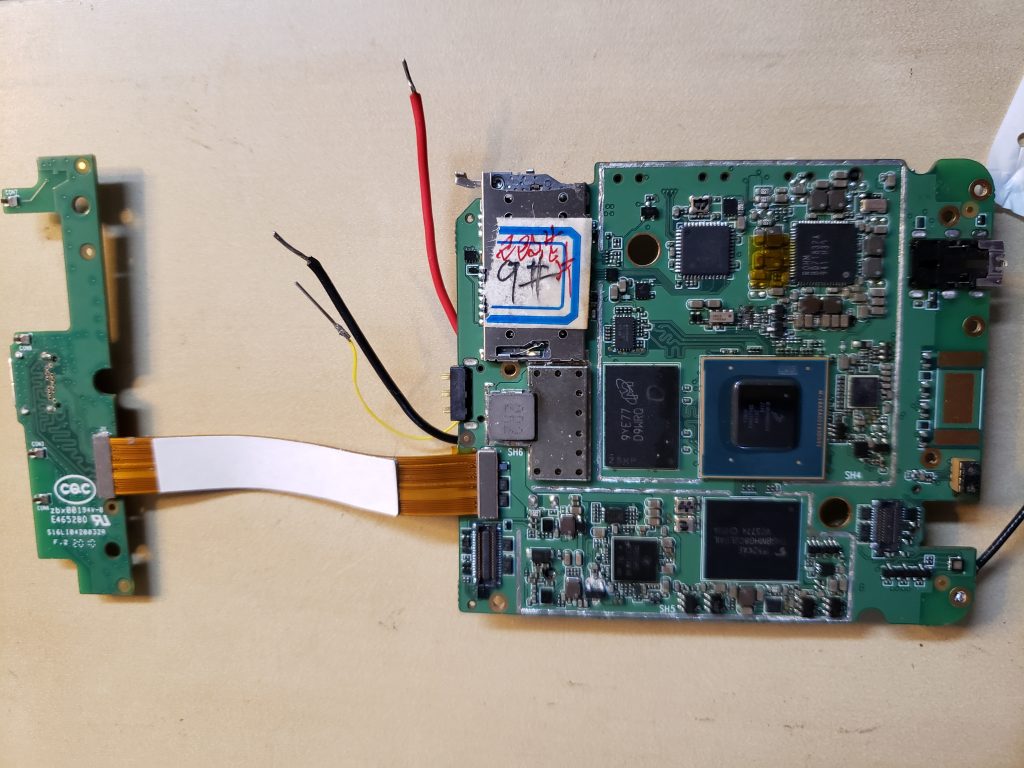

The US government barred Huawei and ZTE last year from doing any business with US-based firms. This was a massive blow to the companies, as they were stopped from launching new phones with GMS (Google Mobile Services) and from supplying network equipment to US network carriers. Huawei had hoped that the ban would be lifted sometime this year, but that won’t happen until at least mid-2021.