I used OpenBSD on the original Surface Go back in 2018 and many things worked with the big exception of the internal Atheros WiFi. This meant I had to keep it tethered to a USB-C dock for Ethernet or use a small USB-A WiFi dongle plugged into a less-than-small USB-A-to-USB-C adapter.

Microsoft has switched to Intel WiFi chips on their recent Surface devices, making the Surface Go 2 slightly more compatible with OpenBSD.

Hardware

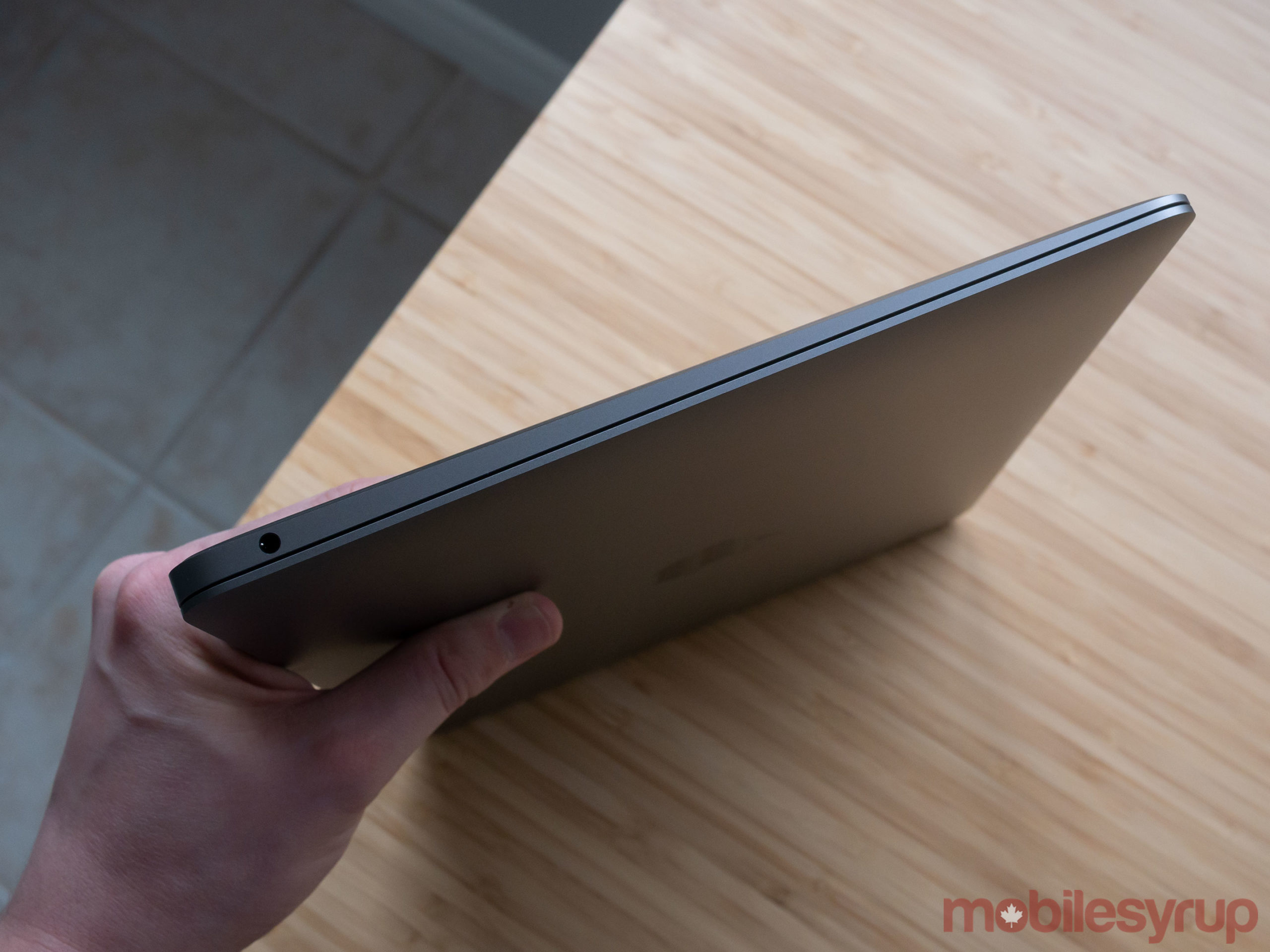

As with the original Surface Go, I opted for the WiFi-only model with 8GB of RAM and a 128GB NVMe SSD. The processor is now an 8th generation dual-core Intel Core m3-8100Y.

The tablet still measures 9.65" across, 6.9" tall, and 0.3" thick, but the screen bezel has been reduced a half inch to enlarge the screen to 10.5" diagonal with a resolution of 1920x1280, up from 1800x1200.

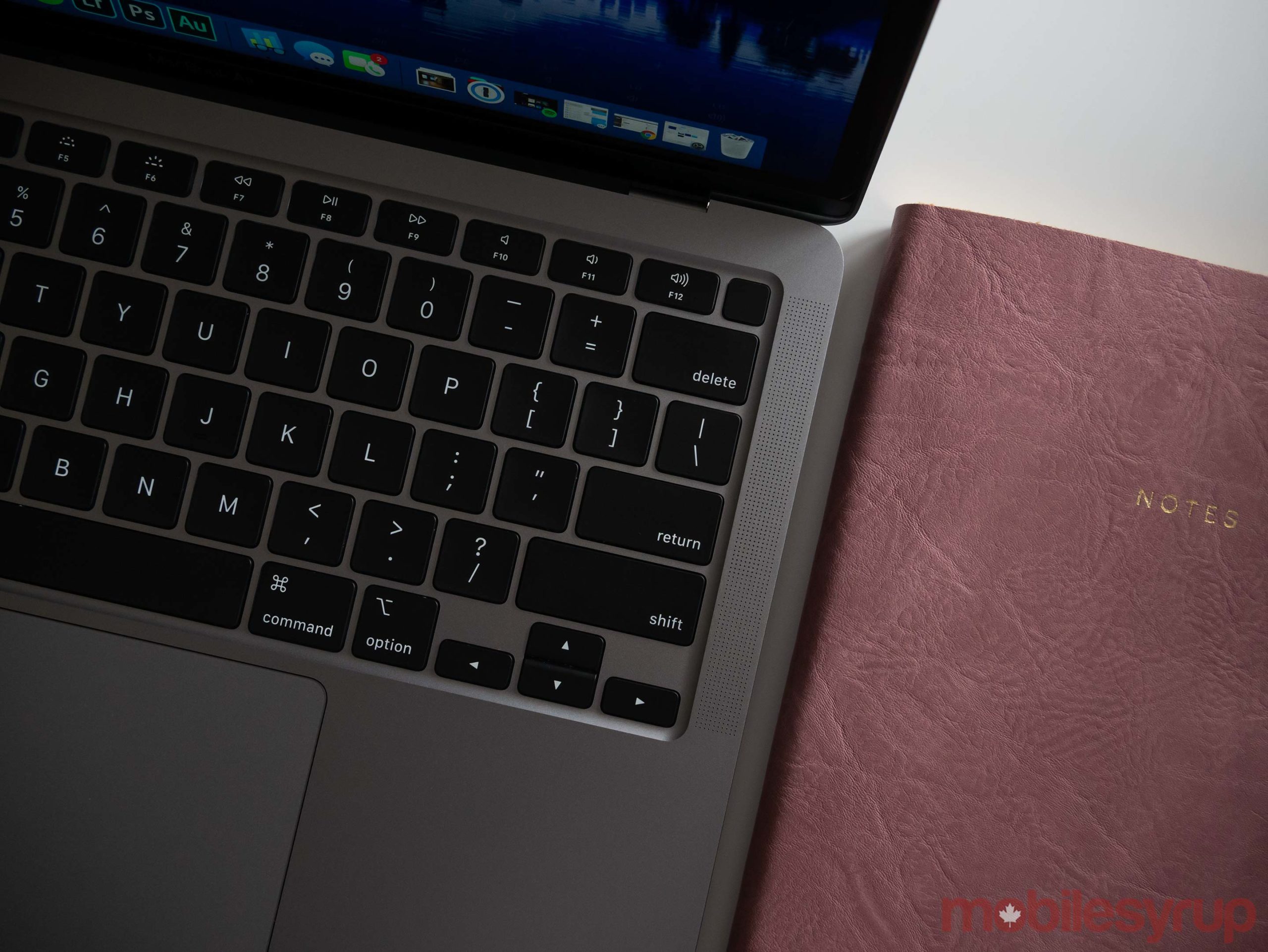

The removable USB-attached Surface Go Type Cover contains the backlit keyboard and touchpad. I opted for the "ice blue" Alcantara color, as the darker "cobalt blue" version I purchased last time is no longer available. The Type Cover attaches magnetically along the bottom edge and can be folded up against the front of the screen magnetically, issuing an ACPI sleep signal, or against the back, automatically disabling the keyboard and touchpad in expectation of being touched while holding the Surface Go.

One unfortunate side-effect of the smaller screen bezel is that the top of the Type Cover keyboard now rests very close to the bottom of the screen when the keyboard is in its raised position. With one's fingers on the keyboard, the text at the very bottom of the screen such as the status bar in a web browser can be covered up by the left hand.

The Type Cover's keyboard is quiet but has a very satisfying tactile bounce. The keys are small considering the entire keyboard is only 9.75" across, but it works for my small hands. The touchpad has a slightly less hollow-sounding click than I remember on the previous model, which is a plus.

The touchscreen is an Elantech model connected by HID-over-I2C and supports pen input. The Surface Pen is available separately and requires a AAAA battery, though it works without any pairing necessary with the exception of the top eraser button which requires Bluetooth for some reason. The pen attaches magnetically to the left side of the screen when not in use.

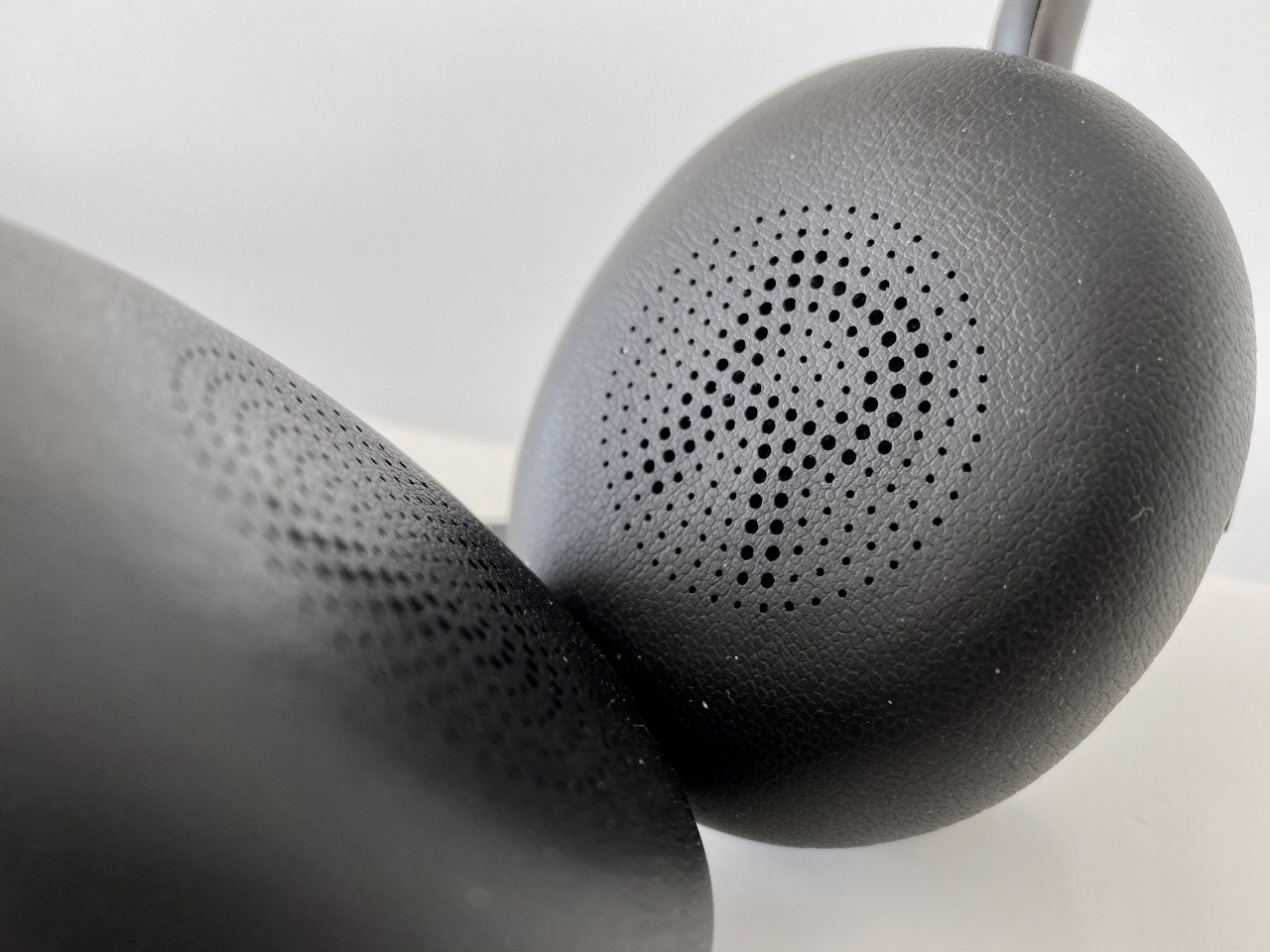

A set of stereo speakers face forward in the top left and right of the screen bezel but they lack bass. Along the top of the unit are a power button and physical volume rocker buttons. Along the right side are a 3.5mm headphone jack, USB-C port, Surface dock/power port, and microSD card slot located behind the kickstand.

Wireless connectivity is now provided by an Intel Wi-Fi 6 AX200 802.11ax chip which also provides Bluetooth connectivity.

Firmware

The Surface Go's BIOS/firmware menu can be entered by holding down the Volume Up button, then pressing and releasing the Power button, and releasing Volume Up when the menu appears. Secure Boot as well as various hardware components can be disabled in this menu. Boot order can also be adjusted.

When powered on, holding down the power button will eventually force a power-off of the device like other PCs, but the button must be held down for what seems like forever.

Installing OpenBSD

Due to my previous OpenBSD work on the original Surface Go, most components work as expected during installation and first boot.

To boot the OpenBSD installer, dd the install67.fs image to a USB disk, enter the BIOS as noted above and disable Secure Boot, then set the USB device as the highest boot priority.
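The image-writing step can be sketched roughly as follows, assuming the image is written from another OpenBSD machine. The disk name `sd1` is only a placeholder (check `dmesg` or `sysctl hw.disknames` for the real one) and `installer_dd_cmd` is a hypothetical helper; it prints the command for review rather than running it, since `dd` to the wrong disk is destructive.

```shell
#!/bin/sh
# Sketch only: the disk name is an assumption, not taken from the article.
IMG=${IMG:-install67.fs}   # installer image named above
DISK=${DISK:-sd1}          # hypothetical USB disk name

# Print the dd command that writes an image to the raw whole-disk device.
# OpenBSD names that device /dev/r<disk>c.
installer_dd_cmd() {
    printf 'dd if=%s of=/dev/r%sc bs=1m\n' "$1" "$2"
}

installer_dd_cmd "$IMG" "$DISK"
```

Once the target device has been confirmed, the printed line can be run as root.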

When partitioning the 128GB SSD, one can safely delete the Windows Recovery partition which takes up 1GB, as it can't repair a totally deleted Windows partition anyway and a full recovery image can be downloaded from Microsoft's website and copied to a USB disk.

After installing OpenBSD but before rebooting, mount the EFI partition (sd0i) and delete the /EFI/Microsoft directory. Without that, the firmware may try to boot the Windows Recovery loader. OpenBSD's EFI bootloader at /EFI/Boot/BOOTX64.EFI will otherwise load by default.
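A minimal sketch of that cleanup, assuming the installer shell and a spare mount point. `/mnt2` and the helper name `remove_ms_loader` are illustrative assumptions; only `sd0i` and the two EFI paths come from the text above.

```shell
#!/bin/sh
# Delete the Microsoft boot loader directory from an already-mounted EFI
# System Partition. $1 is the mount point of that partition.
remove_ms_loader() {
    esp=$1
    if [ -d "$esp/EFI/Microsoft" ]; then
        rm -rf "$esp/EFI/Microsoft"
    fi
    # List what remains so the OpenBSD loader directory can be confirmed.
    ls "$esp/EFI"
}

# Typical use as root, before rebooting (not run here):
#   mkdir -p /mnt2                    # hypothetical mount point
#   mount -t msdos /dev/sd0i /mnt2
#   remove_ms_loader /mnt2
#   umount /mnt2
```

After the call, the listing should still show the `Boot` directory that holds BOOTX64.EFI.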

One annoyance remains that I noted in my previous review: if the touchpad is touched or F1-F7 keys are pressed, the Type Cover will detach all of its USB devices and then reattach them. I'm not sure if this is by design or some Type Cover firmware problem, but once OpenBSD is booted into X, it will open the keyboard and touchpad USB data pipes and they work as expected.

OpenBSD Support Log

2020-05-12: Upon unboxing, I booted directly into the firmware screen to disable Secure Boot and then installed OpenBSD.

Upon first full boot of OpenBSD, the kernel panicked due to acpivout not being able to initialize its index of BCL elements. I disabled acpivout for the moment.
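How the driver was disabled isn't recorded in this log. One common way on OpenBSD is the kernel configuration editor (UKC), sketched below under that assumption; `ukc_disable_script` is a hypothetical helper that only generates the editor commands.

```shell
#!/bin/sh
# Emit a UKC command script that disables one driver and saves the change.
ukc_disable_script() {
    printf 'disable %s\nquit\n' "$1"
}

# For a single boot, the same commands can be typed interactively:
#   boot> boot -c
#   UKC> disable acpivout
#   UKC> quit
#
# To modify the on-disk kernel, as root (not run here):
#   ukc_disable_script acpivout | config -ef /bsd
```

Whether an edited /bsd survives later kernel relinks or upgrades is outside the scope of this sketch.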

The Intel AX200 chip is detected by iwx, the firmware loads (installed via fw_update), and the device can do an active network scan, but when trying to authenticate to a network, the device firmware reports a fatal error.
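The sequence described above can be reproduced roughly like this. The interface name `iwx0` is an assumption (it depends on how the device attaches), and `iwx_lines` is a hypothetical helper that just filters kernel messages.

```shell
#!/bin/sh
IF=${IF:-iwx0}   # assumed interface name

# Reduce kernel messages on stdin to lines printed by the iwx driver,
# which is where attach messages and firmware errors show up.
iwx_lines() {
    grep -E '^iwx[0-9]+'
}

# As root on the installed system (not run here):
#   fw_update               # install any missing firmware, iwx included
#   ifconfig "$IF" up
#   ifconfig "$IF" scan     # active scan for visible networks
#   dmesg | iwx_lines       # check for firmware error reports
```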

2020-05-13: I created a new umstc driver that attaches to the uhidev device of the Type Cover and responds to the volume and brightness keys without having to use usbhidaction.

More importantly, it holds the data pipe open so the Type Cover doesn't reboot when the F1-F7 keys are pressed at the console. A solution will still be needed to prevent the Type Cover from rebooting when the touchpad is accidentally touched when not in X.

I tried S3 suspend and resume, but when pressing the power button to wake it up, it immediately displays the initial firmware logo and boots as if it were restarted. S4 hibernation works fine. S3 suspend works in Ubuntu, so I'll need to do some debugging to figure out where OpenBSD is failing.

2020-05-31: I imported my umstc driver into OpenBSD.

2020-06-02: I imported my acpihid driver into OpenBSD.

2020-07-05: Stefan Sperling (stsp@) has done a lot of work on iwx over the past couple months, so WiFi is much more stable and performant now.

Current OpenBSD Support Summary

Status is relative to my OpenBSD-current tree as of 2020-06-02.

| Component | Works? | Notes |
|---|---|---|
| AC adapter | Yes | Supported via acpiac and status available via apm and hw.sensors, also supports charging via USB-C. |
| Ambient light sensor | No | Connected behind a PCI Intel Sensor Hub device which requires a new driver. |
| Audio | Yes | HDA audio with a Realtek 298 codec supported by azalia. |
| Battery status | Yes | Supported via acpibat and status available via apm and hw.sensors. |
| Bluetooth | No | Intel device, shows up as a ugen device but OpenBSD does not support Bluetooth. Can be disabled in the BIOS. |
| Cameras | No | There are apparently front, rear, and IR cameras, none of which are supported. Can be disabled in the BIOS. |
| Gyroscope | No | Connected behind a PCI Intel Sensor Hub device which requires a new driver which could feed our sensor framework and then tell xrandr to rotate the screen. |
| Hibernation | Yes | Works via the ZZZ command. |
| MicroSD slot | Yes | Realtek RTS522A, supported by rtsx. |
| SSD | Yes | SK hynix NVMe device accessible via nvme. |
| Surface Pen | Yes | Works on the touchscreen via ims. The button on the top of the pen requires Bluetooth support so it is not supported. |
| Suspend/resume | No | TODO |
| Touchscreen | Yes | HID-over-I2C, supported by ims. |
| Type Cover Keyboard | Yes | USB, supported by ukbd and my umstc. 3 levels of backlight control are adjustable by the keyboard itself with F1. |
| Type Cover Touchpad | Yes | USB, supported by the umt driver for 5-finger multitouch, two-finger scrolling, hysteresis, and to be able to disable tap-to-click which is otherwise enabled by default in normal mouse mode. |
| USB | Yes | The USB-C port works fine for data and charging. |
| Video | Yes | inteldrm has Kaby Lake support adding accelerated video, DPMS, gamma control, integrated backlight control, and proper S3 resume. |
| Volume buttons | Yes | Supported by my acpihid driver. |
| Wireless | Yes | Intel AX200 802.11ax wireless chip supported by iwx. |
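
The power-related rows in the table can be checked from a shell roughly as follows. This is a sketch: `ac_state_name` is a hypothetical helper, and the numeric meanings are those documented for `apm -a` in apm(8).

```shell
#!/bin/sh
# Translate the numeric A/C state printed by `apm -a` into words.
ac_state_name() {
    case "$1" in
        0) echo "disconnected" ;;
        1) echo "connected" ;;
        2) echo "backup power" ;;
        *) echo "unknown" ;;
    esac
}

# On the Surface Go itself (not run here):
#   ac_state_name "$(apm -a)"   # A/C adapter state, via acpiac
#   apm -l                      # battery charge in percent, via acpibat
#   sysctl hw.sensors           # raw sensor values
#   ZZZ                         # hibernate (S4)
```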
