Mike had a different perspective on wiring and cable management- because he worked at a factory which made wires and cables. It was the early ’90s, and he was in charge of babysitting a couple of VAXes and their massive, 85lb hard drives. It was an easy job: the users knew the system and needed very little support, the VAXes were practically unstoppable, and the backup battery system could keep the entire thing running for over an hour.

The computers supported HR and accounting, which meant as the year ticked towards its end, Mike had to prep the system for its heaviest period of use- the year-end closing processes. Through the last weeks of December, his users would be rushing to get their reports done and filed so they could take off early and enjoy the holidays.

Mike had been through this rodeo before, but Reginald, the plant manager, called him up to his office. There was going to be a wrench in the works this year. Mike sat down in Reginald’s cramped and stuffy office next to Barry, the chief electrician for the plant.

“Our factory draws enough power from the main grid that the power company has a substation that’s essentially dedicated to us,” Reginald explained. “But they’ve got a problem with some transformers loaded with PCBs that they want to replace, so they need to shut down that substation for a week while they do the work.”

The factory portion was easy to deal with- mid-December was a period when the assembly line was usually quiet anyway, so the company could just shift production to another facility that had some capacity. But there was no way their front-office could delay their year-end closing processes.

“So, to keep the office running, we’ll be bringing in a generator truck,” Reginald said. “And that means we’re going to need to set up a cut-over from the main grid to the generator.”

From the computer-room side, the process was easy, but that didn’t stop Mike from writing up a checklist, taping it to the wall beside his desk and sharing a copy with Barry. Before the generator truck arrived, he’d already tested the process several times, ensuring that he could go from mains power to battery and back to mains power without any problem.

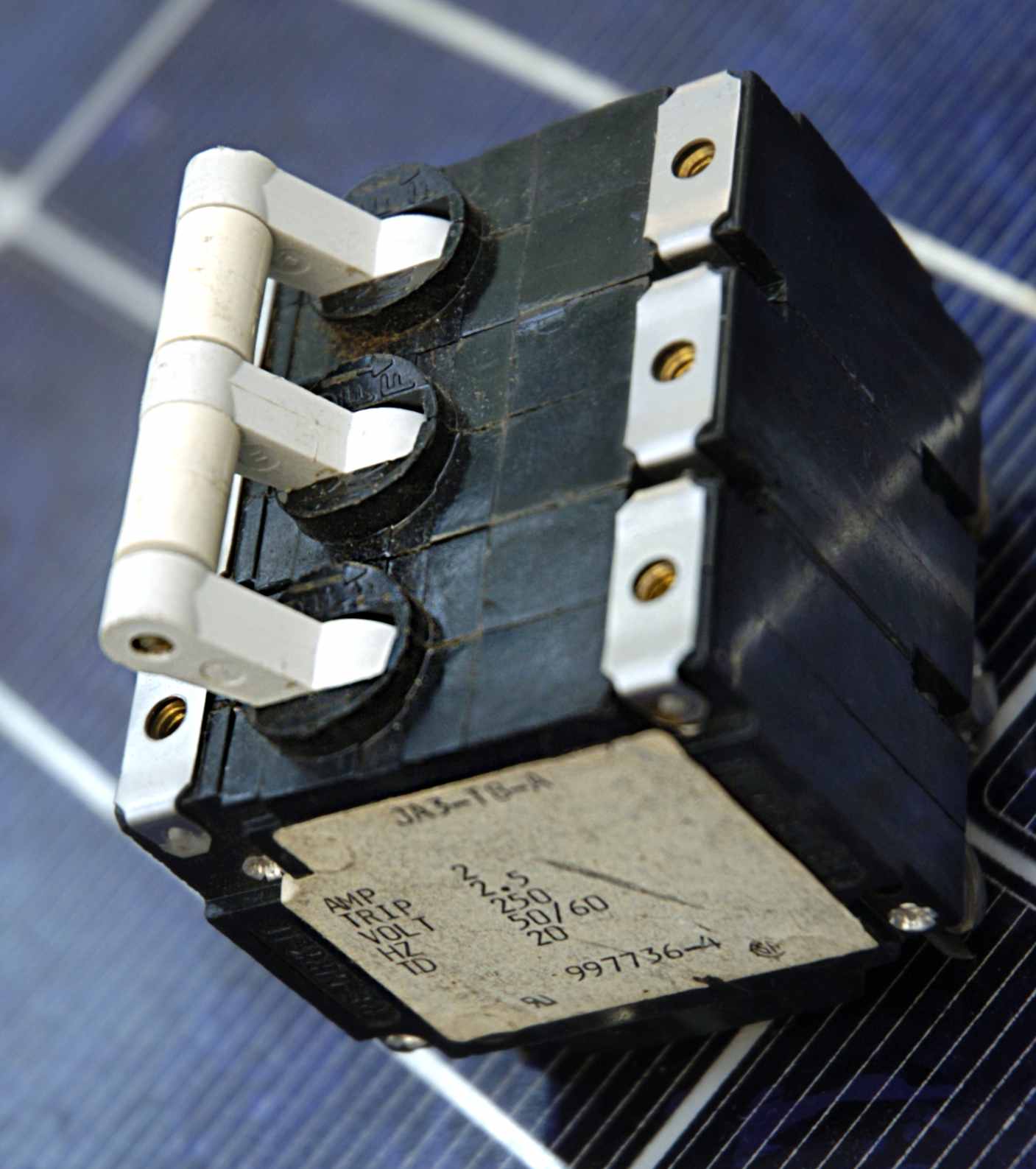

The generator truck arrived a week before the changeover. The electricians ignored it for a few days. Just as Mike was starting to get worried about deadlines, he looked out a window and saw a trio of electricians, led by Barry, starting to connect cables to it. Later that day, when Mike left to go to lunch, he walked past the generator truck, and noticed something odd about the cables- they were clearly single-phase power cables.

Typical residential power systems are single-phase alternating current- a single sine wave, one peak and one trough per cycle. This creates “dead” moments twice in every cycle, where the delivered power falls to zero. That’s fine for residential use- but industrial systems need three-phase power- three AC cycles, each offset by a third of a cycle, so that the total power delivered to a balanced load never drops to zero.
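The difference between the two supplies can be checked numerically. The sketch below is an illustration under simplifying assumptions (an ideal, purely resistive, balanced load, with power normalized so that one phase peaks at 1); the function names are made up for this example and don't come from Mike's story.

```python
import math

def single_phase_power(theta):
    """Instantaneous power from one sine-wave phase (peak normalized to 1)."""
    return math.sin(theta) ** 2

def three_phase_power(theta):
    """Total instantaneous power from three phases offset by 120 degrees."""
    offsets = (0.0, 2 * math.pi / 3, 4 * math.pi / 3)
    return sum(math.sin(theta - offset) ** 2 for offset in offsets)

# Sample one full cycle in one-degree steps.
angles = [2 * math.pi * k / 360 for k in range(360)]
single = [single_phase_power(a) for a in angles]
three = [three_phase_power(a) for a in angles]

# Single-phase power swings between 0 and 1; the three-phase total stays at 1.5.
print(f"single-phase: min={min(single):.3f} max={max(single):.3f}")
print(f"three-phase:  min={min(three):.3f} max={max(three):.3f}")
```

The single-phase curve touches zero twice per cycle, while the three-phase total holds steady, which is why big motors are happier on three-phase.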

“Hey,” Mike said to one of the electricians loitering near the truck, “you’re planning to run some three-phase cabling, right?”

“Nope. The factory’s shut down- this thing is just gonna run lights and heating.”

“And computers,” Mike said. “The hard drives need three-phase power.”

“We’ll have to order some parts,” the electrician said.

A few more days ticked by with no three-phase connections, and Mike checked in with the electricians again.

“The parts are coming.”

At this point, Reginald came down from his office to the computer room. “Mike, Barry’s telling me you’re being a disruption.”

“What?”

“Look, there’s a chain of command,” Reginald said. “And you can’t tell the electricians how to do their job.”

“I’m no-”

“From now on, if you have any concerns, bring them to me.”

The day of the cut-over, the three-phase cabling finally arrived. Barry and his electricians quickly strung those cables from the generator. Mike wasn’t exactly watching them like a hawk, but he was worried- there was no way they could test the configuration while they were working so hastily. Unlike single-phase power, three-phase power could be hooked up with its phases in the wrong order, which would wreak havoc on the hard drives. He thought about bringing this up to the electricians, but recalled Reginald’s comments on the chain of command. He went to Reginald instead.

“Mike,” Reginald said, shaking his head. “I know you computer guys think you know everything, but you’re not an electrician. This is a factory, Mike, and you’ve got to learn a little about what it’s like to work for a living.”

Now, the electricians and Mike needed to coordinate their efforts, but Reginald strictly enforced the idea of the chain of command. One electrician would power on the generator and announce it, “Generator’s running.” Another electrician would relay this to Barry: “Generator’s running.” Barry would relay that to Reginald. “Generator’s on.” Reginald, finally, would tell Mike.

At 1PM, the electric company cut the power to the factory. The lights went out, but the computers kept humming along, running off battery power. A few minutes and a few games of telephone between electricians and Reginald later, the lights came back on.

Mike let out a breath that he didn’t know he’d been holding. Maybe he’d been too worried. Maybe he was jumping at shadows. Everything was going well so far.

“Tell the computer guy to switch back to mains power,” called one of the electricians.

“Tell the computer guy to switch back to the mains,” Barry repeated.

“Mike, switch back to the mains,” Reginald ordered.

Mike threw the switch.

BOOOOOOOOOOOOOOMMMMMM

The computer room shook so violently that Mike briefly thought the generator had exploded. But it wasn’t the generator- it was the hard drives. They didn’t literally explode, but 85lbs of platter spinning at about 3,000 RPM had a lot of momentum. The motor that drove the spindle depended on properly sequenced three-phase power. The electricians had wired the power backwards, and when Mike switched to mains power, the electric motor suddenly reversed direction. Angular momentum won. The lucky drives just broke the belt which drove the spindle, but a few of them actually shrugged their platters off the spindle, sending the heavy metal disks slamming into the sides of the enclosure with a lot of energy.
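Why does wiring order matter so much? Swapping any two conductors of a three-phase supply reverses the phase sequence, and with it the direction of the rotating magnetic field in a motor. The sketch below shows that with a deliberately simplified model: an idealized motor with three windings spaced 120 degrees apart. It is not a model of the actual VAX drive motors, and the phase labels and function names are hypothetical.

```python
import math

# Electrical angle of each source phase (assumed labels A, B, C).
PHASE_ANGLE = {"A": 0.0, "B": 2 * math.pi / 3, "C": 4 * math.pi / 3}
# Physical angle of the three motor windings (terminals T1, T2, T3).
WINDING_ANGLE = (0.0, 2 * math.pi / 3, 4 * math.pi / 3)

def field_vector(wiring, theta):
    """Net magnetic field (x, y) at electrical angle theta.

    wiring lists which source phase feeds terminals T1, T2, T3.
    """
    x = y = 0.0
    for source, alpha in zip(wiring, WINDING_ANGLE):
        current = math.cos(theta - PHASE_ANGLE[source])
        x += current * math.cos(alpha)
        y += current * math.sin(alpha)
    return x, y

def rotation_direction(wiring):
    """Return 'forward' if the field turns counterclockwise, else 'reverse'."""
    x0, y0 = field_vector(wiring, 0.0)
    x1, y1 = field_vector(wiring, 0.1)
    # The sign of the 2D cross product gives the turning direction.
    cross = x0 * y1 - y0 * x1
    return "forward" if cross > 0 else "reverse"

print(rotation_direction(("A", "B", "C")))  # wired in sequence
print(rotation_direction(("A", "C", "B")))  # two conductors swapped
```

In this model, shifting all three phases along by one terminal (B, C, A) keeps the field turning the same way; only swapping a pair flips it. A phase-rotation check before the cut-over is the kind of test Mike was worried nobody had time for.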

For the first and only time in his career, Mike slammed a fist into the emergency stop button, cutting all power from the computer room.

Year-end closing got delayed. It took the better part of a month for Mike to recover from the disaster. While he focused on recovering data, the rest of the organization kept playing their “chain-of-command telephone.”

The electricians couldn’t be at fault, because they took their orders from Barry. Barry couldn’t be at fault, because he took his orders from Reginald. Reginald couldn’t be at fault, because he followed the chain of command. Mike hadn’t always followed the chain of command. Therefore, this must be Mike’s fault.

Once the data recovery was finished, he was fired.
