From high in the sky to under the sea, these 18 restaurants with unique surroundings are a feast for the eyes. Whether you’re stepping into the past or gazing as far as the eye can see, these restaurants are sure to provide a memorable dining experience for your next meal.

If you know of any other restaurants with unique surroundings, be sure to let us know in the comments below so we can do a follow-up post!

1. Under the Sea

Ithaa Restaurant, Maldives

© 2012 Conrad Hotels & Resorts

Located 16 feet (4.87m) below sea level, Ithaa restaurant offers unparalleled 180° views of sea life while you dine. The restaurant is part of the Conrad Maldives Rangali Island resort and is truly one of the most interesting dining experiences in the world.

Ithaa, which means mother-of-pearl in Dhivehi, is a mostly acrylic structure with a 14-person capacity. The space is approximately 5m x 9m (16ft x 30ft) and was designed and constructed by M.J. Murphy. The restaurant officially opened on April 15, 2005. Entrance to Ithaa is by way of a spiral staircase in a thatched pavilion at the end of a jetty. For more information please visit the official site at: Conrad Maldives Rangali Island Resort

2. Inside a Cave Overlooking the Water

Grotta Palazzese – Polignano a Mare, Italy

In the town of Polignano a Mare in southern Italy (province of Bari, Apulia) lies a most intriguing dining experience: the Grotta Palazzese. Open only during the summer months, the restaurant is set up inside a vaulted limestone cave that looks out toward the sea. It is part of the Grotta Palazzese hotel located above.

HOTEL RISTORANTE GROTTA PALAZZESE

Via Narciso, 59 – Polignano a Mare (Bari) Puglia

Tel. +39 (0)80 4240677 – Fax +39 (0)80 4240767

Email: grottapalazzese@grottapalazzese.it

Website: http://www.grottapalazzese.it

3. Surrounded by Snow

SnowRestaurant – Kemi, Finland

SnowRestaurant is located at the LumiLinna Snowcastle in Kemi, Finland. The restaurant is rebuilt annually and typically opens around the end of January for lunch and dinner (daily). The temperature in the restaurant is always around -5 degrees Celsius. Reservations must be made in advance and you can see the menu for the upcoming season here.

4. Dinner in the Sky

Locations Around the World

Dinner in the Sky is hosted at a table suspended at a height of 50 metres by a team of professionals and can be installed anywhere in the world as long as there is a surface of approximately 500 m² that can be secured. It is available for a session of 8 hours and can accommodate up to 22 people around the table at every session with three staff in the middle (chef, waiter, entertainer). To date, there have been Dinner in the Sky events in 40 different countries. Visit the official site for more information.

5. Surrounded by Mountains

Berggasthaus Aescher – Wasserauen, Switzerland

Berggasthaus Aescher is located 1,454 meters (4,770 ft) above sea level and is open from 1 May to 31 October annually. The cliffside restaurant rewards hikers with remarkable views of the surrounding Alpstein area in Wasserauen, Switzerland. The vertical cliff face it clings to is over 100 meters high. There is also a cable car that can take you back down to the valley. For more information visit MySwitzerland.com and TripAdvisor. You can also find the restaurant’s contact information below for any inquiries.

Beny & Claudia Knechtle-Wyss

CH-9057 Weissbad AI

T: 071 799 11 42 or 071 799 14 49

E-Mail: info@aescher-ai.ch

6. Restaurant on a Rock

The Rock – Zanzibar, Tanzania

The Rock is located on the south-east of Zanzibar island on the Michamwi Pingwe peninsula (about 45 minutes from Stone Town). The restaurant is situated on a rock not far from shore. Patrons can reach the restaurant by foot during low tide and by boat at high tide (offered by the restaurant). Visit the official site for more information: www.therockrestaurantzanzibar.com/

7. Inside a Thousand-Year-Old Cistern

Sarnic Restaurant – Istanbul, Turkey

A cistern is a waterproof receptacle often built to catch and store rainwater. In Istanbul, Turkey, this thousand-year-old cistern features high domes supported on six stone piers. It sits at the top of the hill, at the end of the row of small hotels in the narrow street immediately behind St. Sophia, and is now the site of a unique restaurant called Sarnic.

Sarnic Restaurant

Address: Sogukcesme Sokagi 34220 Sultanahmet / Istanbul

Phone: 0212 512 42 91 – 513 36 60

8. Open Air Rooftop Dining

Sirocco @ Lebua Hotel – Bangkok, Thailand

Sirocco, an award-winning open-air rooftop restaurant, is located on the 63rd floor of Lebua Hotel in Bangkok, Thailand. Patrons are graced with incredible views of the bustling city below as they dine on authentic Mediterranean fare. For reservations and additional information, visit the official site.

9. Tree-Top Dining with Zip-Line Service

Treepod @ Soneva Kiri – Koh Kood, Thailand

Guests at the Soneva Kiri resort on Koh Kood, Thailand, are invited to experience Treepod dining. Guests are seated in a bamboo pod and then hoisted up into the tropical foliage of Koh Kood’s ancient rainforest. You can gaze out across the boulder-covered shoreline while your food and drink are delivered via the zip-line acrobatics of your personal waiter.

For more information, visit the official site.

10. Wet Feet and Waterfalls

Labasin Waterfall Restaurant – Philippines

The Labasin Waterfall Restaurant is located at the Villa Escudero Plantations and Resort in the Philippines. The restaurant is open only for lunch; guests choose from a buffet-style menu and eat at bamboo dining tables. It’s not a natural waterfall but a spillway from the Labasin Dam. The dam’s reservoir has been turned into a lake where visitors can go rafting on traditional bamboo rafts, and they can explore Filipino culture at shows, facilities and additional restaurants on the sprawling property. Visit Villa Escudero’s official site for more information.

11. A Reclaimed Bank and a VIP Vault Room

The Bedford – Chicago, United States

Located in Chicago’s Wicker Park, The Bedford reclaimed a historic private bank from 1926 and transformed the space into a supper club. The 8,000-square-foot (743 sq. m) lower-level interior features terracotta, marble and terrazzo, all reclaimed and restored from the original bank. Inside the VIP vault room, the walls are lined with more than 6,000 working copper lock boxes.

The Bedford – Wicker Park, Chicago

1612 West Division Street (At Ashland Avenue)

Chicago, IL 60622

773-235-8800 Phone

12. World’s Highest Restaurant

At.mosphere @ Burj Khalifa – Dubai

Sprawling over 1,030 square meters on level 122 of the world’s tallest building (at a height of over 442 meters/1,450 ft), and two levels below the At the Top observatory deck, At.mosphere is the holder of the Guinness World Record for the ‘highest restaurant from ground level’.

For reservations and more information call +971 4 888 3444 or visit the official website.

13. Eating in the Outback

Sounds of Silence @ Ayers Rock Resort – Australia

At the Sounds of Silence experience you can dine under the canopy of the desert night. The experience begins with canapés and chilled sparkling wine served on a viewing platform overlooking the Uluru-Kata Tjuta National Park. A bush tucker inspired buffet that incorporates native bush ingredients such as crocodile, kangaroo, barramundi and quandong is offered.

Afterwards, a resident star talker decodes the southern night sky, locating the Southern Cross, the signs of the zodiac, the Milky Way, as well as visible planets and galaxies. Visit the official site for more information.

14. Luxury Ferris Wheel Dining

Sky Dining @ The Singapore Flyer – Singapore

At a height of 165 meters (541 ft), Singapore Flyer is the world’s largest giant observation wheel, offering panoramic views of Marina Bay’s skyline with a glimpse of neighbouring Malaysia and Indonesia. The Full Butler Sky Dining experience comes with exclusive Gueridon service, a 4-course menu, wine pairing options, personalized butler service and skyline views in the comfort of a spacious capsule.

For more information visit the official site.

15. Spiritual Dining

Pitcher & Piano – Nottingham, England

Set within a deconsecrated church in the Lace Market, Pitcher & Piano Nottingham features stained glass windows, church candles, vintage bric-a-brac and cosy Chesterfields. An all-day food menu with standard pub fare is offered for breakfast, lunch and dinner.

Pitcher & Piano Nottingham

The Unitarian Church, High Pavement, Nottingham, NG1 1HN

0115 958 6081

nottingham@pitcherandpiano.com

16. Historic Dining

Ristorante Da Pancrazio – Rome, Italy

Ristorante Da Pancrazio is built over the ruins of the Theater of Pompey, the well-known 1st-century B.C. theater where Julius Caesar was murdered. The restaurant has become famous for its unique halls, where you dine on traditional Roman cuisine.

Ristorante Da Pancrazio

piazza del Biscione 92

00186 Roma

17. Precarious Dining Procurement

Fanweng Restaurant – Yichang, China

Fanweng Restaurant is located in the Happy Valley of the Xiling Gorge, near the city of Yichang in China’s Hubei province. Carved into a cliff, the restaurant floor hangs several hundred feet above ground, offering views of the Yangtze River below. Only a portion of the dining area is set over the cliff; the rest of the space is set inside a natural cave. For more information visit this blog, or call the restaurant directly at 0717-8862179.

18. Monumental Dining

58 Tour Eiffel – Paris, France

58 Tour Eiffel is an award-winning restaurant located on the first level of the iconic Eiffel Tower. Diners enjoy a stunning view of the Champ de Mars, Les Invalides, Montparnasse Tower, Montmartre and surrounding cityscape of Paris. Fine French cuisine is prepared by chef Alain Soulard. Visit the official site for more information.

If you enjoyed this post, the Sifter

highly recommends:

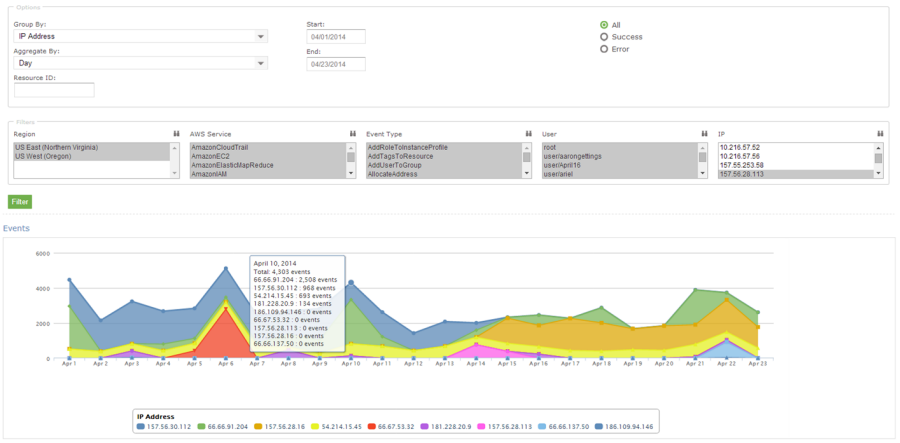

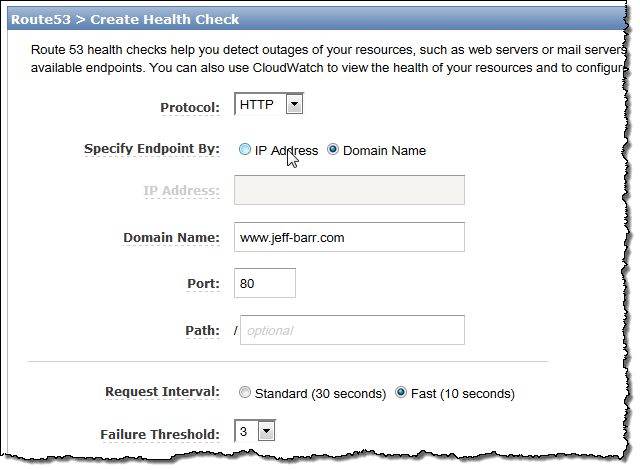

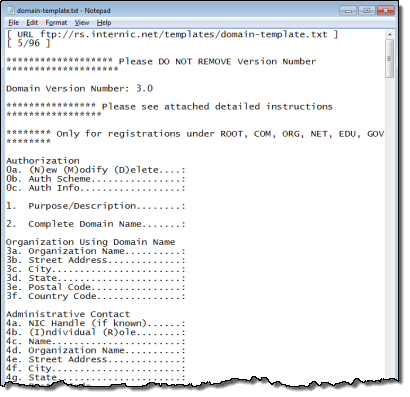

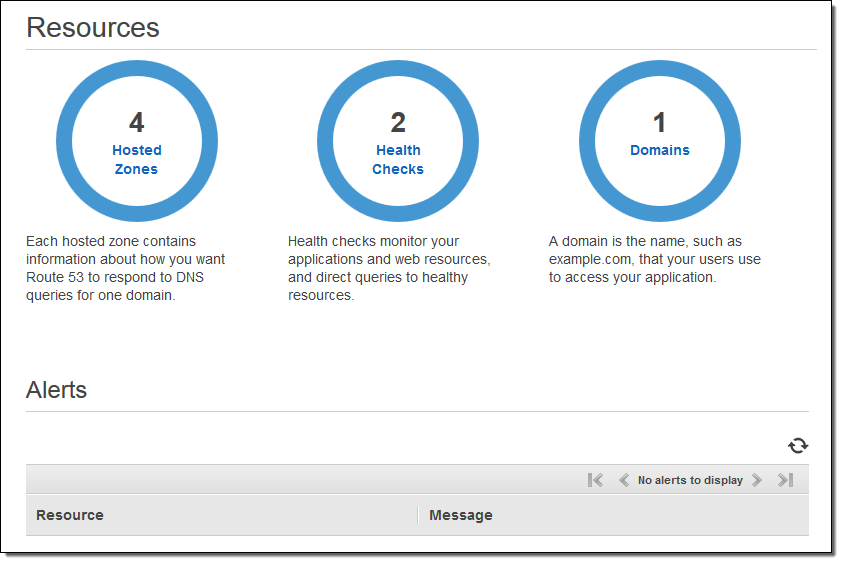

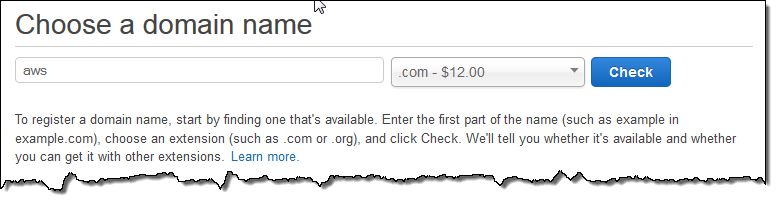

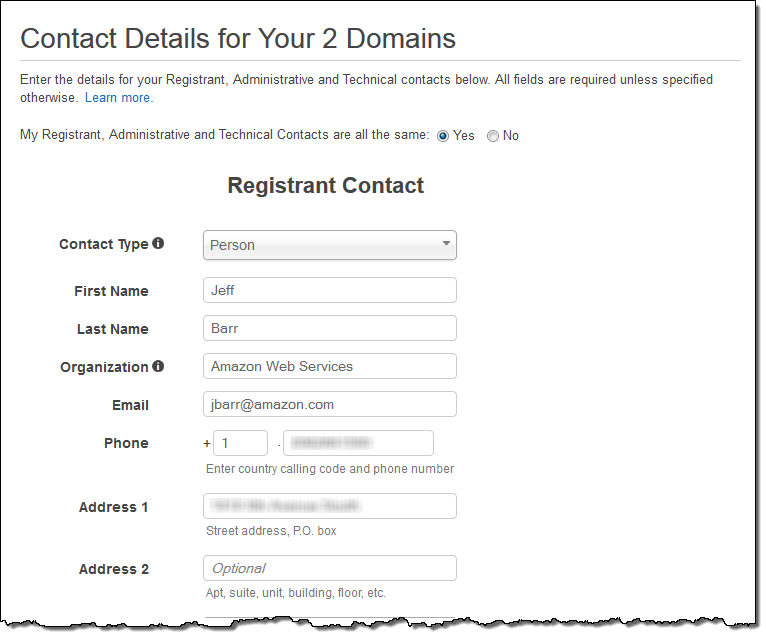

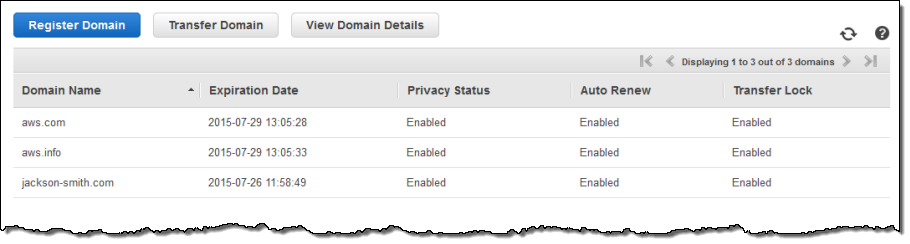

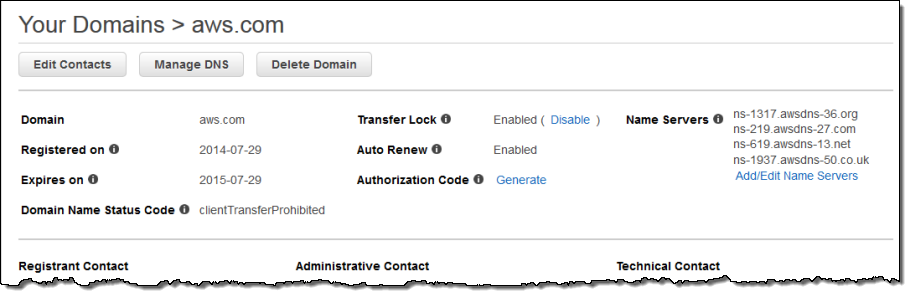

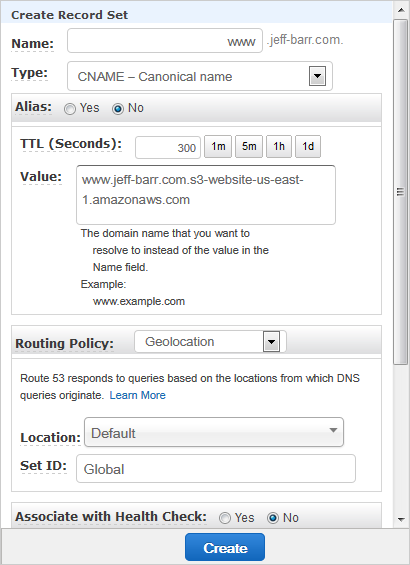

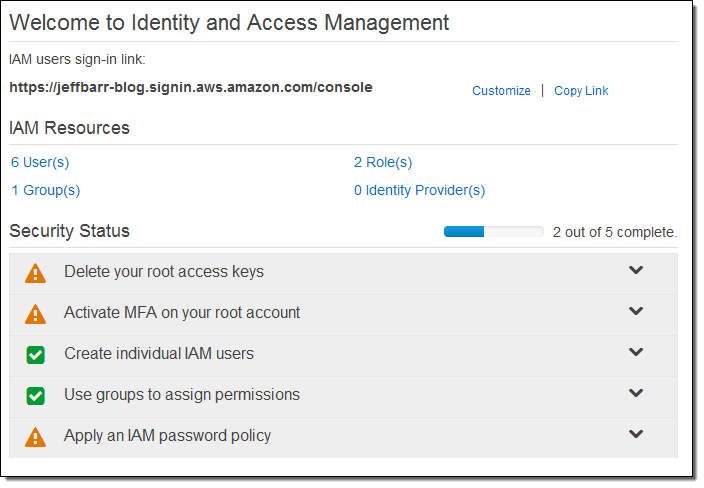

I registered my first domain name in 1995! Back then, just about every aspect of domain

management and registration was difficult, expensive, and manual. After you found a good name,

you had to convince one or two of your tech-savvy friends to host your DNS records, register

the name using an email-based form, and then bring your site online. With the advent of web-based

registration and multiple registrars the process became a lot smoother and more economical.

I registered my first domain name in 1995! Back then, just about every aspect of domain

management and registration was difficult, expensive, and manual. After you found a good name,

you had to convince one or two of your tech-savvy friends to host your DNS records, register

the name using an email-based form, and then bring your site online. With the advent of web-based

registration and multiple registrars the process became a lot smoother and more economical.

If you, like many other

If you, like many other

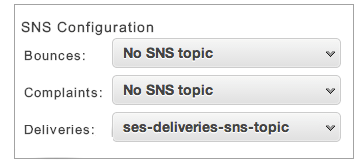

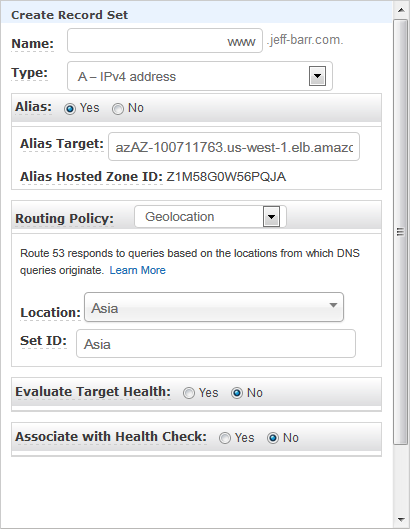

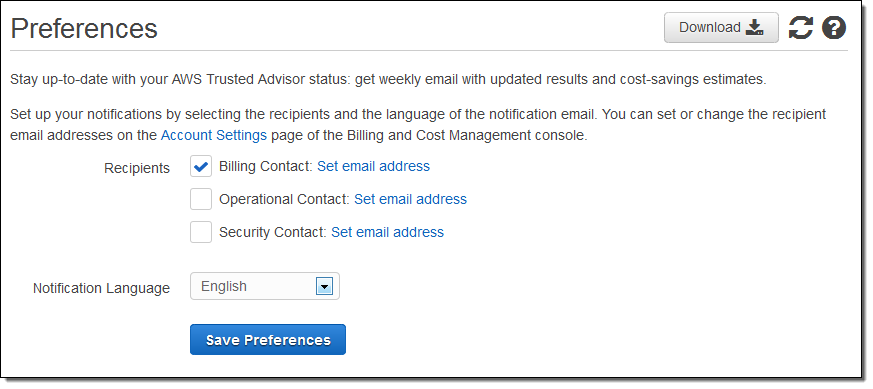

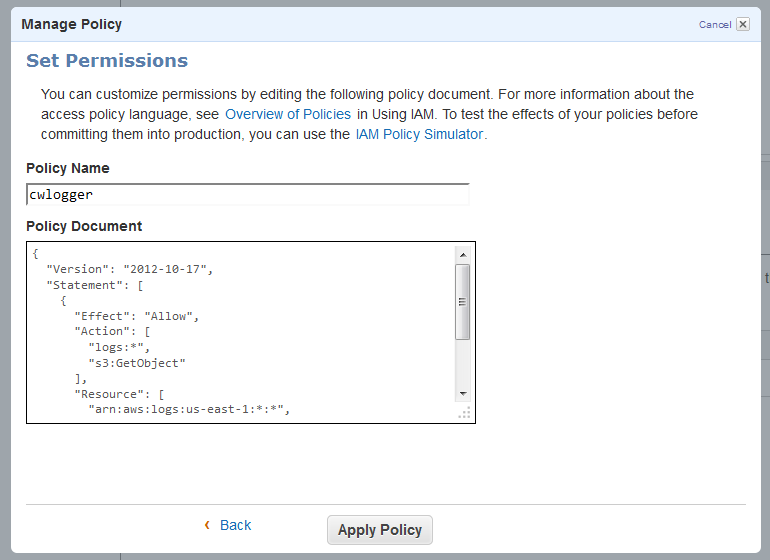

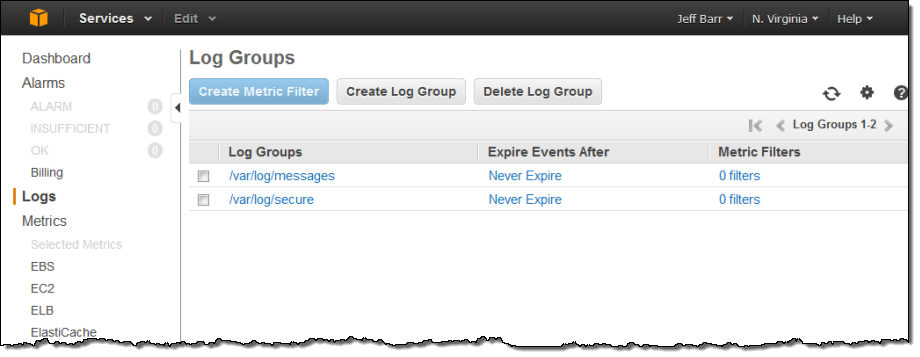

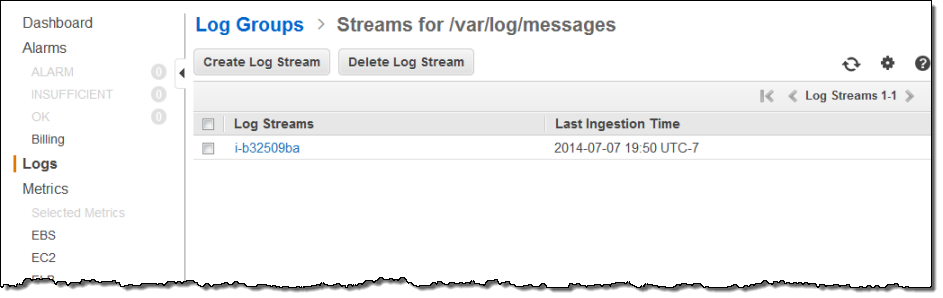

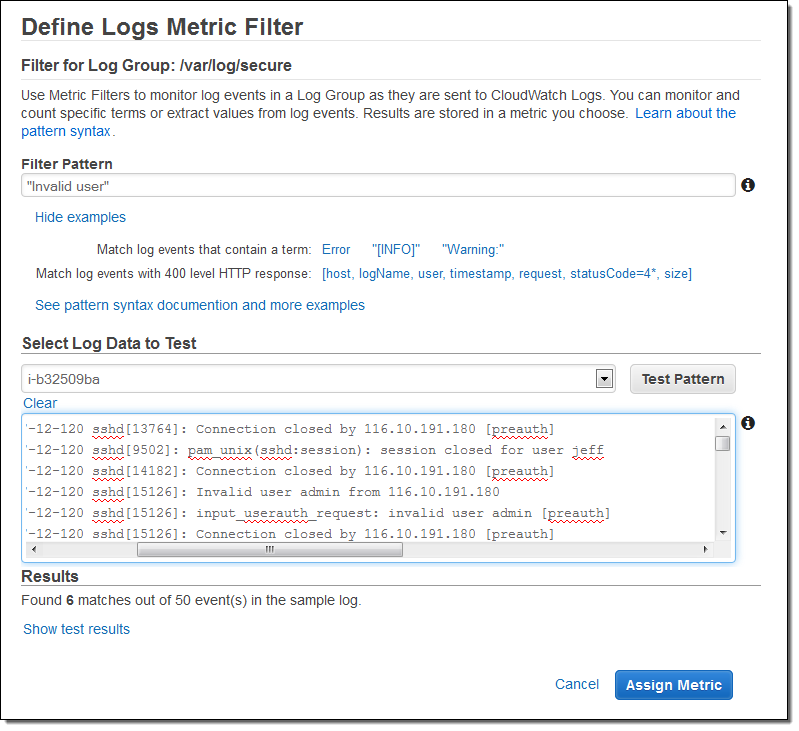

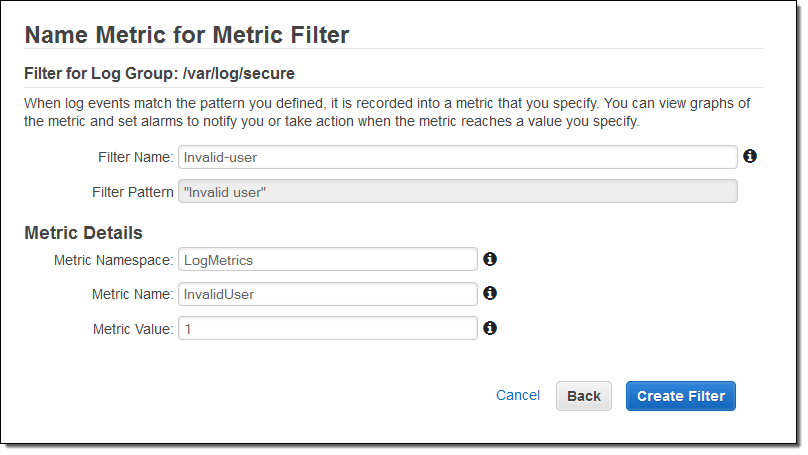

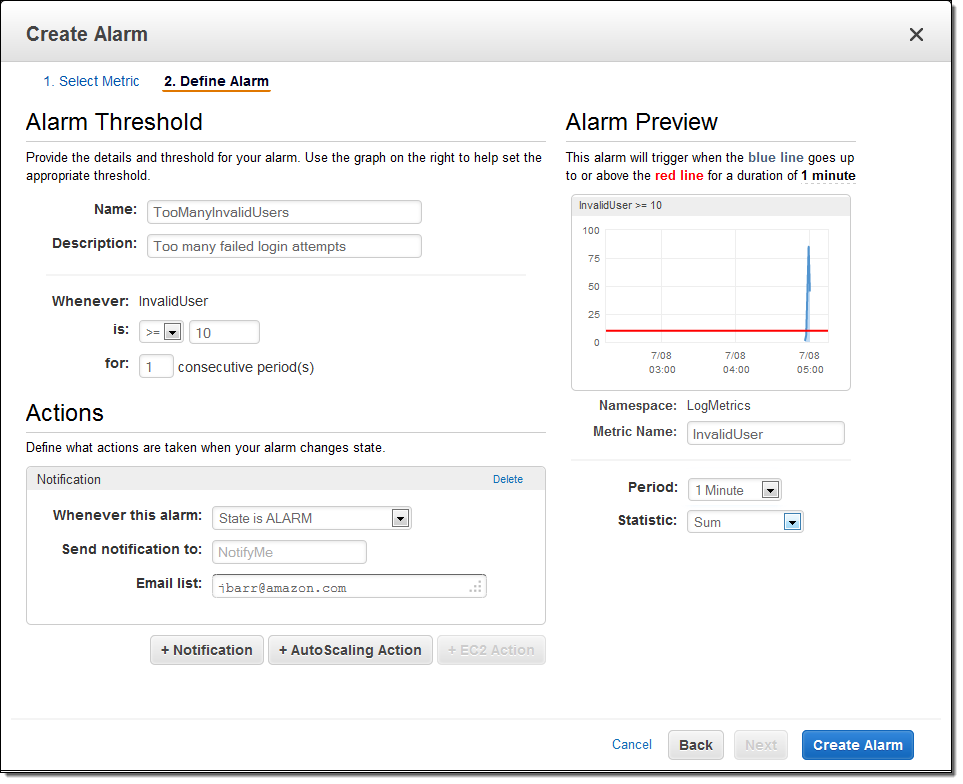

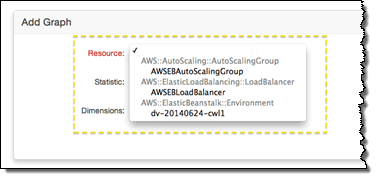

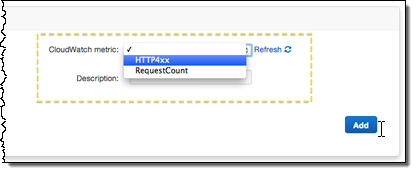

You can use this feature in many different ways. Here are a few ideas to get you started:

You can use this feature in many different ways. Here are a few ideas to get you started:

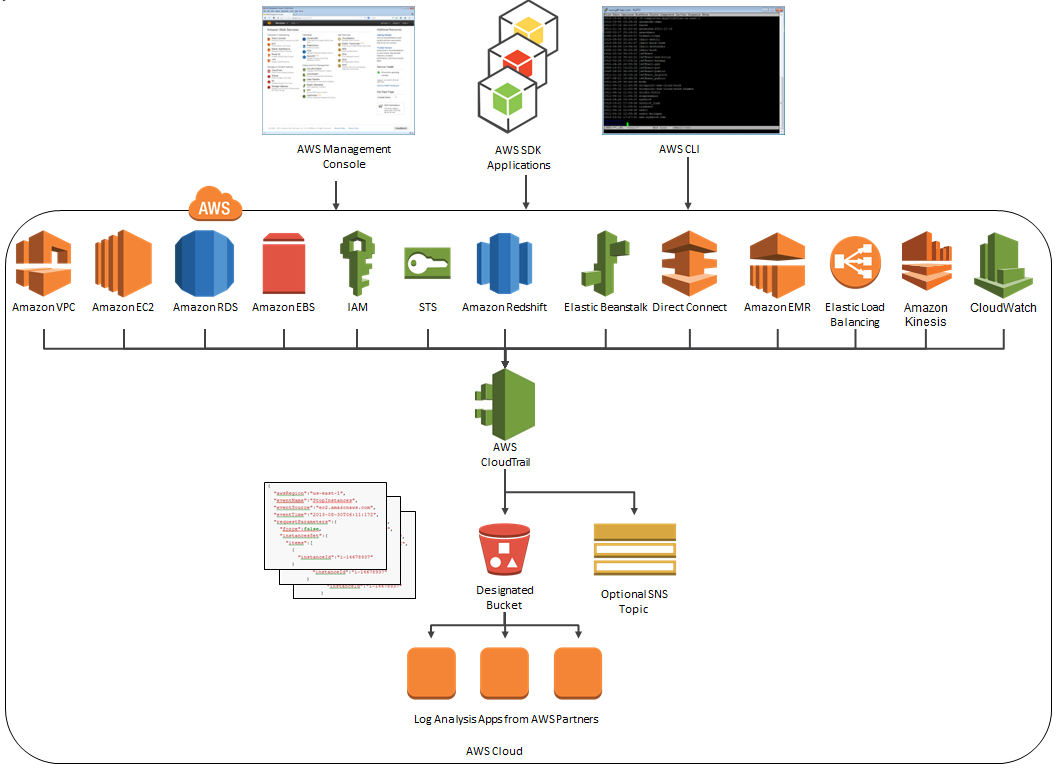

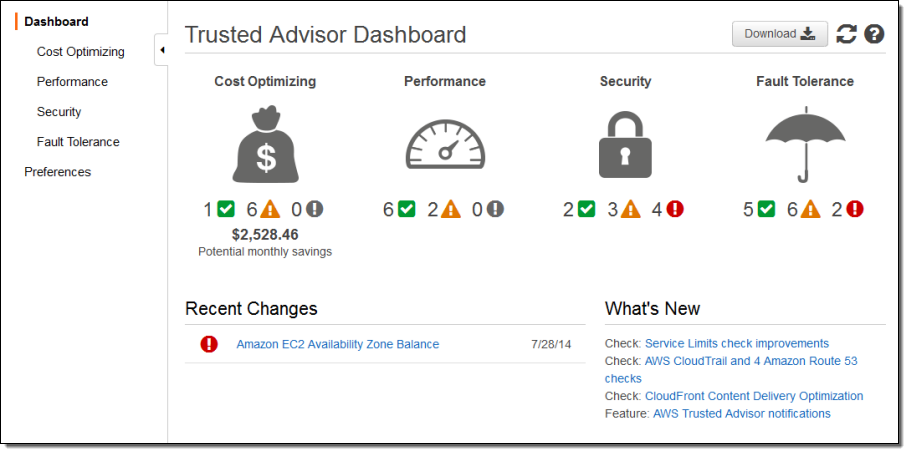

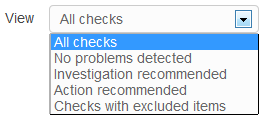

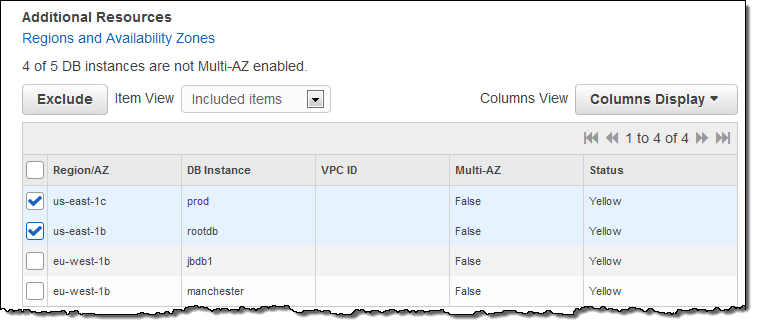

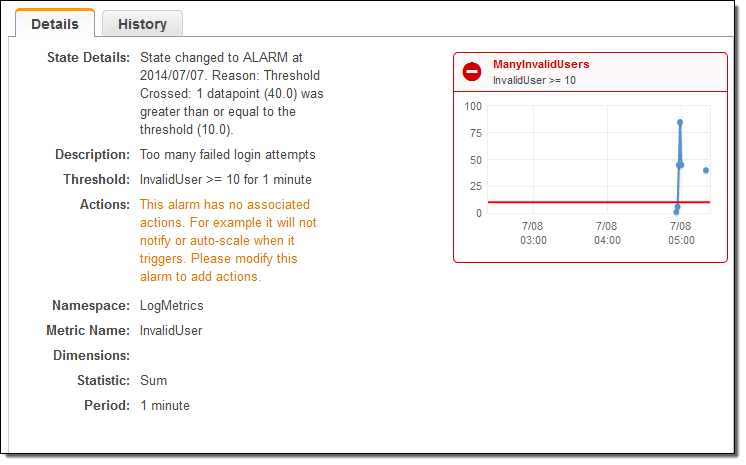

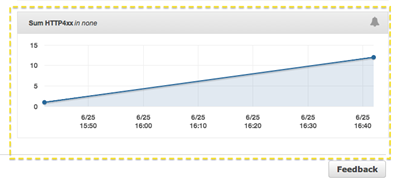

Service Limits Check - This check inspects your position with regard to

the most important service limits for each AWS product. It alerts you when you are using

more than 80% of your allocation resources such as EC2 instances and

Service Limits Check - This check inspects your position with regard to

the most important service limits for each AWS product. It alerts you when you are using

more than 80% of your allocation resources such as EC2 instances and

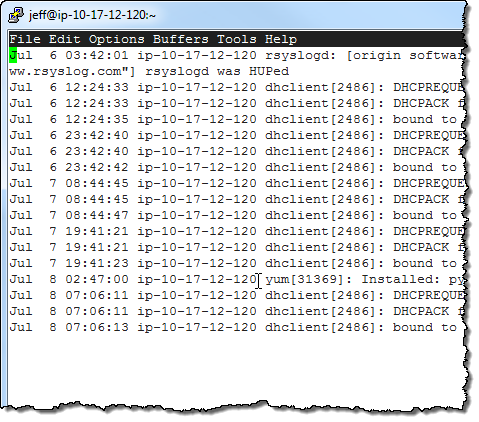

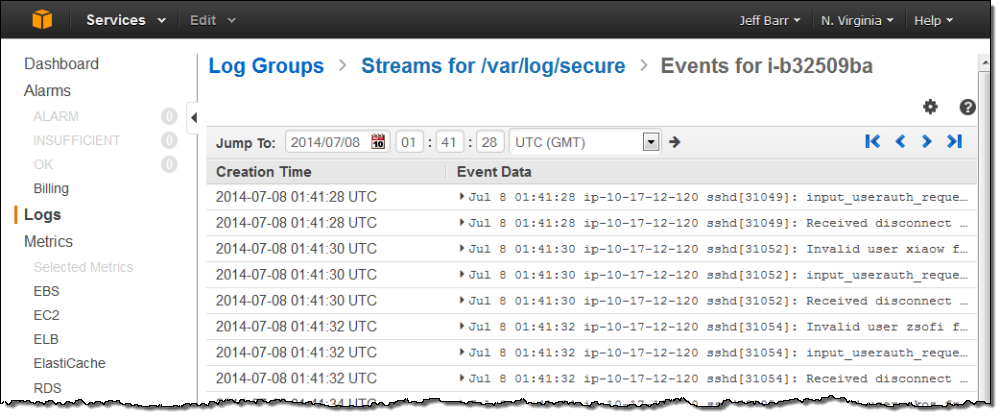

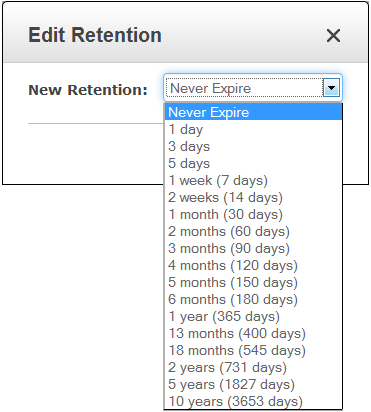

Log Event - A Log Event is an

activity recorded by the application or resource

being monitored. It contains a timestamp and raw message data in UTF-8 form.

Log Event - A Log Event is an

activity recorded by the application or resource

being monitored. It contains a timestamp and raw message data in UTF-8 form.

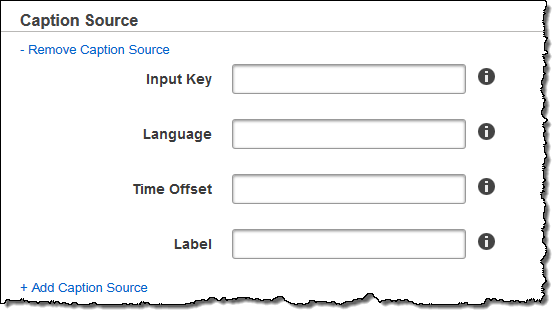

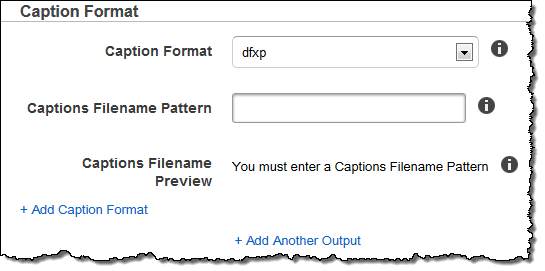

I learned quite a bit about captions and captioning as I prepared to write this blog

post. The term "closed captioning" refers to captions that must be activated by the

viewer. The alternative ("open," "burned-in", or "hard-coded" captions) are visible

to everyone. Since the public debut of closed captioning in 1973, a number of different

standards have emerged. Originally, caption information for TV shows was concealed within

a portion of the broadcast signal (also known as

I learned quite a bit about captions and captioning as I prepared to write this blog

post. The term "closed captioning" refers to captions that must be activated by the

viewer. The alternative ("open," "burned-in", or "hard-coded" captions) are visible

to everyone. Since the public debut of closed captioning in 1973, a number of different

standards have emerged. Originally, caption information for TV shows was concealed within

a portion of the broadcast signal (also known as