Lex Lavalier

Shared posts

Greenish look from DCP

I recently extracted a ProRes file from a DCP with easyDCP Player+ to burn a Blu-ray for a preview. As usual, I set the color space conversion from XYZ to Rec.709, since I'm working in a Rec.709 environment.

Unfortunately, the production shipped only the DCP, and I don't know what the original movie looks like. Compared to a trailer, however, the DCP has a greenish tint/mood when viewed in easyDCP Player+ on a Rec.709 monitor or when extracted to ProRes. It doesn't look wrong... It looks...
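For reference, here is a rough Python sketch of what an XYZ to Rec.709 conversion involves. It is not easyDCP Player+'s actual pipeline. It assumes 12-bit X'Y'Z' code values with the 2.6 decoding gamma and the 52.37 cd/m² normalization used in DCP encoding, treats 48 cd/m² as Rec.709 peak white, uses the standard D65 XYZ-to-Rec.709 matrix with no chromatic adaptation, and applies the BT.709 transfer function. The function names are my own.

```python
# Sketch: DCP X'Y'Z' code values -> Rec.709 code values.
# Assumptions (not easyDCP's pipeline): 12-bit X'Y'Z', gamma 2.6,
# 52.37 cd/m^2 normalization, 48 cd/m^2 reference white -> 1.0,
# D65 XYZ-to-Rec.709 matrix, NO chromatic adaptation, BT.709 OETF.

# XYZ (D65-referenced) -> linear Rec.709 RGB
XYZ_TO_REC709 = (
    (3.2404542, -1.5371385, -0.4985314),
    (-0.9692660, 1.8760108, 0.0415560),
    (0.0556434, -0.2040259, 1.0572252),
)


def decode_dcp_xyz(code_values):
    """12-bit X'Y'Z' code values -> XYZ relative to a 48 cd/m^2 white."""
    return tuple(52.37 * (cv / 4095) ** 2.6 / 48.0 for cv in code_values)


def xyz_to_linear_rec709(xyz):
    """Apply the 3x3 matrix; results may fall outside [0, 1]."""
    return tuple(sum(m * c for m, c in zip(row, xyz)) for row in XYZ_TO_REC709)


def bt709_oetf(v):
    """BT.709 opto-electronic transfer function, clipping to [0, 1] first."""
    v = min(max(v, 0.0), 1.0)
    return 4.5 * v if v < 0.018 else 1.099 * v ** 0.45 - 0.099


def dcp_to_rec709(code_values):
    """Full chain: decode DCP code values, convert, encode for Rec.709."""
    rgb = xyz_to_linear_rec709(decode_dcp_xyz(code_values))
    return tuple(bt709_oetf(c) for c in rgb)


def xy_to_xyz(x, y, Y=1.0):
    """Chromaticity (x, y) plus luminance Y -> XYZ."""
    return (x * Y / y, Y, (1 - x - y) * Y / y)


if __name__ == "__main__":
    d65 = xy_to_xyz(0.3127, 0.3290)
    dci = xy_to_xyz(0.314, 0.351)
    print("D65 white ->", [round(c, 3) for c in xyz_to_linear_rec709(d65)])
    print("DCI white ->", [round(c, 3) for c in xyz_to_linear_rec709(dci)])
```

With no chromatic adaptation, a D65 white comes out as roughly equal R, G and B, while a white at the DCI calibration point (x ≈ 0.314, y ≈ 0.351) comes out with more green than red or blue. That is one possible contributor to a greenish cast when a cinema master is viewed in a Rec.709 environment, not a diagnosis of this particular DCP.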

At the Bench: Fujinon MK50-135 Lens

The other day I had the chance to get a peek at the new Fujinon MK50-135 zoom lens. This lens complements the recently announced MK18-55 (watch Matt Porwoll’s video on the 18-55, part of his Behind the Lens documentary zoom series). The 50-135 shares many of the attributes of its wide-angle companion: it is parfocal, meaning that it holds focus through its entire zoom range, and it maintains a T2.9 stop from wide to tight. Macro focusing (down to 2′ 9″) and a back focus adjustment are available as well. Watch my video above to get a closer look at the MK50-135 as well as some test footage.

[FS: SUPPORT] O'Connor CFF-1 follow focus with a case and an extension for 19mm rods. $1200

IMG_5592-1.jpg

(94.5 KB)

The Business of Bots

I recently attended the AI Business Summit at the Business Design Centre in London. The operative word here was ‘business’, which for some reason I had failed to heed, so I felt somewhat out of place in my rainbow jelly shoes amongst a sea of navy suits and patent court heels.

But this isn’t a story about fashion (or indeed, my lack of it). It’s about AI. Well, really, it’s about chatbots. And maybe a bit of video-analysis, because it turns out that that’s pretty much what AI means in business at the moment. I don’t mean that disparagingly; it’s all a necessary part of moving technology through the inevitable hype cycle. But AI is currently a loaded term, and I want to manage your expectations.

As one of the speakers pointed out, a great way to start a conversation at the event would be to ask people ‘what do you mean by AI?’ because there was by no means a straight answer. Machine Learning, Data Science, Deep Neural Networks, and plenty of other terms were used roughly interchangeably with AI, which is fine for most practical purposes, but it might also be useful to try and draw some distinctions.

Firstly, I consider ‘AI’ to be an outcome, not an approach. Early research in the field used symbolic, rule-based methods, and is sometimes known as ‘Good Old Fashioned AI’ (GOFAI) to distinguish it from modern Machine Learning approaches. At the business summit, there were some products that (as far as I could tell) weren’t implemented with the kind of Machine Learning algorithms that we currently tend to associate with AI, but this shouldn’t necessarily rule them out of hopping on the AI bandwagon. If a system passes the Turing test (that is, a human evaluator can’t tell whether they are interacting with a machine or a human) or whatever other measure of synthetic intelligence we decide to apply, the implementation is kind of irrelevant.

Machine Learning, as I’ve just given away, is one route to AI. Deep Learning is a Machine Learning technique which uses Neural Networks with multiple hidden layers, and is probably the most popular approach to AI at the moment. There are also other types of Machine Learning that are less commonly used for AI but are instead used to achieve other goals. Data Science is the discipline of extracting insights from data - this may or may not be used as part of a Machine Learning approach, and may or may not be used to achieve the goal of AI.
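To make ‘multiple hidden layers’ concrete, here is a minimal Python sketch of a forward pass through a small fully connected network with two hidden layers. The layer sizes, the ReLU activation, and the random, untrained weights are arbitrary choices for illustration; the training step (the actual ‘learning’) is not shown.

```python
import random


def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias, per unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Non-linearity applied after each hidden layer."""
    return [max(0.0, v) for v in values]


def init_layer(n_in, n_out, rng):
    """Random (untrained) weights and zero biases for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def make_network(layer_sizes, seed=0):
    """Build one layer per consecutive pair of sizes, e.g. [4, 8, 8, 3]."""
    rng = random.Random(seed)
    return [init_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]


def forward(network, x):
    """Hidden layers use ReLU; the final output layer is left linear."""
    *hidden, output = network
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    weights, biases = output
    return dense(x, weights, biases)


if __name__ == "__main__":
    # 4 inputs -> two hidden layers of 8 units -> 3 outputs
    net = make_network([4, 8, 8, 3])
    print(forward(net, [0.5, -1.0, 0.25, 2.0]))
```

A ‘shallow’ network would have a single hidden layer; stacking more of them is what puts the ‘deep’ in Deep Learning.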

Confused yet? This is why I try not to be too pedantic, except when it really matters.

I came to realise that ‘AI’ was often being used to mean ‘simulating an interaction with a human’. This was most evident in the case of chatbots, which were primarily being touted as an alternative to humans in customer service. Interestingly, one of the speakers pointed out that in their trials, customers were pretty satisfied with the experience as long as they knew they were interacting with a bot and not a human. Is this because users value the transparency? Or is it because they subconsciously moderate their language to make it easier for the bot? This might mean that they perceive the bot as performing more effectively than it really is.

Data-cleaning services seem to be a lucrative area. A number of companies were offering to take messy, unstructured data and turn it into something useful for a neural network. It seems this process is still done manually by thousands of distributed workers. Often, the data was limited to images or 30-second video clips, so sadly it doesn’t yet look like a feasible way to process the data goldmine that is the BBC’s vast archive of video.

One thing that is of interest to the BBC is the emerging set of cloud-based video-analysis platforms from the likes of Google and Microsoft. Convolutional Neural Networks have been making waves in the academic literature for a few years due to their better-than-human ability to process images, and it now seems that they are making their way into actual commercial products. BBC R&D is doing its own work on video-analysis for media and broadcasting applications, and is actively looking at the commercial and open-source offerings to see how well they match BBC use cases.

A common theme was emphasising the ‘human in the loop’; AI working in harmony with humans to augment, rather than replace them. It was unclear whether this was to avoid the ‘robots are taking our jobs!’ narrative, or because the products themselves aren’t yet mature enough to work without any human intervention.

With conventional programming, instructions are given explicitly step-by-step, so you know exactly why things happen. Machine Learning, on the other hand, is less transparent - it’s not always obvious how and why the system comes to certain conclusions. Another common theme of the summit was the need to see inside this so-called ‘black box’. High-profile cases of algorithmic bias have clearly made businesses nervous about trusting the opaque decision-making of AI systems, and a number of companies showcased products and services that claim to offer some insight. This is interesting from an ethical perspective, and I’m glad to see concerns about bias and discrimination being taken seriously. However, these products were usually marketed as a way of helping companies justify controversial decisions and avoid legal complications. Perhaps this is where the BBC’s public-service remit could really make a difference - could we be a leader in the ethical application of AI?

BBC R&D - Editorial Algorithms

Manchester Futurists - AI Overview

Which One(s) do you like?

Which do you like?

You can choose multiple, and heck, you might even see the validity in them all.

Just curious and thanks.

A friend passed away, how hard would it be to make an accurate model of them for use in VR?

Perhaps an unusual request. If it's not too much of a task, where would be the best place to start? I'm happy to spend considerable time on this. It doesn't need to be animated, just detailed enough to be convincing.

Or, if anyone can assist, I'd be much obliged.

Docker for Windows Beta announced

I'm continuing to learn about Docker and how it works in a developer's workflow (and DevOps, and Production, etc., as you move downstream). This week Docker released a beta of their new Docker for Mac and Docker for Windows. They've included OS-native apps that run in the background (the "tray") and make Docker easier to set up and use. Previously I needed to disable Hyper-V and use VirtualBox, but this new Docker app sets up Hyper-V automatically, which fits more easily into my workflow, especially if I'm using other Hyper-V features, like the free Visual Studio Android Emulator.

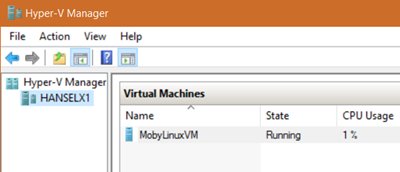

I signed up at http://beta.docker.com. Once Docker is installed and you run the app with Hyper-V enabled, it automatically creates the Linux "mobylinux" VM you need in Hyper-V, sets it up, and starts it.

After Docker for Windows (Beta) is installed, you just run PowerShell or CMD and type "docker", and it's already set up with the right PATH and environment variables and just works. It gets set up on your local machine as http://docker, but the networking goes through Hyper-V, as it should.

The best part is that Docker for Windows supports "volume mounting", which means the container can see your code on your local device (they have a "wormhole" between the container and the host), so you can use "edit and refresh" type scenarios for development. In fact, Docker Tools for Visual Studio uses this feature - there are more details on this "Edit and Refresh" support in Visual Studio here.
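As a rough illustration of volume mounting, this Python sketch builds a `docker run` command that bind-mounts a local folder into a container with `-v host:container`. The image name `python:3`, the `/app` path, and `app.py` are placeholders, and I'm assuming Docker for Windows accepts a Windows host path in the `-v` argument. Because the container reads the files straight from the host folder, edits you make locally show up inside the container without rebuilding the image, which is what makes "edit and refresh" possible.

```python
import subprocess
from pathlib import Path


def docker_run_with_mount(image, host_dir, container_dir="/app", command=None):
    """Build a `docker run` command that bind-mounts host_dir into the container."""
    args = [
        "docker", "run", "--rm",                               # remove container on exit
        "-v", f"{Path(host_dir).resolve()}:{container_dir}",   # host:container bind mount
        "-w", container_dir,                                   # start in the mounted folder
        image,
    ]
    if command:
        args.extend(command)
    return args


def run(args):
    """Actually start the container (requires a working Docker install)."""
    subprocess.run(args, check=True)


if __name__ == "__main__":
    # Placeholder image and script name, for illustration only.
    cmd = docker_run_with_mount("python:3", ".", command=["python", "app.py"])
    print(" ".join(cmd))
```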

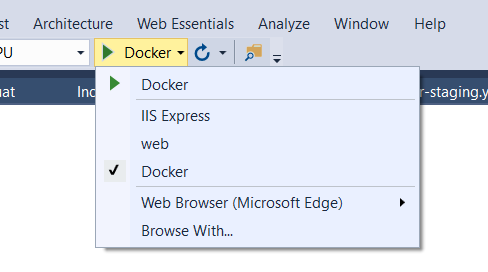

The Docker Tools for Visual Studio can be downloaded at http://aka.ms/dockertoolsforvs. It adds a lot of nice integration like this:

This makes the combination of Docker for Windows + Docker Tools for Visual Studio pretty sweet. As far as the VS Tools for Docker go, support for Windows containers is coming soon, but for now, here's what Version 0.10 of these tools supports with a Linux container:

- Docker assets for Debug and Release configurations are added to the project

- A PowerShell script is added to the project to coordinate the build and compose of containers, enabling you to extend them while keeping the Visual Studio designer experiences

- F5 in Debug config launches the PowerShell script to build and run your docker-compose.debug.yml file, with Volume Mapping configured

- F5 in Release config launches the PowerShell script to build and run your docker-compose.release.yml file, with an image you can verify and push to your docker registry for deployment to other environments
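The generated script itself is PowerShell; this is a hypothetical Python stand-in showing the general shape of what F5 kicks off, assuming it boils down to `docker-compose -f <file> build` followed by `docker-compose -f <file> up -d` against the Debug or Release compose file named above. The real script may well do more than this.

```python
import subprocess

# Compose file per Visual Studio build configuration (file names from the post).
COMPOSE_FILES = {
    "Debug": "docker-compose.debug.yml",
    "Release": "docker-compose.release.yml",
}


def compose_commands(configuration):
    """Return the docker-compose invocations for a build configuration."""
    compose_file = COMPOSE_FILES[configuration]  # KeyError for unknown configs
    base = ["docker-compose", "-f", compose_file]
    return [
        base + ["build"],      # build the image(s) defined in the compose file
        base + ["up", "-d"],   # start the container(s) in the background
    ]


def run(configuration):
    """Execute the commands in order; stop on the first failure."""
    for args in compose_commands(configuration):
        subprocess.run(args, check=True)


if __name__ == "__main__":
    for args in compose_commands("Debug"):
        print(" ".join(args))
```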

You can read more about how Docker on Windows works at Steve Lasker's Blog, and also watch his video on Ch9 about Visual Studio's support for Docker. And again, sign up for the Docker Beta at http://beta.docker.com.

© 2016 Scott Hanselman. All rights reserved.