This is a guest post from Mike Rousos.

Introduction

ASP.NET Core Identity automatically supports cookie authentication. It is also straightforward to support authentication by external providers using the Google, Facebook, or Twitter ASP.NET Core authentication packages. One authentication scenario that requires a little bit more work, though, is to authenticate via bearer tokens. I recently worked with a customer who was interested in using JWT bearer tokens for authentication in mobile apps that worked with an ASP.NET Core back-end. Because some of their customers don’t have reliable internet connections, they also wanted to be able to validate the tokens without having to communicate with the issuing server.

In this article, I offer a quick look at how to issue JWT bearer tokens in ASP.NET Core. In subsequent posts, I’ll show how those same tokens can be used for authentication and authorization (even without access to the authentication server or the identity data store).

Offline Token Validation Considerations

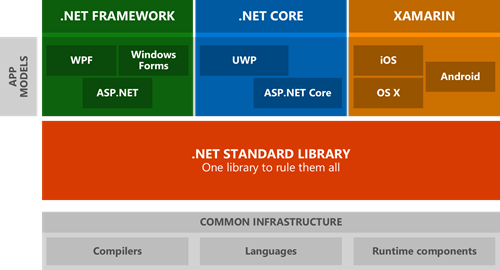

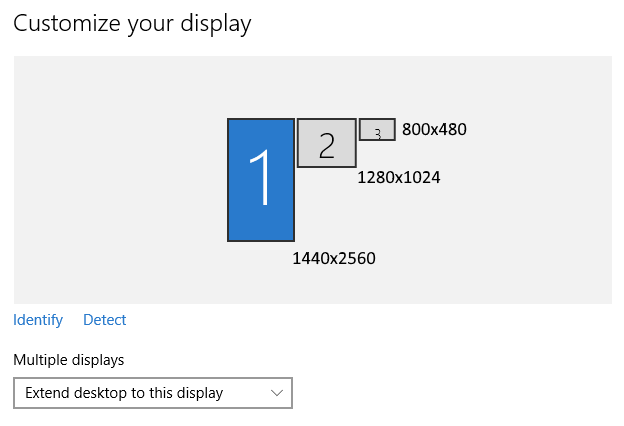

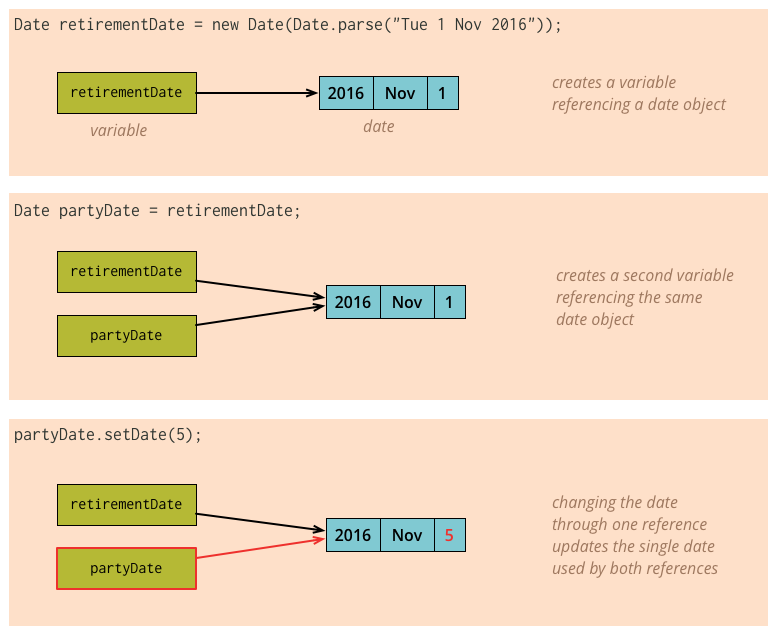

First, here’s a quick diagram of the desired architecture.

The customer has a local server with business information which will need to be accessed and updated periodically by client devices. Rather than store user names and hashed passwords locally, the customer prefers to use a common authentication micro-service which is hosted in Azure and used in many scenarios beyond just this specific one. This particular scenario is interesting, though, because the connection between the customer’s location (where the server and clients reside) and the internet is not reliable. Therefore, they would like a user to be able to authenticate at some point in the morning when the connection is up and have a token that will be valid throughout that user’s work shift. The local server, therefore, needs to be able to validate the token without access to the Azure authentication service.

This local validation is easily accomplished with JWT tokens. A JWT token typically contains a body with information about the authenticated user (subject identifier, claims, etc.), the issuer of the token, the audience (recipient) the token is intended for, and an expiration time (after which the token is invalid). The token also contains a cryptographic signature as detailed in RFC 7518. This signature is generated with a private key known only to the authentication server, but it can be validated by anyone in possession of the corresponding public key. One JWT validation workflow (used by AD and some other identity providers) involves requesting the public key from the issuing server and using it to validate the token’s signature. In our offline scenario, though, the local server can be provisioned with the necessary public key ahead of time. The drawback of this architecture is that the local server must be given an updated public key whenever the private key used by the cloud service changes, but in exchange for this inconvenience, no internet connection is needed at the time the JWT tokens are validated.
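The offline check boils down to an ordinary public-key signature verification. The sketch below uses only the .NET base class library (not OpenIddict or any JWT library) to sign a header.body string with RS256 (RSASSA-PKCS1-v1_5 with SHA-256, one of the RFC 7518 algorithms) and then verify it with an RSA object that holds only the public key. The key is generated in memory and the claims are illustrative; the sketch shows the signature primitive only and does not parse or validate any claims.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Base64url-encodes bytes as JWTs require: '-' and '_' instead of '+' and '/', no '=' padding.
static string Base64UrlEncode(byte[] bytes) =>
    Convert.ToBase64String(bytes).TrimEnd('=').Replace('+', '-').Replace('/', '_');

// Reverses Base64UrlEncode by restoring the standard alphabet and the padding.
static byte[] Base64UrlDecode(string text)
{
    string base64 = text.Replace('-', '+').Replace('_', '/');
    base64 += new string('=', (4 - base64.Length % 4) % 4);
    return Convert.FromBase64String(base64);
}

// Verifies an RS256 signature over "<header>.<body>" using only a public key.
static bool VerifyOffline(string jwt, RSAParameters publicKey)
{
    string[] parts = jwt.Split('.');
    if (parts.Length != 3) return false;
    using RSA verifier = RSA.Create();
    verifier.ImportParameters(publicKey);
    return verifier.VerifyData(
        Encoding.ASCII.GetBytes(parts[0] + "." + parts[1]),
        Base64UrlDecode(parts[2]),
        HashAlgorithmName.SHA256,
        RSASignaturePadding.Pkcs1);
}

// Issuer side (the cloud authentication service): owns the private key.
using RSA issuerKey = RSA.Create(2048);
string signingInput =
    Base64UrlEncode(Encoding.UTF8.GetBytes("{\"alg\":\"RS256\",\"typ\":\"JWT\"}")) + "." +
    Base64UrlEncode(Encoding.UTF8.GetBytes("{\"sub\":\"demo\",\"office\":\"300\"}"));
byte[] signature = issuerKey.SignData(
    Encoding.ASCII.GetBytes(signingInput), HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);
string token = signingInput + "." + Base64UrlEncode(signature);

// Local server side: provisioned ahead of time with the public half of the key only.
RSAParameters publicOnly = issuerKey.ExportParameters(includePrivateParameters: false);
Console.WriteLine(VerifyOffline(token, publicOnly)); // True
```

Because VerifyOffline never sees the private key, changing even one character of the body (for example, the office value) makes verification fail, which is what lets the local server trust tokens without contacting the issuer.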

Issuing Authentication Tokens

As mentioned previously, Microsoft.AspNetCore.* libraries don’t have support for issuing JWT tokens. There are, however, several other good options available.

First, Azure Active Directory Authentication provides identity and authentication as a service. Using Azure AD is a quick way to get identity in an ASP.NET Core app without having to write authentication server code.

Alternatively, if a developer wishes to write the authentication service themselves, there are a couple of third-party libraries available to handle this scenario. IdentityServer4 is a flexible OpenID Connect framework for ASP.NET Core. Another good option is OpenIddict. Like IdentityServer4, OpenIddict offers OpenID Connect server functionality for ASP.NET Core. Both OpenIddict and IdentityServer4 work well with ASP.NET Identity 3.

For this demo, I will use OpenIddict. The IdentityServer4 documentation has excellent coverage of how to accomplish the same tasks with IdentityServer4, and I encourage you to take a look at it, too.

A Disclaimer

Please note that both IdentityServer4 and OpenIddict are currently pre-release packages. OpenIddict is released as a beta and IdentityServer4 as an RC, so both are still in development and subject to change!

Set Up the User Store

In this scenario, we will use a common ASP.NET Identity 3-based user store, accessed via Entity Framework Core. Because this is a common scenario, setting it up is as easy as creating a new ASP.NET Core web app from the new project templates and selecting ‘individual user accounts’ for the authentication mode.

This template will provide a default ApplicationUser type and Entity Framework Core connections to manage users. The connection string in appsettings.json can be modified to point at the database where you want this data stored.

Because JWT tokens can encapsulate claims, it’s interesting to include some claims for users other than just the defaults of user name or email address. For demo purposes, let’s include two different types of claims.

Adding Roles

ASP.NET Identity 3 includes the concept of roles. To take advantage of this, we need to create some roles which users can be assigned to. In a real application, this would likely be done by managing roles through a web interface. For this short sample, though, I just seeded the database with sample roles by adding this code to Startup.cs:

```csharp
// Initialize some test roles. In the real world, these would be set up explicitly by a role manager
private string[] roles = new[] { "User", "Manager", "Administrator" };

private async Task InitializeRoles(RoleManager<IdentityRole> roleManager)
{
    foreach (var role in roles)
    {
        if (!await roleManager.RoleExistsAsync(role))
        {
            var newRole = new IdentityRole(role);
            await roleManager.CreateAsync(newRole);

            // In the real world, there might be claims associated with roles, for example:
            // await roleManager.AddClaimAsync(newRole, new Claim(...));
        }
    }
}
```

I then call InitializeRoles from my app’s Startup.Configure method. The RoleManager<IdentityRole> needed as a parameter to InitializeRoles can be resolved through dependency injection (just add a RoleManager<IdentityRole> parameter to your Startup.Configure method).

Because roles are already part of ASP.NET Identity, there’s no need to modify models or our database schema.

Adding Custom Claims to the Data Model

It’s also possible to encode completely custom claims in JWT tokens. To demonstrate that, I added an extra property to my ApplicationUser type. For sample purposes, I added an integer called OfficeNumber:

```csharp
public virtual int OfficeNumber { get; set; }
```

This is not something that would likely be a useful claim in the real world, but I added it in my sample specifically because it’s not the sort of claim that’s already handled by any of the frameworks we’re using.

I also updated the view models and controllers associated with creating a new user to allow specifying role and office number when creating new users.

I added the following properties to the RegisterViewModel type:

```csharp
[Display(Name = "Is administrator")]
public bool IsAdministrator { get; set; }

[Display(Name = "Is manager")]
public bool IsManager { get; set; }

[Required]
[Display(Name = "Office Number")]
public int OfficeNumber { get; set; }
```

I also added Razor markup to the registration view to gather this information:

```cshtml
<div class="form-group">
    <label asp-for="OfficeNumber" class="col-md-2 control-label"></label>
    <div class="col-md-10">
        <input asp-for="OfficeNumber" class="form-control" />
        <span asp-validation-for="OfficeNumber" class="text-danger"></span>
    </div>
</div>

<div class="form-group">
    <label asp-for="IsManager" class="col-md-2 control-label"></label>
    <div class="col-md-10">
        <input asp-for="IsManager" class="form-control" />
        <span asp-validation-for="IsManager" class="text-danger"></span>
    </div>
</div>

<div class="form-group">
    <label asp-for="IsAdministrator" class="col-md-2 control-label"></label>
    <div class="col-md-10">
        <input asp-for="IsAdministrator" class="form-control" />
        <span asp-validation-for="IsAdministrator" class="text-danger"></span>
    </div>
</div>
```

Finally, I updated the AccountController.Register action to set role and office number information when creating users in the database. Notice that we add a custom claim for the office number. This takes advantage of ASP.NET Identity’s custom claim tracking. Be aware that ASP.NET Identity doesn’t store claim value types, so even in cases where the claim is always an integer (as in this example), it will be stored and returned as a string. Later in this post, I explain how non-string claims can be included in JWT tokens.

```csharp
var user = new ApplicationUser { UserName = model.Email, Email = model.Email, OfficeNumber = model.OfficeNumber };
var result = await _userManager.CreateAsync(user, model.Password);
if (result.Succeeded)
{
    if (model.IsAdministrator)
    {
        await _userManager.AddToRoleAsync(user, "Administrator");
    }
    else if (model.IsManager)
    {
        await _userManager.AddToRoleAsync(user, "Manager");
    }

    var officeClaim = new Claim("office", user.OfficeNumber.ToString(), ClaimValueTypes.Integer);
    await _userManager.AddClaimAsync(user, officeClaim);

    // ...
}
```

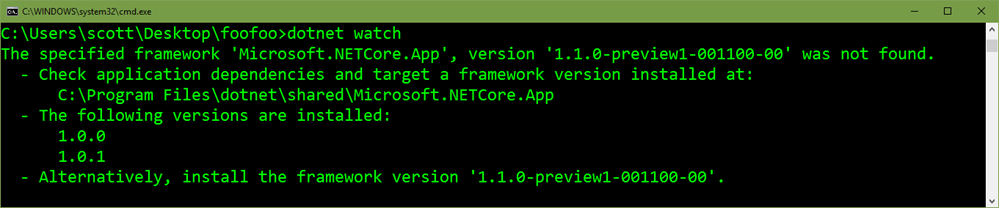

Updating the Database Schema

After making these changes, we can use Entity Framework’s migration tooling to easily update the database to match (the only change to the database should be to add an OfficeNumber column to the users table). To migrate, simply run dotnet ef migrations add OfficeNumberMigration and dotnet ef database update from the command line.

At this point, the authentication server should allow registering new users. If you’re following along in code, go ahead and add some sample users at this point.

Issuing Tokens with OpenIddict

The OpenIddict package is still pre-release, so it’s not yet available on NuGet.org. Instead, the package is available on the aspnet-contrib MyGet feed.

To restore it, we need to add that feed to our solution’s NuGet.config. If you don’t yet have a NuGet.config file in your solution, you can add one that looks like this:

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <packageSources>
    <add key="nuget.org" value="https://api.nuget.org/v3/index.json" />
    <add key="aspnet-contrib" value="https://www.myget.org/F/aspnet-contrib/api/v3/index.json" />
  </packageSources>
</configuration>
```

Once that’s done, add a reference to "OpenIddict": "1.0.0-beta1-*" and "OpenIddict.Mvc": "1.0.0-beta1-*" in your project.json file’s dependencies section. OpenIddict.Mvc contains some helpful extensions that allow OpenIddict to automatically bind OpenID Connect requests to MVC action parameters.

There are only a few steps needed to enable OpenIddict endpoints.

Use OpenIddict Model Types

The first change is to update your ApplicationDbContext model type to inherit from OpenIddictDbContext instead of IdentityDbContext.

After making this change, migrate the database to update it, as well (dotnet ef migrations add OpenIddictMigration and dotnet ef database update).

Configure OpenIddict

Next, it’s necessary to register OpenIddict types in our ConfigureServices method in our Startup type. This can be done with a call like this:

```csharp
services.AddOpenIddict<ApplicationDbContext>()
    .AddMvcBinders()
    .EnableTokenEndpoint("/connect/token")
    .UseJsonWebTokens()
    .AllowPasswordFlow()
    .AddSigningCertificate(jwtSigningCert);
```

The specific methods called on the OpenIddictBuilder here are important to understand.

- AddMvcBinders. This method registers custom model binders that populate OpenIdConnectRequest parameters in MVC actions with OpenID Connect requests read from the incoming HTTP request’s context. This isn’t required, since OpenID Connect requests can be read manually, but it’s a helpful convenience.
- EnableTokenEndpoint. This method specifies the endpoint that will serve authentication tokens. The endpoint shown above (/connect/token) is a common default endpoint for token issuance. OpenIddict needs to know the location of this endpoint so that it can include it in responses when a client queries for information about how to connect (using the .well-known/openid-configuration endpoint, which OpenIddict provides automatically). OpenIddict also validates requests to this endpoint to be sure they are valid OpenID Connect requests. If a request is invalid (if it’s missing mandatory parameters like grant_type, for example), OpenIddict rejects the request before it even reaches the app’s controllers.
- UseJsonWebTokens. This instructs OpenIddict to use JWT as the format for the bearer tokens it produces.
- AllowPasswordFlow. This enables the password grant type when signing in a user. The different authorization flows are documented in the OAuth 2.0 specification (RFC 6749) and the OpenID Connect specifications. In the password flow, the client is authorized based on user credentials (user name and password) that it supplies directly. This is the flow that best matches our sample scenario.
- AddSigningCertificate. This API specifies the certificate that should be used to sign JWT tokens. In my sample code, I produce the jwtSigningCert argument from a pfx file on disk (`var jwtSigningCert = new X509Certificate2(certLocation, certPassword);`). In a real-world scenario, the certificate would more likely be loaded from the authentication server’s certificate store, in which case a different overload of AddSigningCertificate would be used (one which takes the cert’s thumbprint and store name/location).
  - If you need a self-signed certificate for testing purposes, one can be produced with the makecert and pvk2pfx command-line tools (which should be on the path in a Visual Studio Developer Command Prompt):
    - `makecert -n "CN=AuthSample" -a sha256 -sv AuthSample.pvk -r AuthSample.cer` creates a new self-signed test certificate with its public key in AuthSample.cer and its private key in AuthSample.pvk.
    - `pvk2pfx -pvk AuthSample.pvk -spc AuthSample.cer -pfx AuthSample.pfx -pi [A password]` combines the pvk and cer files into a single pfx file containing both the public and private keys for the certificate (protected by a password).
  - This pfx file is what needs to be loaded by OpenIddict (since the private key is necessary to sign tokens). Note that this private key (and any files containing it) must be kept secure.
- DisableHttpsRequirement. The code snippet above doesn’t include a call to DisableHttpsRequirement(), but such a call may be useful during testing to disable the requirement that authentication calls be made over HTTPS. Of course, this should never be used outside of testing, as it would allow authentication tokens to be observed in transit and, therefore, enable malicious parties to impersonate legitimate users.
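makecert and pvk2pfx ship with the Windows SDK, and makecert has since been deprecated. As an alternative that the original sample did not use, .NET Core 2.0 and later can create a self-signed test certificate in code with CertificateRequest. This sketch creates one, exports it as a password-protected PFX byte array (File.WriteAllBytes could save it to disk), and reloads it the same way the sample loads its pfx file. The helper name CreateTestSigningPfx, the subject, the validity period, and the password are all placeholders.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

// Hypothetical helper: creates a self-signed RSA certificate for local testing only.
static byte[] CreateTestSigningPfx(string subject, string password)
{
    using RSA key = RSA.Create(2048);
    var request = new CertificateRequest(
        subject, key, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);

    // Mark the key as usable for digital signatures, which is how a token signer uses it.
    request.CertificateExtensions.Add(
        new X509KeyUsageExtension(X509KeyUsageFlags.DigitalSignature, critical: true));

    using X509Certificate2 cert = request.CreateSelfSigned(
        DateTimeOffset.UtcNow.AddDays(-1), DateTimeOffset.UtcNow.AddYears(1));

    // Like the pvk2pfx output, the PFX holds both the certificate and its private key,
    // protected by the password.
    return cert.Export(X509ContentType.Pfx, password);
}

byte[] pfx = CreateTestSigningPfx("CN=AuthSample", "placeholder-password");

// Mirrors the sample's new X509Certificate2(certLocation, certPassword), but from bytes.
using var jwtSigningCert = new X509Certificate2(pfx, "placeholder-password");
Console.WriteLine(jwtSigningCert.Subject);       // CN=AuthSample
Console.WriteLine(jwtSigningCert.HasPrivateKey); // True
```

HasPrivateKey matters here because, as noted above, OpenIddict needs the private key to sign tokens; a certificate loaded from the .cer file alone would not be enough.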

Enable OpenIddict Endpoints

Once AddOpenIddict has been used to configure OpenIddict services, add a call to app.UseOpenIddict() in Startup.Configure (after the existing call to UseIdentity) to actually enable OpenIddict in the app’s HTTP request processing pipeline.

Implementing the Connect/Token Endpoint

The final step necessary to enable the authentication server is to implement the connect/token endpoint. The EnableTokenEndpoint call made during OpenIddict configuration indicates where the token-issuing endpoint will be (and allows OpenIddict to validate incoming OIDC requests), but the endpoint still needs to be implemented.

OpenIddict’s owner, Kévin Chalet, gives a good example of how to implement a token endpoint supporting a password flow in this sample. I’ve restated the gist of how to create a simple token endpoint here.

First, create a new controller called ConnectController and give it a Token POST action. Of course, the specific names are not important, but it is important that the route matches the one given to EnableTokenEndpoint.

Give the action method an OpenIdConnectRequest parameter. Because we are using the OpenIddict MVC binder, this parameter will be supplied by OpenIddict. Alternatively (without using the OpenIddict model binder), the GetOpenIdConnectRequest extension method could be used to retrieve the OpenID Connect request.

Next, validate the contents of the request:

- Confirm that the grant type is as expected (password, for this authentication server).
- Confirm that the requested user exists (using the ASP.NET Identity UserManager).
- Confirm that the requested user is able to sign in (since ASP.NET Identity allows for accounts that are locked out or not yet confirmed).
- Confirm that the password provided is correct (again, using a UserManager).

If everything in the request checks out, then a ClaimsPrincipal can be created using SignInManager.CreateUserPrincipalAsync.

Roles and custom claims known to ASP.NET Identity will automatically be present in the ClaimsPrincipal. If any changes are needed to the claims, those can be made now.

One important claims update is attaching destinations to claims. A claim is only included in a token if that claim includes a destination for that token type. So, even though the ClaimsPrincipal will contain all ASP.NET Identity claims, they will only be included in tokens if they have appropriate destinations. This allows some claims to be kept private and others to be included only in particular token types (access or identity tokens) or if particular scopes are requested. For the purposes of this simple demo, I am including all claims for all token types.

This is also an opportunity to add additional custom claims to the ClaimsPrincipal. Typically, tracking the claims with ASP.NET Identity is sufficient but, as mentioned earlier, ASP.NET Identity does not remember claim value types. So, if it was important that the office claim be an integer (rather than a string), we could instead add it here based on data in the ApplicationUser object returned from the UserManager. Claims cannot be added to a ClaimsPrincipal directly, but the underlying identity can be retrieved and modified. For example, if the office claim was created here (instead of at user registration), it could be added like this:

```csharp
var identity = (ClaimsIdentity)principal.Identity;
var officeClaim = new Claim("office", user.OfficeNumber.ToString(), ClaimValueTypes.Integer);
officeClaim.SetDestinations(OpenIdConnectConstants.Destinations.AccessToken, OpenIdConnectConstants.Destinations.IdentityToken);
identity.AddClaim(officeClaim);
```
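The difference between a claim’s value and its value type can be seen with System.Security.Claims alone. In this sketch, ClaimValueTypes.Integer only labels the claim with an XML Schema type URI, while Claim.Value is always a string. The second claim imitates what comes back from a store that keeps only the claim type and value, which is consistent with the office claim appearing as the string "300" in the sample token later in this post. ReadIntegerClaim is a hypothetical helper.

```csharp
using System;
using System.Security.Claims;

// Hypothetical helper: parses a claim's string value; returns null if it isn't an integer.
static int? ReadIntegerClaim(Claim claim) =>
    int.TryParse(claim.Value, out int value) ? value : (int?)null;

// The third argument only records the value type (an XML Schema URI); the value is still a string.
var officeClaim = new Claim("office", 300.ToString(), ClaimValueTypes.Integer);
Console.WriteLine(officeClaim.Value);     // 300
Console.WriteLine(officeClaim.ValueType); // http://www.w3.org/2001/XMLSchema#integer

// Rebuilding the claim from only its type and value, as happens after a round trip through a
// store that doesn't keep value types, falls back to ClaimValueTypes.String.
var storedClaim = new Claim(officeClaim.Type, officeClaim.Value);
Console.WriteLine(storedClaim.ValueType == ClaimValueTypes.String); // True

// Either way, a consumer that needs a number has to parse the string itself.
Console.WriteLine(ReadIntegerClaim(storedClaim)); // 300
```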

Finally, an AuthenticationTicket can be created from the claims principal and used to sign in the user. The ticket object allows us to use helpful OpenID Connect extension methods to specify scopes and resources to be granted access. In my sample, I pass the requested scopes filtered by those the server is able to provide. For resources, I provide a hard-coded string indicating the resource this token should be used to access. In more complex scenarios, the requested resources (request.GetResources()) might be considered when determining which resource claims to include in the ticket. Note that resources (which map to the audience element of a JWT) are not mandatory according to the JWT specification, though many JWT consumers expect them.

Putting it all together, here’s a simple implementation of a connect/token endpoint:

```csharp
[HttpPost]
public async Task<IActionResult> Token(OpenIdConnectRequest request)
{
    if (!request.IsPasswordGrantType())
    {
        // Return bad request if the request is not for password grant type
        return BadRequest(new OpenIdConnectResponse
        {
            Error = OpenIdConnectConstants.Errors.UnsupportedGrantType,
            ErrorDescription = "The specified grant type is not supported."
        });
    }

    var user = await _userManager.FindByNameAsync(request.Username);
    if (user == null)
    {
        // Return bad request if the user doesn't exist
        return BadRequest(new OpenIdConnectResponse
        {
            Error = OpenIdConnectConstants.Errors.InvalidGrant,
            ErrorDescription = "Invalid username or password"
        });
    }

    // Check that the user can sign in and is not locked out.
    // If two-factor authentication is supported, it would also be appropriate to check that 2FA is enabled for the user
    if (!await _signInManager.CanSignInAsync(user) || (_userManager.SupportsUserLockout && await _userManager.IsLockedOutAsync(user)))
    {
        // Return bad request if the user can't sign in
        return BadRequest(new OpenIdConnectResponse
        {
            Error = OpenIdConnectConstants.Errors.InvalidGrant,
            ErrorDescription = "The specified user cannot sign in."
        });
    }

    if (!await _userManager.CheckPasswordAsync(user, request.Password))
    {
        // Return bad request if the password is invalid
        return BadRequest(new OpenIdConnectResponse
        {
            Error = OpenIdConnectConstants.Errors.InvalidGrant,
            ErrorDescription = "Invalid username or password"
        });
    }

    // The user is now validated, so reset lockout counts, if necessary
    if (_userManager.SupportsUserLockout)
    {
        await _userManager.ResetAccessFailedCountAsync(user);
    }

    // Create the principal
    var principal = await _signInManager.CreateUserPrincipalAsync(user);

    // Claims will not be associated with specific destinations by default, so we must indicate whether they should
    // be included or not in access and identity tokens.
    foreach (var claim in principal.Claims)
    {
        // For this sample, just include all claims in all token types.
        // In reality, claims' destinations would probably differ by token type and depending on the scopes requested.
        claim.SetDestinations(OpenIdConnectConstants.Destinations.AccessToken, OpenIdConnectConstants.Destinations.IdentityToken);
    }

    // Create a new authentication ticket for the user's principal
    var ticket = new AuthenticationTicket(
        principal,
        new AuthenticationProperties(),
        OpenIdConnectServerDefaults.AuthenticationScheme);

    // Include resources and scopes, as appropriate
    var scope = new[]
    {
        OpenIdConnectConstants.Scopes.OpenId,
        OpenIdConnectConstants.Scopes.Email,
        OpenIdConnectConstants.Scopes.Profile,
        OpenIdConnectConstants.Scopes.OfflineAccess,
        OpenIddictConstants.Scopes.Roles
    }.Intersect(request.GetScopes());

    ticket.SetResources("http://localhost:5000/");
    ticket.SetScopes(scope);

    // Sign in the user
    return SignIn(ticket.Principal, ticket.Properties, ticket.AuthenticationScheme);
}
```
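The scope filtering in the Intersect call above is ordinary LINQ, so it can be tried in isolation. This sketch assumes the OpenIdConnectConstants and OpenIddictConstants scope constants have their standard string values (openid, email, profile, offline_access, roles) and feeds it the scope parameter from the sample request later in this post. ParseScopes is a hypothetical helper.

```csharp
using System;
using System.Linq;

// Scopes this server is willing to grant (assumed string values of the constants used above).
string[] supportedScopes = { "openid", "email", "profile", "offline_access", "roles" };

// Hypothetical helper: the form-encoded scope parameter is a space-separated list.
static string[] ParseScopes(string scopeParameter) =>
    scopeParameter.Split(' ', StringSplitOptions.RemoveEmptyEntries);

// Intersect keeps scopes that are both supported and requested, in supportedScopes order.
string[] granted = supportedScopes
    .Intersect(ParseScopes("openid email name profile roles"))
    .ToArray();

Console.WriteLine(string.Join(" ", granted)); // openid email profile roles
```

Unsupported scopes such as name in that request are silently dropped here; returning an invalid_scope error (defined in RFC 6749) would be an alternative design.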

Testing the Authentication Server

At this point, our simple authentication server is done and should work to issue JWT bearer tokens for the users in our database.

OpenIddict implements OpenID Connect, so our sample should support a standard /.well-known/openid-configuration endpoint with information about how to authenticate with the server.

If you’ve followed along building the sample, launch the app and navigate to that endpoint. You should get a JSON response similar to this:

```json
{
  "issuer": "http://localhost:5000/",
  "jwks_uri": "http://localhost:5000/.well-known/jwks",
  "token_endpoint": "http://localhost:5000/connect/token",
  "code_challenge_methods_supported": [ "S256" ],
  "grant_types_supported": [ "password" ],
  "subject_types_supported": [ "public" ],
  "scopes_supported": [ "openid", "profile", "email", "phone", "roles" ],
  "id_token_signing_alg_values_supported": [ "RS256" ]
}
```

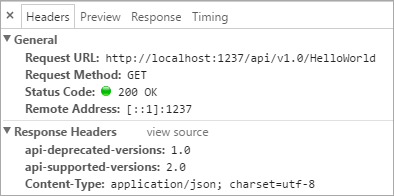

This gives clients information about our authentication server. Some of the interesting values include:

- The jwks_uri property is the endpoint that clients can use to retrieve public keys for validating token signatures from the issuer.
- token_endpoint gives the endpoint that should be used for authentication requests.
- The grant_types_supported property is a list of the grant types supported by the server. In the case of this sample, that is only password.
- scopes_supported is a list of the scopes that a client can request access to.

If you’d like to check that the correct certificate is being used, you can navigate to the jwks_uri endpoint to see the public keys used by the server. The x5t property of the response is the certificate thumbprint in the form defined by RFC 7517: the certificate’s SHA-1 digest, base64url-encoded. You can check this against the thumbprint of the certificate you expect to be using (keeping in mind that certificate tools usually display thumbprints in hexadecimal) to confirm that they’re the same.
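To compare the two directly, this sketch computes the x5t form from a certificate: base64url of GetCertHash(), which returns the same SHA-1 bytes that the Thumbprint property shows as hex. It creates a throwaway self-signed certificate only so that it runs on its own; in practice you would load the certificate you expect the server to use. ToX5t is a hypothetical helper name.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

// Hypothetical helper: the JWK "x5t" value is the base64url-encoded SHA-1 thumbprint (RFC 7517).
static string ToX5t(X509Certificate2 cert) =>
    Convert.ToBase64String(cert.GetCertHash()) // SHA-1 hash of the DER-encoded certificate
        .TrimEnd('=').Replace('+', '-').Replace('/', '_');

// A throwaway self-signed certificate, created only so this sketch runs on its own.
using RSA key = RSA.Create(2048);
var request = new CertificateRequest(
    "CN=AuthSample", key, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);
using X509Certificate2 cert = request.CreateSelfSigned(
    DateTimeOffset.UtcNow, DateTimeOffset.UtcNow.AddDays(1));

// The same 20 bytes shown two ways: hex (as certificate tools display it) and base64url (x5t).
Console.WriteLine($"Thumbprint (hex): {cert.Thumbprint}");
Console.WriteLine($"x5t (base64url):  {ToX5t(cert)}");
```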

Finally, we can test the authentication server by attempting to log in! This is done via a POST to the token_endpoint. You can use a tool like Postman to put together a test request. The address for the POST should be the token_endpoint URI, and the body should be x-www-form-urlencoded and include the following items:

- grant_type must be ‘password’ for this scenario.
- username should be the user name to log in with.
- password should be the user’s password.
- scope should be the (space-separated) scopes that access is desired for.
- resource is an optional parameter which can specify the resource the token is meant to access. Using this can help to make sure that a token issued to access one resource isn’t reused to access a different one.
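If you’d rather script the request than use Postman, HttpClient’s FormUrlEncodedContent produces an equivalent x-www-form-urlencoded body. In this sketch, CreatePasswordGrantContent is a hypothetical helper and the credentials are placeholders; the token endpoint URL in the closing comment is the one from the discovery document above.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;

// Hypothetical helper: builds the form body for a password-grant token request.
static FormUrlEncodedContent CreatePasswordGrantContent(
    string username, string password, IEnumerable<string> scopes, string? resource = null)
{
    var fields = new List<KeyValuePair<string, string>>
    {
        new("grant_type", "password"),
        new("username", username),
        new("password", password),
        new("scope", string.Join(" ", scopes)), // space-separated; spaces are encoded as '+'
    };
    if (resource != null)
    {
        fields.Add(new("resource", resource)); // optional: restricts the token's audience
    }
    return new FormUrlEncodedContent(fields);
}

using var content = CreatePasswordGrantContent(
    "Mike@Fabrikam.com", "placeholder", new[] { "openid", "email", "profile", "roles" });
string body = content.ReadAsStringAsync().GetAwaiter().GetResult();
Console.WriteLine(body);
// grant_type=password&username=Mike%40Fabrikam.com&password=placeholder&scope=openid+email+profile+roles

// Sending it would look like:
//   using var client = new HttpClient();
//   var response = await client.PostAsync("http://localhost:5000/connect/token", content);
```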

Here are the complete request and response from my test of the connect/token API:

Request

```http
POST /connect/token HTTP/1.1
Host: localhost:5000
Cache-Control: no-cache
Postman-Token: f1bb8681-a963-2282-bc94-03fdaea5da78
Content-Type: application/x-www-form-urlencoded

grant_type=password&username=Mike%40Fabrikam.com&password=MikePassword1!&scope=openid+email+name+profile+roles
```

Response

```json
{
  "token_type": "Bearer",
  "access_token": "eyJhbGciOiJSUzI1NiIsImtpZCI6IkU1N0RBRTRBMzU5NDhGODhBQTg2NThFQkExMUZFOUIxMkI5Qzk5NjIiLCJ0eXAiOiJKV1QifQ.eyJ1bmlxdWVfbmFtZSI6Ik1pa2VAQ29udG9zby5jb20iLCJBc3BOZXQuSWRlbnRpdHkuU2VjdXJpdHlTdGFtcCI6ImMzM2U4NzQ5LTEyODAtNGQ5OS05OTMxLTI1Mzk1MzY3NDEzMiIsInJvbGUiOiJBZG1pbmlzdHJhdG9yIiwib2ZmaWNlIjoiMzAwIiwianRpIjoiY2UwOWVlMGUtNWQxMi00NmUyLWJhZGUtMjUyYTZhMGY3YTBlIiwidXNhZ2UiOiJhY2Nlc3NfdG9rZW4iLCJzY29wZSI6WyJlbWFpbCIsInByb2ZpbGUiLCJyb2xlcyJdLCJzdWIiOiJjMDM1YmU5OS0yMjQ3LTQ3NjktOWRjZC01NGJkYWRlZWY2MDEiLCJhdWQiOiJodHRwOi8vbG9jYWxob3N0OjUwMDEvIiwibmJmIjoxNDc2OTk3MDI5LCJleHAiOjE0NzY5OTg4MjksImlhdCI6MTQ3Njk5NzAyOSwiaXNzIjoiaHR0cDovL2xvY2FsaG9zdDo1MDAwLyJ9.q-c6Ld1b7c77td8B-0LcppUbL4a8JvObiru4FDQWrJ_DZ4_zKn6_0ud7BSijj4CV3d3tseEM-3WHgxjjz0e8aa4Axm55y4Utf6kkjGjuYyen7bl9TpeObnG81ied9NFJTy5HGYW4ysq4DkB2IEOFu4031rcQsUonM1chtz14mr3wWHohCi7NJY0APVPnCoc6ae4bivqxcYxbXlTN4p6bfBQhr71kZzP0AU_BlGHJ1N8k4GpijHVz2lT-2ahYaVSvaWtqjlqLfM_8uphNH3V7T7smaMpomQvA6u-CTZNJOZKalx99GNL4JwGk13MlikdaMFXhcPiamhnKtfQEsoNauA",
  "expires_in": 1800
}
```

The access_token is the JWT. It isn’t encrypted; it is simply three base64url-encoded segments separated by periods ([header].[body].[signature]), where the header and body are JSON and the signature is binary. A number of websites offer JWT decoding functionality.

The access token above has these contents:

```
{
  "alg": "RS256",
  "kid": "E57DAE4A35948F88AA8658EBA11FE9B12B9C9962",
  "typ": "JWT"
}.
{
  "unique_name": "Mike@Contoso.com",
  "AspNet.Identity.SecurityStamp": "c33e8749-1280-4d99-9931-253953674132",
  "role": "Administrator",
  "office": "300",
  "jti": "ce09ee0e-5d12-46e2-bade-252a6a0f7a0e",
  "usage": "access_token",
  "scope": [
    "email",
    "profile",
    "roles"
  ],
  "sub": "c035be99-2247-4769-9dcd-54bdadeef601",
  "aud": "http://localhost:5001/",
  "nbf": 1476997029,
  "exp": 1476998829,
  "iat": 1476997029,
  "iss": "http://localhost:5000/"
}.
[signature]
```
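Decoding a token locally doesn’t require a website. This sketch splits a token on its periods and base64url-decodes the header and body. It deliberately ignores the signature, so it is suitable for inspecting tokens but never for trusting them. The input reuses the header segment of the sample access token, with a placeholder body (e30, the encoding of {}) and signature; DecodeSegment and InspectJwt are hypothetical helper names.

```csharp
using System;
using System.Text;

// Decodes one base64url segment (RFC 4648 section 5) of a JWT into text.
static string DecodeSegment(string segment)
{
    string base64 = segment.Replace('-', '+').Replace('_', '/');
    base64 += new string('=', (4 - base64.Length % 4) % 4); // restore the omitted padding
    return Encoding.UTF8.GetString(Convert.FromBase64String(base64));
}

// Splits a compact JWT into its header and body JSON. The signature (parts[2]) is binary
// and is NOT checked here, so the result must never be trusted on its own.
static (string Header, string Body) InspectJwt(string jwt)
{
    string[] parts = jwt.Split('.');
    if (parts.Length != 3)
        throw new FormatException("A signed JWT has exactly three period-separated parts.");
    return (DecodeSegment(parts[0]), DecodeSegment(parts[1]));
}

// Header segment copied from the sample access token; ".e30.sig" is a placeholder body and signature.
const string SampleHeader =
    "eyJhbGciOiJSUzI1NiIsImtpZCI6IkU1N0RBRTRBMzU5NDhGODhBQTg2NThFQkExMUZFOUIxMkI5Qzk5NjIiLCJ0eXAiOiJKV1QifQ";
var (header, body) = InspectJwt(SampleHeader + ".e30.sig");
Console.WriteLine(header);
Console.WriteLine(body); // {}
```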

Important fields in the token include:

- kid is the key ID that can be used to look up the key needed to validate the token’s signature.
- x5t, when present, is similarly the signing certificate’s thumbprint. It isn’t in this particular token’s header, but it is published with the keys at the jwks_uri endpoint.
- role and office capture our custom claims.
- exp is a timestamp (in seconds since the Unix epoch) for when the token expires and should no longer be considered valid.
- iss is the issuing server’s address.

These fields can be used to validate the token.
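As an illustration of how iss, nbf, and exp might be checked once the signature has been verified, here is a sketch that uses the numbers from the decoded sample token. ClaimsAreAcceptable is a hypothetical helper, and the five-minute clock-skew allowance is an assumption (it matches the default ClockSkew of TokenValidationParameters in Microsoft.IdentityModel.Tokens) rather than anything this sample configures. Note that exp minus iat is 1800 seconds, which matches the expires_in value in the token response.

```csharp
using System;

// Hypothetical helper: checks issuer and lifetime claims of a token whose signature has
// ALREADY been verified. nbf and exp are NumericDate values: seconds since 1970-01-01T00:00:00Z.
static bool ClaimsAreAcceptable(
    long nbf, long exp, string iss, string expectedIssuer, DateTimeOffset now, TimeSpan clockSkew)
{
    DateTimeOffset notBefore = DateTimeOffset.FromUnixTimeSeconds(nbf);
    DateTimeOffset expires = DateTimeOffset.FromUnixTimeSeconds(exp);
    return string.Equals(iss, expectedIssuer, StringComparison.Ordinal)
        && now >= notBefore - clockSkew   // tolerate small clock differences...
        && now < expires + clockSkew;     // ...at both ends of the validity window
}

// Values copied from the decoded sample token.
const long Iat = 1476997029, Nbf = 1476997029, Exp = 1476998829;
const string Issuer = "http://localhost:5000/";
Console.WriteLine(Exp - Iat); // 1800

TimeSpan skew = TimeSpan.FromMinutes(5); // assumed tolerance, not part of the sample
DateTimeOffset tenMinutesIn = DateTimeOffset.FromUnixTimeSeconds(Iat + 600);
Console.WriteLine(ClaimsAreAcceptable(Nbf, Exp, Issuer, Issuer, tenMinutesIn, skew)); // True
```

The sample token’s aud claim (http://localhost:5001/) would normally be checked the same way, against the address of the resource server receiving the token.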

Conclusion and Next Steps

Hopefully this article has provided a useful overview of how ASP.NET Core apps can issue JWT bearer tokens. The in-box abilities to authenticate with cookies or third-party social providers are sufficient for many scenarios, but in other cases (especially when supporting mobile clients), bearer authentication is more convenient.

Look for a follow-up to this post coming soon covering how to validate the token in ASP.NET Core so that it can be used to authenticate and sign in a user automatically. And in keeping with the original scenario I ran into with a customer, we’ll make sure the validation can all be done without access to the authentication server or identity database.
