We spread ourselves thin, but this is the moment you’ve been awaiting – TypeScript 2.1 is here!

For those who are unfamiliar, TypeScript is a language that brings you all the new features of JavaScript, along with optional static types. This gives you an editing experience that can’t be beat, along with stronger checks against typos and bugs in your code.

This release comes with features that we think will drastically reduce the friction of starting new projects, make the type-checker much more powerful, and give you the tools to write much more expressive code.

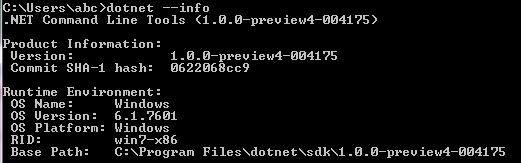

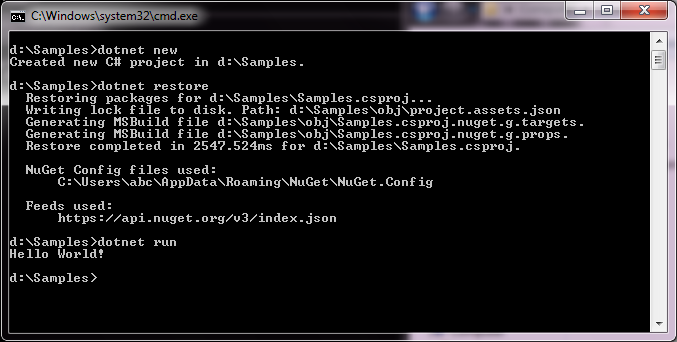

To start using TypeScript you can use NuGet, or install it through npm:

npm install -g typescript

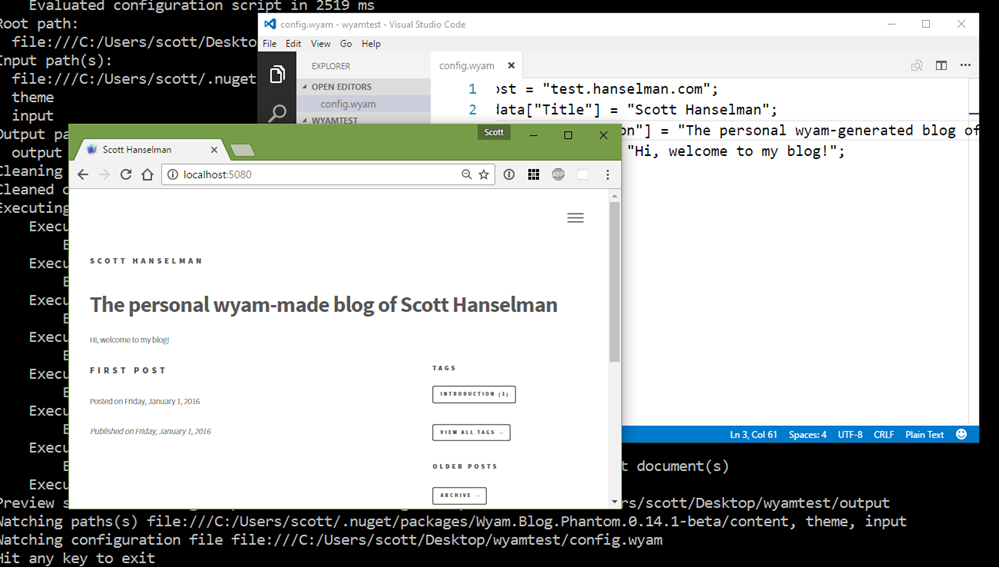

You can also grab the TypeScript 2.1 installer for Visual Studio 2015 after getting Update 3.

Visual Studio Code will usually just prompt you if your TypeScript install is more up-to-date, but you can also follow instructions to use TypeScript 2.1 now with Visual Studio Code or our Sublime Text Plugin.

We’ve written previously about some great new things 2.1 has in store, including downlevel async/await and significantly improved inference, in our announcement for TypeScript 2.1 RC, but here’s a bit more about what’s new in 2.1.

Async Functions

It bears repeating: downlevel async functions have arrived! That means that you can use async/await and target ES3/ES5 without using any other tools.

Bringing downlevel async/await to TypeScript involved rewriting our emit pipeline to use tree transforms. Keeping parity meant not just that existing emit didn’t change, but that TypeScript’s emit speed was on par as well. We’re pleased to say that after several months of testing, neither has been impacted, and that TypeScript users should continue to enjoy a stable, speedy experience.
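As a rough illustration of what this enables, the sketch below awaits two promises in sequence. The delay helper and the greetSlowly function are hypothetical names made up for this example. Note that when targeting ES3/ES5, a Promise implementation still has to be available at runtime, either natively or through a polyfill.

```typescript
// Hypothetical helper: resolves with `value` after roughly `ms` milliseconds.
function delay<T>(ms: number, value: T): Promise<T> {
    return new Promise<T>(resolve => setTimeout(() => resolve(value), ms));
}

// An async function always returns a Promise; each `await` suspends the
// function until the awaited promise settles.
async function greetSlowly(who: string): Promise<string> {
    const greeting = await delay(10, "Hello");
    const punctuation = await delay(10, "!");
    return `${greeting}, ${who}${punctuation}`;
}

greetSlowly("TypeScript 2.1").then(message => console.log(message));
```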

Object Rest & Spread

We’ve been excited to deliver object rest & spread since its original proposal, and today it’s here in TypeScript 2.1. Object rest & spread is a new ECMAScript proposal, later standardized in ES2018, that makes it much easier to partially copy, merge, and pick apart objects. The feature is already used quite a bit when using libraries like Redux.

With object spreads, making a shallow copy of an object has never been easier:

let copy = { ...original };

Similarly, we can merge several different objects so that in the following example, merged will have properties from foo, bar, and baz.

let merged = { ...foo, ...bar, ...baz };

We can even add new properties in the process:

let nowYoureHavingTooMuchFun = {
    hello: 100,
    ...foo,
    world: 200,
    ...bar,
}

Keep in mind that when using object spread operators, any properties in later spreads “win out” over previously created properties. So in our last example, if bar had a property named world, then bar.world would have been used instead of the one we explicitly wrote out.
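To make the ordering rule concrete, here is a small sketch. The ServerOptions shape and all of its values are invented for illustration.

```typescript
// Hypothetical option types, used only for this example.
interface ServerOptions {
    host: string;
    port: number;
    secure: boolean;
}

const defaults: ServerOptions = { host: "localhost", port: 8080, secure: false };
const overrides: { port?: number; secure?: boolean } = { port: 443, secure: true };

// Shallow copy: a new object with the same property values.
const defaultsCopy = { ...defaults };

// Later spreads win: `port` and `secure` come from `overrides`.
const merged = { ...defaults, ...overrides };

// An explicit property written *before* a spread can be overwritten by it...
const explicitFirst = { port: 9000, ...overrides }; // port is 443

// ...while one written *after* the spread takes precedence.
const explicitLast = { ...overrides, port: 9000 }; // port is 9000
```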

Object rests are the dual of object spreads, in that they can extract any extra properties that don’t get picked up when destructuring an object:

let { a, b, c, ...defghijklmnopqrstuvwxyz } = alphabet;
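A common use is stripping one field from an object while keeping the rest. The following is a minimal sketch with a made-up account object.

```typescript
// Hypothetical record, used only for illustration.
const account = { id: 42, password: "hunter2", displayName: "Ada", email: "ada@example.com" };

// `password` is pulled out by name; `publicFields` collects everything else.
const { password, ...publicFields } = account;

console.log(password.length);           // 7
console.log(Object.keys(publicFields)); // the remaining keys: id, displayName, email
```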

keyof and Lookup Types

Many libraries take advantage of the fact that objects are (for the most part) just a map of strings to values. Given what TypeScript knows about each value’s properties, there’s a set of known strings (or keys) that you can use for lookups.

That’s where the keyof operator comes in.

interface Person {
    name: string;
    age: number;
    location: string;
}

let propName: keyof Person;

The above is equivalent to having written out

let propName: "name" | "age" | "location";

This keyof operator is actually called an index type query. It’s like a query for keys on object types, the same way that typeof can be used as a query for types on values.
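One place this comes in handy is restricting a parameter to valid property names. Here is a small sketch, where describeProperty is a hypothetical helper:

```typescript
interface Person {
    name: string;
    age: number;
    location: string;
}

// Hypothetical helper: `key` may only be "name", "age", or "location".
function describeProperty(person: Person, key: keyof Person): string {
    return `${key} = ${person[key]}`;
}

const ada: Person = { name: "Ada", age: 36, location: "London" };

console.log(describeProperty(ada, "age")); // "age = 36"
// describeProperty(ada, "height");        // compile error: "height" is not a key of Person
```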

The dual of this is indexed access types, also called lookup types. Syntactically, they look exactly like an element access, but are written as types:

interface Person {
    name: string;
    age: number;
    location: string;
}

let a: Person["age"];

This is the same as saying that a gets the type of the age property in Person. In other words:

let a: number;

When indexing with a union of literal types, the operator will look up each property and union the respective types together.

// Equivalent to the type 'string | number'
let nameOrAge: Person["name" | "age"];

This pattern can be used with other parts of the type system to get type-safe lookups, serving users of libraries like Ember.

function get<T, K extends keyof T>(obj: T, propertyName: K): T[K] {
    return obj[propertyName];
}

let x = { foo: 10, bar: "hello!" };

let foo = get(x, "foo"); // has type 'number'
let bar = get(x, "bar"); // has type 'string'
let oops = get(x, "wargarbl"); // error!
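The same K extends keyof T constraint extends naturally to other helpers. As a sketch (setProperty and pluck are illustrative names, not part of any particular library’s API):

```typescript
// Assigns a property; the value must match the type of that property.
function setProperty<T, K extends keyof T>(obj: T, key: K, value: T[K]): void {
    obj[key] = value;
}

// Reads several properties at once; the result is an array of their types.
function pluck<T, K extends keyof T>(obj: T, keys: K[]): T[K][] {
    return keys.map(key => obj[key]);
}

const settings = { volume: 10, theme: "dark" };

setProperty(settings, "volume", 11);        // ok
// setProperty(settings, "volume", "loud"); // compile error: string is not a number

const values = pluck(settings, ["volume", "theme"]); // has type '(string | number)[]'
```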

Mapped Types

Mapped types are definitely the most interesting feature in TypeScript 2.1.

Let’s say we have a Person type:

interface Person {
    name: string;
    age: number;
    location: string;
}

Much of the time, we want to take an existing type and make each of its properties entirely optional. With Person, we might write the following:

interface PartialPerson {
    name?: string;
    age?: number;
    location?: string;
}

Notice we had to define a completely new type.

Similarly, we might want to perform a shallow freeze of an object:

interface FrozenPerson {
    readonly name: string;
    readonly age: number;
    readonly location: string;
}

Or we might want to create a related type where all the properties are booleans.

interface BooleanifiedPerson {
    name: boolean;
    age: boolean;
    location: boolean;
}

Notice all this repetition – ideally, much of the same information in each variant of Person could have been shared.

Let’s take a look at how we could write BooleanifiedPerson with a mapped type.

type BooleanifiedPerson = {
    [P in "name" | "age" | "location"]: boolean
};

Mapped types are produced by taking a union of literal types, and computing a set of properties for a new object type. They’re like list comprehensions in Python, but instead of producing new elements in a list, they produce new properties in a type.

In the above example, TypeScript uses each literal type in "name" | "age" | "location", and produces a property of that name (i.e. properties named name, age, and location). P gets bound to each of those literal types (even though it’s not used in this example), and gives the property the type boolean.

Right now, this new form doesn’t look ideal, but we can use the keyof operator to cut down on the typing:

type BooleanifiedPerson = {
    [P in keyof Person]: boolean
};

And then we can generalize it:

type Booleanify<T> = {
    [P in keyof T]: boolean
};

type BooleanifiedPerson = Booleanify<Person>;
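To give a feel for where a type like this might be used, here is a sketch that keeps one validity flag per Person property. The validate function and its rules are hypothetical.

```typescript
interface Person {
    name: string;
    age: number;
    location: string;
}

type Booleanify<T> = {
    [P in keyof T]: boolean
};

// Hypothetical use: one validity flag per Person property.
// Leaving out any of the three keys below would be a compile error.
function validate(person: Person): Booleanify<Person> {
    return {
        name: person.name.length > 0,
        age: person.age >= 0,
        location: person.location.length > 0,
    };
}

const flags = validate({ name: "Ada", age: 36, location: "" });
console.log(flags.location); // false, since the location string is empty
```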

With mapped types, we no longer have to create new partial or readonly variants of existing types either.

// Keep types the same, but make every property optional.
type Partial<T> = {
    [P in keyof T]?: T[P];
};

// Keep types the same, but make each property read-only.
type Readonly<T> = {
    readonly [P in keyof T]: T[P];
};

Notice how we leveraged TypeScript 2.1’s new indexed access types here by writing out T[P].

So instead of defining a completely new type like PartialPerson, we can just write Partial<Person>. Likewise, instead of repeating ourselves with FrozenPerson, we can just write Readonly<Person>!
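As a rough sketch of how the two might be used together, the example below relies on the Partial and Readonly types that ship in lib.d.ts, so it does not redeclare them. The updatePerson helper is hypothetical.

```typescript
interface Person {
    name: string;
    age: number;
    location: string;
}

// Hypothetical helper: applies a partial update without mutating the original.
function updatePerson(person: Readonly<Person>, changes: Partial<Person>): Person {
    return { ...person, ...changes };
}

const ada: Readonly<Person> = { name: "Ada", age: 36, location: "London" };
const moved = updatePerson(ada, { location: "Paris" });

// ada.age = 37;                         // compile error: 'age' is read-only
// updatePerson(ada, { nickname: "A" }); // compile error: 'nickname' is not a property of Person
```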

Originally, we planned to ship a type operator in TypeScript 2.1 named partial which could create an all-optional version of an existing type.

This was useful for performing partial updates to values, like when using React’s setState method to update component state. Now that TypeScript has mapped types, no special support has to be built into the language for partial.

However, because the Partial and Readonly types we used above are so useful, they’ll be included in TypeScript 2.1. We’re also including two other utility types: Record and Pick. You can see how these types are implemented within lib.d.ts itself.
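For a quick feel of the other two, here is a minimal sketch using the built-in Pick and Record. The Contact and Headcount aliases and their values are made up for this example.

```typescript
interface Person {
    name: string;
    age: number;
    location: string;
}

// Pick<T, K> keeps only the listed properties of T.
type Contact = Pick<Person, "name" | "location">;

// Record<K, T> builds a type with the keys K, whose values all have type T.
type Headcount = Record<"london" | "paris", number>;

const contact: Contact = { name: "Ada", location: "London" };
const headcount: Headcount = { london: 3, paris: 1 };

// const bad: Contact = { name: "Ada", age: 36 }; // compile error: 'age' is not in Contact
```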

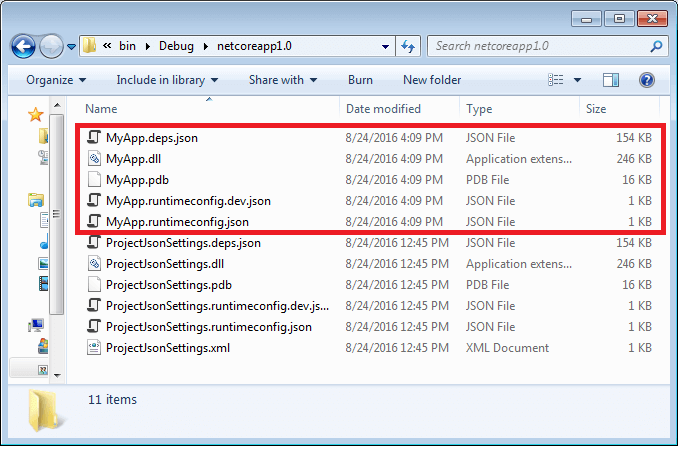

Easier Imports

TypeScript has traditionally been a bit finicky about exactly how you can import something. This was to avoid typos and prevent users from using packages incorrectly.

However, a lot of the time, you might just want to write a quick script and get TypeScript’s editing experience. Unfortunately, it’s pretty common that as soon as you import something, such as lodash, you’ll get an error.

“But I already have that package installed!” you might say.

The problem is that TypeScript didn’t trust the import since it couldn’t find any declaration files for lodash. The fix is pretty simple:

npm install --save @types/lodash

But this was a consistent point of friction for developers. And while you can still compile & run your code in spite of those errors, those red squiggles can be distracting while you edit.

So we focused on that one core expectation:

But I already have that package installed!

and from that statement, the solution became obvious. We decided that TypeScript needs to be more trusting, and in TypeScript 2.1, so long as you have a package installed, you can use it.

Do be careful though – TypeScript will assume the package has the type any, meaning you can do anything with it. If that’s not desirable, you can opt in to the old behavior with --noImplicitAny, which we actually recommend for all new TypeScript projects.

Enjoy!

We believe TypeScript 2.1 is a full-featured release that will make using TypeScript even easier for our existing users, and will open the doors to empower new users. 2.1 has plenty more, including sharing tsconfig.json options, better support for custom elements, and support for importing helper functions, all of which you can read about on our wiki.

As always, we’d love to hear your feedback, so give 2.1 a try and let us know how you like it! Happy hacking!