If you don’t know which model of community you’re building (and the conventions of that model), you’re probably going to struggle.

Community models can be as distinct from one another as Abrahamic religions. We might share a common faith in connecting people, but the best practices of each model vary greatly.

If you follow #CMGR on Twitter, it often feels like community builders live in different worlds, sharing conflicting advice and ideas. The truth is most people are sharing advice that works for their model of community building.

Whether it works for you depends largely on whether you’re following a similar model.

Community Building Models Which Work in 2022

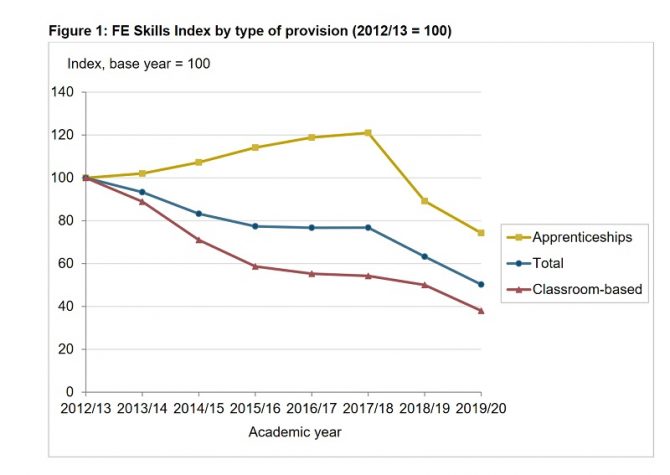

The main brand (emphasis on the word brand here) community building models today fall within five buckets. Each bucket has unique conventions.



For example, if you’re building a support community, creating a sense of community isn’t such a big deal. Most people just want to get answers to their questions and be on their way. However, if you’re building peer groups or user groups, then creating that sense of belonging is far more important (this is the essence of this debate).

For sure, you absolutely can succeed by defying these models and blazing your own trail. But you’re making life much harder for yourself. This is because the success of each model depends upon embracing the conventions of that model.

What Are Community Building Conventions?

As with writing a movie, it’s best to pick a genre and stick to the conventions of that genre.

Sure, sometimes you can defy conventions and succeed, but there’s a reason we don’t see many romantic horror movies: audiences have preferences.

This is true for communities too. Conventions of community models reflect the preferences of their audiences. These preferences cover four key areas:

1) Value to members. This is the unique value you need to offer (and promote) to members. Without the right value, members won’t visit.

2) Critical success factors. This is what needs to be present within your community for members to get that value. Without these, members won’t stick around for long.

3) Key challenges. This is the hard part of making that model work. It’s what your strategy should be designed to overcome.

4) Platform. This is the technology members prefer to achieve that outcome (defy this at your own risk).

So, for example:

- If you’re building a support community, pick a forum platform which is easy to scan and search for information, nurture a group of superusers, and do everything you can to redirect people with questions from other channels to the community.

- If you want people to share how they use products, embrace blogs, videos, and social media where people already share expertise. Aggregate them into a single place. Prune the bad and feature the best.

- If you want advocates to say nice things about you to others, use a dedicated advocacy platform and provide rewards advocates actually want. Put strong training and quality controls in place.

- If you want to nurture peer groups (especially elite peer groups), you need to keep it exclusive, spend time welcoming each person, and invest heavily in great facilitation (rather than moderation!) on a simple group messaging tool.

- If you want user groups, then find the right leaders, use dedicated (virtual) event software, and create systems to scale it up rapidly.

The more conventions of the model you embrace, the more successful you’re likely to be.

When you defy the models, you’re going to struggle because you’re trying to change the preferences of your audience.

Member preferences are so critically important (and, sadly, so commonly ignored).

Let’s Be Honest About Member Preferences

Understanding the real preferences of members will save you a lot of time and money.

Often we do things that are clearly against the preferences of our audiences.

Here are a few questions:

How many brand communities do you regularly participate in by choice (i.e. not because you need a problem fixed)?

When you engage with peers in the industry, how often do you do it in forums hosted by a major brand?

How often do you contribute articles to knowledge bases in a brand community?

If you answered ‘none/never’ to all of the above, why would you think your audience is any different?

If you’re trying to persuade your members to do something you’ve never done (or wouldn’t do), you’re probably not being honest about member preferences (which usually aren’t so dissimilar from your own).

Too often we try to build communities that are clearly running contrary to the preferences of members. This means we’re going to end up fighting against the odds.

Different Audiences Have Different Conventions Too!

To make matters a little more confusing, sometimes there are unique conventions for specific audiences too.

One recent prospect complained that since they moved their developer community from Discourse to their integrated Salesforce platform, participation had plummeted. Developers had simply begun using a member-hosted Slack channel instead.

Why did this happen?

Because Discourse suits developers better. It has features developers like and are already familiar with. It’s a widely accepted convention that developers use Discourse. When you battle natural preferences, you’re usually going to lose.

Likewise, I recently talked a game developer out of launching a forum for gamers to hang out and chat. That’s simply not where gamers go anymore. They prefer Discord, Reddit and other channels.

It doesn’t take much research to identify the conventions you’re working with.

You can battle against user preferences if you want, but it is going to be a battle.

If You Don’t Embrace The Right Conventions, You’re Probably Fighting The Odds

Sometimes you see the same mistakes so often you start to wonder why no-one seems to learn from them.

For example, around 95% of groups within hosted brand communities are devoid of any activity.

This means your odds of making sub-groups work within your hosted community are around 5%.

Unless you’re playing the lottery, those are terrible odds.

But that doesn’t stop community after community trying to make it work. Some don’t know the odds, others simply think that their situation is different.

And you might well be different. You might just have the audience and unique circumstance to make it work for you, but you’re always going to be fighting the odds.

This is the crux of the problem:

Instead of embracing the natural preferences and behaviors of our audiences, we try to persuade them to change their preferences.

Once you start trying to change preferences of members, you’re stacking the odds against you.

Sadly, many people seem to have a completely false idea of the odds against some of their community plans.

Five Reasons We Don’t Fully Appreciate The Odds Of Success

This isn’t a comprehensive list, but in my experience there tend to be five common reasons we don’t fully understand the odds of success in a community activity (or don’t understand the preferences of members).

1) Success bias. Failures are naturally removed from the web. This only leaves successful examples. Yet for every success there might be dozens of failures. The reverse is also true. Often a single successful example inspires dozens of failures.

2) Imagining people who don’t really exist. We imagine there are time-rich people unlike ourselves who are happy to take the time to learn new tools, write long knowledge articles, and share their success stories with others. If you can’t find an abundance of real people with preferences to match your idea, you need to change your idea (rather than try to change the people!).

3) Preferences change. There was a time when people happily hung out in forums chatting to friends. For some older generations, this may still be true. But I’m betting few (if any) of us still do it today. Preferences have changed and we haven’t stayed current with the modern preferences of our audiences.

4) Software vendors promote their solutions for every situation. There is a big difference between a platform offering a feature and members wanting to use the feature (on that platform). In theory, groups, knowledge bases, and gamification can all be really useful, but most of the time members won’t use (or care about) any of these features. If you haven’t used any of these features on another brand’s community before, why do you think your members will?

5) The desire to ‘Do Something’. A new VP of marketing arrives, sees a collection of disparate platforms, and decides this is confusing, wasteful, and cluttered. She decides to do something and suggests a far better solution: bring everything together into one integrated experience. But this runs contrary to the preferences of members, as we’ll explore in a moment.

Each of these skews your sense of the real odds of success when you run against member preferences.

You can’t resist them entirely, but try to be mindful of their impact.

Can You Embrace Multiple Community Building Models At The Same Time?

Yes, but you have to embrace all the conventions (and challenges) of each model!

You can’t just combine them together and hope it works.

For example, many support communities (people asking/answering questions) also try to be a ‘success’ community (where people proactively share best practices).

Typically, this is a struggle. Preferences today suggest people don’t go to forums to learn. In most fields today, people share knowledge and learn directly from blogs, video sharing sites, and other social media tools.

You become a ‘success’ community by either finding members willing to submit this kind of content into your community (difficult) or aggregating and filtering the content already out there (a lot easier). Patagonia and Peloton are good examples of this.

Likewise, if you want to build private peer groups where people hang out and chat, that’s not likely to happen within a hosted community platform. You need to use platforms where people are most likely to hang out and chat. That’s usually group messaging apps (Slack, WhatsApp groups, even email works well at small scale). You need to keep each group small, private, and exclusive (i.e. don’t invite everyone). You need to link these groups to the community (and vice versa).

This makes measurement difficult.

But, believe me, it’s far better to make life difficult for your metrics than your members.

Some Simple Questions To Guide The Model You Use

FeverBee invests a ridiculous amount of time researching and understanding the preferences of our clients’ audiences.

We do this because it’s a lot easier to embrace preferences than try to change them.

If we embrace the preferences, we stack the odds of success in our favour.

Sadly, so many strategies today are built upon wishful thinking rather than preferences identified by members. This usually reflects a failure to take a data-driven approach and to spend enough time really understanding the audience today.

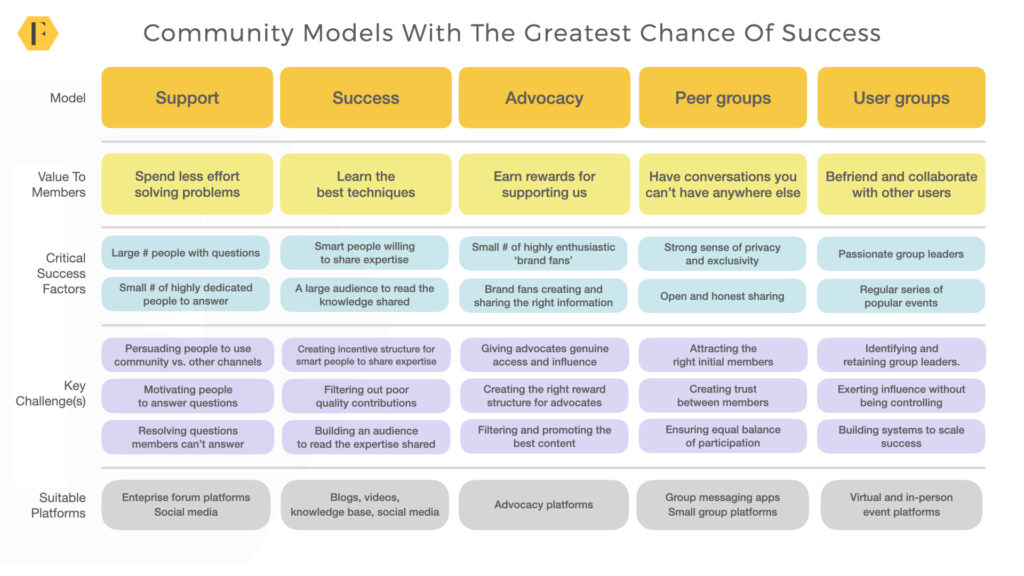

In fact, just by asking a handful of simple questions (shown in the image above) you can uncover a great deal of member preferences.


This isn’t a comprehensive list but you get the idea.

Begin the process with a blank slate and a completely open mind and you’re far more likely to find the right model and platform for you.

Summary

The model of community you’re developing influences everything you do.

It highlights the value you should communicate to members, the key metrics you need to track, the major challenges you should overcome, and the platform you select.

If your community isn’t where you want it to be today, there’s a very strong chance you either have the wrong model or you’re not embracing the conventions of that model.

I’d suggest a few key steps here:

1) Use the template questions above to identify value and platform preferences. You will likely find the platform preferences match the model.

2) Get everyone aligned on the model. Everyone involved in building the community needs to understand the model you’re using and the conventions of that model. Defy the conventions at your peril.

3) Develop your strategy to achieve the critical success factors of the model. Align everything you do to get those critical success factors in place. Use the resources and strengths you have to overcome the challenges of each model.

Good luck!

The post Five Brand Community Building Models That Succeed (and why many fail) first appeared on FeverBee.
