ISESteroids started as a simple add-on that brought professional editor capabilities to the built-in PowerShell ISE. It has since evolved into a slick, high-end PowerShell script editor. PowerShell Magazine has covered the basic highlights …

Pete Cook

Shared posts

Active Directory Week: Essential Steps for PowerShell when Upgrading

Summary: Learn three essential steps for Windows PowerShell when upgrading from Windows Server 2003.

Microsoft Scripting Guy, Ed Wilson, is here. Today we have the final post in the series about Active Directory PowerShell by Ashley McGlone. Before you begin, you might enjoy reading these posts from the series:

- Get Started with Active Directory PowerShell

- Explore Group Membership with PowerShell

- Active Directory Week: Stale Object Cleanup Guidance—Part 1

- Active Directory Week: Stale Object Cleanup Guidance—Part 2

Over the years Microsoft has released a number of new features to enhance Active Directory functionality. (For more information, see Active Directory Features in Different Versions of Windows Server.) If you are just now upgrading from Windows Server 2003, you have much to be thankful for. You will get to use new features like the Active Directory Recycle Bin and “Protect from accidental deletion.” But first you must raise the forest functional level to at least Windows Server 2008 R2. Let’s look at how to turn on these features.

Raise the functional level

In the Windows Server 2008 R2 era, many new Active Directory features were dependent on domain or forest functional level. One significant change with Windows Server 2012 and Windows Server 2012 R2 is that the product group tried to reduce the dependency on functional level for new features. At a minimum, you want to move your forest functional level to Windows Server 2008 R2. You can raise it to Windows Server 2012 R2 if all of your domain controllers are on the current release.

Of course, these steps can be done in the graphical interface, but this post is about Windows PowerShell. It is actually quite easy to do from the Windows PowerShell console. First, let’s check the current functional modes:

PS C:\> (Get-ADDomain).DomainMode

PS C:\> (Get-ADForest).ForestMode

Note If you are running these commands on Windows Server 2008 R2, you must first run this line:

Import-Module ActiveDirectory

DomainMode and ForestMode are properties of the ADDomain and ADForest, respectively. Lucky for us there is a cmdlet to set each of these. Look at this syntax:

$domain = Get-ADDomain

Set-ADDomainMode -Identity $domain -Server $domain.PDCEmulator -DomainMode Windows2012Domain

$forest = Get-ADForest

Set-ADForestMode -Identity $forest -Server $forest.SchemaMaster -ForestMode Windows2012Forest

Note You must target the PDC Emulator for domain mode changes and the Schema Master for forest mode changes.

The following table shows the available domain and forest mode parameter values:

| Set-ADDomainMode | Set-ADForestMode |
| --- | --- |
| Windows2003Domain, Windows2008Domain, Windows2008R2Domain, Windows2012Domain, Windows2012R2Domain | Windows2000Forest, Windows2003InterimForest, Windows2003Forest, Windows2008Forest, Windows2008R2Forest, Windows2012Forest, Windows2012R2Forest |

Here are some points to consider:

- If you raise the forest functional level, it will automatically attempt to raise the level of all the domains first.

- Generally, these commands only raise functional level. You cannot lower the level. (There is a minor exception, which is documented in How to Revert Back or Lower the Active Directory Forest and Domain Functional Levels in Windows Server 2008 R2.)

- All domain controllers must be at the same or higher operating system level as the functional mode.

- Be sure that you have a good backup of the forest for any possible recovery scenario afterward.

For more information about raising functional level, see What is the Impact of Upgrading the Domain or Forest Functional Level?
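The check-then-raise flow described above could be wrapped in a small guard. This is a minimal sketch under stated assumptions, not a definitive procedure: the function names `Test-ModeBelow` and `Update-ForestModeIfBelow` are hypothetical, the ordered list is copied from the forest mode values in the table, and `-WhatIf` is left on so that nothing changes until you remove it.

```powershell
# Forest modes from lowest to highest, as listed in the table in this post.
$script:ForestModeOrder = @(
    'Windows2000Forest', 'Windows2003InterimForest', 'Windows2003Forest',
    'Windows2008Forest', 'Windows2008R2Forest', 'Windows2012Forest',
    'Windows2012R2Forest'
)

# Hypothetical helper: returns $true when $Current ranks below $Target.
# The lookup is case-sensitive and throws for any mode that is not in the list
# (for example, a mode introduced by a later release).
function Test-ModeBelow {
    param(
        [Parameter(Mandatory = $true)] [string] $Current,
        [Parameter(Mandatory = $true)] [string] $Target
    )
    $currentRank = [array]::IndexOf($script:ForestModeOrder, $Current)
    $targetRank  = [array]::IndexOf($script:ForestModeOrder, $Target)
    if ($currentRank -lt 0 -or $targetRank -lt 0) {
        throw "Unknown forest mode: '$Current' or '$Target'"
    }
    return $currentRank -lt $targetRank
}

# Hypothetical wrapper: previews the raise only when the forest is below the target.
function Update-ForestModeIfBelow {
    param([string] $Target = 'Windows2008R2Forest')
    Import-Module ActiveDirectory
    $forest = Get-ADForest
    if (Test-ModeBelow -Current "$($forest.ForestMode)" -Target $Target) {
        # -WhatIf only reports what would happen; remove it to make the change.
        Set-ADForestMode -Identity $forest -Server $forest.SchemaMaster -ForestMode $Target -WhatIf
    }
    else {
        Write-Output "Forest is already at $($forest.ForestMode); nothing to do."
    }
}
```

Calling `Update-ForestModeIfBelow` with no arguments previews a raise to Windows2008R2Forest. Keeping the comparison in its own function means it can be tried without touching a domain controller.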

Enable the Active Directory Recycle Bin

Hopefully, this feature is old news to you by now. The key point is that it is not automatic. You must enable the Active Directory Recycle Bin before you can restore a deleted account. Here is the easiest way to enable the Active Directory Recycle Bin from Windows PowerShell:

Enable-ADOptionalFeature 'Recycle Bin Feature' -Scope ForestOrConfigurationSet -Target (Get-ADForest).RootDomain -Server (Get-ADForest).DomainNamingMaster

This command is written so that it will work in any environment. Note that it must target the forest Domain Naming Master role holder.

For more information and potential troubleshooting steps, see:

Now you can use the Restore-ADObject cmdlet or the Active Directory Administrative Center (ADAC) graphical interface to recover deleted objects. This is so much easier than an Active Directory authoritative restore!
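As a rough illustration of the recovery side, the sketch below checks whether the Recycle Bin is enabled and then looks up a deleted account before restoring it. The function names `Test-ADRecycleBinEnabled` and `Restore-DeletedAccount` are hypothetical, and the lookup assumes the account name contains no apostrophes; treat it as a starting point rather than a finished tool.

```powershell
# Hypothetical helper: $true when the Recycle Bin feature has at least one enabled scope.
function Test-ADRecycleBinEnabled {
    Import-Module ActiveDirectory
    $feature = Get-ADOptionalFeature -Filter 'Name -eq "Recycle Bin Feature"'
    return [bool]($feature -and $feature.EnabledScopes.Count -gt 0)
}

# Hypothetical helper: lists deleted objects for an account name,
# and restores them only when -Restore is specified.
function Restore-DeletedAccount {
    param(
        [Parameter(Mandatory = $true)] [string] $SamAccountName,
        [switch] $Restore
    )
    Import-Module ActiveDirectory
    # The backtick keeps $true literal so that the AD filter parser sees it.
    $filter  = "isDeleted -eq `$true -and sAMAccountName -eq '$SamAccountName'"
    $deleted = Get-ADObject -Filter $filter -IncludeDeletedObjects
    if ($Restore) { $deleted | Restore-ADObject -PassThru }
    else { $deleted }
}
```

Running `Restore-DeletedAccount -SamAccountName ProtectMe` first, without `-Restore`, shows what would be recovered before anything is changed.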

Protect from accidental deletion

Have you noticed a theme yet? “Recycle bin” and “accidental deletion”...

We want to help you recover faster. The “Protect from accidental deletion” feature will hopefully keep you from needing the Recycle Bin. The following image shows the check box for the setting in the graphical interface:

With the Active Directory cmdlets, we can find the status by using the ProtectedFromAccidentalDeletion object property like this:

Get-ADUser ProtectMe -Properties ProtectedFromAccidentalDeletion

This value will be True or False, depending on whether the box is selected. To turn on the protection, we can use this syntax:

Get-ADUser -Identity ProtectMe | Set-ADObject -ProtectedFromAccidentalDeletion:$true

It would be inefficient to do this one-at-a-time for all objects, wouldn’t it? Here are some commands you could use to turn it on more broadly across your environment:

Get-ADUser -Filter * | Set-ADObject -ProtectedFromAccidentalDeletion:$true

Get-ADGroup -Filter * | Set-ADObject -ProtectedFromAccidentalDeletion:$true

Get-ADOrganizationalUnit -Filter * | Set-ADObject -ProtectedFromAccidentalDeletion:$true
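Before turning protection on everywhere, it may help to see which objects are still unprotected. The following is a minimal sketch: `Select-Unprotected` is a hypothetical filter function, not part of the ActiveDirectory module, and the usage lines keep `-WhatIf` so that they only preview the change.

```powershell
# Hypothetical filter: passes through only objects whose protection flag is not $true.
function Select-Unprotected {
    param([Parameter(ValueFromPipeline = $true)] $InputObject)
    process {
        if (-not $InputObject.ProtectedFromAccidentalDeletion) { $InputObject }
    }
}

# Example usage against a directory (preview only, because of -WhatIf):
# Get-ADOrganizationalUnit -Filter * -Properties ProtectedFromAccidentalDeletion |
#     Select-Unprotected |
#     Set-ADObject -ProtectedFromAccidentalDeletion:$true -WhatIf
```

The property has to be requested with `-Properties`, as in the earlier Get-ADUser example, or the filter will treat every object as unprotected.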

The next logical question would be, “OK. Then how do I delete something when it is not an accident?”

I am glad you asked. We can turn off the protection and delete an object like this:

Get-ADUser ProtectMe |

Set-ADObject -ProtectedFromAccidentalDeletion:$false -PassThru |

Remove-ADUser -Confirm:$false

Notice that we use the -PassThru switch to keep the user object moving through the pipeline after the Set command.

This delete protection is not enabled by default. It must be explicitly set on each object that you want to protect. For information about how to make this automatic for new objects, you can read the comments that follow this post on the Ask the Directory Services Team blog: Two lines that can save your AD from a crisis.

Note If you would like to know more about how this feature works, we explain this topic in greater detail in Module 7 of the Microsoft Virtual Academy videos, Active Directory Attribute Recovery With PowerShell.

Bonus tips

In this post, we discussed three essential steps when upgrading from Windows Server 2003:

- Raise the domain and forest functional level

- Enable Recycle Bin

- Protect from accidental deletion

Of course, there are many other new features to leverage. I recommend that you check out the following resources in the Microsoft Virtual Academy videos:

- In Module 7, we discuss a recovery strategy that uses Active Directory snapshots. This is a friendly way to recover corrupted Active Directory properties without the hassle of a full authoritative restoration. I recommend that all customers start taking Active Directory snapshots (not to be confused with virtual machine snapshots) on a regular basis to aid in the recovery process.

- In Module 8, we discuss three tips to help you deploy domain controllers faster during your upgrade. Note that DCPROMO was deprecated in Windows Server 2012.

In addition, you should consider migrating SYSVOL replication from NTFRS to DFSR. This is another benefit after the functional level change, and it requires a manual step to turn it on. This is not addressed in the videos, but the steps are documented on TechNet and in a number of blog posts. For example, see SYSVOL Replication Migration Guide: FRS to DFS Replication.

Congratulations on your move from Windows Server 2003! You will find that the later operating systems have many more features and tools to help with routine administration, maintenance, and security. With the tips from this post, you have a jumpstart for automating new features to aid in recovery scenarios.

Watch my free training videos for Active Directory PowerShell on Microsoft Virtual Academy to learn more insider tips on topics such as getting started with Active Directory PowerShell, routine administration, stale accounts, managing replication, disaster recovery, and domain controller deployment.

~Ashley

And that ends our series about Active Directory PowerShell by Ashley McGlone! Join me tomorrow when I seek a way to find the latitude and longitude for a specific address.

I invite you to follow me on Twitter and Facebook. If you have any questions, send email to me at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, peace.

Ed Wilson, Microsoft Scripting Guy

Set-NTP

This script is used to set NTP on computers. It answers the question: How do I configure my NTP server?

Created by: Mickaël LOPES Published date: 11/26/2014

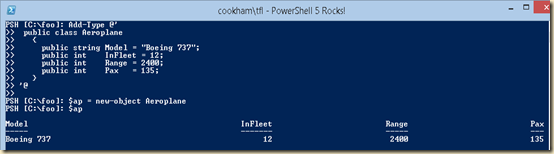

Writing Classes With PowerShell V5 – Part 2

But what if there are no applicable .NET objects and you need to create your own class? Some admins might be asking: why bother? The answer is one of flexibility and reuse. If you are writing scripts to automate your day to day operations, you are inevitably passing objects between scripts, functions, and cmdlets. There are always going to be cases where you'd like to create your own object simply as a means of transporting sets of data between hosts/scripts/etc.

In PowerShell V5, Microsoft has included the ability to create your own classes. When I started writing this set of articles, I had initially intended to just introduce Classes in V5, but as I looked at it, you can already create your own objects using earlier versions of PowerShell. These are not fully fledged classes, but are more than adequate when you just want to create a simple object to pass between your scripts/functions.

Creating Customised Objects

There are several ways you can achieve this. The first, but possibly hardest for the IT pro: use Visual Studio, author your classes in C# then compile them into a DLL. Then in PowerShell, you use Add-Type to add the classes to your PowerShell environment. The fuller details of this, and how to speed up loading by using Ngen.Exe are outside the scope of this blog post.

Bringing C# Into PowerShell

Now for the semi-developer audience amongst you, there's a kind of halfway house. In my experience, IT pros typically want what I call data-only classes. That is a class that just holds data and can be exchanged between scripts. For such cases, there's a simple way to create your class, although it does require a bit of C# knowledge (and some trial and error).

Here's a simple example of how to do this:

Add-Type @'

public class Aeroplane

{

public string Model = "Boeing 737";

public int InFleet = 12;

public int Range = 2400;

public int Pax = 135;

}

'@

This code fragment defines a very small class, one with just 4 members (model, number in fleet, range, and maximum number of passengers). Once you run this, you can create objects of the type Aeroplane, like this:

As you can see from the screen shot, you can create a new instance of the class by using New-Object and selecting your newly created class.
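A minimal sketch of creating and using an instance follows. The class definition repeats the one from this post so that the block runs on its own; the replacement values (`Airbus A320`, `150`) are made up for illustration.

```powershell
# Same data-only class as in the post; Add-Type compiles the C# source in memory.
Add-Type @'
public class Aeroplane
{
    public string Model = "Boeing 737";
    public int InFleet = 12;
    public int Range = 2400;
    public int Pax = 135;
}
'@

# Create an instance; the fields start with the defaults from the class definition.
$plane = New-Object Aeroplane

# Overwrite a couple of fields (hypothetical values, for illustration only).
$plane.Model = 'Airbus A320'
$plane.Pax   = 150

# Display the object and confirm its type name.
$plane
$plane.GetType().FullName
```

Because the fields are strongly typed in C#, assigning a non-numeric string to `Pax` or `Range` fails with a conversion error instead of silently storing text.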

If you are just creating a data-only class – one that you might pass from a lower level working function or script to some higher level bit of code – then this method works acceptably. Of course, you have to be quite careful with C# syntax. Little things like capitalising the token Namespace or String will create what I can only term unhelpful error messages.

Using Select-Object and Hash Tables

Another way to create a custom object is to use Select-Object. Usually, Select-Object is used to take a subset of an object: to select just a few properties in order to reduce the amount of data that is to be transferred. In some cases, this may be good enough and would look like this:

Dir c:\foo\*.ps1 | Select-Object name,fullname| gm

TypeName: Selected.System.IO.FileInfo

Name MemberType Definition

---- ---------- ----------

Equals Method bool Equals(System.Object obj)

GetHashCode Method int GetHashCode()

GetType Method type GetType()

ToString Method string ToString()

FullName NoteProperty System.String FullName=C:\foo\RESTART-DNS.PS1

Name NoteProperty System.String Name=RESTART-DNS.PS1

Note that when you use Select-Object like this, the object's type name changes. In this case, the dir (Get-ChildItem) cmdlet was run against the FileSystem provider and yielded objects of the type System.IO.FileInfo. Select-Object, however, changes the type name to SELECTED.System.IO.FileInfo (emphasis here is mine). This usually is no big deal, but it might affect formatting in some cases.

But you can also specify a hash table with the select object to create new properties, like this:

PSH [C:\foo]: $Filesize = @{

Name = 'FileSize '

Expression = { '{0,8:0.0} kB' -f ($_.Length/1kB) }

}

Dir c:\foo\*.ps1 | Select-Object name,fullname, $Filesize

Name FullName FileSize

---- -------- -----------

RESTART-DNS.PS1 C:\foo\RESTART-DNS.PS1 1.1 kB

s1.ps1 C:\foo\s1.ps1 0.1 kB

scope.ps1 C:\foo\scope.ps1 0.1 kB

script1.ps1 C:\foo\script1.ps1 0.5 kB

…

PSH [C:\foo]: Dir c:\foo\*.ps1 | Select-Object name,fullname, $Filesize| gm

TypeName: Selected.System.IO.FileInfo

Name MemberType Definition

---- ---------- ----------

Equals Method bool Equals(System.Object obj)

GetHashCode Method int GetHashCode()

GetType Method type GetType()

ToString Method string ToString()

FileSize NoteProperty System.String FileSize = 1.1 kB

FullName NoteProperty System.String FullName=C:\foo\RESTART-DNS.PS1

Name NoteProperty System.String Name=RESTART-DNS.PS1

As you can see from this code snippet, you can use Select-Object to create subset objects and can extend the object by using a hash table. One issue with this approach is that the selected properties (both the ones included from the original object and those added) all become NoteProperty members, and a formatted value such as FileSize is a String rather than an Int. In most cases, IT pros will find this good enough.
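If the formatted string gets in the way, for example when sorting by size, the expression can return a number instead. This is a sketch only: the stand-in objects and the `SizeKB` property name are assumptions made so that the block runs without a c:\foo folder.

```powershell
# Stand-in objects so the sketch runs anywhere (names and sizes are made up).
$files = @(
    New-Object PSObject -Property @{ Name = 'a.ps1'; Length = 1126 }
    New-Object PSObject -Property @{ Name = 'b.ps1'; Length = 512 }
)

# Calculated property that returns a number rather than a formatted string.
$SizeKB = @{
    Name       = 'SizeKB'
    Expression = { [math]::Round([double]$_.Length / 1KB, 1) }
}

$rows = $files | Select-Object Name, $SizeKB

# The new member is still a NoteProperty, but its value keeps a numeric .NET type,
# so sorting compares numbers rather than text.
$rows | Sort-Object SizeKB -Descending
$rows[0].SizeKB.GetType().FullName
```

With real files, the same hash table can be passed to `Dir c:\foo\*.ps1 | Select-Object name,fullname, $SizeKB`, and formatting can be left to the final display step.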

In the next instalment in this series, I will be looking at using New-Object to create a bare bones new object and then adding members to it by using the Add-Member cmdlet and how to change the generated type name to be more format-friendly.

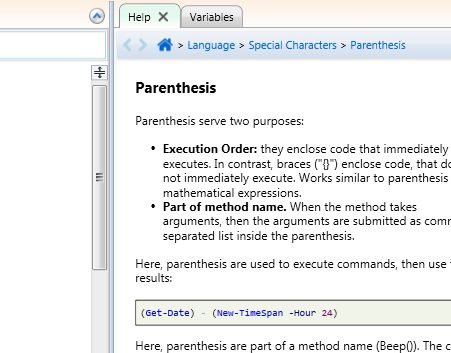

boosting the powershell ise with ise steroids

Ever since the PowerShell ISE was released, I slowly moved away from using some of the other things I was pretty fond of like PowerShellPlus and PrimalScript. It’s mostly because it’s super convenient.

Along came ISE Steroids. I can’t really speak to 1.0 since I just started on 2.0 and just started very recently, actually. So far, I’m pretty impressed. The best part of using it, is it doesn’t force the convenience factor to change at all. Installing it is as simple as unzipping the files to your module path ($env:PSModulePath -split ';'). After that, you launch it with Start-Steroids. That gives me the convenience of using the plain ol’ ISE or switching into a hyper-capable ISE.
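To pick a folder to unzip into, it can help to list the module paths and see which ones exist. This is a small sketch; it uses the platform's path separator rather than a hard-coded ';' and makes no assumptions about where ISE Steroids itself is stored.

```powershell
# Split PSModulePath on the platform's separator (';' on Windows) and drop empty entries.
$modulePaths = $env:PSModulePath -split [IO.Path]::PathSeparator | Where-Object { $_ }

# Show each candidate folder and whether it currently exists.
$modulePaths | ForEach-Object {
    New-Object PSObject -Property @{ Path = $_; Exists = (Test-Path -LiteralPath $_) }
}

# After unzipping the module into one of those folders, the launch command from this post is:
# Start-Steroids
```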

I’ve only begun scratching the surface of its capabilities, but here are some things I’ve been using so far:

VERTICAL ADD-ON TOOLS PANE

Help. I love this feature. Anything I click on in a script, the help add-on will attempt to look up and present relevant information.

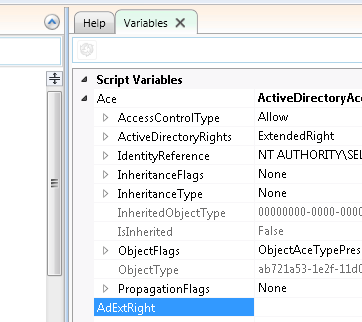

Variables. This is another feature I love. Having a variables window makes debugging so much easier.

REFACTORING

Is there someone on your team that codes in a manner that only their mother could love? If so, you might benefit from using the Refactor process. It’s basically a series of scripts that will comb the hair and wash behind the ears of your PowerShell script. It’s not perfect, but it performs admirably. It’s also configurable if you need to tune things down from default. Here’s an example:

Bad

foreach ($item in $smsobjects){

#write-host $item.name

$machinesfromSMS = $machinesfromSMS + $item.name}

foreach ($item in $sms2012objects){

#write-host $item.name

$machinesfromSMS = $machinesfromSMS + $item.name}

Better

foreach ($item in $smsobjects)

{

#write-host $item.name

$machinesfromsms = $machinesfromsms + $item.name

}

foreach ($item in $sms2012objects)

{

#write-host $item.name

$machinesfromsms = $machinesfromsms + $item.name

}

Which would you rather read and interpret?

ROOM FOR IMPROVEMENT

I would love to see the context sensitive help add-on retrieve things from the console or at least a search box to look up information manually. At this time, I have an empty script where I type in the command to make it show me help information.

SUMMARY

ISE Steroids isn’t a new shell, a giant development environment, or anything that fancy. It’s a lot of little things that turn the default PowerShell ISE into a highly functional shell and scripting environment. It’s extensible with other add-ons and supports launching applications from the ISE. (ILSpy and WinMerge come loaded.)

It’s my new favorite. I’m hooked. If you like the PowerShell ISE environment, you should check it out. There are many more features I haven’t brought up (signing, version control, wizards, etc.)

Active Directory Week: Get Started with Active Directory PowerShell

Summary: Microsoft premier field engineer (PFE), Ashley McGlone, discusses the Active Directory PowerShell cmdlets.

Microsoft Scripting Guy, Ed Wilson, is here. Today we start a series about Active Directory PowerShell, written by Ashley McGlone...

Ashley is a Microsoft premier field engineer (PFE) and a frequent speaker at events like PowerShell Saturday, Windows PowerShell Summit, and TechMentor. He has been working with Active Directory since the release candidate of Windows 2000. Today he specializes in Active Directory and Windows PowerShell, and he helps Microsoft premier customers reach their full potential through risk assessments and workshops. Ashley’s TechNet blog focuses on real-world solutions for Active Directory using Windows PowerShell. You can follow Ashley on Twitter, Facebook, or TechNet as GoateePFE.

Since I joined Microsoft, the Scripting Guy and the Scripting Wife have become dear friends. Ed has mentored my career and opened doors for me as I engaged the Windows PowerShell community. It is an honor for me to write this week’s blog series about Active Directory PowerShell as Ed is taking some personal time off. Thank you, Ed.

Active Directory PowerShell

Perhaps your job responsibilities now include Active Directory, or perhaps you are finally moving off of Windows Server 2003. There is no better time than the present to learn how to use Windows PowerShell with Active Directory. You will find that you can quickly bulk load users, update attributes, install domain controllers, and much more by using the modules provided.

Background

The Active Directory PowerShell module was first released with Windows Server 2008 R2. Prior to that, we used the Active Directory Services Interface (ADSI) to script against Active Directory. I did that for years with VBScript, and I was glad to see the Windows PowerShell module. It certainly makes things much easier.

In Windows Server 2012, we added several cmdlets to round out the core functionality. But we also released a companion module called ADDSDeployment. This module replaced the functionality we had in DCPROMO. With Windows Server 2012 R2 we added some cmdlets for the new authentication security features.

Now we have a fairly robust set of cmdlets to manage directory services in Windows.

How do I get these cmdlets?

The version of the cmdlets you use depends on the Remote Server Administration Tools (RSAT) that you install, and that depends on the operating system you have. See the following graphic to determine which version of the cmdlets you should use.

For example, if you have Windows 7 on your administrative workstation, you can use the first release of the ActiveDirectory module. The cmdlets can target any domain controller that has the AD Web Service. (Windows Server 2008 and Windows Server 2003 require the AD Management Gateway Service as a separate installation. For more information, see Step-by-Step: How to Use Active Directory PowerShell Cmdlets against Windows Server 2003 Domain Controllers.)

If you have a Windows 8.1 workstation, you can install the latest version of the RSAT and get all the fun new cmdlets, including the ADDSDeployment module. If you are stuck in Windows 7, and you want to use the latest cmdlets, see How to Use The 2012 Active Directory PowerShell Cmdlets from Windows 7 for a workaround.

Alternatively, if you use a Windows Server operating system for your toolbox, you can install the AD RSAT like this:

Install-WindowsFeature RSAT-AD-PowerShell

The following command will give you all of the graphical administrative tools and the Windows PowerShell modules:

Install-WindowsFeature RSAT-AD-Tools -IncludeAllSubFeature
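After installing, a quick check that the module is actually visible to Windows PowerShell can save some head scratching. The sketch below assumes nothing beyond the built-in Get-Module cmdlet; `Test-ModuleAvailable` is a hypothetical helper name.

```powershell
# Hypothetical helper: $true when a module with the given name is installed and discoverable.
function Test-ModuleAvailable {
    param([string] $Name = 'ActiveDirectory')
    return [bool](Get-Module -ListAvailable -Name $Name)
}

# Import the module when it is present; otherwise point to the missing prerequisite.
if (Test-ModuleAvailable) {
    Import-Module ActiveDirectory
}
else {
    Write-Warning 'ActiveDirectory module not found; install the RSAT first.'
}
```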

Where do I begin?

I recommend for most people to start with the Active Directory Administrative Center (ADAC). This is the graphical management tool introduced in Windows Server 2012 that uses Windows PowerShell to run all administrative tasks.

The nice part is that you can see the actual Windows PowerShell commands at the bottom of the screen. Find the WINDOWS POWERSHELL HISTORY pane at the bottom of the tool, and click the Up arrow at the far right of the window. Select the Show All box. Then start clicking through the administrative interface. You can see the actual Windows PowerShell commands being used:

Yes. Read the Help.

If you are using Windows PowerShell 3.0 or later, you need to install the Help content. From an elevated Windows PowerShell console, type:

Update-Help -Module ActiveDirectory -Verbose

Although it is optional, I usually add the ‑Verbose switch so that I can tell what was updated. This also installs the Active Directory Help topics:

Get-Help about_ActiveDirectory -ShowWindow

Get-Help about_ActiveDirectory_Filter -ShowWindow

Get-Help about_ActiveDirectory_Identity -ShowWindow

Get-Help about_ActiveDirectory_ObjectModel -ShowWindow

Note that you may have to import the Active Directory module before you can discover the about_help topics:

Import-Module ActiveDirectory

You can also find these Help topics on TechNet: Active Directory for Windows PowerShell About Help Topics.

I strongly advise reading through these about_help topics as you get started. They explain a lot about how the cmdlets work, and it will save you much trial and error as you learn about new cmdlets.

Type your first commands

Now that you have the module imported, you can try the following commands from the Windows PowerShell console:

Get-ADForest

Get-ADDomain

Get-ADGroup "Domain Admins"

Get-ADUser Guest

Congratulations! You are now on your way to scripting Active Directory.

Move to Active Directory PowerShell cmdlets

The next step is to replace the familiar command-line utilities you have used for years with new Windows PowerShell commands. I have published a four page reference chart to help you get started: CMD to PowerShell Guide for Active Directory.

For example, instead of the DSGET or DSQUERY command-line utilities, you can use Get‑ADUser, Get‑ADComputer, or Get‑ADGroup. Instead of CSVDE, you can use Get‑ADUser | Export‑CSV.
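As one way to picture the CSVDE replacement, the sketch below trims user objects down to a few columns before exporting them. `Select-UserReportColumn` is a hypothetical helper, the three property names are ones that Get-ADUser returns by default, and the output path is only an example.

```powershell
# Hypothetical helper: keeps only the columns wanted in the report.
function Select-UserReportColumn {
    param([Parameter(ValueFromPipeline = $true)] $User)
    process {
        $User | Select-Object Name, SamAccountName, Enabled
    }
}

# Example usage against a directory (the path is an assumption):
# Get-ADUser -Filter * |
#     Select-UserReportColumn |
#     Export-Csv -Path .\users.csv -NoTypeInformation
```

Selecting columns explicitly keeps the CSV stable even if more properties are later requested with `-Properties`.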

With this knowledge, you can find some of your batch files or VBScripts for Active Directory and start converting them to Windows PowerShell. Beginning with a goal is a great way to learn.

Ready, Set, Go!

I hope you have enjoyed this quick start for Active Directory PowerShell. You now have the necessary steps to get started on the journey. Stay tuned this week for more posts about scripting with Active Directory. You can also check out four years of Active Directory scripts over at the GoateePFE blog.

~ Ashley

Thanks for the beginning of a great series, Ashley! Ashley recently recorded a full day of free Active Directory PowerShell training: Microsoft Virtual Academy: Using PowerShell for Active Directory. Watch these videos to learn more insider tips on topics like getting started with Active Directory PowerShell, routine administration, stale accounts, managing replication, disaster recovery, and domain controller deployment.


Ed Wilson, Microsoft Scripting Guy

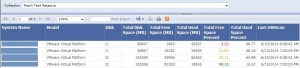

Managing Azure IaaS with Windows PowerShell: Part 5

Summary: Use Windows PowerShell to manage virtual machines in Azure.

Honorary Scripting Guy, Sean Kearney, is here flying through the digital stratosphere on our final day with Windows PowerShell and Azure!

We started by creating a virtual network for our Azure workstations and escalated to spinning up some virtual machines. To read more, see the previous topics in this series:

- Managing Azure IaaS with Windows PowerShell: Part 1

- Managing Azure IaaS with Windows PowerShell: Part 2

- Managing Azure IaaS with Windows PowerShell: Part 3

- Managing Azure IaaS with Windows PowerShell: Part 4

Pretty cool! But we're dealing with the fun stuff today...

Normally in Azure, if you were to initiate a shutdown in Windows or Linux, the operating system shuts down, but the Azure resources remain live in the service. The answer to this issue is to access your management portal and shut down the virtual machine from the Portal in the following manner.

First select the virtual machine (in this case, the one we previously created called 'brandnew1'):

Then choose the Shut Down option at the bottom of the management portal:

This process is pretty simple and it doesn't take more than a minute or so. But, of course, it would be so much nicer to have the ability to shut down or start environments by using a script. This allows for better cost control in Azure and less time with you at the mouse going clickity click click click.

In Hyper-V, we would normally identify the virtual machines with Get-VM and then pass the output through the pipeline to Stop-VM.

Azure is not too different—other than the names of the cmdlets and the visual output.

With Azure, we have a cmdlet called Get-AzureVM. If you are properly authenticated, you can get a list of all virtual machines that are tied to your subscription.

With a magic wave of my wand, I cast the magical cmdlet:

Get-AzureVM

Note that the state of a running virtual machine looks different in Azure. In Hyper-V, I would see a virtual machine that is operating like this:

In Hyper-V, it shows up as Running. In Azure, it shows up as ReadyRole. But filtering is similar. In Azure, if I need to show the virtual machines that are running, I run this command:

Get-AzureVM | where { $_.Status -eq 'ReadyRole' }

This will produce only the virtual machines that are operating. I can now pipe this directly to a cmdlet from Azure called (Oh! Hello, Captain Obvious!) Stop-AzureVM:

Get-AzureVM | where { $_.Status -eq 'ReadyRole' } | Stop-AzureVM

Odds are that you don't want to shut down your entire Azure infrastructure in one command. You're probably trying to shut down a single virtual machine (or a set of virtual machines).

In that case, target the name provided by the Get-AzureVM cmdlet. In our example, it's called 'brandnew1'.

There's one more piece. We need to tell Azure which Azure service we are targeting. Remember, you can identify your current service by using the Get-AzureService cmdlet:

$Service=(Get-AzureService).Label

You can then stop that virtual machine by its name and Azure service, for example:

Stop-AzureVM -Name 'brandnew1' -service $service
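For a set of virtual machines, the status filter and the name check can be combined in one small pipeline function. This is a sketch under stated assumptions: `Select-RunningVM` is a hypothetical helper, 'brandnew2' is a made-up second name, and the objects are assumed to expose the Name and Status properties that Get-AzureVM shows.

```powershell
# Hypothetical filter: passes through VMs that are running and whose name is in the list.
function Select-RunningVM {
    param(
        [Parameter(ValueFromPipeline = $true)] $VM,
        [string[]] $Name
    )
    process {
        if ($VM.Status -eq 'ReadyRole' -and $Name -contains $VM.Name) { $VM }
    }
}

# Example usage (requires an authenticated Azure session):
# Get-AzureVM | Select-RunningVM -Name 'brandnew1', 'brandnew2' | Stop-AzureVM -Force
```

Filtering first and stopping second makes it easy to run the first two stages alone and confirm the list before anything is shut down.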

This will eventually yield a state of 'StoppedDeallocated', which means that you have the configuration and data from your C: partition, but the virtual machine is no longer active, consuming CPU time or active monitoring within the Azure environment.

You would think 'StoppedDeallocated' and 'ReadyRole' are the only two states to consider. But this is not the case.

Let's start the previous virtual machine in Azure and review the states it reveals via the Get-AzureVM cmdlet.

To start a virtual machine in Azure, we use the Start-AzureVM cmdlet and provide the virtual machine name and Azure service name. It's identical to Stop-AzureVM in its use.

Start-AzureVM -name 'Brandnew1' -service $service

As the virtual machine is starting up in Azure, note the two additional states it yields. You can see a Status of CreatingVM. In the management portal, this would be displayed as "Starting (Provisioning)":

It will be followed by "StartingVM" (normally viewed as simply "Starting" in the management portal). At this point, the virtual machine has been created and the internal operating system is starting:

When the process is complete, the virtual machine will return to its normal state of 'ReadyRole'.

A third condition to be aware of is when the computer has received a shutdown command within the operating system. In this condition, the resources are not deallocated within Azure and the virtual machine is not in a 'ReadyRole' state. Its status will show up as a 'StoppedVM':

This is going to be quicker to start; but be aware that in this state, your virtual machine is still pulling money on your account and you are being billed for it. If you'd like to turn it back on, run Start-AzureVM. If you'd like to save some money, use Stop-AzureVM.
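The five states mentioned in this post can be kept in a small lookup table so that a report can flag machines that are stopped but still billed. This is only a sketch: the `Get-VMStatusNote` name and the wording of the notes are mine, summarizing the descriptions above, and any other status is reported as unknown.

```powershell
# Status values from this post, with a short note for each one.
$script:VMStatusNotes = @{
    'ReadyRole'          = 'Running normally.'
    'CreatingVM'         = 'Starting (provisioning).'
    'StartingVM'         = 'Virtual machine created; guest operating system is starting.'
    'StoppedVM'          = 'Shut down inside the guest; resources still allocated and billed.'
    'StoppedDeallocated' = 'Stopped with Stop-AzureVM; resources deallocated.'
}

# Hypothetical helper: returns the note for a status, or a fallback for anything else.
function Get-VMStatusNote {
    param([string] $Status)
    if ($script:VMStatusNotes.ContainsKey($Status)) { $script:VMStatusNotes[$Status] }
    else { "Unknown status: $Status" }
}

# Example usage (requires an authenticated Azure session):
# Get-AzureVM | Select-Object Name, Status, @{ Name = 'Note'; Expression = { Get-VMStatusNote $_.Status } }
```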

Pretty simple.

If you're trying to pull information on using the Azure cmdlets, I invite you to check out the Microsoft Azure PowerShell Reference Guide on Michael Washam's blog (he is the author of the cmdlets). In addition, there are some excellent posts from Keith Mayer at Microsoft on the subject, including Microsoft Azure Virtual Machines: Reset Forgotten Admin Passwords with Windows PowerShell.

I invite you to follow The Scripting Guys on Twitter and Facebook. If you have any questions, send an email to The Scripting Guys at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, remember to eat your cmdlets every day with a dash of creativity.

Sean Kearney, Windows PowerShell MVP and Honorary Scripting Guy

How to Configure Internet Explorer 11 Enterprise Mode Logging

In a previous Ask the Admin, I showed you how to configure Enterprise Mode for Internet Explorer 11 on Windows 7 SP1 and Windows 8.1 Update. Today, I’m going to show you how to configure a web server to capture Enterprise Mode logs.

Install Internet Information Services (IIS)

Internet Explorer (IE) Enterprise Mode doesn’t use the Windows Event Log, but instead sends messages to an Active Server Pages (ASP) web page, which can be read in the web server’s log files. The quickest way to set up IIS on Windows Server 2012 R2 is to run the following PowerShell command as a local administrator:

Install-WindowsFeature -Name Web-Server, Web-ASP -IncludeManagementTools

The cmdlet installs IIS 8.5 on Windows Server 2012 R2 with all the default features, management tools, and ASP components.

Configure Internet Information Services

Once the installation has completed, follow the instructions below to set up an ASP page that will listen for messages from your IE Enterprise Mode clients. In the example below, we’ll configure the website to use a non-standard port to make it easier to separate Enterprise Mode log traffic.

Configure IIS for Internet Explorer Enterprise Mode logging (Image Credit: Russell Smith)

- Open Server Manager from the Start screen or icon on the desktop taskbar.

- In Server Manager, open Internet Information Services (IIS) Manager from the Tools menu.

- In the left pane of IIS Manager, expand the local server, Sites and click Default Web Site.

- On the right in the Actions pane, click Bindings under Edit Site.

- In the Site Bindings dialog, select the http binding and click Edit… on the right.

- In the Edit Site Binding dialog, type 81 in the Port field and click OK.

- Click Close in the Site Bindings dialog.

- In the central pane, double click the Logging icon.

- Click Select Fields in the Log File section.

- In the W3C Logging Fields dialog, make sure that only Date, Client IP, User Name, and URI Query are checked, and then click OK.

- In the Actions pane on the right, click Apply.

To check that the webserver is working, open Internet Explorer on the server and type http://localhost:81/ in the address bar. You should see the default IIS welcome page in IE. To test connectivity from a remote machine, replace localhost with the name of the server.
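The binding change from the steps above can also be scripted. This is a minimal sketch, assuming the WebAdministration module that ships with IIS is available, the session is elevated, and the Default Web Site still has its original port 80 binding; adjust the values if your server differs.

```powershell
# Assumes IIS is installed and this session runs as a local administrator
Import-Module WebAdministration

# Move the Default Web Site's http binding from port 80 to port 81
Set-WebBinding -Name 'Default Web Site' -BindingInformation '*:80:' -PropertyName Port -Value 81

# Show the resulting binding, then request the welcome page on port 81
Get-WebBinding -Name 'Default Web Site'
(Invoke-WebRequest -Uri 'http://localhost:81/' -UseBasicParsing).StatusCode
```

A status code of 200 suggests the site is answering on the new port.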

Create the ASP Page

Now that IIS is configured, let’s put the ASP page into the root of our webserver. The root of the default website is c:\inetpub\wwwroot. In the wwwroot folder, save a text file called EmIE.asp containing the code shown below:

<% @ LANGUAGE=javascript %>

<% Response.AppendToLog(" ;" + Request.Form("URL") + " ;" + Request.Form("EnterpriseMode")); %>
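Before rolling out the Group Policy setting, it can help to post a test entry to the page yourself. The function below is a sketch only: Send-EmIETestEntry is a hypothetical name, the default address assumes the port 81 binding configured earlier, and the sample values are placeholders rather than what IE necessarily sends.

```powershell
function Send-EmIETestEntry {
    [cmdletbinding()]
    param(
        # Address of the logging page; assumes the port 81 binding from earlier
        [string]$LogUri = 'http://localhost:81/EmIE.asp',
        # Placeholder values, chosen only to make the test entry easy to spot
        [string]$Url = 'http://test.example/',
        [string]$Mode = 'On'
    )

    # A hashtable body is sent as form fields, which is what Request.Form reads
    $body = @{ URL = $Url; EnterpriseMode = $Mode }

    Invoke-WebRequest -Uri $LogUri -Method Post -Body $body -UseBasicParsing
}
```

Run Send-EmIETestEntry on the web server, then look for the placeholder URL in the IIS log.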

Create a Group Policy Object

The Let users turn on and use Enterprise Mode from the Tools menu policy setting can be enabled to allow users to manually toggle Enterprise Mode on and off. The logging URL in the Options section is the .asp web page that listens for POST messages that we created in the previous steps. If you just want to enable the toggle option in IE, leave the logging field blank.

Configure Group Policy for Internet Explorer Enterprise Mode logging (Image Credit: Russell Smith)

- In the Group Policy Management Editor window for your Group Policy Object, expand Computer Configuration > Policies > Administrative Templates > Windows Components

- In the left pane, click Internet Explorer.

- In the right pane, scroll down the list of policy settings and double click Let users turn on and use Enterprise Mode from the Tools menu.

- In the Let users turn on and use Enterprise Mode from the Tools menu policy setting window, check Enabled.

- In the Options section, type the URL for the ASP page created in the previous steps, for example http://contosodc1:81/emIE.asp on my server, and then click OK.

- Close the Group Policy Management Editor window.

Link the GPO to a site, domain or Organizational Unit (OU) in the Group Policy Management Console (GPMC) as required.

View the IIS Logs

Assuming the default logging location hasn’t been changed, you’ll find the IIS log files in c:\inetpub\logs\logfiles\w3svc1 on the web server.

Internet Explorer Enterprise Mode log data (Image Credit: Russell Smith)

Note that logs can take a few minutes to be updated, so be patient if you don’t see any new entries immediately.
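If you would rather work with the log entries as objects, the W3C format can be parsed with a few lines of PowerShell. This is a minimal sketch: ConvertFrom-W3CLog is a hypothetical helper, it reads the column names from the #Fields header rather than assuming an order, and it assumes fields are separated by single spaces.

```powershell
function ConvertFrom-W3CLog {
    param([string[]]$Line)

    $fields = @()
    foreach ($l in $Line) {
        if ($l -like '#Fields:*') {
            # The header names the columns that follow, e.g. date cs-uri-query ...
            $fields = ($l -replace '^#Fields:\s*', '').Trim() -split '\s+'
        }
        elseif ($l -and $l -notlike '#*' -and $fields.Count -gt 0) {
            # Data line: pair each value with its column name
            $values = $l -split ' '
            $record = [ordered]@{}
            for ($i = 0; $i -lt $fields.Count; $i++) {
                $record[$fields[$i]] = $values[$i]
            }
            [pscustomobject]$record
        }
    }
}
```

For example, ConvertFrom-W3CLog -Line (Get-Content -Path 'c:\inetpub\logs\logfiles\w3svc1\*.log') returns one object per request, which you can then pipe to Where-Object or Export-Csv.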

The post How to Configure Internet Explorer 11 Enterprise Mode Logging appeared first on Petri.

Building a PowerShell Troubleshooting Toolkit Revisited

Recently we posted an article about a PowerShell script you could use to build a USB key that contains free troubleshooting and diagnostic utilities. Those scripts relied on a list of links that were processed sequentially by the Invoke-WebRequest cmdlet. The potential downside is that it might take a bit of time to completely download everything. Wouldn’t it be much nicer if you could download files, let’s say, in batches of five?

Unfortunately, there are no cmdlets or parameters that you can use to throttle a set of commands. You could try to create some sort of throttling mechanism with PowerShell’s job infrastructure. If you are proficient with .NET, then you could try your hand at runspaces and runspace pools. Frankly, those make my head hurt, and I don’t expect IT pros to have to be .NET developers to use PowerShell. Fortunately, there is an alternative that I think is a good compromise between usability and systems programming: workflow.

PowerShell 3.0 brought us the ability to create workflows in PowerShell script. The premise of a workflow is that you can orchestrate a series of activities that can run unattended on 10, 100, or 1,000 remote computers. I don’t have space here to fully explain workflows. There is a chapter on workflow in the PowerShell in Depth book from Manning. But one of the great features in my opinion is the ability to execute multiple commands simultaneously.

In a workflow, you can use a ForEach construct with the -Parallel parameter.

Foreach -parallel ($item in $items) {

do-something $item

}

The -Parallel parameter only works in a workflow. If there are 10 items, then the Do-Something command will run all 10 at the same time. Or you can throttle the number of simultaneous commands.

Foreach -parallel -throttle 5 ($item in $items) {

do-something $item

}

Now only five commands will run at a time. As one command finishes, the next one in the queue is processed. You don’t have to write any complicated .NET code to take advantage of this feature. Use a workflow. And there’s no rule that says I have to use a workflow with a remote computer. I can create a workflow and run it locally, which is what I’ve done with my original script.

#requires -version 4.0

#create a USB tool drive using a PowerShell Workflow

Workflow Get-MyToolsWF {

<#

.Synopsis

Download tools from the Internet.

.Description

This PowerShell workflow will download a set of troubleshooting and diagnostic tools from the Internet. The path will typically be a USB thumbdrive. If you use the -Sysinternals parameter, then all of the SysInternals utilities will also be downloaded to a subfolder called Sysinternals under the given path.

You can limit the number of concurrent downloads with the ThrottleLimit parameter which has a default value of 5.

.Example

PS C:\> Get-MyToolsWF -path G: -sysinternals

Download all tools from the web and the Sysinternals utilities. Save to drive G:.

.Notes

Last Updated: September 30, 2014

Version : 1.0

****************************************************************

* DO NOT USE IN A PRODUCTION ENVIRONMENT UNTIL YOU HAVE TESTED *

* THOROUGHLY IN A LAB ENVIRONMENT. USE AT YOUR OWN RISK. IF *

* YOU DO NOT UNDERSTAND WHAT THIS SCRIPT DOES OR HOW IT WORKS, *

* DO NOT USE IT OUTSIDE OF A SECURE, TEST SETTING. *

****************************************************************

.Link

Invoke-WebRequest

#>

[cmdletbinding()]

Param(

[Parameter(Position=0,Mandatory=$True,HelpMessage="Enter the download path")]

[ValidateScript({Test-Path $_})]

[string]$Path,

[switch]$Sysinternals,

[int]$ThrottleLimit=5

)

Write-Verbose -Message "$(Get-Date) Starting $($workflowcommandname)"

Function _download {

[cmdletbinding()]

param([string]$Uri,[string]$Path)

$out = Join-path -path $path -child (split-path $uri -Leaf)

Write-Verbose -Message "Downloading $uri to $out"

#hash table of parameters to splat to Invoke-Webrequest

$paramHash = @{

UseBasicParsing = $True

Uri = $uri

OutFile = $out

DisableKeepAlive = $True

ErrorAction = "Stop"

}

Try {

Invoke-WebRequest @paramHash

Get-Item -Path $out

}

Catch {

Write-Warning -Message "Failed to download $uri. $($_.exception.message)"

}

} #end function

Sequence {

<#

csv data of downloads

The product should be a name or description of the tool.

The URI is a direct download link. The link must end in the executable file name (or zip or msi).

The file will be downloaded and saved locally using the last part of the URI.

#>

$csv = @"

product,uri

HouseCallx64,http://go.trendmicro.com/housecall8/HousecallLauncher64.exe

HouseCallx32,http://go.trendmicro.com/housecall8/HousecallLauncher.exe

"RootKit Buster x32",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180.exe

"Rootkit Buster x64",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180x64.exe

RUBotted,http://files.trendmicro.com/products/rubotted/RUBottedSetup.exe

"Hijack This",http://go.trendmicro.com/free-tools/hijackthis/HiJackThis.exe

WireSharkx64,http://wiresharkdownloads.riverbed.com/wireshark/win64/Wireshark-win64-1.12.1.exe

WireSharkx32,http://wiresharkdownloads.riverbed.com/wireshark/win32/Wireshark-win32-1.12.1.exe

"WireShark Portable",http://wiresharkdownloads.riverbed.com/wireshark/win32/WiresharkPortable-1.12.1.paf.exe

SpyBot,http://spybotupdates.com/files/spybot-2.4.exe

CCleaner,http://download.piriform.com/ccsetup418.exe

"Malware Bytes",http://data-cdn.mbamupdates.com/v2/mbam/consumer/data/mbam-setup-2.0.2.1012.exe

"Emisoft Emergency Kit",http://download11.emsisoft.com/EmsisoftEmergencyKit.zip

"Avast! Free AV",http://files.avast.com/iavs5x/avast_free_antivirus_setup.exe

"McAfee Stinger x32",http://downloadcenter.mcafee.com/products/mcafee-avert/Stinger/stinger32.exe

"McAfee Stinger x64",http://downloadcenter.mcafee.com/products/mcafee-avert/Stinger/stinger64.exe

"Microsoft Fixit Portable",http://download.microsoft.com/download/E/2/3/E237A32D-E0A9-4863-B864-9E820C1C6F9A/MicrosoftFixit-portable.exe

"Cain and Abel",http://www.oxid.it/downloads/ca_setup.exe

"@

#convert CSV data into objects

#save converted objects to a variable seen in the entire workflow

$workflow:download = $csv | ConvertFrom-Csv

} #end sequence

Sequence {

foreach -parallel -throttle $ThrottleLimit ($item in $download) {

Write-Verbose -message "Downloading $($item.product)"

_download -Uri $item.uri -Path $path

} #foreach item

} #end sequence

Sequence {

#region SysInternals

if ($Sysinternals) {

#test if subfolder exists and create it if missing

$subfolder = Join-Path -Path $path -ChildPath Sysinternals

if (-Not (Test-Path -Path $subfolder)) {

New-item -ItemType Directory -Path $subfolder

}

#get the page

$sysint = Invoke-WebRequest "http://live.sysinternals.com/Tools" -DisableKeepAlive -UseBasicParsing

#get the links

$links = $sysint.links | Select -Skip 1

foreach -parallel -throttle $ThrottleLimit ($item in $links) {

#download files to subfolder

$uri = "http://live.sysinternals.com$($item.href)"

Write-Verbose -message "Downloading $uri"

_download -Uri $uri -Path $subfolder

} #foreach

} #if SysInternals

} #end sequence

Write-verbose -message "$(Get-Date) Finished $($workflowcommandname)"

} #end workflow

There are rules for workflows, so I had to make a few adjustments. Workflows aren’t designed to accept pipelined input, so the CSV data is embedded in the script. Otherwise, you should recognize most of the code. The key difference is that downloads now happen in parallel and are throttled.

foreach -parallel -throttle $ThrottleLimit ($item in $download) {

Write-Verbose -message "Downloading $($item.product)"

_download -Uri $item.uri -Path $path

} #foreach item

I do the same thing for the SysInternals links.

foreach -parallel -throttle $ThrottleLimit ($item in $links) {

#download files to subfolder

$uri = "http://live.sysinternals.com$($item.href)"

Write-Verbose -message "Downloading $uri"

_download -Uri $uri -Path $subfolder

} #foreach

Once the workflow is loaded into my session, which you can do by dot-sourcing the script, I can run it the same way I would any command.

PS C:\> Get-MyToolsWF -path G: -sysinternals

Use the -Verbose parameter if you want to see more detail. Using the workflow and parallel processing, I can download everything in about five minutes! Personally, I don’t find much use for workflows, but I love this parallel feature and hope that someday we’ll see it as part of the main PowerShell language.
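To see the throttling behavior in isolation, without downloading anything, a toy workflow is enough. The sketch below assumes Windows PowerShell 4.0 or later with workflow support; Test-Throttle is a hypothetical name and the one-second sleep simply stands in for a download.

```powershell
#requires -version 4.0

workflow Test-Throttle {
    param(
        [int[]]$Number,
        [int]$ThrottleLimit = 2
    )

    # At most $ThrottleLimit iterations run at the same time
    foreach -parallel -throttlelimit $ThrottleLimit ($n in $Number) {
        Start-Sleep -Seconds 1
        Write-Output -InputObject "Finished item $n at $(Get-Date -Format T)"
    }
}

Test-Throttle -Number (1..6)
```

With six items and a limit of two, the timestamps should arrive roughly in pairs. Raising -ThrottleLimit shortens the total run time, and the order of the output is not guaranteed.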

The post Building a PowerShell Troubleshooting Toolkit Revisited appeared first on Petri.

Windows 10 GodMode

Michael Pietroforte

Michael Pietroforte is the founder and editor of 4sysops. He is a Microsoft Most Valuable Professional (MVP) with more than 30 years of experience in system administration.

The Windows 10 GodMode lists links to all administration tools in one folder. In addition, you can use Shell Commands and Shell Locations to create more shortcuts to system tools.

Background

The term “god mode” was originally used for a cheat code in video games and sometimes also on UNIX systems for a user with no restrictions on the system. On Windows, it is just a shortcut to a folder that lists links to the vast majority of Windows configuration tools. It does not give you privileges that go beyond those of the administrator account.

… read more of Windows 10 GodMode

Copyright © 2006-2014, 4sysops


Build a Troubleshooting Toolkit using PowerShell

If you are an IT pro, then you are most likely the IT pro that’s on call for your family, friends and neighbors. You get a call that a neighbor’s computer is running slow or experiencing odd behavior. Virus? Malware? Rootkit? Application issues? If you are also like me, then you tend to rely on a collection of free and incredibly useful tools like Trend Micro’s HouseCall, Hijack This or CCleaner. Perhaps you might even need a copy of the latest tools from the Sysinternals site. In the past I’ve grabbed a spare USB key, plugged it in and started downloading files. But this is a time consuming and boring process, which makes it a prime candidate for automation. And in my case that means PowerShell.

Using Invoke-WebRequest

PowerShell 3.0 brought us a new command, Invoke-WebRequest. This cmdlet eliminated the need to use the .NET Framework in scripts. We no longer needed to figure out how to use the Webclient class. Cmdlets are almost always easier to use. If you look at the help for Invoke-WebRequest, then you’ll see how easy it is. All you really need to specify is the URI to the web resource. So for my task all I need is a direct download link to the tool I want to grab.

Invoke-WebRequest -Uri http://go.trendmicro.com/housecall8/HousecallLauncher64.exe

However, in this situation, I don’t want to write the result to the PowerShell pipeline, I want to save it to a file. Invoke-Webrequest has a parameter for that.

Invoke-WebRequest -Uri http://go.trendmicro.com/housecall8/HousecallLauncher64.exe -OutFile d:\HouseCallx64.exe -DisableKeepAlive -UseBasicParsing

I am using a few other parameters since I’m not doing anything else with the connection once I’ve downloaded the file. This should also make this command safer to run in the PowerShell ISE on 3.0 systems. In v3 there was a nasty memory leak when using Invoke-Webrequest in the PowerShell ISE. That has been fixed in v4. So within a few seconds I have the setup file downloaded to drive D:. That is the central part of my download script.

#requires -version 3.0

#create a USB tool drive

<#

.Synopsis

Download tools from the Internet.

.Description

This command will download a set of troubleshooting and diagnostic tools from the Internet. The path will typically be a USB thumbdrive. If you use the -Sysinternals parameter, then all of the SysInternals utilities will also be downloaded to a subfolder called Sysinternals under the given path.

.Example

PS C:\> c:\scripts\get-mytools.ps1 -path G: -sysinternals

Download all tools from the web and the Sysinternals utilities. Save to drive G:.

.Notes

Last Updated: September 30, 2014

Version : 1.0

****************************************************************

* DO NOT USE IN A PRODUCTION ENVIRONMENT UNTIL YOU HAVE TESTED *

* THOROUGHLY IN A LAB ENVIRONMENT. USE AT YOUR OWN RISK. IF *

* YOU DO NOT UNDERSTAND WHAT THIS SCRIPT DOES OR HOW IT WORKS, *

* DO NOT USE IT OUTSIDE OF A SECURE, TEST SETTING. *

****************************************************************

.Link

Invoke-WebRequest

#>

[cmdletbinding(SupportsShouldProcess=$True)]

Param(

[Parameter(Position=0,Mandatory=$True,HelpMessage="Enter the download path")]

[ValidateScript({Test-Path $_})]

[string]$Path,

[switch]$Sysinternals

)

Write-verbose "Starting $($myinvocation.MyCommand)"

#hashtable of parameters to splat to Write-Progress

$progParam = @{

Activity = "$($myinvocation.MyCommand)"

Status = $Null

CurrentOperation = $Null

PercentComplete = 0

}

#region data

<#

csv data of downloads

The product should be a name or description of the tool.

The URI is a direct download link. The link must end in the executable file name (or zip or msi).

The file will be downloaded and saved locally using the last part of the URI.

#>

$csv = @"

product,uri

HouseCallx64,http://go.trendmicro.com/housecall8/HousecallLauncher64.exe

HouseCallx32,http://go.trendmicro.com/housecall8/HousecallLauncher.exe

"RootKit Buster x32",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180.exe

"Rootkit Buster x64",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180x64.exe

RUBotted,http://files.trendmicro.com/products/rubotted/RUBottedSetup.exe

"Hijack This",http://go.trendmicro.com/free-tools/hijackthis/HiJackThis.exe

WireSharkx64,http://wiresharkdownloads.riverbed.com/wireshark/win64/Wireshark-win64-1.12.1.exe

WireSharkx32,http://wiresharkdownloads.riverbed.com/wireshark/win32/Wireshark-win32-1.12.1.exe

"WireShark Portable",http://wiresharkdownloads.riverbed.com/wireshark/win32/WiresharkPortable-1.12.1.paf.exe

SpyBot,http://spybotupdates.com/files/spybot-2.4.exe

CCleaner,http://download.piriform.com/ccsetup418.exe

"Malware Bytes",http://data-cdn.mbamupdates.com/v2/mbam/consumer/data/mbam-setup-2.0.2.1012.exe

"Emisoft Emergency Kit",http://download11.emsisoft.com/EmsisoftEmergencyKit.zip

"Avast! Free AV",http://files.avast.com/iavs5x/avast_free_antivirus_setup.exe

"McAfee Stinger x32",http://downloadcenter.mcafee.com/products/mcafee-avert/Stinger/stinger32.exe

"McAfee Stinger x64",http://downloadcenter.mcafee.com/products/mcafee-avert/Stinger/stinger64.exe

"Microsoft Fixit Portable",http://download.microsoft.com/download/E/2/3/E237A32D-E0A9-4863-B864-9E820C1C6F9A/MicrosoftFixit-portable.exe

"Cain and Abel",http://www.oxid.it/downloads/ca_setup.exe

"@

#convert CSV data into objects

$download = $csv | ConvertFrom-Csv

#endregion

#region private function to download files

Function _download {

[cmdletbinding(SupportsShouldProcess=$True)]

Param(

[string]$Uri,

[string]$Path

)

$out = Join-path -path $path -child (split-path $uri -Leaf)

Write-Verbose "Downloading $uri to $out"

#hash table of parameters to splat to Invoke-Webrequest

$paramHash = @{

UseBasicParsing = $True

Uri = $uri

OutFile = $out

DisableKeepAlive = $True

ErrorAction = "Stop"

}

if ($PSCmdlet.ShouldProcess($uri)) {

Try {

Invoke-WebRequest @paramHash

get-item -Path $out

}

Catch {

Write-Warning "Failed to download $uri. $($_.exception.message)"

}

} #should process

} #end download function

#endregion

#region process CSV data

$i=0

foreach ($item in $download) {

$i++

$percent = ($i/$download.count) * 100

Write-Verbose "Downloading $($item.product)"

$progParam.status = $item.Product

$progParam.currentOperation = $item.uri

$progParam.PercentComplete = $percent

Write-Progress @progParam

_download -Uri $item.uri -Path $path

} #foreach item

#endregion

#region SysInternals

if ($Sysinternals) {

#test if subfolder exists and create it if missing

$sub = Join-Path -Path $path -ChildPath Sysinternals

if (-Not (Test-Path -Path $sub)) {

mkdir $sub | Out-Null

}

#get the page

$sysint = invoke-webrequest "http://live.sysinternals.com/Tools" -DisableKeepAlive -UseBasicParsing

#get the links

$links = $sysint.links | Select -Skip 1

#reset counter

$i=0

foreach ($item in $links) {

#download files to subfolder

$uri = "http://live.sysinternals.com$($item.href)"

$i++

$percent = ($i/$links.count) * 100

Write-Verbose "Downloading $uri"

$progParam.status ="SysInternals"

$progParam.currentOperation = $item.innerText

$progParam.PercentComplete = $percent

Write-Progress @progParam

_download -Uri $uri -Path $sub

} #foreach

} #if SysInternals

#endregion

Write-verbose "Finished $($myinvocation.MyCommand)"

Within the script, there’s a string of CSV data. The data contains a description and direct link for all the tools I want to download. You can add or delete these as you see fit. Just make sure the download link ends in a file name. The download function will parse out the last part of the URI and use it to create the local file name.

$out = Join-path -path $path -child (split-path $uri -Leaf)
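To make the file-name step concrete, here is the same idea run on its own. The first part mirrors what the script does. The second part is an alternative that is not in the original script, shown in case a link carries a query string; the example.com address is a made-up placeholder, and the temp folder stands in for the USB drive.

```powershell
$uri = 'http://go.trendmicro.com/housecall8/HousecallLauncher64.exe'

# The approach used in the script: take the last segment of the link
$leaf = Split-Path -Path $uri -Leaf
$leaf      # HousecallLauncher64.exe

# Alternative (not in the original script): parse the URI first, so a
# hypothetical link ending in tool.exe?id=1 still yields tool.exe
$fromUri = [System.IO.Path]::GetFileName(([uri]'http://example.com/files/tool.exe?id=1').LocalPath)
$fromUri   # tool.exe

# Build the local file name; the temp folder stands in for the USB drive
$target = [System.IO.Path]::GetTempPath()
Join-Path -Path $target -ChildPath $leaf
```

Split-Path is enough for the links in the CSV data because they all end in a plain file name.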

All you need to do is specify the path, which will usually be a USB thumb drive.

The script has an optional parameter for downloading utilities from the Live.Sysinternals.com website. If you opt for this, then the script will create a subfolder for SysInternals tools. That’s the way I like it. To download the tools I first use Invoke-WebRequest to get the listing page.

$sysint = invoke-webrequest "http://live.sysinternals.com/Tools" -DisableKeepAlive -UseBasicParsing

Within this object is a property called Links, which will have links to each tool.

$links = $sysint.links | Select -Skip 1

The first link points to the parent directory, which I don’t want, so I skip it with -Skip 1. Then for each link I can build the URI from the HREF property.

$uri = "http://live.sysinternals.com$($item.href)"

The only other thing I’ve done that you might not understand is that I’ve created a function with a non-standard name. I always try to avoid repeating commands or blocks of code. I created the _download function with the intent that it will never be exposed outside of the script. And this is a script which means to run it you need to specify the full path.

PS C:\> c:\scripts\get-mytools.ps1 -path G: -sysinternals

As I mentioned, I included the CSV data within the script which makes it very portable. But you might want to keep the download data separate from the script. In that case you’ll need a CSV file like this:

product,uri
HouseCallx64,http://go.trendmicro.com/housecall8/HousecallLauncher64.exe
HouseCallx32,http://go.trendmicro.com/housecall8/HousecallLauncher.exe
"RootKit Buster x32",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180.exe
"Rootkit Buster x64",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180x64.exe
RUBotted,http://files.trendmicro.com/products/rubotted/RUBottedSetup.exe
"Hijack This",http://go.trendmicro.com/free-tools/hijackthis/HiJackThis.exe
WireSharkx64,http://wiresharkdownloads.riverbed.com/wireshark/win64/Wireshark-win64-1.12.1.exe
WireSharkx32,http://wiresharkdownloads.riverbed.com/wireshark/win32/Wireshark-win32-1.12.1.exe
"WireShark Portable",http://wiresharkdownloads.riverbed.com/wireshark/win32/WiresharkPortable-1.12.1.paf.exe
SpyBot,http://spybotupdates.com/files/spybot-2.4.exe
CCleaner,http://download.piriform.com/ccsetup418.exe
"Malware Bytes",http://data-cdn.mbamupdates.com/v2/mbam/consumer/data/mbam-setup-2.0.2.1012.exe
"Emisoft Emergency Kit",http://download11.emsisoft.com/EmsisoftEmergencyKit.zip
"Avast! Free AV",http://files.avast.com/iavs5x/avast_free_antivirus_setup.exe
"McAfee Stinger x32",http://downloadcenter.mcafee.com/products/mcafee-avert/Stinger/stinger32.exe
"McAfee Stinger x64",http://downloadcenter.mcafee.com/products/mcafee-avert/Stinger/stinger64.exe
"Microsoft Fixit Portable",http://download.microsoft.com/download/E/2/3/E237A32D-E0A9-4863-B864-9E820C1C6F9A/MicrosoftFixit-portable.exe
"Cain and Abel",http://www.oxid.it/downloads/ca_setup.exe

And this version of the script.

#requires -version 3.0

#create a USB tool drive

Function Get-MyTool2 {

<#

.Synopsis

Download tools from the Internet.

.Description

This command will download a set of troubleshooting and diagnostic tools from the Internet. The path will typically be a USB thumbdrive. If you use the -Sysinternals parameter, then all of the SysInternals utilities will also be downloaded to a subfolder called Sysinternals under the given path.

.Example

PS C:\> Import-csv c:\scripts\tools.csv | get-mytool2 -path G: -sysinternals

Import a CSV of tool data and pipe to this command. This will download all tools from the web and the Sysinternals utilities. Save to drive G:.

.Notes

Last Updated: September 30, 2014

Version : 1.0

****************************************************************

* DO NOT USE IN A PRODUCTION ENVIRONMENT UNTIL YOU HAVE TESTED *

* THOROUGHLY IN A LAB ENVIRONMENT. USE AT YOUR OWN RISK. IF *

* YOU DO NOT UNDERSTAND WHAT THIS SCRIPT DOES OR HOW IT WORKS, *

* DO NOT USE IT OUTSIDE OF A SECURE, TEST SETTING. *

****************************************************************

.Link

Invoke-WebRequest

#>

[cmdletbinding(SupportsShouldProcess=$True)]

Param(

[Parameter(Position=0,Mandatory=$True,HelpMessage="Enter the download path")]

[ValidateScript({Test-Path $_})]

[string]$Path,

[Parameter(Mandatory=$True,HelpMessage="Enter the tool's direct download URI",

ValueFromPipelineByPropertyName=$True)]

[ValidateNotNullorEmpty()]

[string]$URI,

[Parameter(Mandatory=$True,HelpMessage="Enter the name or tool description",

ValueFromPipelineByPropertyName=$True)]

[ValidateNotNullorEmpty()]

[string]$Product,

[switch]$Sysinternals

)

Begin {

Write-Verbose "Starting $($myinvocation.MyCommand)"

#hashtable of parameters to splat to Write-Progress

$progParam = @{

Activity = "$($myinvocation.MyCommand)"

Status = $Null

CurrentOperation = $Null

PercentComplete = 0

}

Function _download {

[cmdletbinding(SupportsShouldProcess=$True)]

Param(

[string]$Uri,

[string]$Path

)

$out = Join-path -path $path -child (split-path $uri -Leaf)

Write-Verbose "Downloading $uri to $out"

#hash table of parameters to splat to Invoke-Webrequest

$paramHash = @{

UseBasicParsing = $True

Uri = $uri

OutFile = $out

DisableKeepAlive = $True

ErrorAction = "Stop"

}

if ($PSCmdlet.ShouldProcess($uri)) {

Try {

Invoke-WebRequest @paramHash

get-item -Path $out

}

Catch {

Write-Warning "Failed to download $uri. $($_.exception.message)"

}

} #should process

} #end download function

} #begin

Process {

Write-Verbose "Downloading $product"

$progParam.status = $Product

$progParam.currentOperation = $uri

Write-Progress @progParam

_download -Uri $uri -Path $path

} #process

End {

if ($Sysinternals) {

#test if subfolder exists and create it if missing

$sub = Join-Path -Path $path -ChildPath Sysinternals

if (-Not (Test-Path -Path $sub)) {

mkdir $sub | Out-Null

}

#get the page

$sysint = Invoke-WebRequest "http://live.sysinternals.com/Tools" -DisableKeepAlive -UseBasicParsing

#get the links

$links = $sysint.links | Select -Skip 1

#reset counter

$i=0

foreach ($item in $links) {

#download files to subfolder

$uri = "http://live.sysinternals.com$($item.href)"

$i++

$percent = ($i/$links.count) * 100

Write-Verbose "Downloading $uri"

$progParam.status ="SysInternals"

$progParam.currentOperation = $item.innerText

$progParam.PercentComplete = $percent

Write-Progress @progParam

_download -Uri $uri -Path $sub

} #foreach

} #if SysInternals

Write-verbose "Finished $($myinvocation.MyCommand)"

} #end

} #end function

This version has additional parameters that accept pipeline binding by property name, which means you can now run the command like this:

PS C:\> Import-csv c:\scripts\tools.csv | get-mytool2 -path G: -sysinternals
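If binding by property name is new to you, this stripped-down sketch shows the mechanism without downloading anything. Show-Tool is a hypothetical function written for illustration; the column names in the CSV data match its parameter names, which is what lets PowerShell bind them.

```powershell
function Show-Tool {
    [cmdletbinding()]
    param(
        [Parameter(ValueFromPipelineByPropertyName=$True)]
        [string]$Product,

        [Parameter(ValueFromPipelineByPropertyName=$True)]
        [string]$Uri
    )

    process {
        # Runs once per incoming object, with both parameters already bound
        "$Product -> $Uri"
    }
}

$csv = @"
product,uri
CCleaner,http://download.piriform.com/ccsetup418.exe
"@

$result = $csv | ConvertFrom-Csv | Show-Tool
$result   # CCleaner -> http://download.piriform.com/ccsetup418.exe
```

Each object from ConvertFrom-Csv has product and uri properties, so no explicit parameter mapping is needed.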

You will need to dot-source this second script to load the function into your session. Otherwise, it works essentially the same. There is one potential drawback to these scripts in that the downloads are all sequential, which means it can take 10 minutes or more to download everything. To build a toolkit even faster, take a look at this alternate approach.

By the way, if you have any favorite troubleshooting or diagnostic tools I hope you’ll let me know. If you can include a direct download link that would be even better.

The post Build a Troubleshooting Toolkit using PowerShell appeared first on Petri.

Another Night in Bora Bora

EXIF Info

Remember, there are two ways to see the EXIF info for each of my shots. You can hover the mouse over and see them, or if you click through to SmugMug, you can click on the little “i” and see this information! BTW, I don’t even know what some of that means… like “Brightness -24026/256”??? Maybe one of you smarties can tell me what that means!

Camera SONY ILCE-7R (Sony A7r)

ISO 800

Exposure Time 25s (25/1)

Name The Bungalows at Night.jpg

Size 7837 x 5884

Date Taken 2014-06-29 19:55:35

Date Modified 2014-07-04 08:47:18

File Size 23.88 MB

Flash flash did not fire, compulsory flash mode

Metering pattern

Exposure Program manual

Exposure Bias 0 EV

Exposure Mode manual

Light Source unknown

White Balance auto

Digital Zoom 1.0x

Contrast 0

Saturation 0

Sharpness 0

Color Space sRGB

Brightness -24026/256

Daily Photo – Another Night in Bora Bora

Was every night in Bora Bora this pretty, or just the ones where I took photos? It was EVERY NIGHT! It was so pretty every night, how could you NOT go out and take photos? With that mountain and the amazing architecture over the water… it was so awesome and a real treat to be here.


Creating Colorful HTML Reports

All PowerShell versions

To turn results into colorful custom HTML reports, simply define three script blocks: one that writes the start of the HTML document, one that writes the end, and one that is processed for each object you want to list in the report.

Then, hand over these script blocks to ForEach-Object. It accepts a begin, a process, and an end script block.

Here is a sample script that illustrates this and creates a colorful service state report:

$path = "$env:temp\report.hta"

$beginning = {

@'

<html>

<head>

<title>Report</title>

<STYLE type="text/css">

h1 {font-family:SegoeUI, sans-serif; font-size:20}

th {font-family:SegoeUI, sans-serif; font-size:15}

td {font-family:Consolas, sans-serif; font-size:12}

</STYLE>

</head>

<body>

<img src="http://www.yourcompany.com/yourlogo.gif" />

<h1>System Report</h1>

<table>

<tr><th>Status</th><th>Name</th></tr>

'@

}

$process = {

$status = $_.Status

$name = $_.DisplayName

if ($status -eq 'Running')

{

'<tr>'

'<td bgcolor="#00FF00">{0}</td>' -f $status

'<td bgcolor="#00FF00">{0}</td>' -f $name

'</tr>'

}

else

{

'<tr>'

'<td bgcolor="#FF0000">{0}</td>' -f $status

'<td bgcolor="#FF0000">{0}</td>' -f $name

'</tr>'

}

}

$end = {

@'

</table>

</body>

</html>

'@

}

Get-Service |

ForEach-Object -Begin $beginning -Process $process -End $end |

Out-File -FilePath $path -Encoding utf8

Invoke-Item -Path $path
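The begin/process/end pattern used by the report is easier to see in miniature. This short sketch uses plain numbers instead of services and HTML; the values are arbitrary.

```powershell
# -Begin runs once before the first item, -Process once per item,
# and -End once after the last item
$result = 1..3 | ForEach-Object -Begin { 'start' } -Process { $_ * 2 } -End { 'end' }

$result   # start, 2, 4, 6, end
```

In the report script, the begin block plays the role of 'start' by emitting the HTML header, and the end block closes the table and the document.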

Download VMware Remote Console 7.0


Shellshock and Arch Linux

I’m guessing most people have heard about the security issue that was discovered in bash earlier in the week, which has been nicknamed Shellshock. Most of the details are covered elsewhere, so I thought I would post a little about the handling of the issue in Arch.

I am the Arch Linux contact on the restricted oss-security mailing list. On Monday (at least in my timezone…), there was a message saying that a significant security issue in bash would be announced on Wednesday. I let the Arch bash maintainer know, and he got some details.

This bug was CVE-2014-6271. You can test if you are vulnerable by running

x="() { :; }; echo x" bash -c :

If your terminal prints “x”, then you are vulnerable. This is actually simpler to understand than it appears… First we define a function x() which just runs “:”, which does nothing. After the function is a semicolon, followed by a simple echo statement – this is a bit strange, but there is nothing stopping us from doing that. Then this whole function/echo bit is exported as an environment variable to the bash shell call. When bash loads, it notices a function in the environment and evaluates it. But we have “hidden” the echo statement in that environment variable and it gets evaluated too… Oops!

The announcement of CVE-2014-6271 was made at 2014-09-24 14:00 UTC. Two minutes and five seconds later, the fix was committed to the Arch Linux [testing] repository, where it was tested for a solid 25 minutes before being released into our main repositories.

About seven hours later, it was noticed that the fix was incomplete. The simplified version of this breakage is

X='() { function a a>\' bash -c echo

This creates a file named “echo” in the directory where it was run. To track the incomplete fix, CVE-2014-7169 was assigned. A patch was posted by the upstream bash developer to fix this issue on the Wednesday, but not released on the bash ftp site for over 24 hours.
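Because this test leaves a stray file behind on a vulnerable system, it is a little tidier to run it in a scratch directory and look for the file afterwards. The following is a rough sketch under that assumption; the function name check_cve_2014_7169 is hypothetical, and it relies on mktemp being available and on bash being resolvable from the PATH.

```shell
# Sketch: run the CVE-2014-7169 test in a throwaway directory and report
# whether a file named "echo" was created. Function name is illustrative.
check_cve_2014_7169() {
    bash_bin="${1:-bash}"
    workdir="$(mktemp -d)" || return 1

    # The subshell keeps the directory change local. On a vulnerable bash
    # the parser state left over from the broken function definition turns
    # "echo" into a redirection target, creating a file of that name.
    if (
        cd "$workdir" || exit 1
        X='() { function a a>\' "$bash_bin" -c echo >/dev/null 2>&1
        [ -e echo ]
    ); then
        result="vulnerable"
    else
        result="not vulnerable"
    fi

    # Clean up the scratch directory either way.
    rm -rf "$workdir"
    echo "$result"
}

check_cve_2014_7169 bash
```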

With a second issue discovered so quickly, it is important not to take an overly reactive approach to updating, despite repeated bug reports and panic in the forum and IRC channels, as you run the risk of introducing even worse issues. While waiting for the dust to settle, there was another patch posted by Florian Weimer (from Red Hat). This is not a direct fix for any vulnerability (however see below), but rather a hardening patch that attempts to limit potential issues importing functions from the environment. During this time there were also patches posted that disabled importing functions from the environment altogether, but this is probably an overreaction.

About 36 hours after the first bug fix, packages were released for Arch Linux that fixed the second CVE and included the hardening patch (which upstream appears to be adopting with minor changes). There were also two other more minor issues found during all of this that were fixed as well – CVE-2014-7186 and CVE-2014-7187.

And that is the end of the story… Even the mystery CVE-2014-6277 is apparently covered by the unofficial hardening patch that has been applied. You can test your bash install using the bashcheck script. On Arch Linux, make sure you have bash>=4.3.026-1 installed.
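A quick way to confirm the installed package meets that minimum is sketched below. It assumes a sort that supports -V (as GNU coreutils does) for the comparison; the helper name version_ge is made up here, and pacman's own vercmp tool remains the authoritative way to compare Arch package versions.

```shell
# version_ge A B: succeed if version A is at least version B.
# Uses "sort -V" as an approximation of pacman's version ordering.
version_ge() {
    [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required="4.3.026-1"

# Only query the package database where pacman actually exists.
if command -v pacman >/dev/null 2>&1; then
    # "pacman -Q bash" prints "bash <version>"; keep the version field.
    installed="$(pacman -Q bash | awk '{print $2}')"
    if version_ge "$installed" "$required"; then
        echo "bash $installed is new enough"
    else
        echo "bash $installed is older than $required, please upgrade"
    fi
fi
```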

And because this has been a fun week, upgrade NSS too!

PowerTip: Display Hidden Properties from Object

Summary: Learn how to use Windows PowerShell to display hidden properties from an object.

How can I see if there are any hidden properties on an object I have returned from Windows PowerShell?

Use the -Force parameter with either Format-List or Format-Table, for example:

Get-ChildItem C: | Format-List * -Force

Complete Sci-Fi Spaceship Size Comparison Chart

Here at Geek Beat, our love of all things sci-fi is no secret. Who else do you know with a life-size Han Solo in Carbonite, or a transporter room? So when we heard about this truly epic sci-fi spaceship size comparison chart, we just had to share it!

Put together by German artist Dirk Löchel, the huge 4268 x 5690 pixel graphic features ships from popular science fiction movies, TV shows and video games from the past 40+ years, including: Star Trek, Star Wars, Battlestar Galactica, Stargate, Doctor Who, Babylon 5, EVE online, Mass Effect, Halo and more. The first version was actually created a year ago, but having updated it with a ton of extra information, Löchel says the chart is now complete.

For the sake of image quality and organization, only spacecraft between 100 meters and 24000 meters are included – which is why the Death Star and a number of other large ships aren’t present. And sorry Whovians, as Löchel points out on his deviantART page, the TARDIS is both too big and too small to be included.

To help provide a sense of real-world perspective, the diagram also features the International Space Station, which looks tiny in comparison to almost everything else.

Pretty impressive, isn’t it?

[Via: Nerdist]

The post Complete Sci-Fi Spaceship Size Comparison Chart appeared first on Geek Beat.

Azure for Longer Term Backup

I’ve been teaching the AOTG (Ahead of the Game) partner training around the UK, and will shortly be doing so in Eire. It’s been very interesting talking to Microsoft’s SMB partners and looking at how they sell and utilise Azure. One of the Azure products this training advocates is Azure Backup. Last week, when I was teaching this in Manchester, one delegate pointed out that the big downside to Azure Backup was that there was not much of a retention period, and as such it was not helpful to the delegate’s customers.

Fast forward a few days, and the wish has come true. I had a conference call this morning with someone from the Azure Backup team, who told me that this request had been heard loud and clear and is now in place. He pointed me to the blog post at: http://azure.microsoft.com/blog/2014/09/11/announcing-long-term-retention-for-azure-backup/

Sure enough, you can get all the backup you need (well all reasonable backups!). And the maximum retention period is 9 years, as the blog post explains.

One word: Awesome!

PowerCLI in the vSphere Web Client–Announcing PowerActions

You don’t know how excited I am to write this! Around a year ago I presented something we were working on internally as a tech preview in my VMworld session. The response was phenomenal: if you were there, you will remember people standing up, clapping, and asking when this awesomeness would be available. It’s taken a while, but it’s here and it’s worth the wait. So what is this that I am so excited about?

PowerActions is a new fling from VMware which can be downloaded here. It adds the automation power of PowerCLI to the web client, so you can use your scripts inside the client. Have you ever wanted to right-click an object in the web client and run a custom automation action, maybe return the results and then work with them further, all from the convenience of the web client? Now you can!

This works in 2 ways….

PowerShell console in the Web Client

Firstly, you can access a PowerCLI console straight in the web interface, even in Safari. This fling allows a dedicated host to be used as a PowerShell host, and this machine will be responsible for running the PowerCLI actions. Once it’s set up, you access the console from within the web client and all commands run remotely on the PowerShell host. It even uses your currently logged-on credentials to launch the scripts, meaning you don’t have to connect the PowerCLI session.

You can use tab completion on your cmdlets and even use other PowerShell snapins and modules to control any PowerShell enabled infrastructure to extend your automation needs within the vSphere Web Client.

Right Click your objects

Secondly, you can now right-click an object in the Web Client and create a dedicated script that works against this object. This lets you extend your web client and take the object as an input to use inside your script.

This comes with 2 options: create a script and execute a script.

My Scripts and Shared Scripts

Not only can you create your own scripts to run against objects in the web client, but advanced admins can create scripts and share them with all users of the web client by storing them in the Shared Scripts section of this fling; read the documentation to find out more about how to do this. This gives you not only shared scripts but a golden set of scripts which all users of the web client can use, while you keep your own items in a separate area, “My Scripts”, enabling each user to have their own custom actions.

Download and read more

Download the fling from the VMware Labs site here. Also make sure you grab the documentation from the same site, and check out the great post on the PowerCLI Blog for more information here.

Check out the video for a quick introduction

To help with the details, I shot a quick install and usage video that covers the basics. Make sure you read the PDF that comes with the fling, and make sure you are active: if you like this then let us know, if you want more then let us know…. Basically, give us feedback!

This post was originally written and posted by Alan Renouf here: PowerCLI in the vSphere Web Client–Announcing PowerActions

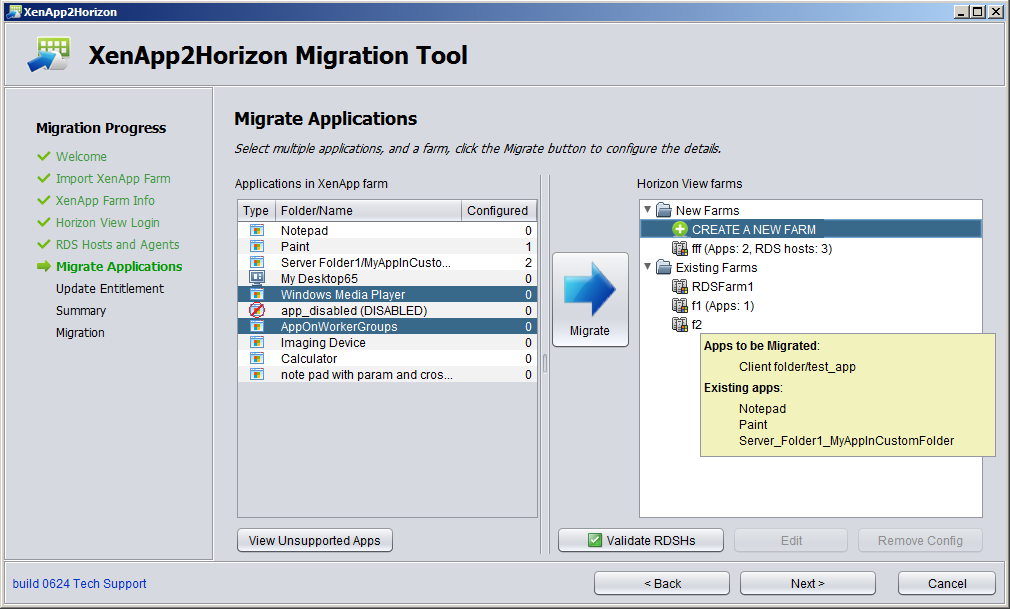

Latest Fling from VMware Labs - XenApp2Horizon

- Validate the View agent status on RDS hosts (from View connection server, and XenApp server)

- Create farms

- Validate application availability on RDS hosts

- Migrate application/desktop to one or multiple farms (new or existing)

- Migrate entitlements to new or existing applications/desktops. Combination of application entitlements are supported

- Check environment

- Identify incompatible features and configuration

Remove Lingering Objects that cause AD Replication error 8606 and friends

Introducing the Lingering Object Liquidator

Hi all, Justin Turner here. It's been a while since my last update. The goal of this post is to discuss what causes lingering objects and show you how to download and then use the new GUI-based Lingering Object Liquidator (LOL) tool to remove them. This is a beta version of the tool, and it is currently not yet optimized for use in large Active Directory environments.

This is a long article with lots of background and screen shots, so plug in or connect to a fast connection when viewing the full entry. The bottom of this post contains a link to my AD replication troubleshooting TechNet lab for those that want to get their hands dirty with the joy that comes with finding and fixing AD replication errors. I’ve also updated the post with a link to my Lingering Objects hands-on lab from TechEd Europe.

Overview of Lingering Objects

Lingering objects are objects in AD that have been created, replicated, deleted, and then garbage collected on at least the DC that originated the deletion but still exist as live objects on one or more DCs in the same forest. Lingering object removal has traditionally required lengthy cleanup sessions using tools like LDP or repadmin /removelingeringobjects. The removal story improved significantly with the release of repldiag.exe. We now have another tool for our tool belt: Lingering Object Liquidator. There are related topics such as “lingering links” which will not be covered in this post.

Lingering Objects Drilldown

The dominant causes of lingering objects are

1. Long-term replication failures

While knowledge of creates and modifies is persisted in Active Directory forever, replication partners must inbound replicate knowledge of deleted objects within a rolling Tombstone Lifetime (TSL) # of days (default 60 or 180 days depending on what OS version created your AD forest). For this reason, it is important to keep your DCs online and replicating all partitions between all partners within a rolling TSL # of days. Tools like REPADMIN /SHOWREPL * /CSV, REPADMIN /REPLSUM and AD Replication Status should be used to continually identify and resolve replication errors in your AD forest.

2. Time jumps