Today we released a major set of updates to Microsoft Azure. Today’s updates include:

- DocumentDB: Preview of a New NoSQL Document Service for Azure
- Search: Preview of a New Search-as-a-Service offering for Azure
- Virtual Machines: Portal support for SQL Server AlwaysOn + community-driven VMs
- Web Sites: Support for Web Jobs and Web Site processes in the Preview Portal
- Azure Insights: General Availability of Microsoft Azure Monitoring Services Management Library
- API Management: Support for API Management REST APIs

All of these improvements are now available to use immediately (note that some features are still in preview). Below are more details about them:

DocumentDB: Announcing a New NoSQL Document Service for Azure

I’m excited to announce the preview of our new DocumentDB service - a NoSQL document database service designed for scalable and high performance modern applications. DocumentDB is delivered as a fully managed service (meaning you don’t have to manage any infrastructure or VMs yourself) with an enterprise grade SLA.

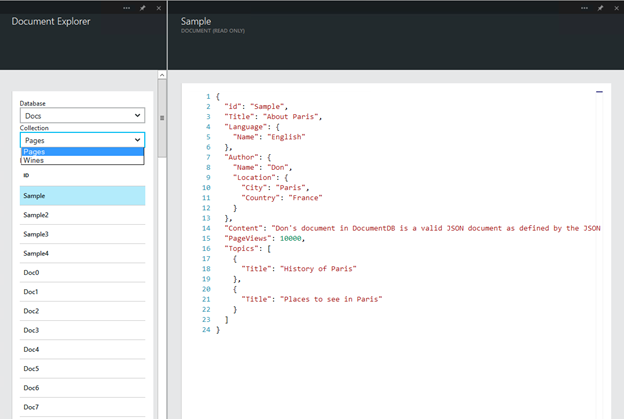

As a NoSQL store, DocumentDB is truly schema-free. It allows you to store and query any JSON document, regardless of schema. The service provides built-in automatic indexing support, which means you can write JSON documents to the store and immediately query them using a familiar document-oriented SQL query grammar. You can optionally extend the query grammar to perform server-side evaluation of user-defined functions (UDFs) written in JavaScript as well.
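As a rough illustration of the kind of logic a UDF might hold, here is a small JavaScript function exercised locally. The name `gradeBand` and its thresholds are made up for this sketch, and the step of registering the function with the service is omitted. Because documents are schema-free, the function guards against a missing `grade` property.

```javascript
// Hypothetical UDF body: the name and thresholds are illustrative only
// and are not part of DocumentDB.
function gradeBand(grade) {
  // Schema-free documents may omit the property entirely.
  if (typeof grade !== "number") {
    return "unknown";
  }
  return grade <= 5 ? "elementary" : "secondary";
}

// Local check against plain objects shaped like stored documents.
var sampleChildren = [
  { firstName: "John", grade: 7 },
  { givenName: "Jesse", grade: 1 },
  { givenName: "Sam" } // no grade recorded
];
var bands = sampleChildren.map(function (c) { return gradeBand(c.grade); });
console.log(bands.join(", ")); // secondary, elementary, unknown
```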

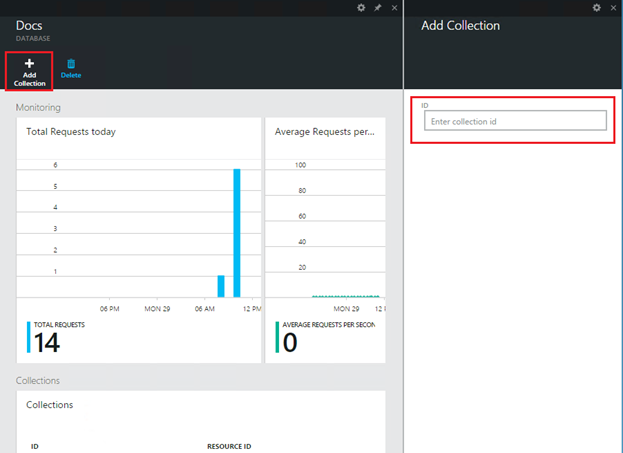

DocumentDB is designed to linearly scale to meet the needs of your application. The DocumentDB service is purchased in capacity units, each offering a reservation of high performance storage and dedicated performance throughput. Capacity units can be easily added or removed via the Azure portal or REST based management API based on your scale needs. This allows you to elastically scale databases in fine grained increments with predictable performance and no application downtime simply by increasing or decreasing capacity units.
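Sizing an account then comes down to simple arithmetic: take whichever of storage or throughput needs the most capacity units. The sketch below assumes the per-unit figures are supplied by the caller; the numbers used here are placeholders for illustration, not published limits, and `capacityUnitsNeeded` is a hypothetical helper.

```javascript
// Hypothetical helper: how many capacity units cover both the storage
// and the throughput requirement. Per-unit figures are supplied by the caller.
function capacityUnitsNeeded(needs, perUnit) {
  var forStorage = Math.ceil(needs.storageGB / perUnit.storageGB);
  var forThroughput = Math.ceil(needs.requestsPerSecond / perUnit.requestsPerSecond);
  return Math.max(1, forStorage, forThroughput);
}

// Placeholder per-unit values, chosen only to make the arithmetic visible.
var perUnit = { storageGB: 10, requestsPerSecond: 2000 };
var units = capacityUnitsNeeded({ storageGB: 35, requestsPerSecond: 3000 }, perUnit);
console.log(units); // 4 (storage is the limiting factor here)
```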

Over the last year, we have used DocumentDB internally within Microsoft for several high-profile services. We now have DocumentDB databases that are each 100s of TBs in size, each processing millions of complex DocumentDB queries per day, with predictable performance of low single digit ms latency. DocumentDB provides a great way to scale applications and solutions like this to an incredible size.

DocumentDB also enables you to tune performance further by customizing the index policies and consistency levels you want for a particular application or scenario, making it an incredibly flexible and powerful data service for your applications. For queries and read operations, DocumentDB offers four distinct consistency levels - Strong, Bounded Staleness, Session, and Eventual. These consistency levels allow you to make sound tradeoffs between consistency and performance. Each consistency level is backed by a predictable performance level ensuring you can achieve reliable results for your application.

DocumentDB has made a significant bet on ubiquitous formats like JSON, HTTP and REST, which makes it easy to use from any web or mobile application. With today’s release we are also distributing .NET, Node.js, JavaScript and Python SDKs. The service can also be accessed through RESTful HTTP interfaces and is simple to manage through the Azure preview portal.

Provisioning a DocumentDB account

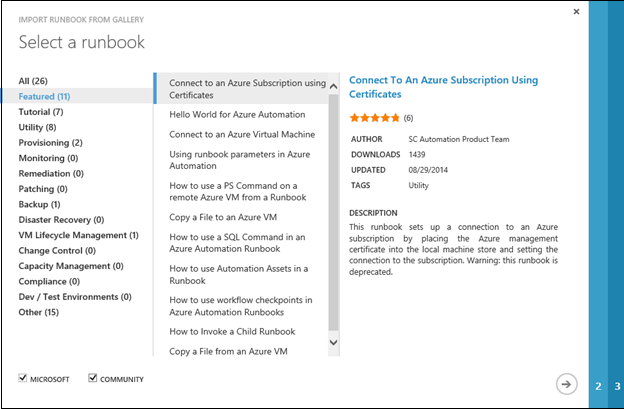

To get started with DocumentDB you provision a new database account. To do this, use the new Azure Preview Portal (http://portal.azure.com), click the Azure gallery and select the Data, storage, cache + backup category, and locate the DocumentDB gallery item.

Once you select the DocumentDB item, choose the Create command to bring up the Create blade for it.

In the create blade, specify the name of the service you wish to create, the amount of capacity you wish to scale your DocumentDB instance to, and the location around the world that you want to deploy it (e.g. the West US Azure region):

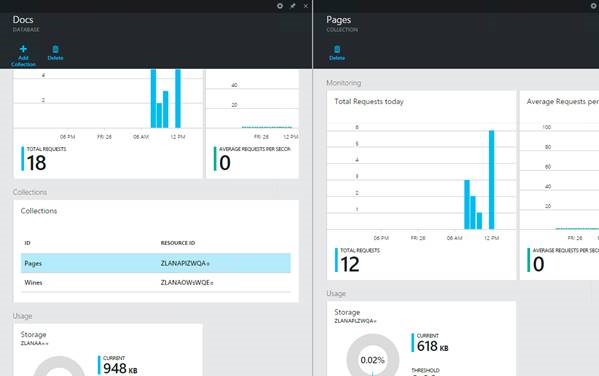

Once provisioning is complete, you can start to manage your DocumentDB account by clicking the new instance icon on your Azure portal dashboard.

The keys tile can be used to retrieve the security keys to use to access the DocumentDB service programmatically.

Developing with DocumentDB

DocumentDB provides a number of different ways to program against it. You can use the REST API directly over HTTPS, or you can choose from the .NET, Node.js, JavaScript or Python client SDKs.

For this example I am going to use two JSON documents, each describing a family:

    // AndersonFamily.json file
    {
        "id": "AndersenFamily",
        "lastName": "Andersen",
        "parents": [
            { "firstName": "Thomas" },
            { "firstName": "Mary Kay" }
        ],
        "children": [
            { "firstName": "John", "gender": "male", "grade": 7 }
        ],
        "pets": [
            { "givenName": "Fluffy" }
        ],
        "address": { "country": "USA", "state": "WA", "city": "Seattle" }
    }

and

    // WakefieldFamily.json file
    {
        "id": "WakefieldFamily",
        "parents": [
            { "familyName": "Wakefield", "givenName": "Robin" },
            { "familyName": "Miller", "givenName": "Ben" }
        ],
        "children": [
            {
                "familyName": "Wakefield",
                "givenName": "Jesse",
                "gender": "female",
                "grade": 1
            },
            {
                "familyName": "Miller",
                "givenName": "Lisa",
                "gender": "female",
                "grade": 8
            }
        ],
        "pets": [
            { "givenName": "Goofy" },
            { "givenName": "Shadow" }
        ],
        "address": { "country": "USA", "state": "NY", "county": "Manhattan", "city": "NY" }
    }

Using the NuGet package manager in Visual Studio, I can search for and install the DocumentDB .NET package into any .NET application. With the URI and Authentication Keys for the DocumentDB service that I retrieved earlier from the Azure Management portal, I can then connect to the DocumentDB service I just provisioned, create a Database, create a Collection, Insert some JSON documents and immediately start querying for them:

    // Requires the DocumentDB .NET SDK (Microsoft.Azure.Documents, Microsoft.Azure.Documents.Client),
    // Json.NET (Newtonsoft.Json), System.IO and System.Linq
    using (var client = new DocumentClient(new Uri(endpoint), authKey))
    {
        var database = new Database { Id = "ScottsDemoDB" };
        database = await client.CreateDatabaseAsync(database);

        var collection = new DocumentCollection { Id = "Families" };
        collection = await client.CreateDocumentCollectionAsync(database.SelfLink, collection);

        //DocumentDB supports strongly typed POCO objects and also dynamic objects
        dynamic andersonFamily = JsonConvert.DeserializeObject(File.ReadAllText(@".\Data\AndersonFamily.json"));
        dynamic wakefieldFamily = JsonConvert.DeserializeObject(File.ReadAllText(@".\Data\WakefieldFamily.json"));

        //persist the documents in DocumentDB
        await client.CreateDocumentAsync(collection.SelfLink, andersonFamily);
        await client.CreateDocumentAsync(collection.SelfLink, wakefieldFamily);

        //very simple query returning the full JSON document matching a simple WHERE clause
        var query = client.CreateDocumentQuery(collection.SelfLink, "SELECT * FROM Families f WHERE f.id = 'AndersenFamily'");
        var family = query.AsEnumerable().FirstOrDefault();

        Console.WriteLine("The Andersen family have the following pets:");
        foreach (var pet in family.pets)
        {
            Console.WriteLine(pet.givenName);
        }

        //select JUST the child record out of the Family record where the child's gender is male
        query = client.CreateDocumentQuery(collection.DocumentsLink, "SELECT * FROM c IN Families.children WHERE c.gender='male'");
        var child = query.AsEnumerable().FirstOrDefault();

        Console.WriteLine("The Andersens have a son named {0} in grade {1} ", child.firstName, child.grade);

        //cleanup test database
        await client.DeleteDatabaseAsync(database.SelfLink);
    }

As you can see above – the .NET API for DocumentDB fully supports the .NET async pattern, which makes it ideal for use with applications you want to scale well.
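To make the two queries above concrete, the following plain JavaScript sketch reproduces their results against in-memory copies of the two documents, trimmed to the fields the queries touch. It does not call DocumentDB; the helper names `selectById` and `selectChildren` are invented for illustration. The second helper mimics how `FROM c IN Families.children` iterates over every child of every family rather than over the families themselves.

```javascript
var families = [
  { id: "AndersenFamily",
    children: [{ firstName: "John", gender: "male", grade: 7 }],
    pets: [{ givenName: "Fluffy" }] },
  { id: "WakefieldFamily",
    children: [
      { givenName: "Jesse", gender: "female", grade: 1 },
      { givenName: "Lisa", gender: "female", grade: 8 }
    ],
    pets: [{ givenName: "Goofy" }, { givenName: "Shadow" }] }
];

// Mirrors: SELECT * FROM Families f WHERE f.id = '<id>'
function selectById(docs, id) {
  return docs.filter(function (f) { return f.id === id; });
}

// Mirrors: SELECT * FROM c IN Families.children WHERE c.gender = '<gender>'
// Each result is a child object, not the family that contains it.
function selectChildren(docs, gender) {
  var result = [];
  docs.forEach(function (f) {
    (f.children || []).forEach(function (c) {
      if (c.gender === gender) result.push(c);
    });
  });
  return result;
}

var family = selectById(families, "AndersenFamily")[0];
var petNames = family.pets.map(function (p) { return p.givenName; });
console.log(petNames.join(", ")); // Fluffy

var son = selectChildren(families, "male")[0];
console.log(son.firstName + " is in grade " + son.grade); // John is in grade 7
```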

Server-side JavaScript Stored Procedures

If I want to update multiple documents within a transaction, I can define a JavaScript stored procedure, for example one that swaps pets between families. In this scenario it is important that one family doesn’t end up with all the pets and another with none because something unexpected happened. If an error occurs during the swap, the database must roll back the transaction and leave things in a consistent state. I can do this with the following stored procedure that runs within the DocumentDB service:

    function SwapPets(family1Id, family2Id) {
        var context = getContext();
        var collection = context.getCollection();
        var response = context.getResponse();

        // Look up the first family by id
        collection.queryDocuments(collection.getSelfLink(), 'SELECT * FROM Families f where f.id = "' + family1Id + '"', {},
            function (err, documents, responseOptions) {
                // Throwing aborts the procedure so the transaction is rolled back
                if (err) throw err;
                var family1 = documents[0];

                // Look up the second family by id
                collection.queryDocuments(collection.getSelfLink(), 'SELECT * FROM Families f where f.id = "' + family2Id + '"', {},
                    function (err2, documents2, responseOptions2) {
                        if (err2) throw err2;
                        var family2 = documents2[0];

                        // Swap the pets between the two families
                        var itemSave = family1.pets;
                        family1.pets = family2.pets;
                        family2.pets = itemSave;

                        // Persist both documents; report success only after both replaces complete
                        collection.replaceDocument(family1._self, family1,
                            function (err3, docReplaced) {
                                if (err3) throw err3;
                                collection.replaceDocument(family2._self, family2, {},
                                    function (err4, docReplaced2) {
                                        if (err4) throw err4;
                                        response.setBody(true);
                                    });
                            });
                    });
            });
    }

If an exception is thrown in the JavaScript function (for instance, because of a concurrency violation when updating a record), the transaction is rolled back and the system is returned to the state it was in before the function began.
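This all-or-nothing behavior can be illustrated locally. The sketch below is not DocumentDB code and says nothing about how the service implements transactions: `runInTransaction` is a hypothetical helper that snapshots an in-memory store and restores it if the callback throws, which is the effect described above.

```javascript
// Hypothetical helper: snapshot the store, restore it if fn throws.
function runInTransaction(store, fn) {
  var snapshot = JSON.parse(JSON.stringify(store.docs));
  try {
    fn(store.docs);
    return true;
  } catch (e) {
    store.docs = snapshot; // roll back to the state before fn ran
    return false;
  }
}

// Swap pets between two families; optionally fail after the first write.
function swapPets(docs, id1, id2, failHalfway) {
  var f1 = docs[id1], f2 = docs[id2];
  if (!f1 || !f2) throw new Error("family not found");
  var saved = f1.pets;
  f1.pets = f2.pets;
  if (failHalfway) throw new Error("simulated concurrency violation");
  f2.pets = saved;
}

var store = { docs: {
  AndersenFamily: { pets: [{ givenName: "Fluffy" }] },
  WakefieldFamily: { pets: [{ givenName: "Goofy" }, { givenName: "Shadow" }] }
} };

// First attempt fails halfway: nothing should have changed afterwards.
var firstAttempt = runInTransaction(store, function (docs) {
  swapPets(docs, "AndersenFamily", "WakefieldFamily", true);
});
var petsAfterFailure = store.docs.AndersenFamily.pets.length;
console.log(firstAttempt, petsAfterFailure); // false 1

// Second attempt succeeds: the pets are swapped.
var secondAttempt = runInTransaction(store, function (docs) {
  swapPets(docs, "AndersenFamily", "WakefieldFamily", false);
});
console.log(secondAttempt, store.docs.AndersenFamily.pets.length); // true 2
```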

It’s easy to register the stored procedure in code like below (for example: in a deployment script or app startup code):

    //register a stored procedure
    StoredProcedure storedProcedure = new StoredProcedure
    {
        Id = "SwapPets",
        Body = File.ReadAllText(@".\JS\SwapPets.js")
    };

    storedProcedure = await client.CreateStoredProcedureAsync(collection.SelfLink, storedProcedure);

And just as easy to execute the stored procedure from within your application:

    //execute stored procedure passing in the ids of the two family documents involved in the pet swap
    dynamic result = await client.ExecuteStoredProcedureAsync<dynamic>(storedProcedure.SelfLink, "AndersenFamily", "WakefieldFamily");

If we checked the pets now linked to the Andersen family, we’d see they have been swapped.

Learning More

It’s really easy to get started with DocumentDB and create a simple working application in a couple of minutes. The above was but one simple example of how to start using it. Because DocumentDB is schema-less you can use it with literally any JSON document. Because it performs automatic indexing on every JSON document stored within it, you get screaming performance when querying those JSON documents later. Because it scales linearly with consistent performance, it is ideal for applications you think might get large.

You can learn more about DocumentDB from the new DocumentDB development center.

Search: Announcing preview of new Search as a Service for Azure

I’m excited to announce the preview of our new Azure Search service. Azure Search makes it easy for developers to add great search experiences to any web or mobile application.

Azure Search provides developers with all of the features needed to build out their search experience without having to deal with the typical complexities that come with managing, tuning and scaling a real-world search service. It is delivered as a fully managed service with an enterprise grade SLA. We also are releasing a Free tier of the service today that enables you to use it with small-scale solutions on Azure at no cost.

Provisioning a Search Service

To get started, let’s create a new search service. In the Azure Preview Portal (http://portal.azure.com), navigate to the Azure Gallery, choose the Data, storage, cache + backup category, and locate the Azure Search gallery item.

Select the “Search” service icon and choose Create to create an instance of the service.

You can choose from two Pricing Tier options: Standard, which provides dedicated capacity for your search service, and Free, which allows every Azure subscription to get a free small search service in a shared environment.

The standard tier can be easily scaled up or down and provides dedicated capacity guarantees to ensure that search performance is predictable for your application. It also supports the ability to index 10s of millions of documents with lots of indexes.

The free tier is limited to 10,000 documents and up to 3 indexes, and has no dedicated capacity guarantees. However, it is totally free and provides a great way to learn and experiment with all of the features of Azure Search.

Managing your Azure Search service

After provisioning your Search service, you will land in the Search blade within the portal - which allows you to manage the service, view usage data and tune the performance of the service:

I can click on the Scale tile above to bring up the details of the number of resources allocated to my search service. If I had created a Standard search service, I could use this to increase the number of replicas allocated to my service to support more searches per second (or to provide higher availability) and the number of partitions to give me support for higher numbers of documents within my search service.

Creating a Search Index

Now that the search service is created, I need to create a search index that will hold the documents (data) that will be searched. To get started, I need two pieces of information from the Azure Portal: the service URL to access my Azure Search service (accessed via the Properties tile) and the Admin Key to authenticate against the service (accessed via the Keys tile).

Using this search service URL and admin key, I can start using the search service APIs to create an index and later upload data and issue search requests. I will be sending HTTP requests against the API using that key, so I’ll set up a .NET HttpClient object to do this as follows:

    HttpClient client = new HttpClient();
    client.DefaultRequestHeaders.Add("api-key", "19F1BACDCD154F4D3918504CBF24CA1F");

I’ll start by creating the search index. In this case I want an index I can use to search for contacts in my dataset, so I want searchable fields for their names and tags; I also want to track the last contact date (so I can filter or sort on that later on) and their address as a lat/long location so I can use it in filters as well. To make things easy I will be using JSON.NET (to do this, add the NuGet package to your VS project) to serialize objects to JSON.

    var index = new
    {
        name = "contacts",
        fields = new[]
        {
            new { name = "id", type = "Edm.String", key = true },
            new { name = "fullname", type = "Edm.String", key = false },
            new { name = "tags", type = "Collection(Edm.String)", key = false },
            new { name = "lastcontacted", type = "Edm.DateTimeOffset", key = false },
            new { name = "worklocation", type = "Edm.GeographyPoint", key = false },
        }
    };

    var response = client.PostAsync("https://scottgu-dev.search.windows.net/indexes/?api-version=2014-07-31-Preview",
        new StringContent(JsonConvert.SerializeObject(index), Encoding.UTF8, "application/json")).Result;

    response.EnsureSuccessStatusCode();

You can run this code as part of your deployment code or as part of application initialization.
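Because the API is plain HTTP, the same request can be assembled from any language. The JavaScript sketch below only builds the request (URL, headers and JSON body) and does not send it; `buildCreateIndexRequest` is a hypothetical helper, and the service name and key are placeholders. The URL shape, the `api-key` header and the `api-version` value are taken from the C# example above.

```javascript
// Hypothetical helper: describes the create-index request without sending it.
function buildCreateIndexRequest(serviceName, adminKey, index) {
  return {
    method: "POST",
    url: "https://" + serviceName + ".search.windows.net/indexes/?api-version=2014-07-31-Preview",
    headers: { "api-key": adminKey, "Content-Type": "application/json" },
    body: JSON.stringify(index)
  };
}

// Same index definition as the C# anonymous object.
var contactsIndex = {
  name: "contacts",
  fields: [
    { name: "id", type: "Edm.String", key: true },
    { name: "fullname", type: "Edm.String", key: false },
    { name: "tags", type: "Collection(Edm.String)", key: false },
    { name: "lastcontacted", type: "Edm.DateTimeOffset", key: false },
    { name: "worklocation", type: "Edm.GeographyPoint", key: false }
  ]
};

// "my-service" and "<admin-key>" are placeholders for your own values.
var request = buildCreateIndexRequest("my-service", "<admin-key>", contactsIndex);
console.log(request.method + " " + request.url);
```

Keeping the request construction separate from the HTTP call makes it easy to inspect or log the payload before handing it to whichever HTTP client your platform provides.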

Populating a Search Index

Azure Search uses a push API for indexing data. You can call this API with batches of up to 1000 documents to be indexed at a time. Since it’s your code that pushes data into the index, the original data may be anywhere: in a SQL Database in Azure, a DocumentDB database, blob/table storage, etc. You can even populate it with data stored on-premises or in a non-Azure cloud provider.
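When the source holds more than 1000 documents, the indexing code has to split them into batches before pushing. A minimal sketch of that chunking step follows; `toBatches` is a hypothetical helper and the documents are generated placeholders, so only the 1000-document limit comes from the service.

```javascript
// Split an array of documents into batches no larger than maxBatchSize.
function toBatches(documents, maxBatchSize) {
  if (!(maxBatchSize > 0)) throw new Error("maxBatchSize must be positive");
  var batches = [];
  for (var i = 0; i < documents.length; i += maxBatchSize) {
    batches.push(documents.slice(i, i + maxBatchSize));
  }
  return batches;
}

// Placeholder documents standing in for rows pulled from a data source.
var sourceDocs = [];
for (var n = 0; n < 2500; n++) {
  sourceDocs.push({ id: String(n) });
}

var batches = toBatches(sourceDocs, 1000);
var sizes = batches.map(function (b) { return b.length; });
console.log(sizes.join(", ")); // 1000, 1000, 500
```

Each resulting batch would then be sent as one indexing request.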

Note that indexing is rarely a one-time operation. You will probably have an initial set of data to load from your data source, but then you will want to push new documents as well as update and delete existing ones. If you use Azure Websites, this is a natural scenario for WebJobs that can run your indexing code regularly in the background.

Regardless of where you host it, the code to index data needs to pull data from the source and push it into Azure Search. In the example below I’m just making up data, but you can see how I could be using the result of a SQL or LINQ query or anything that produces a set of objects that match the index fields we identified above.

var batch = new