Some generous soul has re-traced a bunch of the Apple emoji to provide much higher res versions of them for use in presentations.

Rolandt

Shared posts

4 lessons I learned (the hard way) about health, overwork, and life as an academic

I almost died this summer of 2022. Four times.

This summer, I learned (the hard way) 4 lessons about health, overwork, and life as an academic.

(1) “If you don’t make time for your wellness, you’ll be forced to make time for your sickness” — Joyce Sunada

I was, in fact, forced to take time off because of COVID and its sequelae. This week I sat down with my physician and we did a “post mortem” of my illness. He said: “you have overworked for a very long time. You push your physical limits all the time. You are very energetic, active and passionate about your work, but you keep pushing yourself. Not healthy”.

This was really embarrassing to hear from my treating doctor and a wake up call: I keep advocating for NOT overworking, and yet, in some twisted way, I kept doing it because it didn’t feel (yet) like I was exhausted.

Until it did.

In May, I went to Germany and the US. In normal times, and under normal circumstances, these two trips would have been a piece of cake because I am/was used to travel All The Time. However, this year has been particularly busy with teaching, administrative duties, and course preparation, reading theses, providing feedback. SUPER BUSY.

So (we all know where this is going…) I did not pay attention to my tiredness (in May 2022) because I attributed it to jet lag from going to Germany. But when I went to Washington DC, I was already tired, and kept pushing myself. The last day, two dear friends of mine said: “you look TIRED. You need to take care of yourself. We need you healthy.” (Thanks, Leila and Sameer).

When I returned from Washington DC, my Mom got COVID, so I had to take care of her. She had a very mild case, but I think being stressed about her health was the straw that broke the camel’s back. I then got COVID, and my body was already very weakened from travel, stress and overwork.

We all know how this went. I spent all of June sick (and taking care of a COVID patient and then getting it myself!), July so sick with COVID sequelae that I almost died 3-4 times (depends on how you count), and August in slow-but-steady recovery.

The second lesson is, therefore:

(2) Pay attention to signs of potential burnout.

I had felt burnt out before and could recognise the signs: de-motivated, didn’t want to read academic articles, exhausted with no apparent reason. But again, the travel hid all the signs. I had them all, I just didn’t see them. This is particularly important in academia: we attribute burnout to other factors: “maybe I’m just tired this week”, or “it will get better once I get all these 457 things out of the way and I can clear my deck”. Well, I got news for you: the deck is never cleared.

I’ve written on my blog several times about the importance of not overwork, but for some reason, when it came down to it, I did not recognise the signs that clearly showed anybody except me (because I was too blind to see them) that I was entirely, completely and absolutely burnt out.

(3) Seek support (and this includes emotional support).

In desperation about my lack of health improvement, I tweeted “I’ve lost all interest in academia and all I care about is being healthy again”. I received HUNDREDS of responses sending love and wishes for good health.

The unthinkable has happened: for 9 weeks, I’ve been sick. For 5 of those I’ve struggled to remain alive. And I’ve lost all interest in doing any academic work. My only interest right now is staying alive and improving my health.

— Dr Raul Pacheco-Vega (@raulpacheco) August 5, 2022

The bird app can be hell sometimes, but it is definitely a truth that my Twitter community kept me afloat (my Facebook friends also deserve a very big Thank You because they kept checking in on me, daily). I did not realize I could have so much support from the Twitter hellsite, and it really helped me improve. I received so much emotional support that I began feeling extremely hopeful that I would be, eventuallly, able to recover fully (and I am currently in the process of doing just that).

My physician has prohibited me from returning to my usual hyper-energetic self. He said, deadpan: “I want you to return to normal people’s normal, not YOUR normal — this means dialing it down on the workload and intensity”. As a neoinstitutional theorist, I follow rules to a T. And I have no plans of dying any time soon, so I am paying close attention to my body and how I well am feeling on an hourly, daily and weekly basis. If I need to take a rest, I take it, work life be damned.

But I did not get well UNTIL I went to see a pulmonologist.

So the fourth lesson is:

(4) Be your own advocate for your health.

I went to a general MD, then the otorrhinolaryngologist, and it wasn’t until I went to the pulmonologist that we figured out what was wrong and how to fix it. COVID is an extraordinarily strange illness, and it’s so unpredictable nobody really knows the potential outcomes. I am lucky to be alive. Given that I had an immune system weakened from overwork and exhaustion, it was pure sheer luck that I made it alive and in one piece.

What really brought home the severity of my illness and the importance of taking care of myself was this utterance by my pulmonologist: “you survived this time – you probably won’t get another chance – your body won’t withstand another crisis like this. TAKE CARE!”.

YIKES.

In closing: Academic friends: look at yourselves in my mirror. Take care of yourselves *before* you are forced to take time off to take do exactly that: take care of yourselves.

I HAVE, finally, learned my lesson.

The Public Packet Infrastructure

Stable Diffusion is a really big deal

If you haven't been paying attention to what's going on with Stable Diffusion, you really should be.

Stable Diffusion is a new "text-to-image diffusion model" that was released to the public by Stability.ai six days ago, on August 22nd.

It's similar to models like Open AI's DALL-E, but with one crucial difference: they released the whole thing.

You can try it out online at beta.dreamstudio.ai (currently for free). Type in a text prompt and the model will generate an image.

You can download and run the model on your own computer (if you have a powerful enough graphics card). Here's an FAQ on how to do that.

You can use it for commercial and non-commercial purposes, under the terms of the Creative ML OpenRAIL-M license - which lists some usage restrictions that include avoiding using it to break applicable laws, generate false information, discriminate against individuals or provide medical advice.

In just a few days, there has been an explosion of innovation around it. The things people are building are absolutely astonishing.

I've been tracking the r/StableDiffusion subreddit and following Stability.ai founder Emad Mostaque on Twitter.

img2img

Generating images from text is one thing, but generating images from other images is a whole new ballgame.

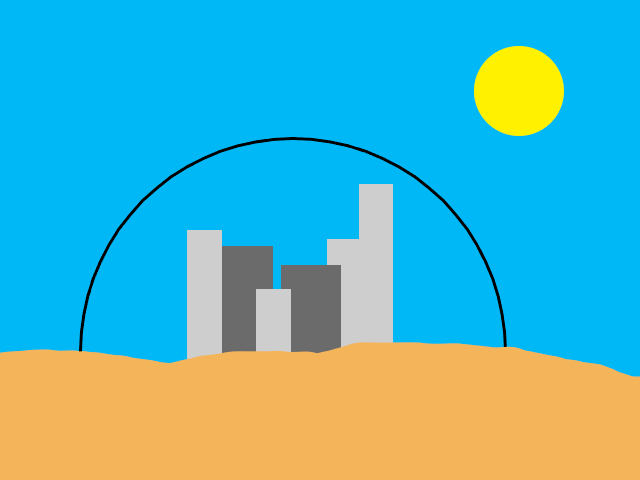

My favourite example so far comes from Reddit user argaman123. They created this image:

And added this prompt (or "something along those lines"):

A distant futuristic city full of tall buildings inside a huge transparent glass dome, In the middle of a barren desert full of large dunes, Sun rays, Artstation, Dark sky full of stars with a shiny sun, Massive scale, Fog, Highly detailed, Cinematic, Colorful

The model produced the following two images:

These are amazing. In my previous experiments with DALL-E I've tried to recreate photographs I have taken, but getting the exact composition I wanted has always proved impossible using just text. With this new capability I feel like I could get the AI to do pretty much exactly what I have in my mind.

Imagine having an on-demand concept artist that can generate anything you can imagine, and can iterate with you towards your ideal result. For free (or at least for very-cheap).

You can run this today on your own computer, if you can figure out how to set it up. You can try it in your browser using Replicate, or Hugging Face. This capability is apparently coming to the DreamStudio interface next week.

There's so much more going on.

stable-diffusion-webui is an open source UI you can run on your own machine providing a powerful interface to the model. Here's a Twitter thread showing what it can do.

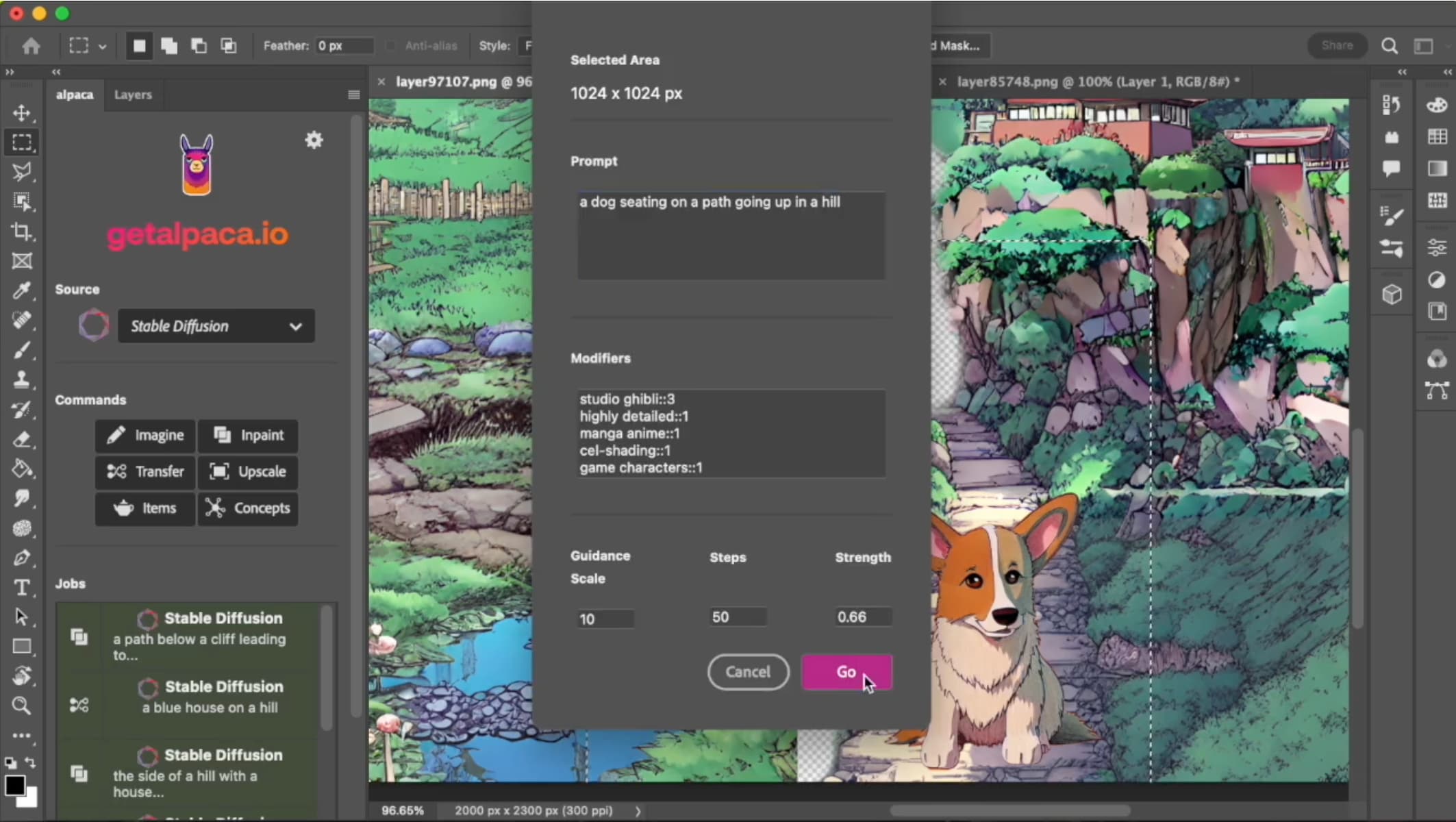

Reddit user alpacaAI shared a video demo of a Photoshop plugin they are developing which has to be seen to be believed. They have a registration form up on getalpaca.io for people who want to try it out once it's ready.

Reddit user Hoppss ran a 2D animated clip from Disney's Aladdin through img2img frame-by frame, using the following parameters:

--prompt "3D render" --strength 0.15 --seed 82345912 --n_samples 1 --ddim_steps 100 --n_iter 1 --scale 30.0 --skip_grid

The result was a 3D animated video. Not a great quality one, but pretty stunning for a shell script and a two word prompt!

The best description I've seen so far of an iterative process to build up an image using Stable Diffusion comes from Andy Salerno: 4.2 Gigabytes, or: How to Draw Anything.

Ben Firshman has published detailed instructions on how to Run Stable Diffusion on your M1 Mac’s GPU.

And there's so much more to come

All of this happened in just six days since the model release. Emad Mostaque on Twitter:

We use as much compute as stable diffusion used every 36 hours for our upcoming open source models

This made me think of Google's Parti paper, which included a demonstration that showed that once the model was trained to 200bn parameters it could generate images with correctly spelled text!

Ethics: will you be an AI vegan?

I'm finding the ethics of all of this extremely difficult.

Stable Diffusion has been trained on millions of copyrighted images scraped from the web.

The Stable Diffusion v1 Model Card has the full details, but the short version is that it uses LAION-5B (5.85 billion image-text pairs) and its laion-aesthetics v2 5+ subset (which I think is ~600M pairs filtered for aesthetics). These images were scraped from the web.

I'm not qualified to speak to the legality of this. I'm personally more concerned with the morality.

The final model is I believe around 4.2GB of data - a binary blob of floating point numbers. The fact that it can compress such an enormous quantity of visual information into such a small space is itself a fascinating detail.

As such, each image in the training set contributes only a tiny amount of information - a few tweaks to some numeric weights spread across the entire network.

But... the people who created these images did not give their consent. And the model can be seen as a direct threat to their livelihoods. No-one expected creative AIs to come for the artist jobs first, but here we are!

I'm still thinking through this, and I'm eager to consume more commentary about it. But my current mental model is to think about this in terms of veganism, as an analogy for people making their own personal ethical decisions.

I know many vegans. They have access to the same information as I do about the treatment of animals, and they have made informed decisions about their lifestyle, which I fully respect.

I myself remain a meat-eater.

There will be many people who will decide that the AI models trained on copyrighted images are incompatible with their values. I understand and respect that decision.

But when I look at that img2img example of the futuristic city in the dome, I can't resist imagining what I could do with that capability.

If someone were to create a vegan model, trained entirely on out-of-copyright images, I would be delighted to promote it and try it out. If its results were good enough, I might even switch to it entirely.

Understanding the training data

Update: 30th August 2022. Andy Baio and I worked together on a deep dive into the training data behind Stable Diffusion. Andy wrote up some of our findings in Exploring 12 Million of the 2.3 Billion Images Used to Train Stable Diffusion’s Image Generator.

Indistinguishable from magic

Just a few months ago, if I'd seen someone on a fictional TV show using an interface like that Photoshop plugin I'd have grumbled about how that was a step too far even by the standards of American network TV dramas.

Science fiction is real now. Machine learning generative models are here, and the rate with which they are improving is unreal. It's worth paying real attention to what they can do and how they are developing.

I'm tweeting about this stuff a lot these days. Follow @simonw on Twitter for more.

Join the World Photography Day 2022 photo contest!

Update: World Photography Day Contest dates extended! You’re right on time—Submissions close September 19.

World Photography Day 2022 is August 19, and for the second year in a row, we’re celebrating with a multi-category photo contest! This year, besides some fantastic prizes, we will have a new, special category dedicated to virtual photography. Are your photos ready for the spotlight?

The categories for this year’s World Photography Day contest are:

Nature: Give us your view of the natural world, whether that’s aerial shots, landscapes, underwater, astrophotography, weather, or anything else based in nature.

People: Get ready for your close-up with photos of fashion, portrait, wedding, family, travel, street, documentary, and more.

Animals: Put your four-legged friends (or six-legged, or winged!) in the spotlight, with a category focused on pets, wildlife, and insects.

Objects and structures: Show us your unique vision with this category made for architecture, abstract, still life, and other objects and gadgets of the photography world.

Virtual: Bring digital worlds to life with our virtual photography category, encompassing digital avatars, in-game photography, screen captures, and more.

How to enter?

To join the fun, add up to five (5) photos to the World Photography Day Contest 2022 group photo pool and tag them with one of the following category tags: WPD22Nature, WPD22People, WPD22Animals, WPD22Objects, or WPD22Virtual—depending on which category or categories you are entering. One category tag per entry, please! The contest will remain open until September 19, 2022, and winners will be announced on or about September 22, 2022.

More questions? Find answers in the contest FAQs here.

What can I win?

Five winners (one from each category) will receive:

A camera strap of your choice from our partners at Peak Design

A free 1-Year Flickr Pro subscription

An 11”x14” Flickr Metal Print of your prize-winning photo

We’re looking forward to seeing your photos in one or all of these contest categories, so get over to the World Photography Day group to join in and read the full contest details. Happy shooting!

The Language Game

It is well worth the time for any educator to become familiar with Wittgenstein's concept of the 'language game' - that is, the idea that language is not a set of rules, meanings and syntax but rather a "community-wide game of charades, where each new game builds on those that have gone before." This is important because "it is constantly re-contrived generation after generation." Indeed, "we talk without knowing the rules of our language just as we play tennis without knowing the laws of physics, or sing without knowing music theory. In this very real sense, we speak, and do so skillfully and effectively, without knowing our language at all."

Web: [Direct Link] [This Post]Democracy dies behind a paywall

It doesn't matter what the story is, I'm just glad someone besides me is using this phrase, because it's exactly right. Poynter is very limited in its advocacy: "Paywalls bolster news organizations' bottom lines, but leave Americans in the dark. As a public service, let everyone read election stories for free." It should be more than just election stories, and it should be for everyone. There's a very close link to being able to access knowledge and being able to govern ourselves, as the developers of the first libraries knew very well.

Web: [Direct Link] [This Post]Why online learning must remain part of the education toolkit

Now that our institutions are rebounding back to 'the way things were' after the pandemic, we're seeing the first glimpses of people saying "no, no, I don't want to go back" in the media (though tbh the piece feels like a paid placement). This article (with a wonderfully puzzling illustration of online learning in which almost everything is completely analog) is an indicator of that. "We have a generation of young people who have grown up on personal devices that allow them to customise anything important to them: playlists, blogs, chats. They successfully communicate with friends, family and partners, expressing their passions and interests via remote devices. For the most part, they understand how to use those devices effectively for their needs. Why can't it be the same with learning?"

Web: [Direct Link] [This Post]Slow Travel

Suppose you believe, as I do, that we have to slash our carbon emissions drastically and urgently to ameliorate the worst effects of the climate catastrophe that is beginning to engulf us. And that you believe, as I do, that travel is a good thing for individuals and societies, and that in principle every human should enjoy the opportunity to visit every neighborhood around our fair planet. Can these beliefs be reconciled?

I used to joke, when people looked askance at my refusal to leave Vancouver, that it was the center of the world: “Ten hours to Heathrow, ten hours to Narita!” I was right, but…

Earth can’t afford for everyone to routinely hop on a jet-fueled aluminium cylinder and fly ten hours at a time.

Narita and Heathrow both suck. Arriving at either of them after one of those long flights is usually pretty awful. I speak from painful experience.

The unpleasantness of Narita and Heathrow is not entirely their own fault. After you’ve flown ten hours east or west, when the plane comes down you’ve descended into a nasty fog of jet-lag. Words cannot express my loathing for this condition — tossing and turning in an unfamiliar hotel bed during the wee hours, fighting off nap attacks in the middle of business meetings, feeling paralyzed after a single glass of wine with dinner.

The lame old anecdote applies here: “Doctor, it hurts when I do this!” “So, stop doing that.” No kidding, and you might help save the planet too.

I have read that recovery from jet-lag takes on the order of one day per timezone crossed. Thus, our current mode of travel, which crosses roughly one time zone per hour while spewing CO2 to poison our children’s futures is simply a bad thing and we should Stop Doing That.

Disclosure: I have been an egregious lifelong travel offender. I’ve enjoyed Damascus and Antibes and São Paolo and Tokyo and Memphis and, well, the list goes on. A world in which those destination options are no longer open to anyone would be a sad place.

What are you suggesting?

That we travel more slowly, which is to say more humanely, and which will enable us to cut down on the greenhouse gas per unit of distance.

Concretely, that for every trip we want to take, we maximize the distance that is covered by train, and minimize those legs that require becoming airborne.

For example

So let’s suppose I want to sample the delights of the Côte d'Azur one more time. Starting, of course, from Vancouver.

The big problem, of course, is the Atlantic ocean. Water travel is unacceptably slow and not notably energy-efficient. So let’s concede that it has to be done by air.

Today, I’d fly to Heathrow (sigh), 7,574km. Then I’d spend unpleasant airport time waiting for my connection to Nice or Cannes or somewhere, another 1000km or so. And I’d arrive feeling like deep-fried shit, wouldn’t really be in the swing of things for another couple of days.

(By the way, I’ve done this, back in the Nineties. There were two immigration lines in Cannes and when we got to the front of ours I noticed that each was being served by a young woman and both of them were flushing and giggling. I glanced left and there was Andre Agassi arriving in France to play in the Monaco open.)

Let’s do this more humanely. By consulting Google Earth I observe that the minimum as-the-plane-flies distance between the North American mainland and Europe is probably Portland, Maine to Brest, France. So our trip becomes three-legged:

Train: Vancouver to Portland, ME: 4,023km.

Fly: Portland to Brest: 4,944km.

Train: Brest to Cannes: 1,034k.

How long is this going to take? Let’s assume that all train travel averages 300km/h. Don’t tell me that’s crazy, I traveled 2000km from Hong Kong to Beijing that way in 2019, averaging 306km/h. It can and should be done.

Modern aircraft cruise at somewhere around 900km/h. Thus:

Vancouver to Portland: 13.4hrs.

Portland to Brest: 5.5hrs

Brest to Cannes: 3.4hrs

Realistically, I probably would have to go to Seattle to catch this imagined future train. But anyhow, the idea is that you wouldn’t do all three legs as a single 22.3hr marathon. You’d start the first leg early and get to Portland late, and stay overnight in a hotel with real beds and real breakfasts. Presumably since Portland is now a major rail hub the locals would have recognized a business opportunity and made it attractive to spend a day there taking the sights in, eating lobster, catching up on minor jetlag, and spending another night before you took off for Brest.

I’ve been to Brest, but only the train station on a trip to the countryside of Brittany. It’s well-worth a day or two’s visit, poking around taking in the standing stones and ciders and country cooking. Why not do that while you recover from the trans-Atlantic jet-lag? Remember that a 5000km leg will hurt less than the currently-typical 7500km jolt.

And then that last leg is a doddle, although France being France you probably have to go through Paris to get from Brest to the Riviera. Why not stop? Yes, you’re a tourist so Parisians hate you, but it’s still a cool place. And I guarantee that with four nights off, you’ll show up at the edge of the Mediterranean in a much better condition to enjoy it.

But wait, there’s more!

The thing that bothers me about this trip is still that trans-Atlantic leg. Yes, we’ve chopped a third off the carbon cost by shortening the route, but it’s still damn expensive, measured in CO2. Is there an alternative?

Maybe so. The airships being manufactured by Luftschiffbau Zeppelin cruise at 115km/h. So the leg from Portland to Brest would take about 43 hours. And to be realistic, the Zeppelin products do not have the scale or range to do this economically.

But let’s throw this challenge in the face of the world’s aircraft designers: Build us a way to travel 5000km or so that spews less carbon and provides a reasonably pleasant experience. We don’t require the 900km/h velocity of the current product, but we’d like it to be faster than 115km/h.

The profession built the current nasty-experience climate-destroying product line because they could, and because we decided incorrectly that we needed to travel one time-zone per hour. Let’s just drop that last constraint, and rejoice in doing so.

The real cost

It’s time, of course. Instead of waking up in Vancouver and going to bed in the South of France, it’s taken us the best part of a week to get there. That’s awful!

No it isn’t, it’s civilized. We’ve seen interesting places, we’ve eaten good meals, we’ve arrived in a decent condition to enjoy ourselves, and we’ve avoided pissing on our children’s future.

Of course, I’ve just ruined everyone’s vacation plans because they don’t get enough time off work for this kind of extravaganza. Well, that’s a bug too. As is the notion that it’s ever a good idea to travel at one time-zone per hour.

On Faith

What is “faith”, anyhow? The answers can become abstract, but concretely it’s what gets Salman Rushdie stabbed in the face. Oh wait, is that statement anti-Islamic? Guilty as charged; but then I’m also anti-Christian, anti-Hindu, anti-Buddhist, and, well, there are too many organized religions to list them all. Herewith too many words on Faith and Truth, albeit with pretty pictures. I do find positive things to say, but at the end of the day, well, no.

I’m not a moderate on this issue. I don’t believe, in general, that any supernatural event has ever occurred or, in particular, that any prayer has ever been answered. In my lifetime the outputs of organized religion have been mostly war, sexual abuse, and political support for venal rightist hypocrites.

Does it even matter?

Maybe religion has become irrelevant to your life, a common experience these days. It’s worth studying anyhow, as an example of the human propensity to believe things that are not just untrue, but wildly unlikely. Things that entirely lack supporting evidence. Things that make you shake your head.

I may not partake in faith but I’m a keen amateur student of religions. Perhaps it comes of having grown up in Lebanon, where a whole lot of ‘em run up against each other with a mostly disastrous impact on the civic fabric. I’ve visited Jerusalem and Damascus and Chartres and Stonehenge and Avebury and Carnac and Kamakura and Izumo and Beijing’s Temple of Heaven, and some of the world’s great forests.

Faith is real

I think that in my youth I maybe knew a saint. No, really; Father Leonard Guay, a Jesuit architect and astronomer. He built a university in Baghdad which was taken over by the Ba’ath, so he built another in Aleppo; the same thing happened. He offered no regrets. An American Midwesterner, he was in a combination monastery, winery, and observatory in rural Lebanon when we knew him. He loved coming over to talk English, eat corn-on-the-cob, and swap crossword-puzzle books; he took regular puzzles and did them diagramless, with just the clues. He loved kids, knew a million utterly lame jokes, and enjoyed telling us about the current research in the observatory, apologizing for using pagan language, as in the names of constellations and Zodiac signs. His faith glowed within him and around him.

This is the challenge to my personal aversion to the supernatural. I observe empirically that faith exists and that it’s real and that it appears to be good for some people. But my mind recoils at all the crazily baroque apparatus that is inextricably attached to every organized religion. I believe in belief and have no faith in faith.

But I went to Sunday School and my Dad even taught it in his youth. Once, when I boarded for a semester with family friends, I attended a Southern Baptist congregation which even at the age of twelve struck me as pretty looney-tunes. In my own mainstream-Protestant scientist family, the religious pressure was more or less zero.

Jodo Buddhist Mission in Lahaina, Maui

What I’m not buying

So I understand pretty well what it is. I’m not buying a deity who hardens Pharaoh’s heart, provoking plague and child slaughter across a whole population. Nor, in general, gods that purport to exhibit gender. Nor any who thrive on praise and consider it essential from their devotees. Hindutva smells to me like old-fashioned ethnofascism with Pujas. I scoff at a putative Savior who lectures that lust is morally equivalent to adultery. I’m not down with Wahhabi support for autocratic murdering princes nor with Crusading nor with throwing settler garbage down into lower Hebron. And the phrase “blasphemy law” makes me shiver with anger.

Faith and humans

But religion comes naturally to Homo sapiens. Perhaps the first big reason is that for much of humanity’s passage across time we were manifestly not in control of our destiny, just ephemeral sparks of life blown about by whims of climate and disease and geography and the population dynamics of our prey and predators, those sparks often snuffed out with no warning. It would have been comforting to think that Someone was in control. Maybe the improved ability to steer one’s own life, enjoyed today by those in developed societies and with an education, is partly responsible for the fading of faith?

Second, worship is a human built-in. We are small after all, regularly confronted by things much greater than ourselves: Our starfield, away from light pollution. The meeting of the Eastern Pacific with the Western Americas. Canyons and waterfalls and great ancient trees.

Candles in Chartres cathedral.

Of course, we build some of the things we worship. I know of two people who say they acquired faith following on a visit to Chartres, and I believe them. If you can enter that great stone poem without your sense of worship activating, I think you’re weird. The first time I walked in, it felt like a giant hand round my chest, interfering with my breathing.

But I dunno, I’ve had the same feeling at concerts by Laurie Anderson and Slava Rostropovich and The Clash and a host of other artists. Is my gratitude for being alive at the same time as these exceptional people “worship”?

Trees

My family has the great good fortune to own a cabin on a small island in Howe Sound near Vancouver, where I regularly experience worship of that Pacific perimeter, and especially the island’s great evergreens and bigleaf maples that tower into the filtered forest light, never still, wind never entirely absent in the greenery. I keep telling my kids they should shut up and listen to the damn trees and they’ll learn things and I’m right but they don’t, usually.

Feeling reverent around trees has the advantage that they’re not avatars of anything that is said to be twitchily concerned about how and with whom you deploy your genitals, or whose intercedents will require your cash to support their lifestyles.

Positives

I think it probable that religion will continue to decline, to the extent that its concerns are absent from public discourse. In my own civic landscape it already has.

That’s not entirely a good thing; you can admire aspects of religion without actually believing it. One of them is ritual, prescribed and choreographed public actions. It’s a thing that a high proportion of humans once experienced on a weekly basis; but no longer. I think we miss it. Military services retain rituals, as do the centers of government – consider America’s State of the Union address, or the opening of various nations’ parliaments. Weddings and funerals retain a ritual dimension but are infrequent. While I have no patience for Catholic dogma I often tune in their midnight Christmas mass for its own sake – the singing and chanting, the inner space of St Peter’s basilica, and priestly processions carrying the Host. I love the opening and closing of each Olympic games.

Sacred texts

Every faith has them. While I decline to honor their claims, it’s good to believe that written-down words are important, because they are. The use of language defines what’s special about our species as much as anything else does and I believe the single greatest cultural shift in humanity’s story came when it could be written, and lessons could outlast the storage provided by a human skull.

Shinto shrine at Izumo

The worlds of sci-fi, fantasy, and computer games are full of powerful and magical texts; obviously this notion speaks to many people. Religious texts are also historically important because they were replicated a lot and are thus well-represented among the fragments of language that have survived the ravages of centuries. Some of the books and the verses and words are very beautiful.

In fact, the Christian Bible, particularly in its seventeenth-century “King James” embodiment, has been at the center of the cultural experience of my own ethnic group to the extent that I think it probably deserves routine study at some point in the standard curriculum. A whole lot of our ancestral history and much wonderful literature and art is going to elude understanding without at least a basic grasp of its scriptural embedding.

If you want to get inside the head of someone who really held close to those values, go listen to Hildegard von Bingen’s O vis aeternitatis (“The Power of Eternity), probably written around 1150. It’s wonderful music! The world Hildegard inhabited, of faith made real in cloisters and their communities, is as remote from mine as that lived by the characters in the sci-fi I enjoy reading.

At the Abbey of Montserrat, near Barcelona

The flavor of truth

Of course, since sacred texts are said to express eternal absolutes, they must necessarily be immutable. Which seems boring and just wrong. It is a core value of scientists and engineers and philosophers and reference publishers that truth is contingent and dynamic; always capable of being better-expressed or deepened or falsified. On top of which, the language we use to express truths grows and mutates across the centuries. I’m not holding my breath waiting for Christendom to convene a Third Council of Nicaea and revise the doctrine of the Trinity. Still, we should respect and preserve and study the sacred texts because they are full of lessons about the people who wrote them and believe them.

Exegesis

Around those scriptural mountains are the rolling hills of exegesis; works of commentary and analysis, for example the Hadith and the Talmud. Christian exegesis is unimaginably vast in scale although it lacks a single named center.

I got lost in the exegetical maze during a failed youthful attempt to write a novel about the rise and wreck of the Tower of Babel, when I tried to understand what that crazy story might really be about.

Exegesis is fun to read! Intellectually challenging on its own terms, and if you have any familiarity at all with the Bible, the depth of meaning apparently waiting to be uncovered in the crevasses between adjacent words is astonishing. Next time you’re in a good library, I recommend looking up “Anchor Bible” in the catalog and poking around the stacks where the call-number takes you. If you’re like me your mind will be boggled at the vastness and complexity of the collection.

On this subject, if you ever find yourself in a colloquy of theologians or bibliophiles or antiquarians, even a brief mention of “The Church Fathers” will get you nods and smiles. They constitute the first few waves of Christian theological writing, there were really a lot of them (no Church Mothers, though), and they wrote an incredible number of books, many beautiful in form and content.

At Jing’an Temple in Shanghai

What a certain number of these theologians are trying to do is very similar to the goals of Physics theorists: An explanation of the universe from pure logical principles, showing how it really couldn’t possibly be any way other than the way it is. Christian theologians assert that this must be the Best of All Possible Worlds because what else could God have made?

They want the necessary outcome, via pure logical reasoning, to include an omnipotent omniscient male-gendered Creator and also a Savior, a single instance of God-made-flesh, plus a really hard to understand “Holy Spirit”. Which is to say, they have a heavier lift than physicists do. But to this day, Proofs of the Existence of God remain an amusing sub-sub-domain of theology and exegesis.

[I think you were supposed to be writing about the redeeming features? -Ed.] [Oh, right, thanks. -T.] Finally, it would be unfair to consider religion without acknowledging its leading role in philanthropy across the generations and continents.

Faith why?

None of which means we need to believe what the religiosos claim is true. But why, in the 21st century, do they still believe it? I really don’t have much to add to the two points I made above: The feeling that Someone must be in charge, and our built-in capacity for worship.

Let me offer an incredibly cynical but kind of entertaining take on the subject from Edward Gibbon, in his monumental Decline and Fall of the Roman Empire, six massive volumes dating from the late 1700s. [You didn’t actually buy it and read it, did you? -Ed.] [Sometime around 1984 I joined the “Book of the Month Club” and these great-looking books were the sign-up bonus. I read a lot of it, but got bored around 1000AD in Vol. 5, all that endless Byzantine treachery. -T.]

In a garden across the street from a Catholic school

Gibbon is discussing the rise of Christianity in the Empire, which he argues contributed to its fall, but that’s neither here nor there. He includes a sprawling survey of the religious landscape and, while discussing the Jews, notes that unlike many other faiths of the time, they weren’t prepared to go along and get along, host an occasional sacrifice to one Caesar or another in their temple; they resisted militantly and to the death. So, quoting from Chapter XV, Part I:

But the devout and even scrupulous attachment to the Mosaic religion, so conspicuous among the Jews who lived under the second temple, becomes still more surprising, if it is compared with the stubborn incredulity of their forefathers. When the law was given in thunder from Mount Sinai, when the tides of the ocean and the course of the planets were suspended for the convenience of the Israelites, and when temporal rewards and punishments were the immediate consequences of their piety or disobedience, they perpetually relapsed into rebellion against the visible majesty of their Divine King, placed the idols of the nations in the sanctuary of Jehovah, and imitated every fantastic ceremony that was practised in the tents of the Arabs, or in the cities of Phœnicia.10 As the protection of Heaven was deservedly withdrawn from the ungrateful race, their faith acquired a proportionable degree of vigor and purity. The contemporaries of Moses and Joshua had beheld with careless indifference the most amazing miracles. Under the pressure of every calamity, the belief of those miracles has preserved the Jews of a later period from the universal contagion of idolatry; and in contradiction to every known principle of the human mind, that singular people seems to have yielded a stronger and more ready assent to the traditions of their remote ancestors, than to the evidence of their own senses.11

10 For the enumeration of the Syrian and Arabian deities, it may be observed that Milton has comprised, in one hundred and thirty very beautiful lines, the two large and learned syntagmas which Selden had composed on that abstruse subject.

11 “How long will this people provoke me? And how long will it be ere they believe me, for all the signs which I have shewn among them?” (Numbers xiv 11). It would be easy, but it would be unbecoming, to justify the complaint of the Deity, from the whole tenor of the Mosaic history.

(Gibbons’ footnotes, included just for fun.)

Um, is that anti-Semitic? Maybe… and some other things Gibbon said definitely were. But he was also anti-Muslim and arguably anti-Christian. And his scoffing seems more aimed at theologies than ethnicities. In fact, the only religion to get many kind words was Rome’s indigenous paganism, because of its tolerance.

While his text is loaded with nods to Christianity being The Right Answer because of Its Divine Provenance, those passages glisten with cynicism (see above) and he was frequently attacked as an enemy of the faith. Gibbon is fun to read.

A miracle

Finally, let’s consider what miracles are: Things that happen for which there is no conventional explanation in our physical understanding of the universe. At least one miracle has happened. The universe, including its cosmic background radiation, its galaxy clusters, its black holes, me and my thread of consciousness, you and yours, and the text I’m writing and you’re reading: They all exist. There’s no explanation at hand as to why anything at all should. Miraculous!

However, check out Why Does the World Exist?: An Existential Detective Story, by Jim Holt (Norton, 2012), a serious but entertaining tour through metaphysics and religion looking for an answer to the question in the title. Spoiler alert: While Holt’s best attempts are stimulating, I was still left thinking of the existence of anything and everything as a miracle.

Former Lutheran church,

property soon to be filled with condos.

Future?

You could think of religion as a pathology of society as a whole, consequent on ignorance, fear, and certain built-in features of the human mind. I don’t think it’s going away, although a faith-free world would probably be a kinder, more humane place.

With these words, I may have offended some who partake in faith. I can’t honestly apologize, because an apology is at some level a promise to Stop Doing That.

I really, really just don’t buy it.

China’s possible blockade around Taiwan

It appears China wants to impose a blockade around Taiwan with ships, submarines, and airplanes. The New York Times mapped the possibility and how it could disrupt life in and around the island.

Tags: blockade, China, New York Times, Taiwan

BC’s Mysterious CoVid-19 Deaths Underreporting

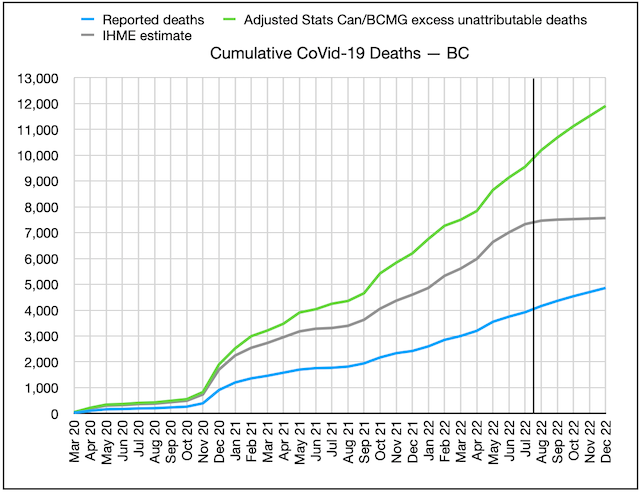

Cumulative CoVid-19 deaths for BC, three sources: BC CDC (blue), BC CoVid-19 Modelling Group (green), U of Washington IHME (grey)

Early in the pandemic, there was some statistical evidence that BC had been slow on the uptake in capturing and reporting CoVid-19 deaths in the province, and had missed 300-400 deaths in the first months of the pandemic. The health officers shrugged it off, and there is of course always some debate about whether the “cause” of a death was CoVid-19, just because the patient happened to have the disease when they died.

Since the provincial health officer, Dr Bonnie Henry, seemed to be providing candid and complete disclosures about the pandemic (she has won several awards, and commendations bordering on adulation from her peers and fans) I was inclined to give her the benefit of the doubt.

I was one of the earliest advocates of using “excess deaths” as a more reliable way of computing the pandemic’s true toll. It can be dicey of course: In the case of BC, the skyrocketing increase of deaths from toxic street drugs has outpaced the reported CoVid-19 death rate, and severely skewed the “excess deaths” number, as did the 2021 “heat dome” that took a minimum of 600 and perhaps double that number of lives, largely among the same demographic dying of CoVid-19.

And there are people who would have died if there’d been no pandemic (auto accident and industrial accident victims in 2020 and 2021 for example were down sharply). And there were people who died because they delayed surgery and other health interventions because they were afraid of getting the disease or because the hospitals were full.

There has been a fair bit of evidence that, on balance, the excess deaths number is probably a much better surrogate for actual CoVid-19 deaths than the reported deaths number, especially in jurisdictions with poor health reporting or which deliberately suppressed CoVid-19 numbers for political reasons. Over a large enough population, any significant deviation from past year’s average total death tolls almost certainly has a reason, and CoVid-19 is the obvious one.

Sure enough, when you look at global excess deaths data, the patterns and numbers, based on each country’s political, economic, and health care system, start to look not only consistent but predictable. These excess death numbers also align much better with seroprevalence and other data on the actual proportion of the country that’s been infected and inoculated.

It’s when you get down to the sub-national level that these data start to get a bit mind-boggling. In Canada, for instance, excess deaths in the three westernmost provinces have been on average twice the number of reported CoVid-19 deaths, while in Québec and some Atlantic provinces excess deaths have been less than reported CoVid-19 numbers. Québec has a very different reporting system, but the other provinces purport to follow consistent reporting standards.

So are the three westernmost provinces radically underreporting actual CoVid-19 deaths, or not, and if they are, how and why? Alberta has an extreme right-wing CoVid-19-denying and -minimizing government, while BC appeared to be letting Dr Henry lay it all out there and call the shots on what to mandate, at least in the early part of the pandemic. Yet the two provinces have very similar discrepancies between excess deaths (even adjusting for the toxic street drug epidemic and the ‘heat dome’) and reported CoVid-19 deaths. So what’s going on here?

Dr Henry continues to say that, while she doesn’t deny the Statistics Canada excess deaths data, she believes the reported numbers are quite accurate, and that there may be other, perfectly valid reasons for the discrepancy.

But a few months ago, BC changed both the frequency (to once a week, with a 10-day lag) and method of computing deaths, and pretty much stopped reporting case data entirely, using ‘surrogates’ in lieu of precise tabulations. They stressed that data before the change was not comparable to data after the changes, so they should not be combined. In other words, if you want to know how many people have actually died of CoVid-19 in BC, you’re pretty much out of luck.

Unless you use “excess deaths”, that is. At the same time the politicians have shrugged off the use of excess deaths as a most likely estimate of CoVid-19 deaths, and basically taken the podium away from health officers, they have failed to provide any useful data to use instead.

The chart for cumulative reported CoVid-19 deaths versus cumulative excess deaths since the pandemic began is shown above.

It suggests 10,000 British Columbians, not 4,000, have perished from CoVid-19 so far, rising at an annual rate of 3,000, unless you assume, as IHME does, that we’ve seen the last wave. That’s 1 in 7 British Columbians over age 80.

So sorry, Dr Henry, but until you actually present some data to show otherwise, I have to think that your estimate of the province’s CoVid-19 deaths is wildly wrong. Eight people per day, not four, are dying of CoVid-19 in BC this month, and this level of understatement has been going on since the pandemic began. How, and why? I think we need some answers from you.

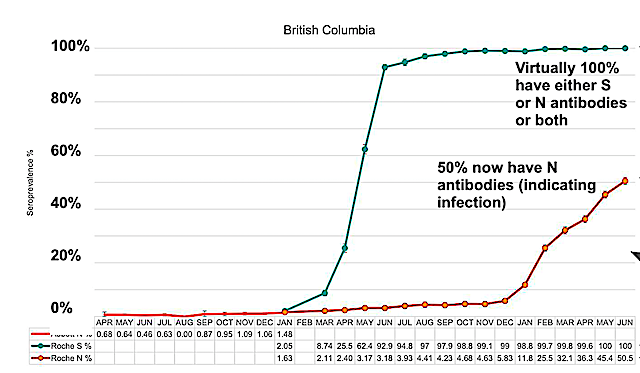

Turning from deaths to cases: Here’s the chart of seroprevalence data showing how the percentage of British Columbians catching the disease is skyrocketing since Omicron emerged:

data from BC COVID-19 Modelling Group and CoVid-29 Immunity Task Force

As of August 13, total reported cases in BC equate to 7% of the population, while seroprevalence studies suggest 56% of the population, 8 times this number, has actually contracted the disease at some point during the pandemic. Current reported daily new cases in the province average about 125, while the seroprevalence data suggests actual new cases in BC are currently running about 13,500 per day.

As this data shows, the BC CDC only catches and reports a tiny percentage of new cases (about 1%, according to most recent estimates). They are now forcing us to use seroprevalence data (mostly from regular blood donors, demographically adjusted) or sewer water prevalence, to figure out how many people are now getting the disease. This data suggests that about 8% of the population, or 400,000 British Columbians are catching the disease or being reinfected each month, and about 2% of the population, or 100,000 British Columbians, are actively infectious today.

In other words, if you are a British Columbian, it is likely that one out of every 50 people you work with, or share a restaurant or bus or train ride with, each day, is actively infectious, and that number is not declining. And more than one out of every 12 of us will be infected, or reinfected, this month. That means your chances of getting it, or getting it again, this month, are, unless you take unusual precautions, one in 12 this month. And probably next month. And the month after that.

““`

The good news, if there is any, is that estimates of the proportion of the infected population (which will soon be just about everyone) getting significant ‘Long CoVid’ symptoms have come down from as high as 1-in-3 to about 1-in-8-or-10, and for those previously fully vaccinated and boostered, the risk is significantly lower again (as is the risk of hospitalization or death when you do get the disease).

That’s still a staggering number of Long Covid patients, one that threatens to wreak long-term havoc on our already-teetering health care system, and on participation in our labour force.

The data for most other provinces and states in North America are comparable to the above BC data — it’s just that, until we took a closer look, we thought we in BC had been doing so much better than everyone else.

So, of course, with that high risk of infection, we should be N95 masking in all indoor locations outside the home, and whenever we’re in a crowded location. And testing and isolating and letting people know when learn we’ve been exposed to someone with the disease until we again test negative or have no fever or symptoms remaining. We’re still only at half-time in this pandemic.

And with the still-unacceptably-high risk of death (at least for those over 60, or obese, or immunocompromised) and of Long Covid, we should be taking extra precautions, avoiding crowds and risky environments (like restaurants and parties) where there is no testing and low mask use. And, of course, getting all our vaccinations and boosters.

As a recent report in the Tyee put it, quoting the above new research: “If the public knew just how much BA5 we have at the moment, we’d see a lot more masking than we currently have.”

So why doesn’t the public know this? And why is our province apparently understating its CoVid-19 death toll by more than half? And what are we going to do when we get yet another surge this coming winter?

I don’t have any answers. And I can’t seem to find anyone that has.

GitHub for English teachers

I’ve long imagined a tool that would enable a teacher to help students learn how to write and edit. In Thoughts in motion I explored what might be possible in Federated Wiki, a writing tool that keeps version history for each paragraph. I thought it could be extended to enable the kind of didactic editing I have in mind, but never found a way forward.

In How to write a press release I tried bending Google Docs to this purpose. To narrate the process of editing a press release, I dropped a sample release into a GDoc and captured a series of edits as named versions. Then I captured the versions as screenshots and combined them with narration, so the reader of the blog post can see each edit as a color-coded diff with an explanation.

The key enabler is GDoc’s File -> Version history -> Name current version, along with File -> See version history‘s click-driven navigation of the set of diffs. It’s easy to capture a sequence of editing steps that way.

But it’s much harder to present those steps as I do in the post. That required me to make, name, and organize a set of images, then link them to chunks of narration. It’s tedious work. And if you want to build something like this for students, that’s work you shouldn’t be doing. You just want to do the edits, narrate them, and share the result.

This week I tried a different approach when editing a document written by a colleague. Again the goal was not only to produce an edited version, but also to narrate the edits in a didactic way. In this case I tried bending GitHub to my purpose. I put the original doc in a repository, made step-by-step edits in a branch, and created a pull request. We were then able to review the pull request, step through the changes, and review each as a color-coded diff with an explanation. No screenshots had to be made, named, organized, or linked to the narration. I could focus all my attention on doing and narrating the edits. Perfect!

Well, perfect for someone like me who uses GitHub every day. If that’s not you, could this technique possibly work?

In GitHub for the rest of us I argued that GitHub’s superpowers could serve everyone, not just programmers. In retrospect I felt that I’d overstated the case. GitHub was, and remains, a tool that’s deeply optimized for programmers who create and review versioned source code. Other uses are possible, but awkward.

As an experiment, though, let’s explore how awkward it would be to recreate my Google Docs example in GitHub. I will assume that you aren’t a programmer, have never used GitHub, and don’t know (or want to know) anything about branches or commits or pull requests. But you would like to be able to create a presentation that walks a learner though a sequence of edits, with step-by-step narration and color-coded diffs. At the end of this tutorial you’ll know how to do that. The method isn’t as straightforward as I wish it were. But I’ll describe it carefully, so you can try it for yourself and decide whether it’s practical.

Here’s the final result of the technique I’ll describe.

If you want to replicate that, and don’t already have a GitHub account, create one now and log in.

Ready to go? OK, let’s get started.

Step 1: Create a repository

Click the + button in the top right corner, then click New repository.

Here’s the next screen. All you must do here is name the repository, e.g. editing-step-by-step, then click Create repository. I’ve ticked the Add a README file box, and chosen the Apache 2.0 license, but you could leave the defaults — box unchecked, license None — as neither matters for our purpose here.

Step 2: Create a new file

On your GitHub home page, click the Repositories tab. Your new repo shows up first. Click its link to open it, then click the Add file dropdown and choose Create new file. Here’s where you land.

Step 3: Add the original text, create a new branch, commit the change, and create a pull request

What happens on the next screen is bewildering, but I will spare you the details because I’m assuming you don’t want to know about branches or commits or pull requests, you just want to build the kind of presentation I’ve promised you can. So, just follow this recipe.

- Name the file (e.g. sample-press-release.txt

- Copy/paste the text of the document into the edit box

- Select Create a new branch for this commit and start a pull request

- Name the branch (e.g. edits)

- Click Propose new file

On the next screen, title the pull request (e.g. edit the press release) and click Create pull request.

Step 4: Visit the new branch and begin editing

On the home page of your repo, use the main dropdown to open the list of branches. There are now two: main and edits. Select edits

Here’s the next screen.

Click the name of the document you created (e.g. sample-press-release.txt to open it.

Click the pencil icon’s dropdown, and select Edit this file.

Make and preview your first edit. Here, that’s my initial rewrite of the headline. I’ve written a title for the commit (Step 1: revise headline), and I’ve added a detailed explanation in the box below the title. You can see the color-coded diff above, and the rationale for the change below.

Click Commit changes, and you’re back in the editor ready to make the next change.

Step 5: Visit the pull request to review the change

On your repo’s home page (e.g. https://github.com/judell/editing-step-by-step), click the Pull requests button. You’ll land here.

Click the name of the pull request (e.g. edit the press release) to open it. In the rightmost column you’ll see links with alphanumeric labels.

Click the first one of those to land here.

This is the first commit, the one that added the original text. Now click Next to review the first change.

This, finally, is the effect we want to create: a granular edit, with an explanation and a color-coded diff, encapsulated in a link that you can give to a learner who can then click Next to step through a series of narrated edits.

Lather, rinse, repeat

To continue building the presentation, repeat Step 4 (above) once per edit. I’m doing that now.

… time passes …

OK, done. Here’s the final edited copy. To step through the edits, start here and use the Next button to advance step-by-step.

If this were a software project you’d merge the edits branch into the main branch and close the pull request. But you don’t need to worry about any of that. The edits branch, with its open pull request, is the final product, and the link to the first commit in the pull request is how you make it available to a learner who wants to review the presentation.

GitHub enables what I’ve shown here by wrapping the byzantine complexity of the underlying tool, Git, in a much friendlier interface. But what’s friendly to a programmer is still pretty overwhelming for an English teacher. I still envision another layer of packaging that would make this technique simpler for teachers and learners focused on the craft of writing and editing. Meanwhile, though, it’s possible to use GitHub to achieve a compelling result. Is it practical? That’s not for me to say, I’m way past being able to see this stuff through the eyes of a beginner. But if that’s you, and you’re motivated to give this a try, I would love to know whether you’re able to follow this recipe, and if so whether you think it could help you to help learners become better writers and editors.

Task backlog waiting times are power laws

Once it has been agreed to implement new functionality, how long do the associated tasks have to wait in the to-do queue?

An analysis of the SiP task data finds that waiting time has a power law distribution, i.e.,  , where

, where  is the number of tasks waiting a given amount of time; the LSST:DM Sprint/Story-point/Story has the same distribution. Is this a coincidence, or does task waiting time always have this form?

is the number of tasks waiting a given amount of time; the LSST:DM Sprint/Story-point/Story has the same distribution. Is this a coincidence, or does task waiting time always have this form?

Queueing theory analyses the properties of systems involving the arrival of tasks, one or more queues, and limited implementation resources.

A basic result of queueing theory is that task waiting time has an exponential distribution, i.e., not a power law. What software task implementation behavior is sufficiently different from basic queueing theory to cause its waiting time to have a power law?

As always, my first line of attack was to find data from other domains, hopefully with an accompanying analysis modelling the behavior. It’s possible that my two samples are just way outside the norm.

Eventually I found an analysis of the letter writing response time of Darwin, Einstein and Freud (my email asking for the data has not yet received a reply). Somebody writes to a famous scientist (the scientist has to be famous enough for people to want to create a collection of their papers and letters), the scientist decides to add this letter to the pile (i.e., queue) of letters to reply to, eventually a reply is written. What is the distribution of waiting times for replies? Yes, it’s a power law, but with an exponent of -1.5, rather than -1.

The change made to the basic queueing model is to assign priorities to tasks, and then choose the task with the highest priority (rather than a random task, or the one that has been waiting the longest). Provided the queue never becomes empty (i.e., there are always waiting tasks), the waiting time is a power law with exponent -1.5; this behavior is independent of queue length and distribution of priorities (simulations confirm this behavior).

However, the exponent for my software data, and other data, is not -1.5, it is -1. A 2008 paper by Albert-László Barabási ( detailed analysis)showed how a modification to the task selection process produces the desired exponent of -1. Each of the tasks currently in the queue is assigned a probability of selection, this probability is proportional to the priority of the corresponding task (i.e., the sum of the priorities/probabilities of all the tasks in the queue is assumed to be constant); task selection is weighted by this probability.

So we have a queueing model whose task waiting time is a power law with an exponent of -1. How well does this model map to software task selection behavior?

One apparent difference between the queueing model and waiting software tasks is that software tasks are assigned to a small number of priorities (e.g., Critical, Major, Minor), while each task in the model queue has a unique priority (otherwise a tie-break rule would have to be specified). In practice, I think that the developers involved do assign unique priorities to tasks.

Why wouldn’t a developer simply select what they consider to be the highest priority task to work on next?

Perhaps each developer does select what they consider to be the highest priority task, but different developers have different opinions about which task has the highest priority. The priority assigned to a task by different developers will have some probability distribution. If task priority assignment by developers is correlated, then the behavior is effectively the same as the queueing model, i.e., the probability component is supplied by different developers having different opinions and the correlation provides a clustering of priorities assigned to each task (i.e., not a uniform distribution).

If this mapping is correct, the task waiting time for a system implemented by one developer should have a power law exponent of -1.5, just like letter writing data.

The number of sprints that a story is assigned to, before being completely implemented, is a power law whose exponent varies around -3. An explanation of this behavior based on priority queues looks possible; we shall see…

The queueing models discussed above are a subset of the field known as bursty dynamics; see the review paper Bursty Human Dynamics for human behavior related aspects.

Razer’s Basilisk V3 Pro might be my new favourite mouse

Earlier this month, I wrote about Razer's DeathAdder V3 Pro. It's a great wireless mouse, but those looking for the ultimate gaming mouse experience should consider Razer's latest mouse, the Basilisk V3 Pro.

Although it was released on August 23rd, I've been using one for a little longer and am quite impressed with it so far. It sports everything I liked about the DeathAdder V3 Pro, but with a more ergonomic shape. Plus, I really like the fancy wireless charger / wireless dongle combo, the Razer Mouse Dock Pro.

First, let's run through the specs: the Basilisk V3 Pro sports a HyperScroll Tilt Wheel, Optical Mouse Switches Gen-3, a ton of programmable buttons, Focus Pro 30K Optical Sensor, and all the RGB lighting you could want in a gaming mouse.

Even better, if you get Razer's Mouse Dock Pro (more on this below), the RGB lights on the dock and mouse can sync up, and when charging, they show the battery level too.

Although the Basilisk V3 Pro is a gaming mouse, I primarily used it while working during the testing period. I gamed with it too, of course, but these days the majority of my time at my computer is spent working. Regardless, the Basilisk V3 Pro worked great for both.

Wireless charging is sick until you need to use the mouse while charging

https://youtu.be/cUxzYlSFalA

Still, the Basilisk V3 Pro isn't perfect. It's heavier than the DeathAdder (112g to 64g, respectively), and in my experience, the battery life isn't as good. Razer claims up to 90 hours when using the HyperSpeed wireless dongle, but I found the mouse on the charger every few days. To be fair, that was in part due to using the higher 4,000Hz polling rate, but even after going back to 1,000Hz, I found the Basilisk V3 Pro didn't last that long. (As an aside, I personally didn't notice a significant difference between 1,000 and 4,000Hz polling, but at least the feature is there for those who want it.)

Depending on your set-up, however, the battery life may range from mildly annoying to downright inconvenient. I was testing Razer's Mouse Dock Pro alongside the Basilisk. The Mouse Dock effectively replaces the need to use the included wireless dongle, as it includes a built-in HyperSense transceiver with support for up to 4,000Hz polling (the HyperSense dongle included with the Basilisk V3 Pro only does up to 1,000Hz). The Mouse Dock Pro lets you wirelessly charge the Basilisk, which is honestly really cool, and I loved it. The downside, however, is if your mouse dies when you need to use it, you can't charge it on the Mouse Dock.

The Basilisk V3 Pro sports a USB-C port on the front so you can plug it in and use it while charging, which means you can keep using it when the battery's dead if you forgo the wireless charging. The only real complaint here is that the cable included with the Mouse Dock Pro isn't ideal for use when plugged into the mouse (the cable that comes with the Basilisk V3 Pro is lighter and more flexible). Either way, these are nitpicking in the grand scheme -- if you keep an eye on your battery level and put the mouse on the Mouse Dock Pro when you're not using it, keeping the battery topped off isn't a problem.

One other thing worth noting about the Basilisk V3 Pro is it relies on a 'wireless charging puck' to use the Mouse Dock Pro or other wireless chargers. You get one with the Mouse Dock Pro, or you can buy one separately for $24.99 -- either way, you'll need to swap out the plastic placeholder puck on the bottom of the mouse with the wireless charging puck before you can use wireless charging.

A few software goodies and other nice features

Before I wrap up, there were a few other small things I appreciated about the Basilisk V3 Pro during my time with it. First, and not really specific to the Basilisk, is the ease of remapping certain keys.

I don't often remap keys on mice, but the Basilisk V3 Pro sports a thumb button for activating the 'Sensitivity Clutch,' a feature to temporarily reduce mouse sensitivity. It's handy for certain games, like first-person shooters, when you're trying to line up that perfect snipe. However, it's something I've never really used, and the Sensitivity Clutch button's placement felt more accessible than the other thumb buttons, which I often use to activate abilities in games like Destiny 2 or Apex Legends. So, I remapped it, and it's been great.

Another feature that stood out to me was the 'Smart-Reel' option, which lets the scroll wheel flip between tactile and free-spin modes on the fly. I thought I'd be a fan of this since I generally prefer tactile scroll but occasionally appreciate free-spin scrolling when I'm working and need to zip around a long article on MobileSyrup. However, in practice, I found Smart-Reel easily flipped between the two, and it felt really weird when I was using it. I'd love to see an option to customize the activation threshold for Smart-Reel in the future. Still, it's great to have both options available on the Basilisk since the DeathAdder only had tactile scroll.

Finally, the Basilisk V3 Pro sports a button to cycle through different DPI settings. That's a fairly common inclusion on mice these days, but what I appreciated with the Basilisk V3 is it would show the DPI on my computer screen when I cycled through. This is much more accessible than showing a little LED light with a different colour for each DPI setting, especially since I could never remember which LED colour was for the setting I actually wanted.

The Basilisk V3 Pro is overall great, but it's pricey too

After my time with the Basilisk V3 Pro, I'm a fan and will likely keep using it as my daily driver. Ultimately, I'd like something just a tad lighter, but the ergonomics of the Basilisk will keep it on my desk over other, lighter options.

Unfortunately, the Basilisk V3 Pro doesn't come cheap. There are a few options to pick from, which I'll highlight below:

- Basilisk V3 Pro - $219.99

- Basilisk V3 Pro with Wireless Charging Puck - $231.99 (regular $244.98)

- Basilisk V3 Pro with Mouse Dock Pro - $268.99 (regular $309.98)

Again, the wireless charging puck costs $24.99 on its own, and works with Qi charging pads, while the Mouse Dock Pro costs $89.99 and comes with a charging puck.

You can learn more about Basilisk V3 Pro, or buy one, on Razer's website.

15aug2022

MiniRust, by Ralf Jung. “The purpose of MiniRust is to describe the semantics of an interesting fragment of Rust in a way that is both precise and understandable to as many people as possible.”

Combinatory Logic and Combinators in Array Languages (PDF), by Conor Hoekstra. “This paper will look at the existence of combinators in the modern array languages Dyalog APL, J and BQN and how the support for them differs between these languages.”

Trealla Prolog, a compact, efficient Prolog interpreter with ISO compliant aspirations.

The APL/J/K Zoo, run many APL variants in your browser!

The PicoEMP is a low-cost Electromagnetic Fault Injection (EMFI) tool, designed specifically for self-study and hobbiest research.

Getting the World Record in HATETRIS, extremely in-depth. You way wanna read the prologue first!

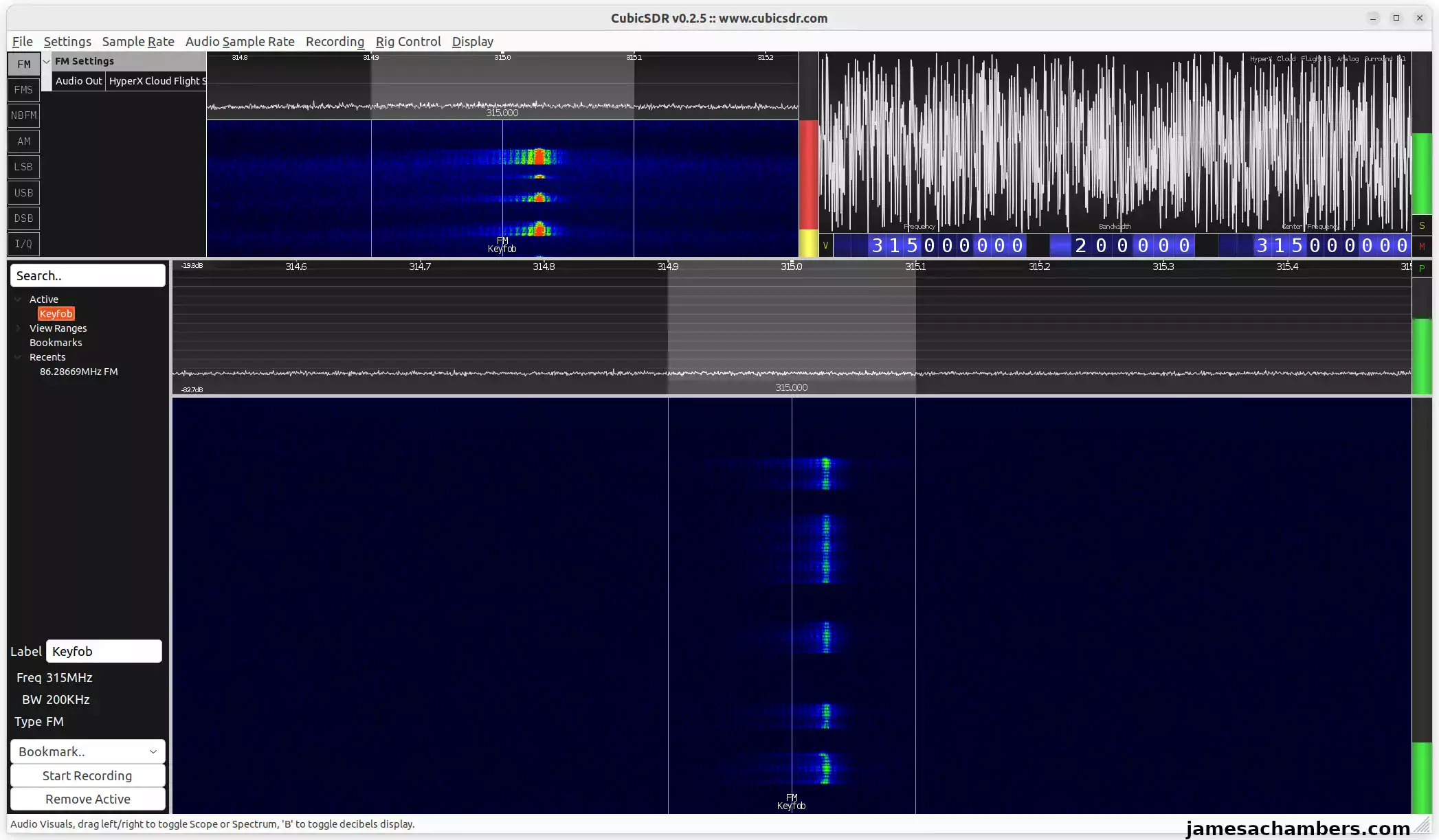

Use HackRF SDR to Lock / Unlock Car

I've previously covered getting your HackRF set up in Linux and getting the firmware updated. In that guide we installed the very easy to use CubicSDR application and were able to easily tune to various audio signals.

Today we're going to do something more interactive and actually use the transmitter. We're going to unlock and lock my vehicle using the HackRF! Let's get started.

I've previously covered getting your HackRF set up in Linux and getting the firmware updated. In that guide we installed the very easy to use CubicSDR application and were able to easily tune to various audio signals.

Today we're going to do something more interactive and actually use the transmitter. We're going to unlock and lock my vehicle using the HackRF! Let's get started.

Instagram, TikTok, and the Three Trends

Trends in medium, AI, and user interaction underpin Instagram's response to TikTok, and will determine Meta's long-term moat.

Back in 2010, during my first year of Business School, I helped give a presentation entitled “Twitter 101”:

My section was “The Twitter Value Proposition”, and after admitting that yes, you can find out what people are eating for lunch on Twitter, I stated “The truth is you can find anything you want on Twitter, and that’s a good thing.” The Twitter value proposition was that you could “See exactly what you need to see, in real-time, in one place, and nothing more”; I illustrated this by showing people how they could unfollow me:

The point was that Twitter required active management of your feed, but if you put in the effort, you could get something uniquely interesting to you that was incredibly valuable.

Most of the audience didn’t take me up on it.

Facebook Versus Instagram

If there is one axiom that governs the consumer Internet — consumer anything, really — it is that convenience matters more than anything. That was the problem with Twitter: it just wasn’t convenient for nearly enough people to figure out how to follow the right people. It was Facebook, which digitized offline relationships, that dominated the social media space.

Facebook’s social graph was the ultimate growth hack: from the moment you created an account Facebook worked assiduously to connect you with everyone you knew or wish you knew from high school, college, your hometown, workplace, you name an offline network and Facebook digitized it. Of course this meant that there were far too many updates and photos to keep track of, so Facebook ranked them, and presented them in a feed that you could scroll endlessly.

Users, famously, hated the News Feed when it was first launched: Facebook had protesters outside their doors in Palo Alto when it was introduced, and far more online; most were, ironically enough, organized on Facebook. CEO Mark Zuckerberg penned an apology:

We really messed this one up. When we launched News Feed and Mini-Feed we were trying to provide you with a stream of information about your social world. Instead, we did a bad job of explaining what the new features were and an even worse job of giving you control of them. I’d like to try to correct those errors now…

The errors to be corrected were better controls over what might be shared; Facebook did not give the users what they claimed to want, which was abolishing the News Feed completely. That’s because the company correctly intuited a significant gap between its users stated preference — no News Feed — and their revealed preference, which was that they liked News Feed quite a bit. The next fifteen years would prove the company right.

It was hard to not think of that non-apology apology while watching Adam Mosseri’s Instagram update three weeks ago; Mosseri was clear that videos were going to be an ever great part of the Instagram experience, along with recommended posts. Zuckerberg reiterated the point on Facebook’s earnings call, noting that recommended posts in both Facebook and Instagram would continue to increase. A day later Mosseri told Casey Newton on Platformer that Instagram would scale back recommended posts, but was clear that the pullback was temporary:

“When you discover something in your feed that you didn’t follow before, there should be a high bar — it should just be great,” Mosseri said. “You should be delighted to see it. And I don’t think that’s happening enough right now. So I think we need to take a step back, in terms of the percentage of feed that are recommendations, get better at ranking and recommendations, and then — if and when we do — we can start to grow again.” (“I’m confident we will,” he added.)

Michael Mignano calls this recommendation media in an article entitled The End of Social Media:

In recommendation media, content is not distributed to networks of connected people as the primary means of distribution. Instead, the main mechanism for the distribution of content is through opaque, platform-defined algorithms that favor maximum attention and engagement from consumers. The exact type of attention these recommendations seek is always defined by the platform and often tailored specifically to the user who is consuming content. For example, if the platform determines that someone loves movies, that person will likely see a lot of movie related content because that’s what captures that person’s attention best. This means platforms can also decide what consumers won’t see, such as problematic or polarizing content.

It’s ultimately up to the platform to decide what type of content gets recommended, not the social graph of the person producing the content. In contrast to social media, recommendation media is not a competition based on popularity; instead, it is a competition based on the absolute best content. Through this lens, it’s no wonder why Kylie Jenner opposes this change; her more than 360 million followers are simply worth less in a version of media dominated by algorithms and not followers.

Sam Lessin, a former Facebook executive, traced this evolution from the analog days to what is next in a Twitter screenshot entitled “The Digital Media ‘Attention’ Food Chain in Progress”:

The coming fall of the Kardashians in context of how entertainment is evolving… (aka why they are so pissed about tiktok) pic.twitter.com/wtYrvxbS35

— sam lessin 🏴☠️ (@lessin) July 26, 2022

Lessin’s five steps:

- The Pre-Internet ‘People Magazine’ Era

- Content from ‘your friends’ kills People Magazine

- Kardashians/Professional ‘friends’ kill real friends

- Algorithmic everyone kills Kardashians

- Next is pure-AI content which beats ‘algorithmic everyone’

This is a meta observation and, to make a cheap play on words, the first reason why it made sense for Facebook to change its name: Facebook the app is eternally stuck on Step 2 in terms of entertainment (the app has evolved to become much more of a utility, with a focus on groups, marketplace, etc.). It’s Instagram that is barreling forward. I wrote last summer about Instagram’s Evolution:

The reality, though, is that this is what Instagram is best at. When Mosseri said that Instagram was no longer a photo-sharing app — particularly a “square photo-sharing app” — he was not making a forward-looking pronouncement, but simply stating what has been true for many years now. More broadly, Instagram from the very beginning — including under former CEO Kevin Systrom — has been marked first and foremost by evolution.

To put this in Lessin’s framework, Instagram started out as a utility for adding filters to photos put on other social networks, then it developed into a social network in its own right. What always made Instagram different than Facebook, though, is the fact that its content was default-public; this gave the space for the rise of brands, meme and highlight accounts, and the Instagram influencer. Sure, some number of people continued to use Instagram primarily as a social network, but Meta, more than anyone, had an understanding of how Instagram usage had evolved over time.

In other words, when Kylie Jenner posts a petition demanding that Meta “Make Instagram Instagram again”, the honest answer is that changing Instagram is the most Instagram-like behavior possible.

Three Trends

Still, it’s understandable why Instagram did back off, at least for now: the company is attempting to navigate three distinct trends, all at the same time.