This is the 13th in a series of articles on CoVid-19. I am not a medical expert, but have worked with epidemiologists and have some expertise in research, data analysis and statistics. I am producing these articles in the belief that reasonably researched writing on this topic can’t help but be an improvement over the firehose of misinformation that represents far too much of what is being presented on this topic in social (and some other) media.

Throughout the current pandemic, there have been ‘experts’ of all kinds trying to build a reputation (political, professional, social) by badmouthing what the world’s public health officers have been saying, doing, and urging the public to do, in the face of CoVid-19.

Some of these have been conspiracy theorists and their repeater-drones, who are not worth wasting further effort engaging. But others are seemingly well-meaning, intelligent, mentally balanced people. There is, I think, a propensity in most of us to challenge popular wisdom: “Everything you thought you knew about X is wrong”. In many cases, when that skepticism is based on reason, research and reasonable doubt, such challenges can be very useful, even essential. But in other cases (as with the climate collapse skeptics) they can cause harmful confusion and delay, and destroy the morale of people doing important work, especially in a crisis.

I’ve been critical of the anti-mask professionals (“There’s no definitive proof they do any good”), and the anti-social distancing and anti-shutdown advocates. Our society has suffered immensely from our seemingly inherent incapacity to exercise the precautionary principle, even in times of crisis. The majority’s collective global response to CoVid-19 to date has been a rare positive signal that we can exercise this principle, and this discipline, when we have a mind to do it. If we can, then there’s hope for resilience in the crises to come.

What the “until there’s unequivocal proof I won’t accept it” argument leads to is the antithesis of resilience. It leads to resistance to change, inability to adapt, and refusal to give others, and perilous situations, the benefit of the doubt. Whether it’s spoken by doctrinaire conservatives or doctrinaire libertarians, in sufficient numbers it can paralyze our capacity to deal effectively with complex situations of all kinds. It leads to dithering, backsliding, and denial that there’s a problem: the kind of thinking and emotional responses that have been so much on display among the political leaders of the US, UK and Brazil in particular in the face of CoVid-19 and all the other crises that have arisen recently and will become more and more commonplace in the years ahead.

Public health leaders have been saying for decades that we need to be prepared to take drastic, immediate, global action to deal with the threat of pandemics in our ever more crowded and ever more mobile world. Except in a small handful of countries that have breezed through the current crisis, they have been largely ignored.

What the latest group of naysayers is now proclaiming is that the mortality of CoVid-19 is proving to be much lower than was thought or feared, and that as the disease moves more aggressively through younger populations around the world, the death toll will prove to be much lower than the one implied by the 0.68% infection fatality rate (IFR), translating to 50 million global deaths, that scientists and public health experts have computed and, in the absence of better estimates, assumed. It is possible they are correct. The IFR could be as much as five times lower (0.13%, translating to only 10 million global deaths at most).

In a typical year a half million people globally die of influenza viruses (50,000 in the US). Most of those who die are old and/or suffer from complicating medical conditions, so the ‘cause’ of death may be complicated and the numbers are very imprecise. So if the upper limit of global CoVid-19 deaths is 5 million a year over a couple of years, that’s only 10 times the normal influenza rate, and it’s only half the number of people who die each year from cancer. Still a pandemic, and awful, but not the historical catastrophe many feared.

What this group then goes on to suggest, however, is that public health experts and politicians knew this to be the case, and have used the “pandemic” as an excuse to grab executive power and throttle civil liberties. This implication is IMO dishonest, dangerous, and an insult to our public health community and the health care professionals dealing with the disease.

If it turns out that CoVid-19 is only, at worst, ten times as bad as a normal flu season, the obvious questions are (a) how could we have been so wrong, and (b) if we’d known, what would we have done differently?

For a start, we really have little way to know what the actual infection and death toll numbers are. Data collection methods in most places are crude to non-existent, decisions on what ’caused’ a particular death (especially with other preconditions) can be all over the map, and there was (and still largely is) no accurate method to detect who is infected and who is infectious, though we’re getting better at it. So estimating the IFR is a wildly imprecise and ever-changing exercise. Due to lack of testing there were early estimates that IFR could be as high as 3-4%, though opinions varied by as much as an order of magnitude. We are still wildly behind in processing what little credible death data we have, and will never know how many CoVid-19 deaths we missed, though several studies suggest that we’ve missed at least a third of them in our reports in most affluent nations, and 90% of them in some struggling nations, based on excess deaths data. Even with the benefit of hindsight, estimated deaths from the 1918 H1N1 pandemic still vary by an order of magnitude, from 10 million to 100 million — the 50 million most often cited is really, still, just a crude guess.

The data from the SARS-1 and MERS coronaviruses, the two most recent “novel” coronaviruses (novel in that they’re not “common” like the many common cold coronaviruses), suggest that they had terrifyingly high IFRs of 10-50%, but fortunately were not very easily transmitted, so keeping the number of infections under tight control (isolation) reduced the death toll to a tiny proportion of what might have occurred if they had had the transmissibility of CoVid-19.

So when China announced CoVid-19, there was understandable terror that this might be “the big one” that epidemiologists worldwide had feared. The combination of high virulence (IFR) and high transmissibility is a nightmare, with the potential to lead to a repeat of 1918 or the global plagues of much earlier times, or worse.

It should therefore be no surprise that the world’s public health leaders, with the best available information, strenuously recommended lockdowns, social distancing, and (in some cases) masks to mitigate this risk. The explosion of deaths, overloaded hospitals and huge positivity rates in March and April did nothing to ease these fears. Some surveys in several countries suggested that the IFR looked to be about 1%, while others suggested it was between 0.2% and 2%. Public health advice was based on the 1% assumption, which seemed consistent over several months — the best guess at the time.

More recently we’ve discovered that many people contract the disease asymptomatically, and including them in the case count lowered the best guess of the IFR to about 0.68%, which is the estimate that is still being used by health authorities as more data comes in. As noted above, a 0.68% IFR in a highly transmissible disease (ie one where everyone will be exposed until herd immunity is reached, at the point where around 70% of the population has been infected and is hence immune) translates to a potential death toll of 0.68% x 7.8 billion = 50 million, less whatever proportion is not affected due to herd immunity, and barring an effective vaccine. That’s less than the potential 80 million deaths that a 1% IFR could have produced, but it’s still in 1918 range in total numbers killed, and two orders of magnitude more than “normal” annual influenza deaths. Worth a lockdown? Most people thought so.
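The arithmetic behind these totals is simple enough to sketch. The following is only an illustration, using the 7.8 billion world population quoted above; the function name `potential_deaths` and its `share_infected` parameter are my own labels, not anything used by health authorities. Note that the unrounded products come out a little different from the rounded 50 and 80 million figures.

```python
WORLD_POPULATION = 7.8e9  # world population figure used in this article


def potential_deaths(ifr, population=WORLD_POPULATION, share_infected=1.0):
    """Deaths if `share_infected` of the population is eventually infected.

    `ifr` is the infection fatality rate as a fraction (0.0068 means 0.68%).
    """
    return ifr * population * share_infected


for ifr in (0.0068, 0.01):
    worst_case = potential_deaths(ifr)
    # Same calculation, but stopping once 70% have been infected.
    at_herd_immunity = potential_deaths(ifr, share_infected=0.70)
    print(
        f"IFR {ifr:.2%}: {worst_case / 1e6:.0f} million if everyone is infected, "
        f"{at_herd_immunity / 1e6:.0f} million if spread stops at 70%"
    )
```

The `share_infected` argument is where the "less whatever proportion is not affected due to herd immunity" caveat enters the calculation.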

By the end of September, the global reported death toll was more than a million, with estimates of global actual deaths averaging closer to twice that number. Reported deaths for the past four months have been very consistent at about 180,000/month, and reported cases and positivity rates in several early-hard-hit areas have been spiking upwards again. More troubling, even though the reported newly infected were much younger and expected to die in much smaller numbers when infected, CoVid-19 hospitalization numbers in the US and some other areas jumped back up to record levels in the late summer. But reported deaths did not rise nearly as much, causing some to question whether more people had actually been infected without incident than earlier thought (although models already suggested that as few as 10% of infections had actually been reported). Could the underreporting of cases be even more extreme than thought, and could the IFR be much lower than what the data so far had been pointing to?

That’s where we are now. The biggest challenge is that our tools for testing who has been infected are still limited, and not terribly reliable. Antibody tests until recently had suggested that even in the hardest-hit areas, only 20% of the population had been infected (far below herd immunity levels), and that worldwide on average only about 2% had been infected, which still supported the 0.68% IFR (2% x 7.8B x 0.68% = 1 million global deaths).

It was strange, therefore, to hear the head of the WHO suggest recently that as much as 10% of the world’s population, five times as many, had been infected. This suggests they believe that the actual IFR could be five times lower, about 0.13%, and that we’re looking at a maximum of 10 million CoVid-19 deaths, not 50 million. Indeed, assuming that the current reported deaths continue at a 180,000/month rate, ie 2 million a year, then it would be a surprise to hit even 10 million deaths before a vaccine is perfected, even assuming a third to a half of CoVid-19 deaths remain unreported. And indeed, some studies in India, which has many CoVid-19 deaths but surprisingly few deaths per million people, suggest that as many as a half of the people in parts of that country have been infected.
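To see why a higher infection count implies a lower IFR, the calculation can be run in reverse: divide deaths by the number of people assumed to have been infected. This is a rough sketch only. It assumes about 1 million reported deaths (the end-of-September figure) and a world population of 7.8 billion, and `implied_ifr` is a name I've made up for illustration.

```python
WORLD_POPULATION = 7.8e9      # world population figure used in this article
REPORTED_DEATHS = 1_000_000   # approximate global reported deaths, end of September


def implied_ifr(deaths, share_infected, population=WORLD_POPULATION):
    """IFR implied by a death count and an assumed share of people infected."""
    return deaths / (share_infected * population)


# 2% is the earlier worldwide testing estimate; 10% is the WHO suggestion.
for share in (0.02, 0.10):
    print(f"{share:.0%} infected -> implied IFR {implied_ifr(REPORTED_DEATHS, share):.2%}")
```

With the same death count, multiplying the infected share by five divides the implied IFR by five, which is the whole of the argument. If deaths are underreported by a third to a half, both implied IFRs rise proportionally.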

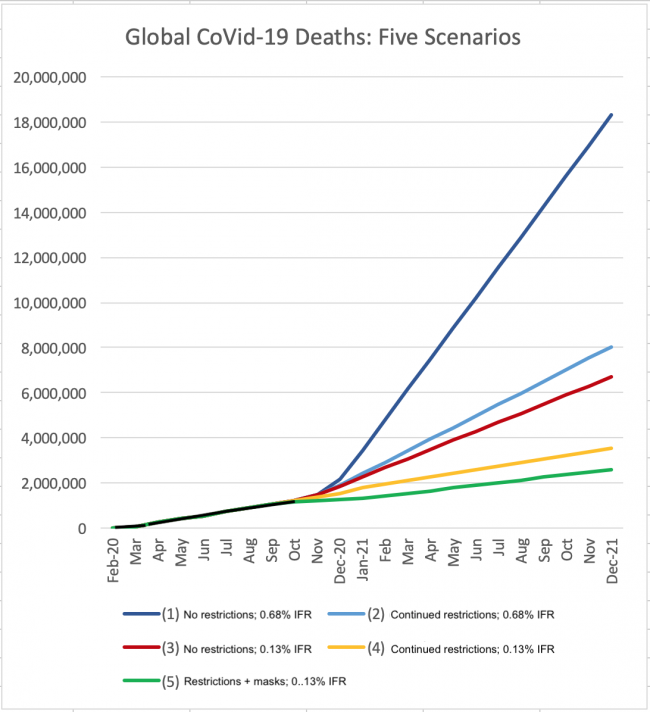

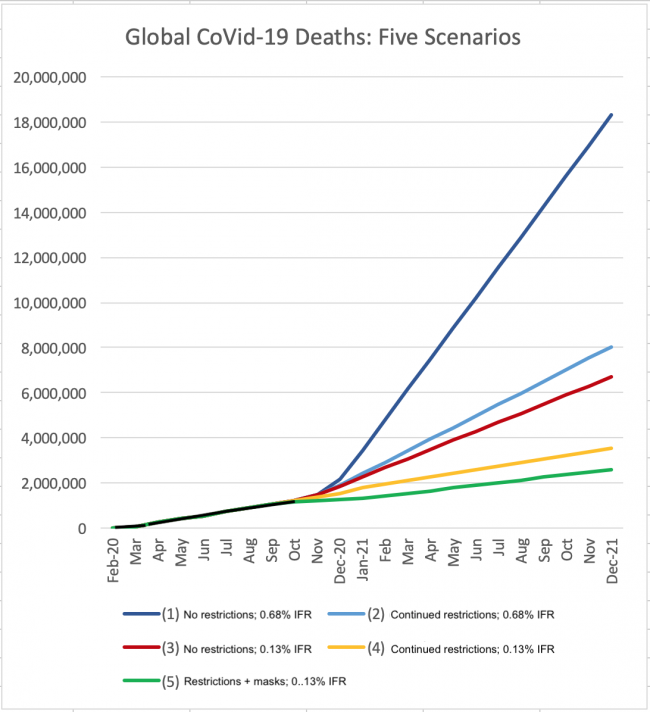

Many are not convinced. The IHME continues to use the 0.68% IFR and projects that by the end of January global reported deaths will hit 2.5 million, and the daily death rate will be 16,000, nearly three times the current rate, which, if it continues unabated, would lead to 6 million more deaths next year until and unless an effective vaccine is implemented. Without sustaining at least some social distancing and masks restricting the spread, they say, the daily death rate will soar to 50,000 by January, an annual rate of 18 million more reported deaths. On this basis, it is easy to understand why public health experts feared that as many as 50 million might die without restrictions in place, if an effective vaccine was still years away.
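For anyone wanting to check how the monthly, daily and annual rates relate, here is a small sketch. It assumes an average-length month (365/12 days) and simply holds each daily rate constant for a full year, which is a simplification of my own and not how the IHME model works.

```python
DAYS_PER_YEAR = 365
DAYS_PER_MONTH = DAYS_PER_YEAR / 12  # average month length (an assumption)


def monthly_to_daily(deaths_per_month):
    """Convert a monthly death count to an average daily rate."""
    return deaths_per_month / DAYS_PER_MONTH


def daily_to_annual(deaths_per_day):
    """Annual deaths if a daily rate were sustained for a whole year."""
    return deaths_per_day * DAYS_PER_YEAR


current_daily = monthly_to_daily(180_000)  # recent reported rate
print(f"Current: {current_daily:,.0f}/day, "
      f"{daily_to_annual(current_daily) / 1e6:.2f} million/year")

projections = (
    ("IHME January projection", 16_000),
    ("IHME without restrictions", 50_000),
)
for label, daily in projections:
    print(f"{label}: {daily / current_daily:.1f}x the current rate, "
          f"{daily_to_annual(daily) / 1e6:.2f} million/year")
```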

But suppose they’re wrong, and the analyses that supported the 0.68% IFR were flawed, which is possible for a host of perfectly understandable, benign reasons. Suppose the antibody and other tests done to date have missed most infections, and that, as the WHO is now suggesting, 10% of the world’s population has already been infected and the actual IFR is hence only 0.13%. Suppose too that an effective vaccine will have been universally administered by December 2021. And finally, let’s suppose that by the end of this year, with death rates flat and hospitalizations manageable, pandemic fatigue has set in and we allow the disease to more or less run its course, continuing to ease restrictions in most of the world.

If herd immunity cuts in at 70%, that would mean the final death toll is likely to be 0.13% x 7.8B x 70% = 7 million deaths. That corresponds to about 300,000 deaths in the US and 35,000 in Canada. If the 0.13% is correct then that means already nearly half the US population has been infected, so it would be 2/3 the way to herd immunity. It would mean that about 20% of Canadians have already been infected, so it’s less than 1/3 the way to herd immunity. Another 25,000 deaths may well be unacceptable to Canadians (only 10,000 have died here so far, mostly in Québec), in which case the US may shrug and return to business as normal, while Canadians may well keep restrictions in place.
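The same back-of-envelope method can be written out for each country. In this sketch the 0.13% IFR, the 70% threshold, the 7.8 billion world population and Canada's 10,000 deaths come from the text above; the US and Canadian population figures (330 million and 38 million) are round numbers I've assumed for illustration, and the function names are mine.

```python
IFR = 0.0013            # the hypothetical lower IFR discussed above
HERD_THRESHOLD = 0.70   # share infected at which herd immunity is assumed

# World figure is the one used in this article; US and Canada are assumed round numbers.
populations = {"World": 7.8e9, "US": 330e6, "Canada": 38e6}


def deaths_at_herd_immunity(population, ifr=IFR, threshold=HERD_THRESHOLD):
    """Total deaths by the time the herd immunity threshold is reached."""
    return population * threshold * ifr


def share_already_infected(deaths_so_far, population, ifr=IFR):
    """Share of the population implied to be infected, given deaths and IFR."""
    return deaths_so_far / ifr / population


for name, pop in populations.items():
    print(f"{name}: {deaths_at_herd_immunity(pop):,.0f} deaths at herd immunity")

canada_share = share_already_infected(10_000, populations["Canada"])
print(f"Canada already infected: {canada_share:.0%}")
print(f"Canada progress toward herd immunity: {canada_share / HERD_THRESHOLD:.0%}")
```

Running `share_already_infected` with a country's own death count is how the "nearly half" and "about 20%" figures are arrived at; the result is only as good as the death count and the assumed IFR.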

But if we do reach a consensus that this disease spread five times faster and farther than we thought, and is hence much less lethal than we thought, what does that mean for our assessment of public health’s performance to date, and what to do next?

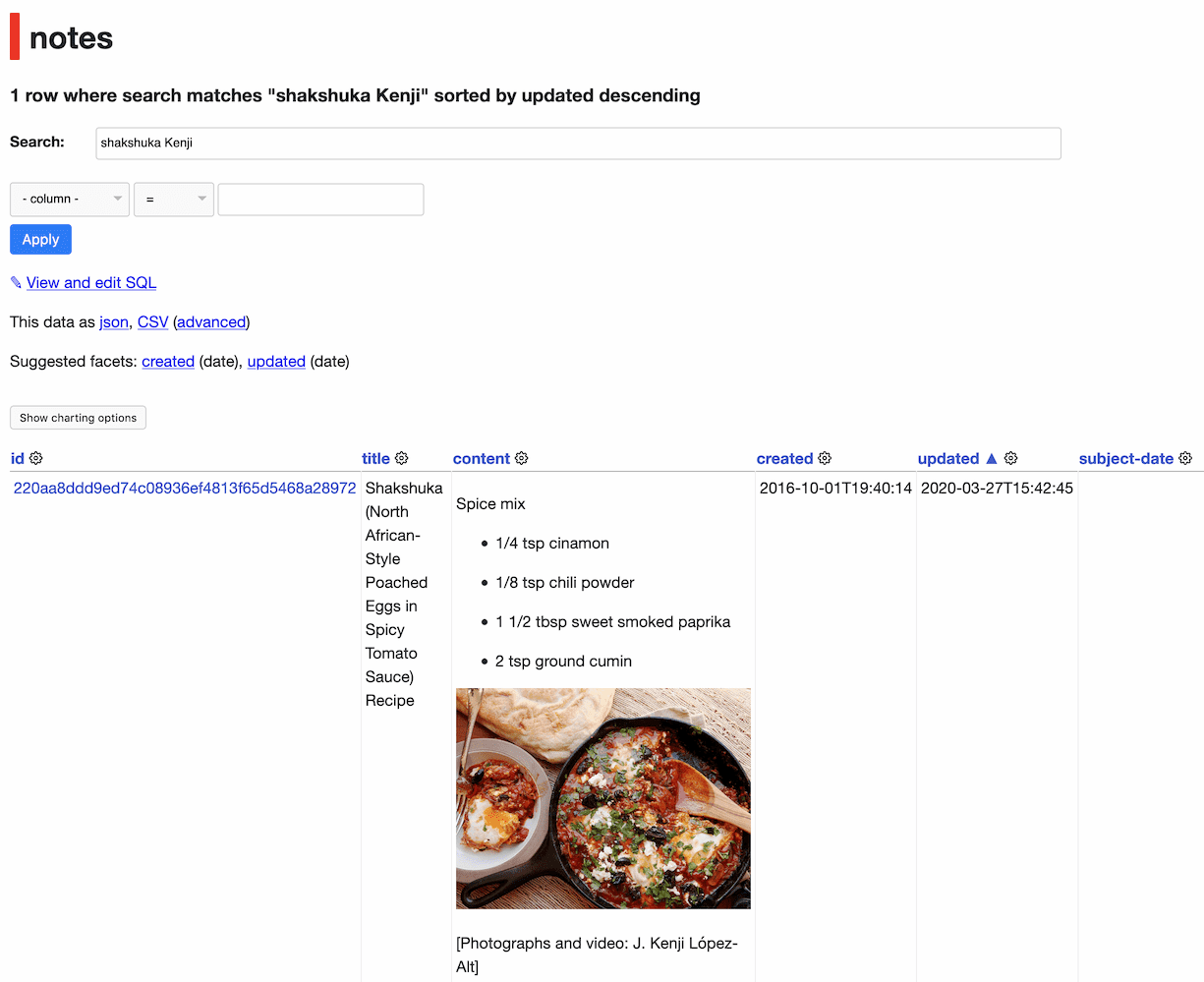

To the conservatives and libertarians who think the whole exercise was overreach, the knee-jerk reaction is that the economy was shuttered unnecessarily, and that putting public health in charge of decision-making, instead of the usual corporatist clowns, was a mistake. I think most public health leaders and organizations (except in the US, where absurd underfunding and political interference have seriously undermined the credibility of most of its public health institutions) have performed masterfully throughout this crisis. If you look at the chart at the top of this post, you can see that they were led (by the best data available at the time) to believe that the choice was between scenarios (1) and (2) shown in blue, ie between a “herd immunity” strategy (1), with 20 or 50 million likely to die, or a severe restrictions strategy (2), with a worst-case outcome of around 8 million deaths. Their choice was obvious, and responsible.

But suppose we now find out that the IFR is actually 0.13%, one fifth of what everyone thought it was, and that nearly half of Americans (except in isolated places like North Dakota, which this month has the highest daily death rate per million in the world) have already been infected? In that case we set scenarios (1) and (2) aside and look instead at 0.13% IFR scenarios (3) — “let herd immunity stop it” — and (4) — “let’s keep the restrictions on to save unnecessary deaths”. That’s a 7 million versus 3.5 million deaths choice. I know what my choice would (still) be. A lower IFR would be great news, and it would change nothing insofar as what should have been done and what we should continue to be doing.

I’d like to go further and see masks made mandatory everywhere — not as a criminal offence for the non-compliant but as a global social convention, a small sacrifice to tell the world we care about each other’s health and lives more than we care about a minor physical discomfort. That could save another million lives, and if it were universally accepted it might well stop this disease cold in its tracks — new cases reduced to zero, virus extinct — in a matter of weeks or at most a few months. Would that ever be a lesson for when (not if) the “big one” (the pandemic that is both highly virulent and highly transmissible, with deaths potentially in the billions) inevitably hits us. If we’re not careful, we now may end up becoming more complacent about pandemics if this one turns out to be “not so bad”, and that could prove disastrous in preparing for and addressing the next one.

A few other points that I’ve made over and over that still bear repeating:

- We still don’t know how this disease kills and sickens us. We’re still too busy fighting the fires to address how much damage they’ve done — how much damage the virus has done to our lungs, brains, hearts and other virus-susceptible organs. Even asymptomatic infected people have shown signs of concerning organ damage whose long-term effect is unknown. In a recent study 88% of CoVid-19 patients hospitalized showed permanent “lung abnormalities” and 75% were still struggling with serious symptoms three months after admission.

- Viruses can mutate, and new ones thrive among overcrowded breeding grounds like our cities and residential institutions (like prisons), especially when the inhabitants are chronically or acutely unwell. The second wave in 1918 did far more damage than the first, despite preparedness, and it mostly killed the young and healthy. We must be much better prepared for the viruses to come. It’s not that expensive.

- Pandemics were rare before 1918, and back then depended on ignorance and unsanitary conditions. They are common now, for three reasons we show no willingness or capacity to address — (1) factory farming, which has been the likely breeding ground for most recent “novel” virus strains, as well as being the source of poisoned, toxic foods that cause many times more human deaths and chronic diseases than pandemics; (2) the plundering of the planet’s last wilderness areas, which house untold numbers of viruses and bacteria to which humans have zero immunity; and (3) the exploitation, for food and quack medicines, of exotic creatures (like bats and pangolins) with very different immune systems from ours, which likewise expose us to all the viruses and bacteria to which they’re immune, when we “consume” them. Until we deal with these causes of pandemics, we had better damned well be ready to sacrifice convenience and profit to deal with their consequences.

- Much has been made about the “trade-off” between health and economy. In case anyone hasn’t been paying attention, our industrial growth economy is killing our world, poisoning and exhausting its life forms, its soils, its air and its water, to the point it’s precipitated the sixth great extinction of life on earth. And all of it — all of it! — accrues to the benefit of the richest 1%, simply to keep their stocks, land, products, options and bonuses growing in value. A temporary halt to that madness isn’t a sacrifice; it’s a chance to take stock of whether the way we live our lives is part of the solution or part of the problem, and hopefully start to remedy it.

Our public health and health care workers are doing their best under terribly trying conditions, and using very, very imperfect information. Let’s please give them the benefit of the doubt and stop with the blaming and shaming and second-guessing of ulterior motives. Nobody knows what’s going on. We’re just trying to figure it out, with everything we’ve got.

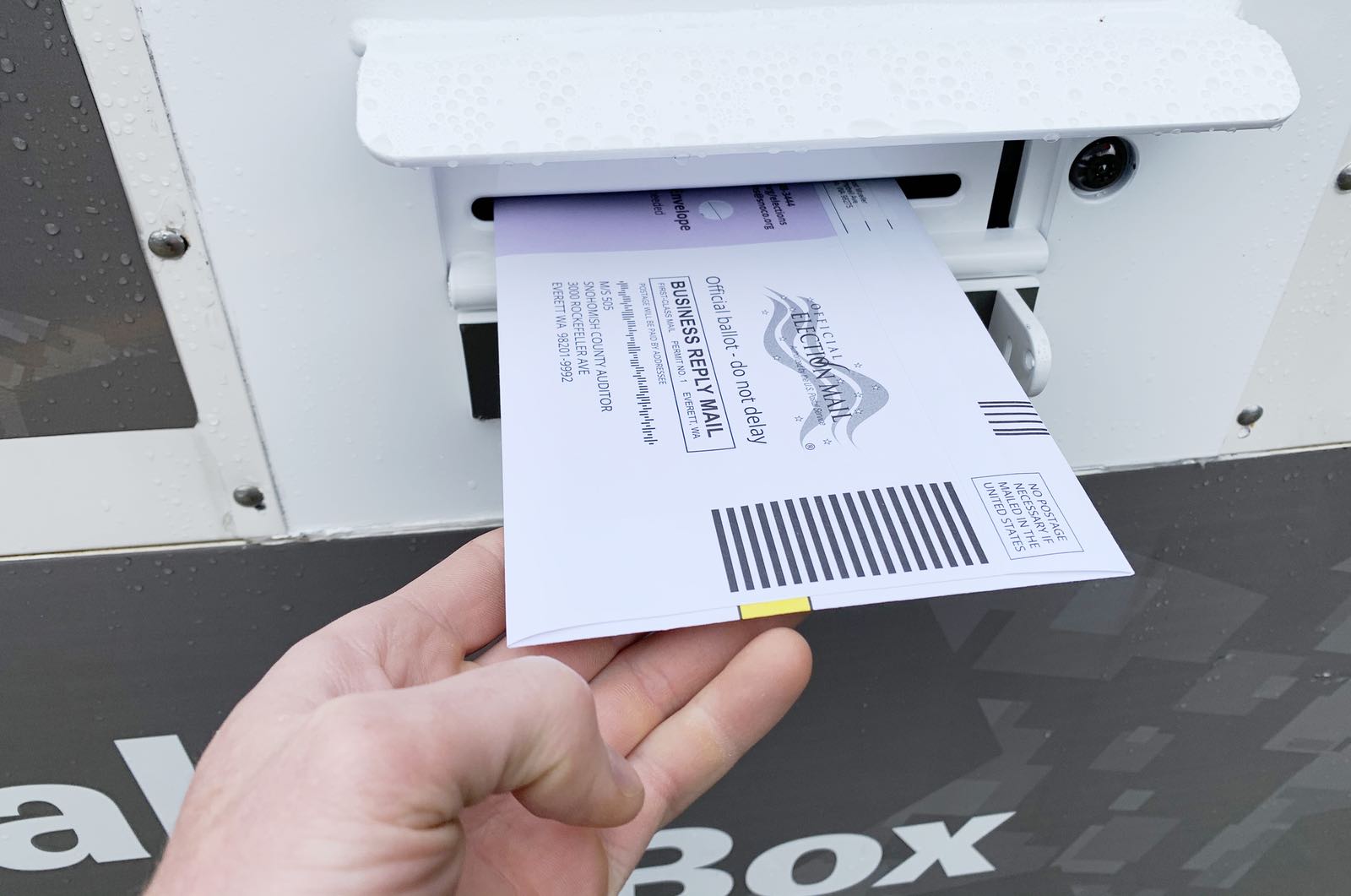

Thanks for reading. Please vote — British Columbians Oct 24th; Americans Nov 3rd, if not before.

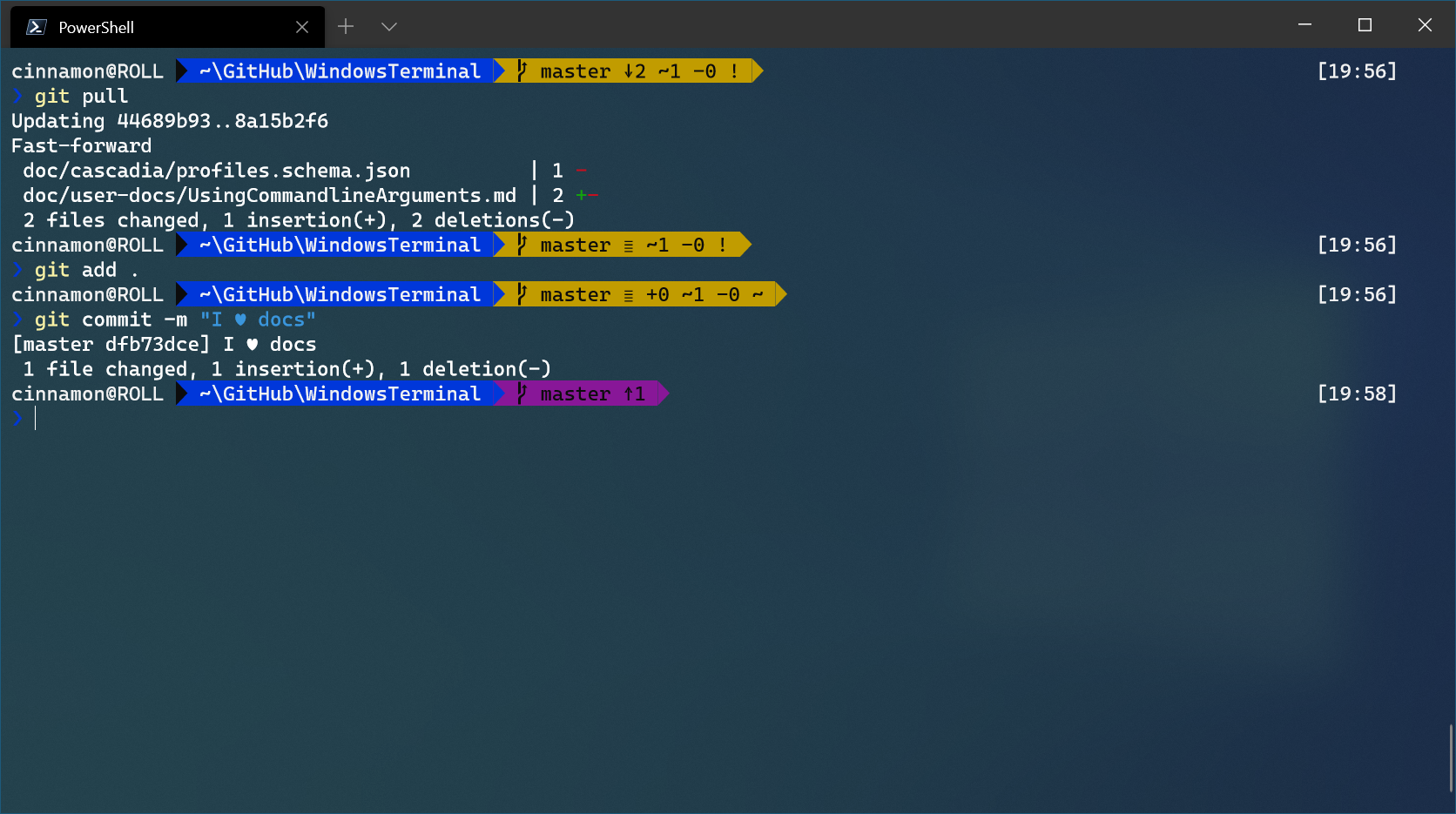


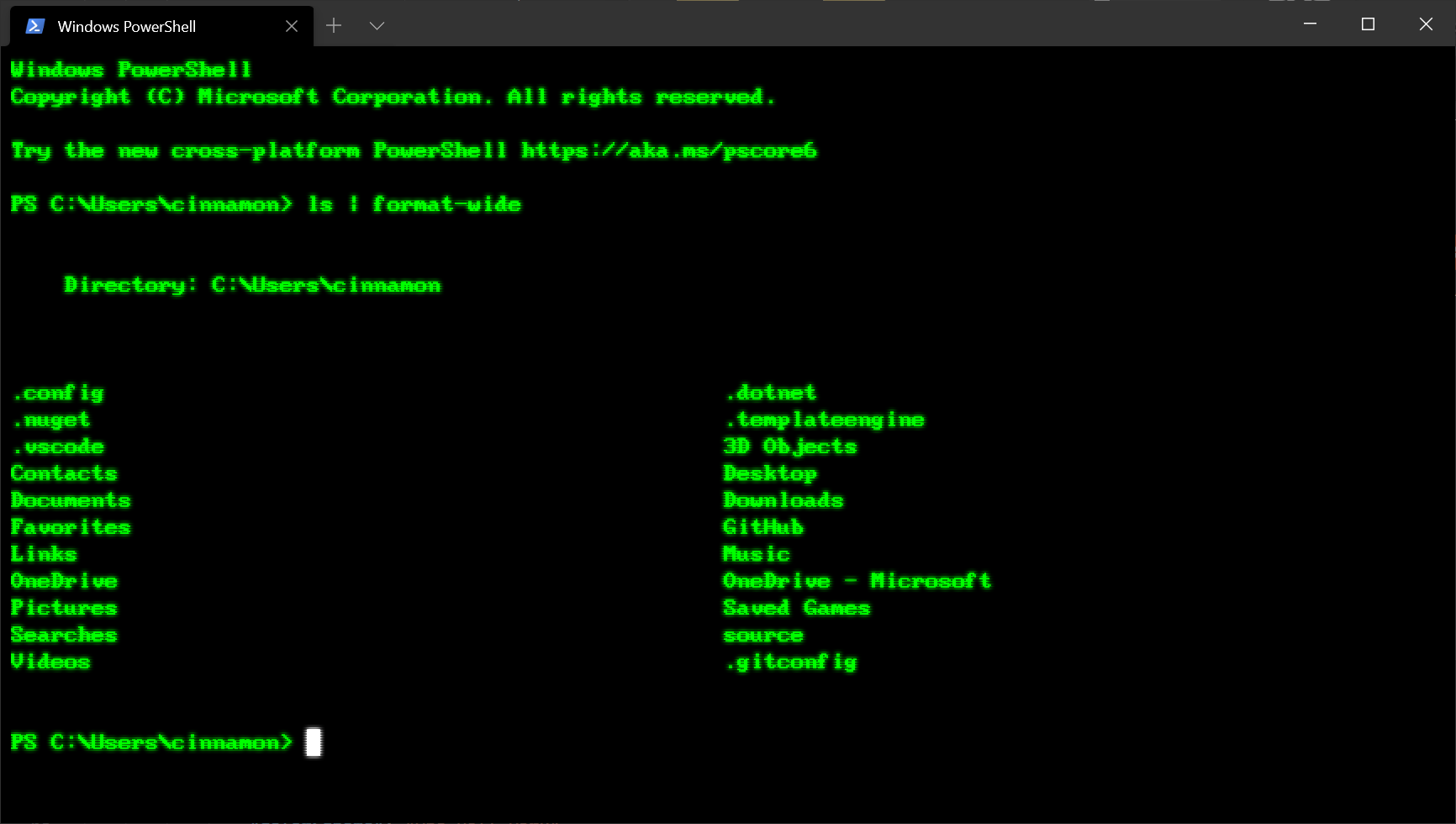

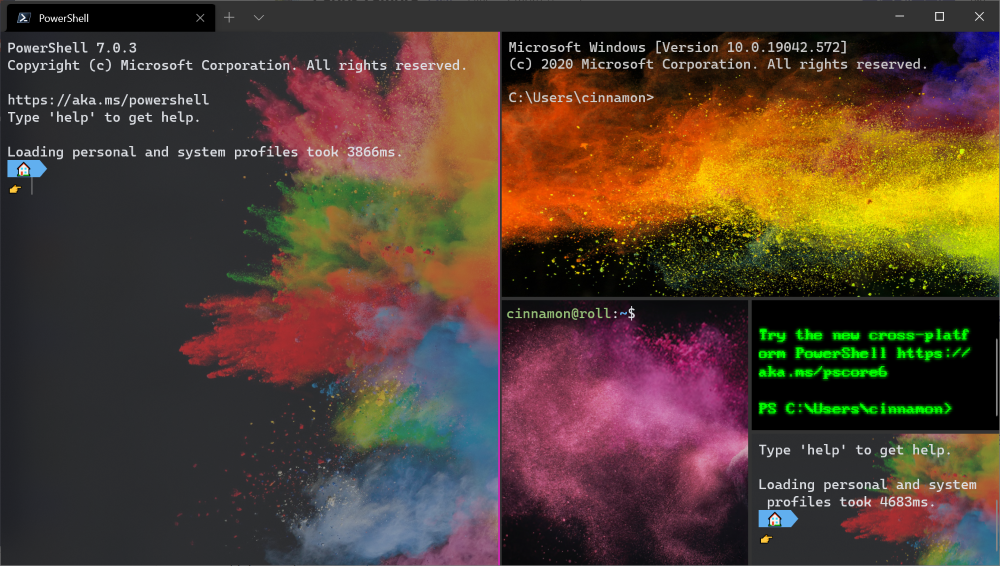
