My dear friend danah boyd led a fascinating day-long workshop at Data and Society in New York City today focused on algorithmic governance of the public sphere. I’m still not sure why she asked me to give opening remarks at the event, but I’m flattered she did, and it gave me a chance to dust off one of my favorite historical stories, as well as showing off a precious desktop toy, an action figure of Ben Franklin, given to me by my wife.

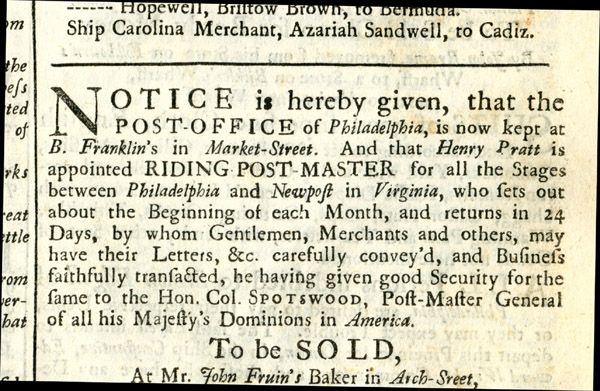

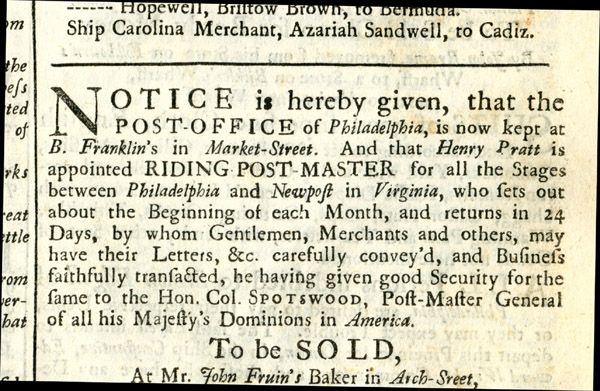

If you’re going to have a favorite founding father, Ben Franklin is not a bad choice. He wasn’t just an inventor, a scientist, a printer and a diplomat – he was a hustler. (As the scholar P. Diddy might have put it, he was all about the Benjamin.) Ben was a businessman, an entrepreneur, and he figured out that one of the best ways to have financial and political power in the Colonies was to control the means of communication. The job he held the longest was as postmaster, starting as postmaster of Philadelphia in 1737 and ending when he was fired from his position as postmaster general of the Colonies in 1774, when the British finally figured out that he was a revolutionary who could not be trusted.

Being in charge of the postal system had a lot of benefits for Ben. He had ample opportunities to hand out patronage jobs to his friends and family, and he wasn’t shy about using franking privileges to send letters for free. But his real genius was in seeing the synergies between the family business – printing – and the post. Early in his career as a printer, Franklin bumped into one of the major challenges to publishers in the Colonies – if the postmaster didn’t like what you were writing about, you didn’t get to send your paper out to your subscribers. Once Ben had control over the post, he instituted a policy that was both progressive and profitable. Any publisher could distribute his newspaper via the post for a small, predictable, fixed fee.

What resulted from this policy was the emergence of a public sphere in the United States that was very different from the one Habermas describes, but one that was uniquely well suited to the American experiment. It was a distributed public sphere of newspapers and letters. And for a nation that spanned the distance between Boston and Charleston, a virtual, asynchronous public sphere mediated by print made more sense than one that centered around physical coffee houses.

Franklin died in 1790, but physician and revolutionary Benjamin Rush expanded on Franklin’s vision for a post office that would knit the nation together and provide a space for the political discussions necessary for a nation of self-governing citizens to rule themselves. In 1792, Rush authored The Post Office Act, which is one of the subtlest and most surprising pieces of 18th century legislation that you’ve never heard of.

The Post Office Act established the right of the government to control postal routes and gave citizens rights to privacy of their mail – which was deeply undermined by the Alien and Sedition Acts of 1798, but hey, who’s counting. But what may be most important about the Post Office Act is that it set up a very powerful cross subsidy. Rather than charging based on weight and distance, as they had before Franklin’s reforms, the US postal system offered tiered service based on the purpose of the speech being exchanged. Exchanging private letters was very costly, while sending newspapers was shockingly cheap: it cost a small fraction of the cost of a private letter to send a newspaper. As a result, newspapers represented 95% of the weight of the mails and 15% of the revenue in 1832. This pricing disparity led to the wonderful phenomenon of cheapskates purchasing newspapers, underlining or pricking holes with a pin under selected words and sending encoded letters home.

The low cost of mailing newspapers, as well as the absence of the stamp taxes and caution money that made it prohibitively expensive to operate a press in England, allowed half of all American households to have a newspaper subscription in 1820, a rate that was orders of magnitude higher than in England or France. But the really crazy subsidy was the “exchange copy”. Newspapers could send copies to each other for free, with carriage costs paid by the post office. By 1840, the average newspaper received 4300 exchange copies a year – they were swimming in content, and thanks to extremely loose enforcement of copyright laws, a huge percentage of what appeared in the average newspaper was cut and pasted from other newspapers. This giant exchange of content was subsidized by high rates on those who used the posts for personal and commercial purposes.

This system worked really well, creating a postal service that was fiscally sustainable, and which aspired to universal service. By 1831, three quarters of US government civilian jobs were with the postal service. In an almost literal sense, the early US state was a postal service with a small representative government attached to it. But the postal system was huge because it needed to be – there were 8700 post offices by 1830, including over 400 in Massachusetts alone, which is saying something, as there are only 351 towns in Massachusetts.

I should note here that I don’t really know anything about early American history – I’m cribbing all of this from Paul Starr’s brilliant The Creation of the Media. But it’s a story I teach every year to my students because it helps explain the unique evolution of the public sphere in the US. Our founders built and regulated the postal system in such a way that its function as a sphere of public discourse was primary and its role as a tool for commerce and personal communication was secondary. They took on this massive undertaking explicitly because they believed that to have a self-governing nation, we needed not only representation in Congress, but a public sphere, a space for conversation about what the nation would and could be. And because the US was vast, and because the goal was to expand civic participation far beyond the urban bourgeois (not universal, of course, limited to property-owning white men), it needed to be a distributed, participatory public sphere.

As we look at the challenge we face today – understanding the influence of algorithms over the public sphere – it’s worth understanding what’s truly novel, and what’s actually got a deep historical basis. The notion of a private, commercial public sphere isn’t a new one. America’s early newspapers had an important civic function, but they were also loaded with advertising – 50-90% of the total content in the late 18th century, which is why so many of them were called The Advertiser. What is new is our distaste for regulating commercial media. Whether through the subsidies I just described or through explicit mandates like the Fairness Doctrine, we’ve not historically been shy in insisting that the press take on civic functions. The anti-regulatory, corporate libertarian stance, built on the questionable assumptions that any press regulation is a violation of the First Amendment and that any regulation of tech-centric industries will retard innovation, would likely have been surprising to our founders.

An increase in inclusivity of the public sphere isn’t new – in England, the press was open only to the wealthy and well-connected, while the situation was radically different in the colonies. And this explosion of media led to problems of information overload. Which means that gatekeeping isn’t new either – those newspapers that sorted through 4300 exchange copies a year to select and reprint content were engaged in curation and gatekeeping. Newspapers sought to give readers what an editor thought they wanted, much as social media algorithms promise to help us cope with the information explosion we face from our friends’ streams of baby photos. The processes editors have used to filter information were never transparent, hence the enthusiasm of the early 2000s for unfiltered media. What may be new is the pervasiveness of the gatekeeping that algorithms make possible, the invisibility of that filtering and the difficulty of choosing which filters you want shaping your conversation.

Ideological isolation isn’t new either. The press of the 1800s was fiercely opinionated and extremely partisan. In many ways, the Federalist and Republican parties emerged from networks of newspapers that shared ideologically consonant information – rather than a party press, the parties actually emerged from the press. But again, what’s novel now is the lack of transparency – when you read the New York Evening Post in 1801, you knew that Alexander Hamilton had founded it, and you knew it was a Federalist paper. Research by Christian Sandvig and Karrie Karahalios suggests that many users of Facebook don’t know that their friend feed is algorithmically curated, and don’t realize the way it may be shaped by the political leanings of their closest friends.

So I’m not here as a scholar of US press and postal history, or a researcher on algorithmic shaping of the public sphere. I’m here as a funder, as a board member of Open Society Foundation, one of the sponsors of this event. OSF works on a huge range of issues around the world, but a common thread to our work is our interest in the conditions that make it possible to have an open society. We’ve long been convinced that independent journalism is a key enabling factor of an open society, and despite the fact that George Soros is not exactly an active Twitter user, we are deeply committed to the idea that being able to access, publish, curate and share information is also an essential precursor to an open society, and that we should be engaged with battles against state censorship and for a neutral internet.

A little more than a year ago, OSF got together with a handful of other foundations – our co-sponsor MacArthur, the Ford Foundation, Knight, Mozilla – and started talking about the idea that there were problems facing the internet that governments and corporations were unlikely to solve. We started asking whether there was a productive role the foundation and nonprofit community could play in this space, around issues of privacy and surveillance, accessibility and openness, and the ways the internet can function as a networked public sphere. We launched the NetGain challenge last February, designed to solicit ideas on what problems foundations might take on. This summer, we held a deep dive on the question of the pipeline of technical talent into public service careers and have started funding projects focused on identifying, training, connecting and celebrating public interest technologists.

The NetGain Challenge: Ethan Zuckerman from Ford Foundation on Vimeo.

We know that the digital public sphere is important. What we don’t know is what, if anything, we should be doing to ensure that it’s inclusive, generative, more civil… less civil? We know we need to know more, which is why we’re here today.

I want to understand what role algorithms are really playing in this emergent public sphere, and I’m a big fan of entertaining the null hypothesis. I think it’s critical to ask what role algorithms are really playing, and whether – as Eytan Bakshy and Lada Adamic’s research suggests – echo chambers are more a product of users’ choices than of algorithmic intervention. (I argue in Rewire that while filter bubbles may be real, homophily is a far more powerful constraint on your access to information.) We need to situate the power of algorithms in relation to cultural and individual factors.

We need to understand what are potential risks and what are real risks. Much of my current work focuses on the ways making and disseminating media is a way of making social change, especially through attempting to shape and mold social norms. Algorithmic control of the public sphere is a very powerful factor if that’s the theory of change you’re operating within. But the feeling of many of my colleagues in the social change space is that the work we’re doing here today is important because we don’t fully understand what algorithmic control means for the public sphere, which means it’s essential that we study it.

danah and her team have brought together an amazing group of scholars, people doing cutting edge work on understanding what algorithmic governance and control might and can mean. What I want to ask you to do is expand out beyond the scholarly questions you’re taking on and enter the realm of policy. As we figure out what algorithms are and aren’t doing to our civic dialog, what would we propose to do? How do we think about engineering a public sphere that’s inclusive, diverse and constructive without damaging freedom of speech, freedom to dissent, freedom to offend? How do we propose shaping engineered systems without damaging the freedom to innovate and create?

I’m finding that many of my questions these days boil down to this one: what do we want citizenship to be? That’s the essential question we need to ask when we consider what we want a public sphere to do – what do we expect of citizens, and what would they – we – need to fully and productively engage in civics. That’s a question our founders were asking almost three hundred years ago when Franklin started turning the posts and print into a public sphere, and it’s the question I hope we’ll take up today.
