I had never wanted to be a programmer exactly, but as I was introduced to Haskell, certain aspects of it appealed to me and I grew to love it. I present them here to help me organize my own thoughts and remember why Haskell is fun. One of the things I most enjoy in life is when a bunch of facts that you usually learn independently and seem separate can be fitted together into a tidy, coherent package or progression. Haskell offers a lot of opportunities for this.

Today I’d like to share with you the good news about currying. Most of what follows is in the book and there are approximately 10,000 blog posts about currying already, so why write one more? Because I’d like to take it a step farther than most people do.

The joy of currying for me was in finding that there was consistency in how kinds work – kinds being the types of types – such that I could know how some functors would work based on what I already knew.

Maybe I take joy in strange things.

The Function Datatype

It took me some time to come to grips with the idea that a lot of types aren’t just types; they’re type constructors and function-like in that they must be applied to some argument to construct a value of that type. It took me a bit longer to understand that the function arrow you see in type signatures is also a type constructor. It doesn’t look or act precisely like other type constructors, but don’t let that fool you.

The (->) type constructor is a product type with two parameters and no data constructors:

data (->) a bWe have two polymorphic type parameters here, a and b. A function takes an a argument and returns b. It has no data constructors, but it does construct a value – constructing a function constructs value of type a -> b.

But these two parameters only allow for one input and one output. So it must be true that Haskell functions take one argument and return one result.

We don’t know anything about our parameters, though, so they can be any type – including more functions. They may be different types, or they may be the same type. In other words, one function could take a function as the input argument and return a function as the output. If it does one or the other or both, we have a higher-order function.

Currying takes advantage of this ability of functions to return more functions. We know that all functions take one argument and return one result, but we also need the ability to write multiparameter functions. Currying, which happens by default in Haskell, allows us to write multiparameter functions that are really nested single-parameter functions.

The (->) associates to the right so that this:

function :: a -> b -> c -> dthat appears to have three arguments is really:

function :: a -> (b -> (c -> d))Applying the function to an a results in another function and so on.

So, any “multiparameter” function is a series of nested one-argument functions, and any of those arguments could theoretically be functions. It’s this default currying that enables the cool thing called partial application. We’ll look at that in a moment.

Higher-Order Functions

Right, so the (->) type constructor has two parameters, and either argument can itself be a function. When we pass a function in as input to a function, we write the type like this:

function :: (a -> b -> c) -> b -> a -> cwith parentheses around that first input that is itself a function. If we didn’t, it would associate to the right, and we’d end up with a mess. Parenthesizing it means that function is one argument to our larger function. The right associativity and currying still apply, so in some way, what we have is:

function :: (a -> (b -> c)) -> (b -> (a -> c))but this is done by default.

One of the important points about functional programming is supposed to be that functions are first class. Since Haskell is my first language, I suppose I take it for granted and never understood the significance (I get why higher-order functions are useful and good, but it just seems so bloody natural to me). But what it means is what we’ve seen: functions can be passed as arguments or returned as values. They may also be stored in variables or data structures, like other values.

Currying and Partial Application

Partial application and why it’s useful is covered quite well by a number of other blog posts so I’ll try not to go on too long about it, and anyway I want to get to the exciting stuff soon.

While the function type associates to the right, function application associates to the left. So,

f x y

-- is really

(f x) ywhere f is the function and x and y are the input arguments.

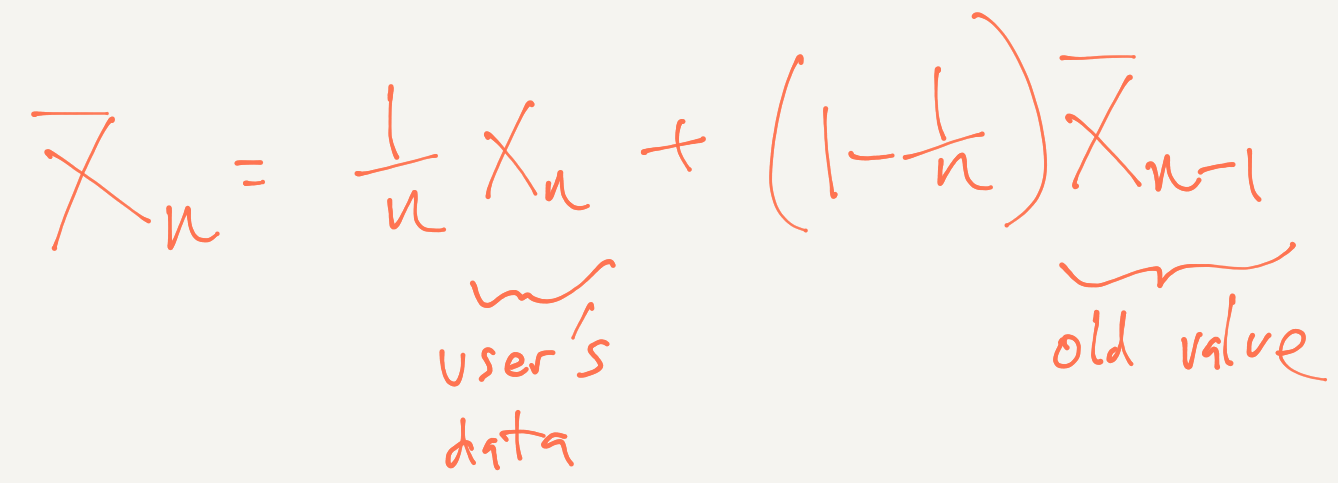

When we partially apply a function, we see a reduction in the parameters of the function’s type signature:

(+) :: Num a => a -> a -> a

-- partially applied

Prelude> let add5 y = 5 + y

Prelude> :t add5

add5 :: Num a => a -> aOur new function, add5, will accept one input argument and return a result. Not terribly exciting, but, in fact, this gives us a way to define any number of useful functions, such as:

sum = foldr (+) 0

reverse = foldl (flip (:)) []In both of those, we’ve partially applied folding functions to create functions that take one list input, without having to rewrite the other arguments over and over. Pretty sweet.

We can even compose partially applied functions:

(take 5 . filter even . enumFrom) 4enumFrom takes one input and generates a list, while both filter and take require lists as their second inputs, not their first ones:

take :: Int -> [a] -> [a]

filter :: (a -> Bool) -> [a] -> [a]By applying them to one argument each (5 and even), they become functions prepared to accept the list generated by enumFrom as input.

Currying allows you to build more specialized functions via partial application, so the order of arguments is a design concern with functions you’ll want to reuse. For example, you’re more likely to want to build a filtering function that will filter all the zeroes out of any list it is given, which can be easily done by partially applying filter to a test for nonequality with 0, than to build one where filter is partially applied to some list first and awaits its filtering criterion. A similar idea gives us the partially applied folds that work as sum and reverse functions.

Kinds

All of that is very nice, but for me the really cool part came when I discovered that the types of types, called kinds, work the same way, and knowing this about kinds gives you quite a lot of information about how other things work, things that seem complicated and mind-bending when you approach them cold. So many things just made sense when I realized they’re a logical progression from how the function type works.

So, in case you’re not familiar with kinds already, here’s a very short rundown. Kinds are represented by stars, (*). Some datatypes, the ones that don’t take any arguments, are essentially type constants rather than type constructors, just as some data constructors are constant values (think of True and False – they don’t construct a value, really, they just are). Those datatypes, such as Bool are a concrete type, so they have one star:

Prelude> :kind Bool

Bool :: *That tells us that the kind of Bool is just one star. It’s not a constructor; that is, it’s not a function. It’s a concrete type – like a value at the type level.

On the other hand, List and Maybe, dataytypes which must be applied to a type argument before they can construct a value of that type, are represented at the kind level with a function arrow:

Prelude> :kind []

[] :: * -> *

Prelude> :kind Maybe

Maybe :: * -> *See, they’re functions! You apply them to an argument and construct a type.

Types that have more than two arguments have even more stars. A datatype called Either takes two parameters:

data Either a b = Left a | Right band the a is in the Left side of the sum type, while the b goes to the Right. Two parameters means it has three stars:

Prelude> :kind Either

Either :: * -> * -> *To construct data of this type, then, we need to apply it to two arguments. Now, just like functions, this associates to the right, and you can partially apply it:

Prelude> :k (Either Int)

(Either Int) :: * -> *

Prelude> :k (Either Int String)

(Either Int String) :: *Woot! It does just what we expect from watching what happened to the types of functions as we partially applied them.

And just the way there’s an interaction between argument order and likelihood of partial application for functions, there’s a similar interaction here. To understand why, let’s look at how Functor works.

The Functor will see you now

In case you’re not familiar with Functor already, a hand-wavy explanation is that it’s a generalization of a map function. Just as you can map a function over a list:

Prelude> map (<3) [1, 2, 3]

[True,True,False]You can fmap a function over a lot of different types of structured data:

Prelude> fmap (<3) (Just 4)

Just FalseBut Functor needs a type that is kind * -> * – if it’s * then there’s nothing to apply a function to; if it’s * -> * -> * then there’s too much to apply a function to, so * -> * is the kind that’s just right for Functor:

class Functor (f :: * -> *) where

fmap :: (a -> b) -> f a -> f bThe f structure that we lift the function over needs to be kind * -> *. Incidentally, the infix operator for fmap is <$> so the following are equivalent:

fmap (*8) [4, 5]

(*8) <$> [4, 5]So, how do we get a Functor for Either? We saw above what partially applying Either does to its kind signature, and we see that fmapping over Either values looks like this:

Prelude> fmap (+1) (Left 1)

Left 1

Prelude> fmap (+1) (Right 1)

Right 2Our function can’t be applied to whatever is inside the Left because what is inside Left is our a and that’s what we had to partially apply to make Either have the kind * -> *. When we write a Functor instance for Either (or tuples, or any datatype with two parameters) to tell the compiler how fmap should work for that type, we have to partially apply the type constructor which makes the first type argument (or arguments, if our datatype has more than two parameters) part of the structure that Functor lifts over.

Anyway, so we’re finally getting to the part that was really exciting for me, although it seems to follow so naturally from what came before, it’s almost a disappointment. Almost.

The Functor of Functions

Friends, the function type itself has a Functor instance. It also has Applicative and Monad instances, but first thing’s first.

Since the function type has two parameters, it is kind * -> * -> * so right away you know we have to apply that first argument before we can get a Functor for it.

It feels a little different from Either, though, because now it isn’t whatever type is safely contained within the Left a of our Either type that becomes part of the structure – now, it’s the first input to our function that will be part of the “structure.”

For the Functor, this only amounts to function composition. We can see this by comparing the types:

-- function composition

(.) :: (b -> c) -> (a -> b) -> (a -> c)

-- fmap

<$> :: (a -> b) -> f a -> f b

-- we can change letters without changing the meaning

:: (b -> c) -> (a -> b) -> (a -> c)

:: (b -> c) -> f b -> f c

-- f here is ((->) a)

-- that is, a partially applied function

:: (b -> c) -> (a -> b) -> (a -> c)

:: (b -> c) -> ((->) a) b -> ((->) a) c

-- change the prefix notation into infix

:: (b -> c) -> (a -> b) -> (a -> c)

:: (b -> c) -> (a -> b) -> (a -> c)They’re the same! Which means the Functor of functions isn’t terribly exciting! What is exciting is that something that at first seemed weird and alienating – what do you mean you fmap a partially applied function over another partially applied function? – isn’t so weird. It follows from what we already knew.

The Applicative of functions is pretty exciting, though, because it allows us to create a context in which two functions are awaiting the same input and a third function can apply to the result of both of those functions, once that input is supplied.

Waaaaat? No, for real:

Prelude> ((+) <$> (^2) <*> (+10)) 4

30Our two partially applied functions, (^2) and (+10), are waiting for an input; the tie-fighter-looking operator between them is our applicative operator. We’re also fmapping (+) over that whole deal so that the results of both those functions will be the two arguments to the addition function. Essentially, something like this:

(+) (4^2) (4+10)

-- 16 + 14

30Meh, what a lot of trouble. Who wants to write all that out all the time?

Of course we don’t want to do that, so we just give it a name:

function :: Integer -> Integer

function = ((+) <$> (^2) <*> (+10))

Prelude> function 4

30

Prelude> function 5

40These trivial arithmetic examples in blog posts admittedly always seem…well, trivial. But it turns out to be a handy pattern:

function = (&&) <$> (> 14.5) <*> (< 20.1)

Prelude> function 3

False

Prelude> function 16

TrueWe’ll return True whenever the input is between 14.5 and 20.1 (but not equal to either).

So partial application and currying have allowed us to create a context in which we have functions sort of hanging around waiting for some input to come from somewhere in the environment, and we don’t need to keep stringing that argument explicitly through every function. The Monad and transformer versions, called Reader and ReaderT, are quite commonly used because the pattern is so useful.

Sometimes people ask us why we don’t split the book into two volumes. What it comes down to is that all the really important stuff is in the first half, but people always think that to understand Haskell, they need the second half (and they do! there’s a lot of stuff in there that would be difficult and tiresome to work out for yourself!). But much of what comes in the second half is just more complex ways to nest lambdas and apply and compose functions, which is what the first half is all about.

Many thanks to John DeGoes and Adam McCullough for their thorough, though not at all brutal, feedback.

Next post is likely to be a disquisition about disjunction.

You can see their picture

You can see their picture