2016-08-13 UPDATE: Fortune

has a story on this and it does seem to be tax-related vs ideology.

@thosjleeper suggested something similar as well about a week ago.

If you’re even remotely following the super insane U.S. 2016 POTUS circus election you’ve no doubt seen a resurgence of “if X gets elected, I’m moving to Y” claims by folks who are “anti” one candidate or another. The Washington Examiner did a story on last quarter’s U.S. expatriation numbers. I didn’t realize we had a department in charge of tracking and posting that data, but we do thanks to inane bureaucratic compliance laws.

I should have put “posting that data” in quotes as it’s collected quarterly and posted ~2 months later in non-uniform HTML and PDF form across individual posts in a unique/custom Federal Register publishing system. How’s that hope and change in “open government data” working out for y’all?

The data is organized enough that we can take a look at the history of expatriation with some help from R. Along the way we’ll:

- see how to make parameterized web requests a bit cleaner with

httr

- get even more practice using the

purrr package

- perhaps learn a new “trick” when using the

stringi package

- show how we can “make do” living in a non-XPath 2 world (it’s actually pretty much at XPath 3 now, too #sigh)

A manual hunt on that system will eventually reveal a search URL that you can use in a read.csv() (to grab a list of URLs with the data, not the data itself #ugh). Those URLs are gnarly (you’ll see what I mean if you do the hunt) but we can take advantage of the standardized URL query parameter that are used in the egregiously long URLs in a far more readable fashion if we use httr::GET() directly, especially since httr::content() will auto-convert the resultant CSV to a tibble for us since the site sets the response MIME type appropriately.

Unfortunately, when using the 6039G search parameter (the expatriate tracking form ID) we do need to filter out non-quarterly report documents since the bureaucrats must have their ancillary TPS reports.

library(dplyr)

library(httr)

library(rvest)

library(purrr)

library(lubridate)

library(ggplot2) # devtools::install_github("hadley/ggplot2")

library(hrbrmisc) # devtools::install_github("hrbrmstr/hrbrmisc")

library(ggalt)

library(grid)

library(scales)

library(magrittr)

library(stringi)

GET("https://www.federalregister.gov/articles/search.csv",

query=list(`conditions[agency_ids][]`=254,

`conditions[publication_date][gte]`="01/01/2006",

`conditions[publication_date][lte]`="7/29/2016",

`conditions[term]`="6039G",

`conditions[type][]`="NOTICE")) %>%

content("parsed") %>%

filter(grepl("^Quarterly", title)) -> register

glimpse(register)

## Observations: 44

## Variables: 9

## $ citation <chr> "81 FR 50058", "81 FR 27198", "81 FR 65...

## $ document_number <chr> "2016-18029", "2016-10578", "2016-02312...

## $ title <chr> "Quarterly Publication of Individuals, ...

## $ publication_date <chr> "07/29/2016", "05/05/2016", "02/08/2016...

## $ type <chr> "Notice", "Notice", "Notice", "Notice",...

## $ agency_names <chr> "Treasury Department; Internal Revenue ...

## $ html_url <chr> "https://www.federalregister.gov/articl...

## $ page_length <int> 9, 17, 16, 20, 8, 20, 16, 12, 9, 15, 8,...

## $ qtr <date> 2016-06-30, 2016-03-31, 2015-12-31, 20...

Now, we grab the content at each of the html_urls and save them off to be kind to bandwidth and/or folks with slow connections (so you don’t have to re-grab the HTML):

docs <- map(register$html_url, read_html)

saveRDS(docs, file="deserters.rds")

That generates a list of parsed HTML documents.

The reporting dates aren’t 100% consistent (i.e. not always “n” weeks from the collection date), but the data collection dates embedded textually in the report are (mostly…some vary in the use of upper/lower case). So, we use the fact that these are boring legal documents that use the same language for various phrases and extract the “quarter ending” dates so we know what year/quarter the data is relevant for:

register %<>%

mutate(qtr=map_chr(docs, ~stri_match_all_regex(html_text(.), "quarter ending ([[:alnum:], ]+)\\.",

opts_regex=stri_opts_regex(case_insensitive=TRUE))[[1]][,2]),

qtr=mdy(qtr))

I don’t often use that particular magrittr pipe, but it “feels right” in this case and is handy in a pinch.

If you visit some of the URLs directly, you’ll see that there are tables and/or lists of names of the expats. However, there are woefully inconsistent naming & formatting conventions for these lists of names and (as I noted earlier) there’s no XPath 2 support in R. Therefore, we have to make a slightly more verbose XPath query to target the necessary table for scraping since we need to account for vastly different column name structures for the tables we are targeting.

NOTE: Older HTML pages may not have HTML tables at all and some only reference PDFs, so don’t rely on this code working beyond these particular dates (at least consistently).

We’ll also tidy up the data into a neat tibble for plotting.

map(docs, ~html_nodes(., xpath=".//table[contains(., 'First name') or

contains(., 'FIRST NAME') or

contains(., 'FNAME')]")) %>%

map(~html_table(.)[[1]]) -> tabs

data_frame(date=register$qtr, count=map_int(tabs, nrow)) %>%

filter(format(as.Date(date), "%Y") >= 2006) -> left

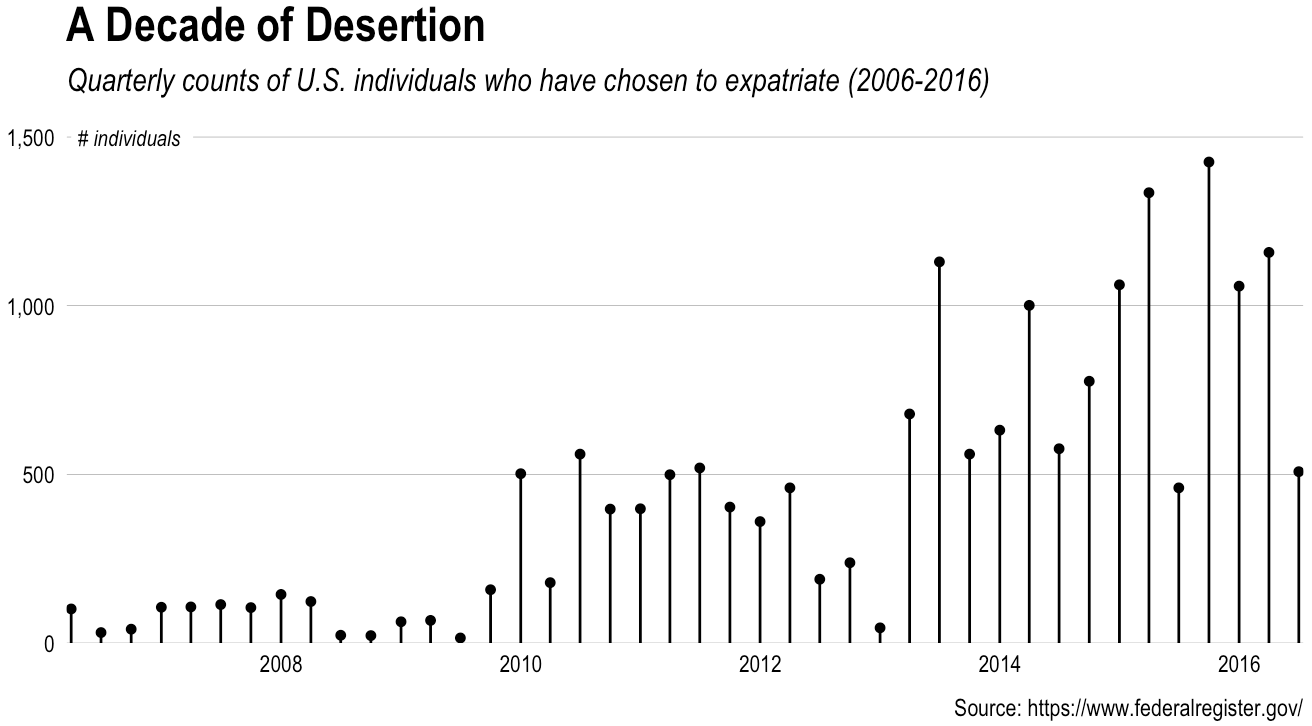

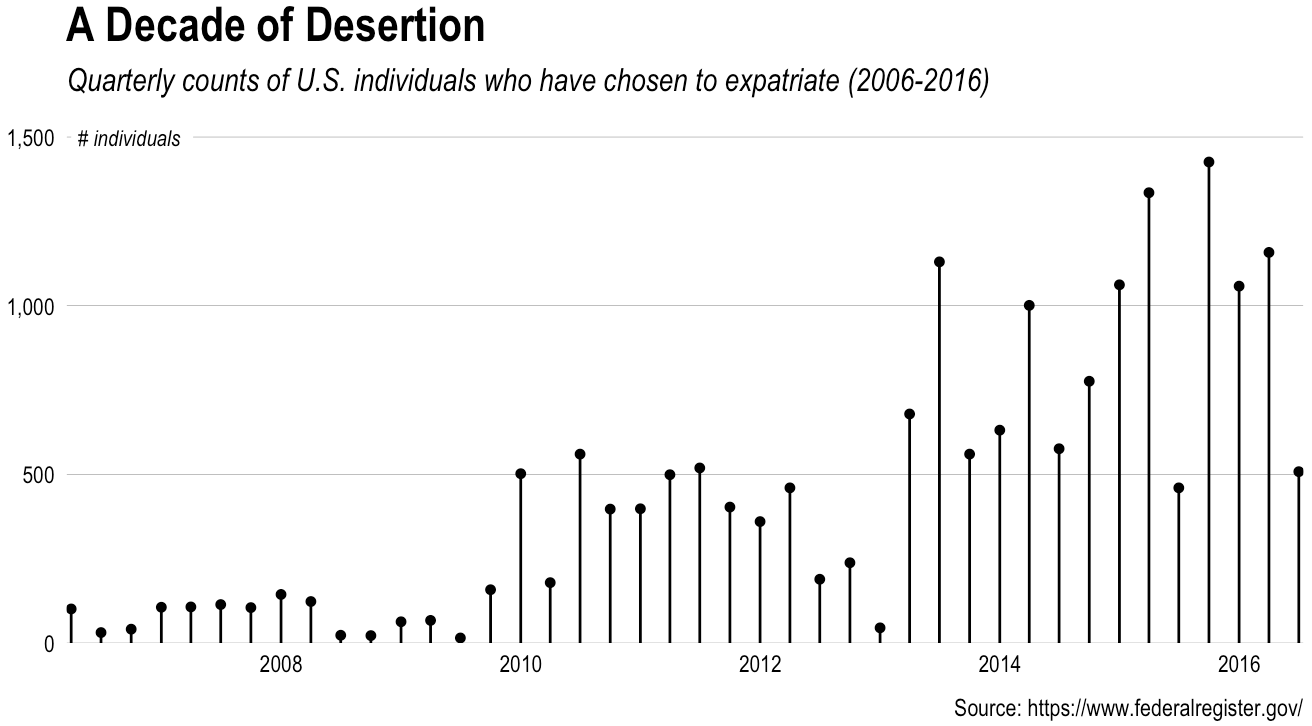

With the data wrangling work out of the way, we can tally up the throngs of folks desperate for greener pastures. First, by quarter:

gg <- ggplot(left, aes(date, count))

gg <- gg + geom_lollipop()

gg <- gg + geom_label(data=data.frame(),

aes(x=min(left$date), y=1500, label="# individuals"),

family="Arial Narrow", fontface="italic", size=3, label.size=0, hjust=0)

gg <- gg + scale_x_date(expand=c(0,14), limits=range(left$date))

gg <- gg + scale_y_continuous(expand=c(0,0), label=comma, limits=c(0,1520))

gg <- gg + labs(x=NULL, y=NULL,

title="A Decade of Desertion",

subtitle="Quarterly counts of U.S. individuals who have chosen to expatriate (2006-2016)",

caption="Source: https://www.federalregister.gov/")

gg <- gg + theme_hrbrmstr_an(grid="Y")

gg

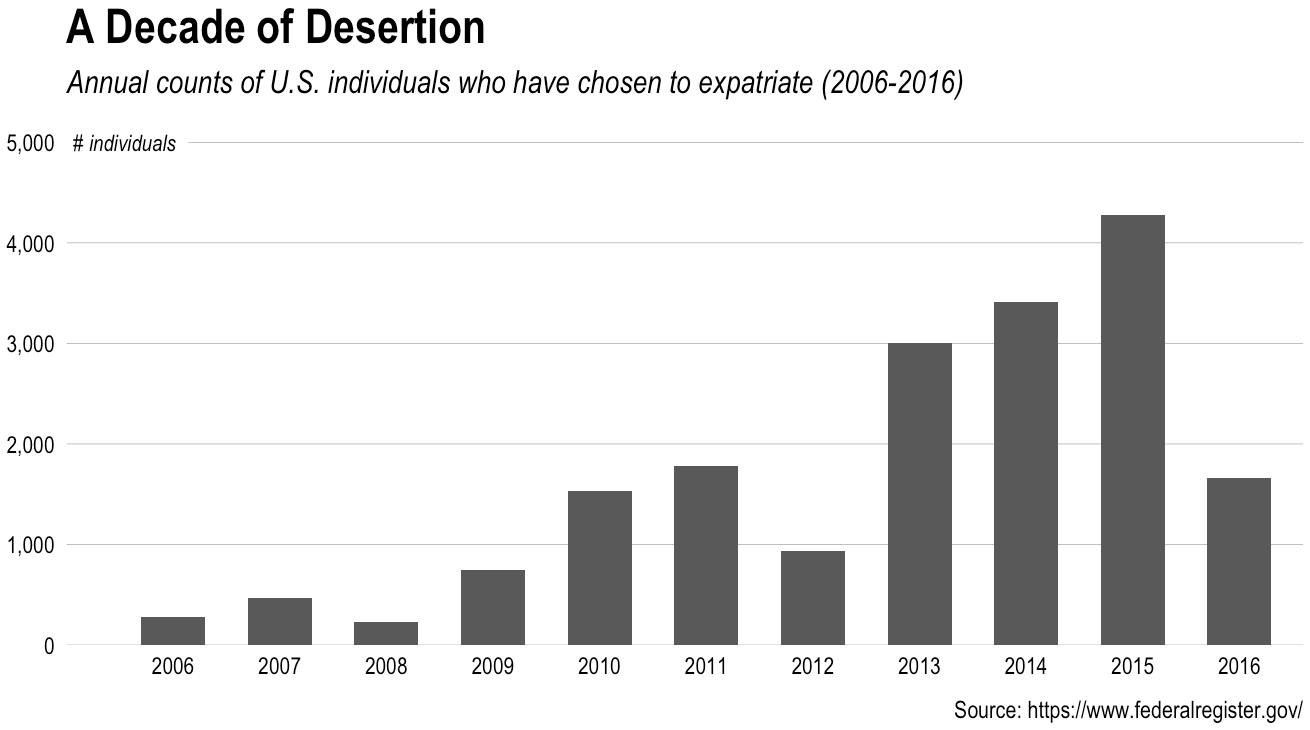

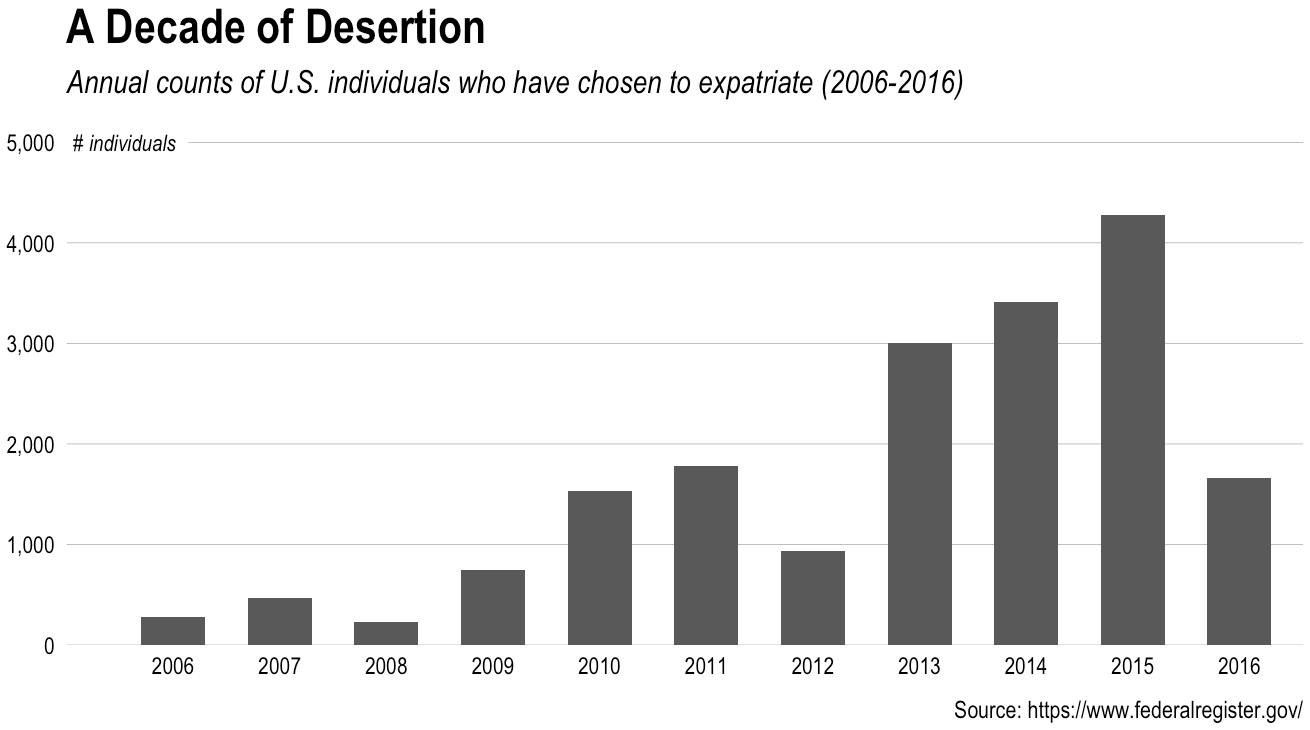

and, then annually:

left %>%

mutate(year=format(date, "%Y")) %>%

count(year, wt=count) %>%

ggplot(aes(year, n)) -> gg

gg <- gg + geom_bar(stat="identity", width=0.6)

gg <- gg + geom_label(data=data.frame(), aes(x=0, y=5000, label="# individuals"),

family="Arial Narrow", fontface="italic", size=3, label.size=0, hjust=0)

gg <- gg + scale_y_continuous(expand=c(0,0), label=comma, limits=c(0,5100))

gg <- gg + labs(x=NULL, y=NULL,

title="A Decade of Desertion",

subtitle="Annual counts of U.S. individuals who have chosen to expatriate (2006-2016)",

caption="Source: https://www.federalregister.gov/")

gg <- gg + theme_hrbrmstr_an(grid="Y")

gg

The exodus isn’t massive but it’s actually more than I expected. It’d be interesting to track various US tax code laws, enactment of other compliance regulations and general news events to see if there are underlying reasons for the overall annual increases but also the dips in some quarters (which could just be data collection hiccups by the Feds…after all, this is government work). If you want to do all the math for correcting survey errors, it’d also be interesting to normalize this by population and track all the data back to 1996 (when HIPPA mandated the creation & publication of this quarterly list) and then see if you can predict where we’ll be at the end of this year (though I suspect political events are a motivator for at least a decent fraction of some of the quarters).

Google today announced that it has officially started rolling out the “WiFi only” mode for Google Maps on Android. The feature was earlier in a limited beta testing by the company.

Google today announced that it has officially started rolling out the “WiFi only” mode for Google Maps on Android. The feature was earlier in a limited beta testing by the company.