The Elder Scrolls V: Skyrim VR is right around the corner with a release date of November 17th, 2017, only days away. When it launches this Friday, it will easily rank as the largest, most detailed, and most elaborate game available in VR to date. With hundreds of hours of gameplay between the core campaign, side content, and three expansions, players will be able to lose themselves once again in the frosted wastelands of Skyrim.

When Skyrim originally released back in 2011, it was lauded as a landmark achievement for roleplaying games, and its impact on the industry is still being felt today. Bethesda teamed up with Escalation Studios to port the title to VR and rebuild many of the controls and interface options from the ground up. On PSVR you can play with either a DualShock 4 gamepad or two PS Move controllers, with full head-tracking and a host of movement options. With Move controllers you control each hand individually: you cast spells from either hand, swing a sword with your own arm, and block attacks with a shield strapped to your forearm. It's Skyrim like you've never experienced it before.

We also got the chance to send over a handful of questions to the Lead Producer at Bethesda Game Studios, Andrew Scharf, before the game’s launch to learn more about its development and what it took to bring the vast world of Tamriel to VR for the very first time.

UploadVR: All of Skyrim is in VR, which is quite ambitious. What were the biggest challenges with porting the game to a new format like VR?

Andrew Scharf: PlayStation VR games need to run at 60 fps at all times; otherwise it can be an uncomfortable experience for the player. We're working with a great team at Escalation Studios, who are among the best VR developers in the industry, and with their help we were able not only to get the game running smoothly, but also to redesign and shape Skyrim's mechanics to feel good in VR.

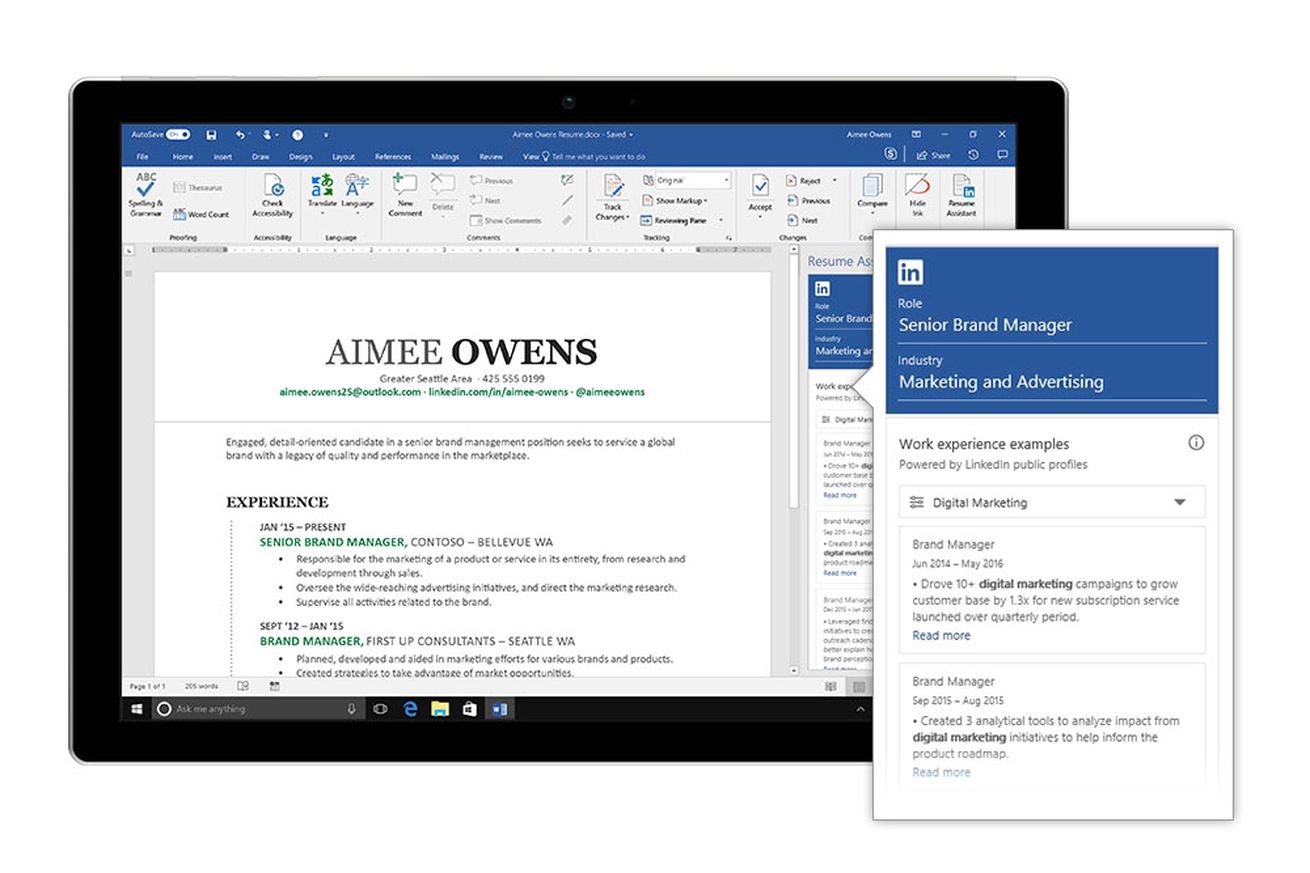

It's definitely a challenge figuring out the best way to display important information in VR. Take the World Map and the Skills menu, for example: we wanted to give the player an immersive 360-degree view when choosing where to travel, or when viewing the constellations and deciding which perk to enable. For the HUD, we needed to make sure important information was in an area where the player could quickly refer to it, while also keeping the player from feeling claustrophobic by being completely surrounded by user interface elements.

UploadVR: What are the major differences with developing for PSVR and HTC Vive?

Andrew Scharf: The major difference that took iteration is the single camera that PSVR uses, versus the HTC Vive base stations. To ensure an optimal VR experience, the PS Move controllers need to be in view of the camera, which means players always need to be facing in that direction. Early on, we found that this was a bit of a challenge: players would put on the headset, turn all the way around, and start going in a random direction. One solution to help keep players facing the right way was to anchor important UI elements, so if you can see the compass in front of you, you're facing in the right direction.

The PlayStation Move controllers also have several buttons, while the HTC Vive controllers primarily have a multi-function trackpad, so figuring out input and control schemes that felt natural to each platform was tricky, but we feel really good about where we've landed with controls and locomotion for both Skyrim and Fallout VR.

UploadVR: What are some of the ways that you think VR adds to the game? For example, in my last demo deflecting arrows with my shield felt really, really satisfying. How has VR redefined how you enjoy Skyrim?

Andrew Scharf: It's a huge perspective shift that completely changes how you approach playing the game. Part of the fun of making combat feel natural in VR is that you now have some tricks up your sleeve that you didn't have before. You can fire the bow as fast as you're able to nock and release, lean around corners to peek at targets, shield bash with your left hand while swinging a weapon with your right, and my favorite is being able to attack two targets at the same time with weapons or spells equipped in each hand.

You can also precisely control where you pick up and drop objects, and in general interact with the world a little more the way you'd expect, so if you're like me, you're probably going to use this skill to obsessively organize your house.

UploadVR: Were there ever considerations to add voice recognition for talking with NPCs? Selecting floating words as dialog choices breaks the immersion a little bit.

Andrew Scharf: We thought about a lot of different options for new features and interfaces during development. In the end we chose to prioritize making the game feel great in VR. At this time, voice recognition for dialogue is not included in the game.

UploadVR: Was smooth locomotion with PS Move something that was always planned for the game, or was it added after feedback from fans at E3?

Andrew Scharf: There were a bunch of options we were considering from the very beginning of development. We wanted to ensure that people who were susceptible to VR motion sickness could still experience the world of Skyrim comfortably, so we focused on new systems we would have to add (like our teleportation movement scheme) to help alleviate any tolerance issues first.

For smooth locomotion, there are a good number of us here who spend a lot of time playing VR games and seeing what works well and what doesn't, but ultimately it came down to figuring out the best approach for us. There were unique challenges with Skyrim that we had to iterate on: having both main and offhand weapons, the design of the PlayStation Move controllers, long-term play comfort, and, ultimately, making sure you can still play Skyrim in whichever playstyle you prefer.

UploadVR: Mods won’t be in the game at launch, but what about the future? Do you want to bring other Elder Scrolls games into VR? And what about Fallout 4 VR on PSVR?

Andrew Scharf: We’ve definitely learned a lot, but as far as what future features or titles we will or won’t bring to VR, that remains to be seen. For now our focus is on launching and supporting the VR versions of Skyrim and Fallout 4.

Our goal with all our VR titles is to bring them to as many platforms as possible. When and if we have more information to share, we will let everyone know.

In a short "Making Of" video, Bethesda revealed more details about Skyrim VR's development and some of the updates it made to bring the game to life once again.

We'll have more details on Skyrim VR very soon, including a full review and a livestream tomorrow. You can also read more about Bethesda's approach to VR in this interview with the company's VP of Marketing, Pete Hines. Skyrim VR releases for PSVR this Friday, November 17th. For more impressions, you can read about our latest hands-on demo and watch actual gameplay footage. Let us know what you think, and any questions you might have, in the comments below!
