Jean-Philippe Encausse

Shared posts

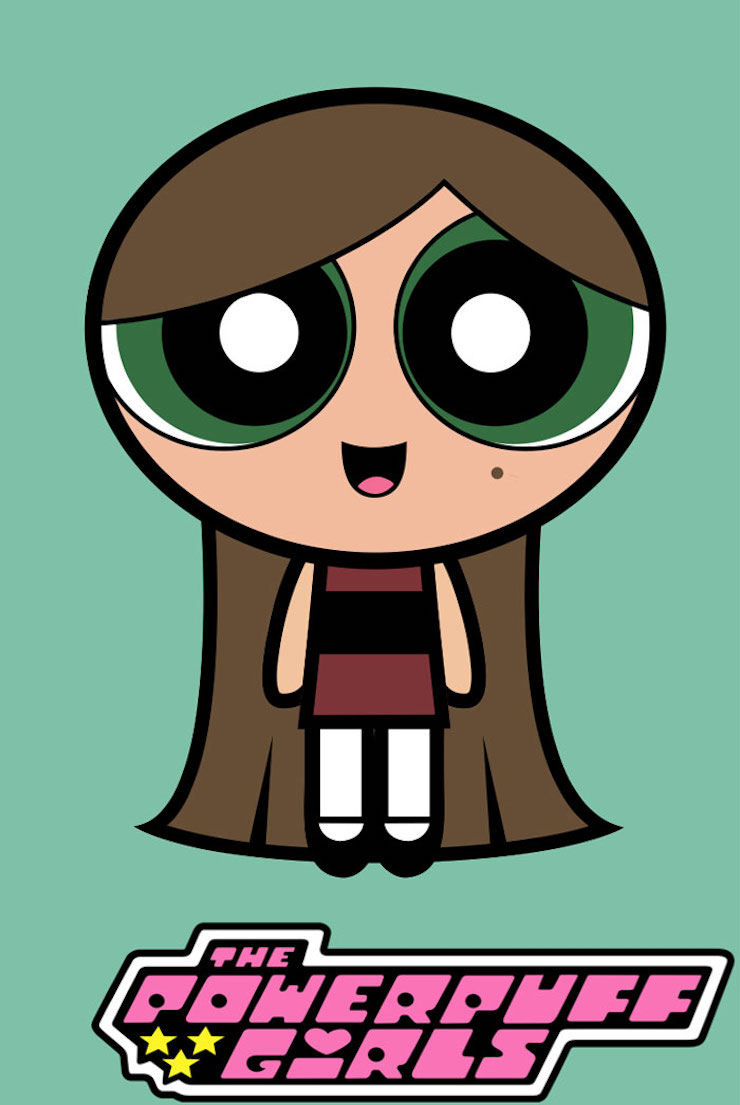

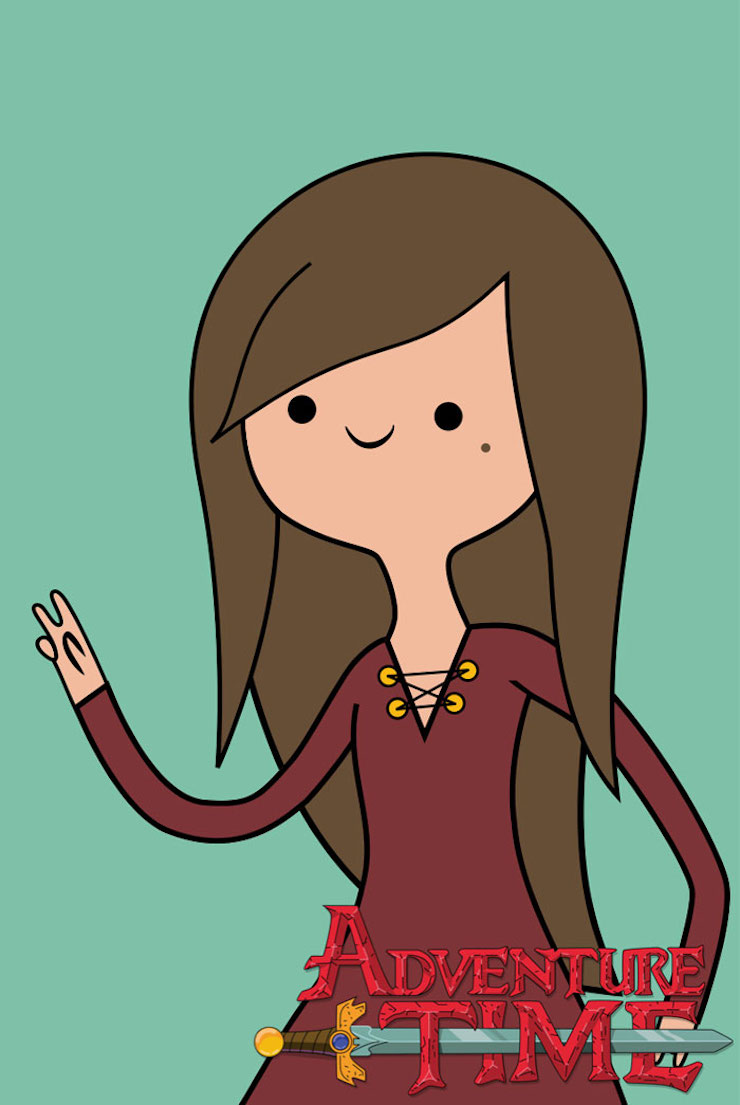

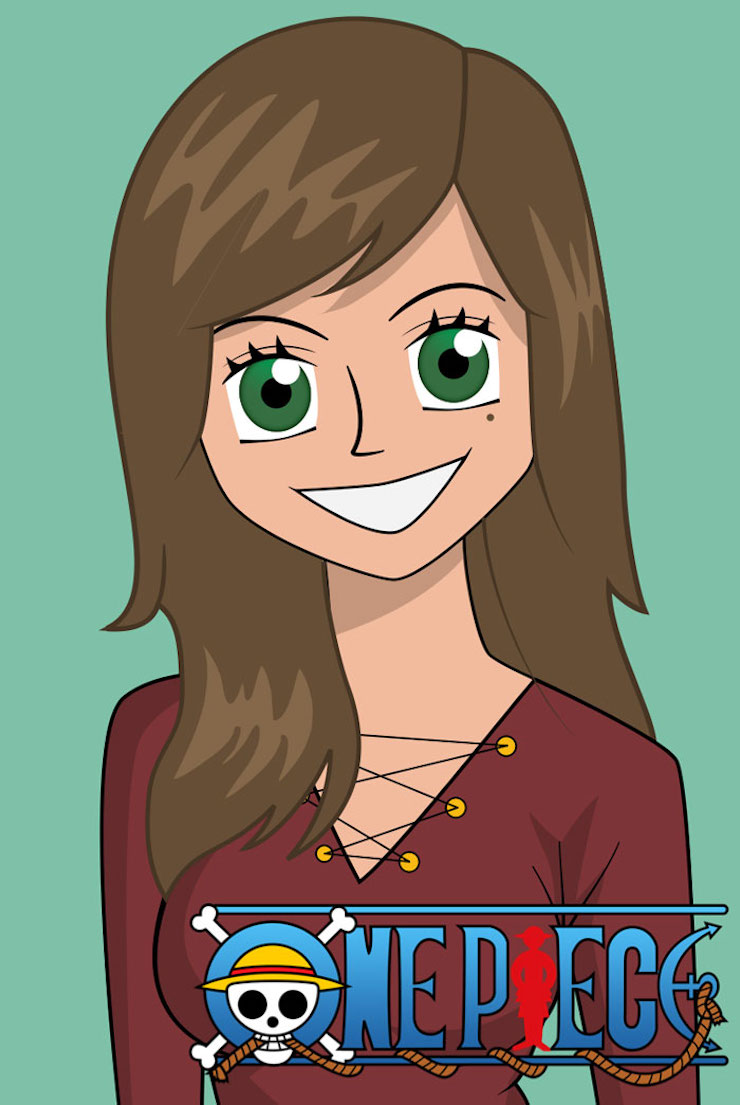

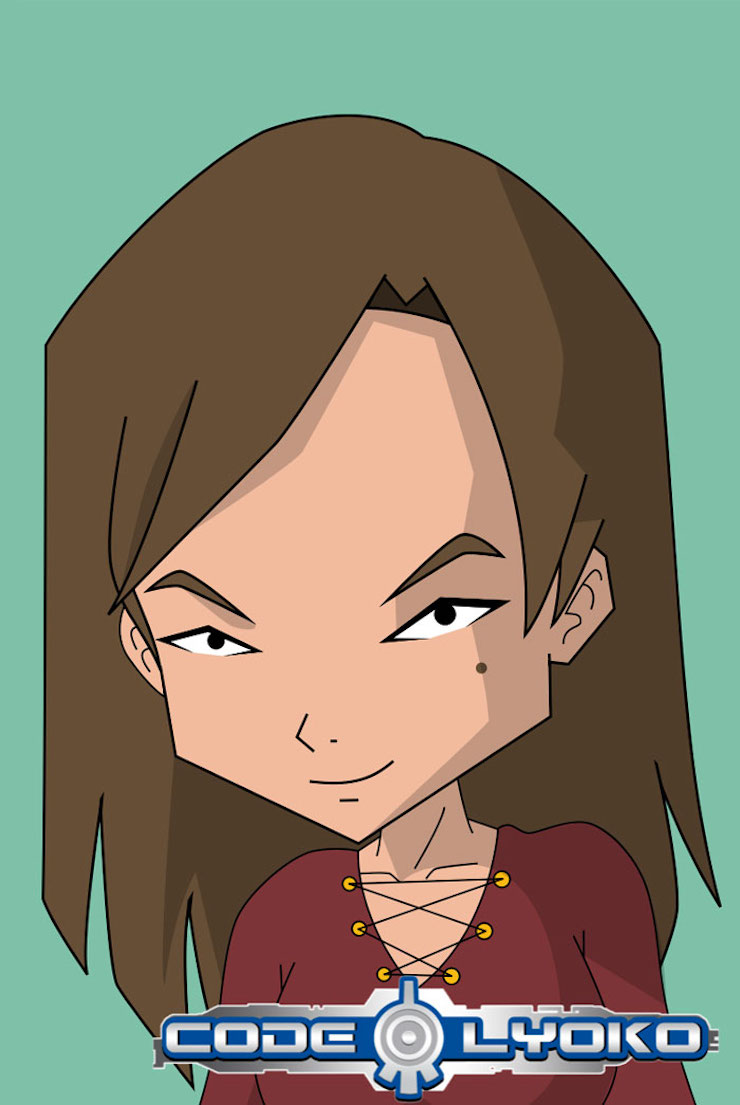

She draws her self-portrait in 50 different cartoon styles

Illustrator Sam Skinner had fun reimagining her own self-portrait in the styles of 50 famous cartoons and comics. The gimmick is well known by now… but it's still just as entertaining and effective!

Think back to your childhood and there's bound to be one or more cartoons that left their mark on you. For Sam Skinner, the artist we're featuring today, there are a good fifty of them. At just 24, this young creative has mastered a range of artistic styles. She has become an expert at reinterpreting other artists' styles… and you'll quickly see why.

Sam Skinner had fun reimagining her own self-portrait in the styles of 50 different cartoons. The Simpsons, Futurama, Adventure Time, Totally Spies, American Dad… Nothing is spared, and we have to admit she does it rather well. “I decided to take on the challenge because I saw so many people doing it online that I wanted to do it too,” explains Sam Skinner. The artist says she took on the project to improve her drawing skills.

Credits: Sam Skinner

Her ultimate goal is to learn from the greats, find her own artistic style, and create her own comic. While you wait for that project to come to life, head over to her Instagram account to learn more about the artist and her work. In the same vein, you can also check out the project by illustrator Kells O’Hickey, who reimagined his couple photos in 10 different cartoon styles.

Les Super Nanas

Crédits : Sam Skinner

Adventure Time

Crédits : Sam Skinner

Tim Burton

Crédits : Sam Skinner

Sailor Moon

Crédits : Sam Skinner

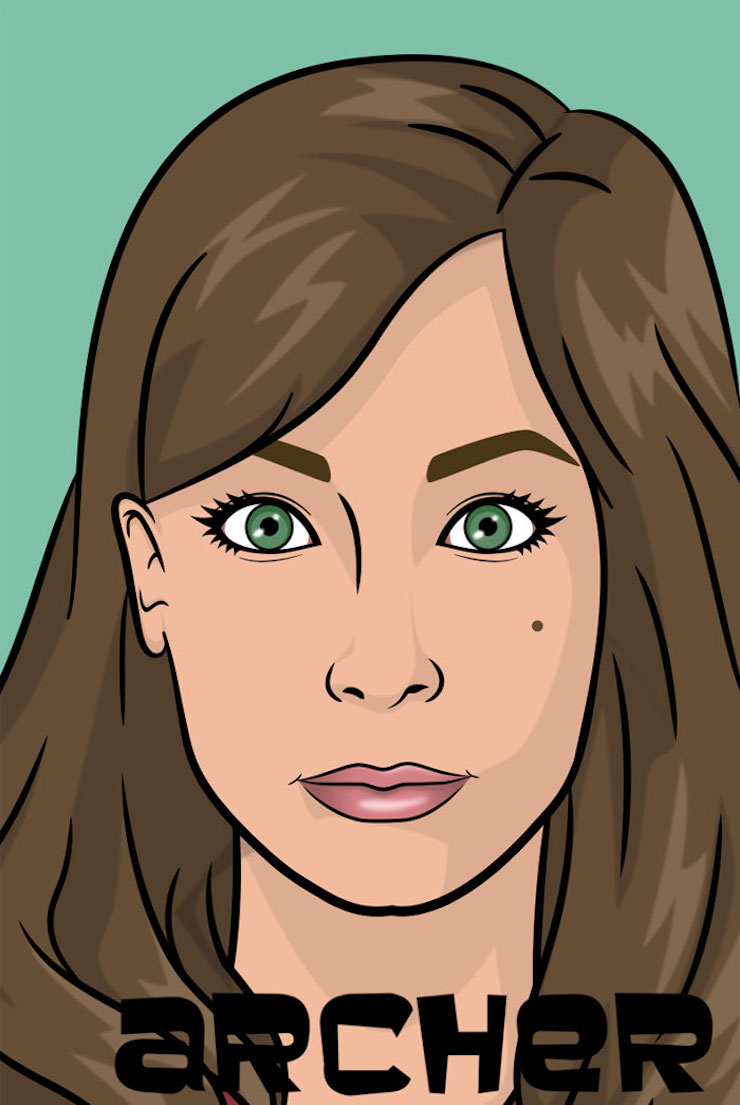

Archer

Crédits : Sam Skinner

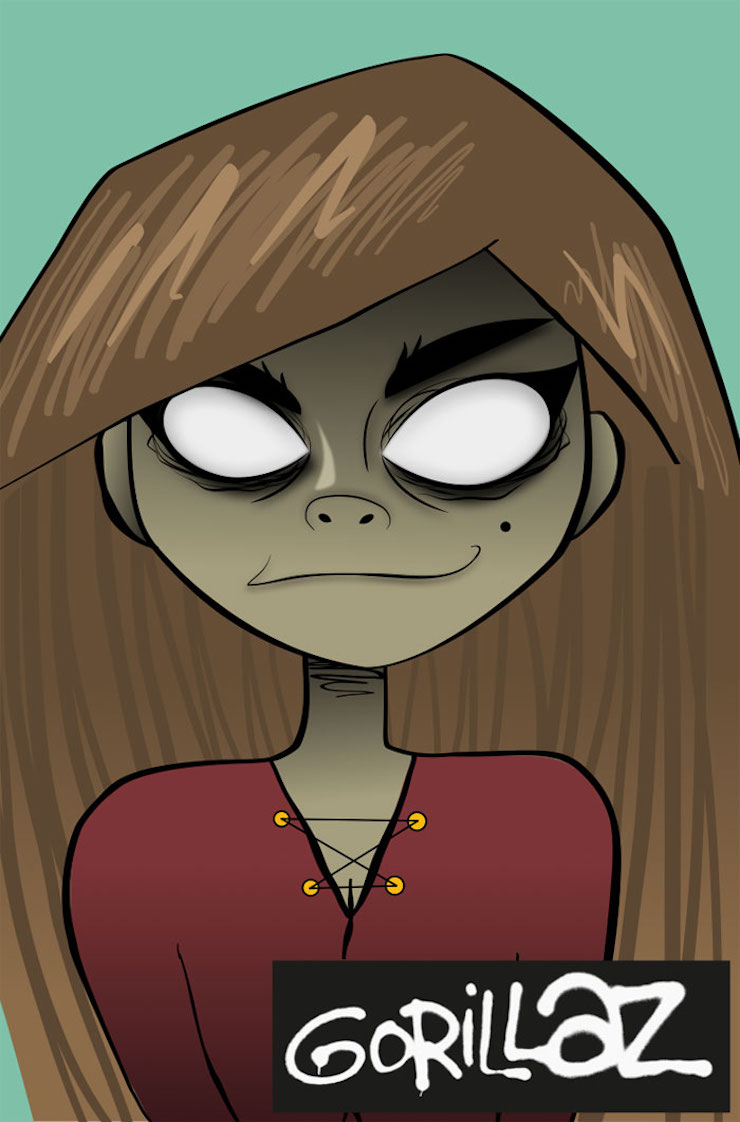

Gorillaz

Crédits : Sam Skinner

Disney

Crédits : Sam Skinner

The Simpson

Crédits : Sam Skinner

Bob l’Éponge

Crédits : Sam Skinner

Le Laboratoire de Dexter

Crédits : Sam Skinner

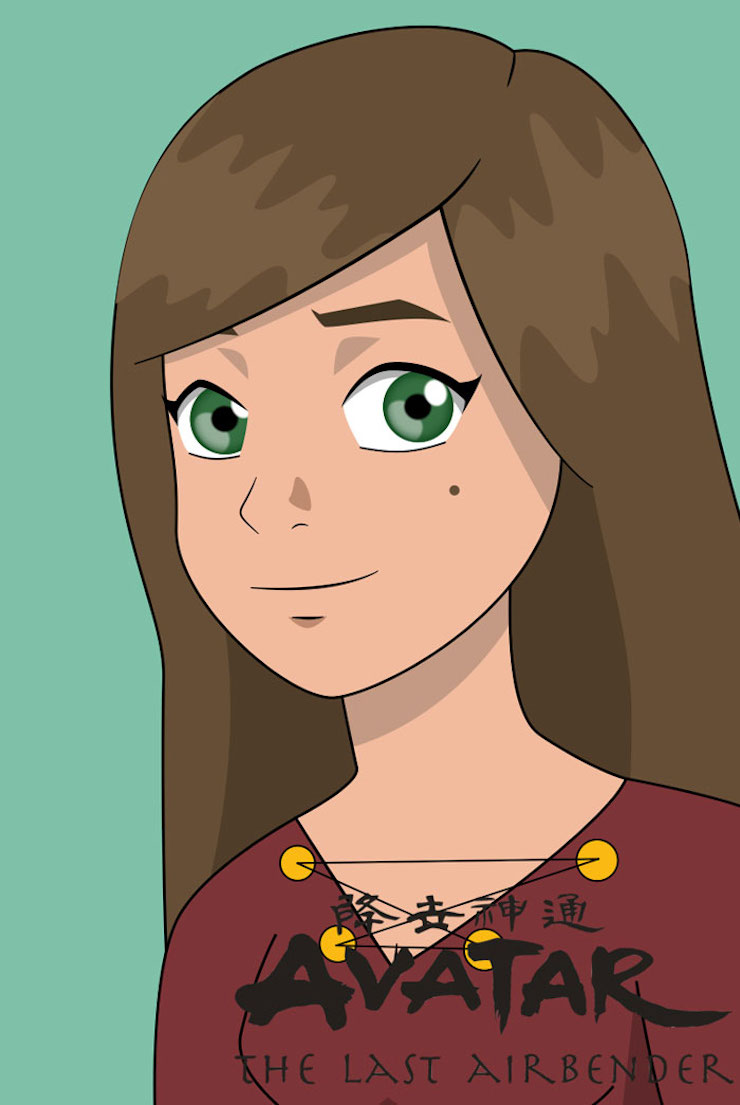

Avatar

Crédits : Sam Skinner

Futurama

Crédits : Sam Skinner

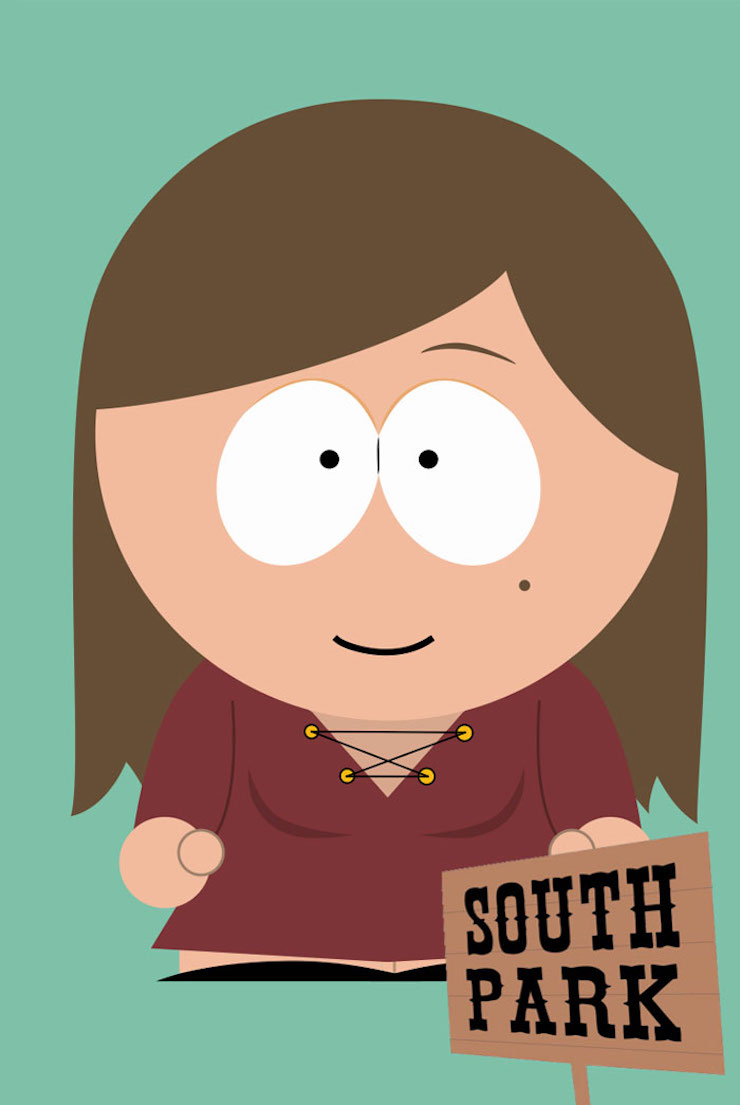

South Park

Crédits : Sam Skinner

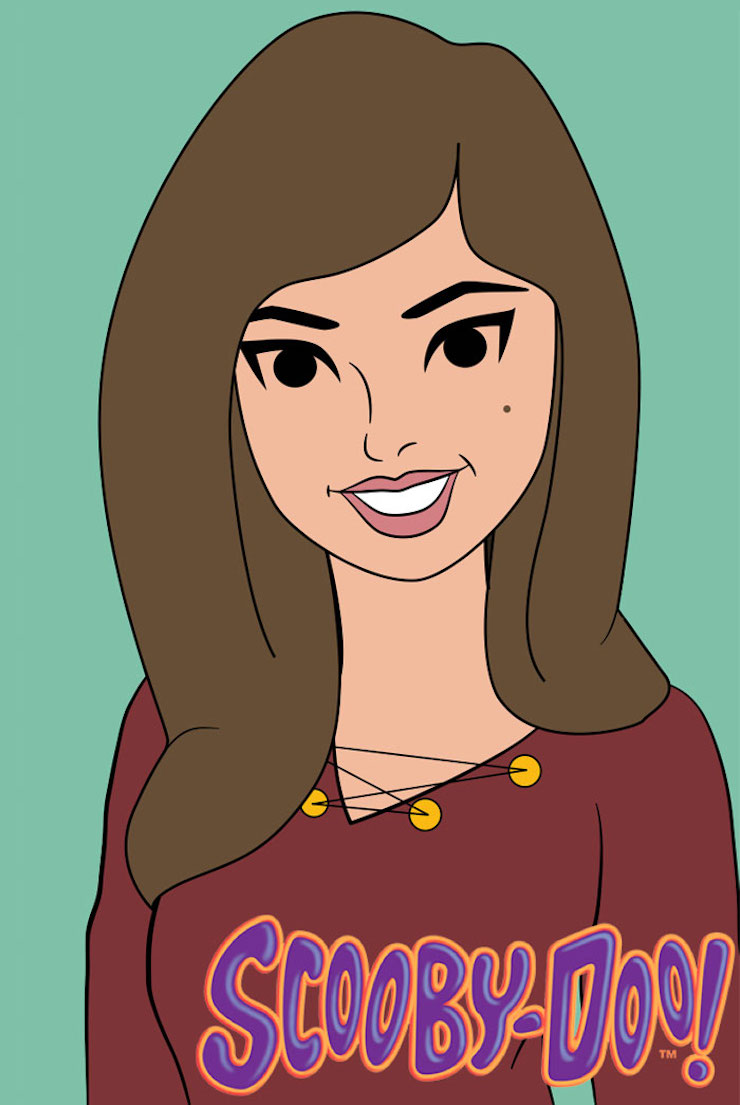

Scooby-Doo

Crédits : Sam Skinner

Danny Fantôme

Crédits : Sam Skinner

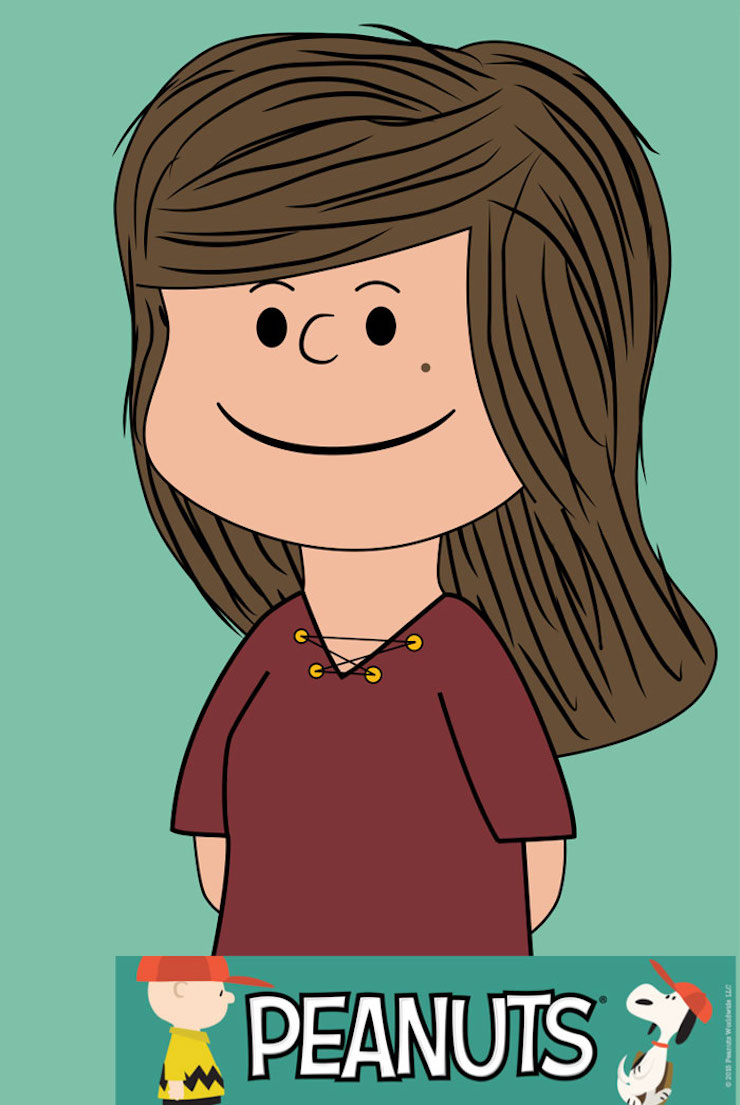

Peanuts

Crédits : Sam Skinner

Souvenirs de Gravity Falls

Crédits : Sam Skinner

Mes Parrains Sont Magiques

Crédits : Sam Skinner

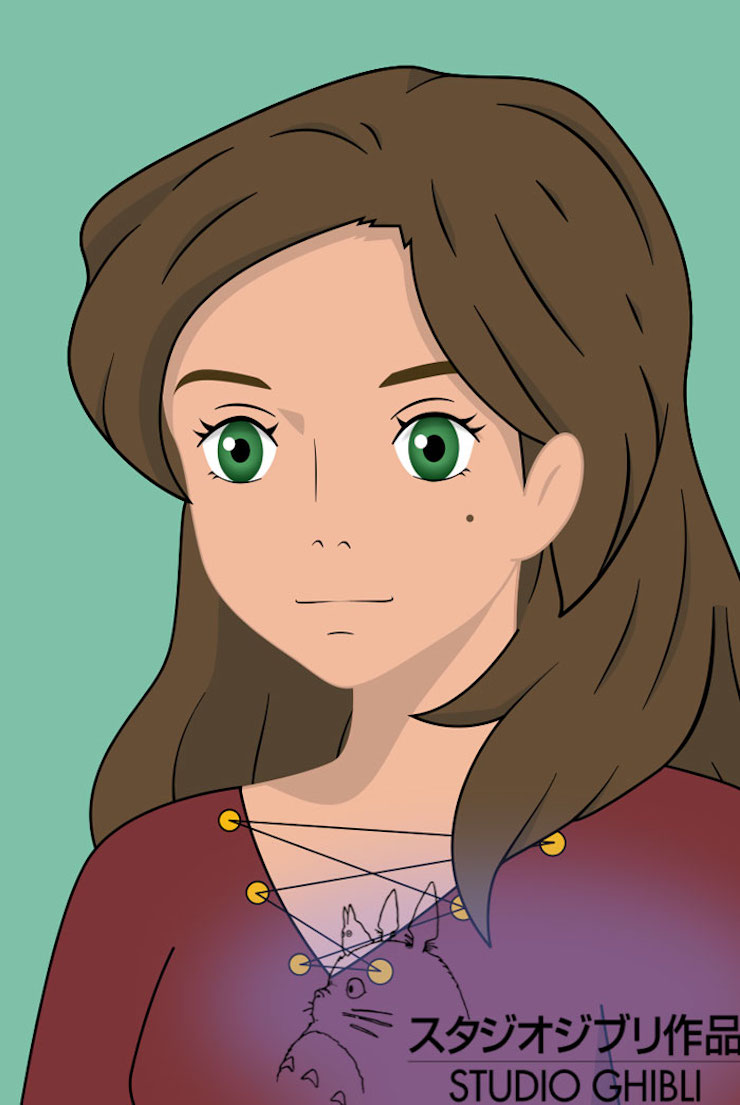

Studio Ghibli

Crédits : Sam Skinner

Rick et Morty

Crédits : Sam Skinner

Family Guy

Crédits : Sam Skinner

Bob’s Burgers

Crédits : Sam Skinner

Minecraft

Crédits : Sam Skinner

Super Mario Bros

Crédits : Sam Skinner

Phinéas et Ferb

Crédits : Sam Skinner

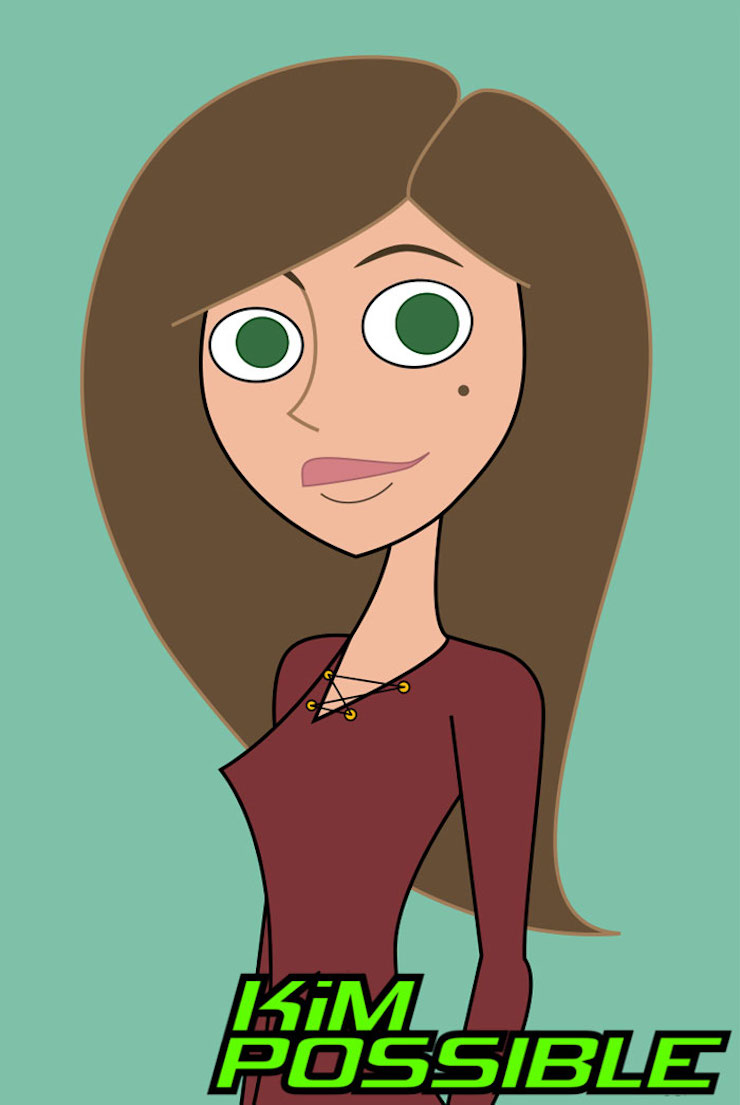

Kim Possible

Crédits : Sam Skinner

Happy Tree Friends

Crédits : Sam Skinner

Teen Titans Go

Crédits : Sam Skinner

Totally Spies

Crédits : Sam Skinner

Winx Club

Crédits : Sam Skinner

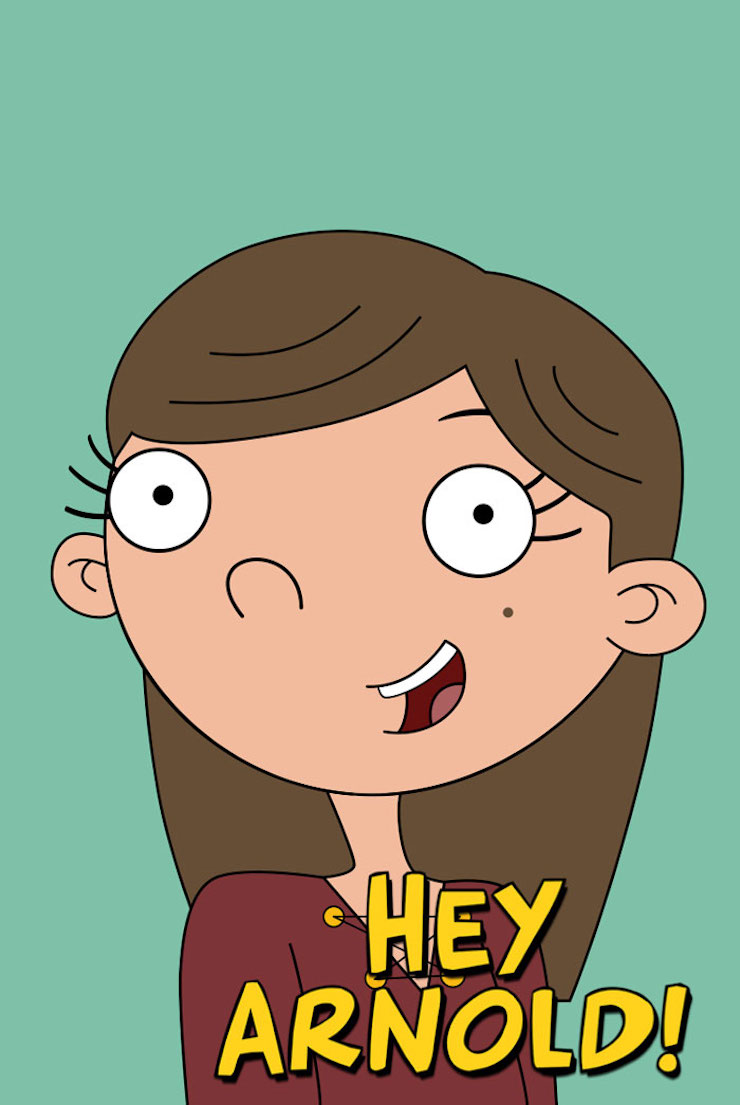

Hey Arnold

Crédits : Sam Skinner

Monster High

Crédits : Sam Skinner

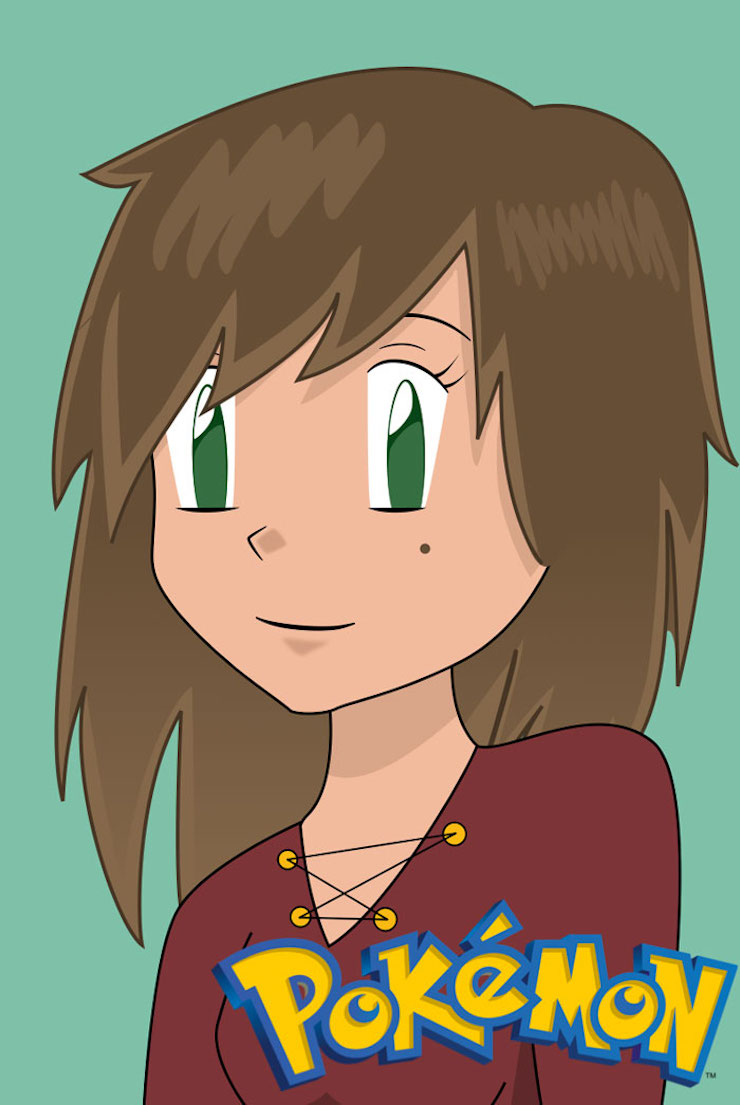

Pokémon

Crédits : Sam Skinner

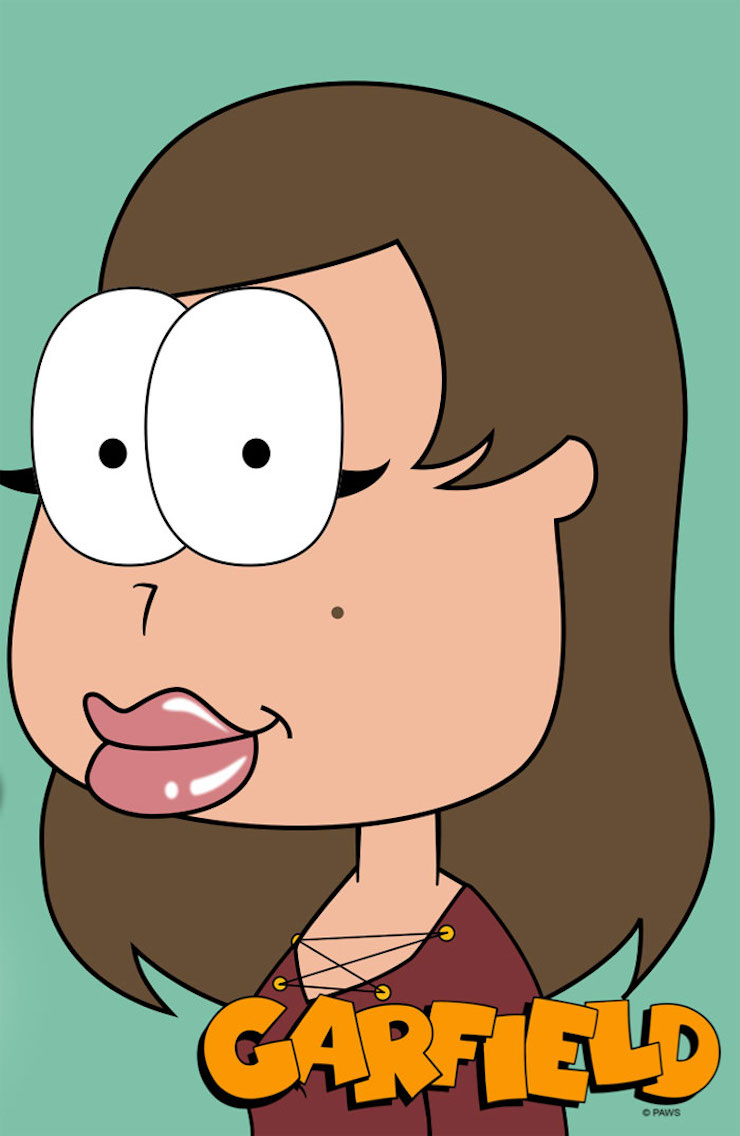

Garfield

Crédits : Sam Skinner

Sonic

Crédits : Sam Skinner

American Dad

Crédits : Sam Skinner

Justice League

Crédits : Sam Skinner

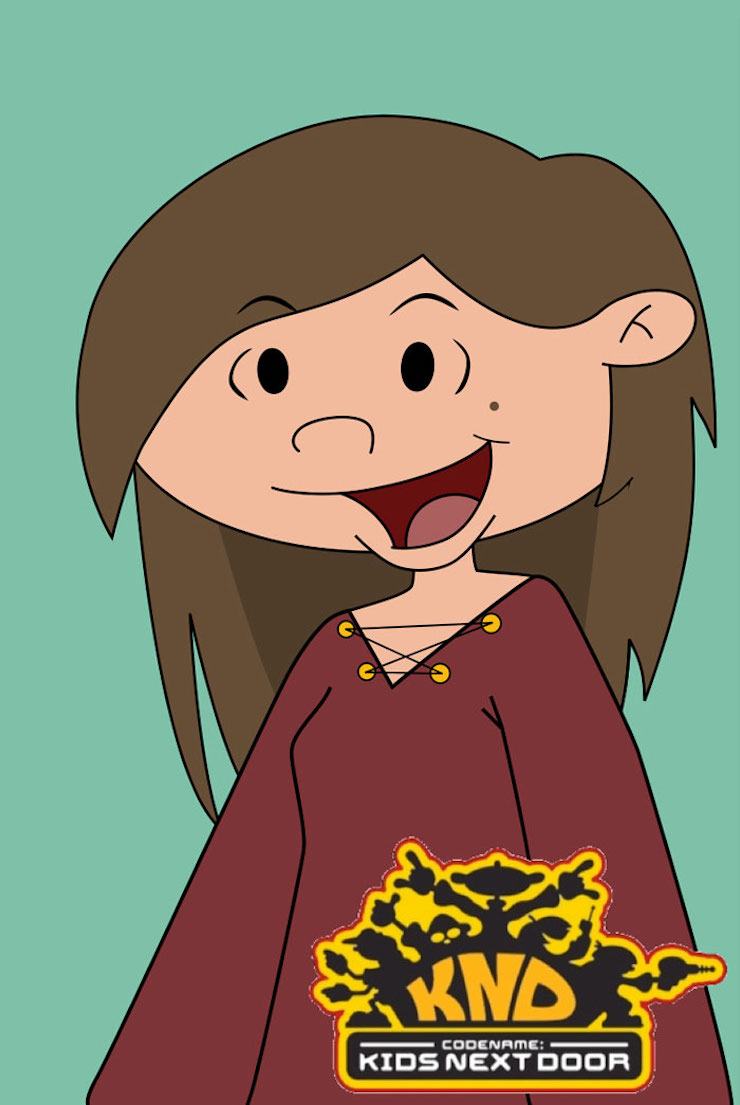

Nom de Code : Kids Next Door

Crédits : Sam Skinner

L’Île des Défis Extrêmes

Crédits : Sam Skinner

Naruto

Crédits : Sam Skinner

Yu-Gi-Oh!

Crédits : Sam Skinner

Jenny Robot

Crédits : Sam Skinner

6teen

Crédits : Sam Skinner

Samurai Jack

Crédits : Sam Skinner

Invader Zim

Crédits : Sam Skinner

Johnny Test

Crédits : Sam Skinner

One Piece

Crédits : Sam Skinner

Code Lyoko

Crédits : Sam Skinner

Juniper Lee

Crédits : Sam Skinner

Imaginé par : Sam Skinner

Source : boredpanda.com

Cet article Elle réalise son autoportrait dans 50 styles de dessins animés différents provient du blog Creapills, le média référence des idées créatives et de l'innovation marketing.

Hands-on with the RED Hydrogen One, a wildly ambitious smartphone

Tiny Sideways Tetris on a Business Card

Everyone recognizes Tetris, even when it’s tiny Tetris played sideways on a business card. [Michael Teeuw] designed these PCBs and they sport small OLED screens to display contact info. The Tetris game is actually a hidden easter egg; a long press on one of the buttons starts it up.

It turns out that getting a playable Tetris onto the ATtiny85 microcontroller was a challenge. Drawing lines and shapes is easy with resources like TinyOLED or Adafruit’s SSD1306 library, but drawing real-time graphics on the 128×32 OLED that way requires a full screen buffer of 128 × 32 / 8 = 512 bytes, which is all of the ATtiny85’s RAM.

To solve this problem, [Michael] avoids the need for a screen buffer by calculating the data to be written to the OLED on the fly. In addition, because the smallest possible element is a 4×4 pixel square, far less memory is needed to track the screen contents. As a result, the chunk of memory normally set aside for a screen buffer is never needed. [Michael] also detailed the PCB design and board assembly phases for those of you interested in the process of putting together the cards using a combination of hot air reflow and hand soldering.
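The write-up doesn't include code for this part, so what follows is only a minimal sketch of the idea, written in Python for readability rather than as ATtiny85 firmware. It assumes an SSD1306-style controller in page mode, where each byte sent covers 8 vertical pixels of one column with bit 0 at the top, and a hypothetical playfield of 32 × 8 cells (4×4 pixels each) stored as one bit per cell: 32 bytes instead of a 512-byte frame buffer. The names `make_grid`, `set_cell`, `page_byte` and `render` are illustrative, not [Michael]'s.

```python
CELL = 4                                    # each block is 4x4 pixels
WIDTH, HEIGHT = 128, 32                     # OLED resolution in pixels
COLS, ROWS = WIDTH // CELL, HEIGHT // CELL  # 32 x 8 cell grid
PAGES = HEIGHT // 8                         # SSD1306-style pages of 8 pixel rows


def make_grid():
    """One byte per cell column; bit r set means the cell in row r is filled (32 bytes)."""
    return bytearray(COLS)


def set_cell(grid, col, row, filled=True):
    if not (0 <= col < COLS and 0 <= row < ROWS):
        raise IndexError("cell outside the playfield")
    if filled:
        grid[col] |= 1 << row
    else:
        grid[col] &= ~(1 << row) & 0xFF


def cell_filled(grid, col, row):
    return (grid[col] >> row) & 1


def page_byte(grid, page, x):
    """Compute the display byte for pixel column x of one page, on the fly.

    A page spans 8 pixel rows, i.e. two 4-pixel cell rows. Bit 0 is the top
    pixel, so the upper cell maps to the low nibble and the lower cell to the
    high nibble.
    """
    col = x // CELL
    value = 0
    if cell_filled(grid, col, 2 * page):
        value |= 0x0F
    if cell_filled(grid, col, 2 * page + 1):
        value |= 0xF0
    return value


def render(grid, write_byte):
    """Stream every byte to the display without ever holding a frame buffer."""
    for page in range(PAGES):
        for x in range(WIDTH):
            write_byte(page_byte(grid, page, x))
```

Each display byte depends on just two cells, so `render` can stream all 512 bytes to the screen while the only game state kept in RAM is the 32-byte grid. The real game's sideways orientation would only change how cells map to columns and rows.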

PCB business cards showcase all kinds of cleverness. The Magic 8-Ball Business Card is refreshingly concise, and the project that became the Arduboy had milled cutouts to better fit components, keeping everything super slim.

Microsoft acquires conversational AI startup Semantic Machines to help bots sound more lifelike

Microsoft announced today that it has acquired Semantic Machines, a Berkeley-based startup that wants to solve one of the biggest challenges in conversational AI: making chatbots sound more human and less like, well, bots.

In a blog post, Microsoft AI & Research chief technology officer David Ku wrote that “with the acquisition of Semantic Machines, we will establish a conversational AI center of excellence in Berkeley to push forward the boundaries of what is possible in language interfaces.”

According to Crunchbase, Semantic Machines was founded in 2014 and raised about $20.9 million in funding from investors including General Catalyst and Bain Capital Ventures.

In a 2016 profile, co-founder and chief scientist Dan Klein told TechCrunch that “today’s dialog technology is mostly orthogonal. You want a conversational system to be contextual so when you interpret a sentence things don’t stand in isolation.” By focusing on memory, Semantic Machines claims its AI can produce conversations that not only answer or predict questions more accurately, but also flow naturally, something that Siri, Google Assistant, Alexa, Microsoft’s own Cortana and other virtual assistants still struggle to accomplish.

Instead of building its own consumer products, Semantic Machines focused on enterprise customers. This means it will fit in well with Microsoft’s conversational AI-based products. These include Microsoft Cognitive Services and Azure Bot Service, which the company says are used by one million and 300,000 developers, respectively, and its virtual assistants Cortana and XiaoIce.

What Is Electro Swing?

Electro Swing explained in 2 minutes...

No one knows how Google Duplex will work with eavesdropping laws

In Google’s demonstration of its new AI assistant Duplex this week, the voice assistant calls a hair salon to book an appointment and carries on a human-seeming conversation with the receptionist, who seems unaware that she is speaking to an AI. Robots don’t literally have ears, so in order to “hear” and analyze the audio coming from the other end, the conversation is being recorded. But about a dozen states, including California, require everyone on the phone call to consent before a recording can be made.

It’s not clear how these eavesdropping laws affect Google Duplex. In fact, it’s so unclear that we can’t get a straight answer out of Google.

A Google spokesperson told The Verge during I/O, where Duplex was...

We’re DOOMED: Boston Dynamics’ Atlas Robot Can Now Run Outside and Jump Autonomously! [Video]

We’re doomed. DOOMED. Watch:

Atlas is the latest in a line of advanced humanoid robots we are developing. Atlas’ control system coordinates motions of the arms, torso and legs to achieve whole-body mobile manipulation, greatly expanding its reach and workspace. Atlas’ ability to balance while performing tasks allows it to work in a large volume while occupying only a small footprint. The Atlas hardware takes advantage of 3D printing to save weight and space, resulting in a remarkably compact robot with a high strength-to-weight ratio and a dramatically large workspace. Stereo vision, range sensing and other sensors give Atlas the ability to manipulate objects in its environment and to travel on rough terrain. Atlas keeps its balance when jostled or pushed and can get up if it tips over.

I now want to see the Atlas robot running after SpotMini, Boston Dynamics’ dog robot.

Oh, and speaking of SpotMini, it can now “navigate” autonomously:

The post We’re DOOMED: Boston Dynamics’ Atlas Robot Can Now Run Outside and Jump Autonomously! [Video] appeared first on Geeks are Sexy Technology News.

Introducing ML Kit

Posted by Brahim Elbouchikhi, Product Manager

In today's fast-moving world, people have come to expect mobile apps to be intelligent - adapting to users' activity or delighting them with surprising smarts. As a result, we think machine learning will become an essential tool in mobile development. That's why on Tuesday at Google I/O, we introduced ML Kit in beta: a new SDK that brings Google's machine learning expertise to mobile developers in a powerful, yet easy-to-use package on Firebase. We couldn't be more excited!

Machine learning for all skill levels

Getting started with machine learning can be difficult for many developers. Typically, new ML developers spend countless hours learning the intricacies of implementing low-level models, using frameworks, and more. Even for the seasoned expert, adapting and optimizing models to run on mobile devices can be a huge undertaking. Beyond the machine learning complexities, sourcing training data can be an expensive and time-consuming process, especially when considering a global audience.

With ML Kit, you can use machine learning to build compelling features, on Android and iOS, regardless of your machine learning expertise. More details below!

Production-ready for common use cases

If you're a beginner who just wants to get the ball rolling, ML Kit gives you five ready-to-use ("base") APIs that address common mobile use cases:

- Text recognition

- Face detection

- Barcode scanning

- Image labeling

- Landmark recognition

With these base APIs, you simply pass in data to ML Kit and get back an intuitive response. For example, Lose It!, one of our early users, used ML Kit to build several features in the latest version of their calorie tracker app. Using our text recognition API and a custom-built model, their app can quickly capture nutrition information from product labels to input a food's content from an image.

ML Kit gives you both on-device and Cloud APIs, all in a common and simple interface, allowing you to choose the ones that fit your requirements best. The on-device APIs process data quickly and will work even when there's no network connection, while the cloud-based APIs leverage the power of Google Cloud Platform's machine learning technology to give a higher level of accuracy.

See these APIs in action on your Firebase console:

Heads up: We're planning to release two more APIs in the coming months. First is a smart reply API allowing you to support contextual messaging replies in your app, and the second is a high density face contour addition to the face detection API. Sign up here to give them a try!

Deploy custom models

If you're seasoned in machine learning and you don't find a base API that covers your use case, ML Kit lets you deploy your own TensorFlow Lite models. You simply upload them via the Firebase console, and we'll take care of hosting and serving them to your app's users. This way you can keep your models out of your APK/bundles, which reduces your app install size. Also, because ML Kit serves your model dynamically, you can always update your model without having to re-publish your apps.

But there is more. As apps have grown to do more, their size has increased, harming app store install rates, and with the potential to cost users more in data overages. Machine learning can further exacerbate this trend since models can reach tens of megabytes in size. So we decided to invest in model compression. Specifically, we are experimenting with a feature that allows you to upload a full TensorFlow model, along with training data, and receive in return a compressed TensorFlow Lite model. The technology behind this is evolving rapidly and so we are looking for a few developers to try it and give us feedback. If you are interested, please sign up here.
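The post doesn't describe how the compression works. One widely used technique for shrinking TensorFlow Lite models is post-training quantization, which stores 32-bit float weights as 8-bit integers plus a scale and a zero point, roughly a 4× reduction in weight storage. Here is a minimal sketch of per-tensor affine 8-bit quantization in plain Python; it illustrates that general technique under simplifying assumptions (one scale per tensor, min/max calibration) and is not the ML Kit compression service.

```python
def quantize(weights, num_bits=8):
    """Affine-quantize a list of floats to unsigned ints.

    Returns (q, scale, zero_point) such that w ~= (q - zero_point) * scale.
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    # The range is widened to include 0 so that 0.0 is exactly representable.
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid dividing by zero for all-zero tensors
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map the stored integers back to approximate float weights."""
    return [(v - zero_point) * scale for v in q]
```

Each reconstructed weight lands within one quantization step (`scale`) of the original, and 0.0 round-trips exactly. Production tools typically add refinements such as per-channel scales, which this sketch omits.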

Better together with other Firebase products

Since ML Kit is available through Firebase, it's easy for you to take advantage of the broader Firebase platform. For example, Remote Config and A/B testing let you experiment with multiple custom models. You can dynamically switch values in your app, making it a great fit to swap the custom models you want your users to use on the fly. You can even create population segments and experiment with several models in parallel.
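Remote Config and A/B Testing handle user assignment for you. The sketch below only illustrates the underlying idea of deterministic bucketing, so the same user keeps getting the same custom model across sessions. The model names, experiment name and 80/20 split are hypothetical, and none of this is the Firebase API.

```python
import hashlib

# Hypothetical rollout: (model name, percentage of users); shares must sum to 100.
VARIANTS = [("model_a", 80), ("model_b", 20)]


def bucket(user_id, experiment, buckets=100):
    """Map a user to a stable bucket in [0, buckets) by hashing experiment + user ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_model(user_id, experiment="custom-model-test", variants=VARIANTS):
    """Return the model variant this user should load for the given experiment."""
    b = bucket(user_id, experiment)
    cumulative = 0
    for name, share in variants:
        cumulative += share
        if b < cumulative:
            return name
    raise ValueError("variant shares must sum to 100")
```

Hashing the experiment name together with the user ID means a user's bucket in one experiment doesn't predict their bucket in another.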

Other examples include:

- storing your image labels in Cloud Firestore

- measuring processing latency with Performance Monitoring

- understanding the impact on user engagement with Google Analytics

- and more

Get started!

We can't wait to see what you'll build with ML Kit. We hope you'll love the product like many of our early customers:

Get started with the ML Kit beta by visiting your Firebase console today. If you have any thoughts or feedback, feel free to let us know - we're always listening!

Microsoft’s Snip Insights puts A.I. technology into a screenshot-taking tool

A team of Microsoft interns have thought up a new way to put A.I. technology to work – in a screenshot snipping tool. Microsoft today is launching their project, Snip Insights, a Windows desktop app that lets you retrieve intelligent insights – or even turn a scan of a textbook or report into an editable document – when you take a screenshot on your PC.

The team’s manager challenged the interns to think up a way to integrate A.I. into a widely used tool, one used by millions.

They decided to try a screenshotting tool, like the Windows Snipping Tool or Snip, a previous project from Microsoft’s internal incubator, Microsoft Garage. The team went with the latter, because it would be easier to release as an independent app.

Their new tool leverages Cloud AI services in order to do more with screenshots – like convert images to translated text, automatically detect and tag image content, and more.

For example, you could screenshot a photo of a great pair of shoes you saw on a friend’s Facebook page, and the tool could search the web to help you find where to buy them. (This part of its functionality is similar to what’s already offered today by Pinterest).

The tool can also take a screenshot of a scanned document and turn it into editable text.

And it can identify famous people, places or landmarks in the images you capture with a screenshot.

Although it’s a relatively narrow use case for A.I., the Snip Insights tool is an interesting example of how A.I. technology can be integrated into everyday productivity tools – and the potential that lies ahead as A.I. becomes a part of even simple pieces of software.

The tool is being released as a Microsoft Garage project, but it’s open-sourced.

The Snip Insights GitHub repository will be maintained by the Cloud AI team going forward.

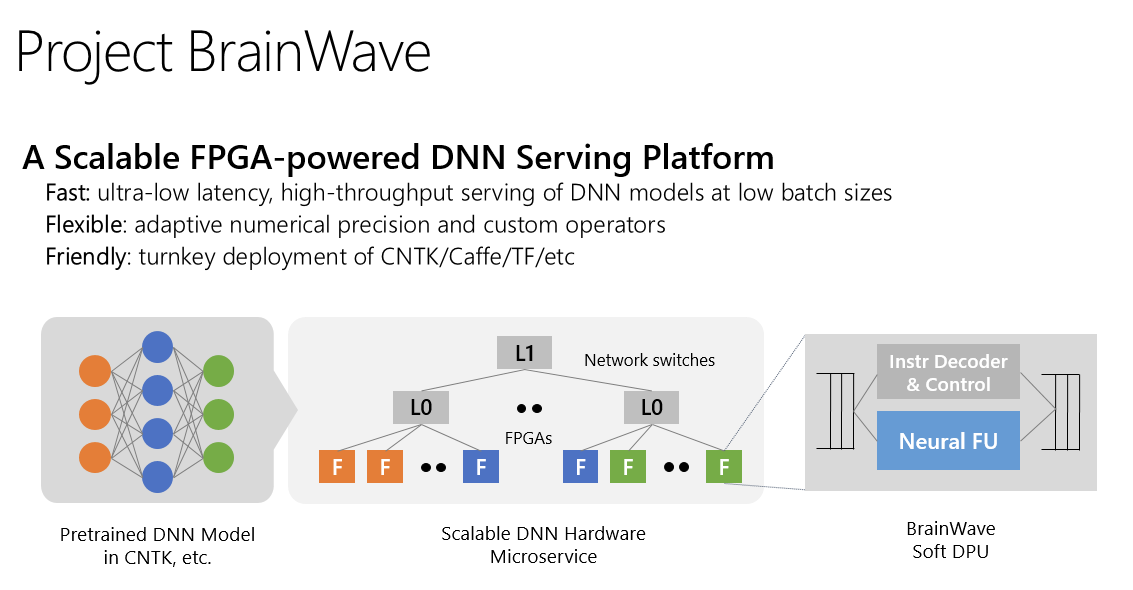

Microsoft launches Project Brainwave, its deep learning acceleration platform

Microsoft today announced at its Build conference the preview launch of Project Brainwave, its platform for running deep learning models in its Azure cloud and on the edge in real time.

While some of Microsoft’s competitors, including Google, are betting on custom chips, Microsoft continues to bet on FPGAs to accelerate its models, and Brainwave is no exception. Microsoft argues that FPGAs give it more flexibility than designing custom chips and that the performance it achieves on standard Intel Stratix FPGAs is at least comparable to that of custom chips.

Last August, the company first detailed some aspects of Brainwave, which consists of three distinct layers: a high-performance distributed architecture; a hardware deep neural network engine that has been synthesized onto the FPGAs; and a compiler and runtime for deploying the pre-trained models.

Microsoft is attaching the FPGAs right to its overall data center network, which allows them to become something akin to hardware microservices. The advantage here is high throughput and a large latency reduction because this architecture allows Microsoft to bypass the CPU of a traditional server to talk directly to the FPGAs.

When Microsoft first announced Brainwave, the software stack supported both the Microsoft Cognitive Toolkit and Google’s TensorFlow frameworks.

Brainwave is now in preview on Azure and Microsoft also promises to bring support for it to Azure Stack and the Azure Data Box appliance.

Microsoft Kinect lives on as a new sensor package for Azure

Microsoft’s Kinect motion-sensing camera for its Xbox consoles was walking dead for the longest time. Last October, it finally passed away peacefully, or so we thought. At its Build developer conference today, Microsoft announced that it is bringing back the Kinect brand and its standout time-of-flight camera tech, but not for a game console. Instead, the company announced Project Kinect for Azure, a new sensor package that combines the Kinect camera with an onboard computer in a small unit that developers can integrate into their own projects.
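For readers unfamiliar with time-of-flight sensing, the core arithmetic is short. Depth comes from how long emitted light takes to bounce back, d = c·t/2, halved because the light travels out and back. Continuous-wave ToF sensors instead measure the phase shift φ of amplitude-modulated light at frequency f, giving d = c·φ/(4π·f), which wraps around at c/(2f). The sketch below just evaluates those formulas; the function names and the 100 MHz example frequency are illustrative assumptions, not Kinect specifications.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def depth_from_round_trip(t_seconds):
    """Pulsed ToF: the light goes to the object and back, so halve the path length."""
    return C * t_seconds / 2


def depth_from_phase(phase_rad, mod_freq_hz):
    """Continuous-wave ToF: the phase shift of the modulated signal encodes the delay."""
    return C * phase_rad / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Beyond this distance the phase wraps past 2*pi and depth becomes ambiguous."""
    return C / (2 * mod_freq_hz)
```

A 20 ns round trip works out to about 3 m, and at a 100 MHz modulation frequency the phase wraps roughly every 1.5 m, which is one reason continuous-wave sensors often combine several modulation frequencies.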

The company says that Project Kinect for Azure can handle fully articulated hand tracking and that it can be used for high-fidelity spatial mapping. Based on these capabilities, it’s easy to imagine the use of Project Kinect for many robotics and surveillance applications.

“Project Kinect for Azure unlocks countless new opportunities to take advantage of Machine Learning, Cognitive Services and IoT Edge,” writes Alex Kipman, Microsoft technical fellow and father of the HoloLens. “We envision that Project Kinect for Azure will result in new AI solutions from Microsoft and our ecosystem of partners, built on the growing range of sensors integrating with Azure AI services.”

The camera will have a 1024×1024 resolution, the company says, and it’ll also use this same camera in the next generation of its HoloLens helmet.

“Project Kinect for Azure brings together this leading hardware technology with Azure AI to empower developers with new scenarios for working with ambient intelligence,” Microsoft explains in today’s announcement. And indeed, it looks like the main idea here is to combine the company’s camera tech with its cloud-based machine learning tools: the pre-built and customized models from the Microsoft Cognitive Services suite and the IoT Edge platform for edge computing workloads.

Make Your Very Own Infinity Gauntlet at Home [Video]

Here’s a tutorial on how to make your very own light-up foam Infinity Gauntlet! Cosplayer and artist Hendo made hers using a pair of Darth Vader gloves from Amazon. Check it out!

The post Make Your Very Own Infinity Gauntlet at Home [Video] appeared first on Geeks are Sexy Technology News.

We Now Have A Working Nuclear Reactor for Other Planets — But No Plan For Its Waste

If the power goes out in your home, you can usually settle in with some candles, a flashlight, and a good book. You wait it out, because the lights will probably be back on soon.

But if you’re on Mars, your electricity isn’t just keeping the lights on — it’s literally keeping you alive. In that case, a power outage becomes a much bigger problem.

NASA scientists think they’ve found a way to avoid that possibility altogether: creating a nuclear reactor. This nuclear reactor, known as Kilopower, is about the size of a refrigerator and can be safely launched into space alongside any celestial voyagers; astronauts can start it up either while they’re still in space, or after landing on an extraterrestrial body.

The Kilopower prototype just aced a series of major tests in Nevada that simulated an actual mission, including failures that could have compromised its safety (but didn’t).

This nuclear reactor would be a “game changer” for explorers on Mars, Lee Mason, NASA Space Technology Mission Directorate (STMD) principal technologist for Power and Energy Storage, said in a November 2017 NASA press release. Just one device could provide enough power to support an extraterrestrial outpost for 10 years, and do so without some of the issues inherent to solar power, namely: being interrupted at night or blocked for weeks or months during Mars’ epic dust storms.

“It solves those issues and provides a constant supply of power regardless of where you are located on Mars,” Mason said in the press release. He also noted that a nuclear-powered habitat could mean that humans could land in a greater number of landing sites on Mars, including high latitudes where there’s not much light but potentially lots of ice for astronauts to use.

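Nuclear power is not an unusual feature in space; the Voyager 1 and 2 spacecraft, now whizzing through deep space after departing our solar system, have been running on nuclear energy since they launched in the 1970s. The same is true for the Mars rover Curiosity since it landed on the Red Planet in 2012. Those craft, however, use radioisotope thermoelectric generators, which turn the heat of natural radioactive decay into electricity, rather than fission reactors like Kilopower.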

But we’d need a lot more reactors to colonize planets. And that could pose a problem of what to do with the waste.

According to Popular Mechanics, Kilopower reactors create electricity through active nuclear fission, in which atoms are split apart to release energy. The fuel is solid uranium-235, housed in a reactor core about the size of a roll of paper towels. Eventually, that uranium-235 is going to be “spent,” just like fuel rods in Earth-based reactors, and could put nearby humans at risk.

When that happens, the uranium core will have to be stored somewhere safe; spent reactor fuel is still dangerously radioactive, and releases lots of heat. On Earth, most spent fuel rods are stored in pools of water that keep the rods cool, preventing them from catching fire, and block the radiation they give off. But on another planet, we’d need any available water to, you know, keep humans alive.

So we’d need another way to cool spent radioactive fuel. It’s possible that the spent fuel could be stored in shielded casks in lava tubes or designated parts of the surface, since the Moon and Mars are so cold, though that introduces the risk that someone might accidentally bump into them.

Right now, all we can do is speculate; as far as we know, NASA doesn’t have any publicly available plan for what to do with spent nuclear fuel on extraterrestrial missions. That could be because the Kilopower prototype has only just proved itself feasible. But not knowing what to do with the waste from it seems like an unusual oversight, since NASA is planning to go back to the Moon, and then to Mars, by the early 2030s.

And in case you were wondering, no, you can’t just shoot the nuclear waste off into deep space or into the sun; NASA studied that way back in the 1970s and determined it was a pretty terrible idea. Back to the drawing board.

The post We Now Have A Working Nuclear Reactor for Other Planets — But No Plan For Its Waste appeared first on Futurism.

A Telepresence System That’s Starting To Feel Like A Holodeck

[Dr. Roel Vertegaal] has led a team of collaborators from [Queen’s University] to build TeleHuman 2 — a telepresence setup that aims to project your actual-size likeness in 3D.

Developed primarily for business videoconferencing, the setup requires a bit of space on both ends of the call. A ring of stereoscopic z-cameras captures the subject from all angles, and a corresponding ring of projectors on the other end displays the result. Those projectors are arranged in a similar halo above a human-sized, retro-reflective cylindrical screen that can be walked around, so the image can be viewed from any angle, in real time, without a VR headset or glasses!

One of the greatest advantages of this method is that the ring of projectors makes your likeness viewable to a group, since the image isn’t locked to the perspective of one individual. Conceivably, this also allows one person to be in many places at once, so to speak. The team argues that because body language is an integral part of communication, this telepresence method will ultimately bring long-distance interactions closer to the feeling of being face-to-face.

It would be awesome to see this technology develop further, where the cameras and projectors could allow the user an area of free movement — for, say, more sweeping gestures or pacing about — on both ends of the call to really sell the illusion of the person being in the same physical space.

OK, that might be a little far-fetched for now. We haven’t advanced to holodeck tech quite yet, but we’re getting there.

[Thanks for the tip, Qes!]

Belgium defines video game loot boxes as illegal gambling

The Belgian Gaming Commission has ruled after a preliminary investigation that loot boxes in several games — Overwatch, FIFA 18, and Counter Strike: Global Offensive — are considered games of chance that are subject to Belgian gaming law, via Ars Technica.

According to a statement from Belgian Minister of Justice Koen Geens, the games currently constitute criminal violations of Belgium’s gaming legislation, and developers could face prison sentences of up to five years and fines of up to 800,000 euros if the loot boxes aren’t removed. Per Geens’ statement, the commission used four parameters to determine whether or not loot boxes in a game were considered a game of chance: if there was a game element that allowed bets...

NVIDIA AI Reconstructs Photos with Super Realistic Results [Video]

Researchers from NVIDIA, led by Guilin Liu, introduced a state-of-the-art deep learning method that can reconstruct a corrupted image, one that has holes or is missing pixels. The method can also be used to edit images by removing content and filling in the resulting holes. Learn more in their research paper, “Image Inpainting for Irregular Holes Using Partial Convolutions.”
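The key operation named in that paper's title is the partial convolution: each output is computed only from the valid (non-hole) pixels under the kernel, rescaled by the ratio of window size to valid-pixel count, and the mask is then updated so that any output with at least one valid input counts as valid. Below is a minimal single-channel sketch of that rule in pure Python, assuming no padding and stride 1; it illustrates the published idea and is not NVIDIA's implementation, which works on multi-channel tensors.

```python
def partial_conv2d(image, mask, kernel, bias=0.0):
    """Single-channel partial convolution (no padding, stride 1).

    image, mask and kernel are lists of lists; mask holds 1 for known pixels
    and 0 for holes. Returns (output, updated_mask).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    window_size = kh * kw
    out = [[0.0] * out_w for _ in range(out_h)]
    new_mask = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            acc, valid = 0.0, 0
            for a in range(kh):
                for b in range(kw):
                    m = mask[i + a][j + b]
                    acc += kernel[a][b] * image[i + a][j + b] * m  # hole pixels contribute nothing
                    valid += m
            if valid > 0:
                # Rescale so the response doesn't shrink when part of the window is a hole.
                out[i][j] = acc * window_size / valid + bias
                new_mask[i][j] = 1  # this output location is now considered known
    return out, new_mask
```

With a 3×3 averaging kernel over a window whose centre pixel is a hole, the hole's value is ignored and the output equals the mean of the eight valid pixels. Stacking such layers lets the valid region grow until the hole is filled.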

I hope this is going to be available to the public one day!

[Nvidia]

The post NVIDIA AI Reconstructs Photos with Super Realistic Results [Video] appeared first on Geeks are Sexy Technology News.

These portable projector deals let you enjoy big-screen video without a TV

Looking for a big-screen experience without the bulk and expense of a large HDTV? If you're throwing a party, hosting a sleepover for the kids, or want to have a backyard movie night, we’ve picked out the best portable projector deals.

The post These portable projector deals let you enjoy big-screen video without a TV appeared first on Digital Trends.

Twilio adds support for LINE

The developer-centric communications platform Twilio today announced that it has added support for LINE to Twilio Channels. With this, Twilio developers now have the ability to reach users on this service, which has 168 million monthly active users, most of whom live in Japan, Thailand, Taiwan and Indonesia. LINE support in Twilio Channels is currently in beta but open to all developers who want to give it a try.

With this, Twilio Channels, which allows for sending and receiving messages, now supports many of the most popular messaging platforms, ranging from Facebook Messenger and Slack to WeChat, Kik and the new RCS text messaging standard. Missing from this list are the likes of WhatsApp and Snapchat, though neither has an API that Twilio could easily integrate.

Unsurprisingly, the LINE support also extends to Twilio Studio, the company’s drag-and-drop app builder, and Flex, Twilio’s recently announced contact center solution.

“The most successful organizations realize that delivering a seamless, elegant experience for customers on their preferred channels is a way to differentiate,” said Patrick Malatack, vice president and general manager of Messaging at Twilio in today’s announcement. “When developers use Twilio to build these experiences – they trust that they will be able to use one API, now and in the future, to support the communication channels their customers want to use. We are thrilled to add support for LINE to the Twilio platform and can’t wait to see what our customers build.”

Everything Is Smart In The Future, Even The Freakin’ Walls

In the future, everything is connected. Even our walls will be watching us binge-watch TV on our couches.

Yes, the internet is already connected to doorbells, toasters, and light bulbs, changing the way we interact with them. Even our fridges are possessed by an AI that can shame us for not picking the free-range, organic eggs.

Now, researchers from Carnegie Mellon University teamed up with researchers from Disney (yep, the Disney of World, Land, and Cinderella) to transform those lame, “dumb” walls into smart walls. They function as a gigantic trackpad, sensing a user and their movements.

In the paper presented at the 2018 Conference on Human Factors in Computing Systems in Montreal, Quebec, the researchers note their goal to give walls the ability to track a user’s touch and gestures through “airborne electromagnetic noise” — more or less (mostly less) the same kind of sensing your smartphone screen does.

Their pitch had me hooked from the start: “Houses, offices, restaurants, schools, museums — walls are everywhere, yet they are inactive.” Totally. All walls do is, you know, hold up structures and separate the noisy goings-on in various rooms to, like, give people privacy and stuff. We’re tired of giving walls a free ride; it’s time walls started holding up their own weight around here.

Rather than using a camera to locate a user and track their movement, as other systems do, this system relies on a grid of “large electrodes” covered in a layer of water-based paint that conducts electricity. Water-based paint is less smelly and looks better than other conductive paints, like nickel-based ones. And all it took to connect the electrodes was copper tape. Cha-ching, goes the checkout lane at Home Depot, for a measly 20 bucks per square meter.

The result: a wall so smart, it could play a game of vertical Twister with you, and also tell if you were cheating. It can even sense if you’re holding a hair dryer really close to it through electromagnetic resonance (why you would hold a hair dryer really close to a wall is a separate question).

But the team does have some actual uses in mind for their invention: users could play video games by using different poses to control them, change the channel on their TV with a wave of their arm, or slap the wall directly to turn off the lights, no need for light switches.

Yes, indeed, we are truly living in the future.

The post Everything Is Smart In The Future, Even The Freakin’ Walls appeared first on Futurism.

USA: 22% of smart assistant users shop by voice

20% of respondents in a Narvar study use these tools to post online comments or ratings about certain products.

Alibaba is bringing its smart assistant to cars from Daimler, Audi and Volvo

Alibaba is jumping behind the wheel after it announced that Daimler, Audi and Volvo will bring its voice assistant to their vehicles.

The assistant, Tmall Genie, is scheduled to go into cars “in the near future.” When it does, Alibaba said, it will let drivers check fuel levels, mileage, battery levels and engine status using voice controls. Beyond diagnostics, it will also control windows, air conditioning and other settings.

Tmall Genie was launched last year when Alibaba unveiled its first smart speaker in the style of Amazon’s Echo products and Google Home, although the AI also connects to third-party hardware. The Chinese firm said it has sold over two million of its smart devices so far, and it is working on linking them with the car-based AI so users can check on their vehicle from home.

This week’s Genie auto launch is one of the first moves from Alibaba’s AI Lab, which was announced last year as part of a $15 billion push into artificial intelligence, machine learning, IoT, quantum computing and other emerging technologies.

Look Out Magic Leap, Vive Pro Is Now An AR Dev Kit

HTC just released its software development kit for the front-facing cameras on the Vive Pro. The new update should help turn the device into the kind of augmented reality developer kit Magic Leap is distributing quietly in small numbers.

Magic Leap is raising billions of dollars for a see-through AR headset that is hard to get access to as a developer, with digital images few can even see without signing a non-disclosure agreement. HTC is very different. The HTC Vive Pro is a high-quality VR headset shipping today in a $1,100 package, and you’ll need to bring your own high-end PC to power it. Unlike Magic Leap, though, Vive Pro is available for purchase immediately. It uses an opaque display with outward-facing cameras that can show you the world outside the headset while collecting information about the environment to merge both realities.

Here’s an example from Project Ghost Studios, which worked on the feature with HTC as an early partner.

This means you have some choice if you’re a developer looking to build software for headsets that mix a digital reality with the real world. You can sign up for the Magic Leap SDK and try to get your hands on hard-to-get hardware from the company while swearing yourself to secrecy. Or you can think about getting a product like the Zed Mini or Vive Pro to more quickly and openly explore your ideas.

With the latest tools HTC just released, creators can use depth and spatial mapping to do things like “Placing virtual objects in the foreground or background” and “Live interactions with virtual objects and simple hand interactions.”
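The article doesn’t show the SDK’s own calls, so as a generic illustration of what “placing virtual objects in the foreground or background” involves, here is a per-pixel depth test, the usual way to let real objects occlude virtual ones. It assumes the cameras yield a depth value for every pixel; the function `composite` and its list-of-lists inputs are hypothetical and not part of HTC’s SDK.

```python
from typing import List, Optional


def composite(
    camera: List[List[str]],
    real_depth: List[List[float]],
    virtual: List[List[Optional[str]]],
    virtual_depth: List[List[float]],
) -> List[List[str]]:
    """Blend a rendered virtual layer over the passthrough camera image.

    Pixels are plain strings to keep the sketch dependency-free; a real
    renderer would use RGB values. Depths are distances from the viewer in
    any consistent unit. A virtual pixel is drawn only if it is closer than
    the real surface measured at that pixel.
    """
    out: List[List[str]] = []
    for y, cam_row in enumerate(camera):
        row: List[str] = []
        for x, cam_px in enumerate(cam_row):
            v = virtual[y][x]
            if v is not None and virtual_depth[y][x] < real_depth[y][x]:
                row.append(v)       # virtual object is in the foreground
            else:
                row.append(cam_px)  # real world is in front, or nothing virtual here
        out.append(row)
    return out


# One row of three pixels: a wall 3 m away, a hand 0.5 m away, then the wall again.
# A virtual cube is rendered 2 m away over the first two pixels.
result = composite(
    camera=[["wall", "hand", "wall"]],
    real_depth=[[3.0, 0.5, 3.0]],
    virtual=[["cube", "cube", None]],
    virtual_depth=[[2.0, 2.0, float("inf")]],
)
print(result)  # [['cube', 'hand', 'wall']]
```

The hand at 0.5 m hides the part of the cube behind it, while the cube hides the wall 3 m away, so the same virtual object ends up in front of one real surface and behind another.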

“We have the option to map the environment (it’s part of the SDK), but since our game is so simple, we found that only using the game space with a flat surface works fine,” wrote Gaspar Ferreiro, president of Project Ghost Studios, about the example provided above.


The tools are available as an early testing release on the Vive developer site.


Ghostbusters External GPU Case Design

External GPU cases have been around for a few years. You take a desktop graphics card, stuff it into one of these cases, then plug it into a laptop for a performance boost in graphics. Ideal for turning a sluggish laptop into a gaming laptop.

One design idea for such a case is the Ghostbusters ghost trap. Not only would it be life-size, but it could hold two graphics cards (though I don’t think external GPU cases can juggle multiple cards just yet). Also, NVIDIA cards have LED logos on the side, so they could emit a ghostly green light, perfect for a ghost trap.

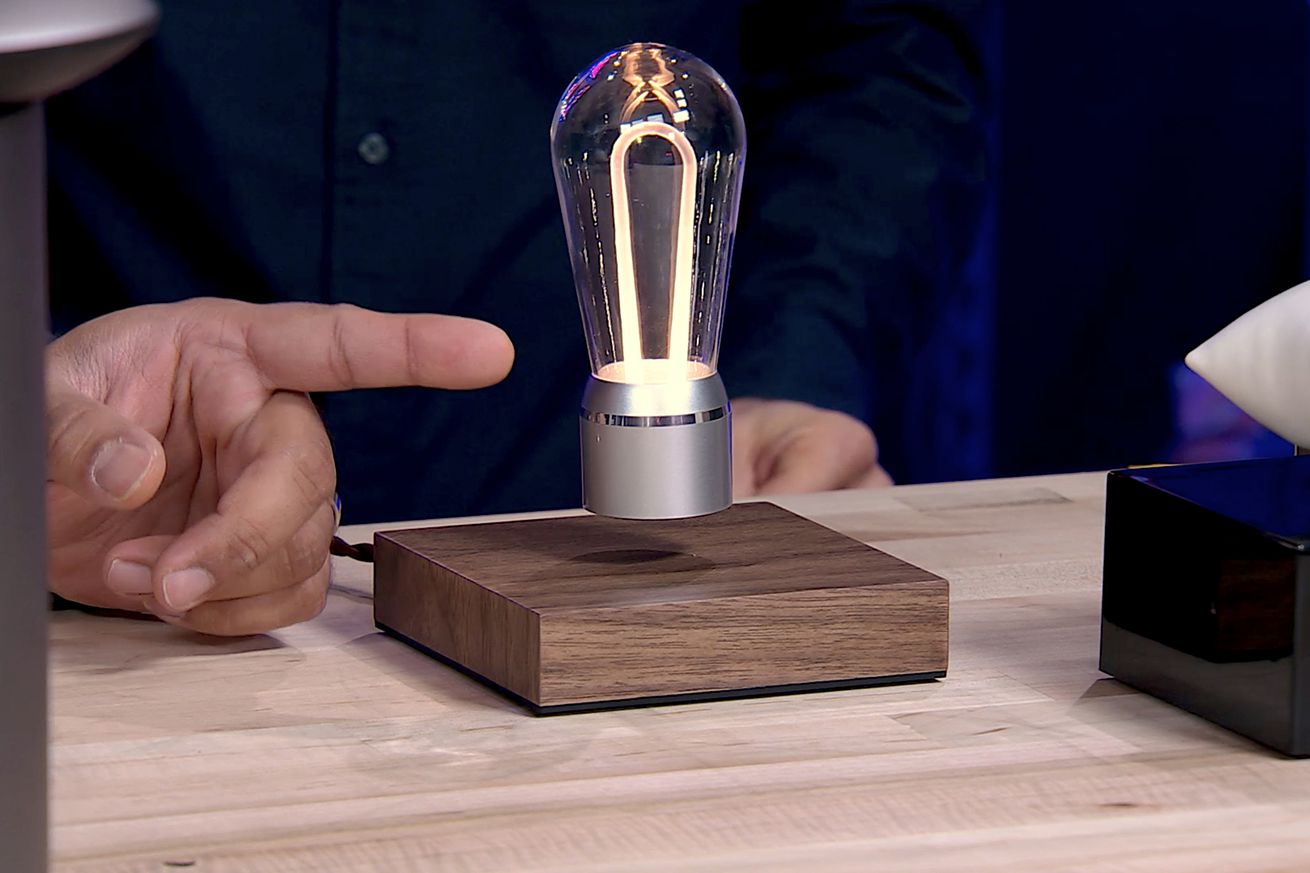

Levitating gadgets will disappoint you

I’m here to issue an important but disappointing warning: that super cool new gadget on Kickstarter that floats? You should not back it. It will, I can say with almost complete certainty, disappoint you.

I’ve been fascinated with hovering gadgets for a couple of years now because they just keep popping back up. Floating clocks, turntables, speakers, incense holders, light bulbs, and a surprising number of plants have successfully made their way through crowdfunding sites. I did some quick math based on the results of a Kickstarter search for levitating products and found that the average successful campaign for a levitating product raises around $140,000. That average is pulled up by a handful of big hits, though: most successful campaigns raise less than $10,000.

Levitating...

For the first time, an artificial intelligence has been authorized to make a medical diagnosis in the United States

What’s the Deal with Transparent Aluminum?

It looks like a tube made of glass but it’s actually aluminum. Well, aluminum with an asterisk beside it — this is not elemental aluminum but rather a material made using it.

We got onto the buzz about “transparent aluminum” thanks to the Tweet the image above came from. It was posted by [Jo Pitesky], a Science Systems Engineer at the Jet Propulsion Laboratory in Pasadena. [Jo] reported that at a recent JPL technology open house she had the chance to handle a tube of material that looks for all the world like a section of glass tubing but was billed as transparent aluminum. [Jo] tweeted about it because it’s an interesting artifact that few people get to play with, and she’s right: this is fascinating!

The material itself is intriguing, and I immediately had practical questions: What is this stuff? What is it good for? How is it made? And is it really aluminum rendered transparent by some science fiction process?

Can Aluminum Be Transparent?

As with many things in life, the answer to that question is, “It depends.” In this case, it depends on how you define aluminum. Or more precisely, it depends on what your expectations are for a material that purports to be aluminum. Regular old aluminum is an abundant metal with all the expected properties of metals — electrically and thermally conductive, ductile, malleable, and lustrous. You can melt it and cast it into useful shapes, beat it flat into a foil to wrap a sandwich, or crush an empty can made from it against your forehead, if you’re so inclined.

If you’re expecting transparent aluminum to have all of those properties, you’ll be disappointed. Although she doesn’t identify it specifically, the material [Jo] got to handle was most likely not a metal at all, but a ceramic called aluminum oxynitride, a compound of aluminum, oxygen, and nitrogen usually abbreviated AlON. Despite the abbreviation, the three elements are not present in equal parts; the commercial material’s composition is typically given as Al23O27N5.

Aluminum oxynitride ceramics have been around since the 1980s, so this is not new stuff by any means. Coincidentally, AlON development was underway at more or less the same time that Star Trek IV: The Voyage Home was being produced. AlON and similar transparent ceramics get their colloquial name from that film’s now-classic scene where Scotty talks into a computer mouse as if it were a microphone while trying to trade the formula for “transparent aluminum” for sheets of plexiglass.

What’s It Good For?

Despite clearly not being a metal — and not a glass either; glasses are amorphous solids, while ceramics are crystalline — AlON and the other transparent ceramics that have been developed since have some amazing properties. AlON, marketed as the uncreatively named ALON by its manufacturer, Surmet Corporation, is produced by sintering. Powdered ingredients are poured into a mold, compacted under tremendous pressure, and cooked at high temperatures for days. The resulting translucent material is ground and polished to transparency before use.

Aside from being optically clear, ALON is also immensely tough. Tests show that a laminated pane of ALON 1.6″ thick can stop a .50 caliber rifle round, something even 3.7″ of traditional “bullet-proof” glass can’t do. ALON also has better optical properties than regular glass in the infrared; where most glasses absorb IR, ALON is essentially transparent to it. That makes ALON a great choice for the windows on heat-seeking missiles and other IR applications.
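To put those two thicknesses side by side in metric units, here is a quick calculation using only the figures quoted above; the helper name `inches_to_mm` is just for illustration.

```python
MM_PER_INCH = 25.4  # exact, by definition of the inch


def inches_to_mm(inches: float) -> float:
    """Convert a thickness in inches to millimeters."""
    return inches * MM_PER_INCH


ALON_IN = 1.6   # ALON pane that stops a .50 caliber round (figure from the text)
GLASS_IN = 3.7  # conventional bullet-proof glass that does not (figure from the text)

print(f"ALON:  {inches_to_mm(ALON_IN):.1f} mm")   # ALON:  40.6 mm
print(f"Glass: {inches_to_mm(GLASS_IN):.1f} mm")  # Glass: 94.0 mm
print(f"ALON is {ALON_IN / GLASS_IN:.0%} of the glass thickness")  # ALON is 43% of the glass thickness
```

In other words, the ALON pane does the job at well under half the thickness of glass that fails the same test.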

On the downside, ALON is expensive — in the armored glass market, it’s about 5 times the price of traditional laminated glass. But it has so many benefits, not least of which is superior scratch resistance, that for some applications it’s the material of choice. Chances are good that increased demand for the material will drive costs down, and it may not be long before Gorilla Glass is replaced by transparent aluminum smartphone screens that might actually damage the pavement when you drop your phone.

So, sorry Scotty — there’s no such thing as transparent aluminum metal. But the stuff we’re calling transparent aluminum is just as fascinating and just as sci-fi as it sounds. Isn’t material science cool? If you have other interesting materials like AlON that we should dig into, let us know about it in the comments below.

[Image source: Screen Rant, Memory Alpha]

Here are the five things I learned installing a smart mirror

I recently received a review unit of the Embrace Smart Mirror. It’s essentially a 24-inch Android tablet mounted behind a roughly 40-inch mirror. It works well when third-party software is installed. Here’s what I learned.

It’s impossible to get a good photo of the smart mirror

I tried a tripod, a selfie stick and every possible angle and I couldn’t get a picture that does this mirror justice. It looks better in person than these photos show. When the light in the bathroom is on, the text on the mirror appears to float on the surface. It looks great. The time is nice and large, and the data below it is accessible when standing a few feet away.

When the room is dark, the Android screen behind the mirror gives itself away because it can’t display true black: it glows gray. This isn’t a big deal. The Android device turns off after a period of inactivity and often wakes when the bathroom light is turned on, so more often than not, people walking into the room are greeted by a standard mirror until the light comes on.

There are a handful of smart mirror apps, but few are worthwhile

This smart mirror didn’t ship with any software outside of Android. That’s a bummer, but not a deal-breaker. There are several smart mirror Android apps in the Play Store, though I only found one I like.

I settled on Mirror Mirror (get it?) because the interface is clean, it uses pleasant fonts and there’s just enough customization, though it would be nice to be able to choose where the data modules appear. The app was last updated in July 2017, so use it at your own risk.

Another similar option is the software developed by Max Braun, a roboticist at Google’s X. His smart mirror was a hit in 2016; he published instructions on how to build it and uploaded the software to GitHub.

Kids love it

I have great kids that grew up around technology. Nothing impresses these jerks, though, and that’s my fault. But they like this smart mirror. They won’t stop touching it, leaving fingerprints all over it. They quickly figured out how to exit the mirror software and download a bunch of games to the device. I’ve walked in on both kids huddled in the dark bathroom playing games and watching YouTube instead of, you know, playing games or watching YouTube on the countless other devices in the house.

That’s the point of the device, though. The company that makes this model advertises it as a way to get YouTube in the bathrooms so a person can apply their makeup while watching beauty YouTubers. It works for that, too. There is just a tiny bit of latency when pressing on the screen through the mirror. This device isn’t as quick to use as a new Android tablet, but because it’s sealed in a way to keep out moisture, it’s safe to go in a steamy bathroom.

Adults will find it frivolous

I have a lot of gadgets in my house, and my friends are used to it. Their reaction to this smart mirror has been much different from any other device, though.

“What the hell is this, Matt,” they’ll say from behind the closed bathroom door. I’ll yell back, “It’s a smart mirror.” They flush the toilet, walk out and give me the biggest eye roll.

I’ve yet to have an adult say anything nice about this mirror.

It is frivolous

A smart mirror is a silly gadget. To some degree, it’s a crowd-pleaser, but in the end, it’s just another gadget to tell you the weather. It collects fingerprints like mad, and the Android screen isn’t bright enough to use it as a regular video viewer or incognito TV.

As for this particular smart mirror, the Embrace Smart Mirror, the hardware is solid but doesn’t include any smart mirror software. The mirror is rather thin and easily hangs on a wall thanks to a VESA mount. There are physical controls hidden along the bottom of the unit, including a switch to manually turn off the camera. It’s IP65-certified, so it can handle a bathroom. A motion detector does a good job turning the device on. If you don’t have kids, it should stay smudge-free.

The Embrace Smart Mirror does not ship with any smart mirror software. The instructions and videos tell users to add widgets to the Android home screen. That doesn’t cut it for me; I expect a product such as this to include at least the necessary software. Right now, after this product is taken out of the box, it’s just an Android tablet behind a mirror, and that’s lame. Thankfully there are a couple of free apps on the Play Store to remedy this problem.

At $1,299, the Embrace Smart Mirror is a hard sell, but is among the cheapest available smart mirrors on the market. Of course, you can always build one yourself — as The Verge points out, it’s rather easy.

Keynote: Laura Dekker, The Machine as Alien Ethnographer

HKG18-500K2 – Keynote: Laura Dekker – The Machine as Alien Ethnographer: Advanced Computation, Open Source Systems and Art

The last decade or so of development in open source hardware, software and data has brought an astonishing richness of resources for artists: Python and C++ libraries for natural language processing, biological simulation, data programming and machine learning, such as TensorFlow, NLTK and openFrameworks, along with open data sources such as Project Gutenberg. Alongside continually expanding functionality, increased accessibility has drastically lowered the barriers to entry and exploration.