My conclusions: Awesome book. Buy it here: Now You See It: Simple Visualization Techniques for Quantitative Analysis.


With the advent of computerized visuals in the late 1960s, statistician John Tukey pointed out that exploring data would be one of the greatest strengths of interactive computers. In Now You See It: Simple Visualization Techniques for Quantitative Analysis, Stephen Few deconstructs this idea and provides a fantastic guide to using modern statistical and visualization tools, emphasizing interactivity, practical visualization, and simplification. Using Tableau and other popular tools extensively, Few’s work is engaging and thorough. I’ll be honest: I really enjoyed this book.


PART 1

I did not entirely know what to think when I started the book, and Few does begin with a different approach than I had expected. He writes,

“…we’ve largely ignored the primary tool that makes information meaningful and useful: the human brain. While concentrating on the technologies, we’ve forgotten the human skills that are required to make sense of the data.”

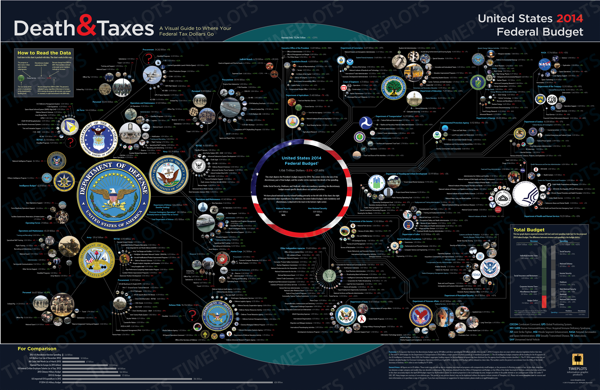

Strong start. In “Building Core Skills for Visual Analysis,” Few goes into detail about the history of data visualization and the way that we perceive that data. The chapter on the history of information visualization was particularly enlightening, and really made me wonder what tools and methods we will be using in 10 years or even 100. Human history is one of visualizing our world, and the methods we use to accomplish that appear to become increasingly comprehensible, even as the amount of data we use increases.

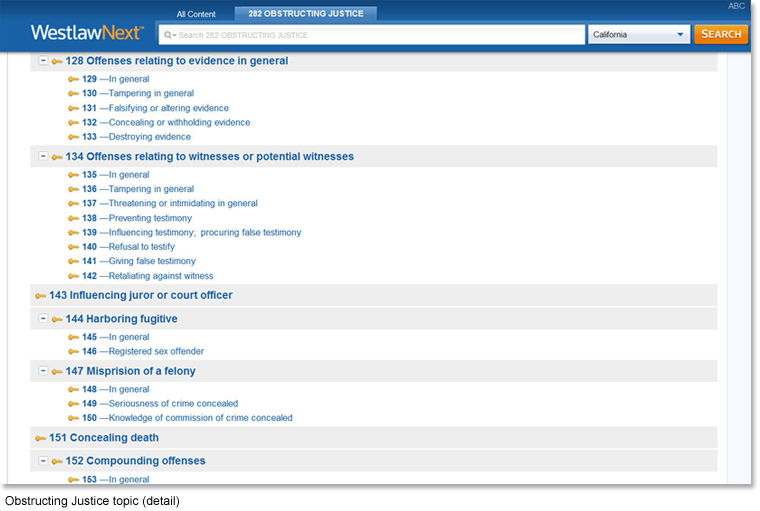

Particularly in Chapter 4, entitled Analytical Interaction and Navigation, I enjoyed how Few looked at the types of software used in analysis. His list of the most important elements of good software really articulates something I have thought before: Excel is somewhat limited. In fact, the chapter would serve as a good guide for those seeking to create their own, novel data analysis tools. While a little out of my area, I found it quite compelling.

PART 2

In the second half of the book, Few looks at specific techniques in data analysis. In all, the second half is extremely image-heavy, which I appreciated. He compares effective and ineffective visualizations, breaking down the various patterns and techniques into

– Time-series Data

– Ranking Relationships

– Deviations

– Data Distribution

– Correlation between Variables

– Patterns in Data with Multiple Variables

In this part, entitled “Honing Skills for Diverse Types of Visual Analysis,” Few explores different ways of showing the data, ranging from complex data analyses to simple Excel charts. Each chapter is thorough and full of detailed visuals. He concludes each chapter with a list of best practices. For example, when comparing percent change in data with different starting points, Few recommends using logarithmic scales.
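To see why a logarithmic scale helps with that last point, here is a small sketch of my own (not from the book). The two series are made-up numbers: both grow 10% per period, but one starts at 100 and the other at 1,000. On a linear axis the larger series appears to climb much faster; on a log axis the steps are the same size, because equal percent changes become equal distances.

```python
import math

# Hypothetical data: both series grow 10% per period from different starting points.
small = [100 * 1.10 ** t for t in range(5)]
large = [1000 * 1.10 ** t for t in range(5)]


def steps(values):
    """Differences between consecutive values (vertical distance on a linear axis)."""
    return [b - a for a, b in zip(values, values[1:])]


def log_steps(values):
    """Differences between consecutive log10 values (vertical distance on a log axis)."""
    return [math.log10(b) - math.log10(a) for a, b in zip(values, values[1:])]


linear_small, linear_large = steps(small), steps(large)
log_small, log_large = log_steps(small), log_steps(large)

# Linear steps differ by roughly a factor of ten; log steps match.
print("linear steps:", linear_small[0], linear_large[0])
print("log steps:   ", log_small[0], log_large[0])
```

In a charting tool, the equivalent is simply switching the value axis to a log scale; the arithmetic above is only meant to show what that switch does to the vertical distances.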

I found these chapters to be the most useful, and I believe they could serve as a reference for nearly any quantitative project. Especially taking into account the best practices sections, I think the second half is an absolute necessity for researchers interested in visualization.

PART 3

In the final part, entitled “Further Thoughts and Hopes,” Few ends with some conclusions on the future of data analysis and visualization. I enjoyed this last section, particularly its examination of the implications of ubiquitous computing and the cloud, although it is certainly not as directly useful to students or researchers.

RECOMMENDATION

While reading Now You See It: Simple Visualization Techniques for Quantitative Analysis, I was frequently reminded of Edward Tufte, only modernized and sometimes a little more directly useful to practitioners. Instead of static graphs, Few emphasizes interactive visualizations, and even though it is a textbook, it reads in a very engaging way. And it grows on you.


I want to return to something I mentioned earlier: the book does make Excel seem limited. Indeed, Few has noted elsewhere that popular business tools do not visualize data in a very productive way. However, although no single piece of software should be expected to do everything, I did find that most of the examples in the book can be roughly replicated in Excel. If you are a die-hard Excel fanatic, I still think there is a great deal of value in the book. This brings me to my last point.

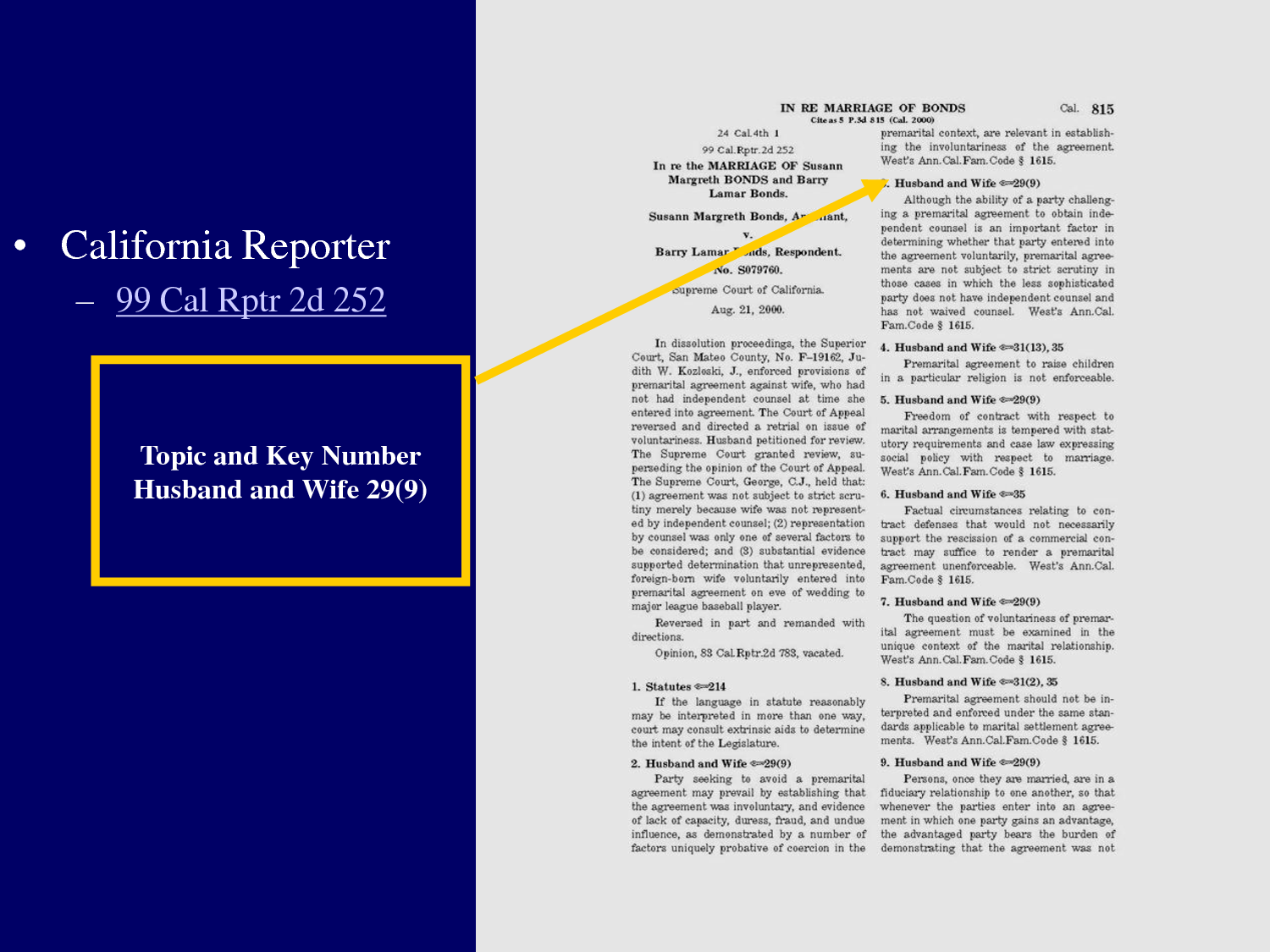

To be frank, when I finished reading I was not exactly sure what to make of it. I have a number of reference books on data analytics and visualization, and I wasn’t sure I had actually learned something meaningful. However, I was soon confronted with a project from a school district with whom I am working, and I realized I kept returning to Few’s book over and over. I was trying to be more visual and more interactive, and the charts in the book are extremely accessible. While Edward Tufte tends to emphasize design tools like Adobe Illustrator, Few uses Tableau and R (which incidentally is free).

Although I have seen many of the charts in the book elsewhere, reading the book was enlightening and truly compelled me to explore new ways of visualizing data. I would highly recommend Now You See It: Simple Visualization Techniques for Quantitative Analysis to any practitioner, student, or even any person simply interested in visualization in general.


Finally, I feel that his conclusions align with my beliefs about the brain as a pattern recognition machine. In the past few days, I have been attending a professional development session by Quantum Learning, which emphasizes using research from neuroscience to guide teaching. And indeed, as both Few and the Quantum Learning team have noted, the brain is our most powerful tool, if used right, and we need to become more adept at making the way we display information ‘brain friendly.’ As Few writes,

Computers can’t make sense of data; only people can.

Buy it here: Now You See It: Simple Visualization Techniques for Quantitative Analysis.


Sources:

Few, Stephen. Now You See It: Simple Visualization Techniques for Quantitative Analysis. Analytic Press, 2009. Print.

by Randy Krum.
