At Build 2016 we announced the first developer preview of the new generation of authentication SDKs for Microsoft identities, the Microsoft Authentication Library (MSAL) for .NET.

Today I am excited to announce the release of production-supported previews of MSAL .NET, MSAL iOS, MSAL Android, and MSAL JavaScript.

In the past year, we made significant progress advancing the features of the v2 protocol endpoint of Azure AD and of Azure AD B2C. The new MSALs take advantage of those capabilities and incorporate the excellent feedback you gave us on the first preview, making it simpler than ever to integrate authentication into your apps.

The libraries we are releasing today are still in preview, which means there’s still time for you to give us feedback and for us to change their API surface. However, they are fully supported in production: you can use them in your apps confidently, and if you have issues you can use all the usual support channels available for fully released products. More details below.

Introducing MSAL

MSAL is an SDK that makes it easy for you to obtain the tokens required to access web APIs protected by Microsoft identities, that is, by the v2 protocol endpoint of Azure AD (work and school accounts or personal Microsoft accounts), Azure AD B2C, or the new ASP.NET Core Identity. Examples of such web APIs include Microsoft cloud APIs, such as the Microsoft Graph, or any third-party API (including your own) configured to accept tokens issued by Microsoft identities.

MSAL offers an essential set of primitives that let you work with tokens in a few concise lines of code.

Under the hood, MSAL takes care of many complex and high-risk programming tasks that you would otherwise have to code yourself. Specifically, MSAL takes care of displaying authentication and consent UX when appropriate, selecting the right protocol flow for the current scenario, emitting the correct authorization messages and handling the associated responses, negotiating policy-driven authentication levels, taking advantage of device authentication features, storing tokens for later use and renewing them transparently, and much more. Thanks to that sophisticated logic, you can take advantage of secure APIs and advanced enterprise-grade access control features even if you have never read a single line of the OAuth2 or OpenID Connect specifications. You don’t even need to learn about Azure AD internals: with MSAL, both you and your administrator can be confident that access policies will be applied automatically at runtime, without dedicated code.
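To give a sense of the "authorization messages" MSAL emits on your behalf, here is a minimal sketch that builds the kind of OAuth2 authorization-code request URL a public client opens against the Azure AD v2 authorize endpoint. It is illustrative only: the helper name `AuthorizeRequestSketch.Build`, the redirect URI you pass in, and the exact parameter set are assumptions for this example, not MSAL’s internal implementation, and a real library sends more than what is shown here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, for illustration only: MSAL builds and sends these
// messages for you. It shows the shape of an OAuth2 authorization-code
// request against the Azure AD v2 endpoint.
public static class AuthorizeRequestSketch
{
    // v2 authorize endpoint for the "common" tenant (work/school and personal accounts).
    private const string AuthorizeEndpoint =
        "https://login.microsoftonline.com/common/oauth2/v2.0/authorize";

    public static string Build(string clientId, string redirectUri, IEnumerable<string> scopes, string state)
    {
        var parameters = new Dictionary<string, string>
        {
            ["client_id"] = clientId,
            ["response_type"] = "code",           // authorization code flow
            ["redirect_uri"] = redirectUri,       // assumed value supplied by the caller
            ["scope"] = string.Join(" ", scopes), // v2 takes space-separated scopes
            ["state"] = state                     // echoed back to correlate the response
        };

        // Percent-encode every key and value, then join them into the query string.
        string query = string.Join("&", parameters.Select(
            p => Uri.EscapeDataString(p.Key) + "=" + Uri.EscapeDataString(p.Value)));

        return AuthorizeEndpoint + "?" + query;
    }
}
```

The point of the sketch is what you no longer write by hand: with MSAL, the equivalent request (and the handling of its response) is hidden behind a single AcquireTokenAsync call.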

You might be wondering: should I be using MSAL or the Active Directory Authentication Library (ADAL)? The answer is straightforward. If you are building an application that needs to support both Azure AD work and school accounts and personal Microsoft accounts (with the v2 protocol endpoint of Azure AD), or an app that uses Azure AD B2C, use MSAL. If you’re building an application that needs to support only Azure AD work and school accounts, use ADAL. In the future we’ll recommend that all apps use MSAL, but not yet: we still have a bit more work to do on MSAL and on the v2 protocol endpoint of Azure AD. More on ADAL later in the post.

MSAL programming model

Developing with MSAL is simple.

Everything starts with registering your app with the v2 protocol endpoint of Azure AD, Azure AD B2C, or ASP.NET Core Identity. During registration you specify some basic information about your app (is it a mobile app? A web app or a web API?) and get back an identifier for it. Let’s say that you are creating a .NET desktop application meant to work with both work and school accounts and personal Microsoft accounts (MSAs), using the v2 protocol endpoint of Azure AD.

In code, you always begin by creating an instance of one of the *ClientApplication classes (PublicClientApplication for native apps, ConfidentialClientApplication for web apps and services), the in-code representation of your Azure AD app. In this case, you’ll initialize a new instance of PublicClientApplication, passing the identifier you obtained at registration time.

[sourcecode language='csharp' padlinenumbers='true']
using Microsoft.Identity.Client;

// The application identifier obtained when registering the app
string clientID = "a7d8cef0-4145-49b2-a91d-95c54051fa3f";
PublicClientApplication myApp = new PublicClientApplication(clientID);
[/sourcecode]

Say that you want to call the Microsoft Graph to read a user’s email messages. All you need to do is call AcquireTokenAsync, specifying the scope required by the API you want to invoke (in this case, Mail.Read).

[sourcecode language='csharp' ]
// Ask for a token that grants permission to read the user's mail
string[] scopes = { "Mail.Read" };
AuthenticationResult ar = await myApp.AcquireTokenAsync(scopes);
[/sourcecode]

The call to AcquireTokenAsync causes a popup to appear, prompting the user to authenticate with the account of his or her choice and applying whatever authentication policy the administrator of the user’s directory has established. For example, if I ran that code and used my microsoft.com account, I would be required to use two-factor authentication, whereas with a user from my own test tenant I would only be asked for a username and password.

After successful authentication, the user is prompted to consent to the requested permission, along with some other permissions related to accessing personal information (such as the user’s name).

As soon as the user accepts, the call to AcquireTokenAsync completes the token acquisition flow and returns the access token (along with other useful information) in an AuthenticationResult. All you need to do is extract the token (via ar.AccessToken) and include it in your API call.
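Including the token in the API call means sending it as an OAuth2 bearer token in the HTTP `Authorization` header. The minimal sketch below assumes you already hold the `ar.AccessToken` string from the previous step and targets the Microsoft Graph `me/messages` endpoint; the helper name `GraphRequestSketch` is hypothetical.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper: wraps an access token (e.g. ar.AccessToken) into a
// request for the signed-in user's messages in the Microsoft Graph.
public static class GraphRequestSketch
{
    public static HttpRequestMessage CreateMessagesRequest(string accessToken)
    {
        var request = new HttpRequestMessage(
            HttpMethod.Get, "https://graph.microsoft.com/v1.0/me/messages");

        // The access token travels as a bearer token in the Authorization header.
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        return request;
    }
}
```

In an app you would then send it with something like `await httpClient.SendAsync(GraphRequestSketch.CreateMessagesRequest(ar.AccessToken))`.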

MSAL features a sophisticated token store, which automatically caches tokens at every AcquireTokenAsync call. MSAL offers another primitive, AcquireTokenSilentAsync, which transparently inspects the cache to determine whether an access token with the required characteristics (scopes, user, and so on) is already present or can be obtained without showing any UX to the user. Azure AD issues powerful refresh tokens, which can often be used to silently obtain new access tokens even for new scopes or different organizations, and MSAL codifies all the logic needed to harness those features and minimize prompts. This means that from now on, whenever I need to call the mail API, I can simply call AcquireTokenSilentAsync as shown below and get back a fresh access token whenever one can be obtained without user interaction. If something goes wrong, for example if the user revoked consent for my app, AcquireTokenSilentAsync throws an MsalUiRequiredException and I can fall back on AcquireTokenAsync to prompt the user again.

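[sourcecode language='csharp' ]
try
{
    // Try the cache (and refresh tokens) first, without showing any UX.
    // FirstOrDefault requires 'using System.Linq;'
    ar = await myApp.AcquireTokenSilentAsync(scopes, myApp.Users.FirstOrDefault());
}
catch (MsalUiRequiredException)
{
    // No token can be obtained silently (e.g. consent was revoked): prompt the user
    ar = await myApp.AcquireTokenAsync(scopes);
}
[/sourcecode]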
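The cache lookup that AcquireTokenSilentAsync performs can be pictured with a toy model. The sketch below is not MSAL’s cache: the types `CachedToken` and `TokenCacheSketch` are hypothetical, refresh tokens are left out entirely, and the 5-minute expiration buffer is an assumption chosen for illustration. It only shows the matching rule the paragraph above describes: same user, all requested scopes covered, not about to expire.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical types for illustration only; MSAL's real cache is richer
// (refresh tokens, authorities, serialization) and is not exposed like this.
public sealed class CachedToken
{
    public string User { get; set; }
    public HashSet<string> Scopes { get; set; }
    public string AccessToken { get; set; }
    public DateTimeOffset ExpiresOn { get; set; }
}

public sealed class TokenCacheSketch
{
    private readonly List<CachedToken> _tokens = new List<CachedToken>();

    // Assumed safety margin so that a token about to expire is not handed out.
    private static readonly TimeSpan ExpirationBuffer = TimeSpan.FromMinutes(5);

    public void Add(CachedToken token) => _tokens.Add(token);

    // Returns a cached access token for this user that covers every requested
    // scope and is not about to expire; otherwise null, in which case a real
    // library would try a refresh token or, failing that, require UI.
    public string TryGetAccessToken(string user, IEnumerable<string> scopes, DateTimeOffset now)
    {
        var match = _tokens.FirstOrDefault(t =>
            string.Equals(t.User, user, StringComparison.OrdinalIgnoreCase) &&
            scopes.All(t.Scopes.Contains) &&
            t.ExpiresOn > now + ExpirationBuffer);
        return match?.AccessToken;
    }
}
```

A miss here corresponds to the case where MSAL has to do more work (or, ultimately, throw MsalUiRequiredException); a hit is why most calls to AcquireTokenSilentAsync return immediately.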

This is the main MSAL usage pattern. All others are variations that account for differences among platforms, programming languages, application types, and scenarios; in essence, once you have mastered this pair of calls, you know how MSAL works.

Platforms lineup

We are making MSAL available on multiple platforms. The concepts remain the same across the board, but they are exposed to you using the primitives and best practices that are typical of each of the targeted platforms.

Like every library the identity division has released in the last three years, MSAL is open source and available on GitHub. We develop MSAL in the open, and we welcome community contributions. For example, I would like to acknowledge Oren Novotny, Principal Architect at BlueMetal and Microsoft MVP, who was instrumental in refactoring MSAL to work with .NET Standard targets. Thank you, Oren!

Below you can find some details about the MSAL previews we are releasing today.

MSAL .NET

You can find the source at https://github.com/AzureAD/microsoft-authentication-library-for-dotnet.

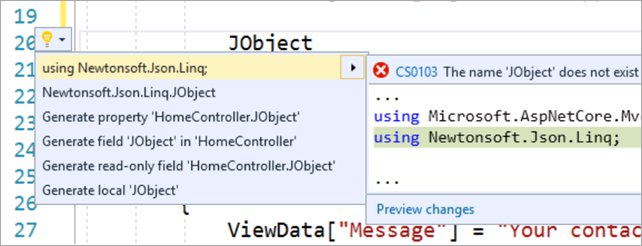

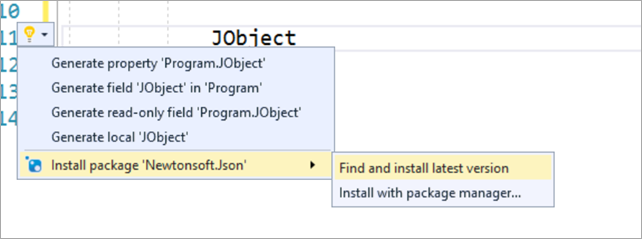

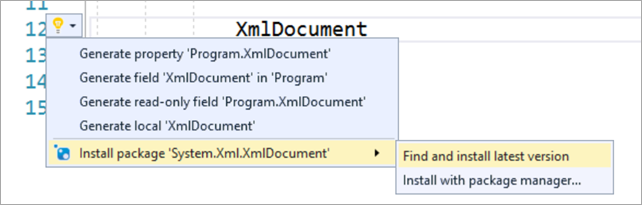

MSAL .NET is distributed via NuGet.org: you can find the package at https://www.nuget.org/packages/Microsoft.Identity.Client.

MSAL .NET works on .NET Desktop (4.5+), .NET Standard 1.4, .NET Core, UWP and Xamarin Forms for iOS/Android/UWP.

MSAL .NET supports the development of both native apps (desktop, console, mobile) and web apps (for example, the code-behind of ASP.NET web apps).

There are many code samples to choose from: one on developing a WPF app, one on developing a Xamarin Forms app targeting UWP/iOS/Android, one on developing a web app with incremental consent, and one on server-to-server communication, and we’ll add more in the coming weeks. We’ll also have various samples demonstrating how to use MSAL with B2C: a Xamarin Forms app, a .NET web app, a .NET Core web app, and a .NET WPF app.

MSAL JavaScript

You can find the source at https://github.com/AzureAD/microsoft-authentication-library-for-js.

You can install MSAL JS using npm, as described in the library’s readme. It is also available from a CDN, at https://secure.aadcdn.microsoftonline-p.com/lib/0.1.0/js/msal.min.js.

You can find a sample demonstrating a simple SPA here. A sample demonstrating use of MSAL JS with B2C can be found here.

MSAL iOS

You can find the source at https://github.com/AzureAD/microsoft-authentication-library-for-objc.

MSAL iOS is distributed directly from GitHub, via Carthage.

A sample for iOS can be found here. A sample demonstrating use of MSAL iOS with B2C can be found here.

MSAL Android

You can find the source at https://github.com/AzureAD/microsoft-authentication-library-for-android.

You can get the binaries via Gradle, as shown in the repo’s readme.

A sample showing canonical usage of MSAL Android can be found here.

System webviews

MSAL iOS and MSAL Android display authentication and consent UX taking advantage of OS level features such as SafariViewController and Chrome Custom Tabs, respectively.

This approach has various advantages over the embedded browser control view used in ADAL: it allows SSO sharing between native apps and web apps accessed through the device browser, makes it possible to leverage SSL certificates on the device, and in general offers better security guarantees.

The use of system webviews aligns MSAL with the guidance provided in the OAuth2 for Native Apps best current practice document.
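A related requirement of that best current practice is that public native clients use PKCE (RFC 7636), so that an authorization code intercepted on the device cannot be redeemed by another app. A library following the guidance takes care of this for you; the hypothetical helper `PkceSketch` below only shows how an S256 code challenge is derived from a random code verifier, as a sketch rather than MSAL’s own code.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper sketching PKCE (RFC 7636) with the S256 method.
public static class PkceSketch
{
    // 32 random bytes encode to a 43-character base64url code verifier.
    public static string CreateCodeVerifier()
    {
        var bytes = new byte[32];
        using (var rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(bytes);
        }
        return Base64UrlEncode(bytes);
    }

    // code_challenge = BASE64URL(SHA256(ASCII(code_verifier)))
    public static string CreateCodeChallenge(string codeVerifier)
    {
        using (var sha256 = SHA256.Create())
        {
            byte[] hash = sha256.ComputeHash(Encoding.ASCII.GetBytes(codeVerifier));
            return Base64UrlEncode(hash);
        }
    }

    // Base64url encoding without padding, as RFC 7636 requires.
    private static string Base64UrlEncode(byte[] data) =>
        Convert.ToBase64String(data).TrimEnd('=').Replace('+', '-').Replace('/', '_');
}
```

The app sends `code_challenge` and `code_challenge_method=S256` with the authorization request opened in the system browser, and the original `code_verifier` when redeeming the code at the token endpoint.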

What does “production-supported preview” mean in practice

MSAL iOS, MSAL Android and MSAL JS are making their debut this week, and MSAL .NET is incorporating features of the v2 protocol endpoint of Azure AD and Azure AD B2C that were not available in last year’s preview.

We still need to hear your feedback and retain the freedom to incorporate it, which means we might still need to change the API surface before committing to it long term. Furthermore, both the v2 protocol endpoint of Azure AD and Azure AD B2C are still adding features that we believe must be part of a well-rounded SDK release; although we already have a design for those, they need to go through the same preview process as the functionality available today.

That means that, although the MSAL bits were thoroughly tested and we are confident that we can support their use in production, we aren’t ready to call the library generally available yet.

Saying that MSAL is a “production-supported preview” means that we are granting you a go-live license for the MSAL previews released this week. You can use those MSALs in your production apps, confident that you’ll be able to receive support through the usual Microsoft support channels (Premier support, Stack Overflow, etc.).

However, this remains a developer preview, which means that if you pick it up you’ll need to be prepared to deal with some of the dust of a work in progress. To be concrete:

- Each future preview refresh can (and likely will) change the API surface. That means that if you were waiting for the next refresh to fix a bug affecting you, you will need to be prepared to make code changes when ingesting the new release – even if those changes are unrelated to the bug affecting you, and just happen to be coming out in the same release.

- When MSAL reaches general availability, you will have 6 months to update your apps to use the GA version of the SDK. Once the 6 months elapse, we will no longer support the preview libraries and, although we’ll try our best to avoid it, we won’t guarantee that the v2 protocol endpoint of Azure AD and Azure AD B2C will keep working with the preview bits.

We want to reach general availability as soon as is viable; however, our criteria are quality driven, not date driven. MSAL will be on the critical path of many applications, and we want to make sure we get it right. The good news is that you are now unblocked and can confidently take advantage of Microsoft identities in your production apps!

What about ADAL?

For all intents and purposes, MSAL can be considered ADAL vNext. Many of the primitives remain the same (AcquireTokenAsync, AcquireTokenSilentAsync, AuthenticationResult, etc.), and so does the goal: making it easy to access APIs without becoming a protocol expert. If you’ve been developing with ADAL, you’ll feel right at home with MSAL. If you rummage through the repos, you’ll see that there was significant lateral DNA transfer between the libraries.

At the same time, MSAL has a significantly larger scope: whereas ADAL works only with work and school accounts (via Azure AD and ADFS), MSAL works with work and school accounts, MSAs, Azure AD B2C, and ASP.NET Core Identity, and eventually (in a future release) with ADFS, all in a single, consistent object model. Along with that, the differences between the Azure AD v1 and v2 endpoints are important and inevitably reflected in the SDK: incremental consent, scopes instead of resources, elimination of the resource owner password grant, and so on.
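The shift from resources to scopes shows up directly in what you pass to the library. With the v1 endpoint you request a token for a resource (for example `https://graph.microsoft.com`); with the v2 endpoint you request specific scopes, each formed by the resource identifier followed by a permission name. Per the v2 endpoint’s documented conventions, a scope without a resource prefix, such as the `Mail.Read` used earlier, is interpreted as a Microsoft Graph permission. The hypothetical helper `ScopeSketch.ToScopes` below just sketches that mapping.

```csharp
using System;
using System.Linq;

// Hypothetical helper: turns a v1-style resource plus permission names into
// fully qualified v2 scope strings of the form "<resource>/<permission>".
public static class ScopeSketch
{
    public static string[] ToScopes(string resource, params string[] permissions)
    {
        // Avoid a double slash if the resource URI already ends with '/'.
        string prefix = resource.TrimEnd('/');
        return permissions.Select(p => prefix + "/" + p).ToArray();
    }
}
```

For example, `ScopeSketch.ToScopes("https://graph.microsoft.com", "Mail.Read")` yields `https://graph.microsoft.com/Mail.Read`, which asks for exactly one permission rather than everything configured for the resource.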

Furthermore, the experience and feedback we accumulated through multiple versions of ADAL (the ADAL.NET NuGet package alone has been downloaded about 2.8 million times) led us to introduce some important changes in the programming model, changes that perhaps go beyond the breaking changes you’d normally expect between major versions of the same SDK.

For those reasons, we decided to clearly signal differences by picking a new name that better reflects the augmented scope – Microsoft Authentication Library.

If you have a significant investment in ADAL, don’t worry: ADAL is fully supported, and remains the correct choice when you are building an application that only needs to support Azure AD work and school accounts.

Feedback

If you are at Build and you want to see MSAL in action, stop by session B8084 on Thursday at 4:00 PM. The session recording will appear 24 to 48 hours later at https://channel9.msdn.com/Events/Build/2017/B8084.

Keep your great feedback coming on UserVoice and Twitter (@azuread, @vibronet). If you have questions, get help on Stack Overflow (use the ‘MSAL’ tag).

Best,

Vittorio Bertocci (Twitter: @vibronet – Blog: http://www.cloudidentity.com/)

Principal Program Manager

Microsoft Identity Division

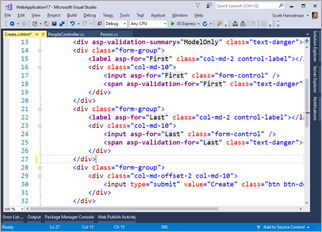

This little post is just a reminder that while Model Binding in ASP.NET is very cool, you should be aware of the properties (and semantics of those properties) that your object has, and whether or not your HTML form includes all your properties, or omits some.
