I've told this story before; but it bears repeating in more detail because of some facts I've recently learned.

The year was probably 1967. I was 15 years old, and a Freshman in High-school. I was sitting in the lunch room watching a man roll in a machine that looked, to me, like the helm of the Starship Enterprise.

The machine was called ECP-18. It was the size and shape of an office desk. Indeed, it was a desk. You could sit in front of it, and it had a large flat writing surface. Protruding up from that surface was a console -- a fascinating array of dozens and dozens of buttons and lights arranged in parallel rows. Indeed, the buttons were lights. I watched, enthralled, as the man pushed those buttons making them light up in a kaleidoscope of patterns.

I was already a computer geek by this time. I had taught myself binary math, and knew the binary representation of the octal digits by heart. I knew Boolean algebra, and had been constructing logic circuits from old telephone relays in my basement. I had read book after book on computers; and had an inkling of what a computer language, like BASIC, was. But I had never touched a real computer until this day.

I watched as the man toggled in the bootstrap loader. I didn't know it was the bootstrap loader at the time. He didn't tell me what he was doing. I was the annoying kid looking too closely over his shoulder, intently staring at every gesture, listening raptly to every word. I'm sure I weirded him out.

He would push the button/lights in the row marked address and mutter under his breath: "at address two-zero-zero". I could see the octal 0200 in the lights! I knew what he was saying! Egad! I understood!

He would push the button/lights in the row marked Memory Buffer, and mutter under his breath: "Store in two one five". The row of lights read 150215. I saw the 0215 at the end, and inferred that the '15' meant store. OH! Those numbers are instructions stored in the memory!

Bit by bit, as I watched that man toggle in diagnostic programs, and execute them, I picked up enough knowledge to formulate a working hypothesis about how this machine worked. When he left for the day, I leapt to the machine and toggled in some simple programs. And, by God, I got them to work!

I cannot recall a moment in my life when I have felt quite so victorious as that moment, when I first touched a real computer, and got that real computer to do my bidding. I was going to be a programmer. At that moment, I knew it in my bones. And from that moment on virtually every thought and every action in my young life was directed towards that goal.

My exposure to that machine was short lived. A week later it was wheeled away, and I never saw it again. But the course of my life had been set.

Judy Allen

I repeated that story so I could tell you this.

A few months ago a man named Tom Polk wrote to me. His letter said:

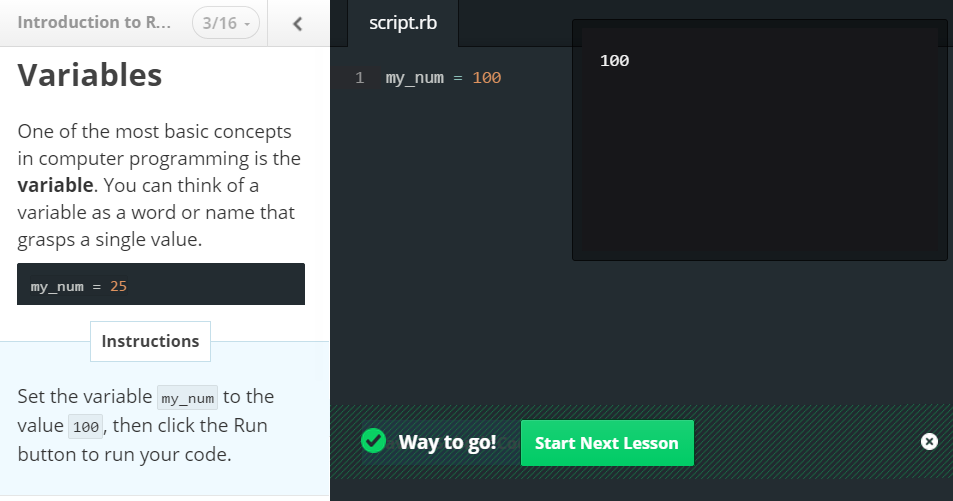

Hi, Bob, Tom Polk here. I Googled ECP-18 and found you. Below is a picture of a couple of my classmates in Big Spring, Texas around 1968 or so. You can see our school's ECP-18 in it. I'm sorry I don't have a bigger picture, but you'll recognize this. I learned to program on this machine and I really enjoyed your comments.

Tom and I struck up a conversation, and from him I learned much more about that machine, and the people behind it. One thing he said struck a special chord in me:

If your sales tech was a small woman, it was probably the lady who wrote this. She mentions her 40% interest in the company and selling it to a high profile educational company in Texas.

No, the tech was male. I recall nothing more about him. My focus was on those lights, and on his voice, and on his fingers pushing those buttons. But I am absolutely certain he was male.

And it is that certainty that shall be the topic of the rest of this blog.

Go read Judy Allen's story at the link Tom shared above. Read three or four of her short chapters, perhaps as far as "The Perkin's Pub Protest". It won't take you long. You'll know when you can return here. But I warn you, you won't want to stop; and you'll almost certainly later return to her fascinating, and inspiring story.

Whoa!

OK, pardon my language, but there's no other way to say this.

This woman was Bad ASS! She wrote a M_____ F____ two-pass assembler, in binary machine language, in 1024 words, in a couple of months, using nothing but the front panel switches, and a 10 character per second teletype with a paper tape reader/punch -- while taking care of a household, a husband, and a gaggle of young kids. God Damn! I dare you to try that!

And she wasn't just a good/great/radical programmer either. Did you read the part where she faced down the Union bullies? Did you read the part where she faced down the executive who couldn't imagine paying a man's salary to a woman? Did you read the Perkin's Pub Story?

No, this was no ordinary woman. Judy Allen was a force to be reckoned with.

But lest you think she was unique, lest you think she was a fluke, let me tell you about my early career.

Almost 50-50

I got my first job as a programmer in 1970 when I was 18 years old. I was hired to help write a large on-line time-sharing union accounting system on a Varian 620i minicomputer. This was a 16 bit machine with 32K x 16 of Core, and two 16 bit registers. Our team consisted of three teenage boys, two men, and three women. That was a pretty typical ratio of men to women in this company. Just under half the programmers were women who were writing COBOL, BAL, PL/1, and minicomputer assembler.

After that time, I watched the ratio of women to men in software plunge. By 1972 I was working for a large company, and the ratio had already dropped to less than 10%. By 1977 the ratio was virtually zero. The women had simply disappeared.

Ironically, in the '60s it was common for women to be programmers. Indeed, in the early '60s there could very well have been more female programmers than male programmers. And the reason for this was simple: To some extent programming was considered to be Women's Work.

Women's Work

Men were engineers. They conceived of machines and built them with their hands. They wielded the creative energies. The drudgery of tabulating and calculating was left to women.

This is a tradition that goes all the way back to Charles Babbage, and probably beyond. Ada Lovelace had been commissioned to translate into English the lecture notes written in French by an Italian student of Babbage's. A drudgery if there ever was one. And yet, in so doing, she conceived of and documented the notion of programming Babbage's machine with algorithms that could deal with non-mathematical topics. She may have been the first person to realize that a computer manipulates symbols, not numbers. She may have been the first person to understand what that implies.

In the 1880s, women were commonly recruited, but seldom paid, to do the painstaking measurements and calculations required by the male scientists of the day. This was especially true in Astronomy. Edward Charles Pickering assembled a rather large squadron of such women (they were called Pickering's Harem) to analyze the huge quantity of photographic plates being produced by the telescopes.

[Image: 'Pickering's Computers' standing in front of Building C at the Harvard College Observatory, 13 May 1913.]

The women were not allowed to touch the telescopes. That was Man's Work. But the women could do the drudgery of the computations. Computing was Women's Work. Indeed, they called the women: Computers.

Those "computers" did fantastic work. It was the deeply insightful work of Women like Annie Jump Cannon, and Henrietta Leavitt, that allowed us to understand and measure nothing less than the scale and composition of the universe.

Bletchley Park

The tradition continued through the early part of the twentieth century, and into the '40s. At Bletchley Park, where Alan Turing and his team were breaking the German Enigma ciphers, there were perhaps 10,000 people; two-thirds of whom were women. Teams of these women were gathered together to do the vital, but painstaking, work of listening, gathering, and collating the German messages. They learned to operate and "program" the machines that Turing and his team had built.

[Image: The Women of Bletchley Park]

Grace Hopper

After the second World War, this role for women continued. Grace Hopper, for example, worked in the Navy programming the Mark I computer. Later she was hired by EMCC to work on the software for the UNIVAC. In 1952 she conceived of, and wrote, the very first compiler, and coined the term. She was the first Director of Automatic Programming at Remington Rand, and was the visionary behind the development of COBOL.

[Image: Grace Murray Hopper at the UNIVAC keyboard, c. 1960 (Uploaded by Jan Arkesteijn)]

There were other women in software in those days. And all these women did fantastic, ground-breaking, work. And yet it was Women's Work. It was considered appropriate that Women should deal with the drudgery of programming. Men built the machines!

The Revelation of Symbols

When did it change? When did programming become Man's work? When did Men invade this traditional role for Women, and drive the women out?

I think there's a clue in one of Grace Hopper's statements. She had developed the very first compiler but she later said:

"Nobody believed that, I had a running compiler and nobody would touch it. They told me computers could only do arithmetic."

Only Arithmetic? They didn't understand, did they? They didn't see the implications. They didn't see what a computer really was.

- Ada Lovelace had seen it. She had seen that Babbage's engine, and engines like it were not merely calculators. Ada Lovelace saw that these machines could manipulate symbols.

- Alan Turing certainly saw it. Indeed, he could be said to have given the notion its mathematical foundation.

- Grace Hopper saw it for sure. She implemented it by writing the very first compiler.

- Judy Allen saw it. She wrote a symbolic assembler in binary.

- Heinlein saw it. The Moon Is a Harsh Mistress was as clear as a Clarion.

- Arthur C. Clarke and Stanley Kubrick saw it. Boy, did they ever.

- Gene Roddenberry saw it. And he popularized it in a way that nobody else ever had.

- And I saw it, as I stared over that male technician's shoulder.

These machines manipulated symbols. And if you can manipulate symbols, you can do anything! If you can manipulate symbols, you have power!

Power.

Power has a gender, and that gender is Male.

Judy?

That's when it shifted. At least that's my hypothesis. In the late '60s, and early '70s the popular culture began to see computers as more than just calculators. On Star Trek, Captain Kirk could talk to the computer. In 2001: A Space Odyssey, a computer was simultaneously sympathetic and malevolent. As a society we were beginning to see that computers had capabilities that were almost boundless. And it was becoming clear that it was the software, more than the hardware, from which those boundless possibilities arose.

The change was in the perception of who had the power. And in the late '60s we were realizing that it was the programmers, and not the hardware developers, who would wield the power.

When programmers became powerful, programming became Man's Work.

I felt that power. I knew it for what it was, while looking over that male technician's shoulder, watching him push those buttons. I felt that power, and I knew that I would have that power. I absorbed the power from him as I watched, and learned.

It was a man, whose shoulder I was looking over, wasn't it? It had to be! You couldn't absorb that kind of power from a woman, could you? It couldn't have been a woman, it couldn't have been -- Judy Allen -- could it?

Oh Christ Almighty, could it?