Christophe.colombier

Shared posts

Offbeat science: the smartphone is transforming the human hand

Go Range Loop Internals

Avoid Design by Committee: Generate Apart, Evaluate Together

This post was first published in its entirety on CoderHood as Avoid Design by Committee: Generate Apart, Evaluate Together. CoderHood is a blog dedicated to the human dimension of software engineering.

The tech industry thrives on innovation. Building innovative software products requires constant design and architecture of creative solutions. In that context, I am not a fan of design by committee; in fact, I believe that it is more of a disease than a strategy. It afflicts teams with no leadership or unclear leadership; the process is painful, and the results are abysmal.

Usability issues plague software products designed by committee. Such products look like collections of features stuck together without a unifying vision or a unique feeling; they are like onions, built as a series of loosely connected layers. They cannot bring emotions to the user because emotions are the product of individual passion.

Decisions and design by committee feel safe because of the sense of shared accountability, which is no accountability at all. A group's desire to strive for unanimity overrides the motivation to consider and debate alternative views. It reduces creativity and ideation to an exercise of consensus building.

Building consensus is the act of pushing a group of people to think alike. On that topic, Walter Lippmann famously said:

When all think alike, then no one is thinking.

In other words, building consensus is urging people to talk until they stop thinking and until they finally agree. Not many great ideas or designs come out that way.

I am a believer that the seeds of great ideas are generated as part of a creative process that is unique to each person. If you force a process where all designs must be produced and evaluated on the spot in a group setting, you are not giving introverted creative minds the time to enter their best focused and creative state. On the other hand, if you force all ideas to be generated in isolation without group brainstorming, you miss the opportunity to harvest collective wisdom.

That is why I like to implement a mixed model that I call "Generate Apart, Evaluate Together." I am going to talk about this model in the context of software and architecture design, but you can easily expand it to anything that requires both individual creativity and group wisdom. As with most models, I am not suggesting that you apply it to every design or decision. It is not a silver bullet. Look at it as another tool in your toolbelt. I recommend it mostly for medium to large undertakings, significant design challenges, medium to large shifts, and foundational architecture choices.

Generate Apart, Evaluate Together

I believe in the power of a creative process that starts with one or more group brainstorms designed to frame a problem, evaluate requirements, and bring out and evaluate as many initial thoughts as possible. In that process, a technical leader guides the group to discuss viable alternatives but keeps the discussion at a high, "boxes and arrows" level. Imagine a data flow diagram on a whiteboard or a mindmap of the most important concepts. Groups of people discussing design and architecture should not get bogged down in the weeds.

At some point during that high-level ideation session, the group selects one person to be the idea/proposal generation driver. After the group session is over, that person is in charge of researching, creating, documenting, and eventually presenting more ideas and detailed solution options. If the problem space is vast, the group might select several drivers, one for each of the different areas.

The proposal generation driver doesn't have to do all the work in isolation. He or she can collaborate with others to come up with proposals, but he or she needs to lead the conversation and idea generation.

After the driver has generated data, ideas, and proposals, the group meets again to brainstorm, evaluate, and refine the material. At that point, the group might identify new drivers (or keep the old ones), and the process repeats iteratively.

As mentioned, I refer to this iterative process with the catchphrase, "Generate Apart, Evaluate Together." Please, do not interpret that too literally and as an absolute. First of all, there is idea generation happening as a group, but it is mostly at a high-level. Also, there is an evaluation of ideas that happens outside of the group. "Generate apart, evaluate together" is a reminder to avoid design by committee, in-the-weeds group meetings, and isolated evaluation and selection of all ideas and solutions.

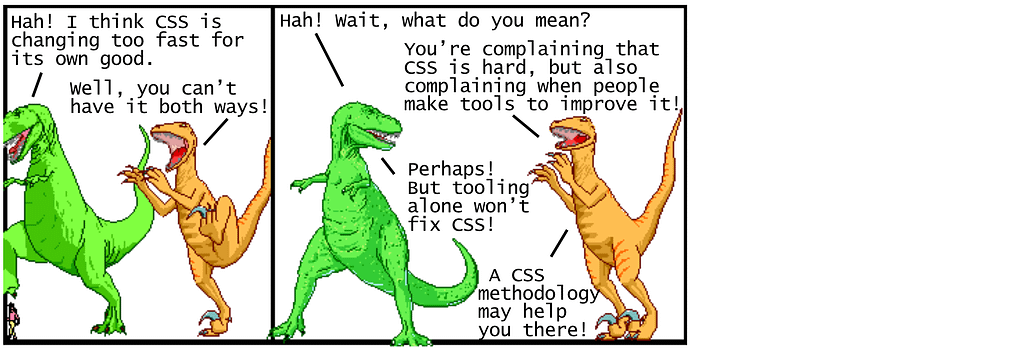

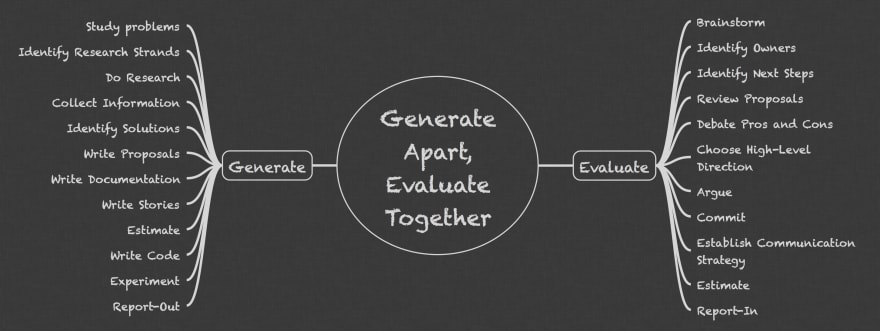

I synthesized the process with this quick back-of-the-napkin sequence diagram:

Evaluate

The "evaluate" part of "Generate apart, evaluate together" refers to one of the two different phases of evaluation mentioned above.

The Initial Evaluation is a group assessment of the business requirements followed by one or more brainstorms. The primary goal is to frame the problem and create an initial list of possible high-level architectural and technical choices and issues. The secondary objective is to select a driver for idea generation.

The Iterative Evaluation is the periodic group evaluation and refinement of ideas and proposals generated by the driver in non-group settings.

Some of the activities executed during both types of evaluation are:

- Brainstorm business requirements and solutions.

- Identification of the proposal generation driver or drivers.

- Identification of next steps.

- Review proposals.

- Debate pros and cons of proposed solutions.

- Choose a high-level technical direction.

- Argue.

- Agree and commit or disagree and commit.

- Establish communication strategy.

- Estimate.

- Report-in, meaning the driver reports and presents ideas and recommendations to the group.

Note that there is no attempt to generate consensus. The group evaluates and commits to a direction, regardless of whether there is unanimous agreement. Strong leadership is required to make this work.

Generate

The "generate" part of "Generate apart, evaluate together" refers to the solitary or small group activities of an individual who works to drive the creation of proposals and detailed solutions to present to the larger group. Such activities include:

- Study problems.

- Identify research strands and do research.

- Collect information.

- Identify solutions.

- Write proposals, documentation, user stories.

- Estimate user stories.

- Write/test/maintain code.

- Experiment.

- Report-out (meaning the driver reports to the group the results of research, investigation, etc.)

MindMap

For future reference, you can remember the main ideas of this method with a MindMap:

Final Thoughts

I didn't invent the catchphrase "Generate apart, evaluate together," but I am not sure who did, where I heard it, or what the original intent was. I tried to find out, but Google didn't help much. Regardless, in this post, I described how I use it, what it means to me, and what kinds of problems it resolves. I have used this model for a long time, and I only recently gave it a tagline that sticks in the mind and can be used to describe the method without much explanation.


Developer's Guide to Email

Planned obsolescence: the one-sided case against Epson dodges the real problem

By Grégoire Dubost.

Sued for "planned obsolescence", Epson has already been convicted in the court of media opinion. In an episode of Envoyé spécial broadcast on France 2 on 29 March 2018, journalists Anne-Charlotte Hinet and Swanny Thiébaut repeated, with astonishing credulity, the accusations leveled by the association HOP (Halte à l'obsolescence programmée, "Stop Planned Obsolescence"), which filed a complaint against the Japanese manufacturer last year.

Troubling findings

Some of the findings reported in this investigation are indeed troubling: we learn that a supposedly unusable printer can perfectly well print several more pages once a pirated driver is installed; as for the cartridges, they seem far from empty when the user is told to replace them. Is this not proof that a plot is being hatched against consumers who are as unfortunate as they are powerless?


The ominous music accompanying the report suggests that danger hangs over anyone who dares to speak out. We tremble at the mention of the man who "finally broke the silence"; what price will he have paid for his boldness? An electronics enthusiast able to assist the investigators lives on the margins of society, secluded far from cities and their threats: "we had to cover quite a few kilometers of open countryside to find an expert," the journalists recount. An expert supposed to decipher the contents of an electronic chip with a screwdriver... Success guaranteed.

The printers in question sell for around fifty euros. "The manufacturer has no interest in printers at that price being repairable, otherwise it won't sell any more of them," claims one of the witnesses interviewed. A strange conviction: if a manufacturer were able to extend the lifespan of its products and lengthen their warranty accordingly, without raising their cost or compromising their features, would it not have an interest in doing so, in the hope of winning market share from its competitors?

Selling very expensive ink

In any case, for Epson, the most lucrative business lies elsewhere: "you might as well sell a cheap printer in order to then sell very expensive ink," points out another interviewee, this time with good insight. It is true that, over time, makers of generic cartridges manage to get around the locks that Epson and its peers put in place to claim short-lived monopolies on the consumables market.

That said, even if they prefer to buy cheaper ink, the owner of a working Epson printer will always be a potential buyer of the brand's cartridges, which they certainly will no longer be once the printer has broken down... This observation, stamped with common sense, seems to have escaped the investigators, who nevertheless claim that "manufacturers do everything to make you buy their own cartridges". Perhaps that could explain the requirement to change a cartridge in order to use the scanner of a multifunction printer... But what guarantee would Epson have that its customers would remain loyal when replacing equipment whose obsolescence it had itself planned? They will be all the less loyal if they were disappointed by their purchase, especially if they feel their printer gave out on them prematurely.

Why these aberrations?

The soundness of a planned-obsolescence strategy is therefore open to question. How, then, to explain certain aberrations? Epson has little to say on the subject; its communication is in fact disastrous! "A printer is a sophisticated product," it states in a press release. Not so much, actually. At least, the electronics embedded in such a device are not among the most elaborate. Watching a cartridge being taken apart, the journalists feigned astonishment on discovering its chip: "Surprise! […] No electrical circuit, nothing connecting it to the ink tank. It is simply glued on. How on earth can this chip indicate the ink level if it is not in contact with the ink?" What did they expect to find in a consumable whose recycling is notoriously hit-or-miss? Or in a device sold for only a few dozen euros? Whatever Epson may say, ink consumption and the state of the waste-ink pad are no doubt estimated approximately. Apparently the manufacturer errs on the side of caution, by a very wide margin! That is obviously regrettable, but what about the alternatives? Do Epson's competitors offer more effective technical solutions on products sold at a comparable price? Yet another question that was not asked...

As for the cartridges, part of whose ink is wasted, the malice attributed to the manufacturer remains to be demonstrated. One does not buy an ink cartridge the way one picks a carton of milk or fills a tank with petrol. While the volume of ink it contains is indeed stated on the packaging, that information is not especially prominent. It also differs from one brand to another; it serves neither as a benchmark nor as a basis for comparison. In practice, one does not buy milliliters of ink, but cartridges of so-called standard capacity, or high capacity, with the promise that they will let us print a certain number of pages. Under those conditions, what interest would a manufacturer have in restricting the proportion of ink actually used? It might as well reduce the volume in the cartridges! For the consumer, it would come to the same thing: they would be condemned to buy more of them; for the manufacturer, on the other hand, it would obviously be more attractive, since it would have less ink to produce to fill an identical number of cartridges sold at the same price. An Epson representative, filmed without his knowledge, tried to explain this during the report, albeit with extreme clumsiness. The investigators did not fail to relish it, taking wicked pleasure in staging the exposure of a shameless lie.

Questionable allegations

It must be said that they did not show the same zeal in checking the allegations of the activists who inspired them. When it deems it necessary to change a printer's waste-ink pad, Epson blocks the printer from use on the grounds that ink might otherwise spill everywhere. Among users of a pirated driver that bypasses this lock, "we have had no cases of people writing to us to say that it had overflowed," retorts the HOP representative. Why did the journalists not try the experiment of emptying a few more cartridges under those conditions? Failing that, perhaps they could have searched the Web for any testimony. "A few years ago I repaired a printer that left big streaks on every print, whose owner had, a year earlier, reset the counter […] so that printing could resume," recounts a user, TeoB, in a comment posted on 19 January on LinuxFr.org; "the pad was flooded with ink, which had overflowed and coated the entire bottom of the printer," he specifies; "it took me a few hours to get everything back in working order, plus a night of drying," he recalls. In this case, what passes for planned obsolescence could in fact be preventive maintenance... The journalists themselves mentioned it in their report: Epson says it replaces this pad free of charge; why did they not take it up on the offer in order to assess the service?

They preferred to endorse the idea that a printer affected by a consumable deemed, rightly or wrongly, to be at end of life should be destined for the scrap heap. Confusion on this point is maintained during the report by a technician presented as a "specialist in ink and breakdowns". In this the HOP activists show a patent inconsistency: they could deplore the cryptic messaging of printer manufacturers, whose user manuals and on-screen messages do not seem to mention the free maintenance operations promised elsewhere; but they prefer to maintain the myth of deliberate sabotage of their products, paradoxically reinforcing users' conviction that they are beyond repair... If the ball is sometimes in the manufacturers' court, they do not fail to send it back to consumers; the latter must still take hold of it, rather than flee their responsibilities, which a conspiracy theory glosses over.

Links:

https://www.francetvinfo.fr/

https://www.epson.fr/insights/

https://linuxfr.org/users/

The article "Planned obsolescence: the one-sided case against Epson dodges the real problem" first appeared on Contrepoints.

What kind of managers will Generation Z bring?

They're smart, conscientious, ambitious, and forward-thinking. And of course, they're the first true digital natives. Growing up in a world where information changes by the second, Generation Z — the generation born roughly after 1995 — is ready to take the workplace by storm.

As the group that follows the millennials, Generation Z — or "Gen Z" as they are often called — is changing the way we do business. They see the world through a different lens. Their passions and inspirations will define what they do for a career, and consequently drive many of their decisions about how they see themselves in the workplace. These are the managers, big thinkers, and entrepreneurs of tomorrow. What will America's businesses look like with them at the helm?

They'll encourage risk-taking

Laura Handrick, an HR and workplace analyst with FitSmallBusiness.com, believes the workers that will come from Generation Z are not limited by a fear of not knowing. "They recognize that everything they need or want to know is available from the internet, their friends, or crowdsourcing — so, they don't fear the unknown," she says.

Gen Z-ers know they can figure anything out if they have the right people, experts, and thought leaders around them. That's why Handrick believes they are more likely to try new things — and expect their peers and coworkers to do so, too. This could manifest itself as a kind of entrepreneurial spirit we haven't seen in years.

But they won't be reckless

While Gen Z is likely to encourage new ideas, most of the risks they'll be willing to take will be calculated ones. None of this "move fast and break things" mindset that has so driven today's entrepreneurs. After all, many of them grew up during the financial crisis of the mid- to late-2000s, and they know what happens when you price yourself out of your home, max out your credit cards, or leave college with $30,000 in student loans. Many were raised in homes that were foreclosed, or had parents who declared bankruptcy. Because of this, most of them will keep a close eye on the financial side of a business.

"Think of them as the bright eyes and vision of millennials with the financial-savvy of boomers, having lived through the financial crash of 2008," says Morgan Chaney, head of marketing for Blueboard, an HR tech startup.

Indeed, according to a survey done by Monster.com, 70 percent of Generation Z-ers say they're most motivated by money, compared to 63 percent of millennials. In other words, putting a Gen Z-er in charge of your business is not going to make it go "belly up." They'll be more conservative with cash and very wary of bad business investments. This generation is unique in its understanding of money and how it works.

Get ready for more inclusive workplaces

Chaney believes Gen Z managers will be empathetic, hands-on, and purpose-driven. Since they believe in working one-on-one to ensure each employee is nurtured and has a purpose-driven career path, Generation Z is going to manage businesses with a "people-first" approach.

This means the workplaces they run will be less plagued by diversity problems than the generations that came before them. "They'll value each person for their experience, expertise, and uniqueness — race or gender won't be issues for them," says Handrick.

Gen Z-er Timothy Woods, co-founder of ExpertiseDirect.com, which connects customers with experts in various fields, says his generation will be adaptive and empathetic managers, with a steely determination to succeed. "This generation is no longer only competing with the person in the next office, but instead with a world of individuals, each more informed and successful than the next, constantly broadcasting their achievements online and setting the bar over which one must now climb," he explains.

The 40-hour work week will disappear

Generation Z managers will also be very disciplined: Fifty-eight percent of those surveyed said they were willing to work nights and weekends, compared to 45 percent of millennials. Gone are the days of working a typical 9-to-5 job. Generation Z managers will tolerate and likely expect non-traditional hours. "They'll have software apps to track productivity and won't feel a need to have people physically sitting in the same office to know that work is getting done," Handrick explains. "Video conference, texting, and mobile apps are how they'll manage their teams."

The catch? They have to feel like their work matters. According to the Monster.com survey, "Gen Z stands out as the generation that most strongly believes work should have a greater purpose." Seventy-four percent of Generation Z-ers surveyed said they want their work to be about more than just money. That's compared to 45 percent of millennials, and the numbers fall even further for Gen-X and boomers.

So, yes, they'll work hard, but only if they know it's for a good reason. And that's a positive thing. In this way, the leaders of tomorrow will merge money and purpose to create a whole new way of doing business.

the secret life of NaN

The floating point standard defines a special value called Not-a-Number (NaN) which is used to represent, well, values that aren’t numbers. Double precision NaNs come with a payload of 51 bits which can be used for whatever you want; one especially fun hack is using the payload to represent all other non-floating-point values and their types at runtime in dynamically typed languages.

%%%%%% update (04/2019) %%%%%%

I gave a lightning talk about the secret life of NaN at !!con West 2019; it has fewer details, but more jokes; you can find a recording here.

%%%%%%%%%%%%%%%%%%%%%%%

the non-secret life of NaN

When I say “NaN” and also “floating point”, I specifically mean the representations defined in IEEE 754-2008, the ubiquitous floating point standard. This standard was born in 1985 (with much drama!) out of a need for a canonical representation which would allow code to be portable by quelling the anarchy induced by the menagerie of inconsistent floating point representations used by different processors.

Floating point values are a discrete logarithmic-ish approximation to real numbers; below is a visualization of the points defined by a toy floating point like representation with 3 bits of exponent, 3 bits of mantissa (the image is from the paper “How do you compute the midpoint of an interval?”, which points out arithmetic artifacts that commonly show up in midpoint computations).

Since the NaN I’m talking about doesn’t exist outside of IEEE 754-2008, let’s briefly take a look at the spec.

An extremely brief overview of IEEE 754-2008

The standard defines these logarithmic-ish distributions of values with base-2 and base-10. For base-2, the standard defines representations for bit-widths for all powers of two between 16 bits wide and 256 bits wide; for base-10 it defines representations for bit-widths for all powers of two between 32 bits wide and 128 bits wide. (Well, almost. For the exact details, check out page 13 of the spec.) These are the only standardized bit-widths, meaning that if a processor supports 32 bit floating point values, then it’s highly likely it will support them in the standard-compliant representation.

Speaking of which, let’s take a look at what the standard compliant representation is. Let’s look at binary16, the base-2 16 bit wide format:

1 sign bit | 5 exponent bits | 10 mantissa bits

S E E E E E M M M M M M M M M M

I won’t explain how these are used to represent numeric values because I’ve got different fish to fry, but if you do want an explanation, I quite like these nice walkthroughs.

Briefly, though, here are some examples: the take-away is you can use these 16 bits to encode a variety of values.

0 01111 0000000000 = 1

0 00000 0000000000 = +0

1 00000 0000000000 = -0

1 01101 0101010101 = -0.333251953125
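To check these examples by hand, here is a small Python sketch that splits a binary16 bit pattern into its three fields and decodes it with the standard `struct` module (its `"e"` format is IEEE 754 half precision). The helper names `fields` and `decode_binary16` are made up for this illustration.

```python
import struct

def fields(bits):
    """Split a 16-bit pattern into (sign, exponent, mantissa)."""
    return bits >> 15, (bits >> 10) & 0x1F, bits & 0x3FF

def decode_binary16(bits):
    """Reinterpret a 16-bit integer as an IEEE 754 binary16 value."""
    # Pack as an unsigned 16-bit integer, then read the same two bytes as a half float.
    return struct.unpack("<e", struct.pack("<H", bits))[0]

# The four bit patterns listed above, written sign_exponent_mantissa.
for bits in (0b0_01111_0000000000,
             0b0_00000_0000000000,
             0b1_00000_0000000000,
             0b1_01101_0101010101):
    print(f"{bits:016b}", fields(bits), decode_binary16(bits))
```

The last pattern has a biased exponent of 13 (so a scale of 2^(13 - 15) = 1/4) and a mantissa of 341/1024, which gives -(1 + 341/1024) / 4 = -0.333251953125.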

Cool, so we can represent some finite, discrete collection of real numbers. That’s what you want from your numeric representation most of the time.

More interestingly, though, the standard also defines some special values: ±infinity, and “quiet” & “signaling” NaN. ±infinity are self-explanatory overflow behaviors: in the visualization above, ±15 are the largest magnitude values which can be precisely represented, and computations with values whose magnitudes are larger than 15 may overflow to ±infinity. The spec provides guidance on when operations should return ±infinity based on different rounding modes.

What IEEE 754-2008 says about NaNs

First of all, let’s see how NaNs are represented, and then we’ll straighten out this “quiet” vs “signaling” business.

The standard reads (page 35, §6.2.1)

All binary NaN bit strings have all the bits of the biased exponent field E set to 1 (see 3.4). A quiet NaN bit string should be encoded with the first bit (d1) of the trailing significand field T being 1. A signaling NaN bit string should be encoded with the first bit of the trailing significand field being 0.

For example, in the binary16 format, NaNs are specified by the bit patterns:

s 11111 1xxxxxxxxxx = quiet (qNaN)

s 11111 0xxxxxxxxxx = signaling (sNaN) **

Notice that this is a large collection of bit patterns! Even ignoring the sign bit, there are about 2^(number of mantissa bits - 1) bit patterns of each kind which all encode a NaN! We’ll refer to these leftover bits as the payload.

**: a slight complication: in the sNaN case, at least one of the mantissa bits must be set; it cannot have an all zero payload because the bit pattern with a fully set exponent and fully zeroed out mantissa encodes infinity.

It seems strange to me that the bit which signifies whether or not the NaN is signaling is the top bit of the mantissa rather than the sign bit; perhaps something about how floating point pipelines are implemented makes it less natural to use the sign bit to decide whether or not to raise a signal.

Modern commodity hardware commonly uses 64 bit floats; the double-precision format has 52 bits for the mantissa, which means there are 51 bits available for the payload.
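The same rules carry over to the 64 bit format. As a rough sketch, the function below classifies a raw double-precision bit pattern using only integer arithmetic; the names `classify` and `payload` are hypothetical, and the masks follow directly from the 1 / 11 / 52 bit layout.

```python
MANTISSA_BITS = 52
EXPONENT_ALL_ONES = 0x7FF
MANTISSA_MASK = (1 << MANTISSA_BITS) - 1
QUIET_BIT = 1 << (MANTISSA_BITS - 1)   # first bit of the trailing significand
PAYLOAD_MASK = QUIET_BIT - 1           # the remaining 51 bits

def classify(bits):
    """Classify a 64-bit pattern as finite, infinity, qNaN or sNaN."""
    exponent = (bits >> MANTISSA_BITS) & EXPONENT_ALL_ONES
    mantissa = bits & MANTISSA_MASK
    if exponent != EXPONENT_ALL_ONES:
        return "finite"
    if mantissa == 0:
        return "infinity"          # all-ones exponent, all-zero mantissa
    return "qNaN" if mantissa & QUIET_BIT else "sNaN"

def payload(bits):
    """Return the 51 bits below the quiet bit."""
    return bits & PAYLOAD_MASK

print(classify(0x7FF8000000000ABC), hex(payload(0x7FF8000000000ABC)))
print(classify(0x7FF0000000000001))
print(classify(0xFFF0000000000000))
```

The middle call shows the footnoted complication: an sNaN needs at least one payload bit set, otherwise the pattern is read as infinity.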

Okay, now let’s see the difference between “quiet” and “signaling” NaNs (page 34, §6.2):

Signaling NaNs afford representations for uninitialized variables and arithmetic-like enhancements (such as complex-affine infinities or extremely wide range) that are not in the scope of this standard. Quiet NaNs should, by means left to the implementer’s discretion, afford retrospective diagnostic information inherited from invalid or unavailable data and results. To facilitate propagation of diagnostic information contained in NaNs, as much of that information as possible should be preserved in NaN results of operations.

Under default exception handling, any operation signaling an invalid operation exception and for which a floating-point result is to be delivered shall deliver a quiet NaN.

So “signaling” NaNs may raise an exception; the standard is agnostic to whether floating point is implemented in hardware or software so it doesn’t really say what this exception is. In hardware this might translate to the floating point unit setting an exception flag, or for instance, the C standard defines and requires the SIGFPE signal to represent floating point computational exceptions.

So, that last quoted sentence says that an operation which receives a signaling NaN can raise the alarm, then quiet the NaN and propagate it along. Why might an operation receive a signaling NaN? Well, that’s what the first quoted sentence explains: you might want to represent uninitialized variables with a signaling NaN so that if anyone ever tries to perform an operation on that value (without having first initialized it) they will be signaled that that was likely not what they wanted to do.

Conversely, “quiet” NaNs are your garden variety NaN: qNaNs are what are produced when the result of an operation is genuinely not a number, like attempting to take the square root of a negative number. The really valuable thing to notice here is the sentence:

To facilitate propagation of diagnostic information contained in NaNs, as much of that information as possible should be preserved in NaN results of operations.

This means the official suggestion in the floating point standard is to leave a qNaN exactly as you found it, in case someone is using it to propagate “diagnostic information” using that payload we saw above. Is this an invitation to jerry-rig extra information into NaNs? You bet it is!
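As a minimal sketch of what stashing information in a qNaN can look like, the Python below builds a double whose payload carries an arbitrary integer and reads it back out. The function names are invented for this example, and it only demonstrates storing and retrieving the bits; whether a payload survives arithmetic depends on the hardware and runtime, which is exactly why the standard phrases it as a "should".

```python
import math
import struct

QNAN_BITS = 0x7FF8_0000_0000_0000      # exponent all ones, quiet bit set
PAYLOAD_MASK = (1 << 51) - 1

def nan_with_payload(payload):
    """Build a quiet NaN carrying `payload` in its low 51 bits."""
    if not 0 <= payload <= PAYLOAD_MASK:
        raise ValueError("payload must fit in 51 bits")
    return struct.unpack("<d", struct.pack("<Q", QNAN_BITS | payload))[0]

def payload_of(x):
    """Extract the 51 payload bits from a NaN."""
    if not math.isnan(x):
        raise ValueError("not a NaN")
    return struct.unpack("<Q", struct.pack("<d", x))[0] & PAYLOAD_MASK

tagged = nan_with_payload(0xBEEF)
print(math.isnan(tagged), hex(payload_of(tagged)))
```

To every ordinary floating point operation `tagged` is just a NaN; only code that inspects the raw bits sees the extra data.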

What can we do with the payload?

This is really the question I’m interested in; or, rather, the slight refinement: what have people done with the payload?

The most satisfying answer that I found to this question is, people have used the NaN payload to pass around data & type information in dynamically typed languages, including implementations of Lua and JavaScript. Why dynamically typed languages? Because if your language is dynamically typed, then the type of a variable can change at runtime, which means you absolutely must also pass around some type information; the NaN payload is an opportunity to store both that type information and the actual value. We’ll take a look at one of these implementations in detail in just a moment.

I tried to track down other uses but didn’t find much else; this textbook has some suggestions (page 86):

One possibility might be to use NaNs as symbols in a symbolic expression parser. Another would be to use NaNs as missing data values and the payload to indicate a source for the missing data or its class.

The author probably had something specific in mind, but I couldn’t track down any implementations which used NaN payloads for symbols or a source indication for missing data. If anyone knows of other uses of the NaN payload in the wild, I’d love to hear about them!

Okay, let’s look at how JavaScriptCore uses the payload to store type information:

Payload in Practice! A look at JavaScriptCore

We’re going to look at an implementation of a technique called NaN-boxing. Under NaN-boxing, all values in the language & their type tags are represented in 64 bits! Valid double-precision floats are left to their IEEE 754 representations, but all of that leftover space in the payload of NaNs is used to store every other value in the language, as well as a tag to signify what the type of the payload is. It’s like instead of saying “not a number” we’re saying “not a double precision float”, but rather a “<some other type>”.
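Before turning to a real engine, here is a deliberately simplified Python sketch of the idea. The layout is hypothetical and is not the encoding of any particular implementation: doubles keep their IEEE 754 bits, and every other value lives in the payload of a negative qNaN, with 3 invented tag bits on top of a 48 bit value field. It assumes that genuine NaNs produced by arithmetic carry an all-zero payload, so tag 0 is reserved to mean "this really is a NaN double".

```python
import struct

# Hypothetical layout for this sketch:
#   bits 63..51  all ones (sign, 11 exponent bits, quiet bit)
#   bits 50..48  type tag; 0 is reserved for a genuine NaN double
#   bits 47..0   the boxed value, stored as an unsigned field
BOX_PREFIX = 0x1FFF                      # the top 13 bits, all set
TAG_INT32, TAG_BOOL, TAG_POINTER = 1, 2, 3
VALUE_MASK = (1 << 48) - 1

def box_double(x):
    """Doubles are stored as their own bit pattern."""
    return struct.unpack("<Q", struct.pack("<d", x))[0]

def box_tagged(tag, value):
    """Store a non-double value and its type tag inside a qNaN payload."""
    return (BOX_PREFIX << 51) | (tag << 48) | (value & VALUE_MASK)

def unbox(bits):
    """Return (tag, value) for boxed values, or ("double", x) otherwise."""
    tag = (bits >> 48) & 0b111
    if bits >> 51 == BOX_PREFIX and tag != 0:
        return tag, bits & VALUE_MASK
    return "double", struct.unpack("<d", struct.pack("<Q", bits))[0]

print(unbox(box_double(2.5)))
print(unbox(box_tagged(TAG_INT32, 42)))
print(unbox(box_tagged(TAG_POINTER, 0x7FFF_DEAD_BEEF)))
```

Every value, whatever its type, fits in one 64 bit word, and a single comparison on the top bits tells a double apart from everything else.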

We’re going to look at how JavaScriptCore (JSC) uses NaN-boxing, but JSC isn’t the only real-world industry-grade implementation that stores other types in NaNs. For example, Mozilla’s SpiderMonkey JavaScript implementation also uses NaN-boxing (which they call nun-boxing & pun-boxing), as does LuaJIT, which they call NaN-tagging. The reason I want to look at JSC’s code is it has a really great comment explaining their implementation.

JSC is the JavaScript implementation that powers WebKit, which runs Safari and Adobe’s Creative Suite. As far as I can tell, the code we’re going to look at is actually currently being used in Safari: as of March 2018, the file had last been modified 18 days ago.

NaN-Boxing explained in a comment

Here is the file we’re going to look at. The way NaN-boxing works is when you have non-float datatypes (pointers, integers, booleans) you store them in the payload, and use the top bits to encode the type of the payload. In the case of double-precision floats, we have 51 bits of payload which means we can store anything that fits in those 51 bits. Notably we can store 32 bit integers, and 48 bit pointers (the current x86-64 pointer bit-width). This means that we can store every value in the language in 64 bits.

Sidenote: according to the ECMAScript standard, JavaScript doesn’t have a primitive integer datatype; all numbers are double-precision floats. So why would a JS implementation want to represent integers? One good reason is that integer operations are much faster in hardware, and many of the numeric values used in programs really are ints. A notable example is an index variable in a for-loop which walks over an array. Also according to the ECMAScript spec, arrays can have at most 2^32 − 1 elements, so it is actually safe to store array index variables as 32-bit ints in NaN payloads.

The encoding they use is:

    * The top 16-bits denote the type of the encoded JSValue:
    *
    *     Pointer {  0000:PPPP:PPPP:PPPP
    *              / 0001:****:****:****
    *     Double  {         ...
    *              \ FFFE:****:****:****
    *     Integer {  FFFF:0000:IIII:IIII
    *
    * The scheme we have implemented encodes double precision values by performing a
    * 64-bit integer addition of the value 2^48 to the number. After this manipulation
    * no encoded double-precision value will begin with the pattern 0x0000 or 0xFFFF.
    * Values must be decoded by reversing this operation before subsequent floating point
    * operations may be performed.

So this comment explains that different value ranges are used to represent different types of objects. But notice that these bit-ranges don’t match those defined in IEEE-754; for instance, in the standard for double precision values:

a valid qNaN:

    1 sign bit | 11 exponent bits | 52 mantissa bits
    1 | 1 1 1 1 1 1 1 1 1 1 1 | 1 + {51 bits of payload}

chunked into 4-bit groups (one hex digit each), this is:

    1 1 1 1 | 1 1 1 1 | 1 1 1 1 | 1 + {51 bits of payload}

which covers all the bit patterns in the range:

    0xFFF8 ...  to  0xFFFF ...

This means that according to the standard, the bit-ranges usually represented by valid doubles vs. qNaNs are:

             / 0000:****:****:****
    Double  {         ...
             \ FFF7:****:****:****
             / FFF8:****:****:****
    qNaN    {         ...
             \ FFFF:****:****:****
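The qNaN range above can be checked numerically. What follows is a minimal Python sketch, not code from JSC or from the standard; the helper names `make_qnan` and `bits_to_double` are made up for illustration. It builds a quiet NaN with the sign bit set from a 51-bit payload and looks at the top 16 bits of the smallest and largest resulting patterns.

```python
import math
import struct

PAYLOAD_BITS = 51  # bits left over once sign, exponent and quiet bit are set


def make_qnan(payload: int, sign: int = 1) -> int:
    """Return the 64-bit pattern of a quiet NaN carrying `payload` (hypothetical helper)."""
    if not 0 <= payload < (1 << PAYLOAD_BITS):
        raise ValueError("payload must fit in 51 bits")
    exponent_all_ones = 0x7FF << 52  # 11 exponent bits, all set
    quiet_bit = 1 << 51              # top mantissa bit marks the NaN as quiet
    return (sign << 63) | exponent_all_ones | quiet_bit | payload


def bits_to_double(bits: int) -> float:
    """Reinterpret a 64-bit integer as an IEEE 754 double."""
    return struct.unpack("<d", struct.pack("<Q", bits))[0]


lowest = make_qnan(0)
highest = make_qnan((1 << PAYLOAD_BITS) - 1)
print(hex(lowest >> 48), hex(highest >> 48))            # prints: 0xfff8 0xffff
print(math.isnan(bits_to_double(make_qnan(0xC0FFEE))))  # prints: True
```

Whatever the payload, the value is still a NaN as far as floating-point code is concerned, which is what leaves the payload bits free for other uses.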

So what the comment in the code is showing us is that the ranges they’re representing are shifted from what’s defined in the standard. The reason they’re doing this is to favor pointers: because pointers occupy the range with the top two bytes zeroed, you can manipulate pointers without applying a mask. The effect is that pointers aren’t “boxed”, while all other values are. This choice to favor pointers isn’t obvious; the SpiderMonkey implementation doesn’t shift the range, thus favoring doubles.

Okay, so I think the easiest way to see what’s up with this range shifting business is by looking at the mask lower down in that file:

    // This value is 2^48, used to encode doubles such that the encoded value will begin
    // with a 16-bit pattern within the range 0x0001..0xFFFE.
    #define DoubleEncodeOffset 0x1000000000000ll

This offset is used in the asDouble() function:

    inline double JSValue::asDouble() const
    {
        ASSERT(isDouble());
        return reinterpretInt64ToDouble(u.asInt64 - DoubleEncodeOffset);
    }

This shifts the encoded double into the normal range of bit patterns defined by the standard. Conversely, the asCell() function (I believe in JSC “cells” and “pointers” are roughly interchangeable terms) can just grab the pointer directly without shifting:

    ALWAYS_INLINE JSCell* JSValue::asCell() const
    {
        ASSERT(isCell());
        return u.ptr;
    }

Cool. That’s actually basically it. Below I’ll mention a few more fun tidbits from the JSC implementation, but this is really the heart of the NaN-boxing implementation.
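To make the offset trick concrete, here is a small Python sketch of the same arithmetic. It is an illustration under stated assumptions, not JSC's implementation: the function names are invented, Python's unbounded integers are masked to emulate 64-bit wraparound, and NaNs with unusual payloads (which a real engine would presumably need to canonicalize before boxing) are not handled.

```python
import struct

DOUBLE_ENCODE_OFFSET = 1 << 48  # 2^48, the DoubleEncodeOffset value quoted above
MASK_64 = (1 << 64) - 1         # emulate 64-bit wraparound with Python's big ints


def double_to_bits(x: float) -> int:
    """Reinterpret an IEEE 754 double as a 64-bit unsigned integer."""
    return struct.unpack("<Q", struct.pack("<d", x))[0]


def bits_to_double(bits: int) -> float:
    """Reinterpret a 64-bit unsigned integer as an IEEE 754 double."""
    return struct.unpack("<d", struct.pack("<Q", bits))[0]


def encode_double(x: float) -> int:
    """Box a double by adding 2^48 to its bit pattern (illustrative only)."""
    return (double_to_bits(x) + DOUBLE_ENCODE_OFFSET) & MASK_64


def decode_double(encoded: int) -> float:
    """Undo the offset, mirroring the subtraction in asDouble()."""
    return bits_to_double((encoded - DOUBLE_ENCODE_OFFSET) & MASK_64)


for value in (0.0, 1.0, -2.5, float("inf")):
    boxed = encode_double(value)
    print(f"{value!r:>5} -> 0x{boxed:016x} -> {decode_double(boxed)!r}")
```

For ordinary doubles like these, the top 16 bits of the boxed value never come out as 0x0000 or 0xFFFF, which is what keeps the pointer and integer ranges free.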

What about all the other values?

The part of the comment that said that if the top two bytes are 0, then the payload is a pointer was lying. Or, okay, over-simplified. JSC reserves specific, invalid, pointer values to denote immediates required by the ECMAScript standard: boolean, undefined & null:

    * False:     0x06
    * True:      0x07
    * Undefined: 0x0a
    * Null:      0x02

These all have the second bit set to make it easy to test whether the value is any of these immediates.

They also represent 2 immediates not required by the standard: ValueEmpty at 0x00, which is used to represent holes in arrays, & ValueDeleted at 0x04, which is used to mark deleted values.

And finally, they also represent pointers into Wasm at 0x03.

So, putting it all together, a complete picture of the bit pattern encodings in JSC is:

    * ValEmpty  {  0000:0000:0000:0000
    * Null      {  0000:0000:0000:0002
    * Wasm      {  0000:0000:0000:0003
    * ValDeltd  {  0000:0000:0000:0004
    * False     {  0000:0000:0000:0006
    * True      {  0000:0000:0000:0007
    * Undefined {  0000:0000:0000:000a
    * Pointer   {  0000:PPPP:PPPP:PPPP
    *            / 0001:****:****:****
    * Double    {         ...
    *            \ FFFE:****:****:****
    * Integer   {  FFFF:0000:IIII:IIII
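As a rough illustration of how this table could be read back, the Python sketch below classifies a 64-bit pattern by its top 16 bits and then by the reserved immediate values. It is a simplification written for this article's table only: the `classify` function and the `IMMEDIATES` dictionary are hypothetical, and a real engine would use bit masks rather than a dictionary lookup.

```python
# Reserved low values from the table above (names follow the article, not JSC's source).
IMMEDIATES = {
    0x00: "ValueEmpty",
    0x02: "Null",
    0x03: "Wasm",
    0x04: "ValueDeleted",
    0x06: "False",
    0x07: "True",
    0x0A: "Undefined",
}


def classify(bits: int) -> str:
    """Name the kind of value a 64-bit pattern encodes under the scheme above."""
    tag = (bits >> 48) & 0xFFFF
    if tag == 0xFFFF:
        return "Integer"
    if 0x0001 <= tag <= 0xFFFE:
        return "Double"
    # The tag is 0x0000 here: either a reserved immediate or a real pointer.
    return IMMEDIATES.get(bits, "Pointer")


print(classify(0x0000_0000_0000_0007))  # prints: True
print(classify(0xFFFF_0000_0000_002A))  # prints: Integer
print(classify(0x3FF1_0000_0000_0000))  # prints: Double
print(classify(0x0000_7F80_1234_5678))  # prints: Pointer
```

The four immediates required by the standard (0x02, 0x06, 0x07, 0x0a) all have the 0x2 bit set, which is the cheap test the text refers to.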

Take-Aways

- The floating point spec leaves a lot of room for NaN payloads. It does this intentionally.

- What are these payloads used for in real life? Mostly, I don’t know what they’re used for. If you know of other real world uses, I’d love to hear from you.

- One use is NaN-boxing, which is where you stick all the other non-floating point values in a language + their type information into the payload of NaNs. It’s a beautiful hack.

Appendix: to NaNbox or not to NaNbox

Looking at this implementation raises the question: is NaN-boxing a good idea or a bizarro hack? As someone who isn’t implementing or maintaining a dynamically typed language, I’m not well placed to answer that question. There are a lot of different approaches which surely all have nuanced tradeoffs that show up depending on the use-cases of your language. With that caveat, here’s a rough sketch of what some pros & cons are. Pros: saves memory, all values fit in registers, bit masks are fast to apply. Cons: have to box & unbox almost all values, implementation becomes harder, and validation bugs can be serious security vulnerabilities.

For a better discussion of NaN-boxing tradeoffs from someone who does implement & maintain a dynamically typed language check out this article.

Apart from performance, there is this writeup and this other writeup of vulnerabilities discovered in JSC. Whether these vulnerabilities would have been preventable if JSC had used a different approach for storing type information is a moot point, but there is at least one vulnerability that seems like it would have been prevented:

This way we control all 8 bytes of the structure, but there are other limitations (Some floating-point normalization crap does not allow for truly arbitrary values to be written. Otherwise, you would be able to craft a CellTag and set pointer to an arbitrary value, that would be horrible. Interestingly, before it did allow that, which is what the very first Vita WebKit exploit used! CVE-2010-1807).

If you want to know way more about JSC’s memory model there is also this very in depth article.

New world record for walking barefoot on Lego

We can all agree that stepping on a Lego brick by accident is one of the worst sensations in the world (and stepping on one deliberately is no better). Well, like just about every “discipline” in this world, there is a world record for walking barefoot on Lego, registered with Guinness World Records. And somebody has just broken it.

When a world record rhymes with pain

Tyler, a member of the YouTube channel Dude Perfect, walked 44.7 meters across Lego bricks barefoot for the show “Absurd Record”. The record was, of course, validated by Guinness World Records, who were, of course, also on site. In the video below, you will see a brave man in real pain. You have our full respect, Tyler.

As a side note, the previous best was 24.9 meters. For those who are really bad at mental arithmetic, Tyler still managed almost 20 meters more than his predecessor…

The post “New world record for walking barefoot on Lego” first appeared on GOLEM13.FR.

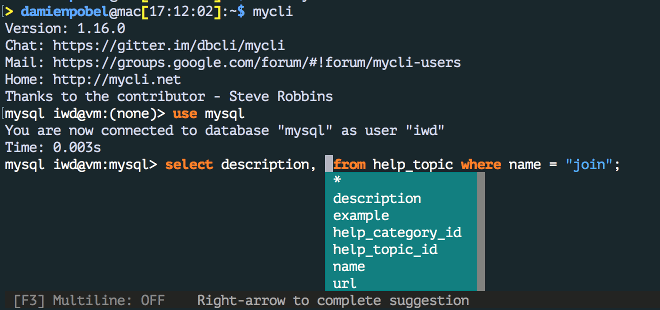

Damien Pobel: mycli, a command-line client for MySQL (and compatible alternatives)

Via the Journal du Hacker, I came across “Config pour ne plus taper ses mots de passe MySQL et plus encore avec les Options file”, which is a reminder that the MySQL command-line client supports a configuration file (~/.my.cnf) that makes life easier if you always connect to the same machines and databases. That post also shows the pager option of this configuration file which, as its name suggests, lets you configure a pager (more, less, neovim, ... or whatever you like); the author uses it to add color to the MySQL / MariaDB client with Generic Colouriser. In short, these are two very good tips for users of mysql on the command line, of whom I am one.

It so happens that, at work, I also use a virtual machine. So to access MySQL, I first have to open a shell over ssh and then launch the client. Of course, a simple alias makes all of that faster, but I like having my development tools available locally. While looking for a way to install the MySQL client (and only the client) on my Mac, I came across mycli and, I might as well say it right away, I gave up on installing the official client :) mycli is a MySQL client (compatible with MariaDB and Percona) that comes with a whole set of really handy, well-documented features, such as syntax highlighting for queries, multi-line or single-line editing, a few handy commands and, above all, smart completion!

It has its own configuration in ~/.myclirc (which it generates, with comments, on first launch; another good idea), but the best part is that it also uses ~/.my.cnf, the official client's configuration file, so the two tips mentioned above work perfectly and directly in this tool!

In short, for now my .myclirc is the default one (apart from the fruity theme) and my .my.cnf looks like this:

    [client]
    user = MONUSER
    password = PASSWORD
    host = vm.local
    # ~/.grcat/mysql comes from https://github.com/nitso/colour-mysql-console
    pager = 'grcat ~/.grcat/mysql|most'

I use most as my pager, but I am still undecided between it and less, which has an option to skip paging when the output is short enough, or Neovim, which I am really used to.

One last point: you don't use MySQL (or MariaDB or Percona)? No problem, the author has written the same kind of client for other database servers.

Original post by Damien Pobel.


US Border Patrol Hasn’t Validated E-Passport Data For Years

Contactless bank cards and data confidentiality

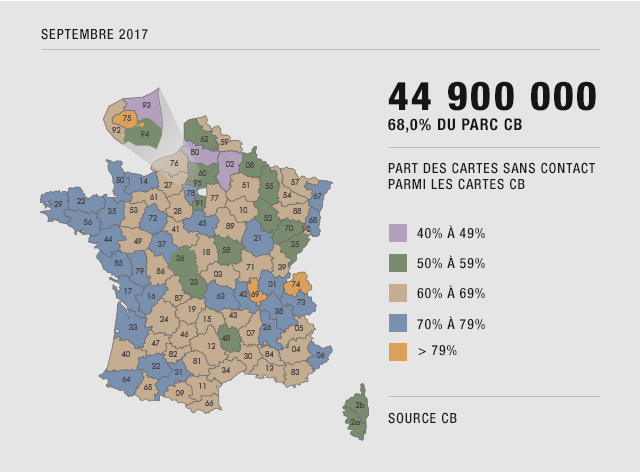

Contactless payment is a feature available on more than 60% of the bank cards in circulation. Since banking data is sensitive, it should naturally be protected.

Is that really the case?

The growth of contactless payment

This feature appeared in France around 2012 and has kept growing ever since. According to the GIE Cartes Bancaires, 44.9 million contactless bank cards were in circulation in September 2017, or 68% of all French cards.

In its 2016 report (PDF, page 11), the same GIE states that 605 million payments were made using contactless. That figure may seem huge, but its growth is even more striking: +158% compared with 2015, and the trend shows no sign of slowing.

Contactless payment is meant for small, “everyday” transactions; the amount per transaction has been capped at €30 since October 2017.

How contactless payment works

The principle is fairly simple: the holder of a contactless card wants to pay for a purchase (under €30, then), holds the card a few centimeters from the contactless payment terminal and, “bam”, it's paid.

Contactless payment is based on NFC, or Near Field Communication, using a chip and a circuit that acts as an antenna, both embedded in the bank card.

NFC is characterized by its communication range, which does not exceed 10 cm with conventional equipment. Contactless cards operate in the high-frequency band (13.56 MHz) and can use encryption and authentication protocols. The Navigo pass, recent driving licenses and some recent identity documents use NFC, for example.

If the technical side interests you, I encourage you to read the ISO 14443A standard and ISO 7816, part 4, in detail.

Contactless payment and personal data

To sum up the problem simply: there is no authentication phase and no full encryption of the data. Put plainly, this means that fairly sensitive information is travelling around, unencrypted, on a piece of plastic.

Plenty of demonstrations exist here and there, and you can also find mobile apps that let you retrieve the unencrypted information (your phone must be NFC-compatible to do this).

With conventional equipment, you need to be within a few centimeters of the contactless card to do this, which greatly limits the attack potential and effectively rules out “industrializing” such attacks.

However, with more precise, more powerful and more expensive equipment, card data can be read from up to 1.5 meters away, and even further with specialized and still more expensive equipment (a range of about 15 meters has been mentioned for this kind of gear). An attacker with this type of equipment can collect a fairly impressive list of cards, since they are increasingly common… a problem, isn't it?

In 2012, the situation was more alarming than it is today, since it was possible to retrieve the cardholder's name, card number, expiry date, transaction history and the card's magnetic stripe data.

In 2017… it is still possible to retrieve the card number, its expiry date and, sometimes, the transaction history, but we will come back to that.

What does the CNIL say about it?

I asked the CNIL whether a bank card number should be considered personal data; no answer so far. I will update this article when the answer arrives.

If the bank card number is personal data, then the fact that it is available, and stored unencrypted, seems problematic to me; it does not really seem to comply with the French Data Protection Act (loi informatique et libertés).

In 2013, the CNIL issued recommendations to banking institutions, reminding them for example of articles 32 and 38 of that act. Cardholders must, among other things, be informed that their card is contactless and must be able to refuse the technology.

Contactless payments are popular with users because they are simple: you just hold your card over the reader. They are preferred to cash, and some people even claim that “cash will end up disappearing within a few years”. Their massive use means that your bank knows you better: it can now see the payments that used to escape it when they were made in cash.

As early as 2012, the CNIL also raised the alarm about the data transmitted unencrypted by the cards in circulation at the time. As a result, it is no longer possible to read the cardholder's name, nor, in principle, to retrieve the transaction history… though this last point is debatable, given that just last week I managed to do so with a card issued in 2014.

As explained earlier, it is still possible today to retrieve the card number and its expiry date.

In a targeted attack against an individual, obtaining their name is not difficult. The CVV (the three digits printed on the back of the card) can be brute-forced: there are only 1,000 possible combinations, from 000 to 999.

Although the CNIL has noted improvements, it is not reassured. In 2013, it urged players in the banking sector to upgrade their security measures to ensure that banking data cannot be collected or exploited by third parties.

It hopes the sector will follow the various recommendations that have been issued [PDF, page 3], notably by the Observatoire de la Sécurité des Cartes de Paiement, regarding the protection and encryption of exchanges. The first recommendations date back to 2007 [PDF], but unfortunately, ten years later, very little has been done to effectively protect the banking data held on contactless cards.

While techniques exist to restrict or even prevent the retrieval of banking data over contactless, the result is still unsatisfactory: the card number is still stored unencrypted and easily readable, and the solutions guarantee neither an adequate level of protection nor permanent protection.

One solution is to “lock” your card in a sleeve that blocks the frequencies used by NFC. As long as the card is in its sleeve, there is no risk… but to pay, you have to take the card out, so the problem remains.

The other, more “direct” solution is to physically punch a hole in the card at the right spot to disable the card's circuit. Be careful, though: your bank card is generally not your property; you rent it from your bank, and damaging your bank's property is normally prohibited.

DCP or not DCP?

I mentioned it earlier: does the bank card number alone constitute personal data (donnée à caractère personnel, or DCP)?

It may seem like a detail, but I think it is actually quite important. If it is indeed personal data, then the bank card number must, like any other personal data, benefit from an adequate level of protection, a requirement that is not currently met.

If you have the answer, feel free to contact me or point me to some references.

Our experience designing and building gRPC services

This is the final post in a series on how we scaled Bugsnag’s new Releases dashboard backend pipeline using gRPC. Read our first blog on why we selected gRPC for our microservices architecture, and our second blog on how we package generated code from protobufs into libraries to easily update our services.

The Bugsnag engineering team recently worked on massively scaling our backend data-processing pipeline to support the launch of the Releases dashboard. The Releases dashboard (for comparing releases to improve the health of applications) included support for sessions which would mean a significant increase in the amount of data processed in our backend. Because of this and the corresponding increase in call load, we implemented gRPC as our microservices communications framework. It allows our microservices to talk to each other in a more robust and performant way.

In this post, we’ll walk through our experiences building out gRPC and some of the gotchas and development tips we’ve learned along the way.

Our experience with gRPC

For the Releases dashboard, we needed to implement gRPC in Ruby, Java, Node, and Go, because each service in our data-processing pipeline is built in the language best suited to its job. Our initial investigation found that the client libraries vary in maturity. Most of them are serviceable, but not all are feature complete. However, these libraries are progressing fast and were mature enough for our needs. Nevertheless, it’s worth evaluating their current state before jumping in.

Designing a gRPC service

In terms of the API specification, the design process was much smoother than designing a RESTful interface. The endpoints were quickly defined, written, and understood, all self-contained in a single protobuf file. However, there are very few rules on what these endpoints could be, which is generally the case with RPC. We needed to be strict in defining the role of the microservice and ensuring the endpoints reflected this role. We focused on making sure each endpoint was heavily commented, which helped our cross-continent teams avoid too many integration problems.

As an aside, it’s important to write documentation and communicate common use cases involving these endpoints. This is typically outside the scope of the protobuf file, but has a large impact on what the endpoints should be.

Developing a gRPC service

Implementing gRPC was initially a rough road. Our team was unfamiliar with best practices so we had to spend more time than we wanted on building tools and testing our servers. At the time, there was a lack of good tutorials and examples for us to copy from, so the first servers created were based on trial and error. gRPC could benefit from some clear documentation and examples about the concepts it uses like stubs and channels.

This page is a good start at explaining the basics; however, we would have felt more confident if we knew more. For example, we wanted to know how channels handle connection failure without having to read the client’s source code. We also found that most of the configurable options were only documented in source code, and they took a lot of time and effort to find. We were never really sure whether an option had taken effect, which meant we ended up testing most options we changed. The barrier to entry for developing and testing gRPC was quite high. More intuitive documentation and tools are essential if gRPC is here to stay, and there do seem to be more and more examples coming out.

Handling opinionated languages

There were a few gotchas along the way, including getting familiar with how protobufs handle default values. For example, in the protobuf format, strings are primitive and have a default value of "". Java developers identify null as the default value for strings. But beware, setting null for primitive protobuf fields like strings will cause runtime exceptions in Java.

The client libraries try to protect against invalid field values before transmitting and assume you are trying to set null to a primitive field. These safeguards are present to protect against conflicting opinions between different language applications e.g. "", nil, and null for strings. This led us to create wrappers for these messages to avoid confusion once you were in the application’s native language. On the whole, we’ve had very little need to dive into and debug the messages themselves. Client library implementations are very reliable at encoding and decoding messages.
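As a rough idea of what such a wrapper can look like, here is a Python sketch. It is not Bugsnag's code and it does not use the protobuf library: `GetErrorClassRequestWire` is a hypothetical stand-in for a generated message class whose string field defaults to "", and `to_wire` / `from_wire` are invented names for the translation layer between the application's "no value" (None here) and the protobuf default.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GetErrorClassRequestWire:
    """Hypothetical stand-in for a generated message: string fields are never None."""
    error_id: str = ""


def to_wire(error_id: Optional[str]) -> GetErrorClassRequestWire:
    """Map the application's None onto the protobuf default before building the message."""
    return GetErrorClassRequestWire(error_id=error_id if error_id is not None else "")


def from_wire(message: GetErrorClassRequestWire) -> Optional[str]:
    """Map the protobuf default back to None on the way into application code."""
    return message.error_id or None


print(to_wire(None))                # the wire object carries "" rather than None
print(from_wire(to_wire(None)))     # the application sees None again
print(from_wire(to_wire("abc123"))) # real values pass through unchanged
```

One consequence of this design is that an explicitly empty string and a missing value become indistinguishable, which is the same trade-off the default-value behaviour described above already imposes.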

How to debug a gRPC service

When we started using gRPC, the testing tools available were limited. Developers want to cURL their endpoints, but with gRPC, equivalents to familiar tools like Postman either don’t exist or are not very mature. These tools need to encode and decode messages using the appropriate protobuf file, and to support HTTP/2. You can actually cURL a gRPC endpoint directly, but this is far from a streamlined process. Some useful tools we came across were:

- protoc-gen-lint, linting for protobufs - This tool checks for any deviations from Google’s Protocol Buffer style guide. We use this as part of our build process to enforce coding standards and catch basic errors. It’s good for spotting invalid message structures and typos.

- grpcc, CLI for a gRPC Server - This uses Node REPL to interact with a gRPC service via its protobuf file, and is very useful for quickly testing an endpoint. It’s a little rough around the edges, but looks promising for a standalone tool to hit endpoints.

- omgrpc, GUI client - Described as Postman for gRPC endpoints, this tool provides a visual way to interact with your gRPC services.

- awesome gRPC - A great collection of resources currently available for gRPC

Let me cURL my gRPC endpoint

In addition to these tools, we managed to re-enable our existing REST tools by using Envoy and JSON transcoding. This works by sending HTTP/1.1 requests with a JSON payload to an Envoy proxy configured as a gRPC-JSON transcoder. Envoy will translate the request into the corresponding gRPC call, with the response message translated back into JSON.

Step 1: Annotate the service protobuf file with google APIs. This is an example of a service with an endpoint that has been annotated so it can be invoked with a POST request to /errorclass.

    syntax = "proto3";

    import "google/api/annotations.proto";

    package bugsnag.error_service;

    service Errors {
      rpc GetErrorClass (GetErrorClassRequest) returns (GetErrorClassResponse) {
        option (google.api.http) = {
          post: "/errorclass"
          body: "*"
        };
      }
    }

    message GetErrorClassRequest {
      string error_id = 1;
    }

    message GetErrorClassResponse {
      string error_class = 1;
    }

Step 2: Generate a proto descriptor set that describes the gRPC service. This requires the protocol compiler, protoc, to be installed (how to install it can be found here). Follow this guide on generating a proto descriptor set with protoc.

Step 3: Run Envoy with a JSON transcoder, configured to use the proto descriptor set. Here is an example of an Envoy configuration file with the gRPC server listening on port 4000.

    {
      "listeners": [
        {
          "address": "tcp://0.0.0.0:3000",
          "filters": [
            {
              "type": "read",
              "name": "http_connection_manager",
              "config": {
                "codec_type": "auto",
                "stat_prefix": "grpc.error-service",
                "route_config": {
                  "virtual_hosts": [
                    {
                      "name": "grpc",
                      "domains": ["*"],
                      "routes": [
                        {
                          "timeout_ms": 1000,
                          "prefix": "/",
                          "cluster": "grpc-cluster"
                        }
                      ]
                    }
                  ]
                },
                "filters": [
                  {
                    "type": "both",
                    "name": "grpc_json_transcoder",
                    "config": {
                      "proto_descriptor": "/path/to/proto-descriptors.pb",
                      "services": ["bugsnag.error_service"],
                      "print_options": {
                        "add_whitespace": false,
                        "always_print_primitive_fields": true,
                        "always_print_enums_as_ints": false,
                        "preserve_proto_field_names": false
                      }
                    }
                  },
                  {
                    "type": "decoder",
                    "name": "router",
                    "config": {}
                  }
                ]
              }
            }
          ]
        }
      ],
      "admin": {
        "access_log_path": "/var/log/envoy/admin_access.log",
        "address": "tcp://0.0.0.0:9901"
      },
      "cluster_manager": {
        "clusters": [
          {
            "name": "grpc-cluster",
            "connect_timeout_ms": 250,
            "type": "strict_dns",
            "lb_type": "round_robin",
            "features": "http2",
            "hosts": [
              {
                "url": "tcp://docker.for.mac.localhost:4000"
              }
            ]
          }
        ]
      }
    }

Step 4: cURL the gRPC service via the proxy. In this example, we set up the proxy to listen to port 3000.

    > curl -H "Accept: application/json" \
        -X POST -d '{"error_id":"587826d70000000000000001"}' \
        http://localhost:3000/errorclass
    '{"error_class":"Custom Runtime Exception"}'
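The same call can be made from a script instead of cURL. The sketch below uses only Python's standard library and assumes the proxy from the example is listening on localhost:3000; the function name `build_error_class_request` is made up, and the explicit Content-Type header is an assumption that the cURL command above does not include.

```python
import json
import urllib.request


def build_error_class_request(
    error_id: str, base_url: str = "http://localhost:3000"
) -> urllib.request.Request:
    """Build (but do not send) the JSON POST that the transcoding proxy would receive."""
    body = json.dumps({"error_id": error_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/errorclass",
        data=body,
        method="POST",
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",  # assumption: not set in the cURL example
        },
    )


request = build_error_class_request("587826d70000000000000001")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually send it (requires the proxy and the gRPC service to be running):
# with urllib.request.urlopen(request, timeout=1) as response:
#     print(json.loads(response.read()))
```

Building the request separately from sending it makes it easy to inspect what will go over the wire before pointing the script at a real proxy.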

Although this technique can be very useful, it does require us to “muddy up” our protobuf files with additional dependencies, and manage the Envoy configurations to talk to these services. To streamline the process, we scripted the steps and ran an Envoy instance inside a Docker container, taking a protobuf file as a parameter. This allowed us to quickly set a JSON transcoding proxy for any gRPC services in seconds.

Running the gRPC Ruby client library on Alpine

We did encounter some trouble running the Ruby version of a gRPC client. When we came to build the application’s container, we got the error:

LoadError: Error relocating /app/vendor/bundle/ruby/2.4.0/gems/grpc-1.4.1-x86_64-linux/src/ruby/lib/grpc/2.4/grpc_c.so: __strncpy_chk: symbol not found - /app/vendor/bundle/ruby/2.4.0/gems/grpc-1.4.1-x86_64-linux/src/ruby/lib/grpc/2.4/grpc_c.so

Most gRPC client libraries are written on top of a shared core library, written in C. The issue was caused by using an Alpine version of Ruby with a precompiled version of the gRPC library that requires glibc. We solved it by setting BUNDLE_FORCE_RUBY_PLATFORM=1 in the environment when running bundle install, which builds the gems from source rather than using the precompiled version.

Final thoughts

Rolling this out into production, we immediately observed latency improvements. Once the initial connection was made, transport costs were on the order of microseconds and effectively negligible compared to the call itself. This gave us confidence to ramp up to heavier loads which were handled with ease.

Now that we’ve streamlined our development process and made our deployments resilient using Envoy, we can roll out new scalable gRPC communication links or upgrade existing ones quickly and efficiently. Load balancing did provide us with an interesting problem, and you can read about it here.

Chrome's WebUSB Feature Leaves Some Yubikeys Vulnerable to Attack

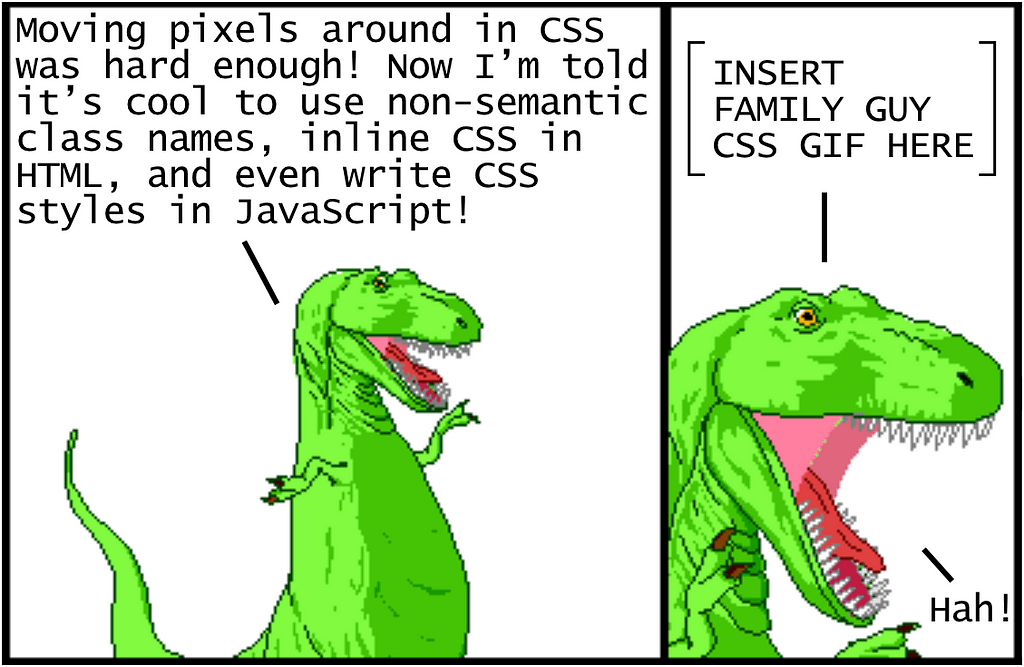

Modern CSS Explained For Dinosaurs

CSS is strangely considered both one of the easiest and one of the hardest languages to learn as a web developer. It’s certainly easy enough to get started with it — you define style properties and values to apply to specific elements, and…that’s pretty much all you need to get going! However, it gets tangled and complicated to organize CSS in a meaningful way for larger projects. Changing any line of CSS to style an element on one page often leads to unintended changes for elements on other pages.

In order to deal with the inherent complexity of CSS, all sorts of different best practices have been established. The problem is that there isn’t any strong consensus on which best practices are in fact the best, and many of them seem to completely contradict each other. If you’re trying to learn CSS for the first time, this can be disorienting to say the least.

The goal of this article is to provide a historical context of how CSS approaches and tooling have evolved to what they are today in 2018. By understanding this history, it will be easier to understand each approach and how to use them to your benefit. Let’s get started!

Update: I made a new video course version of this article, which goes over the material with greater depth, check it out here:

https://firstclass.actualize.co/p/modern-css-explained-for-dinosaurs

Using CSS for basic styling

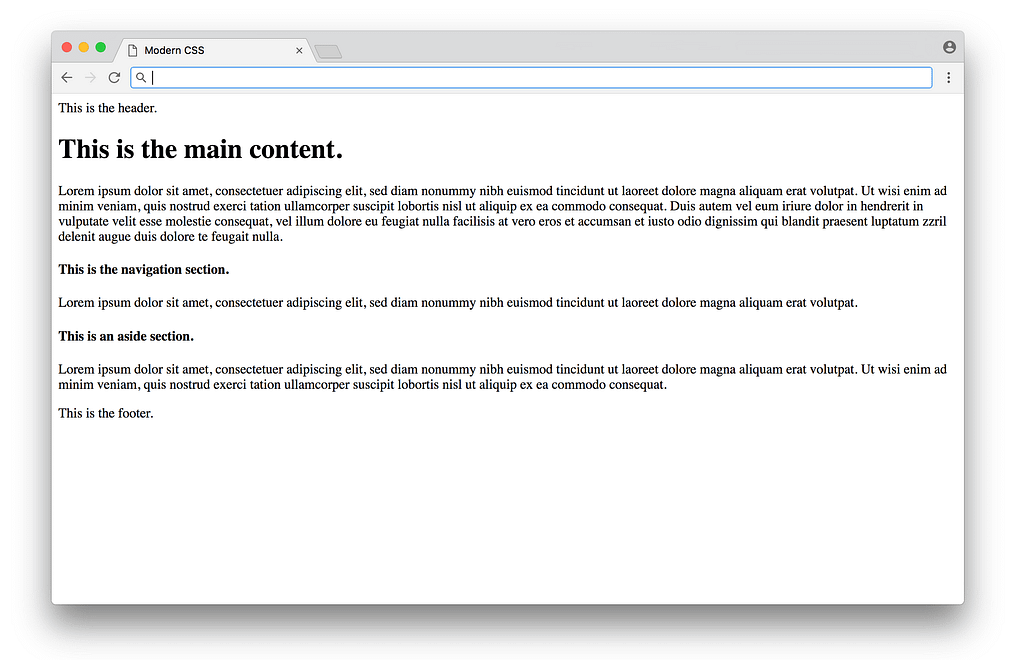

Let’s start with a basic website using just a simple index.html file that links to a separate index.css file:

    <!DOCTYPE html>
    <html lang="en">
    <head>
      <meta charset="UTF-8">
      <title>Modern CSS</title>
      <link rel="stylesheet" href="index.css">
    </head>
    <body>
      <header>This is the header.</header>
      <main>
        <h1>This is the main content.</h1>
        <p>...</p>
      </main>
      <nav>
        <h4>This is the navigation section.</h4>
        <p>...</p>
      </nav>
      <aside>
        <h4>This is an aside section.</h4>
        <p>...</p>
      </aside>
      <footer>This is the footer.</footer>
    </body>
    </html>

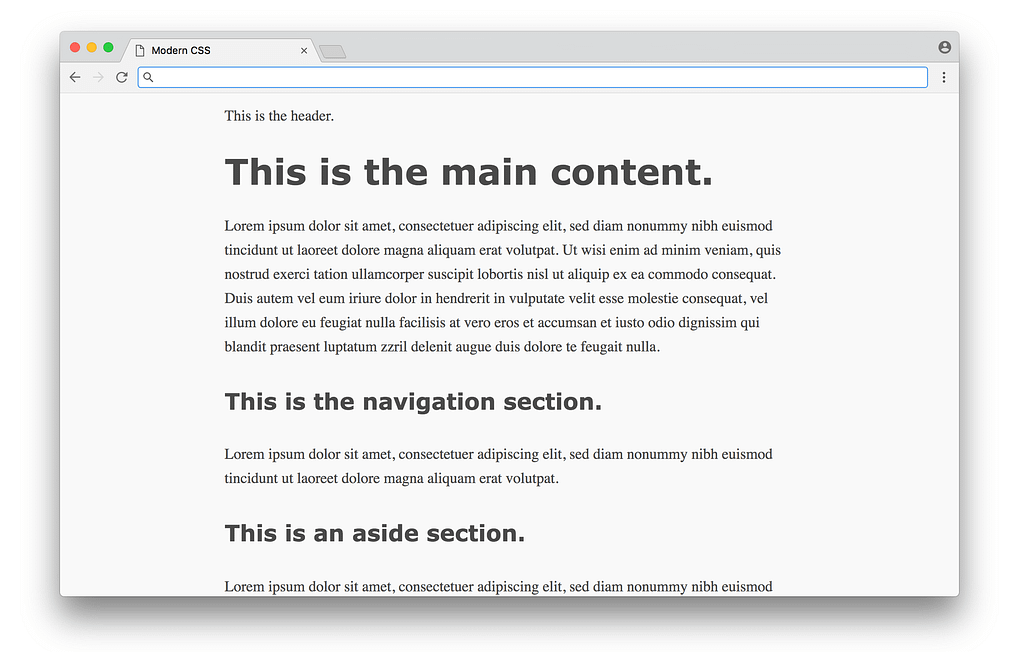

Right now we aren’t using any classes or ids in the HTML, just semantic tags. Without any CSS, the website looks like this (using placeholder text):

Functional, but not very pretty. We can add CSS to improve the basic typography in index.css:

    /* BASIC TYPOGRAPHY */
    /* from https://github.com/oxalorg/sakura */
    html {
      font-size: 62.5%;
      font-family: serif;
    }

    body {
      font-size: 1.8rem;
      line-height: 1.618;
      max-width: 38em;
      margin: auto;
      color: #4a4a4a;
      background-color: #f9f9f9;
      padding: 13px;
    }

    @media (max-width: 684px) {
      body {
        font-size: 1.53rem;
      }
    }

    @media (max-width: 382px) {
      body {
        font-size: 1.35rem;
      }
    }

    h1, h2, h3, h4, h5, h6 {
      line-height: 1.1;
      font-family: Verdana, Geneva, sans-serif;
      font-weight: 700;
      overflow-wrap: break-word;
      word-wrap: break-word;
      -ms-word-break: break-all;
      word-break: break-word;
      -ms-hyphens: auto;
      -moz-hyphens: auto;
      -webkit-hyphens: auto;
      hyphens: auto;
    }

    h1 {
      font-size: 2.35em;
    }

    h2 {
      font-size: 2em;
    }

    h3 {
      font-size: 1.75em;
    }

    h4 {
      font-size: 1.5em;
    }

    h5 {
      font-size: 1.25em;
    }

    h6 {
      font-size: 1em;
    }

Here most of the CSS is styling the typography (fonts with sizes, line height, etc.), with some styling for the colors and a centered layout. You’d have to study design to know good values to choose for each of these properties (these styles are from sakura.css), but the CSS itself that’s being applied here isn’t too complicated to read. The result looks like this:

What a difference! This is the promise of CSS — a simple way to add styles to a document, without requiring programming or complex logic. Unfortunately, things start to get hairier when we use CSS for more than just typography and colors (which we’ll tackle next).
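One detail in the typography rules above is worth spelling out: the relative units. The sketch below annotates the arithmetic, assuming the common browser default font size of 16px (an assumption, since users can change it). It repeats only the relevant declarations and is not a replacement for the full stylesheet.

```css
/* Assumes the browser default font size is 16px */
html {
  font-size: 62.5%;   /* 62.5% of 16px = 10px, so 1rem = 10px */
}

body {
  font-size: 1.8rem;  /* 1.8 x 10px = 18px */
  max-width: 38em;    /* em is relative to body's own font size: 38 x 18px = 684px */
}

@media (max-width: 684px) {
  body {
    font-size: 1.53rem; /* 1.53 x 10px = 15.3px */
  }
}
```

Under that assumption, the first media query breakpoint (684px) lines up with the computed maximum width of the body, so the text shrinks once the viewport is narrower than the column.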

Using CSS for layout

In the 1990s, before CSS gained wide adoption, there weren’t a lot of options to lay out content on the page. HTML was originally designed as a language to create plain documents, not dynamic websites with sidebars, columns, etc. In those early days, layout was often done using HTML tables: the entire webpage would sit within a table, which could be used to organize the content in rows and columns. This approach worked, but the downside was the tight coupling of content and presentation. If you wanted to change the layout of a site, you had to rewrite significant amounts of HTML.

Once CSS entered the scene, there was a strong push to keep content (written in the HTML) separate from presentation (written in the CSS). So people found ways to move all layout code out of HTML (no more tables) into CSS. It’s important to note that like HTML, CSS wasn’t really designed to lay out content on a page either, so early attempts at this separation of concerns were difficult to achieve gracefully.

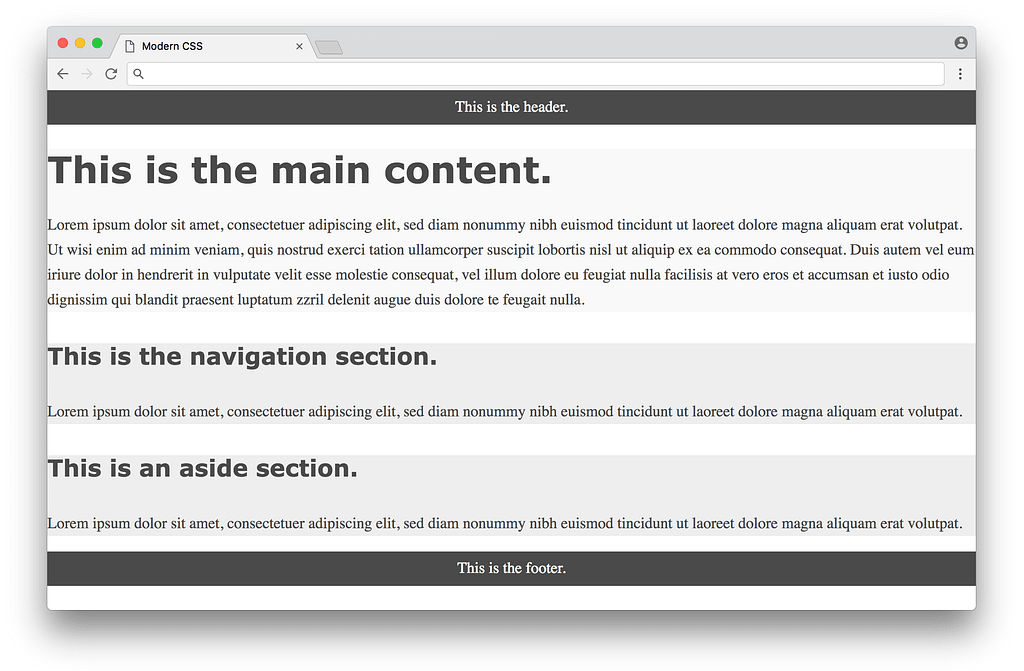

Let’s take a look at how this works in practice with our above example. Before we define any CSS layout, we’ll first reset any margins and paddings (which affect layout calculations) and give each section a distinct color (not to make it pretty, but to make each section stand out visually when testing different layouts).

/* RESET LAYOUT AND ADD COLORS */

body {
  margin: 0;
  padding: 0;
  max-width: inherit;
  background: #fff;
  color: #4a4a4a;
}

header, footer {
  font-size: large;
  text-align: center;
  padding: 0.3em 0;
  background-color: #4a4a4a;
  color: #f9f9f9;
}

nav {
  background: #eee;
}

main {
  background: #f9f9f9;
}

aside {
  background: #eee;
}

Now the website temporarily looks like:

Now we’re ready to use CSS to lay out the content on the page. We’ll look at three different approaches in chronological order, starting with the classic float-based layouts.

Float-based layout

The CSS float property was originally introduced to float an image inside a column of text on the left or right (something you often see in newspapers). Web developers in the early 2000s took advantage of the fact that you could float not just images, but any element, meaning you could create the illusion of rows and columns by floating entire divs of content. But again, floats weren’t designed for this purpose, so creating this illusion was difficult to pull off in a consistent fashion.
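To make the original purpose of float concrete, here is a minimal sketch of an image floated inside a column of text. The class names `.article-photo` and `.article-footer` are hypothetical and do not appear in the example site.

```css
/* Hypothetical class names, for illustration only */
.article-photo {
  float: left;            /* image sits on the left; following text wraps on its right */
  margin: 0 1em 0.5em 0;  /* breathing room between the image and the wrapped text */
}

/* An element that should start below any floated content can use clear */
.article-footer {
  clear: both;
}
```

The float-based page layouts described next stretch this same mechanism from a single image to entire sections of the page.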

In 2006, A List Apart published the popular article In Search of the Holy Grail, which outlined a detailed and thorough approach to creating what was known as the Holy Grail layout — a header, three columns and a footer. It’s pretty crazy to think that what sounds like a fairly straightforward layout would be referred to as the Holy Grail, but that was indeed how hard it was to create consistent layout at the time using pure CSS.

Below is a float-based layout for our example based on the technique described in that article:

/* FLOAT-BASED LAYOUT */

body {

padding-left: 200px;

padding-right: 190px;

min-width: 240px;

}header, footer {

margin-left: -200px;

margin-right: -190px;

}main, nav, aside {

position: relative;

float: left;

}main {

padding: 0 20px;

width: 100%;

}nav {

width: 180px;

padding: 0 10px;

right: 240px;

margin-left: -100%;

}aside {

width: 130px;

padding: 0 10px;

margin-right: -100%;

}footer {

clear: both;

}* html nav {

left: 150px;

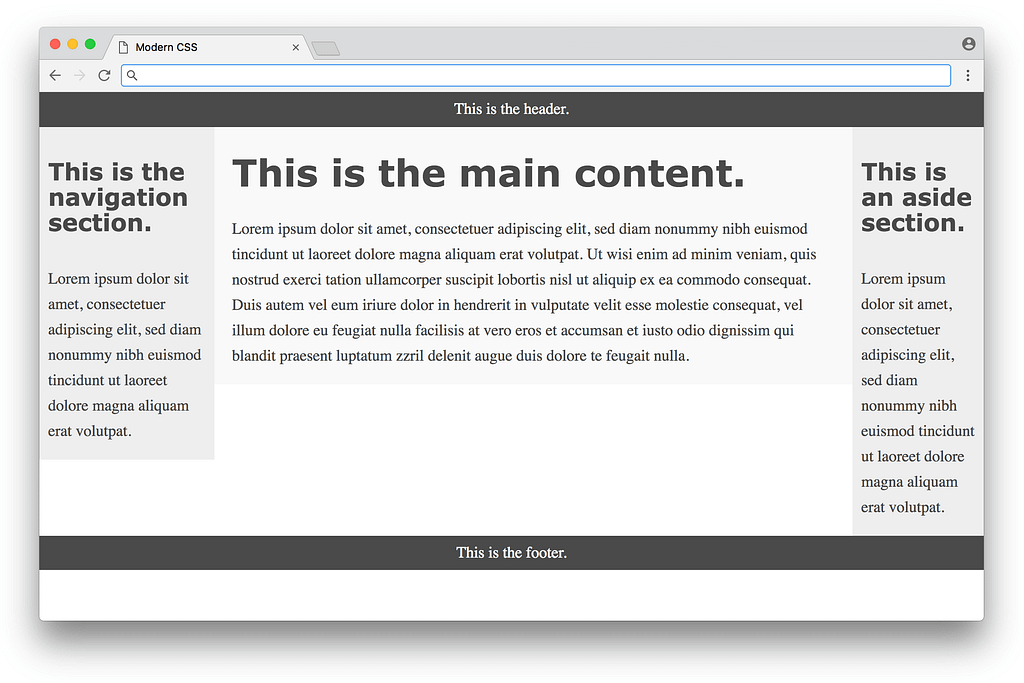

}Looking at the CSS, you can see there are quite a few hacks necessary to get it to work (negative margins, the clear: both property, hard-coded width calculations, etc.) — the article does a good job explaining the reasoning for each in detail. Below is what the result looks like:

This is nice, but you can see from the colors that the three columns are not equal in height, and the page doesn’t fill the height of the screen. These issues are inherent with a float-based approach. All a float can do is place content to the left or right of a section — the CSS has no way to infer the heights of the content in the other sections. This problem had no straightforward solution until many years later, with a flexbox-based layout.

Flexbox-based layout

Flexbox (the CSS Flexible Box Layout module) was first proposed in 2009, but didn’t get widespread browser adoption until around 2015. Flexbox was designed to define how space is distributed across a single column or row, which makes it a better candidate for defining layout than floats. This meant that after about a decade of float-based layouts, web developers were finally able to use CSS for layout without the hacks that floats required.

Below is a flexbox-based layout for our example based on the technique described on the site Solved by Flexbox (a popular resource showcasing different flexbox examples). Note that in order to make flexbox work, we need to add an extra wrapper div around the three columns in the HTML:

<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Modern CSS</title>
  <link rel="stylesheet" href="index.css">
</head>
<body>
  <header>This is the header.</header>
  <div class="container">
    <main>
      <h1>This is the main content.</h1>
      <p>...</p>
    </main>
    <nav>
      <h4>This is the navigation section.</h4>
      <p>...</p>
    </nav>
    <aside>
      <h4>This is an aside section.</h4>
      <p>...</p>
    </aside>
  </div>
  <footer>This is the footer.</footer>
</body>
</html>

And here’s the flexbox code in the CSS:

/* FLEXBOX-BASED LAYOUT */

body {
  min-height: 100vh;
  display: flex;
  flex-direction: column;
}

.container {
  display: flex;
  flex: 1;
}

main {
  flex: 1;
  padding: 0 20px;
}

nav {
  flex: 0 0 180px;
  padding: 0 10px;
  order: -1;
}

aside {
  flex: 0 0 130px;
  padding: 0 10px;
}

That is way, way more compact compared to the float-based layout approach! The flexbox properties and values are a bit confusing at first glance, but it eliminates the need for a lot of the hacks like negative margins that were necessary with float-based layouts — a huge win. Here is what the result looks like:

Much better! The columns are all equal height and take up the full height of the page. In some sense this seems perfect, but there are a couple of minor downsides to this approach. One is browser support — currently every modern browser supports flexbox, but some older browsers never will. Fortunately browser vendors are making a bigger push to end support for these older browsers, making a more consistent development experience for web designers. Another downside is the fact that we needed to add the <div class="container"> to the markup — it would be nice to avoid it. In an ideal world, any CSS layout wouldn’t require changing the HTML markup at all.

The biggest downside though is the code in the CSS itself: flexbox eliminates a lot of the float hacks, but the code isn’t as expressive as it could be for defining layout. It’s hard to read the flexbox CSS and get a visual understanding of how all of the elements will be laid out on the page. This leads to a lot of guessing and checking when writing flexbox-based layouts.
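Part of what makes the flexbox code hard to read is the flex shorthand. As a rough reading aid, the sketch below expands the shorthand values used in the flexbox example into their longhand properties. It is meant as an annotated equivalent, not as a change to the layout.

```css
main {
  /* flex: 1; expands to: */
  flex-grow: 1;      /* take up any leftover space in the row */
  flex-shrink: 1;    /* allowed to shrink when space is tight */
  flex-basis: 0%;    /* start from zero width before leftover space is shared out */
}

nav {
  /* flex: 0 0 180px; expands to: */
  flex-grow: 0;      /* never grow */
  flex-shrink: 0;    /* never shrink */
  flex-basis: 180px; /* fixed starting width */

  order: -1;         /* default order is 0, so -1 moves nav visually before main */
}
```

Read this way, the sidebars are fixed-width columns and main absorbs whatever width is left, while order places the nav first even though it comes after main in the HTML.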

It’s important to note again that flexbox was designed to space elements within a single column or row — it was not designed for an entire page layout! Even though it does a serviceable job (much better than float-based layouts), a different specification was specifically developed to handle layouts with multiple rows and columns. This specification is known as CSS grid.

Grid-based layout

CSS grid was first proposed in 2011 (not too long after the flexbox proposal), but took a long time to gain widespread adoption with browsers. As of early 2018, CSS grid is supported by most modern browsers (a huge improvement over even a year or two ago).

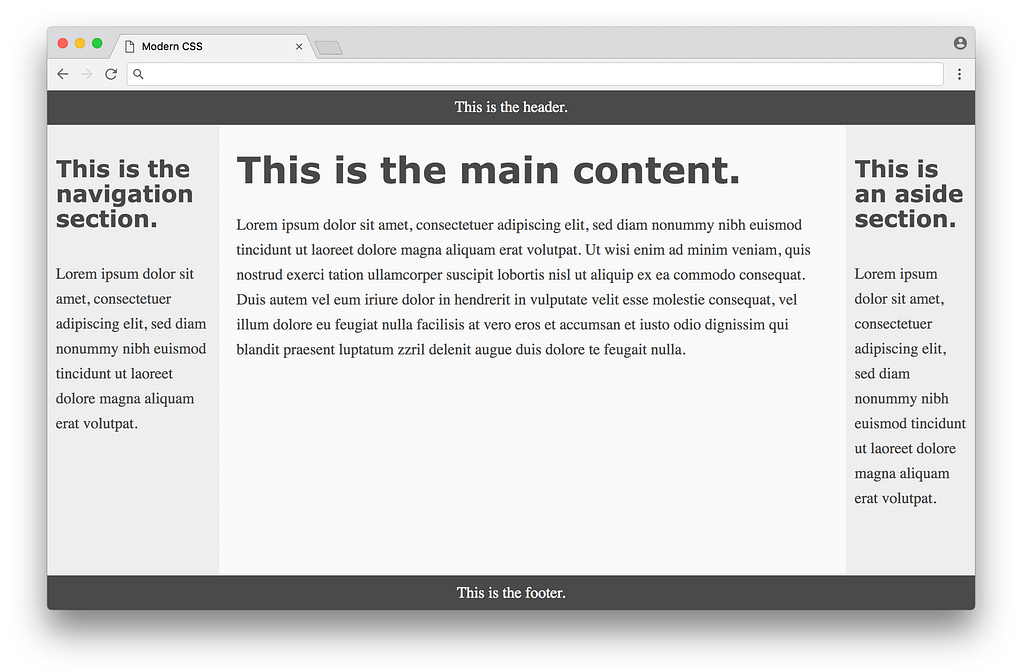

Below is a grid-based layout for our example based on the first method in this CSS-Tricks article. Note that for this example, we can get rid of the <div class="container"> that we had to add for the flexbox-based layout; we can simply use the original HTML without modification. Here’s what the CSS looks like:

/* GRID-BASED LAYOUT */

body {
  display: grid;
  min-height: 100vh;
  grid-template-columns: 200px 1fr 150px;
  grid-template-rows: min-content 1fr min-content;
}

header {
  grid-row: 1;
  grid-column: 1 / 4;
}

nav {
  grid-row: 2;
  grid-column: 1 / 2;
  padding: 0 10px;
}

main {
  grid-row: 2;
  grid-column: 2 / 3;
  padding: 0 20px;
}

aside {
  grid-row: 2;
  grid-column: 3 / 4;
  padding: 0 10px;
}

footer {
  grid-row: 3;
  grid-column: 1 / 4;
}

The result is visually identical to the flexbox-based layout. However, the CSS here is much improved in the sense that it clearly expresses the desired layout. The size and shape of the columns and rows are defined in the body selector, and each item in the grid is defined directly by its position.

One thing that can be confusing is the grid-column property, which defines the start point / end point of the column. It can be confusing because in this example, there are 3 columns, but the numbers range from 1 to 4. It becomes more clear when you look at the picture below:

The first column starts at 1 and ends at 2, the second column starts at 2 and ends at 3, and the third column starts at 3 and ends at 4. The header has a grid-column of 1 / 4 to span the entire page, the nav has a grid-column of 1 / 2 to span the first column, etc.
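Since the numbers refer to grid lines rather than columns, the same placement can be written in several ways. The sketch below shows equivalent forms of the header rule from the example. Only one of them would be used in practice; they are listed together purely for comparison.

```css
/* Three columns are bounded by four grid lines: 1, 2, 3 and 4 */

header {
  grid-column: 1 / 4;        /* shorthand: start line / end line */
}

header {
  grid-column-start: 1;      /* the same placement written as longhand properties */
  grid-column-end: 4;
}

header {
  grid-column: 1 / span 3;   /* start at line 1 and span three column tracks */
}

header {
  grid-column: 1 / -1;       /* -1 counts from the end: the last line of the explicit grid */
}
```

The last form has the practical advantage that it keeps spanning the full width if a column is later added to grid-template-columns.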

Once you get used to the grid syntax, it clearly becomes the ideal way to express layout in CSS. The only real downside to a grid-based layout is browser support, which again has improved tremendously over the past year. It’s hard to overstate the importance of CSS grid as the first real tool in CSS that was actually designed for layout. In some sense, web designers have always had to be very conservative with making creative layouts, since the tools up until now have been fragile, using various hacks and workarounds. Now that CSS grid exists, there is the potential for a new wave of creative layout designs that never would have been possible before — exciting times!

Using a CSS preprocessor for new syntax

So far we’ve covered using CSS for basic styling as well as layout. Now we’ll get into tooling that was created to help improve the experience of working with CSS as a language itself, starting with CSS preprocessors.

A CSS preprocessor allows you to write styles in a different language, which gets converted into CSS that the browser can understand. This was critical back in the day when browsers were very slow to implement new features. The first major CSS preprocessor was Sass, released in 2006. It featured a new concise syntax (indentation instead of braces, no semicolons, etc.) and added advanced features missing from CSS, such as variables, helper functions, and calculations. Here’s what the color section of our earlier example would look like using Sass with variables:

$dark-color: #4a4a4a
$light-color: #f9f9f9
$side-color: #eee

body
  color: $dark-color

header, footer
  background-color: $dark-color
  color: $light-color

main
  background: $light-color

nav, aside
  background: $side-color

Note how reusable variables are defined with the $ symbol, and that braces and semicolons are eliminated, making for a cleaner-looking syntax. The cleaner syntax in Sass is nice, but features like variables were revolutionary at the time, as they opened up new possibilities for writing clean and maintainable CSS.
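For reference, here is roughly the plain CSS that the Sass above would compile to, with each variable replaced by its value. The exact whitespace depends on the compiler's output style, so treat this as an approximation of the generated file rather than a byte-for-byte copy.

```css
body {
  color: #4a4a4a;
}

header, footer {
  background-color: #4a4a4a;
  color: #f9f9f9;
}

main {
  background: #f9f9f9;
}

nav, aside {
  background: #eee;
}
```

The browser only ever sees this output. The variables exist purely at authoring time, which is why changing $dark-color in one place updates every rule that uses it after recompiling.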