On July 30th, we announced our public ModSecurity XSS Evasion Challenge. This blog post will provide an overview of the challenge and results.

Value of Community Testing

First of all, I would like to thank everyone who participated in the challenge. All told, we had more than 730 participants (based on unique IP addresses), which is a tremendous turnout. This type of community testing has helped to both validate the strengths and expose the weaknesses of the XSS blacklist filter protections of the OWASP ModSecurity Core Rule Set Project. The end result of this challenge is that the XSS Injection rules within the CRS have been updated within the Trunk release in GitHub.

XSS Evasion Challenge Setup

The form on this page is vulnerable to reflected XSS. Data passed within the test parameter (either GET or POST) will be reflected back to this same page without any output encoding/escaping.

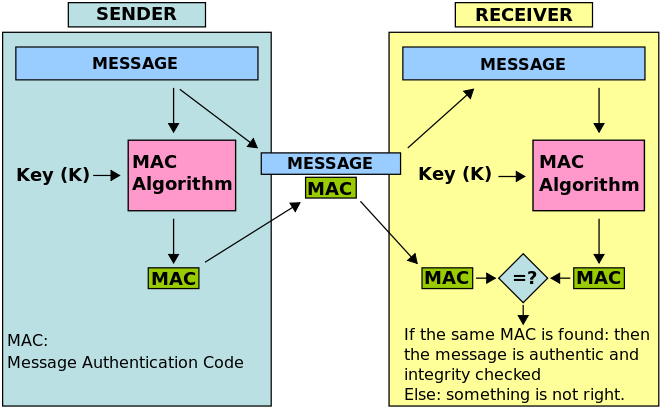

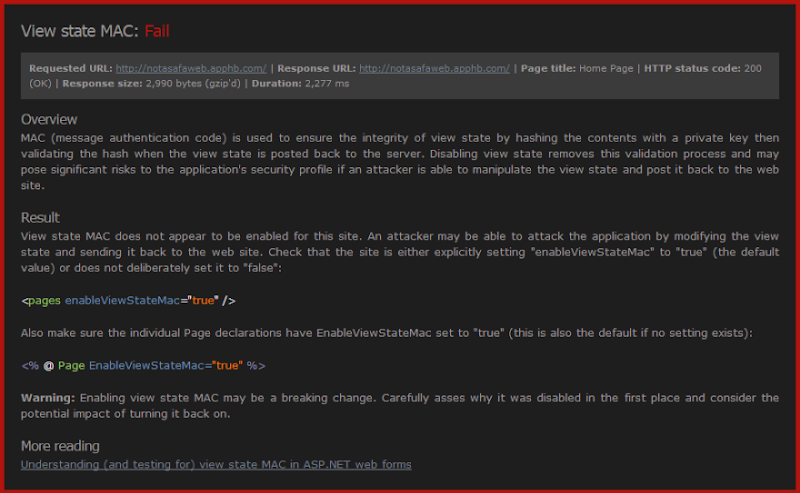

XSS Defense #1: Inbound Blacklist Regex Filters

We activated updated XSS filters from the OWASP ModSecurity Core Rule Set (CRS). When clients send attack payloads, they are evaluated by the CRS rules and the detection scores are then propagated to the HTML form as such:

CRS XSS Anomaly Score Exceeded (score 10): NoScript XSS InjectionChecker: HTML Injection

We added XSS filter regular expressions from the following 3rd party sources:

XSS Defense #2: JS Sandbox Injection

This defensive layer uses ModSecurity's Data Modification capability (@rsub operator) to dynamically insert Gareth Heyes' (@garethheyes) JS Sandbox, called MentalJS, at the beginning of HTML responses.

It is important to understand what a JS sandbox is and how it works. You may be able to execute JS code; however, it runs in a sandboxed environment. For example, the goal here is not to prevent a JS alert popup box but rather to protect DOM elements from being accessed.

In some cases, MentalJS prevents the injected code from executing at all. In other cases, the code will execute, however it does so from within a JS sandbox. The result is that the code is not able to access DOM elements outside of the sandbox. For instance, if an injection attempts to access document.cookie, it returns data as "undefined" -

Challenge Goals

The challenge was twofold. To win, participants needed to develop a single payload that achieved both of these goals:

1. Filter Evasion

You must execute a reflected XSS attack accessing one of the DOM elements listed below WITHOUT triggering an XSS filter alert. Example -

No CRS XSS Filter Alerts Triggered (score 0)

Depending on the payload, you may need to review the raw HTML to verify the CRS score.

2. Escape from the MentalJS JavaScript Sandbox

You must bypass the MentalJS JS Sandbox protections and successfully execute a reflected XSS attack that executes JS code in your browser. A successful attack will be able to access one of the following DOM elements:

- Trigger the youWon JS function

- Access document.location that is not undefined or sandboxed

- Access document.cookie that is not undefined or sandboxed

Example -

You may toggle the defenses on or off by checking the box in the form below. This disables the MentalJS Sandbox injection and also adds the X-XSS-Protection: 0 response header to temporarily disable any browser-side XSS filtering. This helps to facilitate testing of working XSS payloads.

Challenge Results

While various community submissions did include bypasses for each of the two protection layers individually, there were no official winners who were able to bypass BOTH the CRS filters and break out of the MentalJS sandbox all within a single payload. That being said, we do want to highlight the individual component bypasses as they still have value for the community.

OWASP CRS Filter Evasions

Evasion #1 - Nul Bytes inside Script Tags

Security Researcher Rafay Baloch actually was a bit ahead of the curve on this challenge as he notified us of a bypass using Nul Bytes within the JS script tag name just prior to the XSS Evasion Challenge being publicly announced.

He was testing our public Smoke Test page here.

Evasion #1 - Analysis

As you can see from the output, rule ID 960901 triggered and was specifically designed to flag the presence of Nul Bytes. We took this approach rather than attempting to deal with Nul Bytes within every remaining rule. That being said, there is still a valid point here - if a Nul Byte can be used to obfuscate payloads and evade detections then this should be addressed specifically.

If we research Gareth Heyes' Shazzer online fuzzer info, we find that indeed IE v9 allows the use of Nul Bytes within the script tag name -

The JSON export API shows the example vector payloads -

So, by adding %00 within the script tag name, many of the XSS regular expression checks would not match, and IE9 would still parse the tag correctly and execute JavaScript... Perfect.

Evasion #1 - Lesson Learned

Strip Nul Bytes

To combat this tactic, we added the "t:removeNulls" transformation function action to our XSS filters:
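As a rough illustration of what that transformation buys, here is a minimal Python sketch. The pattern is a deliberately simplified stand-in, not an actual CRS rule, and `remove_nulls` is a hypothetical helper that only mimics the effect of t:removeNulls (dropping every Nul byte before the regex runs).

```python
import re

# Simplified stand-in for a blacklist pattern; this is NOT the actual CRS rule.
SCRIPT_TAG = re.compile(r"(?i)<script[^>]*>")


def remove_nulls(value: str) -> str:
    """Mimic the effect of t:removeNulls: drop every Nul byte from the input."""
    return value.replace("\x00", "")


def is_flagged(value: str, strip_nulls: bool) -> bool:
    """Return True if the stand-in pattern matches, optionally after stripping Nuls."""
    candidate = remove_nulls(value) if strip_nulls else value
    return SCRIPT_TAG.search(candidate) is not None


# Nul byte placed inside the tag name, as in the reported bypass.
payload = "<scr\x00ipt>alert(1)</scr\x00ipt>"

print(is_flagged(payload, strip_nulls=False))  # False: the Nul byte breaks the literal match
print(is_flagged(payload, strip_nulls=True))   # True: the tag name is intact again
```

The point of applying the transformation in front of the pattern is that every rule benefits from it without having to account for Nul bytes in each individual regex.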

Test Shazzer Fuzz Payloads

This Nul Byte issue demonstrates just one difference in browser parsing quirks. There are many, many others... It is for this reason that we decided that, for regression testing of XSS filters, we needed to leverage the Shazzer dataset to identify payloads that trigger JS across multiple browser types and versions. I created a number of Ruby scripts that interact with the Shazzer API to extract successful fuzz payloads and test them against the live XSS evasion challenge page running the latest OWASP ModSecurity CRS rules.
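The Ruby scripts themselves are not shown in this post. The following is a minimal Python sketch of the same kind of regression loop, under stated assumptions: the payload list is supplied by the caller (the Shazzer API calls are left out entirely), the page URL and the "(score N)" text are taken from the examples in this post, and the function names are hypothetical.

```python
import re
import urllib.request
from urllib.parse import urlencode

# Challenge page URL as it appears in the submissions quoted in this post.
CHALLENGE_URL = "http://www.modsecurity.org/demo/demo-deny-noescape.html"

# The page reports results as "... (score 10)" or "... (score 0)".
SCORE_RE = re.compile(r"\(score (\d+)\)")


def build_url(payload: str) -> str:
    """URL-encode the payload into the 'test' parameter."""
    return CHALLENGE_URL + "?" + urlencode({"test": payload})


def crs_score(page: str):
    """Extract the CRS anomaly score from the returned HTML, or None if absent."""
    match = SCORE_RE.search(page)
    return int(match.group(1)) if match else None


def fetch_page(url: str, timeout: float = 10.0) -> str:
    """Fetch the page body (assumes the challenge page is still reachable)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def find_evasions(payloads, fetch=fetch_page):
    """Return the payloads for which the page reports a CRS score of 0."""
    return [p for p in payloads if crs_score(fetch(build_url(p))) == 0]
```

Passing a different `fetch` callable makes it possible to replay saved responses instead of hitting the live page, which is convenient when rerunning the same payload set after each rule change.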

Evasion #2 - Non-space Separators

Again Rafay Baloch sent in a submission that used this technique -

http://www.modsecurity.org/demo/demo-deny-noescape.html?test=%3Cscript%3Edocument.body.innerHTML=%22%3Ca%20onmouseover%0B=location=%27\x6A\x61\x76\x61\x53\x43\x52\x49\x50\x54\x26\x63\x6F\x6C\x6F\x6E\x3B\x63\x6F\x6E\x66\x69\x72\x6D\x26\x6C\x70\x61\x72\x3B\x64\x6F\x63\x75\x6D\x65\x6E\x74\x2E\x63\x6F\x6F\x6B\x69\x65\x26\x72\x70\x61\x72\x3B%27%3E%3Cinput%20name=attributes%3E%22;%3C/script%3E&disable_xss_defense=on&disable_browser_xss_defense=on

We also received a similar submission from ONsec_lab:

http://www.modsecurity.org/demo/demo-deny-noescape.html?test=%3Cinput+onfocus%0B%3Dalert%281%29%3E&disable_xss_defense=on&disable_browser_xss_defense=on
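The first submission is hard to read because it stacks several encodings. The Python sketch below peels them off one at a time; it only approximates what the browser does (the JS engine resolves the \x escapes in the string literal, and the HTML parser resolves the named entities once the string is assigned to innerHTML). `decode_js_hex` is a hypothetical helper written for this example.

```python
import html
import re
from urllib.parse import unquote

HEX_ESCAPE = re.compile(r"\\x([0-9A-Fa-f]{2})")


def decode_js_hex(text: str) -> str:
    """Resolve JavaScript-style \\xNN escapes, as a JS string literal would."""
    return HEX_ESCAPE.sub(lambda m: chr(int(m.group(1), 16)), text)


# The value assigned to `location`, copied from the first submission's URL.
ENCODED = (
    r"\x6A\x61\x76\x61\x53\x43\x52\x49\x50\x54\x26\x63\x6F\x6C\x6F\x6E\x3B"
    r"\x63\x6F\x6E\x66\x69\x72\x6D\x26\x6C\x70\x61\x72\x3B"
    r"\x64\x6F\x63\x75\x6D\x65\x6E\x74\x2E\x63\x6F\x6F\x6B\x69\x65"
    r"\x26\x72\x70\x61\x72\x3B"
)

after_js = decode_js_hex(ENCODED)      # step 1: JS string escapes
after_html = html.unescape(after_js)   # step 2: HTML named entities

print(after_js)    # javaSCRIPT&colon;confirm&lpar;document.cookie&rpar;
print(after_html)  # javaSCRIPT:confirm(document.cookie)

# The event handler name is followed by a URL-encoded vertical tab (0x0B).
print(repr(unquote("onmouseover%0B=")))  # 'onmouseover\x0b='
```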

Evasion #2 - Analysis

There are a number of characters that browsers will treat as "space" characters. Here is a table of these characters for each browser type:

IExplorer = [0x09,0x0B,0x0C,0x20,0x3B]

Chrome = [0x09,0x20,0x28,0x2C,0x3B]

Safari = [0x2C,0x3B]

FireFox = [0x09,0x20,0x28,0x2C,0x3B]

Opera = [0x09,0x20,0x2C,0x3B]

Android = [0x09,0x20,0x28,0x2C,0x3B]

The evasion method used by both of these submissions was to place one of the characters in between the "onevent" name attribute (such as onmouseover and onfocus) and the equal sign (=) character -

- onmouseover%0B=

- onfocus%0B%3D

As you can see from the table above, Internet Explorer (IE) would treat the %0B character as a space and parse/execute the payload. However, the payload would bypass blacklist regular expression filters such as this -

(?i)([\s\"'`;\/0-9\=]+on\w+\s*=)

The problem with this regex is its reliance on the "\s" meta-character: %0B is not within that class, so the "\s*" between the event handler name and the equal sign never matches it.
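The miss can be reproduced with a short Python sketch. One caveat: Python's own "\s" does match 0x0B, so the whitespace class is spelled out explicitly here without the vertical tab to mimic the behavior described above. The `WIDENED` pattern is purely illustrative, built from the union of the separator characters in the browser table; it is an assumption for this example and not the updated CRS regex.

```python
import re

# Original filter, with \s written out as [ \t\n\r\f] (no 0x0B) to mimic
# an engine whose \s class does not include the vertical tab.
ORIGINAL = re.compile(r"""(?i)([ \t\n\r\f"'`;/0-9=]+on\w+[ \t\n\r\f]*=)""")

# Illustrative widening only (NOT the actual CRS fix): also allow 0x0B,
# 0x28, 0x2C and 0x3B between the handler name and the equal sign.
WIDENED = re.compile(
    r"""(?i)([ \t\n\r\f\x0b"'`;/0-9=]+on\w+[ \t\n\r\f\x0b\x28\x2c\x3b]*=)"""
)

plain = "<input onfocus=alert(1)>"
evasive = "<input onfocus\x0b=alert(1)>"  # decoded form of onfocus%0B%3D

print(ORIGINAL.search(plain) is not None)    # True: ordinary handler is caught
print(ORIGINAL.search(evasive) is not None)  # False: 0x0B blocks the match
print(WIDENED.search(evasive) is not None)   # True: widened class covers 0x0B
```

Enumerating separator bytes by hand is brittle, which is why testing against browser fuzzing data matters more than any single widened class.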

Evasion #2 - Lesson Learned

As with Evasion #1, we can use Shazzer Fuzz DB data to obtain payloads with these non-space characters separating on-event handlers.

We modified our regex rules to catch these non-space characters:

Here is what the updated regex looks like visually:

We then ran our Ruby scripts to extract and test this Shazzer Fuzz data against our rules to verify that the payloads were caught:

MentalJS Escaping

The second half of the challenge was to try and escape from the MentalJS sandbox and access the DOM elements. There were a few people who were successful. Remember, however, that while these payloads were able to break out of the MentalJS sandbox they were all caught by the regular expression filters.

Breaking the MentalJS Parser

The most interesting MentalJS bypass submission was from Roman Shafigullin, which included a method of breaking the parser. By analyzing the JS, he found that he could break the parsing by setting an empty script tag:

With this as the payload, it then broke this section of the MentalJS parsing:

He was then able to set the "src" attribute to anything he wanted and chose to pull in a JS file hosted from his own server:

The resulting full payload returned the following:

Resetting document.body.innerHTML

Guiseppe Trotta sent in the first successful submission.

Here were the payloads he used -

There were others who submitted similar payloads that used document.body.innerHTML to override the MentalJS sandboxing-

- Nicholas Mavis

- Rafay Baloch

DOM Events Missing from Sandbox

There are a few DOM events that are not covered within the current version of MentalJS including:

- expression:

- background:url(javascript:...)

- detachEvent

These were also reported by Vladimir Vorontsov in the sla.ckers.org forum.

Conclusions

Ok, so in this conclusion section, I am going to hit you with some Earth-shattering revelations! No, not really. What I will cover here, however, is information that reinforces security themes you have heard before, backed by relevant attack testing data.

Attack-Driven Testing Is Essential

Trust, but Verify - Ronald Reagan.

Our rules are of a high quality because they have been field tested by the community. Only when top-tier web app pentesters focus some QA effort on the rules will they become better. It is through these types of public, community hacking challenges that we are able to strengthen our rules. The question is: what are other WAF vendors doing to test their rules?

Blacklist Filtering Alone Is Not Enough

Negative security models used on their own are not enough to prevent a determined attacker. It is only a matter of time before they are able to identify an evasion method. While this is true, negative security models do still have value:

- They easily block the bulk of attacks. We received more than 8,400 attacks during the challenge and only a couple were successful in bypassing the filters.

- They force attackers to use manual testing. If an attacker wants to bypass good negative security filters, they must be willing to use their own skills and expertise to develop a bypass. Most of these evasions are not present within testing tools. This increases resistance time to allow security personnel to respond.

- They help to categorize attacks. Without negative security filters, injection attacks could not be labeled and would fall under a generic "parameter manipulation" event raised by violations of input validation rules. With a negative security model, you can put attacks into groups for XSS, SQL Injection, Remote File Inclusion, etc.

Beyond Regular Expressions

There are many other methods of detecting malicious payloads besides regular expressions. Some other examples are:

- Bayesian Analysis - this helps by identifying attack payload likelihood rather than the binary yes or no of regular expressions. See this blog post for example Bayesian analysis. When running these payloads against our Bayesian analysis rules, they were flagged:

Security in Layers (server-side/client-side)

The combination of server-side blacklist filtering and the client-side JavaScript sandbox proved to be extremely effective. While some submissions were successful in evading the filters, they were not able to break out of the MentalJS sandbox. On the flip side, those submissions that were able to break out of the MentalJS sandbox were not able to evade the filters. Use multiple different methods of identifying attacks to increase coverage and to compensate for weaknesses in other layers.
