Formal Methods Only Solve Half My Problems

At most half my problems. I have a lot of problems.

The following is a one-page summary I wrote as a submission to HPTS’22. Hopefully it’s of broader interest.

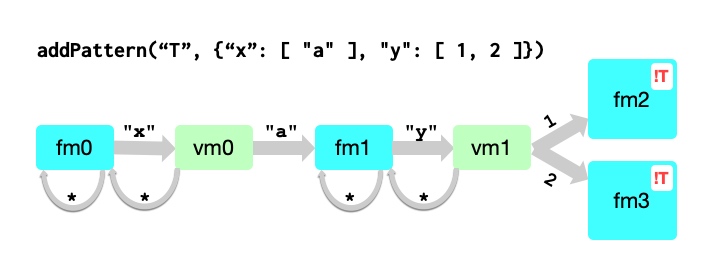

Formal methods, like TLA+ and P, have proven to be extremely valuable to the builders of large scale distributed systems1, and to researchers working on distributed protocols. In industry, these tools typically aren’t used for full verification. Instead, effort is focused on interactions and protocols that engineers expect to be particularly tricky or error-prone. Formal specifications play multiple roles in this setting, from bug finding in final designs, to accelerating exploration of the design space, to serving as precise documentation of the implemented protocol. Typically, verification or model checking of these specifications is focused on safety and liveness. This makes sense: safety violations cause issues like data corruption and loss which are correctly considered to be among the most serious issues with distributed systems. But safety and liveness are only a small part of a larger overall picture. Many of the questions that designers face can’t be adequately tackled with these methods, because they lie outside the realm of safety, liveness, and related properties.

What latency can customers expect, on average and in outlier cases? What will it cost us to run this service? How do those costs scale with different usage patterns, and dimensions of load (data size, throughput, transaction rates, etc)? What type of hardware do we need for this service, and how much? How sensitive is the design to network latency or packet loss? How do availability and durability scale with the number of replicas? How will the system behave under overload?

We address these questions with prototyping, closed-form modelling, and with simulation. Prototyping, and benchmarking those prototypes, is clearly valuable but too expensive and slow to be used at the exploration stage. Developing prototypes is time-consuming, and prototypes tend to conflate core design decisions with less-critical implementation decisions. Closed-form modelling is useful, but becomes difficult when systems become complex. Dealing with that complexity sometimes require assumptions that reduce the validity of the results. Simulations, generally Monte Carlo and Markov Chain Monte Carlo simulations, are among the most useful tools. Like prototypes, good simulations require a lot of development effort, and there’s a lack of widely-applicable tools for simulating system properties in distributed systems. Simulation results also tend to be sensitive to modelling assumptions, in ways that require additional effort to explore. Despite these challenges, simulations are widely used, and have proven very useful. Systems and database research approaches are similar: prototyping (sometimes with frameworks that make prototyping easier), some symbolic models, and some modelling and simulation work2.

What I want is tools that do both: tools that allow development of formal models in a language like Pluscal or P, model checking of critical parameters, and then allow us to ask those models questions about design performance. Ideally, those tools would allow real-world data on network performance, packet loss, and user workloads to be used, alongside parametric models. The ideal tool would focus on sensitivity analyses, that show how various system properties vary with changing inputs, and with changing modelling assumptions. These types of analyses are useful both in guiding investments in infrastructure (“how much would halving network latency reduce customer perceived end-to-end latency?”), and in identifying risks of designs (like finding workloads that perform surprisingly poorly).

This is an opportunity for the formal methods community and systems and database communities to work together. Tools that help us explore the design space of systems and databases, and provide precise quantitative predictions of design performance, would be tremendously useful to both researchers and industry practitioners.

Later commentary

This gap is one small part of a larger gap in the way that we, as practitioners, design and build distributed systems. While we have some in-the-small quantitative approaches (e.g. reasoning about device and network speeds and feeds), some widely-used modelling approaches (e.g. Markov modelling of storage and erasure code durability), most of our engineering approach is based on experience and opinion. Or, worse, à la mode best-practices or “that’s how it was in the 70s” curmudgeonliness. Formal tools have, in the teams around me, made a lot of the strict correctness arguments into quantitative arguments. Mental models like CAP, PACELC, and CALM have provided ways for people to reason semi-formally about tradeoffs. But I haven’t seen a similar transition for other properties, like latency and scalability, and it seems overdue.

Quantitative design has three benefits: it gives us a higher chance of finding designs that work, it forces us to think through requirements very crisply, and it allows us to explore the design space nimbly. We’ve very successfully applied techniques like prototyping and ad hoc simulation to create a partially quantitative design approach, but it seems like its time for broadly applicable tools.

Footnotes

- See, for example Using Lightweight Formal Methods to Validate a Key-Value Storage Node in Amazon S3, and How Amazon Web Services Uses Formal Methods

- E.g. the classic Concurrency control performance modeling: alternatives and implications, from 1987.