Rolandt

Shared posts

[ridgeline] Travel / Walking Gear

Tweaking the Brompton, 2019

In a couple of days, I’ll be flying out to the left coast again in order to join the Ride from Seattle to Vancouver plus Party (RSVP), which is put on by the Cascade Bike Club. Last year, I did their Seattle to Portland (STP) ride, and this time, Steve and I decided to mix things up a bit and do their other large ride along the coast.

Got my bib number!

As per my previous STP, I’ll be using the Brompton, and so it was time to make sure it was ready.

First order of business: replacing the Ti rack with a half rack. The hope is that it will make the fold a bit more compact, given that I’ll never use the full size rack. I got a very inexpensive half rack from eBay.

It is aluminum, rather than titanium, but given the fact that it is basically a mounting point for two EZ wheels, and the fender, it doesn’t have to be very strong. Here is a comparison of the two racks. You can see that the half rack is much shorter.

I managed to switch racks without removing the rear wheel, which was a clear win. The only tricky bit was drilling a new hole in the fender without puncturing the tire.

You can see that the new rack is not nicely triangulated like the Ti rack. We’ll see how it holds up.

One other concern that I had was that the fender protrudes much farther to the rear than the rack, but it turns out that this isn’t an issue while folding the bike.

The other thing that I did was to replace one of my aftermarket EZ wheels with a new pair from NOV designs.

Also, hydration. For a while I had misplaced my Randi Jo Fab bartender bag, so I pulled out a monkii cage that I had bought a while ago. This version has a Brompton specific mount that fits well on the stem.

The bottle cage clips into the mount, and when you want a drink, you remove the bottle while it is still attached to the cage.

I used this for a couple of training rides, and while it worked well, I found that I preferred the older setup with the soft bag. Happily I found my bartender bag yesterday, and so that is what I’ll be using on RSVP. BTW if anyone wants the monkii cage for cheap, let me know.

I noticed that Sam had a similar beverage bag on his bike when we met at the DAS ghost bike refurbishment. His is made in Montréal by Atwater Atelier.

I’ll be flying into Vancouver with the Brompton in its usual hard case. I’ll be taking a bus down to Seattle, and so for that leg of the trip, I’ll use a soft case. My bag of choice is the one by Radical Designs. It has a shoulder strap that will be handy.

Here is the bag folded up.

The storage bag has saddle bag loops and is designed to be carried on the bike, although in actuality, it will be in my backpack which will be hauled to the midpoint (Bellingham) and to the finish in Vancouver).

So, Seattle to Vancouver, about 302 km, over two days with 1940 m of climbing. This on insufficient training. I’ll let you know how it turns out.

Always Go Through the RFP Process

If you’re selecting a platform you’re going to spend a $50k+ on, always go through the RFP (request for proposal) process.

There are many reasons for this.

- It forces you to research your target audience. Creating an RFP without first researching your audience is clearly a waste of everyone’s time. Putting together a good RFP forces you to research your audience in-depth and develop a clear set of needs and use cases for the community. You will learn more than you suspect during this process.

- You have to specify what you need in detail. An RFP forces you to specify in detail exactly how your community will function, what you need, and typically requires you to develop your strategy in advance.

- It forces you to think through which features are most relevant and important to you. Not all features are important. Therefore it’s good to weight on a scale of 1 to 5 (or typically 1 to 3 for us) which features are most important. This is why you need to develop a strategy before developing the RFP. Without knowing your strategy you will struggle to know what features are most critical to your members.

- It helps you build strong relationships with stakeholders. In a larger organization, creating an RFP forces you to build a consensus about current capabilities, technology needs, and whether you can implement the technology yourself or need someone else. Bringing more stakeholders into the process early and building a consensus around budget, needs, and capabilities is wise. You might later find the stakeholders you consulted early are more likely to support you later on.

- You can identify potential tripwires early. You don’t want to be deep into the integration process and discover a problem which prevents you from using the platform. Having an RFP you can run past key stakeholders helps you identify and overcome potential problems before they become critical problems. Trust me, this will save you a lot of time and money later.

- You get more competitive bids. Trusting a vendor will give you a competitive quote without a competitive bidding process is like trusting a market seller to give you a good price when they’re the only stall you can buy from. You can’t make a choice without options. You never know how well your vendor compares with others until you have their competitive options. Once you have different quotes you can decide if investing an extra $20k per year is worthwhile to go with the leading platform. More information never hurts. You can’t make an informed decision without informed choices.

This isn’t an exhaustive list, but the point should be clear; going through an RFP process is what professionals in this space do.

p.s. Hint: Ensure you get clarity on what features come directly out of the box and which require further custom development. It’s one thing for a vendor to say they can do [x], it’s another to be clear when it requires custom development work.

Samsung Galaxy Note 10+ Charges to 80% in Just 35 Minutes with 45W Charger

One of the highlights of the Samsung Galaxy Note 10+ is that it comes with 45W wired fast charging support. Samsung is only bundling a 25W charger with the device though and it has not even shared much details about the 45W charger except for its hefty $50 price tag.

Continue reading →

One of the highlights of the Samsung Galaxy Note 10+ is that it comes with 45W wired fast charging support. Samsung is only bundling a 25W charger with the device though and it has not even shared much details about the 45W charger except for its hefty $50 price tag.

Continue reading →

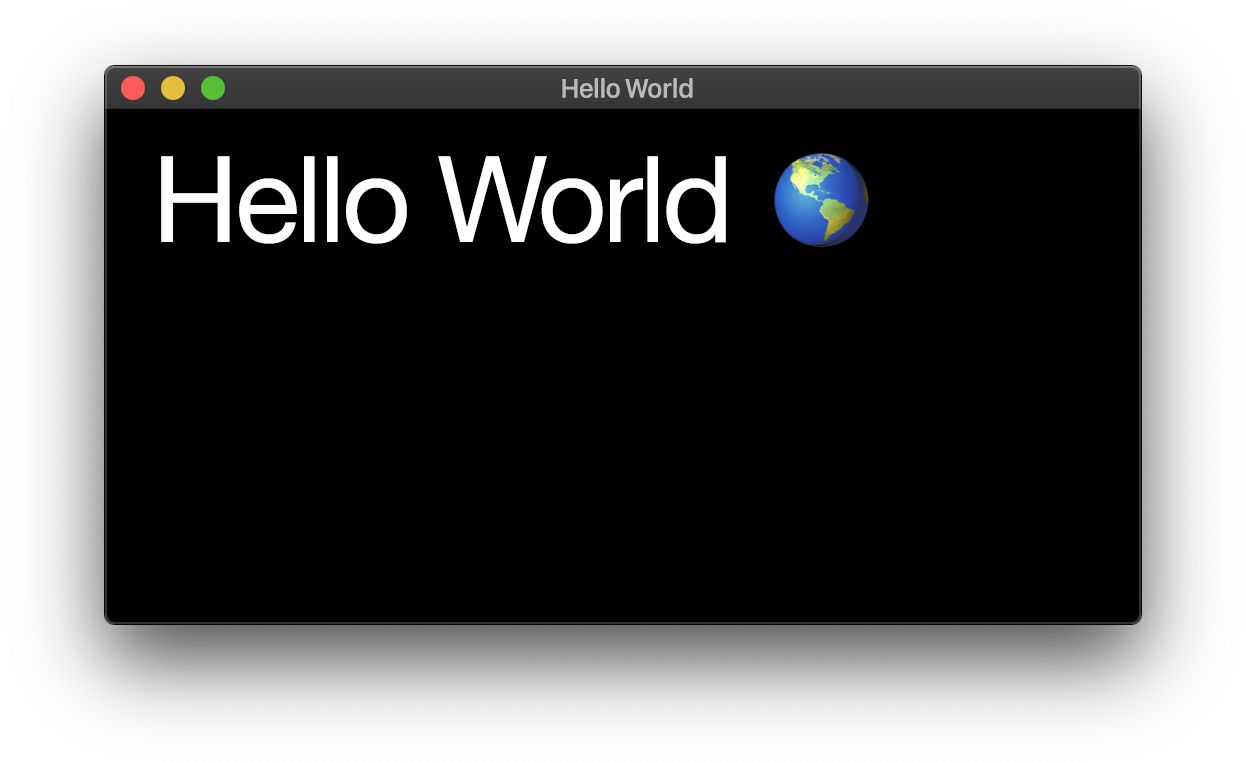

Hello World on the GPU

An Easy Tutorial

Graphics programming can be intimidating. It involves a fair amount of math, some low-level code, and it's often hard to debug. Nevertheless I'd like to show you how to do a simple "Hello World" on the GPU. You will see that there is in fact nothing to be afraid of.

Most environments offer you a printf-equivalent and a string type, but that's not how we do things in GPU land. We like the raw stuff, and we work with pixels themselves. So we're going to draw our text to the console directly. I'll show you the general high level flow, and then wrap up some of the details.

First, we're going to define our alphabet.

let alphabet = allocate_values(

&['H', 'E', 'L', 'O', 'W', 'R', 'D', '🌎'],

AlphabetFormat::Letter);Next we define our message by encoding it from this alphabet.

let message = allocate_indices(

// H E L L O W O R L D

&[0, 1, 2, 2, 3, 4, 3, 5, 2, 6, 7],

IndexFormat::Uint8);We'll also need to assemble this alphabet soup into positioned text. Don't worry, I precalculated the horizontal X offsets:

let xs = allocate_values(

&[0.0, 49.0, 130.0, 195.0, 216.0, 238.0, 328.0, 433.0, 496.0, 537.0, 561.0, 667.0],

AlphabetFormat::Float);The font is loaded as glyphs, a map of glyph images:

let glyphs = console.load_glyphs("helvetica.ttf");We now have everything we need to print it pixel-by-pixel to the top of the console, which we call 'rasterizing':

fn hello_world(

line: Line,

message: Vec<Index>,

alphabet: Vec<Letter>,

xs: Vec<Float>,

args: Vec<Argument>,

) {

// Get glyph library

let glyphs = args[0];

// Loop over all the indices in the message

for i in 0..message.len() {

// Retrieve the x position for this index.

let x = xs[i];

// Retrieve the letter in the alphabet

let letter = alphabet[message[i]];

// Retrieve the glyph image for this letter

let glyph = glyphs[letter];

// Rasterize it to the line

rasterize(line, x, glyph.image, glyph.width, glyph.height);

}

}rasterize() is provided for you, but if you're curious, this is what it looks like on the inside:

fn rasterize(

line: Line,

offset: Float,

image: Frame,

width: Int,

height: Int

) {

// Iterate over rows and columns

for y in 0..height {

for x in 0..width {

// Get target position

let tx = x + offset;

let ty = y;

// Get image pixel color

let source = get(image, x, y);

// Get current target pixel color

let destination = get(line, tx, ty);

// Blend source color with destination color

let blended = blend(source, destination);

// Save new color to target

set(target, tx, ty, blended);

}

}

};It's just like blending pixels in Photoshop, with a simple nested rows-and-columns loop.

Okay so I did gloss over an important detail.

The thing is, you can't just call hello_world(...) to run your code. I know it looks like a regular function, just like rasterize(), but it turns out you can only call built-in functions directly. If you want to call one of your own functions, you need to do a little bit extra, because the calling convention is slightly different. I'll just go over the required steps so you can follow along.

First you need to actually access the console you want to print to.

So you create a console instance:

let instance = Console::Instance::new();and get an adapter from it:

let adapter =

instance.get_adapter(

&AdapterDescriptor {

font_preference: FontPreference::Smooth,

});so you can get an actual console:

let console =

adapter.create_console(

&ConsoleDescriptor {

extensions: Extensions {

subpixel_antialiasing: true,

},

});But this console doesn't actually do anything yet. You need to create an interactive window to put it in:

let events_loop = EventsLoop::new();

let window = WindowBuilder::new()

.with_dimensions(LogicalSize {

width: 1280.0, height: 720.0

})

.with_title("Console")

.build(&events_loop).unwrap();and then make a surface to draw to:

let surface = instance.create_surface();Now if you want to print more than one line of text, you need to set up a line feed:

let descriptor =

LineFeedDescriptor {

usage: LineUsageFlags::OUTPUT_ATTACHMENT,

format: TextFormat::UTF8,

width: 120,

height: 50,

};

let line_feed = console.create_line_feed(&surface, &descriptor);

let next_line = line_feed.get_next_line();And if you want emoji, which we do, you need a separate emoji buffer too:

let images =

console.create_emoji(

EmojiDescriptor {

size: Extent2d {

width: 256,

height: 256,

},

array_size: 1024,

dimension: ImageDimension::D2,

format: ImageFormat::RGBA8,

usage: ImageUsageFlags::OUTPUT_ATTACHMENT,

});

let emoji_buffer = images.create_default_view();Okay, we're all set!

Now we just need to encode the call, using a call encoder:

let encoder = console.create_call_encoder();We begin by describing the special first argument (line), a combo of next_line and the emoji_buffer. We also have to provide some additional flags and parameters:

let call =

encoder.encode_function_call(

FunctionCallDescriptor {

console_attachments: &[

ConsoleAttachmentDescriptor {

attachment: &next_line,

load_op: LoadOp::Clear,

store_op: StoreOp::Store,

clear_letter: ' ',

}

],

emoji_attachment: Some(

ConsoleEmojiAttachmentDescriptor {

attachment: &emoji_buffer,

load_op: LoadOp::Clear,

store_op: StoreOp::Store,

clear_color: "rgba(0, 0, 0, 0)",

})

});The message of type Vec<Index> is added using a built-in convention for indices:

call.set_index_buffer(message);The alphabet: Vec<Letter> and the xs: Vec<Float> can also be directly passed in, because they are accessed 1-to-1 using our indices, as numbered arguments:

call.set_alphabet_buffers(&[

(&alphabet, 0), (&xs, 1)

]);However, the glyph images are a bit trickier, as they are a custom keyword argument.

To make this work, we need to create an argument group layout, which describes how we'll pass the arguments to sample our glyph images:

let argument_group_layout =

console.create_argument_group_layout(

&ArgumentGroupLayoutDescriptor {

bindings: &[

ArgumentGroupLayoutBinding {

binding: 0,

visibility: Visibility::PIXEL,

ty: BindingType::SampledText,

},

ArgumentGroupLayoutBinding {

binding: 1,

visibility: Visibility::PIXEL,

ty: BindingType::Sampler,

},

]

});We then put it into a larger function call layout, in case we have multiple groups of keyword arguments:

let function_call_layout =

console.create_function_call_layout(

FunctionCallLayoutDescriptor {

argument_group_layouts: &[argument_group_layout],

});We also need to create bindings to match this layout, to actually bind our argument values:

let glyph_view = glyphs.create_default_view();

let sampler = console.create_sampler(

&TextSamplerDescriptor {

address_mode: AddressMode::ClampToEdge,

text_filter: FilterMode::TypeHinted,

hint_clamp: 100.0,

max_anisotropy: 4,

compare_function: CompareFunction::Always,

border_color: BorderColor::TransparentBlack,

});

let argument_group =

console.create_argument_group(

&BindGroupDescriptor {

layout: argument_group_layout,

bindings: &[

Binding {

binding: 0,

resource: BindingResource::ImageView(&glyph_view),

},

Binding {

binding: 1,

resource: BindingResource::Sampler(&sampler),

},

]

});And add it to our call:

call.set_argument_group(0, argument_group);Alright! We're pretty much ready to make the call now. Just one more thing. The function call descriptor.

We need to pass the raw code for hello_world as a string to console.create_code_module, and annotate it with a few extra bits of information:

let function_call =

console.create_function_call(

&FunctionCallDescriptor {

layout: &function_call_layout,

call_stage: CallStageDescriptor {

module: console.create_code_module(&hello_world),

entry_point: "hello_world",

},

rasterization_state: RasterizationStateDescriptor {

emoji_alignment: Alignment::Middle,

emoji_bias: 0,

emoji_scale: 1.5,

},

text_topology: Topology::Letters,

console_states: &[

ConsoleStateDescriptor {

format: TextFormat::UTF8,

color: BlendDescriptor {

src_factor: BlendFactor::SrcAlpha,

dst_factor: BlendFactor::OneMinusSrcAlpha,

operation: BlendOperation::Add,

},

alpha: BlendDescriptor {

src_factor: BlendFactor::OneMinusDstAlpha,

dst_factor: BlendFactor::One,

operation: BlendOperation::Add,

},

write_mask: ColorWriteFlags::ALL,

},

],

emoji_state: Some(EmojiStateDescriptor {

format: ImageFormat::RGBA8,

emoji_enabled: true,

emoji_variant: CompareFunction::LessEqual,

}),

index_format: IndexFormat::Uint8,

alphabet_buffers: &[

AlphabetBufferDescriptor {

stride: 1,

step_mode: InputStepMode::Letter,

attributes: AlphabetAttributeDescriptor {

attribute_index: 0,

format: AlphabetFormat::Letter,

offset: 0,

},

},

AlphabetBufferDescriptor {

stride: 1,

step_mode: InputStepMode::Letter,

attributes: AlphabetAttributeDescriptor {

attribute_index: 1,

format: AlphabetFormat::Number,

offset: 0,

},

},

],

sample_count: 1,

});Which we add to the call:

call.set_function_call(&function_call);Well, you actually have to do this first, but it was easier to explain it last.

Now all that's left is to submit the encoded command to the console queue, and we're already done:

console

.get_queue()

.submit(&[encoder.finish()]);

Hm.

Damn, and I was going to show you how to make a matrix letter effect as an encore. You can pass a letter_shader to rasterizeWithLetterFX(...). It's easy, takes a couple hundred lines tops, all you have to do is call a function on a GPU.

(All code in this post is real, but certain names and places have been changed to protect the innocent. If you'd like to avoid tedious bureaucracy in your code, why not read about how the web people are trying to tame similar lions?)

Replied to a post by Chris M. What size do yo...

What size do you consider “critical mass” for an active community centred around a niche hobby/topic?

It depends I think. Critical mass towards what? Local agency? Go mainstream from a niche? Self sustainment of the group? What are your underlying assumptions?

If it’s about self sustaining a group, also have a look at some guidelines I wrote a decade ago on what makes a community of practice work (based on Etienne Wenger‘s work).

My rule of thumb for ‘stable’ groups is about 8 people (say 5-12, but 12 is better for ‘learning’ groups and likely too big for coordinating ‘doing’ groups), and then an ‘uncomfortable’ zone up to 25 or so, where I feel there’s a new equilibrium from 25 to 35 or so (3 times ~8, the uncomfortable zone is more like 2 times ~8, with a risk of splitting in half)

Assuming you’re after a self sustaining group for a niche hobby, I’d say 25-35 people. Depending on a multitude of factors in practice, like frequency of interaction, geographic dispersal, all the stuff in the slides linked above.

Notion.io is my new favorite - Simon

exatty95 wrote:

After that language was shared here, I

>moved everything into the Private section within Notion.

That may not be enough:

"Any content stored on the Service will be stored indefinitely, unless it is explicitly deleted or unless otherwise set forth in a separate agreement with an Enterprise (as defined below). This process is described under "Termination"."

https://www.notion.so/Terms-and-Privacy-28ffdd083dc3473e9c2da6ec011b58ac (under 1. Content, last paragraph)

If you have once made the content public, I'm not sure how irrevocable that is other than deleting it from the platform. You'd need to check with notion.

I have an immense dislike of any organisation that sets such a draconian content policy. IMO this indicates their business values and it is not something I would go anywhere near.

It reaffirms an old truth, "always read the small print"!

Slack as a Digital Campus

According to this website, "ASU is using the Slack Enterprise Grid as the communication hub for students, faculty and the staff. Via app integration (Zoom, Google Drive, Dropbox, Polls, etc.), Slack provides direct access to resources for student success." There are several pages here with background resources, slides and video describing the migration to Slack as a digital campus. Slack is "a collaboration hub that enables real-time communications and connections in a searchable platform."

Web: [Direct Link] [This Post]Liquidtext review - Luhmann

LiquidText lacks an iPhone app, which I need.

Margin Note annoyingly exports to specific apps rather than just support standards like OPML, Markdown, Txt, etc. which makes it impossible for me to use as I'd like (since I don't use OmniOutliner)...

I also find mindmaps far less useful and far harder to read/work with on a mobile device than a straight hierarchical outliner...

I really wish someone would make something like these two apps that worked with a Workflowy/Dynalist style outliner directly.

I can’t believe my publisher doesn’t . . .

It doesn’t matter if you have a six-figure advance or only got $3,000. It doesn’t matter if you have a big-five publisher or a small indie press or a hybrid publisher. If you’re a first-time author, you’ll soon be whining, “I can’t believe my publisher doesn’t . . . “, followed by some task that … Continued

The post I can’t believe my publisher doesn’t . . . appeared first on without bullshit.

Python in Azure Functions

I wish this had happened a little sooner (at least to save me the trouble of wrangling NodeJS a couple of weeks back), but it’s welcome nonetheless, especially with full Linux support tacked on.

There is also a durable Functions SDK in the works, so now I’ve got that to look forward to.

Our Favorite Mechanical Keyboards

If you spend most of your day typing or coding, a mechanical keyboard is a worthwhile upgrade over a cheaper, less comfortable keyboard. After spending months testing 19 of the most promising options, we found that the Varmilo VA87M is the best tenkeyless mechanical keyboard thanks to its excellent build quality and compact, minimalist design. It’s also available with a ton of switch options and colorful high-quality keycaps to match your preference.

Digital sweatshops then and now

In the early 1960s my family lived in New Delhi. In 1993, working for BYTE, I returned to India to investigate its software industry. The story I wrote, which appears below, includes this vignette:

Rolta does facilities mapping for a U.S. telephone company through its subsidiary in Huntsville, Alabama. Every night, scanned maps flow through the satellite link to Bombay. Operators running 386-based RoltaStations retrieve the maps from a Unix server, digitize them using Intergraph’s MicroStation CAD software, and relay the converted files back to Huntsville.

I didn’t describe the roomful of people sitting at those 386-based RoltaStations doing the work. It was a digital sweatshop. From the window of that air-conditioned room, though, you could watch physical laborers enacting scenes not unlike this one photographed by my dad in 1961.

I don’t have a picture of those rows of map digitizers in the city soon to be renamed Mumbai. But here’s a similar picture from a recent Times story, A.I. Is Learning From Humans. Many Humans.:

Labor-intensive data labeling powers AI. Web annotation technology is a key enabler for that labeling. I’m sure there will digital sweatshops running the software I help build. For what it’s worth, I’m doing my best to ensure there will be opportunities to elevate that work, empowering the humans in the loop to be decision-makers and exception-handlers, not just data-entry clerks.

Meanwhile, here’s that 1993 story, nearly forgotten by the web but salvaged from the NetNews group soc.culture.indian.

Small-systems thinking makes India a strategic software partner

by Jon Udell

BYTE, 09/01/1993

Recently, I saw a demonstration of a new Motif-based programmer’s tool called Sextant. It’s a source code analyzer that converts C programs into labeled graphs that you navigate interactively. The demonstration was impressive. What made it unique for me was that it took place in the offices of Softek, a software company in New Delhi, India.

It’s well known that Indian engineering talent pervades every level of the microcomputer industry. But what’s happening in India? On a recent tour of the country, I visited several high-tech companies and discovered that India is evolving rapidly from an exporter of computer engineering talent into an exporter of computer products and services. Software exports, in particular, dominate the agenda. A 1992 World Bank study of eight nations rated India as the most attractive nation for U.S., European, and Japanese companies seeking offshore software-development partners.

The World Bank study was conducted by Infotech Consulting (Parsippany, NJ). When the opportunity arose to visit India, I contacted Infotech’s president, Jack Epstein, for advice on planning my trip. He referred me to Pradeep Gupta, an entrepreneur in New Delhi who publishes two of India’s leading computer magazines, PC Quest and DataQuest. Gupta also runs market-research and conference businesses. He orchestrated a whirlwind week of visits to companies in New Delhi and Bombay and generously took time to acquaint me with the Indian high-tech scene.

A Nation of Small Systems

Even among Indians, there’s a tendency to attribute India’s emerging software prowess to the innate mathematical abilities of its people. “After all, we invented zero,” says Dewang Mehta, an internationally known computer graphics expert. He is also executive director of the National Association of Software and Service Companies (NASSCOM) in New Delhi. While this cultural stereotype may hold more than a grain of truth, it’s not the whole story. As NASSCOM’s 1992 report on the state of the Indian software industry notes, India has the world’s second-largest English-speaking technical work force. Consequently, Indian programmers are in tune with the international language of computing, as well as the language spoken in the U.S., the world’s largest market.

Furthermore, India’s data-communications infrastructure is rapidly modernizing. And the Indian government has begun an aggressive program of cutting taxes and lifting import restrictions for export-oriented Indian software businesses while simultaneously clearing the way for foreign companies to set up operations in India.

Other countries share many of these advantages, but India holds an ace. It is a nation of small systems. For U.S. and European companies that are right-sizing mainframe- and minicomputer-based information systems, the switch to PC-based client/server alternatives can be wrenching. Dumping the conceptual baggage of legacy systems isn’t a problem for India, however, because, in general, those systems simply don’t exist. “India’s mainframe era never happened,” says Gupta.

When Europe, Japan, and the U.S. were buying mainframes left and right, few Indian companies could afford their high prices, which were made even more costly by 150 percent import duties. Also, a government policy limiting foreign investors to a 40 percent equity stake in Indian manufacturing operations drove companies like IBM away, and the Indian mainframe industry never got off the ground.

What did develop was an indigenous microcomputer industry. In the early 1980s, Indian companies began to import components and to assemble and sell PC clones that ran DOS. This trend quickened in 1984, when the late Rajiv Gandhi, prime minister and an avid computer enthusiast, lifted licensing restrictions that had prevented clone makers from selling at full capacity.

In the latter half of the 1980s, a computerization initiative in the banking industry shifted the focus to Unix. Front offices would run DOS applications, but behind the scenes, a new breed of Indian-made PCs — Motorola- and Intel-based machines running Unix — would handle the processing chores. Unfortunately, that effort stalled when the banks ran afoul of the unions; even today, many of the Bank of India’s 50,000 branches aren’t linked electronically.

Nevertheless, the die was cast, and India entered the 1990s in possession of a special advantage. Indian programmers are not only well educated and English-speaking, but out of necessity they’re keenly focused on client/server or multiuser solutions for PCs running DOS (with NetWare) or Unix — just the kinds of solutions that U.S. and European companies are rushing to embrace. India finds itself uniquely positioned to help foreign partners right-size legacy applications.

The small-systems mind-set also guides India’s fledgling supercomputer industry. Denied permission by the U.S. government to import a Cray supercomputer, the Indian government’s Center for the Development of Advanced Computers built its own — very different — sort of supercomputer. Called PARAM, it gangs Inmos T800 transputers in parallel and can also harness Intel 860 processors for vector work. Related developments include a transputer-based neural-network engine intended to run process-control applications. The designers of this system impressed me with their clear grasp of the way in which inexpensive transputers can yield superior performance, scalability, modularity, and fault tolerance.

Software Products and Services

Many of the companies I visited produce comparable offerings for LAN or Unix environments. In the realm of packaged software, Oberoi Software in New Delhi sells a high-end hotel management application using Sybase 4.2 that runs on Hewlett-Packard, DEC, and Sun workstations. A low-end version uses Btrieve for DOS LANs. Softek offers 1-2-3, dBase, and WordStar work-alikes for DOS and Unix.

Shrink-wrapped products, however, aren’t India’s strong suit at the moment. PCs remain scarce and expensive commodities. According to DataQuest, fewer than 500,000 PCs can be found in this nation of 875 million people. To a U.S. software engineer, a $3000 PC might represent a month’s wages. An equivalently prosperous Indian professional would have to work a full year to pay for the same system. To put this in perspective, the average per capita wage in India is about $320, and the government caps the monthly salary of Indian corporate executives at around $1600 per month.

Software piracy is another vexing problem. “The competition for a 5000-rupee [approximately $165] Indian spreadsheet isn’t a 15,000-rupee imported copy of Lotus 1-2-3,” says Gupta, “but rather a zero-rupee pirated copy of Lotus 1-2-3.”

Painfully aware of the effect piracy has on the country’s international reputation as a software power, government and industry leaders have joined forces to combat it. The Department of Electronics (DoE), for example, has funded an anti-piracy campaign, and Lotus has a $69 amnesty program that enables users of illegal copies of 1-2-3 to come clean.

Reengineering Is a National Strength

The real action in Indian software isn’t in products. It’s in reengineering services. A typical project, for example, might involve re-creating an IBM AS/400-type application for a LAN or Unix environment. A few years ago, Indian programmers almost invariably would perform such work on location in the U.S. or Europe, a practice called “body shopping.” This was convenient for clients, but it wasn’t very beneficial to India because the tools and the knowledge spun off from reengineering projects tended to stay overseas.

More recently, the trend is to carry out such projects on Indian soil. Softek, for example, used a contract to build a law-office automation system for a Canadian firm as an opportunity to weld a number of its own products into a powerful, general-purpose client/server development toolkit. Softek engineers showed me how that toolkit supports single-source development of GUI software for DOS or Unix (in character mode) as well as Windows. They explained that client programs can connect to Softek’s own RDBMS (relational DBMS) or to servers from Gupta, Ingres, Oracle, or Sybase. That’s an impressive achievement matched by few companies anywhere in the world, and it’s one that should greatly enhance Softek’s appeal to foreign clients.

While reengineering often means right-sizing, that’s not always the case. For example, the National Indian Institution for Training, a New Delhi-based computer-training institute rapidly expanding into the realm of software products and services, has rewritten a well-known U.S. commercial word processor. Rigorous development techniques are the watchword at NIIT. “We have a passion for methodology,” says managing director Rajendra S. Pawar, whose company also distributes Excelerator, Intersolv’s CASE tool.

Other projects under way at NIIT include an X Window System interface builder, Mac and DOS tutorials to accompany the Streeter series of math textbooks (for McGraw-Hill), a simple but effective multimedia authoring tool called Imaginet, a word processor for special-needs users that exploits an NIIT-designed motion- and sound-sensitive input device, and an instructional video system.

Although services outweigh products for now, and the Indian trade press has complained that no indigenous software product has yet made a splash on the world scene, the situation could well change. Indian programmers are talented, and they’re up-to-date with database, GUI, network, and object-oriented technologies. These skills, along with wages 10 or more times less than U.S. programmers, make Indian programming a force to be reckoned with. Software development is a failure-prone endeavor; many products never see the light of day. But, as Tata Unisys (Bombay) assistant vice president Vijay Srirangan points out, “The cost of experimentation in India is low.” Of the many software experiments under way in India today, some will surely bear fruit.

A major obstacle blocking the path to commercial success is the lack of international marketing, but some help has been forthcoming. Under contract to the U.K.’s Developing Countries Trade Agency, the marketing firm Schofield Maguire (Cambridge, U.K.) is working to bring selected Indian software companies to the attention of European partners. “India does have a technological lead over other developing countries,” says managing partner Alison Maguire. “But to really capitalize on its software expertise, it must project a better image.”

Some companies have heard the message. For example, Ajay Madhok, a principal with AmSoft Systems (New Delhi), parlayed his firm’s expertise with computer graphics and digital video into a high-profile assignment at the 1992 Olympics. On a recent U.S. tour, he visited the National Association of Broadcasters show in Las Vegas. Then he flew to Atlanta for Comdex. While there, he bid for a video production job at the 1996 Olympics.

Incentives for Exporters

According to NASSCOM, in 1987, more than 90 percent of the Indian software industry’s $52 million in earnings came from “on-site services” (or body shopping). By 1991, on-site services accounted for a thinner 61 percent slice of a fatter $179 million pie. Reengineering services (and, to a lesser extent, packaged products) fueled this growth, with help from Indian and U.S. government policies.

On the U.S. side, visa restrictions have made it harder to import Indian software labor. India, meanwhile, has developed a range of incentives to stimulate the software and electronics industries. Government-sponsored technology parks in Noida (near New Delhi), Pune (near Bombay), Bangalore, Hyderabad, and several other locations support export-oriented software development. Companies that locate in these parks share common computing and telecommunications facilities (including leased-line access to satellite links), and they can import duty-free the equipment they need for software development.

The Indian government has established export processing zones in which foreign companies can set up subsidiaries that enjoy similar advantages and receive a five-year tax exemption. Outside these protected areas, companies can get comparable tax and licensing benefits by declaring themselves fully export-oriented.

Finally, the government is working to establish a number of hardware technology parks to complement the initiative in software. “We want to create many Hong Kongs and Singapores,” says N. Vittal, Secretary of the DoE and a tireless reformer of bureaucracy, alluding to the economic powerhouses of the Pacific Rim.

The Indian high-tech entrepreneurs I met all agreed that Vittal’s tenacious slashing of government red tape has blazed the trail they now follow. How serious is the problem of government red tape? When the government recently approved a joint-venture license application in four days, the action made headlines in both the general and trade press. Such matters more typically take months to grind their way through the Indian bureaucracy.

The evolution of India’s telecommunications infrastructure shows that progress has been dramatic, though uneven. In a country where only 5 percent of the homes have telephone service, high-tech companies increasingly rely on leased lines, packet-switched data networks, and satellite links. The DoE works with the Department of Telecommunication (DoT) to ensure that software export businesses get priority access to high-bandwidth services.

But the slow pace of progress at the DoT remains a major frustration. For example, faxing can be problematic in India, because the DoT expects you to apply for permission to transmit data. And despite widespread Unix literacy, only a few of the dozens of business cards I received during my tour carried Internet addresses. Why? DoT regulations have retarded what would have been the natural evolution of Unix networking in India. I did send mail home using ERNET, the educational resource network headquartered in the DoE building in New Delhi that links universities throughout the country. Unfortunately, ERNET isn’t available to India’s high-tech businesses.

Vittal recognizes the critical need to modernize India’s telecommunications. Given the scarcity of an existing telecommunications infrastructure, he boldly suggests that for many scattered population centers, the solution may be to completely pass over long-haul copper and vault directly into the satellite era. In the meantime, India remains in this area, as in so many others, a land of extreme contrasts. While most people lack basic telephone service, workers in strategic high-tech industries now take global voice and data services for granted.

Powerful Partners

When Kamal K. Singh, chairman and managing director of Rolta India, picks up his phone, Rolta’s U.S. partner, Intergraph, is just three digits away. A 64-Kbps leased line carries voice and data traffic from Rolta’s offices, located in the Santacruz Electronics Export Processing Zone (SEEPZ) near Bombay, to an earth station in the city’s center. Thence, such traffic travels via satellite and T1 lines in the U.S. to Intergraph’s offices in Huntsville, Alabama.

Rolta builds Intel- and RISC-based Intergraph workstations for sale in India; I saw employees doing everything from surface-mount to over-the-network software installation. At the same time, Rolta does facilities mapping for a U.S. telephone company through its subsidiary in Huntsville. Every night, scanned maps flow through the satellite link to Bombay. Operators running 386-based RoltaStations retrieve the maps from a Unix server, digitize them using Intergraph’s MicroStation CAD software, and relay the converted files back to Huntsville.

Many Indian companies have partnerships with U.S. firms. India’s top computer company, HCL, joined forces with Hewlett-Packard to form HCL-HP. HCL’s roots were in multiprocessor Unix. “Our fine-grained multiprocessing implementation of Unix System V has been used since 1988 by companies such as Pyramid and NCR,” says director Arjun Malhotra.

HCL’s joint venture enables it to build and sell HP workstations and PCs in India. “People appreciate HP quality,” says marketing chief Ajai Chowdhry. But since Vectra PCs are premium products in the price-sensitive Indian market, HCL-HP also plans to leverage its newly acquired HP design and manufacturing technology to build indigenous PCs that deliver “good value for money,” according to Malhotra.

Pertech Computers, a system maker in New Delhi, recently struck a $50 million deal to supply Dell Computer with 240,000 motherboards. Currently, trade regulations generally prohibit the import of certain items, such as finished PCs. However, exporters can use up to 25 percent of the foreign exchange they earn to import and sell such items. Pertech director Bikram Dasgupta plans to use his “forex” money to buy Dell systems for resale in India and to buy surface-mount equipment so that the company can build work-alikes.

IBM returned to India last year, after leaving in 1978, to join forces with the Tatas, a family of Indian industrialists. The joint venture, Tata Information Systems, will manufacture PS/2 and PS/VP systems and develop software exports.

Citicorp Overseas Software, a Citicorp subsidiary, typifies a growing trend to locate software-development units in India. “Our charter is first and foremost to meet Citicorp’s internal requirements,” says CEO S. Viswanathan, “but we are a profit center and can market our services and products.” On a tour of its SEEPZ facility in Bombay, I saw MVS, Unix, VMS, and Windows programmers at work on a variety of projects. In addition to reengineering work for Citicorp and other clients, the company markets banking products called Finware and MicroBanker.

ITC (Bangalore) supplements its Oracle, Ingres, and AS/400 consulting work by selling the full range of Lotus products. “Because we have the rights to manufacture Lotus software locally,” says vice president Shyamal Desai, “1-2-3 release 2.4 was available here within a week of its U.S. release.” Other distributors of foreign software include Onward Computer Technologies in Bombay (NetWare) and Bombay-based Mastek (Ingres).

India’s ambitious goal is to quadruple software exports from $225 million in 1992 to $1 billion in 1996. To achieve that, everything will have to fall into place. It would be a just reward. India gave much to the international microcomputer industry in the 1980s. In the 1990s, the industry just might return the favor.

Consumer Convenience & How It Relates to our Cities

|

mkalus

shared this story

from |

Photo by bruce mars on Pexels.com

Photo by bruce mars on Pexels.comJust as there is growing interest in slow cooking with meals made from scratch, is there a return to thinking about doing other things in a more 20th century and long hand way?

Gayle Macdonald in the Globe and Mail talks about the “convenience-driven quandary” and asks: “What if we become so accustomed to computers and other AI-driven technologies doing everything for us that we forget the joy of doing things slowly, meticulously and with our own two hands?”

Take a look at the data. Online purchases have increased to 2.9 trillion dollars in 2018, from 2.4 trillion in 2017. And Canadians, who have been late to the online purchasing party have now doubled their expenditures online from sales reported in 2016 to a a cool 39 Billion dollars in United States funds.

That sum is more than what the current American president was going to spend on the southern border wall (that clocked in at 25 Billion dollars). And here’s a story of what 25 Billion dollars will buy.

Something else happens when goods and services are ordered online and delivered to your door. That is the isolating experience when you don’t have to walk or bike or even go to a store, or have any interactions with people on the street or in shops.

As Macdonald observes ” loneliness – a close cousin of isolation – seems to be on the rise, with the U.S. Surgeon-General recently warning it’s an “epidemic” in United States and Britain appointing its first “minister of loneliness.”

While online shopping speaks to comfort and convenience, anthropologist Grant McCracken is wary of the ease of it, stating: “The industrial revolution declared war on space and time … and right through the second half of the 20th century, this war had no skeptics. Convenience was king. But in the last few decades we have seen a counter revolution. We saw the arrival of slow food, meditation, mindfulness, artisanal economies and a more measured approach to life by many people. All of which is better for humans and better for the planet.”

Encouraging “artisanal” enterprises also means developing great walking environments and safely separated bike lanes so that people can access shops and services at a slower pace. That slower pace also demands a level of detail in the environment, making streets and sidewalks clean, appealing, with benches, public washrooms, good wayfinding and places to spend time.

While online shopping provides convenience, it is at the municipal level that good amenities are needed to encourage citizens to explore on foot and on bike. There is a lot to be learned from Europeans that use public space longer than North Americans.

While a park “stay” in Europe is registered as twenty-five minutes, that same “stay” in a North American park is only ten minutes. Why? Is it the lack of amenity or just that using public spaces as outdoor dens is not culturally embraced? We need to create public spaces to walk and bike to and to sit in, making those experiences as approachable and accessible as online shopping, but a lot more stimulating and fun.

Part of a return to mindfulness and sustainability is reinforcing a community’s connection at the most basic level~the sidewalk and street. We need to demand the best attention to detail at this level to make the public realm and non-motorized use of city streets effortlessly accessible for everyone.

The WeWork IPO

There is a reason — beyond the fact it is August — that WeWork’s upcoming IPO has driven so much discussion: it is a document defined by audaciousness, both in terms of the company’s vision and also the flagrant disregard for corporate governance norms by its leadership. And, of course, massive losses despite massive amounts of capital raised. I suspect all of these things are related.

The AWS Example

Imagine it is 2006, and you go to investors with a bold new business plan: computer hardware! Never mind that IBM just sold its PC business to Lenovo a year previously, and that servers appeared on the same path to commoditization, particularly with the emergence of x86 solutions from companies like Dell in place of specialized architectures from traditional suppliers like Sun. It doesn’t sound particularly promising.

And yet 2006 was the year that Amazon launched Amazon Web Services, a computer hardware business that leveraged the commoditization of hardware into a business with operating margins of around 30%. It turned out having one company manage that commodity hardware for everyone else had several important advantages that more than justified those margins:

- New companies had instant access to an entire server stack for basically free, because payments tracked usage (which for new companies is zero).

- Growing companies did not need to obtain funding for or spend the time on extensive build-outs months or years ahead of future growth, instead they could pay for new capabilities as they needed them.

- Established companies no longer needed to have a competency in managing server installations, and could instead focus on their core competencies while outsourcing to cloud providers.

In all three cases the fundamental shift was from servers as capital investment to variable costs; the benefits were less about saving dollars and cents and more about increasing flexibility and optionality. At least, that is, to start: today AWS has offerings that extend far beyond basic compute and storage to capabilities like server-less (which, paradoxically, requires owning an huge number of always-available servers) that are uniquely possible because of AWS’ scale.

The WeWork Bull Case

AWS, broadly speaking, is the WeWork bull case. Consider the phrase, “fixed cost”. There is nothing more fixed than real estate, yet WeWork’s offering transforms real estate into a variable cost for all kinds of companies, with benefits that roughly mirror public clouds:

- New companies can have instant access to a well-appointed office space and pay for only a desk or two, and then grow as needed.

- Growing companies do not need to spend time on extensive build-outs months or years ahead of future growth, and instead pay for more space as they need it.

- Established companies no longer need to have a real estate competency all over the world, and can in fact expand to new territories with far less risk than previously required.

Note that, just like the public cloud, price is not necessarily the primary driver for WeWork space. Still, there is no doubt that AWS, for example, pays far less for AWS’ underlying infrastructure than any of their customers would pay on their own. For one, AWS can spread the cost of data centers around the world over a huge number of customers; for another AWS can bargain with hardware suppliers or simply design and make its own components.

WeWork can achieve similar gains, to an extent. Within a single location, common space, by virtue of being shared by all WeWork members, can be built out much more than any one member could build out on their own. Similarly, WeWork’s network of locations around the world provide options that accrue to all members.

WeWork has also developed an expertise in utilizing office space efficiently, and while some of this is simply a willingness to cram more people into less space, opening triple-digit locations a year means that the company is by definition learning and iterating on what works for office space far faster than anyone else, and that is before the promised application of sensors and machine learning to the challenge.

And then there is the question as to whether WeWork is, or can become, more than a real estate play at scale: what might be the equivalent of “server-less” when it comes to office space — a unique capability that is uniquely unlocked by one company providing all of the real estate needs for, well, everyone?

WeWork’s Losses and Ambition

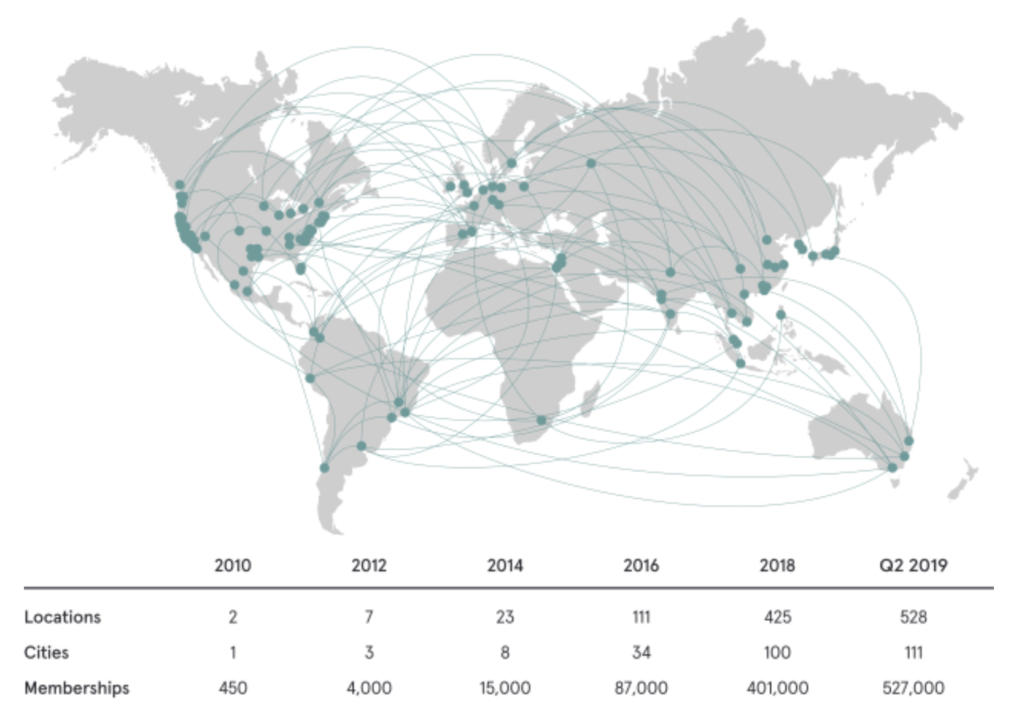

Given this vision, WeWork’s massive losses are, at least in theory, justifiable. The implication of creating a company that absorbs all of the fixed costs in order to offer a variable cost service to other companies is massive amounts of up-front investment. Just as Amazon needed to first build out data centers and buy servers before it could sell storage and compute, WeWork needs to build out offices spaces before it can sell desktops or conference rooms. In other words, it would be strange if WeWork were not losing lots of money, particularly given its expansion rate; from the S-1:

The company also includes this graphic, which on its own isn’t particularly helpful given the missing Y-axis:

What is useful is considering these two graphics together: over 300 locations — more than half — are in the money-losing part of the second graph, which helps explain why WeWork’s expenses are nearly double its revenue; should the company stop opening locations, it seems reasonable to expect that gap to close rapidly.

Still, it is doubtful that WeWork will slow the rate with which it opens locations given the company’s view of its total addressable market. From the S-1:

In the 111 cities in which we had locations as of June 1, 2019, we estimate that there are approximately 149 million potential members. For U.S. cities, we define potential members by the estimated number of desk jobs based on data from the Statistics of U.S. Businesses survey by the U.S. Census Bureau. For non-U.S. cities, we consider anyone in select occupations defined by the International Labor Organization — including managers, professionals, technicians and associate professionals and clerical support workers — to be potential members, because we assume that these individuals need workspace in which they have access to a desk and other services. We view this as our addressable market because of the broad variety of professions and industries among our members, the breadth of our solutions available to individuals and organizations of different types and our track record of developing new solutions in response to our members’ needs.

We expect to expand aggressively in our existing cities as well as launch in up to 169 additional cities. We evaluate expansion in new cities based on multiple criteria, primarily our assessment of the potential member demand as well as the strategic value of having that city as part of our location portfolio. Based on data from Demographia and the Organization for Economic Cooperation and Development, we have identified our market opportunity to be 280 target cities with an estimated potential member population of approximately 255 million people in aggregate.

When applying our average revenue per WeWork membership for the six months ended June 30, 2019 to our potential member population of 149 million people in our existing 111 cities, we estimate an addressable market opportunity of $945 billion. Among our total potential member population of approximately 255 million people across our 280 target cities globally, we estimate an addressable market opportunity of $1.6 trillion.

Did you catch that? WeWork is claiming nearly every desk job around the globe as its market, a move that by definition means moving beyond being a real estate company. From the S-1:

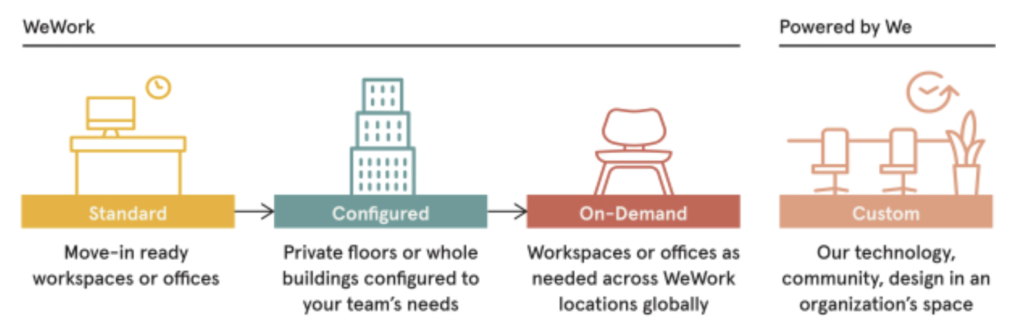

Our membership offerings are designed to accommodate our members’ distinct space needs. We provide standard, configured and on-demand memberships within our spaces. We also offer Powered by We, a premium solution configured to an organization’s needs and deployed at the organization’s location. Powered by We leverages our analysis, design and delivery capabilities to beautify and optimize an existing workplace, while also offering an organization increased efficiencies and an option to invigorate its spaces through our community offerings. The technology we deploy includes software and hardware solutions that deliver improved insights and an easier-to-use workplace experience for employees.

The sheer scale of this ambition again calls back to AWS. It was in 2013 that Amazon’s management first stated that AWS could end up being the company’s biggest business; at that time AWS provided a mere 4% of Amazon’s revenue (but 33% of the profit). In 2018, though, AWS had grown by over 1000% and was up to 11% of Amazon’s revenue (and 59% of the profit), and that share is very much expected to grow, even as AWS faces a competitor in Microsoft Azure that is growing even faster, in large part because existing enterprises are moving to the cloud, not just startups.

WeWork, meanwhile, using its expansive definition of its addressable market, claims that it has realized only 0.2% of their total opportunity globally, and 0.6% of their opportunity in their ten largest cities. To be fair, one may be skeptical that existing enterprises in particular will be hesitant to turn over management of their existing offices to WeWork, which would dramatically curtail the opportunity; on the other hand, large enterprises now make up 40% of WeWork’s revenue (and rising), and more importantly, WeWork doesn’t have any significant competition.

WeWork’s (Lack of) Competition

This point around competition is an important one, and one of the more compelling reasons to be bullish on WeWork’s opportunity.

The obvious competitor is a company called IWG, with 3,306 locations and 445k workstations at the end of 2018. WeWork, in comparison, had 528 locations and 604k workstations as of June 30, 2019. Note the date mismatch — this isn’t a perfect comparison — but that only makes the point that these are two very different companies: WeWork had only 466k workstations at the end of 2018; a year earlier, when the Wall Street Journal pointed out that WeWork’s then-valuation was 5x IWG’s (it is now 13x), WeWork had a mere 150k, while IWG had 414k.

In other words, WeWork is massively more concentrated than IWG (i.e. fewer locations with more workstations), and growing exponentially faster; unsurprisingly, and relatedly, IWG is making money (£154 million last year). That, though, further makes the point: IWG, for better or worse, is constrained by the revenue it makes; WeWork, on the other hand, is perhaps best understood as a clear beneficiary of a world of seemingly unlimited capital. It is difficult to see IWG competing in the long run, or, frankly, anyone else: who is going to fund a WeWork competitor, instead of simply pouring more money into WeWork itself?

Capital and Recessions

This question of capital is perhaps the biggest one facing WeWork: from the company’s inception on critics have (fairly) wondered what will happen in a recession. It is one thing to sign long leases at low rates and rent out office space at higher rates when the economy is growing; what happens when the economy is shrinking and those long-term leases are not going anywhere, while WeWork customers very well may be?

This is a fair concern and almost certainly the largest reason to be skeptical of WeWork in the short run, but the company does have counter-arguments:

- First, WeWork argues that in a downturn increased flexibility and lower costs (relative to traditional office space) may in fact attract new customers.

- Second, WeWork claims its growing enterprise customer base has nearly doubled lease commitments to 15 months with a committed revenue backlog of $4.0 billion; this is still far shorter than WeWork’s mostly 15-year leases, but perhaps long enough to stabilize the company through a recession.

- Third, WeWork notes that a recession — provided the company has sufficient capital — would actually allow it to accelerate its buildout as lease and construction costs come down.

The company also has another, rather unsavory, advantage in a recession: its opaque corporate structure. While there are many downsides to the fact that the “We Company” is a collection of entities, one of the big advantages is that landlords will have a difficult time enforcing any leases that WeWork abandons. From the Financial Times:

There are limits to what landlords can do to enforce rental commitments. The company, like others in the shared office sector, creates special purpose vehicles for its leases, meaning landlords do not have direct recourse to the parent company if it fails to pay rent.

In the past, companies in the sector have changed the terms of their leases when downturns hit. Regus, now IWG, renegotiated leases in 2002 when the end of the tech boom cut into its customer base. More recently, an IWG subsidiary that leased a site near Heathrow airport applied for voluntary liquidation.

To counter such concerns, WeWork has guaranteed a portion of its rental payments, though a small fraction of the overall obligation. About $4.5bn of rent payments are backed by corporate guarantees and $1.1bn by bank guarantees, according to the group’s pre-IPO filing. It has paid more than $268.3m in cash deposits to landlords and used another $183.9m of surety bonds, a form of insurance.

Those guarantees are only $1.6 billion more than WeWork’s committed revenue backlog.

WeWork’s Corporate Governance

Frankly, there is a lot to like about the WeWork opportunity. Yes, a $47 billion valuation seems way too high, particularly given the fact the company is on pace to make only about $440 million in gross profit this year (i.e. excluding all buildout and corporate costs), and given the huge recession risk. At the same time, this is a real business that provides real benefits to companies of all sizes, and those benefits are only growing as the nature of work changes to favor more office work generally and more remote work specifically. And, critically, there is no real competition.

The problem is that the “unsavoriness” I referred to above is hardly limited to the fact that WeWork can stiff its landlords in an emergency. The tech industry generally speaking is hardly a model for good corporate governance, but WeWork takes the absurdity an entirely different level. For example:

- WeWork paid its own CEO, Adam Neumann, $5.9 million for the “We” trademark when the company reorganized itself earlier this year.

- That reorganization created a limited liability company to hold the assets; investors, however, will buy into a corporation that holds a share of the LLC, while other LLC partners hold the rest, reducing their tax burden.

- WeWork previously gave Neumann loans to buy properties that WeWork then rented.

- WeWork has hired several of Neumann’s relatives, and Neumann’s wife would be one of three members of a committee tasked to replace Neumann if he were to die or become permanently disabled over the next decade.

- Neumann has three different types of shares that guarantee him majority voting power; those shares retain their rights if sold or given away, instead of converting to common shares.

Byrne Hobart has made the case that some of the real estate transactions with Neumann are justifiable, but given how bad everything else in this list is, not assuming the worst is a generous interpretation; meanwhile, Neumann has already reportedly cashed out $700 million of his holdings via sales and loans. Everything taken together hints at a completely unaccountable executive looting a company that is running as quickly as it can from massive losses that may very well be fatal whenever the next recession hits.

The Capital Glut

In fact, I would argue that the WeWork bull case and bear case have more in common than it seems: both are the logical conclusion of effectively unlimited capital. The bull case is that WeWork has seized the opportunity presented by that capital to make a credible play to be the office of choice for companies all over the world, effectively intermediating and commoditizing traditional landlords. It is utterly audacious, and for that reason free of competition. The bear case, meanwhile, is that unlimited capital has resulted in a complete lack of accountability and a predictable litany of abuses, both in terms of corporate risk-taking and personal rent-seeking.

Perhaps the real question, then, is what has driven the capital glut that has both helped WeWork’s business and harmed WeWork’s corporate governance? Is it merely the current economic cycle, which means a recession will not only pressure WeWork’s finances but also turn off the spigot of cash? Or has there been a fundamental shift in the global economy, as the increased impact of technology, with its capital-intensive business model that throws off huge amounts of cash, drives more and more global output?

In short, there is a case that WeWork is both a symptom of software-eating-the-world, as well as an enabler and driver of the same, which would mean the company would still have access to the capital it needs even in a recession. Investors would just have to accept the fact they will have absolutely no impact on how it is used, and that, beyond the sky-high valuation and the real concerns about a duration mismatch in a recession, is a very good reason to stay away.

I wrote a follow-up to this article in this Daily Update, explaining how WeWork is very different from AWS. It is free.

The Apple Card Is Here. Is It Worth Getting?

Apple has finally made the Apple Card, its own branded credit card, available to the public.

Open educational resources, student efficacy, and user perceptions: a synthesis of research published between 2015 and 2018

This article is a follow-up to a 2016 study by the same author and updates the work with the last three years of research into the efficacy of and perceptions about open educational resources (OER). The article considers whether and how often the studies controlled for student and teacher variables, as well as the strength of the research methodology in general. I would think these types of studies would be more appropriate to open pedagogy rather than OER. And as always I'm not really comfortable with studies depicting OER (or education in general) as some sort of 'treatment', as though it were analogous to medicine. Maybe - maybe - you could do this study for a specific resource, but it's absurd to think you're getting useful data by studying 'efficacy' or 'perceptions' of OER in general.

Web: [Direct Link] [This Post]Apple TV+ reportedly launching in November at $9.99 per month

The launch window and the price of Apple’s video streaming service has leaked.

The service is set to cost $9.99 USD (roughly $13 CAD) and will include some form of a free trial when it launches in November, according to Bloomberg.

Another detail Apple has yet to nail down is its programming release schedule. While popular streaming services like Netflix and Prime Video drop whole seasons at once, Apple TV+ could follow in the footsteps of HBO and release new episodes weekly.

When it does launch, Apple TV+ is rumoured to only feature a small selection of shows with more to follow as the service ramps up.

Apple TV+ will reportedly launch in 150 countries around the globe once it releases to the public, according to Bloomberg.

The price point in the leak is in USD, and given Apple has offered varied pricing strategies in the past for its subscription services, it’s unclear how much Apple TV+ will cost in Canada. For example, Apple Music is priced at $9.99 in both the U.S. and Canada, while Apple News+ is $3 more in Canada when compared to the cost in the United States.

This release date pits Apple TV+ against Disney+ which is set to release on November 12th for $8.99 CAD per month.

The Bloomberg report claims that the service will launch with The Morning Show, Amazing Stories, See, Truth Be Told and a documentary called Home. A new trailer for The Morning Show recently released.

Surprisingly, For All Mankind, Apple’s alternate take on 1950’s space race, wasn’t mentioned in Bloomberg’s report.

The service will be available through the Apple TV app on a variety of devices. Apple has announced that it’s even releasing a version of the Apple TV app for Samsung TVs, Amazon’s Fire TV platform and Roku. So far only Apple’s devices and Samsung’s smart TVs have the app available, but it’s expected that the other versions will release before Apple TV+’s launch.

A handful of other smart TV manufacturers will also be able to view the content through AirPlay 2, Apple’s wireless video connection standard. This means that if you have a macOS or iOS device, you should be able to stream content from that device to an AirPlay 2 compatible TV.

Earlier today pricing for Apple Arcade leaked out, pegging the cost somewhere between $5 and $7 in Canada.

Source: Bloomberg

The post Apple TV+ reportedly launching in November at $9.99 per month appeared first on MobileSyrup.

Teardown reveals new Nintendo Switch has smaller Tegra X1 chip and better RAM

iFixit just laid its hands on a refreshed Nintendo Switch unit, and the teardown revealed that the Japanese console maker has crammed in more efficient internals.

The new variant now reportedly sports a 16nm Nvidia Tegra X1 chipset as opposed to the older and less efficient 20nm chip.

The refreshed Nintendo Switch console has a shrunk Tegra X1 chip and better RAM to 16nm. The improved Tegra chip should also demonstrate better thermal control, therefore providing better overall performance, according to iFixit.

iFixit believes that the new 16nm chip should also appear in the rumoured new Nvidia Shield TV consoles and even the Switch Lite.

On top of a die-shrink, Nintendo swapped the older LPDDR4 RAM with state-of-the-art LPDDR4X RAM modules found in many 2019 high-end smartphones. According to Samsung, the LPDDR4X should provide up to 15 percent better performance while drawing 17 percent less power compared to the LPDDR4.

Back in July, the South Korean semiconductor giant also announced the next-gen LPDDR5 RAM specification that provides another leap in performance and efficiency.

Thanks to those under-the-hood upgrades, the new Nintendo Switch now enjoys a rated battery life of 4.5 to 9 hours instead of the old 2.5 to 6.5 hours.

Gizmodo also reported Nintendo has given all the refreshed Switch models an improved display to sweeten the deal.

If you are in the market for a brand-new Nintendo Switch console, the newer ones come with a packaging design with a fully red background (and no hands).

Source: iFixit

The post Teardown reveals new Nintendo Switch has smaller Tegra X1 chip and better RAM appeared first on MobileSyrup.

Former Siri head leaves Apple, joins Microsoft to focus on AI

Microsoft has hired the former head of Apple’s Siri division, Bill Stasior.

The hire reflects Microsoft’s move into emerging technologies like artificial intelligence, according to CNBC.

Microsoft established its Artificial Intelligence and Research engineering group in 2016, and since then has focused on making several AI acquisitions to bring in more talent. That includes Bonsai, Lobe and Semantic Machines, the article indicated.

Stasior indicated his new job title by changing his resume on his personal website on August 19th. It now notes that he will be the corporate vice-president of technology in the office of the chief technology officer, Kevin Scott.

The news was first reported by The Information, a San Francisco-based publication.

A spokesperson for Microsoft confirmed the move and indicated that he will be “starting in August.”

“He will work to help align technology strategies across the company,” the spokesperson told CNBC.

Before leaving in May, Stasior was the vice-president for AI and Siri at Apple. He had been with the tech giant since 2012. Prior to his time at Apple, he was a top executive at Amazon and also worked at AltaVista and Oracle.

Source: CNBC

The post Former Siri head leaves Apple, joins Microsoft to focus on AI appeared first on MobileSyrup.

Lucky Mobile adds unlimited data with speeds throttled at 128 Kbps

Bell’s prepaid wireless brand Lucky Mobile has added unlimited data into its plans, one that lines up with others in the market but seems to be the first of the entry-level sub-brands.

In the race to capture new customers, Lucky Mobile has added this new offering, according to its website.

The carrier said for all its plans starting from $25 per month or more: “Unlimited additional data at reduced speeds of up to 128 Kbps for email, light browsing and messaging once you have exceeded your allotted 3G data.”

It should be noted that carriers such as Rogers, Telus, and parent-company Bell speeds are throttled to 512Kbps (for upload and download speeds) for unlimited or infinite data plans.

The top tier plan for Lucky is priced at $50 per month and it offers unlimited Canada and United States calling with unlimited talk and text, and 8GB of data at 3G speeds.

Source: Lucky Mobile

The post Lucky Mobile adds unlimited data with speeds throttled at 128 Kbps appeared first on MobileSyrup.

How to set up a fresh Ubuntu desktop using only dotfiles and bash scripts

One of my most favourite things about open source files on GitHub is the ability to see how others do (what some people might call) mundane things, like set up their .bashrc and other dotfiles. While I’m not as enthusiastic about ricing as I was when I first came to the Linux side, I still get pretty excited when I find a config setting that makes things prettier and faster, and thus, better.

I recently came across a few such things, particularly in Tom Hudson’s dotfiles. Tom seems to like to script things, and some of those things include automatically setting up symlinks, and installing Ubuntu repository applications and other programs. This got me thinking. Could I automate the set up of a new machine to replicate my current one?

Being someone generally inclined to take things apart in order to see how they work, I know I’ve messed up my laptop on occasion. (Usually when I’m away from home, and my backup harddrive isn’t.) On those rare but really inconvenient situations when my computer becomes a shell of its former self, (ba-dum-ching) it’d be quite nice to have a fast, simple way of putting Humpty Dumpty back together again, just the way I like.

In contrast to creating a disk image and restoring it later, a collection of bash scripts is easier to create, maintain, and move around. They require no special utilities, only an external transportation method. It’s like passing along the recipe, instead of the whole bundt cake. (Mmm, cake.)

Additionally, functionality like this would be super useful when setting up a virtual machine, or VM, or even just a virtual private server, or VPS. (Both of which, now that I write this, would probably make more forgiving targets for my more destructive experimentations… live and learn!)

Well, after some grepping and Googling and digging around, I now have a suite of scripts that can do this:

This is the tail end of a test run of the set up scripts on a fresh Ubuntu desktop, loaded off a bootable USB. It had all my programs and settings restored in under three minutes!

This post will cover how to achieve the automatic set up of a computer running Ubuntu Desktop (in my case, Ubuntu LTS 18.04) using bash scripts. The majority of the information covered is applicable to all the Linux desktop flavours, though some syntax may differ. The bash scripts cover three main areas: linking dotfiles, installing software from Ubuntu and elsewhere, and setting up the desktop environment. We’ll cover each of these areas and go over the important bits so that you can begin to craft your own scripts.

Dotfiles

Dotfiles are what most Linux enthusiasts call configuration files. They typically live in the user’s home directory (denoted in bash scripts with the builtin variable $HOME) and control the appearance and behaviour of all kinds of programs. The file names begin with ., which denotes hidden files in Linux (hence “dot” files). Here are some common dotfiles and ways in which they’re useful.

.bashrc

The .bashrc file is a list of commands executed at startup by interactive, non-login shells. Interactive vs non-interactive shells can be a little confusing, but aren’t necessary for us to worry about here. For our purposes, any time you open a new terminal, see a prompt, and can type commands into it, your .bashrc was executed.

Lines in this file can help improve your workflow by creating aliases that reduce keystrokes, or by displaying a helpful prompt with useful information. It can even run user-created programs, like Eddie. For more ideas, you can have a look at my .bashrc file on GitHub.

.vimrc

The .vimrc dotfile configures the champion of all text editors, Vim. (If you haven’t yet wielded the powers of the keyboard shortcuts, I highly recommend a fun game to learn Vim with.)

In .vimrc, we can set editor preferences such as display settings, colours, and custom keyboard shortcuts. You can take a look at my .vimrc on GitHub.

Other dotfiles may be useful depending on the programs you use, such as .gitconfig or .tmux.conf. Exploring dotfiles on GitHub is a great way to get a sense of what’s available and useful to you!

Linking dotfiles

We can use a script to create symbolic links, or symlinks for all our dotfiles. This allows us to keep all the files in a central repository, where they can easily be managed, while also providing a sort of placeholder in the spot that our programs expect the configuration file to be found. This is typically, but not always, the user home directory. For example, since I store my dotfiles on GitHub, I keep them in a directory with a path like ~/github/dotfiles/ while the files themselves are symlinked, resulting in a path like ~/.vimrc.

To programmatically check for and handle any existing files and symlinks, then create new ones, we can use this elegant shell script. I compliment it only because I blatantly stole the core of it from Tom’s setup script, so I can’t take the credit for how lovely it is.

The symlink.sh script works by attempting to create symlinks for each dotfile in our $HOME. It first checks to see if a symlink already exists, or if a regular file or directory with the same name exists. In the former case, the symlink is removed and remade; in the latter, the file or directory is renamed, then the symlink is made.

Installing software

One of the beautiful things about exploring shell scripts is discovering how much can be achieved using only the command line. As someone whose first exposure to computers was through a graphical operating system, I find working in the terminal to be refreshingly fast.

With Ubuntu, most programs we likely require are available through the default Ubuntu software repositories. We typically search for these with the command apt search <program> and install them with sudo apt install <program>. Some software we’d like may not be in the default repositories, or may not be offered there in the most current version. In these cases, we can still install these programs in Ubuntu using a PPA, or Personal Package Archive. We’ll just have to be careful that the PPAs we choose are from the official sources.

If a program we’d like doesn’t appear in the default repositories or doesn’t seem to have a PPA, we may still be able to install it via command line. A quick search for “ installation command line” should get some answers.

Since bash scripts are just a collection of commands that we could run individually in the terminal, creating a script to install all our desired programs is as straightforward as putting all the commands into a script file. I chose to organize my installation scripts between the default repositories, which are installed by my aptinstall.sh script, and programs that involve external sources, handled with my programs.sh script.

Setting up the desktop environment

On the recent occasions when I’ve gotten a fresh desktop (intentionally or otherwise) I always seem to forget how long it takes to remember, find, and then change all the desktop environment settings. Keyboard shortcuts, workspaces, sound settings, night mode… it adds up!

Thankfully, all these settings have to be stored somewhere in a non-graphical format, which means that if we can discover how that’s done, we can likely find a way to easily manipulate the settings with a bash script. Lo and behold the terminal command, gsettings list-recursively.