Yes, it has been a little quiet on Hack Education lately. Even HEWN has gone dormant. I will write some more about the silence soon. Meanwhile... These remarks were made during a guest visit to Dan Krutka's class, "Critical Perspectives on Learning Technologies," at the University of North Texas. (Let me know if you are interested in my speaking to your Zoom school.)

Greetings. Thank you very much for inviting me to speak to you today. You have found yourself enrolled in perhaps the most perfectly and horribly timed class, as you're asked to think about education technology critically, right as the coronavirus crisis plays out across schools and colleges worldwide and as students and teachers are compelled — even more than usual — to turn over their educational experiences to ed-tech.

I want to talk at you briefly about some history of ed-tech — I'll yammer on for about 20 minutes or so, and then I hope we can have a discussion.

But first, a little preface on why history, why now...

I realize that most everyone — particularly on social media — wants to talk about the now or the future. But even with everything currently upside down, I insist that knowing the history of education technology is crucial. History is useful not just because of some "lessons" that we might glean from the past. But whether we actively recognize it or not, where we are today is an outgrowth of history — our systems, our practices, our beliefs. There is no magical "break" from the past, even if it feels like everything right now is different. History informs and shapes us. All our decisions about the now and the future involve some notion of what has come before — even if those notions are wildly incorrect (such as the very common narrative — a favorite of the current Secretary of Education — that schools have not changed in over one hundred years). It's worth correcting these notions, of course. And it's also worthwhile to stop and maybe calm the people who are throwing up their hands right now and insisting that "all this is completely unprecedented!" Because there is historical research and social scientific research about education and about ed-tech — knowledge that can help us think through this moment and moments to come more sensibly.

We do know — that is, we have decades of research that demonstrates — that some ed-tech works okay for some students in some subject areas under some circumstances. We also know that all of those qualifications in that previous sentence — which ed-tech, which students, which subject areas, what sort of circumstances — tend to play out in ways that exacerbate existing educational inequalities. Under normal conditions, ed-tech is no silver bullet. Why the hell would we expect it to be now?!

We also know — that is, we have decades of history that demonstrates — that the push to automate education is neither new nor dependent on how well the software works. Moreover, we know that technology is often called upon to address social and economic and political and yes sure medical crises facing education. Crises like World War I. Polio outbreaks. Sputnik. The rising cost of college. Not enough teachers. Not enough punch card operators. Not enough computer programmers. All occasions when politicians and administrators and business officials (and less often, educators) insisted that technology — machines that teach — would save us. We know that, as historian Larry Cuban wrote almost twenty years ago, computers — the latest teaching machines — have been "oversold"; their promises largely unfulfilled.

I want to tell you a story about computer-assisted instruction (CAI) in the 1960s. I want to do so, in part, because this actually isn't a story I tell in my forthcoming book, Teaching Machines, and I feel like getting some of this down on paper. And I want to do so, in part, because I think it's worth thinking about how an idea that is almost a century old now is still being heralded as revolutionary. And finally, I want to tell this particular story about computer-assisted instruction because I think there are several important strands that I hope this class has prepared you to pick up on: I want you to think about who is the target population for this ed-tech intervention; I want you to think about the space — the physical space — in which this intervention occurs and what it means and what it feels like to work there; and I want you to think about how this technology imagines the labor of teaching and learning and how it constructs both the learning and the learner.

In 1962, Patrick Suppes, whose name is one of those most closely associated with the development of CAI, and his fellow Stanford professors, Richard Atkinson (who'd later become the president of the University of California) and William Estes, received a million-dollar grant from the Carnegie Corporation — that's about $8 million in today’s dollars — to study the computer-assisted teaching of math and to construct an automated computer-based program.

1962 was, of course, before the advent of the personal computer, but at Stanford, Suppes and his team had access to an IBM 7090 and a PDP-1, two mainframe computers. In their laboratory, they established six "learning stations" where first-grade students were brought in five days a week for 20-minute sessions to work on a computer-based math curriculum. The logistics were obviously challenging, and so the project was soon moved off the Stanford campus and into the students' school — but that too was easier said than done. You couldn't put a giant mainframe computer in an elementary school building, although it was possible to install "dumb" terminals that could connect back to the mainframe via a phone line. First, you had to convince the phone company that it was okay to send signals over the wire this way. And then you had to find room for the terminals — remember, the "baby boom" had left many schools overcrowded. The only free space for terminals: the storage closet. So that's where the students were sent, one at a time, to work on their math lessons — "drill and kill" type exercises.

In his 1964 report to his funder, Carnegie, Suppes explained how the teletype, a modified typewriter keyboard and ticker-tape printer connected to the Stanford mainframe, was used daily at Walter Hays Elementary School in Palo Alto (one of the highest-ranking elementary schools in the state of California, it's worth noting):

We are "on the air" for about 2 1/2 to 3 hours with a class of 40 students and we attempt to process all 40 students during that period. Each student is at the teletype terminal from 2 to 5 minutes. They are very efficient about it, not losing more than 20 or 30 seconds in arriving at the terminal or leaving it. We ask them to begin by typing in their names and hitting the return key. Timing on the rest of the drill and practice work is controlled by the program. What we are finding is that when detailed and objective control of the environment is possible, we can hope to train a student to a level of accuracy and perfection of response that is very difficult to achieve in a classroom environment.

(For the sake of time, I am going to skip over a whole discussion here about B. F. Skinner and operant conditioning and the training of children and the training of pigeons. But suffice it to say, do not let anyone try to tell you that computer-assisted education rejected behaviorism and embraced cognitive science.)

When the student entered their name on the teletype, the computer would pull up their file and determine the exercises to give them based on how they'd done the previous day — the concept to work on — say, least common multiples — as well as the level of difficulty. These exercises typically consisted of twenty questions, and students had ten seconds to answer each one. If they answered incorrectly, the computer would provide the correct answer. At the end of the student's time at the machine, the teletype would print out the student's score, along with the average of previous sessions. It would conclude with a friendly farewell: "GOOD BYE, O FEARLESS DRILL TESTER." And then "TEAR OFF ON DOTTED LINE" — the student would grab the receipt from their session.

Suppes argued — as had earlier proponents of teaching machines — that, in this way, computers would "individualize" education. "The computer makes the individualization of instruction easier," he wrote, "because it can be programmed to follow each student's history of learning successes and failures and to use his past performance as a basis for selecting new problems and new concepts to which he should be exposed next." The computer, he believed, would act as a tutor — a personal tutor — and take over classroom instruction from the teacher. He predicted in a 1966 article for Scientific American that "in a few more years millions of school children would have access to what Philip of Macedon's son Alexander enjoyed as a royal prerogative: the personal services of a tutor as well-informed and responsive as Aristotle."

By 1967, Suppes' computer-based curriculum was in use in seven schools in California. The first city in which every elementary school student learned math through his computer-based system, Suppes boasted, was McComb, Mississippi — a far cry from the elementary school down the street from Stanford. "Computer assisted instruction may well be a technique suited for closing the educational gap," according to a 1969 report on the project in McComb, which added that drill-and-kill exercises via computer might not be suitable for more advantaged (read: white) students.

In 1967, Suppes founded a company, the Computer Curriculum Corporation (CCC), which sold its "basic skills" courseware, along with IBM mainframe computers and terminals, to elementary schools, securing a large contract in its first year with the Chicago Public Schools expressly to provide remediation to struggling students. Suppes' company also struggled. As you can imagine, this all required an incredible financial investment from school districts. Computer-based education didn't save money; it actually cost more. Critics decried the computer as simply a "thousand dollar flash card." And frankly, the results just weren't that spectacular: students who used computer-assisted instruction did about as well as students who received traditional teacher-based instruction. (Incidentally, Suppes' company did not go out of business, thanks in part to an influx of federal dollars that supported programs offering remediation for disadvantaged students — by the late 1960s, urban renewal had replaced Sputnik as the educational crisis that warranted technological intervention. Computer Curriculum Corporation was sold to Simon & Schuster in 1990.)

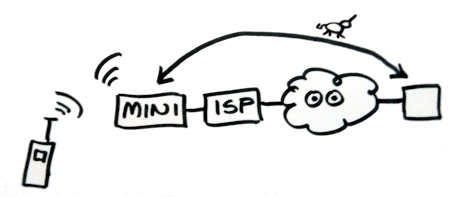

In addition to installing terminals in schools, CCC offered another product called Dial-a-Drill. Rather than a teletype, Dial-a-Drill used the telephone: it would call the student at home and pose questions that the student would then answer by pushing the buttons on the telephone. Let me repeat that — the program called the student; the student did not call the program. In some cases, it would also call the parent to drill them on mathematical concepts.

A Computerworld article in 1970 touted the progress students in New York City made after receiving three five-minute drills per week. “One reason for the one-year-old program’s apparent success,” the article proclaimed, “is that a student cannot 'fail' in the conventional sense. The DEC PDP-8/I automatically adjusts the drills to fit the level of difficulty at which the student may best operate. When a student has demonstrated mastery at one of the 25 levels, the computer moves him up a notch.” Echoing what has been the promise of education technology since at least the 1920s, the machine would enable students to move at their own pace, delivering lessons and quiz questions at the right skill level so as to minimize errors.

Although Suppes boasted that Dial-a-Drill could teach math, reading, foreign language, and computer programming, there were obvious limitations to how any subject could be taught using the system. It was, as the name suggested, designed for drill exercises. But drills — more drills more often — were precisely what many in the “back to basics” movement at the time were calling for students to do — less of that "new math" and more repetition of basic skills.

The zeal for computer-based instruction broadly and for Dial-a-Drill specifically did not last. An op-ed in InfoWorld in 1980 questioned whether these sorts of exercises were what computers should be used for. "One of the biggest potentials for personal computers is taking education out of the school and putting it back in the home," InfoWorld argued. "Certainly programs like Dial-a-Drill will be one of the options available — an easy way for parents to turn over the responsibility for part of their children's education to a remote computer. What we must insure is that the potential benefits of this high-tech hickory stick do not come at the expense of more innovative educational software."

Of course, computer-assisted instruction never really went away; it re-emerged with the personal computer; it re-re-emerged with mobile devices. And I think we should ask too whether or not it's actually gotten any better, whether or not we are still surrounded by a bunch of "high-tech hickory sticks." And who gets the hickory stick, and who gets the "more innovative educational software"?

Even before the advent of "remote learning" this spring, we could also see the ways in which, as that InfoWorld op-ed gleefully predicted, educational computing was creeping into the home. As a replacement for school? I don't think so, not for the vast majority of students. An extension of school? Maybe. An extension of ed-tech corporations' reach? Definitely. So now, with students at home, online, it's more important than ever to think about what this creep might mean — particularly for disadvantaged students, particularly for educators.
