Rolandt

Shared posts

Background

Deep Learning (Robot Dreams 2), by Ren Warom

Deep Learning by Ren Warom is the second of four novellas in which different authors explore what robots might dream of. Do androids dream of electric sheep? In this novella, Niner only works because of a glitch. Through its various ‘life’ stages it fights internal insanity caused by the information overload from permanent entanglement with the minds of everyone who has ever commanded it, while its communication is limited to commands and responses. Outwardly, Niner seems to work well, mostly because the internal vortex is invisible to humans. The story is set in a post-climate-urgency world where everyone fights over the last bits of livable land in the USA.

Questlove's Prince Tribute

There could be no more fitting remembrance of Prince than Questlove going deep into his catalog and spinning some of Prince's greatest works into a multi-hour DJ set. Unless Quest did that five nights in a row. Which he did! It was an absolutely monumental marathon of nightly live streams for the better part of a week, often ending in the wee hours of the morning.

hey Prince newbies: say thank you to @anildash & @IAmMissTLC https://t.co/cleMeKHBwN

— Hold On Be Strong (@questlove) April 21, 2020

So, Miss TLC and I felt obligated to do justice to that herculean effort by documenting the sets as they happened, adding live liner notes covering everything from the song titles (responding to the incessant queries of "what song is this?" in the Instagram comments) to never-before-known bits of trivia shared by some of Prince's collaborators who dropped in to witness these already-legendary sets.

I hope you'll take a moment to read our notes, to listen to Quest's sets as you're able, and to remember one of the bravest and most inspiring artists we've ever gotten to enjoy. And if the spirit moves you to explore Prince's catalog for yourself, here are a few great places to start, along with every single video Prince ever made.

Oh, and if you're a geek? I wrote up a whole article on how I made the app to do all these live liner notes — in the 20 minutes before Quest started his set. Please do give it a look!

Covid Concept Generator

To save everyone some time, here’s a generator for the next five years of conceptual advances in social theory. Choose once at random from each column to secure your contribution.

| Column 1 | Column 2 |
|---|---|
| Sequenced | Stratification |
| Algorithmic | Differences |
| Automated | Capital |
| Robust | Contagion |
| COVID | Masking |
| Epidemiologic | Others |
| Viral | Politics |
| Rhizomatic | Inequality |
| Infectious | Sexualities |
| Compartmentalized | Classification |
| Pandemic | Causality |
| Epizootic | Discrimination |
| Transmissible | Polarization |
| Leucocyte | Paradox |
| Intersectional | Bodies |
| Corona | Disparities |
| Liquid | Isomorphism |
| Genomic | Populism |
| Nucleotide | Interdependence |
| Masked | Colorism |
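The recipe is simple enough to automate. Below is a tongue-in-cheek Python sketch of ‘choose once at random from each column’, using the twenty entries in each column of the table. The function name `generate_concept` is made up for this example. Twenty entries per column gives 20 × 20 = 400 possible concepts.

```python
import random
from typing import Optional

# The two columns from the table, in order.
COLUMN_1 = [
    "Sequenced", "Algorithmic", "Automated", "Robust", "COVID",
    "Epidemiologic", "Viral", "Rhizomatic", "Infectious", "Compartmentalized",
    "Pandemic", "Epizootic", "Transmissible", "Leucocyte", "Intersectional",
    "Corona", "Liquid", "Genomic", "Nucleotide", "Masked",
]
COLUMN_2 = [
    "Stratification", "Differences", "Capital", "Contagion", "Masking",
    "Others", "Politics", "Inequality", "Sexualities", "Classification",
    "Causality", "Discrimination", "Polarization", "Paradox", "Bodies",
    "Disparities", "Isomorphism", "Populism", "Interdependence", "Colorism",
]


def generate_concept(rng: Optional[random.Random] = None) -> str:
    """Pick one word from each column, independently and uniformly at random."""
    rng = rng if rng is not None else random.Random()
    return f"{rng.choice(COLUMN_1)} {rng.choice(COLUMN_2)}"


if __name__ == "__main__":
    # A seeded generator makes the contribution to social theory reproducible.
    print(generate_concept(random.Random(2020)))
    print(len(COLUMN_1) * len(COLUMN_2), "possible concepts")
```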

Life under COVID-19: May 9

I’m not going to be updating my plot of the total number of COVID-19 cases anymore. There is not too much more to be learned.

You can see that the number of cases per capita for Canada is now higher than for Iran, and that the US has passed Italy. The curves for the US and Canada track each other, with the numbers for Canada about half of those for the US. More distressing is that the number of deaths per capita for Canada is rising relative to the US. Earlier this spring, the Canada:US ratio was about 1:10. Now it is more than 1:2, i.e. about the same ratio as for the number of cases. This CBC article talks about the narrowing of the gap. The other big issue for Canada is that about 65-75% of the fatalities are associated with long-term care homes, the highest such percentage among OECD countries. The corresponding figure for the US is only about one third.

The best source of international statistics remains the Financial Times page. Here are a couple of figures from that page.

The caption that goes with this figure says that the number of new cases is declining globally, but the worrisome thing is the increasing fraction coming from places like Brazil. It is also not clear how up to date the data from Africa, India, Russia, and some other places are.

Nor does this chart reflect the fact that poorer countries generally have much lower testing rates. The outlier is Japan, a wealthy country that also lags severely in testing (currently at about the same rate as Brazil, 1,600 tests per million population; the same figure for the US or Canada is about 26K). The fact that the curve for Japan in the first figure is flattening again may indicate some degree of success in contact tracing, but with such limited testing of the population at large, it is hard to tell.

Their charts for the total number of deaths and cases have changed format. Here is the number of deaths per day.

If we are optimistic and assume that the curves have peaked and will decline somewhat symmetrically, then the total number of deaths will be about double the current tally. For the US, this yields a prediction of about 140K (yesterday, Trump predicted a total of about 95K). For Canada, it would be about 9K for the first wave. The federal government has thrown out numbers between 10K and 200K, depending on how social distancing, etc., are managed in the future. I believe these numbers also take into account more than just “the first wave” of fatalities.
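The doubling rule rests on symmetry. If the daily-deaths curve falls the same way it rose, the deaths still to come after the peak equal the deaths already counted up to it. Below is a minimal Python sketch of that reasoning. It uses a synthetic bell-shaped curve, not any real data. The curve shape, its parameters, and the function names are assumptions for illustration only.

```python
import math
from typing import List


def symmetric_daily_curve(days: int, peak_day: int, width: float) -> List[float]:
    """Synthetic bell-shaped daily counts, mirror-symmetric about peak_day.

    Illustrative only; this is not fitted to any real COVID-19 data.
    """
    return [math.exp(-(((day - peak_day) / width) ** 2)) for day in range(days)]


def projected_total(tally_at_peak: float) -> float:
    """If the decline mirrors the rise, the total is about twice the tally at the peak."""
    return 2 * tally_at_peak


# A 121-day curve peaking on day 60 is symmetric end to end.
curve = symmetric_daily_curve(days=121, peak_day=60, width=15.0)
total = sum(curve)
# Everything before the peak, plus half of the peak day itself,
# since the peak day straddles the rise and the fall.
tally_at_peak = sum(curve[:60]) + curve[60] / 2

print(f"actual total:  {total:.4f}")
print(f"doubled tally: {projected_total(tally_at_peak):.4f}")

# The post's round numbers: a US tally near 70K doubles to about 140K.
print(projected_total(70_000))  # prints 140000
```

The same arithmetic applies to the Canadian figure: a tally of roughly 4.5K doubles to about 9K. The estimate holds only if the decline really is symmetric. A long, slow tail after the peak would push the real total higher.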

The curves for the number of new cases per day look like this:

The US seems to have plateaued, whereas the numbers for Canada continue to increase slowly (mainly due to Quebec and, to a lesser extent, Ontario). It will be interesting to see new trends as various lockdown restrictions are lifted in both the US and Canada.

Of course, it being only May, it is snowing a bit this morning.

Between the cold and the high winds, I don’t think that I’ll be riding today.

(Well, I decided to run some errands anyway.)

Stay safe, everyone!

Beyond Belief

This is another post on the subject of radical non-duality. Caveat lector.

image from a reproduction of a destroyed neon work by artist Joe Rees; photo by Steve Rhodes CC BY-NC-SA 2.0

I’ve been thinking about how and why we come to believe what we believe.

Wild creatures, I think, have it right: They believe what they observe, what their senses tell them. Such ‘self-evident’ truths are, literally, obvious.

All creatures are conditioned by their experiences, and for most creatures those experiences are delimited by what their senses tell them has happened. As Melissa Pierson explains: “The same law of behavior affects all creatures’ actions: we do something, it produces pleasure or it produces pain or it produces nothing, and the result determines whether we continue doing it, stop doing it, or do it differently, and these are the only options.”

Whether “it produces pleasure or it produces pain or it produces nothing” is an assessment, for most creatures, based entirely on their observed experience. But when language enters the picture our beliefs can quickly get very complicated. Suddenly we are pressed to believe not only what our own experiences and observations have led us to believe, but also what others we trust (scientists, journalists, and those we have personal relationships with) have observed or experienced.

So when, as small children, we observe that the sun circles the earth but are told it’s the other way around, we have to balance the two to decide what we believe. And there are other things we’re told are true (e.g. religious and moral beliefs) that usually can’t be shown to be true from observation or experience, but which almost everyone we know and trust asserts to be true, based not on evidence but on anecdote or just on faith. Now deciding what to believe gets even harder, and more tenuous.

I used to believe in good and evil, in progress, in humans’ vast capacity to make things better, in the standard cosmological theory of space-time, matter and energy and their origins, in evolution and Gaia theory, in human self-consciousness as an evolutionary advance, in free will and personal responsibility, in life having meaning and purpose.

All of these beliefs were conditioned by my observations and experiences, by trusted others’ observations and experiences, and by what trusted others believed even though they were not subject to direct observation and experience. Our large complex brains, after all, are essentially sense-making machines, and beliefs are how they make sense of things that haven’t been, or can’t be, absolutely known.

So how is it that those creatures whose actions and beliefs are based solely on personal observation and experience seem so much more adept at living successfully and equanimously than our destructive and unhappy species?

I’m the stereotypical Doubting Thomas, always challenging what I’m told and what I believe (I have a poster behind me on Zoom calls that reminds me: “Don’t believe everything you think.”) From a very young age I’ve had a nagging feeling there’s something very wrong with how we humans live, and what we believe, but I couldn’t quite put my finger on it. Surely, I keep asking myself, life shouldn’t be this hard, this complicated, this stressful and confusing. All this bewildering complexity, just to survive and struggle in a world where other creatures have thrived for hundreds of millions of years without having to know any of it?

What, I’ve asked myself, if it isn’t actually that hard? What if all of this new knowledge of what to believe and what you ‘should’ do is not actually true, or real, or even necessary? What if what’s actually true is not obvious, and not scientifically verifiable, and does not have to be taken on faith?

This is where I landed when I began exploring radical non-duality. The more I’ve studied and learned, the more I’ve been filled with doubt about what I believe. Astrophysics and quantum science, it turns out, seem to make more sense if there is no such thing as time, and if what we observe as space, and things in it, are actually merely appearances in an infinite field of possibilities. In other words, that everything we call “real” is just an invention of a brain desperately trying to make sense of everything, and hence mistaking a constructed model of reality for the “real” thing.

Neuroscience, at the same time, now seems to posit that there is no such thing as a “self” (the homunculus inside our heads is nowhere to be found), and that there is no such thing as free will. In other words, there is no “you”, and everything “you” seemingly do and believe is simply a conditioned response. Not predetermined, mind you, as an infinite number of unpredictable variables can affect your conditioning, but what you do and believe is nonetheless determined completely by that conditioning. “You” have no say in it.

No time, no space, no free will or choice, and no “you” — this is a loathsome and abhorrent possibility for creatures that seem driven to make sense, to know, and hence to make things better. I did not want to hear this message. It makes no sense. I refused to listen to it for years. It struck me as anti-intuitive, counter to what was observable (I was a phenomenologist), anti-science, unprovable (the ultimate condemnation, to me), and utterly useless.

Two things finally forced me (as I evidently have no free will!) to open myself to the heresy that this possibility, the opposite of all I believed, might actually be true.

The first of these were the glimpses. There have been times when ‘I’ seemingly disappeared, and it was somehow ‘obvious’ (though not to ‘me’ as ‘I’ was not there) that there was only everything, eternal and already perfect, and that this ‘everything’ was just an appearance out of nothing. When the glimpses ended and ‘I’ returned, they were recalled as being wonderful, mystical experiences, but I dismissed them as daydreams, disconnected from reality.

But more recently they have come across differently, as a ‘seeing through’ of the illusions that have dominated my life. It was ‘obvious’ that what was glimpsed was not only true (and everything I had believed to be real and true was false), but always and already there; there was no anxiety about ‘holding on’ to this glimpsed reality because it was already there and already everything, complete. The veil of my self and my separate existence were seen for what they were: illusory and transient, of no importance whatsoever.

I had heard and read descriptions of this from many people, from all walks of life, all over the world, some of them dating back millennia, and while what they were saying varied enormously in how (and how articulately) it was said, it was absolutely clear that they were all describing the same thing. And the reason for the inarticulateness was that what they were describing cannot be described.

The second, more recent discovery is that there are (apparent) people all around us who no longer have (or never had) the illusion of self or separation, but who don’t seem different (or at all dysfunctional) because of it. To the extent it is in their nature to talk about this, I have called them ‘radical’ non-dualists to differentiate them from non-duality ‘teachers’. Almost all of what claims to be ‘non-dual teaching’ is actually utterly dual — it is describing the nature of separation and what can be done to overcome it and become ‘enlightened’ or ‘liberated’ or achieve some ‘higher state’ or other nonsense.

Only a small number are saying what is obvious after a glimpse — that there is no one, no separation, no time (or past or present or ‘now’), no space, no thing ‘real’ at all — just ‘one-ness’ appearing freely as everything, for no reason, always and already. This is excruciatingly difficult to convey — as it cannot be described, this message has to be explained mostly by saying what is not, rather than what is. ‘Meetings’ with radical non-dual messages are just now starting to evolve a common approach and vocabulary to try to articulate this unfathomable message, and to reinforce that all alleged ‘paths’ to seeing this are inherently misleading and futile, and that there is nothing ‘you’ can do to see that there is no ‘you’. There is no ‘teaching’ by the hopeless messengers of radical non-duality.

This is not a ‘theory’ — it is what glimpses show, and what those apparent characters who lack the illusion of self or separation describe, as being obviously, eternally, and already true. They have no axe to grind, nothing to promote, and nothing to gain from conning us.

No ‘one’ can really believe this. It asserts the non-existence of anyone who can believe anything. This message makes no sense. Yet somehow, despite the staggering cognitive dissonance it presents, it is, at least for now, believed here. It has rendered all my other beliefs moot, though this well-conditioned character continues, apparently, to act in accordance with the ‘old’ beliefs. It’s not that I passionately believe this message, a message that makes no sense. It’s more that I can no longer believe in anything else — nothing else makes sense anymore either.

I’m learning to be careful about what I believe. My beliefs have caused me much grief and stress, and they make poor place-holders for what I do not, based on my own direct observation, know to be true. I watch the wild creatures in the forest below my house, and envy their obvious freedom from the need to believe.

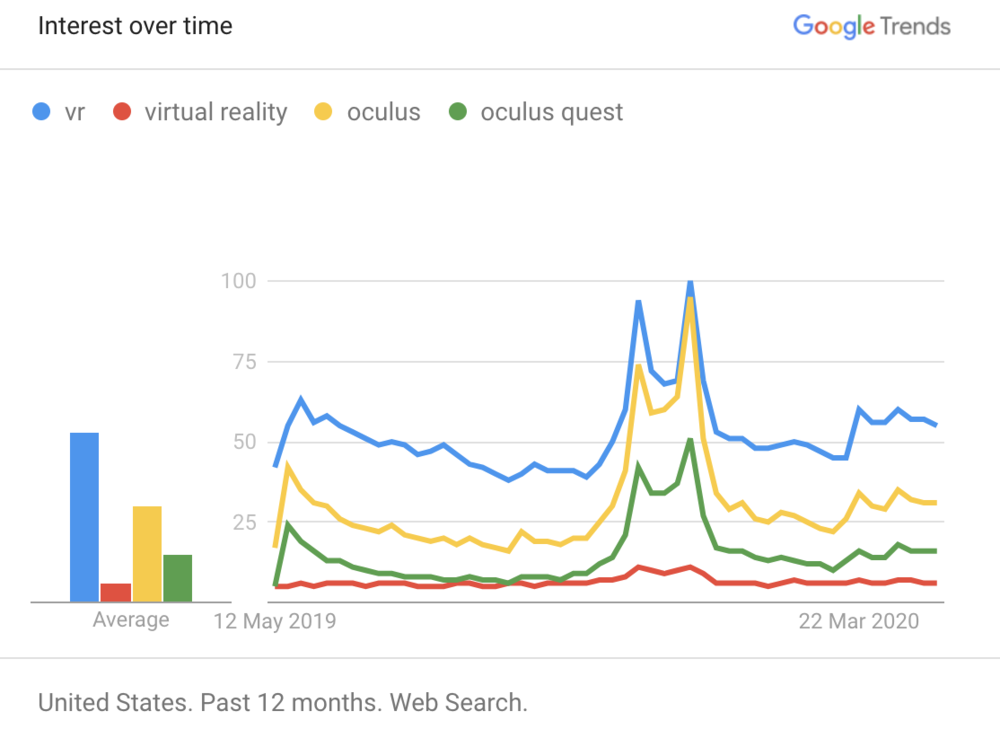

The VR winter

“Our vision is that VR / AR will be the next major computing platform after mobile in about 10 years. It can be even more ubiquitous than mobile - especially once we reach AR - since you can have it always on… Once you have a good VR / AR system, you no longer need to buy phones or TVs or many other physical objects - they can just become apps in a digital store.” - Mark Zuckerberg, 2015 (Source)

We tried VR in the 1980s, and it didn’t work. The idea may have been great, but the technology of the day was nowhere close to delivering it, and almost everyone forgot about it. Then, in 2012, we realised that this might work now. Moore’s law and the smartphone component supply chain meant that the hardware to deliver the vision was mostly there on the shelf. Since then we’ve gone from the proof of concept to maybe three quarters of the way towards a really great mass-market consumer device.

However, we haven’t worked out what you would do with a great VR device beyond games (or some very niche industrial application), and it’s not clear that we will. We’ve had five years of experimental projects and all sorts of content has been tried, and nothing other than games has really worked.

Meanwhile, it’s instructive that now that we’re all locked up at home, video calls have become a huge consumer phenomenon, but VR has not. This should have been a VR moment, and it isn’t.

Does that tell us anything? Surely if a raw experience is amazing, the applications will come with a bit more time? Well, perhaps. If you try the Oculus Quest, the experience is indeed amazing and it’s easy to think that this is part of the future. However, if you’d tried one of today’s games consoles in 1980 you’d have had the same reaction - clearly amazing and clearly part of the future. But it turned out that games consoles were a 150-200m unit installed base, not the 1.5bn of PCs, let alone the 4bn of smartphones. That’s a big business, but it’s a branch off the side of the tech industry, not its central, driving ecosystem. Most people’s experience of console games is the demo in the window of a Microsoft store in the mall - they say ‘that’s pretty’ and walk past. A long time ago a school teacher named Hammy Sparks (yes, really) blew my mind by suggesting that there can be different sized infinities - in tech, there can be different sized amazings.

Smartphones are broad and universal, whereas consoles are deep and narrow, and deep and narrow is a smaller market. VR is even deeper and even narrower, and so if we can’t work out a form of content that isn’t also deep and narrow, I think we have to assume that VR will be a subset of the games console. That would be a decent business, but it’s not why Mark Zuckerberg bought Oculus. It’s another branch off the side of tech, not the next platform after smartphones.

There’s a bunch of ideas that float around here. One is that you can’t really do apps and productivity yet because the screens aren’t high enough resolution to read text, so we can’t yet work in a 360 degree virtual sphere, and that will come. Another is that the headsets need to be even smaller and even lighter, and do pass-through so you can see the room around you. Yet another is that we just have to keep waiting, and in particular wait for a larger installed base (presumably driven by those deep-and-narrow games sales), and the innovation will somehow kick in.

There’s nothing fundamentally illogical about any of these ideas, but they do remind me a little of Karl Popper’s criticism of Marxists - that when asked why their supposedly scientific prediction hadn’t happened yet they would always say ‘ah, the historical circumstances aren’t right - you just have to wait a few more years’. There is also, of course, the tendency of Marxists to respond to being asked why communist states seem always to turn out badly by saying ‘ah, but that isn’t proper communism’. I seem to hear ‘ah, but that isn’t proper VR’ an awful lot these days.

To put this another way, it’s quite common to say that the iPhone, or PCs, or aircraft also looked primitive and useless once, but they got better, and the same will happen here. The problem with this is that the iPhone or the Wright Flier were indeed primitive and impractical, but they were breakthroughs of concept with clear paths for radical improvement. The iPhone had a bad camera, no apps and no 3G, but there was no reason why those couldn’t quickly be added. Blériot flew across the Channel just six years after the Wrights’ first powered flight. What’s the equivalent forward path here? There was an obvious roadmap for getting from a duct-taped mock-up to the Oculus Quest, and today for making the Quest even smaller and lighter, but what is the roadmap for breaking into a completely different model of consumer behaviour or consumer application? What specifically do you have to believe will change to take VR beyond games?

Poking away at this a bit further, I think there are maybe four propositions to think about.

Is it true that we are essentially almost there, and a bit more iteration of the hardware and the developer ecosystem will get us to a tipping point, and the S Curve will turn upwards? How would we know?

Are we where smartphones were before the iPhone? All of the core technology was there - we had apps and touch screens and fast data networks and so on - but we needed a change in the paradigm to package them all up in a much more accessible form. Is Oculus the new Symbian? It’s worth noting that no-one was really saying this about mobile before the iPhone - as I wrote here, the need for a new approach was only obvious in hindsight.

Is there a fundamental contradiction between a universally accessible experience and a device that you put on your head that shuts out the world around you and places you into an alternative reality? Is ‘VR that isn’t deep and narrow’ an oxymoron? That, after all, was the answer for games consoles. I suspect a lot of people in tech would reject this out of hand - the right VR, when we have it, must be the future, but one can’t actually take it as a given.

Or, by extension, is this the point - that ‘real’ VR needs some completely different device and that’s what would take it to universality? VR as HMD is narrow but VR as, say, neural lace is not?

Reading Mark’s quote above, as he talks about the merging of AR and VR, it strikes me that this and many visions for VR (cf. ‘Ready Player One’) are really describing not ‘an HMD but a bit better’ but glasses, or perhaps contact lenses, or maybe something even further into the future like neural implants. On that basis I think you could argue that even the Oculus Quest is not 3/4 of the way ‘there’ but actually still just at the beginning of the VR S Curve. The successor to the smartphone will be something that doesn’t just merge AR and VR but makes the distinction irrelevant - something that you can wear all day every day, and that can seamlessly both occlude and supplement the real world and generate indistinguishable volumetric space. On that view the Oculus isn’t the iPhone - it’s the Newton, or the Apple 2, which were also far from universal, and the platonic ideal universal device is a decade or two into the future.

In turn, the trouble with this argument is that when tech people talk about ‘ten years’ or ‘twenty years’, they are effectively right on the edge of science fiction - my grandfather wrote a lot of science fiction, but I try to think about the stuff we have now, and the roadmaps we have now that might tell us what we can build next. But if ‘real VR’ needs something that’s ten or twenty years away, we’re in for another VR winter.

Pulling all of these threads together, the issue I circle around is not just that we don’t have a ‘killer app’ for VR beyond games, but that we don’t know what the path to getting one might be. We can make assertions of belief from first principles - there was no killer app for the first PCs either, but they looked useful. When I started my career 3G was the hot topic, and every investor kept asking ‘what’s the killer app for 3G?’ It turned out that the killer app for having the internet in your pocket was, well, having the internet in your pocket. But with each of those, we knew what to build next, and with VR we don’t. That tells me that VR has a place in the future. It just doesn’t tell me what kind of place.

Choose One

A few days ago, in Figuring the Future, I sourced an Arnold Kling blog post that posed an interesting pair of angles toward outlook: a 2×2 with Fragile <—> Robust on one axis and Essential <—> Inessential on the other. In his sort, essential + fragile are hospitals and airlines. Inessential + fragile are cruise ships and movie theaters. Robust + essential are tech giants. Inessential + robust are sports and entertainment conglomerates, plus major restaurant chains. It’s a heuristic, and all of it is arguable (especially given the gray along both axes), which is the idea. Cases must be made if planning is to have meaning.

Now, haul Arnold’s template over to The U.S. Labor Market During the Beginning of the Pandemic Recession, by Tomaz Cajner, Leland D. Crane, Ryan A. Decker, John Grigsby, Adrian Hamins-Puertolas, Erik Hurst, Christopher Kurz, and Ahu Yildirmaz, of the University of Chicago, and lay it on this item from page 21:

The highest employment drop, in Arts, Entertainment and Recreation, leans toward inessential + fragile. The second, in Accommodation and Food Services, is more on the essential + fragile side. The lowest employment changes, from Construction on down to Utilities, all tend toward essential + robust.

So I’m looking at those bottom eight essential + robust categories and asking a couple of questions:

1) What percentage of workers in each essential + robust category are now working from home?

2) How much of this work is essentially electronic? Meaning, done by people who live and work through glowing rectangles, connected on the Internet?

Hard to say, but the answers will have everything to do with the transition of work, and life in general, into a digital world that coexists with the physical one. This was the world we were gradually putting together when urgency around COVID-19 turned “eventually” into “now.”

In Junana, Bruce Caron writes,

“Choose One” was extremely powerful. It provided a seed for everything from language (connecting sound to meaning) to traffic control (driving on only one side of the road). It also opened up to a constructivist view of society, suggesting that choice was implicit in many areas, including gender.

Choose One said to the universe, “There are several ways we can go, but we’re all going to agree on this way for now, with the understanding that we can do it some other way later, thank you.” It wasn’t quite as elegant as “42,” but it was close. Once you started unfolding with it, you could never escape the arbitrariness of that first choice.

In some countries, an arbitrary first choice to eliminate or suspend personal privacy allowed intimate degrees of contact tracing to help hammer flat the infection curve of COVID-19. Not arbitrary, perhaps, but no longer escapable.

Other countries face similar choices. Here in the U.S., there is an argument that says “The tech giants already know our movements and social connections intimately. Combine that with what governments know and we can do contact tracing to a fine degree. What does privacy matter if in reality we’ve lost it already and many thousands or millions of lives are at stake, and so are the economies that provide what we call our ‘livings’? This virus doesn’t care about privacy, and for now neither should we.” There is also an argument that says, “Just because we have no privacy yet in the digital world is no reason not to have it. So, if we do contact tracing through our personal electronics, it should be disabled afterwards and obey old or new regulations respecting personal privacy.”

Those choices are not binary, of course. Nor are they outside the scope of too many other choices to name here. But many of those are “Choose Ones” that will play out, even if our choice is avoidance.

The Ideal iPhone App First-Run Experience Is None At All

Consider the person who finds your app on the iOS App Store. They decide they want your app: they tap the get or purchase button.

The app downloads (hopefully quickly; hopefully you’ve made it small, because you’ve pictured this moment and you care about even this aspect of the user experience), and then the user taps the Open button.

They’ve already waited to see the app. They’re excited to see what it’s like and get started using it!

And, now that it’s here, you can put a bunch of obstacles in their way — which will cause you to lose some of these people — or you can satisfy their interest and curiosity right away by getting them into the app.

* * *

Here’s me: when I download an app with a first-run tutorial, I try to find a way to short-circuit it and get to the actual app. If I can’t, I just race through it, knowing I wouldn’t have remembered any of it anyway.

Either I can figure out the app later or I can’t.

Similarly, if an app has first-run setup to do, I try to avoid it. If it has first-run setup and a tutorial, I’ll just give up unless I know for absolute sure that I want this app.

* * *

Isn’t there some quote, maybe even from Steve Jobs, about apps early in the day of the App Store, that went something like this? “iPhone apps should be so easy to use that they don’t need Help.”

I’ve always thought to myself, since then, that if I see a first-run tutorial, they blew it. Apps should be designed so that you can figure out the basics quickly, and then find, through progressive disclosure, more advanced features.

It seems to me that the best first-run experience is to get people into the app as quickly as possible, because that’s where they want to be.

They’ve already waited long enough — finding the app, downloading it — and now you want to delay the joy even longer, and thereby tarnish or even risk it? Don’t do it!

Remember that people are busy, often distracted, and there are zillions of other apps. Your app is not the world. The person is the world.

* * *

Also:

Remember that every single thing in your app sends a message. A first-run tutorial sends the message that your app has a steep learning curve. Definitely a turn-off.

It provokes anxiety in the user immediately, in two related ways: 1) “Will I ever be able to learn this apparently hard-to-use app?” and 2) “Will I remember any of this tutorial at all? Do I need to get out pen and paper and take notes?”

And that’s the first impression. Your app makes the user anxious.

pyp: Easily run Python at the shell


Fascinating little CLI utility which uses some deeply clever AST introspection to enable little Python one-liners that act as replacements for all manner of pipe-oriented unix utilities. Took me a while to understand how it works from the README, but then I looked at the code and the entire thing is only 380 lines long. There's also a useful --explain option which outputs the Python source code that it would execute for a given command.

Via Show HN
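The general trick can be sketched with Python’s standard `ast` module: inspect the one-liner’s syntax tree, work out which names it uses without defining, and wrap it in the right scaffolding. This is not pyp’s implementation. The convention that a free `x` means the current line and `lines` means all input lines is an assumption made for this sketch, and `free_names`, `build_program`, and `run` are hypothetical names. `build_program` returns the generated source, in the spirit of the `--explain` option.

```python
import ast
import builtins
import contextlib
import io
from typing import Iterable, Set


def free_names(source: str) -> Set[str]:
    """Names the snippet reads but never assigns, ignoring Python builtins."""
    loaded: Set[str] = set()
    stored: Set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Load):
                loaded.add(node.id)
            else:  # Store or Del contexts count as definitions here
                stored.add(node.id)
    return {name for name in loaded - stored if not hasattr(builtins, name)}


def build_program(source: str) -> str:
    """Turn a one-liner into a full program (hypothetical x/lines convention)."""
    snippet = source.strip()
    uses_x = "x" in free_names(snippet)
    body = snippet
    tree = ast.parse(snippet)
    # A lone expression such as `x.upper()` should have its value printed.
    if len(tree.body) == 1 and isinstance(tree.body[0], ast.Expr):
        body = f"print({snippet})"
    # A free `x` means "run once per input line", like a filter in a pipe.
    if uses_x:
        return f"for x in lines:\n    {body}"
    return body


def run(source: str, input_lines: Iterable[str]) -> str:
    """Execute the generated program over input_lines and return what it printed."""
    lines = [line.rstrip("\n") for line in input_lines]
    captured = io.StringIO()
    with contextlib.redirect_stdout(captured):
        exec(build_program(source), {"lines": lines})
    return captured.getvalue()


print(build_program("x.upper()"))
print(run("x.upper()", ["hello\n", "world\n"]), end="")
print(run("len(lines)", ["a\n", "b\n", "c\n"]), end="")
```

To use it in a shell pipeline, a small wrapper could call `run(sys.argv[1], sys.stdin)`. The real tool handles many more cases than this sketch does.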

Weeknote 19/2020

I think this was the week when it began to sink in how long all of this is going to affect us for. I’m not sure if it was going through the playable simulations in What Happens Next? or realising the extent of the incompetence of the UK government, but either way, the world seems different than it did last Sunday.

Two days on MoodleNet, two days for We Are Open Co-op, and a day’s Bank Holiday, the latter moved from the usual first Monday of May to commemorate the 75th anniversary of Victory in Europe (or ‘VE’) Day. The way some people here were talking about it, I think we need to remember that it was about fighting alongside allies to defeat fascism, rather than some kind of victory over Europe.

I enjoyed working on the co-op stuff this week. We were wrapping up a short project through The Catalyst, which paired us and another organisation with a charity making an emergency pivot to digital. I think there will be plenty more work in a similar vein in the coming weeks and months.

On the MoodleNet side of things, I did a debrief with Johanna Sprondel after her MA students at Macromedia University in Germany worked on a draft crowdfunding plan. I was impressed with what they came up with, and enjoyed working with them over the last few weeks.

Moodle News this week published an article I (mostly) wrote entitled 3 tips for first-time remote educators. Other than that, my time was mainly taken up with management, team and 1:1 meetings. My role at Moodle has largely gone from one that involved a lot of innovation to one that now involves a lot of management.

I’ve agreed to keynote a UK university’s internal staff conference (whatever ‘keynote’ means these days!). I should get confirmation in the next couple of weeks that it’s definitely going ahead, and then I can start planning a talk on post-pandemic digital skills.

The most enjoyable thing this week was the socially-distanced quiz we had with neighbours. I live on a row of eight terraced houses, with one detached house at the end. We all share a back lane. In the glorious sunshine on Friday evening, we ate, drank, chatted, and answered questions that we’d each come up with. It was marvellous, and really lifted my spirits.

On the Thought Shrapnel front, I published an article entitled Happiness is when what you think, what you say, and what you do are in harmony in which I cited Buster Benson’s ‘Codex Vitae’ and came up with 10 aphorisms of my own. I also put together my usual link roundup, this week entitled Saturday seductions.

Next week will be a new normal week, working on Moodle stuff Monday, Tuesday, and Friday, and co-op stuff on Wednesday and Thursday. It will be 20 years on Thursday since, at the university ball, I plucked up the courage to kiss the woman who became my wife. I am a lucky man.

Photo of a tree in Bluebell Wood, near where I live.

Software Can Make You Feel Alive, or It Can Make You Feel Dead

This week I read one of Craig Mod's old essays and found a great line, one that everyone who writes programs for other people should keep front of mind:

When it comes to software that people live in all day long, a 3% increase in fun should not be dismissed.

Working hard to squeeze a bit more speed out of a program, or to create even a marginally better interaction experience, can make a huge difference to someone who uses that program every day. Some people spend most of their professional days inside one or two pieces of software, which further accentuates the human value of Mod's three percent. With shelter-in-place and work-from-home the norm for so many people these days, we face a secondary crisis of software that is no fun.

I was probably more sensitive than usual to Mod's sentiment when I read it... This week I used Blackboard for the first time, at least my first extended usage. The problem is not Blackboard, of course; I imagine that most commercial learning management systems are little fun to use. (What a depressing phrase "commercial learning management system" is.) And it's not just LMSes. We use various PeopleSoft "campus solutions" to run the academic, administrative, and financial operations on our campus. I always feel a little of my life drain away whenever I spend an hour or three clicking around and waiting inside this large and mostly functional labyrinth.

It says a lot that my first thought after downloading my final exams on Friday morning was, "I don't have to log in to Blackboard again for a good long while. At least I have that going for me."

I had never used our LMS until this week, and then only to create a final exam that I could reliably time after being forced into remote teaching with little warning. If we are in this situation again in the fall, I plan to have an alternative solution in place. The programmer in me always feels an urge to roll my own when I encounter substandard software. Writing an entire LMS is not one of my life goals, so I'll just write the piece I need. That's more my style anyway.

Later the same morning, I saw this spirit of writing a better program in a context that made me even happier. The Friday of finals week is my department's biennial undergrad research day, when students present the results of their semester- or year-long projects. Rather than give up the tradition because we couldn't gather together in the usual way, we used Zoom. One student talked about alternative techniques for doing parallel programming in Python, and another presented empirical analysis of using IR beacons for localization of FIRST Lego League robots. Fun stuff.

The third presentation of the morning was by a CS major with a history minor, who had observed how history profs' lectures are limited by the tools they have available. The solution? Write better presentation software!

As I watched this talk, I was proud of the student, whom I'd had in class and gotten to know a bit. But I was also proud of whatever influence our program had on his design skills, programming skills, and thinking. This project, I thought, is a demonstration of one thing every CS student should learn: We can make the tools we want to use.

This talk also taught me something non-technical: Every CS research talk should include maps of Italy from the 1300s. Don't dismiss 3% increases in fun wherever they can be made.

Quoting Tom MacWright

And for what? Again - there is a swath of use cases which would be hard without React and which aren’t complicated enough to push beyond React’s limits. But there are also a lot of problems for which I can’t see any concrete benefit to using React. Those are things like blogs, shopping-cart-websites, mostly-CRUD-and-forms-websites. For these things, all of the fancy optimizations are optimizations to get you closer to the performance you would’ve gotten if you just hadn’t used so much technology.

Second-guessing the modern web

The emerging norm for web development is to build a React single-page application, with server rendering. The two key elements of this architecture are something like:

- The main UI is built & updated in JavaScript using React or something similar.

- The backend is an API that that application makes requests against.

This idea has really swept the internet. It started with a few major popular websites and has crept into corners like marketing sites and blogs.

I’m increasingly skeptical of it.

There is a sweet spot of React: in moderately interactive interfaces. Complex forms that require immediate feedback, UIs that need to move around and react instantly. That’s where it excels. I helped build the editors in Mapbox Studio and Observable and for the most part, React was a great choice.

But there’s a lot on either side of that sweet spot.

The high performance parts aren’t React. Mapbox GL, for example, is vanilla JavaScript and probably should be forever. The level of abstraction that React works on is too high, and the cost of using React - in payload, parse time, and so on - is too much for any company to include it as part of an SDK. Same with the Observable runtime, the juicy center of that product: it’s very performance-intensive and would barely benefit from a port.

The less interactive parts don’t benefit much from React. Listing pages, static pages, blogs - these things are increasingly built in React, but the benefits they accrue are extremely narrow. A lot of the optimizations we’re deploying to speed up these things, things like bundle splitting, server-side rendering, and prerendering, are triangulating what we had before the rise of React.

And they’re kind of messy optimizations. Here are some examples.

Bundle splitting.

As your React application grows, the application bundle grows. Unlike with a traditional multi-page app, that growth affects every visitor: you download the whole app the first time that you visit it. At some point, this becomes a real problem. Someone who lands on the About page is also downloading 20 other pages in the same application bundle. Bundle splitting ‘solves’ this problem by creating many JavaScript bundles that can lazily load each other. So you load the About page and what your browser downloads is an ‘index’ bundle, and then that ‘index’ bundle loads the ‘about page’ bundle.

This sort of solves the problem, but it’s not great. Most bundle splitting techniques require you to load that ‘index bundle’, and then only once that JavaScript is loaded and executed does your browser know which ‘page bundle’ it needs. So you need two round-trips to start rendering.
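To make the two round-trips concrete, here is a toy model with a hypothetical build output, not any real bundler's format. The index bundle carries the route-to-chunk map, so the page chunk cannot be requested until the index has been downloaded and run; `BUNDLES` and `load_page` are invented for illustration.

```python
from typing import Any, Callable

# Hypothetical build output: the index bundle carries a route -> chunk map,
# so the browser cannot know which page chunk it needs until index.js has run.
BUNDLES: dict[str, Any] = {
    "index.js": {"/": "home.chunk.js", "/about": "about.chunk.js"},
    "home.chunk.js": "<home page code>",
    "about.chunk.js": "<about page code>",
}


def load_page(path: str, fetch: Callable[[str], Any]) -> list[str]:
    """Return the sequential requests needed before `path` can render."""
    requests = ["index.js"]
    route_map = fetch("index.js")  # round-trip 1: download and run the index bundle
    chunk = route_map[path]        # only now is the page chunk known
    requests.append(chunk)
    fetch(chunk)                   # round-trip 2: download the page chunk
    return requests


print(load_page("/about", BUNDLES.__getitem__))  # ['index.js', 'about.chunk.js']
```

The second request depends on the result of the first, which is why the two cannot simply be issued in parallel in this setup.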

And then there’s the question of updating code-split bundles. User sessions are surprisingly long: someone might have your website open in a tab for weeks at a time. I’ve seen it happen. So if they open the ‘about page’, keep the tab open for a week, and then request the ‘home page’, then the home page that they request is dictated by the index bundle that they downloaded last week. This is a deeply weird and under-discussed situation. There are essentially two solutions to it:

- You keep all generated JavaScript around, forever, and people will see the version of the site that was live at the time of their first page request.

- You create a system that alerts users when you’ve deployed a new version of the site, and prompt them to reload.

The first solution has a drawback that might not be immediately obvious. In those intervening weeks between loading the site and clicking a link, you might’ve deployed a new API version. So the user will be using an old version of your JavaScript frontend with a new version of your API backend, and they’ll trigger errors that none of your testing knows about, because you’ll usually be testing current versions of each.

And the second solution, while it works (and is what we implemented for Mapbox Studio), is a bizarre way for a web application to behave. Prompting users to ‘update’ is something from the bad old days of desktop software, not from the shiny new days of the web.
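A minimal sketch of the "alert users when you’ve deployed a new version" approach, assuming the server can report which frontend build is currently live. The `Server` and `ClientTab` classes and the build IDs are hypothetical; this is not Mapbox Studio's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical backend that knows which frontend build is deployed."""
    current_build: str

    def deploy(self, build_id: str) -> None:
        self.current_build = build_id


@dataclass
class ClientTab:
    """A long-lived browser tab that remembers the build it was loaded with."""
    loaded_build: str

    def should_prompt_reload(self, server: Server) -> bool:
        # If the tab's JavaScript is older than what is deployed, its lazily
        # loaded bundles and API calls may no longer match the server.
        return self.loaded_build != server.current_build


server = Server(current_build="build-41")
tab = ClientTab(loaded_build=server.current_build)  # user opens the About page
print(tab.should_prompt_reload(server))  # False
server.deploy("build-42")                 # a week later, a new version ships
print(tab.should_prompt_reload(server))  # True
```

In a browser the check would typically run before navigating or on a timer; either way, the user ends up seeing an "update available" prompt, which is exactly the desktop-era behaviour described above.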

Sure: traditional non-SPA websites are not immune to this pitfall. Someone might load your website, have a form open for many weeks, and then submit it after their session expired or the API changed. But that’s a much more limited exposure to failure than in the SPA case.

Server-Side Rendering

Okay, so the theory here is that SPAs are initially a blank page, which is then filled out by React & JavaScript. That’s bad for performance: HTML pages don’t need to be blank initially. So, Server-Side Rendering runs your JavaScript frontend code on the backend, creating a filled-out HTML page. The user loads the page, which now has pre-rendered content, and then the JavaScript loads and makes the page interactive.

A great optimization, but again, caveats.

The first is that the page you initially render is dead: you’ve created the Time To Interactive metric. It’s your startup’s homepage, and it has a “Sign up” button, but until the JavaScript loads, that button doesn’t do anything. So you need to compensate. Either you omit some interactive elements on load, or you try really hard to make sure that the JavaScript loads faster than users will click, or you make some elements not require JavaScript to work - like making them normal links or forms. Or some combination of those.

And then there’s the authentication story. If you do SSR on any pages that are custom to the user, then you need to forward any cookies or authentication-relevant information to your API backend and make sure that you never cache the server-rendered result. Your formerly-lightweight application server is now doing quite a bit of labor, running React & making API requests in order to do this pre-rendering.
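A sketch of the two obligations for a user-specific server-rendered page: forward the user's credentials to the API, and mark the response as uncacheable. The `/api/me` path, the `call_api` signature and `fake_api` are hypothetical stand-ins; only the `Cache-Control` directives are standard HTTP.

```python
from html import escape
from typing import Callable, Mapping

# Hypothetical signature: call_api(path, headers) returns decoded JSON as a dict.
ApiCall = Callable[[str, Mapping[str, str]], dict]


def render_user_page(
    request_headers: Mapping[str, str], call_api: ApiCall
) -> tuple[dict[str, str], str]:
    """Server-render a page personalised for the logged-in user (sketch)."""
    # Forward the user's cookie so the API returns *their* data, not anonymous data.
    forwarded = {"Cookie": request_headers.get("Cookie", "")}
    user = call_api("/api/me", forwarded)

    html = f"<h1>Welcome back, {escape(user['name'])}</h1>"
    # no-store: no cache may keep a copy; private: shared caches (CDNs) must not reuse it.
    return {"Cache-Control": "private, no-store"}, html


def fake_api(path: str, headers: Mapping[str, str]) -> dict:
    # Stand-in for the real API backend, keyed on the forwarded cookie.
    return {"name": "Ada" if headers["Cookie"] == "session=abc" else "guest"}


headers, body = render_user_page({"Cookie": "session=abc"}, fake_api)
print(headers["Cache-Control"], body)
```

Every such request now costs the application server a render plus at least one API call, which is the extra labour mentioned above.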

APIs

The dream of APIs is that you have generic, flexible endpoints upon which you can build any web application. That idea breaks down pretty fast.

Most interactive web applications start to triangulate on “one query per page.” API calls being generic or reusable never seems to persist as a value in infrastructure. This is because a large portion of web applications are, at their core, query & transformation interfaces on top of databases. The hardest performance problems they tend to have are query problems and transfer problems.

For example: a generically-designed REST API that tries not to mix ‘concerns’ will produce a frontend application that has to make lots of requests to display a page. And then a new-age GraphQL application will suffer under the N+1 query problem at the database level until an optimization arrives. And a traditional “make a query and put it on a page” application will just, well, try to write some good queries.
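The N+1 pattern is easy to see with an in-memory SQLite database. The schema below is hypothetical: a page listing posts with their authors. Fetching the list and then resolving each author separately (as a naive per-field resolver or a strict per-resource client would) costs one query per row, while a query designed for the page does it in one.

```python
import sqlite3

# Hypothetical schema for a "list posts with their authors" page.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT, author_id INTEGER);
    INSERT INTO authors VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO posts VALUES (1, 'Hello', 1), (2, 'Compilers', 2), (3, 'Engines', 1);
    """
)


def page_n_plus_one(conn: sqlite3.Connection) -> tuple[list[tuple[str, str]], int]:
    """One query for the list, then one more per row: N + 1 queries in total."""
    posts = conn.execute("SELECT title, author_id FROM posts ORDER BY id").fetchall()
    queries = 1
    page = []
    for title, author_id in posts:
        (name,) = conn.execute(
            "SELECT name FROM authors WHERE id = ?", (author_id,)
        ).fetchone()
        queries += 1
        page.append((title, name))
    return page, queries


def page_one_query(conn: sqlite3.Connection) -> tuple[list[tuple[str, str]], int]:
    """The page's data shape expressed as a single JOIN."""
    page = conn.execute(
        "SELECT p.title, a.name FROM posts AS p "
        "JOIN authors AS a ON a.id = p.author_id ORDER BY p.id"
    ).fetchall()
    return page, 1


print(page_n_plus_one(conn))  # same rows, 4 queries
print(page_one_query(conn))   # same rows, 1 query
```

Both return the same rows; the difference only shows up as the number of posts grows, which is why the data-fetching layer and the page end up being designed together.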

None of these solutions are silver bullets: I’ve worked with overly-strict REST APIs, optimization-hungry GraphQL APIs, and hand-crafted SQL APIs. But no option really lets a web app be careless about its data-fetching layer. Web applications can’t sit on top of independently-designed APIs: to have a chance at performance, the application and its datasource need to be designed as one.

Data fetching

Speaking of data fetching. It’s really important and really bizarre in React land. Years ago, I expected that some good patterns would emerge. Frankly, they didn’t.

There are decent patterns in the form of GraphQL, but for a React component that loads data with fetch from an API, the solutions have only gotten weirder. There’s great documentation for everything else, but old-fashioned data loading is relegated to one example of how to mock out ‘fetch’ for testing, and lots of Medium posts of varying quality.

Don’t read this as anti-React. I still think React is pretty great, and for a particular set of use cases it’s the best tool you can find. And I explicitly want to say that – from what I’ve seen – most other Single-Page-Application tools share most of these problems. They’re issues with the pattern, not the specific frameworks used to implement it. React alternatives have some great ideas, and they might be better, but they are ultimately really similar.

But I’m at the point where I look at where the field is and what the alternative patterns are – taking a second look at unloved, unpopular, uncool things like Django, Rails, Laravel – and think what the heck is happening. We’re layering optimizations upon optimizations in order to get the SPA-like pattern to fit every use case, and I’m not sure that it is, well, worth it.

And it should be easy to do a good job.

Frameworks should lure people into the pit of success, where following the normal rules and using normal techniques is the winning approach.

I don’t think that React, in this context, really is that pit of success. A naïvely implemented React SPA isn’t stable, or efficient, and it doesn’t naturally scale to significant complexity.

You can add optimizations on top of it that fix those problems, or you can use a framework like Next.js that will include those optimizations by default. That’ll help you get pretty far. But then you’ll be lured by all of the easy one-click ways to add bloat and complexity. You’ll be responsible for keeping some of these complex, finicky optimizations working properly.

And for what? Again - there is a swath of use cases which would be hard without React and which aren’t complicated enough to push beyond React’s limits. But there are also a lot of problems for which I can’t see any concrete benefit to using React. Those are things like blogs, shopping-cart-websites, mostly-CRUD-and-forms-websites. For these things, all of the fancy optimizations are trying to get you closer to the performance you would’ve gotten if you just hadn’t used so much technology.

I can, for example, guarantee that this blog is faster than any Gatsby blog (and much love to the Gatsby team) because there is nothing that a React static site can do that will make it faster than a non-React static site.

But the cultural tides are strong. Building a company on Django in 2020 seems like the equivalent of driving a PT Cruiser and blasting Faith Hill’s “Breathe” on a CD while your friends are listening to The Weeknd in their Teslas. Swimming against this current isn’t easy, and not in a trendy contrarian way.

I don’t think that everyone’s using the SPA pattern for no reason. For large corporations, it allows teams to work independently: the “frontend engineers” can “consume” “APIs” from teams that probably work in a different language and can only communicate through the hierarchy. For heavily interactive applications, it has real benefits in modularity, performance, and structure. And it’s beneficial for companies to shift computing requirements from their servers to their customers’ browsers: a real win for reducing their spend on infrastructure.

But I think there are a lot of problems that are better solved some other way. There’s no category winner like React as an alternative. Ironically, backends are churning through technology even faster than frontends, which have been loyal to one programming language for decades. There are some age-old technologies like Rails, Django, and Laravel, and there are a few halfhearted attempts to do templating and “serve web pages” from Go, Node, and other new languages. If you go this way, you’re beset by the cognitive dissonance of following in the footsteps of enormous projects - Wikipedia rendering web pages in PHP, Craigslist rendering webpages in Perl - but being far outside the norms of modern web development. If Wikipedia were started today, it’d be React. Maybe?

What if everyone’s wrong? We’ve been wrong before.

Public computing and two ideas for touchless interfaces

Think about ATMs, or keypads on vending machines, or Amazon lockers, or supermarket self-checkout, or touchscreens on kiosks to buy train tickets. Now that there’s a virus that spreads by touch, how do we redesign these shared interfaces?

(This post was prompted by two people I know who have separately been grappling with public computing recently. There must be something in the air. These are two ideas I came up with in those conversations.)

Idea #1. QR codes, augmented reality, and the unreal real

The obvious approach is to move the control surface to a smartphone app, just like the Zipcar app lets me unlock my rental or sound the horn. But as an answer, it’s pretty thin… how does a person discover the smartphone app is there to be used? How do you ensure, in a natural fashion, that only the person actively “using” the ticket machine or locker is using the app, and everyone else has to wait their turn? A good approach would deal with these interaction design concerns too.

So, imagine your train ticket machine. Because of the printer, it’s a modal device: although it’s public, only one person can use it at a time.

Let’s get rid of the touchscreen and replace it with a big QR code.

Scan this code with your smartphone camera, and the QR code is magically replaced - in the camera view - with an interactive, 3D, augmented reality model of what the physical interface would be: menu options, a numeric keypad, and so on.

There’s something that tickles me about the physicality of the interface only visible through the smartphone camera viewfinder.

How does it work? An exercise for the reader… the iPhone can launch a website directly from a QR code seen in the camera view. So perhaps that website includes a webcam view which can add the augmented reality interface? Or perhaps it triggers an app download which similarly includes the camera view? (Android has the ability to run mini Instant Apps direct from the store; there are rumours about iOS doing the same.)

The point is to make the transition from the QR code to the AR interface as invisible and immediate as possible. No intermediate steps or confirmations or changes in metaphor: it should feel like your phone is a little window that you’re reaching through to work with the computer, like using a scientific glovebox in a chemistry laboratory, and you’re just moving it into position.

The bonus here is that the interface can only be used while the user is standing directly in front of it, so the “one person at a time” nature of the machine is communicated through natural physicality. I don’t think you get that with apps; an app tethered to a place would feel wrong.

Idea #2. Gestures for no-touch touchscreens

The starting point here is a kiosk with a touchscreen.

Obviously we don’t want the touchscreen to be touched by the general public with their filthy, virus-infested fingers. So, instead, use a tablet with a camera in it, but the screen of the tablet is not intended to be touched. The camera instead recognises hand gestures such as:

- point at screen left/right/up/down (to select)

- brush left/right (to browse or dismiss)

- fist (hold for 5 seconds to confirm)

- count with fingers from 1 to 5 (more sophisticated, for input)

The inspiration is this gorgeous Rock Paper Scissors browser game that uses machine learning and the webcam. That is, the web browser activates your webcam, and you make a fist (rock), flat hand (paper), or scissors gesture, and the A.I. which is also running in the web browser recognises it, and then the computer makes its move. All without hitting the server.

Check out the live graph in the background of that site. It provides a view of the classifier internals - how confident the machine is in recognising your gesture.
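The “fist (hold for 5 seconds to confirm)” gesture could be built on top of exactly that confidence signal. A hypothetical sketch, assuming the classifier emits one top label plus a confidence per video frame; the 0.8 threshold and the `Frame` type are invented, and the classifier itself is not shown.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Frame:
    t: float           # seconds since the kiosk started watching
    gesture: str       # classifier's top label, e.g. "fist" or "paper"
    confidence: float  # classifier's confidence in that label, 0.0 to 1.0


def confirm_time(
    frames: Iterable[Frame], hold: float = 5.0, min_conf: float = 0.8
) -> Optional[float]:
    """Return the time at which a confident fist has been held for `hold` seconds."""
    start: Optional[float] = None
    for f in frames:
        if f.gesture == "fist" and f.confidence >= min_conf:
            if start is None:
                start = f.t  # fist first seen: start the timer
            if f.t - start >= hold:
                return f.t   # held long enough: confirm
        else:
            start = None     # other gesture or shaky reading: reset the timer
    return None


# One frame per second; the user's fist wobbles into "paper" at t=3.
frames = [Frame(float(i), "paper" if i == 3 else "fist", 0.9) for i in range(12)]
print(confirm_time(frames))  # 9.0
```

Resetting on any low-confidence frame makes confirmation deliberate at the cost of some frustration; a real kiosk might tolerate a short dropout instead.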

What this tells me is that all of this can be done with a web browser and a tablet with a camera in it. For robustness, stick the tablet inside the shop window, looking out through the glass. Set the web browser to show the live feed from the webcam, providing discoverability: people will see the moving image, understand it as a mirror, and experiment with gestures.

It would be like a touchscreen with very large buttons, only you wave at it to interact.

Look, the Minority Report gestural interface is cool but dumb because your arms get knackered in like 30 seconds. But just using your hands or fingers? I could live with a future where we do tiny techno dancing at our devices to interact with them.

Whatever the approaches, the important considerations for public computing interfaces would seem to be:

- Discoverability (how do you know the interface is there? Public computing has a ton of first-time users)

- Privacy/security (think of using an ATM on a public street)

- Familiarity (like, weird is fun, but not too weird…)

- Accessibility

- Viability

On accessibility, I’m into Microsoft’s Inclusive Design approach - to see it summarised in a single graphic, scroll to the permanent/temporary/situation diagram here: accommodations might be required for visual impairments, but a person with a cataract has temporary blindness; a distracted driver has situational blindness. For me, understanding situational accessibility (like, having my arms full of shopping or a wriggling toddler so I can’t press a touchscreen) really made me start thinking about accessibility in a much broader way.

Viability is about the commercial and physical reality of public computing interfaces: can it withstand being used 100s of times daily, is it reliable in the rain, is it cheap, etc.

BUT, LIKE, ALSO:

Touchscreens with cameras, web browsers with computer vision, broadly deployed smartphones, augmented reality, voice: these technologies weren’t around when the last generation of public computing interfaces was being invented. It might be worth experimenting to see what else can be done?

Is Meritocracy an Idea Worth Saving?

The problem with meritocracy is that "there’s huge correlation between the kind of material support that people have, and their ability to perform on the kind of exams that allow people to get into colleges." So 'merit' turns out to be a proxy for 'wealth'. And as Ross Douthat says, "That is what Harvard is in the business of doing, the sort of condensation of networking and people having access to each other’s ideas and family connections and all the rest. That’s what Harvard institutionally is committed to."

Oliver's Unconference

Oliver is organizing an unconference on Zoom this Friday.

Because we have all been confined to our homes for many weeks, the theme of the unconference, taking off from the theme of earlier unconferences, is “House Stuff that Matters” (HSTM), and we will gather and seek to answer the questions:

What have you learned from the pandemic that you want to keep for the future?

What do you like about the place where you live?

All are welcome to attend. Spread the word.

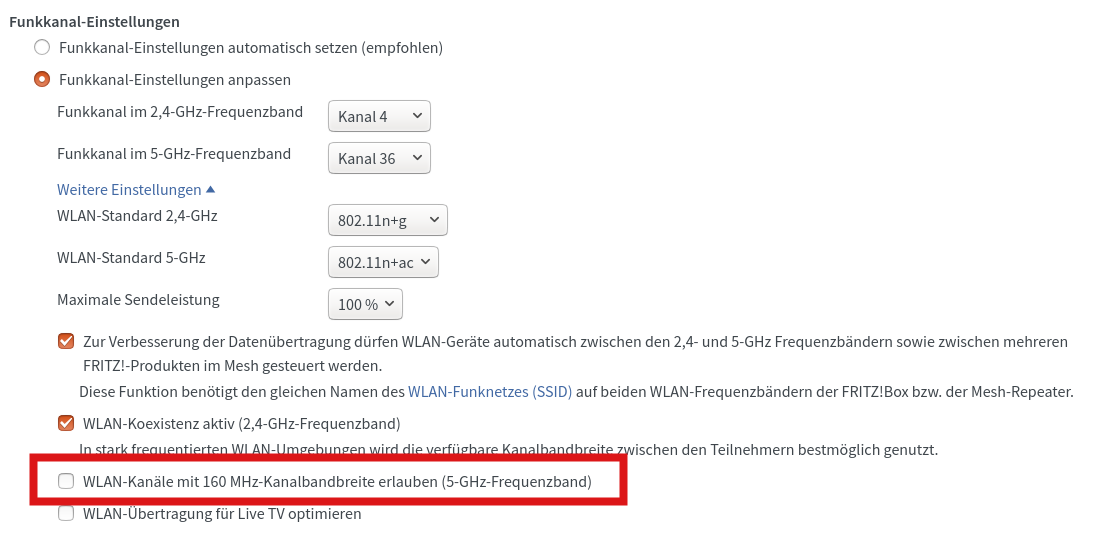

802.11ac – 160 MHz Is Coming To My Home – At Least In Theory

About two years ago I bought my current VDSL + Wifi Access Point router, a Fritzbox 7590. It is still AVM’s flagship model with 802.11ac Wifi-5 support, and it is good to see that the manufacturer continues to develop the software to this day. The Wifi functionality around Mesh and 5 GHz operation in particular keeps getting better and better.

160 MHz Channels!

In particular I have noticed two new functions. One function that has already made it into the release version is support for 160 MHz bandwidth in the 5 GHz range. Previously, operation was ‘limited’ to 80 MHz bandwidth. So far this suited me just fine, for two reasons. The problem in Germany and in many other parts of the world is that only the first 80 MHz of the 5 GHz range can be used without a 10 minute Dynamic Frequency Selection (DFS) backoff. Selecting channel 52 or higher always meant that for 10 minutes after booting or after a config change, the 5 GHz band was not available while the router listened for weather radar signals and other higher priority users of the band. That means that I wouldn’t even have considered a 160 MHz channel, because that would implicitly have meant a 10 minute wait time.
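A quick worked check of why a 160 MHz channel implied the wait, using the standard 5 GHz channel numbering (centre frequency = 5000 + 5 × channel number, in MHz, with 20 MHz channels four numbers apart). The “channel 52 or higher needs DFS” rule is taken from the post and deliberately simplified; real regulatory tables vary by country.

```python
def center_mhz(channel: int) -> int:
    """Centre frequency of a 20 MHz channel in the 5 GHz band."""
    return 5000 + 5 * channel


def bonded(first_channel: int, width_mhz: int) -> list[int]:
    """The 20 MHz channels (four channel numbers apart) that make up a wider channel."""
    return [first_channel + 4 * i for i in range(width_mhz // 20)]


def span_mhz(channels: list[int]) -> tuple[int, int]:
    """Lowest and highest frequency covered by a bonded channel."""
    return center_mhz(channels[0]) - 10, center_mhz(channels[-1]) + 10


def needs_dfs(channels: list[int]) -> bool:
    # Simplification matching the post's description for Germany:
    # anything at channel 52 or above requires DFS.
    return any(ch >= 52 for ch in channels)


for width in (80, 160):
    chans = bonded(36, width)
    print(width, chans, span_mhz(chans), needs_dfs(chans))
```

The 80 MHz channel at 36-48 (5170 to 5250 MHz) stays clear of DFS, while a 160 MHz channel starting at 36 has to take in channels 52-64 as well (up to 5330 MHz), so the radar check cannot be avoided.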

Intelligent DFS Handling

The DFS backoff time is the second thing AVM has worked on for the next feature release. If channel 52 or higher is selected, the router will use channels 36-48 for the first 10 minutes after booting or reconfiguration and offer service over an 80 MHz channel there. At the same time, the router observes the rest of the band for weather radar and other signals. If none are found, the router then moves to the desired target channel in the band. Very cool! Whether this only works for 80 MHz channels or also for 160 MHz channels is not quite clear. I found one page that says the dynamic DFS feature is limited to 80 MHz channels and deactivated for 160 MHz operation, while another page says it works with 160 MHz channels as well. Time will tell.
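The behaviour described above amounts to a small decision rule. This is a hypothetical sketch of it, not AVM’s firmware logic; the function and parameter names are invented, and real DFS operation also requires continued radar monitoring after the move, which is omitted here.

```python
CAC_SECONDS = 10 * 60  # the 10-minute listening period described in the post
NON_DFS_START = 36     # channels 36-48 need no radar check


def serving_channel(target: int, seconds_since_change: float, radar_found: bool) -> int:
    """First 20 MHz channel of the 80 MHz block the router serves clients on.

    `target` is the configured first channel; `radar_found` says whether weather
    radar or another priority user was detected on the target block.
    """
    if target < 52:
        return target         # no DFS needed: serve on the target immediately
    if radar_found or seconds_since_change < CAC_SECONDS:
        return NON_DFS_START  # still listening, or radar found: stay on 36-48
    return target             # 10 quiet minutes: move to the target block


print([serving_channel(100, s, False) for s in (0, 300, 600)])  # [36, 36, 100]
```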

160 MHz Won’t Help Much At The Moment

Two slight problems, though. First, the notebooks in the household are limited to 802.11ac 80 MHz operation at best. That is a pity, because 160 MHz operation would be particularly useful with my notebooks, e.g. for large file transfers to my local cloud or between notebooks, which I do quite often. This is not going to change anytime soon, as even the Lenovo X270 models only support 80 MHz operation. Only the latest X390 model supports 802.11ax (Wifi-6) and 160 MHz operation.

And the second problem: already today, I can ‘only’ get around 300 Mbit/s out of the channel at my desk, compared to 600 Mbit/s when I move closer to the router. So I’m not sure 160 MHz channels would help me much. Perhaps switching to 160 MHz mode would even be counterproductive, as the transmit power per MHz, and hence the reception level at my desk, would drop to half the current value because the available power has to cover twice as much bandwidth. Hm, perhaps a Mesh setup with a repeater would help, if the repeater could use the full 160 MHz bandwidth? That’s an interesting thought for another post.
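The power argument in numbers, assuming the router’s total transmit power P stays the same and is spread evenly across the channel. Doubling the width halves the power in each MHz, and since 802.11ac uses the same subcarrier spacing at 80 and 160 MHz, each subcarrier sees roughly 3 dB less signal against the same noise:

```latex
\frac{P / 160\,\mathrm{MHz}}{P / 80\,\mathrm{MHz}} = \frac{1}{2},
\qquad
10 \log_{10}\!\left(\tfrac{1}{2}\right) \approx -3.01\ \mathrm{dB}
```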

Physical Distancing Shopping Style~Bollards On Granville Street, Cooped Up on Commercial Drive, & Rueing about Robson

Historian and author John Atkin posted this image he took last Fall of the new work on downtown Granville Street, which involved installing a whole bunch of bollards on the south end of the street right before the bridge. As John notes:

“Sorting thru old photos, found this shot of the recently completed Granville St redo… In light of the ongoing sidewalk space discussion maybe its time to get rid of this waste of space given to bollards marking sidewalk parking for cars… hmmm.”

Covid spacing requirements mean we need to look at our downtown spaces and places a bit differently and ensure people are comfortable getting outdoors with appropriate physical distancing and patronizing local merchants who badly need the business.

I also found this blog post on Downtown Vancouver Bollards by Reliance Foundry that enthusiastically sees bollards as “a form of communication”. There have also been discussions about placing security bollards on the street at night following the horrible attack on Toronto streets.

The Covid requirement of two meters of distance means there’s a need to be a bit more creative in the use of the street. And it’s clear we are not there yet in commercial areas, as these two images from Simon Fraser University’s City Program Director Andy Yan illustrate. Here’s a group of people waiting to get into a grocery store on Commercial Drive. There’s not enough room on the sidewalk, so the potential customers are relegated to the street.

And surprise! The bus is trying to use the street too. As Andy Yan states, “This is NOT the best way of making public sidewalk space with the #covid19 rules.”

Kristen Robinson of Global TV has produced a film clip outlining some of the physical distancing challenges on Robson Street as merchants look forward to opening their businesses. At this point retailers are not asking for a full road closure but want to have enough space on sidewalks and in the parking lane to ensure that potential customers can line up with appropriate physical distancing. This has already been done using barricades in the parking lane at several businesses. You can take a look at Global TV’s video here.

Many cities around the world are using the need for physical distancing as an opportunity to reboot their economies by using streets in a different way.

I have been writing on Price Tags about the importance of the City providing a street network for walking, rolling and cycling throughout the city. A plan needs to be developed for Vancouver’s commercial areas too, to give them a boost.

Data from Transport for London (TfL) found that street improvements for walking and cycling increased time spent on retail streets by 216%. Retail space vacancies declined by 17%.

But the best news, in line with research conducted in Toronto and New York City, is that “people walking, cycling and using public transport spend the most money in their local shops, spending 40% more each month than car drivers”.

Covid concerns add another layer of worry for consumers who want to support businesses while keeping themselves and their families safe and happy. Creating comfortable physical distancing opportunities in these commercial areas is not solely about taking over vehicular space. This conversation is about boosting consumer confidence and safety. Local businesses deserve a fair and equitable chance to survive and thrive during a biomedical pandemic, and they can only do that with confident customers who feel safe.

Image: CBC

The Case for a Universal Basic Income in the Time of COVID-19

This might not seem to have anything to do with education, but I invite you to think about what it would mean for learning and development if we had, on the one hand, a universal basic income, and on the other hand, free and open access to courses and other learning resources. In this way, education would cease to be reserved for those who are privileged, but instead would become available to everyone who wanted to learn. Would there still be challenges, issues with equity, and hurdles to overcome? Absolutely. But would it be an enormous advance over the current system? Without a doubt.

Jan Gehl, the Covid Crisis & The Future of Tomorrow

Toronto’s Jane’s Walk made a Covid-required shift from its usual fine walks to an online experience, which could of course be shared across the country. It offered some great virtual walks, some great panels, and a plenary opener that included Jan Gehl, who, through his writing and his interest in people and places, personifies much of what placemaking should be.

In these Covid times it was inspiring to hear Jan talk about his perspective on the Covid crisis, and about what the post-Covid city will look like. Jan married psychologist Ingrid Mundt in 1961, and it was her influence that completely changed his work as an architect and designer. Jan’s formal training had taught him the importance of modernity in design, with sweeping lawns, high-rise buildings and public spaces, but he now sees such spaces as “windy”: they are activated not by people but by weather and perception.

Ingrid’s influence taught him that public space needed to be personable, and in his practice he now treats public space as an essential service. She also drew his attention to the gap between the built environment and how people FELT about being in places.

In 1965 Jan and Ingrid spent six months in public squares in Italy observing behaviour. An article in the local paper explained why people in Asconi might see a “beatnik” sitting in a corner of one of the town squares. This work became the basis for his books and for his philosophy behind Gehl Architects.

Jan corresponded with Jane Jacobs and first met her in 2001. He said one of their central discussions had been about the “New Urbanism” movement: in Jan’s words, “whether it had clothes or not”, or whether it was just a rehash of what was tried and true.

And that brings Jan to the Covid discussion. In Denmark the Covid motto translates to “All have to be close together to stay apart”.

His “doctor daughter” has told Jan that he will be in lockdown, physically isolating, until the end of the year. Given this, Jan and Ingrid Gehl have been exploring various parts of Copenhagen on their own, rediscovering city spaces and revisiting favourites. Jan has unwavering faith in “Homo sapiens” and says we will have our lively places back. He sees the Covid crisis as a wake-up call for redirecting resources toward a greener, more sustainable way of living.

Jan expects the following as new trends:

- There will be an increase in home deliveries and “those bloody vans” as people stay behind the screens of their homes.

- The increase in personal car usage post-Covid will be temporary. One cannot dismiss the fact that every kilometer cycled gives 25 cents back to society, while every kilometer driven takes away 17 cents.

- Bicycle usage will increase, and smart cities will provide dedicated road space for that increased usage.

- There will be a major shift in storefront and shop use as businesses struggle to survive. Storefronts need to be animated and occupied, and a full discussion needs to take place about who will pay to keep storefronts operating with some type of business or use.

- There will be less “senseless travelling” as air fares increase. People need to realize that air travel has previously been “unrealistically cheap”. “Mass tourism ruin places”.

- Cruise ships contribute little to the economy of the places they visit beyond quick daytime retail transactions. They pollute, and they need to stop.

Jan sees the link between public health and planning as self-evident, and believes that public space needs to be designed for good times and bad, and to work for individuals, small family groups and crowds alike. Resiliency is key in his messaging.

As Jan states:

“In a Society becoming steadily more privatized with private homes, cars, computers, offices and shopping centers, the public component of our lives is disappearing. It is more and more important to make the cities inviting, so we can meet our fellow citizens face to face and experience directly through our senses. Public life in good quality public spaces is an important part of a democratic life and a full life.”

You can check out the Jane’s Walk Festival in Toronto here.

And here is one of the snappy videos advertising Toronto’s Jane’s Walks from last year.

S12:E5 - How to not get bogged down in technical debt (Nina Zakharenko)

In this episode we’re talking about technical debt, with Nina Zakharenko, Principal Cloud Developer Advocate at Microsoft. Nina talks about what causes technical debt, what can happen when it gets out of control, and how we can mitigate the accumulation of that debt.

Show Links

- AWS Insiders (sponsor)

- Technical debt

- Hanson

- HTML

- Yahoo! GeoCities

- Java

- Python

- The Recurse Center

- Mainframe computer

- COBOL

- Technical Debt: The code monster in everyone's closet

- Style guide

- PEP 8

- Unit testing

- Code review

- Git

- Microsoft Azure

- Visual Studio Code

- PyCon US

- The Ultimate Guide To Memorable Tech Talks

- CFP

- Open source

Nina Zakharenko

Nina Zakharenko is a developer advocate, software engineer, pythonista, & speaker.

I see you people. And I’m disappointed.

I’m not getting together in person with very many people these days. I connect with all my professional friends on social media and email. And you people are remarkably consistent — you either offer value, or you don’t. Here’s what I value: original, thoughtful content, and information I wouldn’t have seen otherwise. For example: Data, … Continued

The post I see you people. And I’m disappointed. appeared first on without bullshit.

Why we at $FAMOUS_COMPANY Switched to $HYPED_TECHNOLOGY

Beautiful piece of writing by Saagar Jha. "Ultimately, however, our decision to switch was driven by our difficulty in hiring new talent for $UNREMARKABLE_LANGUAGE, despite it being taught in dozens of universities across the United States. Our blog posts on $PRACTICAL_OPEN_SOURCE_FRAMEWORK seemed to get fewer upvotes when posted on Reddit as well, cementing our conviction that our technology stack was now legacy code."

Via Hacker News

What happens next with your Sonos setup

In four weeks, Sonos will release a new app and a new operating system for existing players. Let's call the existing platform S1 and the new one S2.

- If your household contains any "legacy" players, most likely a Connect, Connect:Amp, the original S5/Play:5 or a Bridge, nothing will change. You will remain on S1, you will continue to use your players as is, you will be able to use your streaming services, and you will receive bug fixes and security updates. However, Sonos is not going to add any new features to S1, even for the supported players in your household. You are basically stuck where you are today.

- If your household contains only supported players, you will migrate all players to S2. This gives you everything in S1, and you will get new features as you previously did. You can also add new players that depend on S2, like the new Arc sound bar.

- Things get tricky if you have both legacy and supported players and want to enjoy any of the new features or add new players. Your only option is to run two separate Sonos setups in your household, one running S1 and the other S2. Those two setups use two different apps, and they will not talk to each other.
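The three cases above boil down to a small decision rule. The Python below is a minimal sketch of that rule as described in this post, not anything Sonos publishes: the model strings in `LEGACY_MODELS`, the function `plan_household`, and its `wants_s2` flag (standing in for "wants new features or S2-only players such as the Arc") are all hypothetical illustrations.

```python
from enum import Enum
from typing import Iterable


class Platform(Enum):
    S1 = "S1 only"
    S2 = "S2 only"
    SPLIT = "separate S1 and S2 systems"


# Hypothetical labels for the legacy players named in the post. The post says
# "most likely", so this set is illustrative rather than an official list.
LEGACY_MODELS = {"Connect", "Connect:Amp", "S5/Play:5 (original)", "Bridge"}


def plan_household(models: Iterable[str], wants_s2: bool = False) -> Platform:
    """Sketch of the S1/S2 rules described in the post.

    models   -- player model names in the household (including planned additions)
    wants_s2 -- True if the owner wants new features or S2-only players
    """
    models = list(models)
    has_legacy = any(m in LEGACY_MODELS for m in models)
    has_supported = any(m not in LEGACY_MODELS for m in models)

    if not has_legacy:
        # Only supported players: the whole household migrates to S2.
        return Platform.S2
    if has_supported and wants_s2:
        # Mixed household that wants S2: two setups, two apps, no interaction.
        return Platform.SPLIT
    # Any legacy player and no push to S2: the household stays on S1.
    return Platform.S1
```

For example, `plan_household(["Connect", "Arc"], wants_s2=True)` returns `Platform.SPLIT`, the two-app setup described in the last bullet, while the same household without the push to S2 stays on `Platform.S1`.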

You will not like this split setup. In the long run, you can either get rid of the legacy players and move to S2, or stay on S1 forever. I have given away all my legacy players, so I will be moving on to S2. What is your plan?

Twitter Favorites: [ReneeStephen] I mean I definitely still used SO, but the PHP docs have always been extremely good and, I contend, part of its suc… https://t.co/kb29yx5kP7

Samsung rolling out patch for security vulnerability in all its phones since 2014

Samsung is patching a critical security vulnerability that impacts Galaxy phones sold since 2014.

Discovered by Google’s Project Zero, the security flaw lies in how Samsung phones handle a custom image format called ‘Qmage’ (.qmg). Samsung phones began supporting the format in late 2014, and it appears only Samsung modified the Android operating system to support Qmage. In other words, the flaw doesn’t affect other Android devices. The format was developed by South Korean company ‘Quramsoft.’

The vulnerability can be exploited in a ‘zero-click’ scenario. Zero-click vulnerabilities don’t require any user interaction. Further, Project Zero researchers told ZDNet that it’s possible to exploit the flaw without notifying the user.

According to the researchers, attackers could exploit the flaw by sending Qmage files to a Samsung device, taking advantage of how Android’s graphics library, ‘Skia,’ handles the images. Android passes all image files sent to a device to the Skia library for processing, which can include generating thumbnail previews. Android does this without users’ knowledge.

Project Zero researchers developed a proof-of-concept demo that exploits the bug against the Samsung Messages app, the default app for handling SMS and MMS communication on Samsung devices. The demo repeatedly sends MMS messages to a phone. These messages attempt to guess the position of the Skia library in Android’s memory, which is necessary to bypass Android’s Address Space Layout Randomization (ASLR) protection. Once the Skia library is located, a final MMS will deliver the Qmage payload, which executes the attacker’s code on the device.

In a video shared by Project Zero researcher Mateusz Jurczyk, you can see the exploit in action. The result is that Jurczyk gains control of the Samsung phone and, for example, is able to launch apps.

However, the process of locating Skia can take between 50 and 300 MMS messages. Researchers said it takes around 100 minutes on average.

Although it may seem like someone would notice hundreds of messages sent over almost two hours, Project Zero says the attack can be modified to send the messages silently without alerting the user.

While Project Zero only tested the exploit against Samsung Messages, researchers note that theoretically, any app running on a Samsung phone capable of receiving a Qmage file could be vulnerable.

Thankfully, Samsung’s May 2020 patch includes a fix for the vulnerability. If you’ve got a Samsung phone, keep an eye out for the May patch and update once it’s available.

Source: ZDNet

The post Samsung rolling out patch for security vulnerability in all its phones since 2014 appeared first on MobileSyrup.

Leaks suggest next iPhone could feature 120Hz display, 3x rear camera

The next iPhone could feature a 120Hz display refresh rate along with a 3x rear camera zoom and improved Face ID, according to recent leaks.

Notable leaker Max Weinbach shared the latest leaks with the ‘EverythingApplePro‘ YouTube channel. Weinbach suggests that the upcoming iPhone 12 Pro will switch between 60Hz and 120Hz to preserve battery life.

It may also feature a larger internal battery to accommodate the high-refresh-rate display and 5G support. Weinbach notes that the largest upcoming iPhone model could have a 4,400mAh battery, a significant increase from the 3,969mAh battery in the current iPhone 11 Pro Max.
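To put "significant" in numbers: taking the leaked 4,400mAh figure at face value (it is unconfirmed), the jump from 3,969mAh works out to roughly an 11% increase in capacity.

```latex
\frac{4400\,\text{mAh} - 3969\,\text{mAh}}{3969\,\text{mAh}} = \frac{431}{3969} \approx 0.109 \approx 10.9\%
```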

The leaks also suggest that the upcoming iPhone will have improved Face ID, with a wider field of view that lets it unlock from a wider range of angles.

Further, the telephoto lens could be upgraded to 3x optical zoom, up from the current 2x. MacRumors notes that the upgrade would let users take pictures and videos closer to a subject without the loss of quality that comes with digital zoom.

The leaks also suggest that the phones will have improved low-light photography with faster autofocus and enhanced image stabilization.

As with any leak, there’s no guarantee that these features will be included in the next iPhone lineup, as manufacturers often make last-minute changes or drop certain features before launch.

Source: MacRumors, EverythingApplePro

The post Leaks suggest next iPhone could feature 120Hz display, 3x rear camera appeared first on MobileSyrup.