Here's my Chana Masala recipe.

Rolandt

Shared posts

Must we mention the war?

|

mkalus

shared this story

from |

There’s relatively little happening as regards Brexit developments this week (although the increasing row over the Northern Ireland Protocol is important), and little new to say about such developments as there are (but see Dr Katy Hayward and Professor David Phinnemore’s analysis of the background to the row). As regards the current situation overall, Katya Adler, the BBC’s excellent Europe Editor, has provided a clear summary.

So instead of writing a new post, I am ‘re-upping’ one from 28 September 2018 on Brexit Britain’s war fixation. It seems appropriate since Britain is having a public holiday for VE Day. That was decided last year, to reflect that this will be the 75th anniversary (the same happened for the 50th) but perhaps also reflects, precisely, a fixation with the Second World War which is growing rather than diminishing with time, even as those who still remember it diminish in number. (For further, interesting, reflections on this do take a look at an excellent blog post by Miles King.)

And, indeed, since writing that post it does seem as if war obsession is growing and coarsening, with the behaviour of pantomime oaf Mark Francois a prime, even paradigmatic, case. That could be seen as harmless enough, but it does carry dangers as the post points out. These have come into even sharper relief during the coronavirus. For, as discussed in my post of a couple of weeks ago, dubious comparisons with Spitfire production or the Blitz have undoubtedly adversely affected Britain’s response to the pandemic.

Despite the post being about 18 months old, there is not much that I want to change or add (and I haven’t edited it apart from expunging one, now legally superseded, phrase), except for two things. One is that my point in the third footnote that people might not be sanguine if faced with disruptions to supplies in the event of no-deal Brexit seems borne out by the panic buying at the start of the pandemic crisis. The no-deal Brexit under discussion at the time was that of there being no Withdrawal Agreement at all, but similar disruptions can be envisaged if, at the end of December, we leave the transition period with no trade deal in place and, actually, even with such a deal there will be a need for new customs processes which will disrupt established supply chains. Also of note is that the coronavirus shortages were caused by a short-term demand spike; those at the end of the transition period will be driven by supply shortages, and may be of longer duration.

The second additional point is that, in response to the original post, I received several messages saying that I was ‘showing disrespect’ for those who fought in the war, or failed to understand its significance. This is nonsense. I spent eight years researching and writing a history of Bletchley Park, Britain’s wartime codebreaking organization. That history is itself often mythologized or misunderstood and as I wrote in the book it shows no lack of respect to those who worked there “to avoid sanitization and sentimentality … most of them would have regarded an attempt at analytical rigour as a more fitting tribute” (p.32).

That same idea matters in the current context, where there is sometimes a mood of almost authoritarian insistence upon jingoistic celebration. It would have seemed odd, I imagine, to those who were engaged in fighting authoritarianism and for individual freedom.

At all events, it is a strange historical irony that, in the long-run, it has proved more difficult for Britain – or perhaps England – to ‘get over’ winning than it has for other countries to get over defeat and occupation. And perhaps an even stranger and grimmer one that this has been partly responsible for Britain leaving the institution which embodies the successful attempt to provide the continent of Europe with an alternative to the horrors of both the war and its long, bleak, ‘cold’ aftermath. Happy Victory in Europe Day.

The September 2018 post follows.

Brexit Britain’s wartime fixation

In the run up to the referendum, it was widely remarked upon that one significant strand of the leave campaign channelled the British fixation with, and often mythologization of, World War Two (WW2). How big a part it played in the outcome of the vote is impossible to say, but it seems plausible that it was a factor amongst the demographic that voted most strongly for Brexit, the over 50s. This would be not so much people who remember WW2 – now a relatively small number – but the generation or two who grew up, as I did, in its shadow. It was a time when every other film and TV series was set in the war, when children made models of Spitfires and Lancasters, and teachers and parents spoke of ‘the war’ both routinely and as the defining event of their lives.

No doubt future analysts will have much to say about this*. For now, what matters most urgently is to understand how that same fixation and mythologization is impacting upon the ongoing politics of Brexit. As the outgoing German Ambassador to Britain remarked earlier this year, it has two components**. One is the idea of Britain ‘standing alone’, the other a narrative that links, as Boris Johnson has explicitly done, Nazi Germany’s attempt to subjugate Europe with the present-day EU. Perhaps we could add (at least) a third aspect, quite often seen on social media, that Europe owes Britain a debt of gratitude from the war that ought to be repaid by accepting all Britain’s negotiating demands.

These and similar sentiments constantly re-appear almost every day, and, possibly, with increasing regularity as the negotiations grind, stutter and stall. Just today there was a report of the views of Conservative Party members on these negotiations. One said: “I’d rather have no deal than a bad deal … if this country had a chance and an opportunity it could look after itself. In the second world war we were feeding ourselves”.

This is obviously historically inaccurate (much food was brought to Britain, at huge cost in human life and suffering, by Atlantic convoys) but the real point is that it seems to have been said not as a worst case scenario, but as something desirable, part of the opportunities of Brexit, not as a calamity or as Project Fear hyperbole. It is revealing of the quite different ways that people may react to things like the appointment this week of a Food Supplies Minister as part of no deal planning. Many will think it extraordinary that a rich country in peacetime could even be entertaining such a possibility but others may feel not just sanguine but enthusiastic about it***.

A national quirk takes centre stage

We’re no longer in the situation where this longstanding quirk of the British psyche can just be dismissed as an amusing eccentricity. It has somehow come to occupy centre stage in the politics of Brexit. The Brexit Cabinet sub-committee is routinely described as the ‘war cabinet’. Boris Johnson constantly attempts – and fails – to cultivate a Churchillian image.

Peter Hargreaves - the businessman who donated millions to the leave campaign, funding a leaflet to every household in the country - celebrates the insecurity Brexit will bring: “it will be like Dunkirk again”, he enthuses. Liam Fox announces his support for a round-the-world flight of a restored Spitfire to drum up exports for post-Brexit Britain.

Nor is war fever the preserve of crusty oldsters. Darren Grimes, the 22-year-old Brexit activist, recently pronounced that “we’re a proud island nation that survived a world war – despite blockades in the Atlantic that tried to starve our country into submission. I don’t think we’re about to be bullied by a French egomaniac [i.e. Macron]”.

There’s a certain bathos in all this. Brexit, sold to the voters as a sunny upland of national pride and prosperity, reduced to the glum promise by Brexit Secretary Dominic Raab that the government will ensure “there is adequate food supply”. Slogans like ‘it won’t be too bad’ or ‘you won’t actually starve’ would probably not have had much traction, no matter how shiny and red a bus they were written on.

The dangers of war fixation

But there is far more danger than humour in it. The underlying sentiment of confrontational antagonism has permeated the Brexit negotiations from the beginning. Brexiters, having won their great prize, and having assured us how easy it would be, immediately adopted a stance not of confident optimism but of sullen suspicion punctuated with bellicosity.

Recall one of the early moments that the complexities of Brexit became clear – in relation to Gibraltar – and former Conservative leader Michael Howard immediately started talking about war. Or, more low-key but showing how permeated with hostility the approach has been, Johnson’s ‘go whistle’ jibe or Davis’s ‘row of the summer’ bluster.

That’s one danger, and it has already done its damage. The far greater one is the now ingrained and sure to become worse narrative of EU ‘bullying’ and ‘punishment’. This is almost invariably accompanied by invocations of WW2, of standing alone, of German aggression and French duplicity. It is dangerous not so much because it often invokes a highly partial picture of the war but because it always invokes an entirely unrealistic picture of Brexit.

Britain, through its vote and its government’s actions, has chosen to leave and to do so in the form that it has. That entails losing all of the benefits of membership of the EU and of the single market. It is not bullying or punishment to be expected to face the consequences of that choice. Britain has not been forced by foreign aggression to ‘stand alone’: it has chosen to do so. It has backed itself into a corner, through lies and fantasies about the practical realities of what Brexit would mean. It is now in danger of telling itself lies and fantasies about why that has happened.

Britain’s wartime history is something we can justly feel proud of. For that matter there are plenty of people - older people, now, inevitably - in countries like France, Belgium and Holland who continue to feel gratitude for it. But pride should not mean truculence, bellicosity, entitlement and self-pity. Above all, Dunkirk was almost 80 years ago. There is also plenty to feel proud of since and, in any case, that one desperate moment in our history should not and does not define us forever.

*Indeed some already have. See in particular the excellent chapter by Robert Eaglestone, ‘Cruel Nostalgia and the Memory of the Second World War’ in Eaglestone, R. (Ed) Brexit and Literature. Critical and Cultural Responses. Routledge, 2018.

**It is noteworthy that even pointing this out was enough to enrage Brexiters, with the Daily Express railing against the comments for ‘mocking’ them.

***The relative numbers in these different camps will become politically significant if a no deal Brexit were to happen. Brexiters are likely to find much less appetite than they think for massive disruption to the amenities of everyday life, even amongst those who currently appear relaxed about the prospect.

Not moving on, not going away

The Brexit process is in another of its periodic ‘lull before the storm’ moments. So this will be quite a boring post, but at least it's fairly short. There were no talks scheduled this week but next week, when they resume, will be the last negotiations before a decision on whether to extend the Transition Period (TP) or not will have to be made. Nothing of substance has shifted on this.

Nothing has changed

Michael Gove and David Frost appeared before the EU Future Relationship Select Committee and made it clear that no extension remains the government position and, also, that whether or not a deal is done there will be no separate implementation period for businesses to adjust (thus, closing down the idea of a TP extension by the back door). As for the prospects of a deal being done, this again was discussed in terms of the need for the EU to shift its position, and to recognize the ‘precedents’ set in its relationships with Canada, Japan, Norway and so on as applying to the UK. All this was just a reiteration of the UK position since the February ‘Negotiations Approach’ document (as discussed and critiqued in a previous post).

So there was nothing new here and no sense of realism in what was said – at one stage David Frost even suggested that “we are still at a relatively early stage of the negotiations” which seems rather bizarre. Nevertheless, it was recognized that a fisheries deal, meant to be completed by the end of June, may not meet this (soft) deadline which might, at a pinch, suggest some realization that the timeframes overall are unrealistic. On the other hand, Frost reiterated the suggestion that not only would the UK not agree an extension period by the end of June, but that Boris Johnson might even walk out of the talks altogether at that point.

On the EU side, a letter from Michel Barnier to opposition party leaders in the UK Parliament re-iterated an openness to a one or two-year extension. No real news there, either, since it says no more than is contained within the Withdrawal Agreement. But it flagged up an important domestic political issue in that the leaders in question did not include Keir Starmer. This was not an oversight on Barnier’s part – his letter was a reply to those opposition leaders who had written to him, and Starmer was not amongst them.

Starmer’s stance on extension

This reflects the fact that he continues to reject demands to call for an extension, saying instead that the government has promised to negotiate a deal by the end of the year and should be held to account on this. This is smart politics, for now, because it prevents Johnson presenting extension as a Labour demand and a remainer trick – and in the process reactivating the Brexit culture war as a party political divide – rather than a rational response to the force majeure of the coronavirus crisis.

But it is now reaching its limits, for several reasons. First, just as a matter of principle, it would be wrong to treat something as crucial as extension as a taboo topic simply for fear of being dubbed a ‘remainer party’. Second, there is a large constituency, by no means confined to erstwhile remainers, who want extension and whose voice Labour should represent. Third, for this reason there are also political advantages in calling for extension because it will appeal to many voters and, also, could position Labour as more business-friendly than the Tories. At the very least it would consolidate Starmer's credentials as a serious-minded pragmatist. And, finally, because it would set down a marker for the future – if Starmer fails to call for an extension in the coming month he will have made Labour to a degree complicit in the consequences of non-extension in a not dissimilar way to how its support for triggering Article 50 made it complicit in Brexit itself.

So, if Starmer continues to be as sure-footed an opposition leader as he has been so far, there are good reasons of principle and tactics to call for extension soon. He would be aided in this if some loud business and civil society voices prepared the ground by doing the same – providing some juicy quotes for use at PMQs in much the same way as he has so effectively used those from medical experts to puncture Johnson’s coronavirus bluster - just as they would be aided in speaking out were he to do so. There really is very little time to be lost in this, and the ongoing coronavirus crisis should not blind Labour, or anyone else, to the fact that what happens in the next month as regards TP extension is going to shape what happens to Britain for years.

Extension and the Cummings affair

Of course, no matter how vociferously an extension is demanded it looks highly unlikely that the government will budge. I wrote in detail about the wider significance of the Cummings affair in an ‘extra’ post on this blog earlier this week. But on the narrow issue of TP extension it is also important, in quite intricate ways.

One of the arguments with which Cummings’ supporters have tried to close down criticism of him from Tory MPs is to suggest that, were he forced to resign, the Brexit project itself would be under threat, and extension would become more likely. This message has a particular salience given that high profile Brexiter MPs including Steve Baker and Peter Bone have been amongst those calling for Cummings’ resignation.

To the extent that he is reportedly adamantly opposed to extension there might be some truth in this but, overall, it seems like a false hare. Is Brexit policy really dependent on just one advisor? This was presumably the thrust behind Bone’s questioning of David Frost at the Select Committee, as to whether he, Frost, operated under orders from Cummings, and whether Brexit policy would collapse without Cummings. The answer was that he does not and that it would not.

What is more important about the Cummings affair is that whether he stays or goes – which still remains an open question - it has made it even less likely that the UK will seek or accept an extension to the TP. On the one hand, with so much internal dissent already in the Tory Party over Cummings, the chances of Johnson now facing down the ERG Ultras are even smaller than before. On the other hand, the discomfort of the affair, coupled with its wider context of the government’s incompetent handling of coronavirus, makes the comfort and relative safety of his core ‘get Brexit done’ message all the more appealing to Johnson.

One might, of course, hope that the small matter of the national interest would be a factor here but to do so would be to invest Johnson with qualities he self-evidently lacks. It would in any case entail that he broke with recent history by framing Tory policy on Europe in terms of national, rather than party, interest. After the election, I wrote that the fundamental dynamics of Brexit remained unchanged and that the third of them was that any government was constrained by the need to avoid massive economic dislocation, which put a brake on the second of them, namely internal Tory Party politics.

That has changed, partly because I hadn’t anticipated quite how much “f*** business” was to become actual government policy, but mainly because of the impact of the coronavirus crisis. Amongst Brexiters, this is interpreted to make it even more likely that the EU will ‘blink’ and do a deal on UK terms by the end of the year and to strengthen the UK’s ‘hand’ (£), but also to mean that, if it doesn’t, the economic damage of no deal will be concealed by or subsumed within that of the virus. So with the dynamic of economic realism more muted, the constraints upon a policy framed purely in terms of the internal interests of the Tory Party are even fewer than before.

Moving on

Yet it is this which, going back to Labour, gives Starmer an opening. As suggested in my previous post, the Cummings episode makes a mockery of the populist claim of anti-elitism, and this can be married together with the idea of a government turning its back on the public interest to good effect. Just as ‘we can now see that they only care for themselves when it comes to lockdown’ so, Labour can argue, ‘we can see that they don’t care about damaging your jobs and livelihoods’.

And, yes, no doubt Johnson will try to twist that round to mean ‘you never accepted Brexit’ but, like much else about Brexit, that will be so 2019. Coronavirus has not only reshaped the arguments for TP extension, it is also, and at speed, reshaping the entire political landscape. Johnson and the Brexiters would love to pull things back to the heady ‘will of the people’ days but ‘the people’ have, to coin the phrase du jour, ‘moved on’. There’s a tide there that Starmer can catch to Labour Party advantage but, much more importantly, to the benefit of us all. For the idea that a country, battered by coronavirus, should be led into the calamity of no deal in just six months’ time, or even just to the shocks of moving from single market membership to a limited trade agreement, is plain crazy.

And, please, let’s have no nonsense about this being to do with thwarting Brexit. Not only has Brexit happened, in the precise sense of having left the EU, but absolutely nothing in what the government now envisages is remotely – remotely – like what leave voters were promised in 2016, or indeed for years afterwards, including at the last election (when, in fact, its details were barely discussed). Not only was an economically advantageous deal promised, but it would be negotiated before the formal process to leave was even begun.

The magnitude of these lies still has the power to shock. The idea that they now mandate a Prime Minister who is daily exposed as being completely out of his depth in every respect, heading a government which already looks exhausted and incompetent (£), and acting solely in the interests of a small faction of fanatical nihilists, to drag us into even greater disaster is grotesque. Grotesque in every conceivable way: democratically, intellectually, economically, and morally.

Patched

The duvet cover on my bed had a couple of holes in it that were only getting bigger with time. Duvet covers are expensive, and I kind of like this one, so I decided to see if I could patch it.

The most frequent suggestion you run into online for patching things like this is to use fusible interfacing, essentially an iron-on patch. Seemed reasonable but for Charlottetown being sold out of it (now that all the pandemic bread has been baked, are we turning to pandemic patching en masse?).

At Walmart, however, I did find some fabric-patching glue, and decided to try that.

I cut out a piece of similar-looking fabric about an inch larger than each hole, tucked them inside the duvet, and then applied a thin layer of glue.

I wasn’t content to leave my patch in the hands of chemistry, so I supplemented the glue with some hand-sewing around the edges. I managed to make something of a mess of things, but, in the end, it all held (I should become a better sewer).

The result isn’t elegant or invisible, but the holes are patched.

RT @getfiscal: Journalists: There is no Christian Left. Ezekiel: Hold my buttermilk....

Journalists: There is no Christian Left.

Ezekiel: Hold my buttermilk.... twitter.com/MyGalaxyBTSArm…

Even the Amish are getting in on the protests #Minneapolisprotests #BlackLivesMatter pic.twitter.com/jYefNLWJNK

4501 likes, 1312 retweets

wtyppod

on Saturday, May 30th, 2020 5:01pm

1566 likes, 116 retweets

RT @Haggis_UK: Paul Merton - "Why did Dominic Cummings feel the need to go back to work... is it too difficult to bully people on Zoom?" 🤣…

Paul Merton - "Why did Dominic Cummings feel the need to go back to work... is it too difficult to bully people on Zoom?" 🤣

Ian Hislop - "Why is a political aide making decisions about vaccines?"

#hignfy pic.twitter.com/7S4clNHzbG

mrjamesob

on Saturday, May 30th, 2020 8:19am

6572 likes, 2430 retweets

A little over two years ago, in February 2018, ...

A little over two years ago, in February 2018, SpaceX launched a Tesla into space with a Don’t Panic sticker on the dashboard, while landing two boosters back on Earth. Now, for the first time in years, the USA has launched its own astronauts to the ISS. The last Space Shuttle flight took place in 2011 (and the first one three decades before it, in April 1981). Quite a feat.

(screenshot from the live feed)

OnePlus says it accidentally released update that removes controversial camera filter

OnePlus accidentally rolled out an over-the-air (OTA) update that removed the x-ray-like ‘Photochrom’ filter from its camera app.

In case you missed it, the China-based phone maker has come under fire over the last few weeks because of the filter. Included in the camera app of the OnePlus 8 Pro, it uses infrared sensors to capture light not visible to the human eye to create an x-ray effect. For the most part, the filter affected thin black plastics and also some thin clothing.

After facing backlash for the possible privacy implications of the filter, OnePlus said it would remove the filter from HydrogenOS, the version of its phone software made for China. However, global users on the company’s OxygenOS software would receive an update that would limit some of the “functionality that may be of concern.”

However, that update accidentally removed the filter from the global software. If you’ve got a OnePlus 8 Pro, OxygenOS 10.5.9 will remove the Photochrom filter if you apply the update. XDA Developers reports that the update notes it spotted said the filter was “temporarily removed for adjustment.”

OnePlus issued a statement about the update on its forums, noting the “OTA inadvertently went out to a limited number of devices.” OnePlus plans to enable the filter again in the next software update.

Source: OnePlus Via: The Verge

The post OnePlus says it accidentally released update that removes controversial camera filter appeared first on MobileSyrup.

Stream Like a CEO

Update: There’s an updated 2021 version of this setup.

When Bill Gates was on Trevor Noah’s show it was amazing how much better quality his video was. I had experimented with using a Sony camera and capture card for the virtual event we did in February when WordCamp Asia was canceled, but that Trevor Noah video and exchanging some tweets with Garry Tan sent me down a bit of a rabbit hole, even after I was on-record with The Information saying a simpler setup is better.

The quality improved; however, something was still missing: I felt like I wasn’t connecting with the person on the other side. When I reviewed recordings, especially for major broadcasts, my eyes kept looking at the person on the screen rather than looking at the camera.

Then I came across this article about the Interrotron, a teleprompter-like device Errol Morris would use to make his Oscar-winning documentaries. Now we’re onto something!

For normal video conferencing a setup this nice is a distraction, but if you’re running for political office during a quarantine, or you’re a public company CEO talking to colleagues and the press, here’s a cost-is-no-object CEO livestreaming kit you can set up pretty easily at home.

GEAR GUIDE

Basically what you do is put the A7r camera, shotgun mic, and the lens together and switch it to video mode, go to Setup 3, choose HDMI settings, and turn HDMI Info Display off — this gives you a “clean” video output from the camera. You can run off the built-in battery for a few hours, but the Gonine virtual battery above lets you power the camera indefinitely. Plug the HDMI from the camera to the USB Camlink, then plug that into your computer. Now you have the most beautiful webcam you’ve ever seen, and you can use the Camlink as both a video source and an audio source using the shotgun mic. Put the Key Light wherever it looks best. You’re fine to record something now.

If you’d like to have a more two-way conversation Interrotron style, set up the teleprompter on the tripod, put the camera behind it, connect the portable monitor to your computer (I did HDMI to a Mac Mini) and “mirror” your display to it. (You can also use an iPad and Sidecar for that.) Now you’ll have a reversed copy of your screen on the teleprompter mirror. I like to put the video of the person I’m talking to right over the lens, so near the bottom of my screen, and voilà! You now have great eye contact with the person you’re talking to. The only thing I haven’t been able to figure out is how to horizontally flip the screen in macOS so all the text isn’t backward in the mirror reflection. For audio I usually just use a headset at this point, but if you want to not have a headset in the shot…

Use a discreet earbud. I love in-ear monitors from Ultimate Ears, so you can put one of these in and run the cable down the back of your shirt, and I use a little audio extender cable to easily reach the computer’s 3.5mm audio port. This is “extra” as the kids say and it may be tricky to get an ear molding taken during a pandemic. For the mic I use the audio feed from the Camlink, run through Krisp.ai if there is ambient noise, and it works great (except in the video above where it looks a few frames off and I can’t figure out why. On Zoom it seems totally normal).

Here’s what the setup looks like all put together:

After that photo was taken I got a Mac Mini mount and put the computer under the desk, which is much cleaner and quieter, but used this earlier photo so you could see everything plugged in. When you run this off a laptop its fan can get really loud.

Again, not the most practical for day to day meetings, but if you’re doing prominent remote streaming appearances—or if your child is an aspiring YouTube star—that’s how you can spend ~9k USD going all-out. You could drop about half the cost with only a minor drop in quality switching the camera and lens to a Sony RX100 VII and a small 3.5mm shotgun mic, and that’s probably what I’ll use if I ever start traveling again.

If I were to put together a livestreaming “hierarchy of needs,” it would be:

- Solid internet connection (the most important thing, always)

- Audio (headset mic or better)

- Lighting (we need to see you, naturally)

- Webcam (video quality)

We’ve put together a Guide to Distributed Work Tools here, which includes a lot of great equipment recommendations for day-to-day video meetings.

Get Airfoil for Mac 5.9, the Latest Version of Our Audio Streamer

Continuing our parade of free product updates, we’ve just released Airfoil 5.9. It’s the latest version of our audio streaming tool, perfect for playing audio through wireless devices all around your house.

For over 15 years now, Airfoil has made it possible to stream any audio from your Mac to devices around your network. Today’s update brings refinements and improvements throughout the application. Read more below, or just visit Airfoil’s product page to download the latest.

Equalizer Refinements

Airfoil’s built-in 10-Band Lagutin Equalizer has been substantially improved. It now offers a handy new sparkline indicator in the Presets menu, which provides a quick idea of how each preset works. The saving and editing of user-created Presets has also been overhauled and improved. To top it off, transitions between presets now animate beautifully as well.

A New “Alerts” Window

Whether it’s an incorrectly entered password or a problem with a remote speaker, errors are bound to happen sooner or later. When they do, Airfoil will now show them all in a centralized location, its new “Alerts” window.

After first adding the Alerts window to Farrago 1.5, we’ve now refined it further in Airfoil. Over time, it’s likely to show up in all our products, as a useful way of letting users know of any issues that appear.

Track Title Improvements

When Airfoil transmits audio from supported applications, it also passes along metadata about that audio, like artist name, track title, and album artwork. Today’s update adds support for track titles from the MiX16 apps, and restores track title support to Audirvana. It also improves the performance of track title retrieval from several apps including Spotify, Music.app, and VLC.

And More

As always, there are many smaller fixes and improvements as well. Airfoil’s audio capture, powered by the Audio Capture Engine (ACE), has been updated. It will now properly grab audio from Google Chrome’s Progressive Web Apps, and several smaller bug fixes and refinements have been made. In addition, Airfoil now correctly streams only the remote half of a VoIP conversation, perfect for listen-only calls. Alongside that, we’ve made small enhancements for VoiceOver users, and fixed up a number of rare bugs.

Download Airfoil for Mac 5.9 Now

If you’re new to Airfoil, use the links below to learn more, and download the free trial. In no time at all, you’ll be streaming audio all around your house.

For our existing customers, Airfoil 5.9 is another free update. Existing Airfoil users should select “Check for Update” from the Airfoil menu to get the latest.

South Philippine Dwarf Kingfisher

The South Philippine Dwarf Kingfisher (Ceyx mindanensis) was first described 130 years ago during the Steere Expedition to the Philippines in 1890, and was photographed this year for the very first time. So beautiful. Via @rogre.

The many ways to pass code to Python from the terminal

For the Python extension for VS Code, I wrote a simple script for generating our changelog (think Towncrier, but simpler, Markdown-specific, and tailored to our needs). As part of our release process we have a step where you are supposed to run python news which points Python at the news directory in our repository. A co-worker the other day asked how that worked since everyone on my team knows to use -m (see my post on using -m with pip as to why)? That made me realize that other people probably don't know the myriad of ways you can point python at code to execute, hence this blog post.

Via stdin and piping

Since how you pipe things into a process is shell-specific, I'm not going to dive too deep into this. Needless to say, you can pipe code into Python.

echo "print('hi')" | python

This obviously also works if you redirect a file into Python.

python < spam.py

Nothing really surprising here thanks to Python's UNIX heritage.

A string via -c

If you just need to quickly check something, passing the code in as a string on the command-line works.

python -c "print('hi')"

I personally use this when I need to check something that's only a line or two of code instead of launching the REPL.
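As a minimal sketch, the stdin and -c forms can both be driven from Python itself with subprocess. The helper names run_via_stdin and run_via_c are made up for this illustration, and sys.executable stands in for whatever python is on your path.

```python
import subprocess
import sys


def run_via_stdin(code):
    """Feed source code to a fresh interpreter on stdin, like `echo ... | python`."""
    result = subprocess.run(
        [sys.executable], input=code, capture_output=True, text=True, check=True
    )
    return result.stdout


def run_via_c(code):
    """Pass source code as a string argument, like `python -c "..."`."""
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    print(run_via_stdin("print('hi')\n"), end="")
    print(run_via_c("print('hi')"), end="")
```

Both calls should produce the same output, since only the way the code reaches the interpreter differs.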

A path to a file

Probably the most well-known way to pass code to python is via a file path.

python spam.py

The key thing to realize about this is the directory containing the file is put at the front of sys.path. This is so that all of your imports continue to work. But this is also why you can't/shouldn't pass in the path to a module that's contained within a package. Since sys.path won't have the directory that contains the package, all your imports will be relative to a different directory than you expect for your package.
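To see the sys.path behaviour for yourself, here is a small sketch that writes a throwaway spam.py into a temporary directory, runs it by path, and reports what the script saw as sys.path[0]. The function name and the temporary layout are assumptions made for the example, and it presumes the interpreter is not started in a mode that suppresses this entry.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def script_dir_and_sys_path0():
    """Run a script by file path; return (its directory, the sys.path[0] it saw)."""
    with tempfile.TemporaryDirectory() as tmp:
        # Resolve symlinks up front so both values are comparable.
        script_dir = Path(tmp).resolve()
        script = script_dir / "spam.py"
        script.write_text("import sys\nprint(sys.path[0])\n")
        result = subprocess.run(
            [sys.executable, str(script)],
            capture_output=True,
            text=True,
            check=True,
        )
        return str(script_dir), result.stdout.strip()


if __name__ == "__main__":
    expected, seen = script_dir_and_sys_path0()
    print("script directory:", expected)
    print("sys.path[0]:     ", seen)
```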

Using -m with packages

The proper way to execute a package is by using -m and specifying the package you want to run.

python -m spam

This uses runpy under the hood. To make this work with your project all you need to do is specify a __main__.py inside your package and it will get executed as __main__. And the submodule can be imported like any other module, so you can test it and everything. I know some people like having a main submodule in their package and then make their __main__.py be:

from . import main

if __name__ == "__main__":
    main.main()

Personally, I don't bother with the separate main submodule and put all the relevant code directly in __main__.py as the module names feel redundant to me.
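The following sketch builds a tiny throwaway package on disk and runs it with -m, using the main-submodule layout described above. The package name spam and the printed message are placeholders; nothing here comes from a real project.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_package_with_m():
    """Create a package with a __main__.py and execute it via `python -m spam`."""
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp) / "spam"
        pkg.mkdir()
        (pkg / "__init__.py").write_text("")
        (pkg / "main.py").write_text(
            "def main():\n    print('hello from spam.main')\n"
        )
        (pkg / "__main__.py").write_text(
            "from . import main\n\n"
            "if __name__ == '__main__':\n"
            "    main.main()\n"
        )
        # Run from the directory that contains the package so `spam` is importable.
        result = subprocess.run(
            [sys.executable, "-m", "spam"],
            cwd=tmp,
            capture_output=True,
            text=True,
            check=True,
        )
        return result.stdout.strip()


if __name__ == "__main__":
    print(run_package_with_m())
```

Because the package is imported properly, the relative import in __main__.py works, which it would not if you ran python spam/__main__.py directly.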

A directory

Defining a __main__.py can extend to a directory as well. If you look at my example that instigated this blog post, python news works because the news directory has a __main__.py file. Python executes that like a file path. Now you might be asking, "why don't you just specify the file path then?" Well, it's honestly one less thing to know about a path. 😄 I could have just as easily written out instructions in our release process to run python news/announce.py, but there is no real reason to when this mechanism exists. Plus I can change the file name later on and no one would notice. Plus I knew the code was going to have ancillary files with it, so it made sense to put it in a directory versus as a single file on its own. And yes, I could have made it a package to use -m, but there was no point as the script is so simple I knew it was going to stay a single, self-contained file (it's less than 200 lines and the test module is about the same length).

Besides, the __main__.py file is extremely simple.

import runpy

# Change 'announce' to whatever module you want to run.
runpy.run_module('announce', run_name='__main__', alter_sys=True)

Now obviously there's having to deal with dependencies, but if your script just uses the stdlib or you place the dependencies next to the __main__.py then you are good to go!
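Here is a self-contained sketch of the directory mechanism: it creates a temporary news directory holding a stand-in announce.py plus the __main__.py shown above, then points the interpreter at the directory. The contents of announce.py are invented for the demonstration and are not the real changelog script.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

MAIN_PY = (
    "import runpy\n"
    "runpy.run_module('announce', run_name='__main__', alter_sys=True)\n"
)

# Hypothetical stand-in for the real script.
ANNOUNCE_PY = (
    "if __name__ == '__main__':\n"
    "    print('generating changelog')\n"
)


def run_directory():
    """Execute a directory containing __main__.py, like `python news`."""
    with tempfile.TemporaryDirectory() as tmp:
        news = Path(tmp) / "news"
        news.mkdir()
        (news / "__main__.py").write_text(MAIN_PY)
        (news / "announce.py").write_text(ANNOUNCE_PY)
        result = subprocess.run(
            [sys.executable, str(news)],
            capture_output=True,
            text=True,
            check=True,
        )
        return result.stdout.strip()


if __name__ == "__main__":
    print(run_directory())
```

The announce module is found because the directory itself ends up on sys.path when Python executes it.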

Executing a zip file

When you do have multiple files and/or dependencies and you want to ship your code as a single unit, you can place it in a zip file with a __main__.py and Python will run that file on your behalf with the zip file placed on sys.path.

python app.pyz

Now traditionally people name such zip files with a .pyz file extension, but that's purely tradition and does not affect anything; you can just as easily use the .zip file extension.

To help facilitate creating such executable zip files, the stdlib has the zipapp module. It will generate the __main__.py for you and add a shebang line so you don't even need to specify python if you don't want to on UNIX. If you are wanting to move around a bunch of pure Python code it's a nice way to do it.
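As a rough sketch of the zipapp workflow, the snippet below builds an app.pyz from a temporary source directory and runs it. The file names helper.py and app.pyz and the greeting are assumptions for the example. Here the __main__.py is written by hand; zipapp can also generate one if you pass its main argument instead.

```python
import subprocess
import sys
import tempfile
import zipapp
from pathlib import Path


def build_and_run_pyz():
    """Bundle a small pure-Python app into a .pyz archive and execute it."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "app"
        src.mkdir()
        (src / "helper.py").write_text(
            "def greet():\n    return 'hello from a zip file'\n"
        )
        # The archive is placed on sys.path, so `import helper` resolves inside it.
        (src / "__main__.py").write_text("import helper\nprint(helper.greet())\n")
        target = Path(tmp) / "app.pyz"
        zipapp.create_archive(src, target)
        result = subprocess.run(
            [sys.executable, str(target)],
            capture_output=True,
            text=True,
            check=True,
        )
        return result.stdout.strip()


if __name__ == "__main__":
    print(build_and_run_pyz())
```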

Unfortunately using a zip file like this only works when all the code the zip file contains is pure Python. Executing zip files as-is doesn't work for extension modules (this is why setuptools has a zip_safe flag). To load an extension module Python has to call the dlopen() function and it takes a file path which obviously doesn't work when that file path is contained within a zip file. I know at least one person who talked to the glibc team about adding support for passing in a memory buffer so Python could read an extension module into memory and pass that in, but if memory serves the glibc team didn't go for it.

But not all hope is lost! You can use a project like shiv which will bundle your code and then provide a __main__.py that will handle extracting the zip file, caching it, and then executing the code for you. While not as ideal as the pure Python solution, it does work and is about as elegant as one can get in this situation.

Richards Street Bike Lane: Decades in the Making

From Jeff Leigh of HUB, with photos by Clark.

Construction continues on Richards Street, with the new protected bi-directional bike lanes. These lanes replace the painted lanes that were one way, and will provide valuable connections to our downtown cycling network.

Construction is underway from Cordova to Nelson. This summer City crews will shift to the southern end of Richards and complete the improvements through to Pacific Boulevard.

The planter boxes for the new street trees. (There are 100 trees planned.)

PT: Planning and construction for Richards did not take decades obviously, but the route to get to this point goes back to the 1970s when, after lobbying and advocacy by many of our two-wheeled pioneers, the first vision was developed by the cycling advisory committee and then approved by Council in the early 1980s. From there, it took decades more to approve funding in capital plans, to develop specific work plans, to evolve ever more advanced designs (particularly the separated bi-directional routes pioneered on Dunsmuir Street), and to commit to a completely integrated network not only through downtown but across the city and region. That may take decades more.

But as the Beach Flow Way and the new Slow Streets show, it’s possible to advance a decades-long vision in a matter of months. They, however, are temporary and experimental. When you start pouring concrete, it’s best to have done the detail work only possible by building on the work of generations past.

Deno is a Browser for Code

One of the most interesting ideas in Deno is that code imports are loaded directly from URLs - which can themselves depend on other URL-based packages. On first encounter it feels wrong - obviously insecure. Deno contributor Kitson Kelly provides a deeper exploration of the idea, and explains how the combination of caching and lock files makes it no less secure than code installed from npm or PyPI.

Via Hacker News

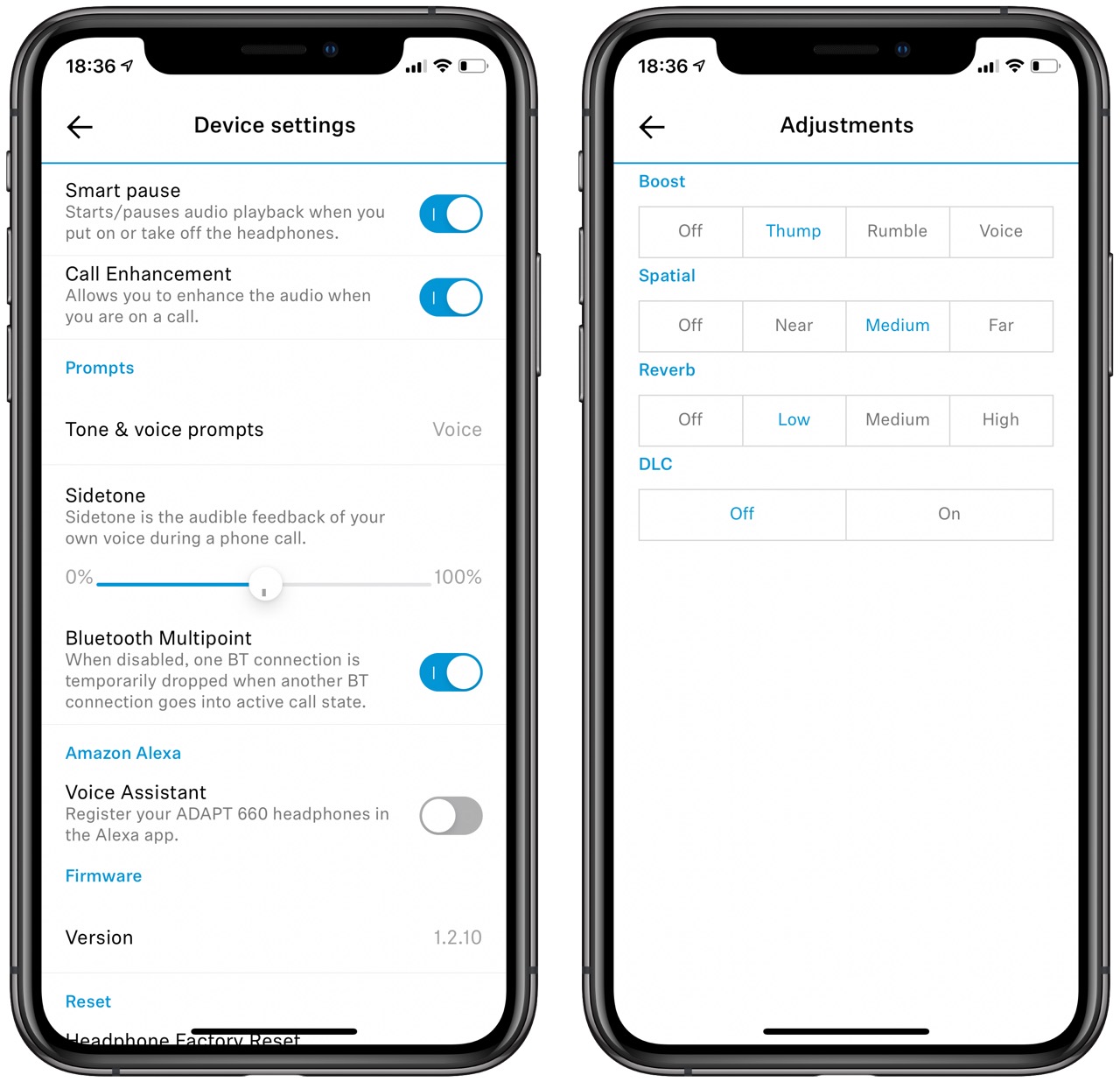

EPOS Adapt 660 :: First Impressions

Headphones are like shoes. When you slip into them, you know right away whether they fit or not. And yet you still have to break them in so that you don't get blisters. That applies especially to the EPOS Adapt 660. It fit me excellently straight away and, surprisingly, it also fit the boss, who has a much smaller head. And yet getting used to it was difficult, which has a lot to do with the software.

To clear the obvious elephant out of the room: why EPOS Adapt? Isn't that a Sennheiser MB-660 UC? Yes and no. EPOS is a company of the Danish Demant group and emerged from Sennheiser Communications. The whole story is here. Because EPOS is continuing Sennheiser's enterprise headsets, I intend to cover these headsets in future. What distinguishes the Adapt 660 from the MB-660 UC? Above all, the software. The button that changes the sound profile on the Sennheiser headset is responsible for Microsoft Teams on the Adapt 660. In addition, EPOS includes the USB dongle that is essential for PC use, without which integration into softphones does not work properly. The competitors Jabra and Poly/Plantronics do exactly the same.

What is good about the Adapt 660? The fit, and with it the passive noise isolation, and the typical neutral Sennheiser sound. That is right from the first moment. The headset connects to up to eight devices via Bluetooth, two of them at the same time. There is also an audio cable with a 3.5 mm plug on the PC side and a 2.5 mm plug on the headset. The Micro-USB charging cable carries the audio signal as well when you plug the USB-A connector into a PC or Mac. In addition to the very good passive noise isolation there is ANC (Active Noise Cancelling), which you can switch on and off with a three-position switch. In the middle position, ANC adapts to the ambient noise and can be adjusted in the app.

While getting to know the controls, I found the online documentation for the MB-660 UC very helpful. I did not find such a detailed manual from EPOS. I searched for quite a while before I found the sidetone setting (gear icon in the app), the mute function (swipe backwards during a call), and the hear-through function (double tap). The headset can do everything you expect from a business headset of this kind, but you first have to find it and practise it. That is what I meant at the start by breaking in new shoes.

The headset has unusually few switches. As with the Jabra Elite 85h, you switch it on and off by rotating the ear cups into the park position. Then there are the switches already mentioned for ANC and Microsoft Teams, and a third switch that turns Bluetooth off. The headset is then switched on and provides ANC, but does not connect to any device. On a phone that would be airplane mode. Everything else is done with touch gestures on the right ear cup. Once you have learned the gestures, they work flawlessly.

What disappointed me is the voice quality, especially in loud environments. The headset has four microphones for ANC and three microphones on the right ear cup which, wired as an array, are supposed to pick up the voice perfectly. Other headsets such as the Surface Headphones, the Bose NC 700, the Jabra Elite 85h, or the Poly Voyager 8200 use four microphones, two each on the left and right. Those four headsets do a better job in my loud test environment of separating the voice from the ambient noise. The Adapt 660 had audible problems with dropouts. In quiet surroundings the voice transmission is good, but not outstanding.

The app lets you adjust the sound through several sound profiles: Neutral, Club, Movie, Speech, and Director. This last profile can be configured by the user, as the figure shows.

First verdict: a very light and comfortable headset with very good noise cancellation and perfect sound. A short, steep learning curve because of weak documentation, and in my test field rather weak noise suppression on the outgoing voice.

A failure of formats

I have a very long diatribe on the state of document editors that I am working on, and almost anyone that knows me has heard parts of it, as I frequently rail against all of them: MS Word, Google Docs, Dropbox Paper, etc. In the process of writing up my screed I started thinking what a proper document format would look like and realized the awfulness of all the current document formats is a much more pressing problem than the lack of good editors. In fact, it may be that failing to have a standard, robust, and extensible file format for documents might be the single biggest impediment to having good document editing tools. Seriously, even if you designed the world’s best document editor but all it can output is PNGs then how useful is your world’s best document editor?

Let’s start with HTML, as that is probably the most successful document format in the history of the world outside of plain old paper. I can write an HTML page, host it on the web, and it can be read on any computer in the world. That’s amazing reach and power, but if you look at HTML as a universal document format you quickly realize it is stunted and inadequate. Let’s look at a series of comparisons between HTML and paper, starting with simple text, a note to myself:

Now this is something that HTML excels at:

<p>Get Milk</p>

And here HTML is better than paper because that HTML document is easily machine readable. I throw in the word “easily” because I know some ML/AI practitioner will come along and claim they can also “read” the image, but we know there are many orders of magnitude difference in processing power and complexity of those two approaches, so let’s ignore them.

So far HTML is looking good. Let’s make our example a little more complex; a shopping list.

This again is something that HTML is great at:

<h1>Shopping list</h1>

<ul>

<li>Milk</li>

<li>Eggs</li>

</ul>

Not only can HTML represent the text that’s been written, but can also capture the intended structure by encoding it as a list using the <ul> and <li> elements. So now we are expressing not only the text, but also the meaning, and again this representation is machine readable.

At this point we should take a small detour to talk about the duality we are seeing here with HTML, between the markup and the visual representation of that markup. That is, the following HTML:

<h1>Shopping list</h1> <ul> <li>Milk</li> <li>Eggs</li> </ul>

Is rendered in the browser as:

Shopping list

- Milk

- Eggs

The markup carries not only the text, but also the semantics. I hesitate to use the term ‘semantics’ because that’s an overloaded term with a long history, particularly in web technology, but that is what we’re talking about. The web browser is able to convert from the markup semantics, <ul> and <li>, into the visual representation of a list, i.e. vertically laying out the items and putting bullets next to them. That duality between meaningful markup in text, distinct from the final representation, is important as it’s the distinction that made search engines possible. And we aren’t restricted to just visual representations, screen readers can also use the markup to guide their work of turning the markup into audio.
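To make "machine readable" concrete, here is a minimal sketch that pulls the list items back out of the shopping list markup using Python's standard html.parser. The class and function names are invented for this illustration; any consumer of the markup, a search engine or a screen reader, does something similar in spirit.

```python
from html.parser import HTMLParser

SHOPPING_LIST = "<h1>Shopping list</h1> <ul> <li>Milk</li> <li>Eggs</li> </ul>"


class ListItemCollector(HTMLParser):
    """Collect the text content of every <li> element."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_li = False

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._in_li = True
            self.items.append("")

    def handle_endtag(self, tag):
        if tag == "li":
            self._in_li = False

    def handle_data(self, data):
        # Only text that appears inside an <li> belongs to the list.
        if self._in_li:
            self.items[-1] += data


def list_items(markup):
    collector = ListItemCollector()
    collector.feed(markup)
    collector.close()
    return [item.strip() for item in collector.items]


if __name__ == "__main__":
    print(list_items(SHOPPING_LIST))
```

The heading text is ignored because the parser knows it is not part of the list, which is exactly the structural information a photo of a paper note does not carry.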

But as we make our example a little more complex we start to run into the limits of HTML, for example when we draw a block diagram:

When the web was first invented your only way to add such a thing to a web page would have been by drawing it as an image and then including that image in the page:

<img src="server.png" title="Server diagram with two disk drives.">

The image is not very machine readable, even with the added title attribute. HTML didn’t initially offer a native way to create that visualization in a semantically more meaningful way. About a decade after the web came into being SVG was standardized and became available, so you can now write this as:

<svg width="580" height="400" xmlns="http://www.w3.org/2000/svg">
  <title>Server diagram with two disk drives.</title>
  <g>
    <rect height="60" width="107" y="45" x="215" stroke-width="1.5" stroke="#000" fill="#fff"/>
    <text font-size="24" y="77" x="235" stroke-width="0" stroke="#000" fill="#000000" id="svg_3"> Server </text>
    <line y2="204" x2="173" y1="105" x1="267" stroke-width="1.5" stroke="#000" fill="none" id="svg_4"/>
    <rect height="59" width="125" y="205" x="98" stroke-width="1.5" stroke="#000" fill="#fff" id="svg_5"/>
    <rect height="62" width="122" y="199" x="342" stroke-width="1.5" stroke="#000" fill="#fff" id="svg_6"/>
    <line y2="197" x2="403" y1="103" x1="268" stroke-width="1.5" stroke="#000" fill="none" id="svg_7"/>
    <text font-size="24" y="240" x="119" fill-opacity="null" stroke-opacity="null" stroke-width="0" stroke="#000" fill="#000000" id="svg_8"> Disk 1 </text>
    <text stroke="#000" font-size="24" y="236" x="361" fill-opacity="null" stroke-opacity="null" stroke-width="0" fill="#000000" id="svg_9"> Disk 2 </text>
  </g>
</svg>

This is a slight improvement over the image. For example, we can extract the title and the text found in the diagram from such a representation, but the markup isn’t what I would call human readable. To get a truly human readable markup of such a diagram we’d need to leave HTML and write it in Graphviz dot notation:

graph { Server -- "Disk 1"; Server -- "Disk 2"; }

So we’ve already left the capabilities of HTML behind and we’ve only just begun, what about math formulas?
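As an aside on the SVG version, the claim that the title and text can be extracted is easy to check. The sketch below uses the standard xml.etree.ElementTree module on a trimmed copy of the diagram (shapes and styling attributes dropped for brevity); the function name is made up for the example.

```python
import xml.etree.ElementTree as ET

# ElementTree spells namespaced tags as "{namespace}tag".
SVG_NS = "{http://www.w3.org/2000/svg}"

# Trimmed version of the server diagram: only the title and text labels remain.
SVG_SOURCE = """<svg width="580" height="400" xmlns="http://www.w3.org/2000/svg">
  <title>Server diagram with two disk drives.</title>
  <g>
    <text y="77" x="235"> Server </text>
    <text y="240" x="119"> Disk 1 </text>
    <text y="236" x="361"> Disk 2 </text>
  </g>
</svg>"""


def title_and_labels(svg_source):
    """Return the diagram title and the text labels in document order."""
    root = ET.fromstring(svg_source)
    title = root.findtext(SVG_NS + "title")
    labels = [el.text.strip() for el in root.iter(SVG_NS + "text") if el.text]
    return title, labels


if __name__ == "__main__":
    print(title_and_labels(SVG_SOURCE))
```

What this recovers is the labels, not the relationships: nothing in the markup says that Server is connected to Disk 1, which is the information the dot notation states directly.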

Again, about a decade after the web started MathML was standardized as a way to add math to HTML pages. It’s been 20 years since the MathML specification was released and you still can’t use MathML in your web pages because browser support is so bad.

But even if MathML had been fully adopted and incorporated into all web browsers, would we be done? Surely not, what about musical notations?

If we want to include notes in a semantically meaningful way on a web page do we have to wait another 10 years for standardization and then hope that browsers actually implement the spec?

What about Feynman diagrams?

Or dance notations?

You see, the root of the issue is that humans don’t just communicate by text, we communicate by notation; we are continually creating new notations, and we will never stop creating them. No matter how many FooML markup languages you standardize and stuff into a web browser implementation you will only ever scratch the surface, you will always be leaving out more notations than you include. This is the great failing of HTML, that you cannot define some squiggly set of lines as a symbol and then use that symbol in your markup.

Is such a thing even possible?

The only markup language that comes even close to achieving this universality of expression is TeX. In TeX it is possible to create your own notations and define how they are rendered and then to use that notation in your document. For example, there’s a TeX package that enables Feynman diagrams:

\feynmandiagram [horizontal=a to b] {
  i1 -- [fermion] a -- [fermion] i2,
  a -- [photon] b,
  f1 -- [fermion] b -- [fermion] f2,
};

Note that TeX and the \feynmandiagram notation are both human readable, which is an important distinction, as without it you could point at Postscript or PDF as a possible solution. While PDF may be able to render just about anything, the underlying markup in PDF files is not human readable.

I’m also not suggesting we abandon HTML in favor of TeX. What I am pointing out is that there is a serious gap in the capabilities of HTML: the creation and re-use of notation, and if we want HTML to be a universal format for human communication then we need to fill this gap.

Further Reading

I’m not the first person to address these ideas by any stretch of the imagination. For example, see the entire fields of Linguistics and Semiotics. Also watch this talk from Bret Victor:

The Humane Representation of Thought from Bret Victor on Vimeo.

And check out Edward Tufte Sparklines.

Hugo

This blog has just migrated from Jekyll to Hugo.

Why? Having programmed in Go for six years now I’m very comfortable with Go templates, which are the basis of Hugo templating. Also, speed is a feature, and hugo -D can rebuild this entire static site, all 2,700 pages, in under 2 seconds.

And while I am happy with Hugo now that I’ve gotten up to speed, the introductory documentation is missing a hugely important bit of trivia about Hugo configuration files and the templates, which is that case is ignored.

That is, if you have a configuration file that looks like this:

baseURL = "https://example.org/"

languageCode = "en-us"

title = "My New Hugo Site"

theme = "ananke"

You will later look at a template and see:

{{ .Title | default .Site.Title }}

Which may cause you to scratch your head. As far as I can tell, Hugo doesn’t care about case at all, so .Site.Title could just as easily be written as .site.title, or .SiTe.TiTlE, and the same thing goes for the config file, where case also doesn’t seem to matter:

baseURL = "https://example.org/"

languageCode = "en-us"

TITLE = "My New Hugo Site"

theme = "ananke"

will work just as well.

Another feature that I really like with Hugo is the simple post tagging functionality that’s built in, allowing me to have a page of categorized posts. In the process I went back and added tags to many, but not all, of my posts. The tags, along with the Related Content functionality, build easy navigation paths among related posts. My favorite of these was bringing together all of my entries that have visualizations.

Oh, one more update about a footgun to avoid in the Ananke theme: it blocks all indexing by search engines by default.

My home cooking has improved, but no thanks to YouTube chefs or food blogs – keeping recipes simple is the key

Why DIY is a Methodology not an Ideology

Two things have recently inspired me to get this concept out in the world. One is the number of artists who have jumped headlong into doing things on their own in the face of the COVID-19 pandemic, and the other is a flurry of questionnaires I’ve received from music degree students exploring ‘DIY vs signed’ as an undergrad thesis topic.

My place in all this is that, for the last 20 years, my career has looked like the textbook example of how to be ‘DIY’ in music – I’ve been self-recording, self-releasing, self-managing, self-booking for pretty much all of that time. I even do my own photoshoots, my own album artwork. I’ve yet to interview myself for a magazine, but it’s surely only a matter of time, eh?

So it looks – from the outside – like the life of someone ideologically committed to a DIY ethos. To a life of keeping everything in-house, to the innate value of – literally – doing it all yourself.

However, that’s not the case. At all. I have no particular attachment to being DIY. It is, as the title of this post says, a methodology, not an ideology. It is the route by which I execute the things that I AM very much ideologically committed to, in the absence of any other route revealing itself as I go along.

I do, in fact, have a real issue with the idea that DIY should be an ideology. As far as I can tell, to be committed to DIY contains no particular meaningful ethical consideration of other people, of their wellbeing, of the potential for collaboration helping everyone out, of the ways in which music projects and identities can scale in relation to public recognition in ways that can start to support micro-economies and build scenes. DIY as an ideology says that – for no apparent creative or humane reason – doing literally everything yourself is objectively purer than hiring other people, or working in larger teams.

What’s odd is that this being the dominant view of DIY is a pretty new occurrence. The DIY punk scenes of the 80s, perhaps best described in the extraordinary book Our Band Could Be Your Life, were very much DIY out of necessity, and became increasingly collaborative and, in some notable cases, structured as their visibility grew and required a greater level of infrastructure to manage the various implications of that success. DIY was the start point, it was a way to stop blaming a lack of ‘support’ for not making art, and it was definitely weaponised culturally to create a sense of ‘us against the world’ – easy enough to do in the fairly binary pre-internet record releasing world of ‘Major or indie’. But the mechanisms by which things got done – at least by those who weren’t epically inhibited by drink and drug addictions – were notable for their practicality. Everything seemed to be geared towards making the next thing happen. The frustration documented in Our Band Could Be Your Life around having to recoup on one record before being able to afford to even press copies of the next is an indication of just how practical they needed to be. There wasn’t really much room for ideological purity, but there was also precious little room for ‘selling out’ – for most of the bands in the punk scene in the US, ending up on a major was deeply unlikely before Nirvana moved to Geffen.

But back to our idea that DIY is a method. Because if it is the method, what is it that we’re working towards? What is the ethos, the ideology, the creative aim that is being served by a DIY method? For me, it was a commitment to productivity, to knowing my audience, and to creative freedom. Not that I wanted to be wilfully obscure, just that it always struck me as deeply reductive that labels would try to squeeze artists into a category that they felt best able to market. I mean, I understand the desire to make back the money that was invested, but I don’t understand the lack of trust in the artist to make the music that matters to them. So even at a small indie level, back when I started out in the age of all music sales happening via physical media, the economic need to recoup placed creative strictures on what any given artist could do on any given label. (as an aside, I first began thinking about this LONG before I began my solo career – working with various artists in gospel music/CCM in the early/mid 90s, I’d come across a number of artists who felt completely unable to write honestly because their label demanded a lyrical adherence to a pretty moribund and juvenile set of theological benchmarks. On US Christian radio at the time, there was literally a ‘JPM’ count – ‘Jesus per minute’ – that required artists to name check the big guy a certain number of times to get played. These artists I’d come into contact with were severely hampered in their professional growth and ended up living lives completely out of whack with the trite bullshit on their records… An object lesson at a time I didn’t realise I needed it).

So, I needed the freedom to make the music that mattered to me, to not ‘make it funky’ or ‘do an all-ambient record’ or any of the other things that angry bass-splainers would email me in those halcyon pre-social media days. I needed to be able to make the music that I cared about. That doesn’t require me to professionally isolate myself from other people, but it does require them to demonstrate a significant understanding of my creative priorities before jumping in and getting involved. Or alternatively, for the interaction to be short-lived enough that they provide a service, I provide music, and we move on. So I would occasionally play gigs booked by other people, and had a couple of quite significant supporters of my early live work (Sebastian Merrick in London, who now runs kazum.co.uk and Iain Martin of Stiff Promotions on the south coast), neither of whom ever tried to tell me what to play, or in any way hindered or hampered my music progress. I also had a co-producer for Behind Every Word – Sue Edwards – who had demonstrated over and over that she completely understood what I was trying to do and why, and her advice was always geared towards me making the best version of what I do, not moulding it to anyone else’s notion of what it ought to be… Sue’s continued to be a valued collaborator over the years, having had vital input into aspects of my music life at various times.

So my DIY method has continued in the absence of anyone or anything coming along to fill those roles more effectively and in an economically sustainable way without impacting my creative aims. I’ve had various offers over the years, from production companies wanting to put together tuitional videos, an early offer of a nationwide CD distribution deal, and the unsolicited occasional expression of interest from a producer evidencing zero awareness of what I do or why I do it.

Developing the know-how, the skills, the competencies and assembling the tools and resources – as well as refining (often downsizing) the external benchmarks of success – has been an ongoing daily discipline for 20 years. Getting better at everything every day. Taking every opportunity to learn about the skills needed, iteratively improving my skills at playing, recording, writing (words and music), photography, design, web design (my current website design is another example of someone coming along and offering to do the job WAY better than I could, without impacting negatively on the big picture – thanks Thatch!), mixing, mastering, social media, videography… Every element improving daily. I never whinge about having to do a multitude of things because not doing them would require me to pay someone else to do them, skill-swap, or rely on someone else’s generosity for personal gain, and if I CAN do them it means that the offer that comes in to replace them needs to be significantly better than what I can do myself.

At any moment, any aspect of my career is open to help/support/collaboration/advice/learning/outsourcing. But if it messes with those core aims, if it suggests making less music so I can make more money by focussing my attention on marketing one thing, if it removes me from the audience community that sustains the work, if it starts telling me the kind of music I should be making to reach more people, it’ll be cut off straight away. I don’t have time to spend explaining why those things are bullshit in my context, why I’m not interested in any of those metrics of success or why I’m way more happy in my obscure corner of the internet making ridiculous amounts of music for people who are actively invested in its ongoing viability than I would be landing a track on a Spotify playlist then touring off the back of the listener data it generates having to play the same music each night… Those are not sustainable practices.

So where does this leave us? Sadly, there is no simple binary that says DIY=good, record deals=selling out. That’s a fairly childish nonsense and belies the complex reality of how and why music gets made, marketed and funded. People’s purposes are different, and people’s sense of what validates their art is different, and the discussion about the implications of those validation strategies is separate from the acknowledgement that the infrastructure needed to sustain different types of music career is complex and varied and requires completely different levels of outside support.

However, what is universally true is that any skill you acquire is one that someone has to actively demonstrate they can improve on to be of value to you and the pursuit of your creative or ideological goals. If you can make your own recordings, you have a concrete benchmark for what someone offering to help would need to improve on to be of value to the project. If you can design artwork, you can then connect with people whose vision and ideas are demonstrably more in line with the aesthetic you’re looking for than your own attempts. If you’re sat waiting for someone else to make your art happen, you’re far more open to being exploited or coerced into doing the things that will meet the commercial aims of the other party rather than finding a win/win that benefits everyone.

It’s also important to acknowledge that seeing the acquisition of support as a sliding scale enables us to innovate in how we think about the exchange of value between creative and business entities. Skill swaps, collectives, short term collaborations and the distribution of labour amongst a community can all be replacements for more hierarchical economic structures around the production of art. As they get more complex and have more invested in them they may require more formal structures (the forming of a legal co-operative for example), but they are all possible ways to explore the extending of input into our creative lives without seeing the world in falsely black and white ‘DIY or signed’ terms.

The mantra is the same as it’s always been. Keep making your art, keep practicing, get better, seek knowledge wherever and whenever you can, and find ways to collaborate on meeting your and others’ creative goals. Everything else is just method.

Pandemic Shopping Like It’s 1980’s GDR

When I made a visit to East Berlin a few years before the wall came down, my teenage eyes wondered about shopping and customer service.

To visit a bookstore near Alexanderplatz I had to stand in line. There were only a handful of shopping baskets available, and they were mandatory, so you stood in line until someone left the shop and returned a basket. I stood there for a while, and then, basket in hand, could browse the shelves. There were fewer than ten people in the shop, while many more stood outside waiting.

When I visited a cafe with two others, the tables were all the same size; only the number of chairs at each table differed. We were three. A table with two chairs was free. Next to it, at a table with three chairs, sat a man on his own; I remember he wore a leather jacket and was sipping coffee and reading a paper. We asked if we could have a chair and pull it up to our table. “Na klar”, he said. We looked at the menu. No service came. We waited. No service came. I went up to the waitress and asked if she could take our order. No, she said, “you’re with three people on a table for two so you’re not getting served.” I was stunned. I tried logic, “look the tables are all the same size!”, but failed. In the end we returned the chair to the table with the guy in the leather jacket and asked him to trade tables. He picked up his coffee and newspaper (it was the 80’s, remember), and sat at our original table, while we moved to his. Within seconds the waitress was with us to take our lunch orders.

For years I shared these anecdotes as examples of how odd it all was during that visit to East Germany.

Fast forward 33 years, to our pandemic times.

In our neighbourhood most shops have introduced a system of mandatory baskets. They use it to cap the number of clients in the store at the maximum they can accommodate within the 1.5m distancing guidelines. Outside, others wait their turn.

From next week cafes and restaurants can open again, and I see and read how those here in town are arranging same sized tables out on the market square, varying the number of chairs to make it all work, and setting tables inside for specific numbers of people to stay within max allowed capacity.

After 33 years I need to retire my anecdotes from 1980’s East Berlin it seems. It wasn’t odd, it was avant garde!

A sober analysis of Trump’s executive order regarding social media

President Trump signed an executive order yesterday that would change the government’s stance on social networks. Let’s look at the portions that make sense, and the portions that are unhinged raving. I’ll start with this. Social networks need regulation. Twitter, Facebook, and YouTube are spreading disinformation and dividing America. Now that Twitter has added content … Continued

The post A sober analysis of Trump’s executive order regarding social media appeared first on without bullshit.

Filtered for musical cyborgs

1.

Here’s Piano Genie, a device with a row of eight colourful arcade buttons that sits between the player and the actual piano keyboard.

Play the coloured buttons, and the device improvises a virtuoso performance on the piano itself – matching the intent of your ham-fisted button pushing.

Musical upscaling, I guess?

File this cyborg prosthesis as: power amplifier. It directly amplifies human intent.

2.

Guitar Machine is a robotic attachment for a guitar.

At first it seems like Guitar Machine is a replacement for the human: the player will “train” the machine (programming by example?) and the machine will replay what it has been taught.

So file this cyborg prosthesis as: macro engine. Script once, repeat indefinitely.

BUT – give a machine like this to a musician, and they try to break it. Guitar Machine can play the guitar simultaneously with the human:

He was seamlessly transitioning between giving the robot the lead, taking over control, and synthesizing his own playing with the robot’s once he understood what the robot was doing.

A duet!

3.

Here’s a short documentary about a drummer with a bionic arm. He lost his original arm, and now in its place is this bionic arm that is made to play the drums.

Another article goes hard on how the beats aren’t humanly possible: The prosthetic arm can play the drums four times faster than humans.

The arm can also play strange polyrhythms that no human can play.

Then there’s this bit:

Then, he fitted Barnes with a cyborg arm with two drumsticks – one that is controlled by Barnes, and the other that operates autonomously through its own actuator. The arm actually listens to the music being played and improvises its own accompanying beat pattern, which are pre-programmed into it.

I am into the idea that this cyborg arm has its own will and its own creative urge.

The two-way feedback and improvisation makes this more than a duet.

File this cyborg prosthesis as a third type: centaur.

I posted last month about wild cyborg prosthetics – it strikes me that a typology like this is a useful way to generate more ideas.

HEY, A QUESTION:

Are there research labs in the UK/Europe working on the underlying tech for this? In any domain really, music to military, swimming to shopping. Like, human prostheses haunted by embedded wilful compute?

As they say, lmk.

4.

The Impossible Music of Black MIDI starts with some historical background:

In 1947, the composer Conlon Nancarrow–frustrated with human pianists and their limited ability to play his rhythmically complex music–purchased a device which allowed him to punch holes in player piano rolls. This technology allowed him to create incredibly complex musical compositions, unplayable by human hands, which later came to be widely recognized by electronic musicians as an important precursor to their work.

And this is the whole point. Cyborg technology is not about existing musicians playing existing music with less effort. It’s about scouting ahead to invent whole new genres.

The article goes on to talk about Black MIDI itself:

A similar interest in seemingly impossible music can be found today in a group of musicians who use MIDI files (which store musical notes and timings, not unlike player piano rolls) to create compositions that feature staggering numbers of notes. They’re calling this kind of music “black MIDI,” which basically means that when you look at the music in the form of standard notation, it looks like almost solid black.

The sound is an ascent into an insane chaos, like jamming static in your ears. I love it.

Do check out the article because then you can listen to the track Bad Apple, which is embedded there, and which reportedly includes 8.49 million separate notes.

Not that we measure the worth of music by weight, like buying potatoes. But still, what if we did.

ahahahahahahaha kill me pic.twitter.com/Oc8PTiBSlY


call me crazy but i feel like car guys got in the public transportation business in southern california once before and the results weren't great pic.twitter.com/lGa9Ou4a7s


Dell Canada offering deals on XPS 13, laptops, monitors and more

Dell is running a sale on several Inspiron and XPS laptops, as well as desktops, monitors and more.

There are a surprising number of deals on the company’s Canadian website. I’ve picked out a few highlights for you below, but it’s worth checking out for yourself.

- Inspiron 15 3000 laptop – $649.99 CAD (save $119)

- Inspiron 12 5000 2-in-1 – $779.99 (save $169)

- Inspiron desktop – $709.99 (save $259)

- Dell 24 Monitor – $159.99 (save $110)

- Dell UltraSharp 24 – $319.99 (save $120)

- Inspiron 14 7000 – $1,199.99 (save $200)

- XPS 13 – $1,299.99 (save $150)

Along with the deals on computers, Dell also offers discounts on several accessories on its website. Various adapters, monitor arms, keyboards, mice and other items are on sale as well.

You can find the full details on Dell’s website.

The post Dell Canada offering deals on XPS 13, laptops, monitors and more appeared first on MobileSyrup.

Practical Python Programming

David Beazley has been developing and presenting this three-day Python course (aimed at people with some prior programming experience) for over thirteen years, and he's just released the course materials under a Creative Commons license for the first time.

Via @dabeaz

The Science of Sourdough Starters

You might think this has nothing to do with online learning, but you'd be wrong. This is online learning. Sure, it's not an online course or program, but regular readers will know I've long advocated for a much wider definition of online learning. This article, and the recent popularity of pandemic sourdough, is a case in point. It's clearly written, even though it throws some heavy scientific terms at the reader, and most people (myself included) will come away knowing more than they did before reading it. A few people will be inspired to investigate further and (like my wife) master the art. And many people will start reading the article, decide they're not interested, and not finish. That's how online learning works. See also: how to make sourdough starter. Next up: Neapolitan pizza dough.

Mega List of Project Updates!

I first wanted to start off with a huge thank you to all of our customers, readers, suppliers, and staff for your support during these strange times.

Velo Orange is a strong company, and in the midst of challenging times like these, we're adapting and moving forward. We wanted to share some of the moves we're making that we're proud of and look forward to seeing come to fruition.

We've designed, tested, prototyped, and have sent the next generation Polyvalents into production. And let me tell you - they are a blast! We're offering two frame styles: "Low-Kicker" and "Diamond."

The first production run is going to be in the "Low-Kicker" configuration. In addition to being able to get on and off the saddle easily at stoplights, this low-slung top tube setup is a great option for lots of different riders. Fully loaded tourists will find it significantly easier to mount and dismount. Riders with limited flexibility will be able to ride a seriously capable and fun bike without compromising performance. Lastly, riders down to 5' will be able to have a very comfortable position with both drop and flat bars.

We'll also be offering a diamond-frame option for those after a more traditional look. These are just going into testing, but here is a raw frame picture.

Both will be in this absolutely stunning Metallic Sage paint. These should be available around October. We'll be opening up a pre-sale soon with more info, geometry, and close-ups, so stay tuned.

We've just gotten the final prototypes of the Pass Hunters and they are sooooo good. I'm super excited to be offering the (in my opinion) perfect "Sport Touring Bike."

The first Pass Hunter design called for the smaller sizes to be built around a 26x45mm tire size, but in the time it took to prototype and test the first iteration, this tire size has simply stopped being common or available. We even talked to a number of rim and tire manufacturers who were discontinuing performance-oriented 26" rims and tires. Bummer. No worries, we've re-designed the framesets to accommodate 650bx47mm or 700x32mm tires and fenders across the board.