I gave this keynote this morning at the ICLS Conference, not in Nashville as originally planned (which is a huge bummer as I really want some hot chicken).

As I sat down to prepare this talk, I had to go back into my email archives to see what exactly I'd said I'd say. It was October 29, 2019 when I sent the conference organizers my title and abstract. October 29, 2019: lifetimes ago. Do you remember what happened on October 29? Lt. Col. Alexander Vindman testified in front of Congress about President Trump's phone call with the leader of Ukraine. Lifetimes ago. I'm guessing there are plenty of you who submitted your conference papers in the "Before Times" and now struggle too to decide if and how you need to rethink and rewrite your whole presentation.

I do still want to talk to you today about "the ed-tech imaginary," and I think some of the abstract I wrote last year still stands:

How do the stories we tell about the history and the future of education (and education technology) shape our beliefs about teaching and learning — the beliefs of educators, as well as those of the general public?

I do wonder, however, if or how much our experiences over the past four months or so have shifted our beliefs about the possibilities of ed-tech — our experiences as teachers and students and parents certainly, but also our experiences simply as participants and observers of the worlds of work-from-home and Zoom-school. Do people still imagine, do people still believe that technology is the silver bullet? I don't know. I do know I hear statements like this a lot: "I can't imagine how we will go back to face-to-face classes in the fall." And at the same time, I hear this: "I can't imagine there won't be football." What do we now imagine for the future?

I'd like to think that it's not just the pandemic that has changed us and changed our expectations of school and of ed-tech. So too, I hope, have the scenes of racist police brutality and the protests that have arisen in response. Black lives matter.

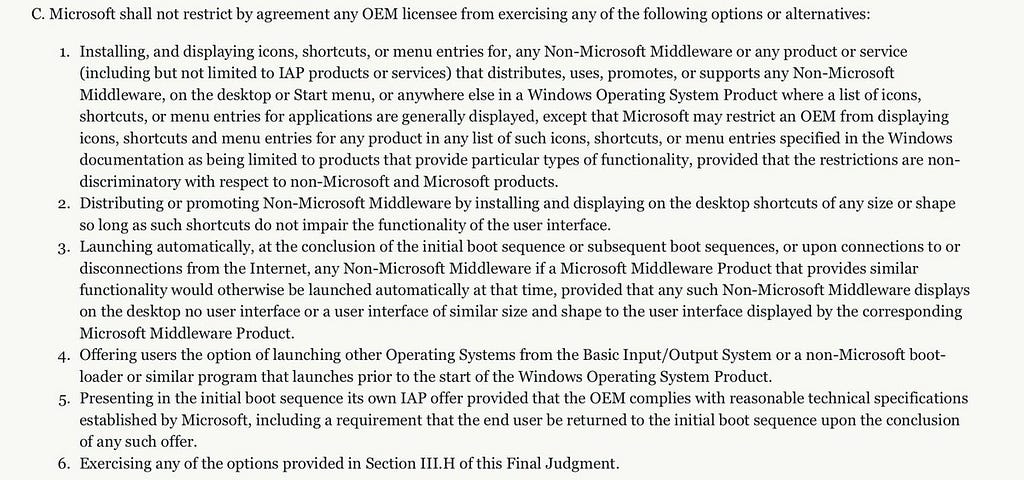

We can say "Black lives matter," but we must also demonstrate through our actions that Black lives matter, and that means we must radically alter many of our institutions and practices, recognizing their inhumanity and carcerality. And that includes, no doubt, ed-tech. How much of ed-tech is, to use Ruha Benjamin's phrase, "the new Jim Code"? How much of ed-tech is designed by those who imagine students as cheats or criminals, as deficient or negligent?

"To see things as they really are," legal scholar Derrick Bell reminds us, "you must imagine them for what they might be."

I write a lot about the powerful narratives that shape the ways in which we think about education and technology. But I won't lie. I tend to be pretty skeptical about exercises in "reimagining school." "Reimagining" is a verb that education reformers are quite fond of. And "reimagining" seems too often to mean simply defunding, privatizing, union-busting, dismantling, outsourcing.

We must recognize that the imagination is political. And if Betsy DeVos is out there "reimagining," then we best be resisting, not just dreaming alongside her.

The "ed-tech imaginary," as I have argued elsewhere, is often the foundation of policies. It's certainly the foundation of keynotes and marketing pitches. It includes the stories we invent to explain the necessity of technology, the promises of technology; the stories we use to describe how we got here and where we are headed. Tall tales about "factory-model schools" and so on.

Despite all the talk about our being "data-driven," about the rigors of "learning sciences" and the like, much of the ed-tech imaginary is quite fanciful. Wizard of Oz pay-no-attention-to-the-man-behind-the-curtain kinds of stuff.

This storytelling is, nevertheless, quite powerful rhetorically, emotionally. It's influential internally, within the field of education and education technology. And it's influential externally — that is, in convincing the general public about what the future of teaching and learning might look like, should look like, and making them fear that teaching and learning today are failing in particular ways. This storytelling hopes to set the agenda.

In a talk I gave last year, I called this "ed-tech agitprop" — the shortened name of the Soviet Department for Agitation and Propaganda which was responsible for explaining communist ideology and convincing the people to support the party. This agitprop took a number of forms — posters, press, radio, film, social networks — all in the service of spreading the message of the revolution, in the service of shaping public beliefs, in the service of directing the country towards a particular future. I think we can view the promotion of ed-tech as a similar sort of process — the stories designed to convince us that the future of teaching and learning will be a technological wonder. The "jobs of the future that don't exist yet." The push for everyone to "learn to code."

Arguably, one of the most powerful, most well-known stories of the future of teaching and learning looks like this:

Now, you can talk about the popularity of TED Talks all you want — how the ideas of Sal Khan and Sugata Mitra and Ken Robinson have been spread to change the way people imagine education — but millions more people have watched Keanu Reeves, I promise you. This — The Matrix — has been a much more central part of our ed-tech imaginary than any book or article published by the popular or academic press. (One of the things you might do is consider what other stories you know — movies, books — that have shaped our imaginations when it comes to education.)

The science fiction of The Matrix creeps into presentations that claim to offer science fact. It creeps into promises about instantaneous learning, facilitated by alleged breakthroughs in brain science. It creeps into TED Talks, of course. Take Nicholas Negroponte, for example, the co-founder of the MIT Media Lab who in his 2014 TED Talk predicted that in 30 years' time (that is, 24 years from now), you will swallow a pill and "know English," swallow a pill and "know Shakespeare."

What makes these stories appealing or even believable to some people? It's not science. It's "special effects." And The Matrix is, after all, a dystopia. So why would Matrix-style learning be desirable? Maybe that's the wrong question. Perhaps it's not so much that it's desirable, but it's just how our imaginations have been constructed, constricted even. We can't imagine any other ideal but speed and efficiency.

We should ask, what does it mean in these stories -- in both the Wachowskis' and Negroponte's -- to "know"? To know Kung Fu or English or Shakespeare? It seems to me, at least, that knowing and knowledge here are decontextualized, cheapened. This is a hollowed-out epistemology, an epistemic poverty in which human experience and human culture and human bodies are not valued. But this epistemology informs and is informed by the ed-tech imaginary.

"What if, thanks to AI, you could learn Chinese in a weekend?" an ed-tech startup founder once asked me, a provocation that was meant to both condemn the drawbacks of the traditional language-learning classroom and prompt me, I suppose, to imagine the exciting possibilities of an almost-instantaneous fluency in a foreign language. And rather than laugh in his face (which, I confess, I did) and say "that's not possible, dude," the better response would probably have been something like: "What if we addressed some of our long-standing biases about language in this country and stopped stigmatizing people who do not speak English? What if we treated students who speak another language at home as talented, not deficient?" Don't give me an app. Address structural racism. Don't fund startups. Fund public education.

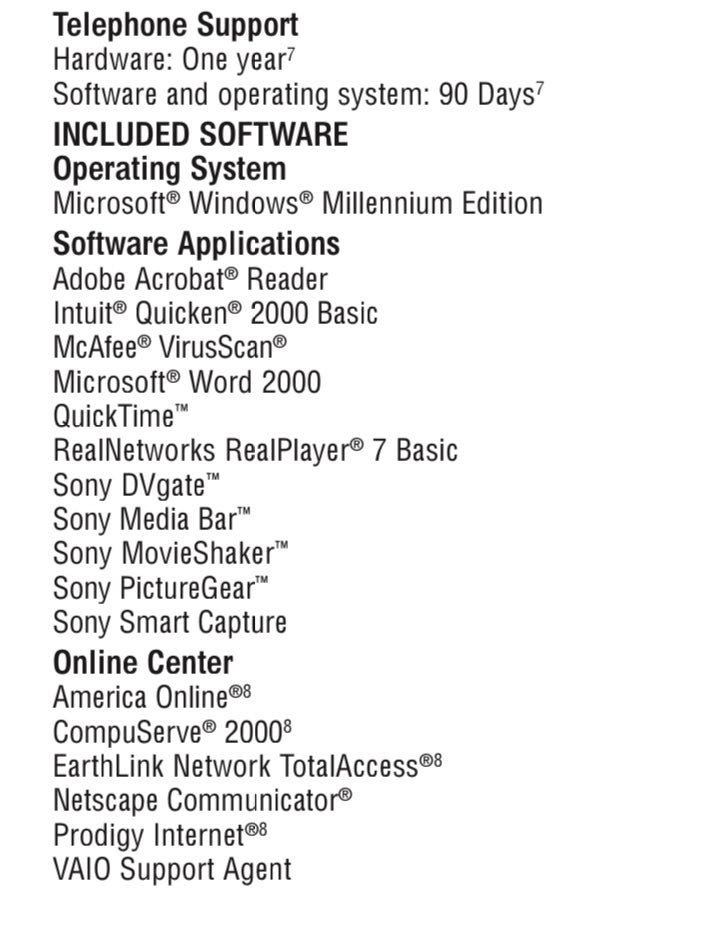

This comic appeared in newspapers nationwide in 1958 — the same year that psychologist B. F. Skinner published his first article in Science on education technology. You can guess which one more Americans read.

Push-button education. Tomorrow's schools will be more crowded; teachers will be correspondingly fewer. Plans for a push-button school have already been proposed by Dr. Simon Ramo, science faculty member at California Institute of Technology. Teaching would be by means of sound movies and mechanical tabulating machines. Pupils would record attendance and answer questions by pushing buttons. Special machines would be "geared" for each individual student so he could advance as rapidly as his abilities warranted. Progress records, also kept by machines, would be periodically reviewed by skilled teachers, and personal help would be available when necessary.

The comic is based on an essay by Simon Ramo titled "The New Technique in Education" in which he describes at some length a world in which students' education is largely automated and teachers are replaced with "learning engineers" — a phrase that has become popular again in certain ed-tech reform circles. This essay and the comic, I'd argue, helped establish an ed-tech imaginary that is familiar to us still today. Push-button education is "personalized learning." Personalized learning is push-button education.

(Ramo is better known in other circles as "the father of the intercontinental ballistic missile," incidentally.)

Another example of the ed-tech imaginary from post-war America: The Jetsons. The Hanna-Barbera cartoon — a depiction of the future of the American dream — appeared on prime-time television during the height of the teaching machine craze in the 1960s. Mrs. Brainmocker, young Elroy Jetson's robot teacher (who, one must presume by her title, was a married robot teacher), appeared in just one episode — the very last one of the show's original run in 1963.

Mrs. Brainmocker was, of course, more sophisticated than the teaching machines that were peddled to schools and to families at the time. The latter couldn't talk. They couldn't roll around the classroom and hand out report cards. Nevertheless, Mrs. Brainmocker’s teaching — her functionality as a teaching machine, that is — is strikingly similar to the devices that were available to the public. Mrs. Brainmocker even looks a bit like Norman Crowder's AutoTutor, a machine released by U.S. Industries in 1960, which had a series of buttons on its front that the student would click on to input her answers and which dispensed a paper read-out from its top containing her score. An updated version of the AutoTutor was displayed at the World's Fair in 1964, one year after The Jetsons episode aired.

Teaching machines and robot teachers were part of the Sixties' cultural imaginary — perhaps that's the problem with so many Boomer ed-reform leaders today. But that imaginary — certainly in the case of The Jetsons — was, upon close inspection, not always particularly radical or transformative. The students at Little Dipper Elementary still sat in desks in rows. The teacher still stood at the front of the class, punishing students who weren't paying attention. (In this case, that would be school bully Kenny Countdown, who Mrs. Brainmocker caught watching the one-millionth episode of The Flintstones on his TV watch.)

Not particularly radical or transformative in terms of pedagogy and yet utterly exclusionary in terms of politics. This ed-tech imaginary is segregated. There are no Black students at the push-button school. There are no Black people in The Jetsons — no Black people living the American dream of the mid-twenty-first century.

To borrow from artist Alisha Wormsley, "there are Black people in the future." Pay attention when an imaginary posits otherwise. To decolonize the curriculum, we must also decolonize the ed-tech imaginary.

There are other stories, other science fictions that have resonated with powerful people in education circles. Mark Zuckerberg gave everyone at Facebook a copy of the Ernest Cline novel Ready Player One, for example, to get them excited about building technology for the future — a book that is really just a string of nostalgic references to Eighties white boy culture. And I always think about that New York Times interview with Sal Khan, where he said that "The science fiction books I like tend to relate to what we're doing at Khan Academy, like Orson Scott Card's 'Ender's Game' series." You mean, online math lectures are like a novel that justifies imperialism and genocide?! Wow.

There are other stories, of course.

The first science fiction novel, published over 200 years ago, was in fact an ed-tech story: Mary Shelley's Frankenstein. While the book is commonly interpreted as a tale of bad science, it is also the story of bad education — something we tend to forget if we only know the story through the 1931 film version. Shelley's novel underscores the dangerous consequences of scientific knowledge, sure, but it also explores how knowledge that is gained surreptitiously or gained without guidance might be disastrous. Victor Frankenstein, stumbling across the alchemists and then having their work dismissed outright by his father, stoking his curiosity so much that a formal (liberal arts?) education can't change his mind. And the creature, abandoned by Frankenstein and thus without care or schooling, learning to speak by watching the De Lacey family, learning to read by watching Safie, "the lovely Arabian," do the same, finding and reading Paradise Lost.

"Remember that I am thy creature," the creature says when he confronts Frankenstein, "I ought to be thy Adam; but I am rather the fallen angel, whom thou drivest from joy for no misdeed. Everywhere I see bliss, from which I alone am irrevocably excluded. I was benevolent and good — misery made me a fiend." Misery and, perhaps, reading Milton.

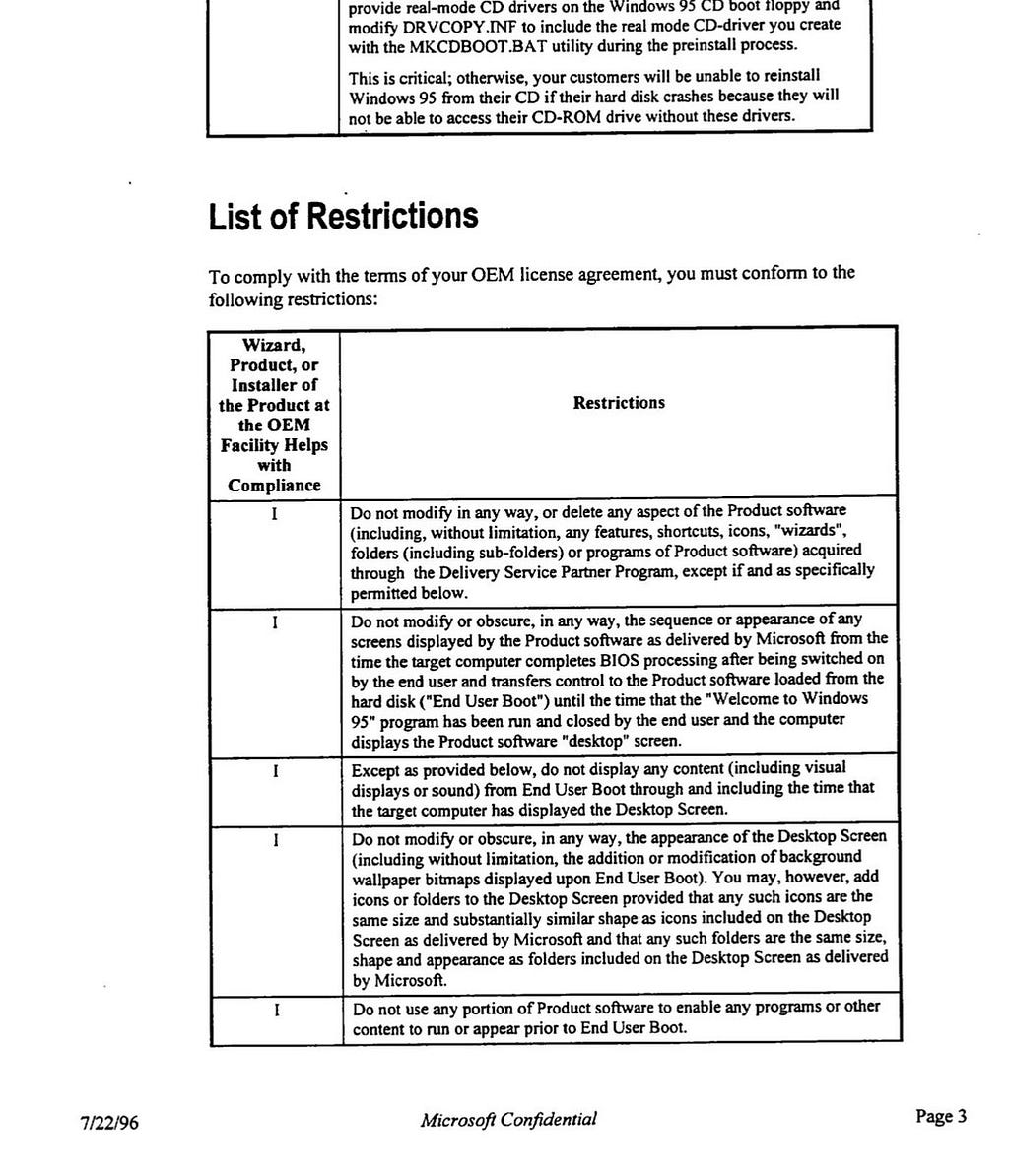

I've recently finished writing a book, as some of you know, on teaching machines. It's a history of the devices built in the mid-twentieth century — before computers — that psychologists like B. F. Skinner believed could be used to train children (much as he trained pigeons) through operant conditioning. Teaching machines would, in the language of the time, "individualize" education. It's a book about machines, and it's a book about Skinner, and it's a book about the ed-tech imaginary.

B. F. Skinner was, I'd argue, one of the best known public intellectuals of the twentieth century. His name was in the newspapers for his experimental work. His writing was published in academic journals as well as the popular press. He was on television, on the cover of magazines, on bestseller lists.

Incidentally, here's how Ayn Rand described Skinner's infamous 1971 book Beyond Freedom and Dignity, a book in which he argued that freedom was an illusion, a psychological "escape route" that convinced people their behaviors were not controlled or controllable:

"The book itself is like Boris Karloff's embodiment of Frankenstein's monster," Rand wrote, "a corpse patched with nuts, bolts and screws from the junkyard of philosophy (Pragmatism, Social Darwinism, Positivism, Linguistic Analysis, with some nails by Hume, threads by Russell, and glue by the New York Post). The book's voice, like Karloff's, is an emission of inarticulate, moaning growls — directed at a special enemy: 'Autonomous Man.'"

Note: I only cite Ayn Rand here because of the Frankenstein reference. It's also a reminder that the enemy of your enemy need not be your friend. And it's always worth pointing out how much of the Silicon Valley imaginary, ed-tech or otherwise, is very much a Randian fantasy of libertarianism and personalization.

Part of the argument I make in my book is that much of education technology has been profoundly shaped by Skinner, even though I'd say that most practitioners today would say that they reject his theories; that cognitive science has supplanted behaviorism; and that after Ayn Rand and Noam Chomsky trashed Beyond Freedom and Dignity, no one paid attention to Skinner any more — which is odd considering there are whole academic programs devoted to "behavioral design," bestselling books devoted to the "nudge," and so on.

In 1971, the same year that Skinner's Beyond Freedom and Dignity was published, Stanley Kubrick released his film A Clockwork Orange. And I contend that the movie did much more damage to Skinner's reputation than any negative book review.

To be fair, the film, based on Anthony Burgess's 1963 novel, did not depict operant conditioning. Skinner had always argued that positive behavioral reinforcement was far more effective than conduct aversion therapy, that is, than the fictional "Ludovico Technique" that A Clockwork Orange portrays.

A couple of years after the release of the film, Anthony Burgess wrote an essay (unpublished) reflecting on his novel and the work of Skinner. Burgess made it very clear that he opposed the kinds of conditioning that Skinner advocated — even if, as Skinner insisted, behavioral controls and social engineering could make the world a better place. "It would seem," Burgess concluded, "that enforced conditioning of a mind, however good the social intention, has to be evil." Evil.

Many people who tell the story of ed-tech say that Skinner's teaching machines largely failed because computers came along. But what if what led to the widespread rejection of teaching machines — for a short time, at least — was in part that the "ed-tech imaginary" shifted and we recognized the dystopia, the inhumanity, the carcerality in behavioral engineering and individualization? The imaginary shifted, and politics shifted. A Senate subcommittee investigated behavior modification methods in 1974, for example.

How do we shift the imaginary again? And just as importantly, of course, how do we shift the reality? How do we design and adopt ed-tech that does not harm users?

First, I think, we must recognize that ed-tech does do harm. And then, we must realize that there are alternatives. And there are different stories we can turn to outside those that Silicon Valley and Hollywood have given us for inspiration.

I'll close here with one more story: not a piece of the ed-tech imaginary per se, but one that maybe could be, something that points towards possibility, something that might help us tell stories and enact practices that are less carceral, more liberatory. In her essay "The Carrier Bag Theory of Fiction," science fiction writer Ursula K. Le Guin offers some insight into the (Capital-H) Hero and (Capital-A) Action that have long dominated the stories we have told about Western civilization, its history and its future. This is our mythology. She refers to that famous scene in another Stanley Kubrick film, 2001: A Space Odyssey, in which a bone is used to murder an ape and then gets thrown into the sky where it becomes a spaceship. Weapons and Heroes and Action. "I'm not telling that story," she says. Instead of the bone or the spear, she's interested in a different tool from human evolution: the bag, something to carry or store grain in, something to carry a child in, something that sustains the community, something where you put precious items that you will want to take out later and study.

That's the novel, Le Guin says: the novel is the carrier bag theory of fiction. And as the Hero with his pointy sticks tends to look rather silly in that bag, she argues, we've developed different characters instead to fill our novels. But the genre of science fiction, to the contrary, has largely embraced that older Hero narrative.

If science fiction is the mythology of modern technology, then its myth is tragic. "Technology," or "modern science" (using the words as they are usually used, in an unexamined shorthand standing for the "hard" sciences and high technology founded upon continuous economic growth), is a heroic undertaking, Herculean, Promethean, conceived as triumph, hence ultimately as tragedy. The fiction embodying this myth will be, and has been, triumphant (Man conquers earth, space, aliens, death, the future, etc.) and tragic (apocalypse, holocaust, then or now).

I'd say that this applies to science and technology as fields, not just as fictions. Think of Elon Musk shooting his sports car into space.

As we imagine a different path forward for teaching and learning, perhaps we can devise a carrier bag theory of ed-tech, if you will. Indeed, as I hope I've shown you this morning, so much of the ed-tech imaginary is wrapped up in narratives about the Hero, the Weapon, the Machine, the Behavior, the Action, the Disruption. And it's so striking because education should be a practice of care, not conquest. Knowledge as a bag that sustains a community, not as a cudgel. Imagine that.
