Rolandt

Shared posts

The courtyard and the public radio

Book Review – Weaving the Web

In 1999, Tim Berners-Lee, together with Mark Fischetti, wrote ‘Weaving the Web’, a book on how he invented the World Wide Web in the late 1980s and early 1990s. It is still in print today, so it’s not difficult to get hold of. You can also borrow a copy in PDF format from OpenLibrary.org. Written less than a decade after the web had its early breakthrough, it is now two decades old itself. It offers incredible insight into the early days and into the thinking of the time about how the web should evolve, and it made me reflect on how things have turned out today.

The story starts in the early 1980s but things really start rolling around 1989. At the time, I had an Amiga 500 at home. Windows 3.1, the operating system that would bring graphical user interfaces and PCs to homes and offices, was still 3 years in the future. In other words, graphical user interfaces, except on home computers such as the Amiga and the Atari ST, and of course on the Mac, had not yet reached the mainstream, at least not in the business world. Things were basically still text based, especially around the Internet, but they were about to change significantly in the years to come. From that point of view it is not much of a surprise that the first web server and web browser were programmed on a NeXT machine with a graphical user interface. So while GUIs were still ‘islands’ in the vast sea of text based computing, a graphical user interface was part of the Web from the beginning. However, text based browsers were still important, which again demonstrates how different the computing and Internet landscape was at the beginning of the 1990s compared to today.

In the first part of the book Tim describes how important concepts such as the URL/URI, HTTP and HTML came to be, who programmed the first web browsers such as Viola, Erwise and Midas that only a few remember today, and how the web expanded from CERN in Switzerland to the rest of the world.

As CERN is a nuclear research facility, the ‘web’ obviously did not fit very well into the main research topic of the organization. So for me it was interesting to note that Tim, especially in the early days, took care to stay far enough below the radar that the project wouldn’t be shut down, but not so far that nobody would notice and use the system, which, after all, could make life easier for many people at CERN itself as well.

Odd from today’s perspective is that in the early 90s quite a number of ‘web sites’ used FTP instead of HTTP servers to host their web pages. Fraunhofer IPA, where I worked as an intern in the mid-1990s, for example did that, as can be seen in the screenshot here. Tim also mentions this in the book, and I guess the reason is quite simple: at the time, lots of institutions already had FTP servers running, so it was easier to host the web pages there than to set up a separate HTTP server. Obviously, performance was far from ideal, as the FTP protocol was written with human interaction in mind and has a rather lengthy login procedure. But in the early days, with only a few people using web browsers and nobody used to responses within a few hundred milliseconds anyway, it wasn’t really an issue.

Tim also talks about hypertext preceding the web by quite a bit, how he struggled to convince hypertext editor makers of the benefits of linking to sources over the Internet instead of only linking local documents, and about the earlier competition to the Web such as Gopher and why the Web prevailed while other solutions faded away.

Also a nice approach at the time: to make it easier for people to try out the web, Tim offered a text based web browser over telnet so anyone could try ‘surfing the text based web’ by just ‘telnetting’ to his machine at CERN over the Internet. No software installation necessary. Imagine what this would mean today, in an Internet where automated bots try to attack and subvert servers every couple of seconds. How innocent things were back then.

There are many, many more anecdotes in the book about those early days but I’ll leave it at this point. Before ending this post I’d like to spend a few words on the second part of the book, which is not about the past, from a 1999 perspective, but about the future of the web. That’s 20 years in the past now and I am amazed how clearly Tim saw the challenges that we still struggle with today: keeping the web open and decentralized, with access to information for all, without walled gardens and without a few companies controlling the flow of information and gathering data on everybody. I’d say these thoughts are more current today than ever.

‘Weaving the Web’ by Tim Berners-Lee, highly recommended!

Increasing The Quality of Content (instead of the quantity of it)

Too many community strategies prioritise quantity of content over the trustworthiness of content.

This is a shame. It makes the community increasingly noisy without obviously increasing its value to participants.

For a mature community, your goal shouldn’t be to increase the quantity of engagement but to improve the quality of it. People need to have deep trust in the content that’s being created and shared within the community.

Fortunately, the contours of trust are fairly clear:

- Perceived ability of the poster (qualifications, knowledge, experiences).

- Perceived integrity of the poster (honesty, intentions to help others, fairness).

- The consensus of the group (quantity of people who share the opinion compared with those who don’t).

- Trust in the host (is this content hosted on a site known for being trustworthy).

Begin by hosting an annual trust survey.

Ask members about the following (using a 5-point Likert scale):

- Do you feel content posted by members in this community is trustworthy?

- Do you feel members who share content have qualifications, knowledge, and experiences?

- Do you feel members who share content have the best intentions?

- Do you feel enough members share their expertise/opinions in this community?

- Do you feel this website has a reputation for trustworthy content?

You can adapt these as you see fit.

Now you can use this to see which factors are the biggest antecedents of trust for your community. More importantly, you can use this to develop specific actions to increase the trustworthiness of content.

You can find sites that are considered very trustworthy and see the specific steps they’ve taken. Amazon has verified purchases, for example. Stack Overflow relies heavily upon its rating system and tight moderation. Find sites that excel in the areas you’re weak in and borrow their ideas.
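To make the "biggest antecedents of trust" step a little more concrete, here is a minimal Python sketch of one way to analyse the survey. It assumes each response is a dictionary of 1-5 Likert scores, and it uses a plain Pearson correlation between each factor and the overall trust question as a rough stand-in for "antecedent strength". The field names (`overall_trust`, `ability`, and so on) and the function names are made up for illustration, and correlation is only one possible choice of measure.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length lists of scores."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # A question everyone answered identically carries no signal.
        return 0.0
    return cov / sqrt(var_x * var_y)


def rank_trust_factors(responses, outcome="overall_trust"):
    """Rank every other survey question by its correlation with the outcome.

    `responses` is a list of dicts mapping question name -> Likert score (1-5).
    The question names are hypothetical; use whatever your survey exports.
    """
    outcome_scores = [r[outcome] for r in responses]
    factors = [name for name in responses[0] if name != outcome]
    scored = {
        name: pearson([r[name] for r in responses], outcome_scores)
        for name in factors
    }
    # Strongest positive relationship first.
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)
```

Factors near the top of the ranking are the ones most worth investigating first. With a small annual survey the numbers will be noisy, so treat the ordering as a prompt for discussion, not a verdict.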

Twitter Favorites: [markpopham] the establishment of the IPA as the default style of American craft beer has had a worse effect on human happiness… https://t.co/CmBQZzCVaT


Freitag Ahlgren

Forever in love with Swiss Freitag bags and their product videos. This is Ahlgren. Love the size and design. Not familiar with Freitag bags? They are made with super durable truck tarp, last *forever*, are waterproof and super sturdy. I have 4 of them and *love* them.

Apple Commits to 2030 Carbon Neutrality Across Full Business

Every year Apple releases a new environmental report showing the company’s progress in environmental efforts, and alongside the release of this year’s report, the company has announced a new commitment for the decade ahead:

Apple today unveiled its plan to become carbon neutral across its entire business, manufacturing supply chain, and product life cycle by 2030. The company is already carbon neutral today for its global corporate operations, and this new commitment means that by 2030, every Apple device sold will have net zero climate impact.

“Businesses have a profound opportunity to help build a more sustainable future, one born of our common concern for the planet we share,” said Tim Cook, Apple’s CEO. “The innovations powering our environmental journey are not only good for the planet — they’ve helped us make our products more energy efficient and bring new sources of clean energy online around the world. Climate action can be the foundation for a new era of innovative potential, job creation, and durable economic growth. With our commitment to carbon neutrality, we hope to be a ripple in the pond that creates a much larger change.”

Achieving carbon neutrality for its corporate operations was a nice milestone for the company, but this new commitment appears far more challenging. Apple works with third-party suppliers and manufacturers all around the world to build its devices, and fulfilling this new goal depends a lot on those third parties. It will be interesting to see over the next decade all of the different actions that will be taken to find success in carbon neutrality, but the report of Apple including fewer accessories in the box with new iPhone purchases certainly seems like it would help.

→ Source: apple.com

Why GPT-3 Matters

I've run a few posts on GPT-3 and it makes sense to include this item to put it into context. First is its size; "it’s an entire order of magnitude larger" than the previously largest model. "Loading the entire model’s weights in fp16 would take up an absolutely preposterous 300GB of VRAM." What this means is that GPT-3's language models are "few shot learners" - that is, they can "perform a new language task from only a few examples or from simple instructions." That's why it can create a Shakespeare sonnet after being given only the first few lines - it recognizes what you're trying to do and is able to emulate it. Now we're not quite at the point where artificial intelligence can write new open educational resources (OER) on an as-needed basis - but we're a whole lot closer with GPT-3.
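As a rough back-of-the-envelope check on that memory figure, here is a minimal Python sketch. It assumes the 175 billion parameter count reported for the largest GPT-3 model (a number not stated in the post above) and 2 bytes per fp16 weight. The function name is just illustrative. It only counts raw weights, so treat the result as an order-of-magnitude estimate and not a precise requirement.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory needed for raw model weights, in decimal gigabytes.

    bytes_per_parameter defaults to 2 because fp16 stores each weight in 16 bits.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Assumption: 175 billion parameters, as reported for the largest GPT-3 model.
GPT3_PARAMETERS = 175_000_000_000

fp16_gb = weight_memory_gb(GPT3_PARAMETERS)
fp32_gb = weight_memory_gb(GPT3_PARAMETERS, bytes_per_parameter=4)
print(f"fp16 weights: about {fp16_gb:.0f} GB")
print(f"fp32 weights: about {fp32_gb:.0f} GB")
```

Under those assumptions the fp16 estimate comes out at a few hundred gigabytes, which is the same ballpark as the quoted figure and far beyond what a single GPU holds.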

22 Principles for Great Product Managers

A product manager is the person responsible for orchestrating all aspects of the development process in order to realize the vision imagined in a product. They connect the needs expressed by the eventual users of the product with the work undertaken by the development team to meet those needs. This article outlines what makes a good product manager (and it's worth reading in contrast with Audrey Watters's most recent talk). "You should know the game you’re playing (your vision for the product, your product’s value to the customer, your competitive advantage, and how you’ll win) and how you’ll keep score: What does winning mean? How will you measure success? (Credit to Adam Nash for this framing, see here for his fantastic article)." Image: Career Karma.

Validating Problems

A simple tip that can save you a lot of pain: validate the problems you’re seeing.

Sometimes community leaders see problems that don’t exist.

Is one member creating too many new topics?

Are too many responses coming from the community manager?

Are there too many posts? (or too few?)

Are too many members posting in one place?

And sure, some of these may have a genuine impact.

Yet if you ask members in a survey (or simply send out an email inviting members to name the biggest problems they see in the community) my guess is none of the above would rank in the top 10.

You might be spending a lot of time trying to solve problems that only you’re seeing.

Worse yet, you might be spending too little time solving your members’ biggest problems.

p.s. When validating a problem, don’t list it and ask members to say whether it’s a problem; that’s cheating. Use an open text field and see if members name it without being prompted.
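If you collect those open-text answers, a first pass at tallying them can be automated. The following is a minimal Python sketch, assuming you have the answers as a list of strings and your own list of suspected problems with a few keywords each. The function name, the problem labels and the keywords are all hypothetical, and simple keyword matching will miss paraphrases, so it is a starting point for reading the responses, not a replacement for it.

```python
from collections import Counter


def count_unprompted_mentions(responses, problem_keywords):
    """Count how many free-text responses mention each suspected problem.

    responses: list of answers typed into the open text field.
    problem_keywords: dict mapping a problem label to keywords that signal it.
    A response counts at most once per problem.
    """
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for problem, keywords in problem_keywords.items():
            if any(keyword.lower() in lowered for keyword in keywords):
                counts[problem] += 1
    return counts
```

A suspected problem that nobody names unprompted ends up with a count of zero, which is the signal this tip is looking for.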

Rules to live by

I have a number of things printed out and blu-tacked to the back of my home office door. The most recent addition has been a four-point list entitled ‘Rules to live by’.

- Avoidance is rarely the correct option

- Transparency is the best policy

- Perfect is the enemy of done

- Listen to what people actually say

This list came out of the CBT sessions I’ve been attending since last September. A combination of things made me realise I needed some help:

- Death of a good friend

- Stressful situation at work

- Burden of volunteer responsibilities

As I’m sure most people say after going through therapy, it’s something I should have done years ago. Not because I’m weird, broken, or had anything other than a happy childhood. Just because as I approach middle-age, it’s good to be able to jettison some mental baggage and ways of thinking that aren’t helpful.

The list seems simple, but follows some fairly deep excavations into the reasons why I act the way I do, and the causes of anxiety flare-ups. The four points are my response to a prompt by my therapist to think first of all about the implicit rules I’m teaching my kids, and then to write down explicitly the rules I’d want them to live by instead.

My 10th therapy session is on Friday afternoon. After that, we’ll be moving to maintenance sessions every few months. I’m spending my own money on this, because the NHS had too much of a backlog. I realise I’m in a privileged position to be able to spend money on my mental health, but it’s definitely been money very well spent. The sessions have had a tangible (and hopefully long-lasting) effect on my life.

If you’re reading this and dealing with some stuff, I’d highly recommend a course of CBT. That’s especially true if you already think you should have the tools / strength to deal with it by yourself. Therapy has made me a better person.

This post is Day 12 of my #100DaysToOffload challenge. Want to get involved? Find out more at 100daystooffload.com

Wiki.js

Wiki.js is a JavaScript-based wiki you can run on your computer or (better) in the cloud. It is open source and based on Node. It uses any of a number of database engines and runs on most platforms. There are modules available that allow you to run analytics, use social authentication, choose the content editor you wish to use, and sync and back up your content to a number of storage services.

Elvert Barnes: Three decades of documentary photography and counting

For the past three decades, Barnes has attended every major US movement with a camera in tow and a desire to capture history through his lens. In this Q&A interview, he talks us through his work and what motivates him to keep photographing the streets of Baltimore, Maryland.

Flickr: Tell us about where you’re from, where you live, and what you do.

Elvert Barnes: I am a self-taught freelance documentary photographer who, except for a few years in NYC, has lived most of my adult life in the Washington DC area and, since August 2016, in Baltimore, Maryland. I’m a 66-year-old gay Black male. For the most part, where I live, work, and play serves as the backdrop of my picture-taking.

How did you get interested in and started with photography? And more specifically, in outdoor event photography?

While my interest in photography dates back to my childhood years, it wasn’t until my partner gave me a Minolta as a Christmas gift in 1991 that I decided to integrate my picture-taking with my writings.

Seldom without a camera, I always carried a note pad and have created a collection of writings and photo essays entitled “BLACKOUT” that in the future will be developed into books and exhibitions that reflect on my personal story as an openly gay Black man.

My focus has almost always been in street photography. I like the fact that the photographer has little control over what appears in the image. Years later, when viewing an image, so many details will have been recorded of a particular moment in time that cannot ever be again. That same focus also drives my protest photography.

Living for many years in Washington DC and now Baltimore MD, there has never been a shortage of outdoor events to photograph. A few of my favorite ongoing DC projects are the St. Patrick’s Day Parade, the Cherry Blossom Festival, Rolling Thunder, National Police Week, Gay Pride, and Sundays at Meridian Hill Park. And now Baltimore MD.

What is the role of a photographer when documenting a protest?

In earlier years, I usually documented a protest on assignment for an event organizer or news organization, which affected what and how I photographed. From the September 11th terrorist attacks through 2008, much of my protest photography was associated with the anti-war movement and anti-capitalist demonstrations in Washington DC and NYC. Since then, my protest documentation has been strictly for my archives.

As a photojournalist, my focus may be on demonstrators, police, and counter-demonstrators. As a documentary photographer, while marching down a particular street or through a neighborhood, I also turn my attention to the storefronts, buildings, spectators, street art, etc.

What are some safety precautions to take when photographing a protest, rally, march, or an outdoor event in general?

I tend to travel light. Almost always with two cameras. One camera for close-ups and another for distance shooting. I never carry a camera bag, which is too cumbersome. I have no interest in being arrested, so I take all precautions against any actions that may result in such.

Of most importance, at all times, I must be aware that as a Black male, law enforcement will not only overreact to me but so will protesters and other photographers.

Now that everyone has a camera in their pocket, what advice do you have for those who want to document a massive event?

My motto is, “you do you, and I do me.” Just don’t interfere with what and how I photograph.

What are the essential shots you’re looking to capture when photographing an outdoor event?

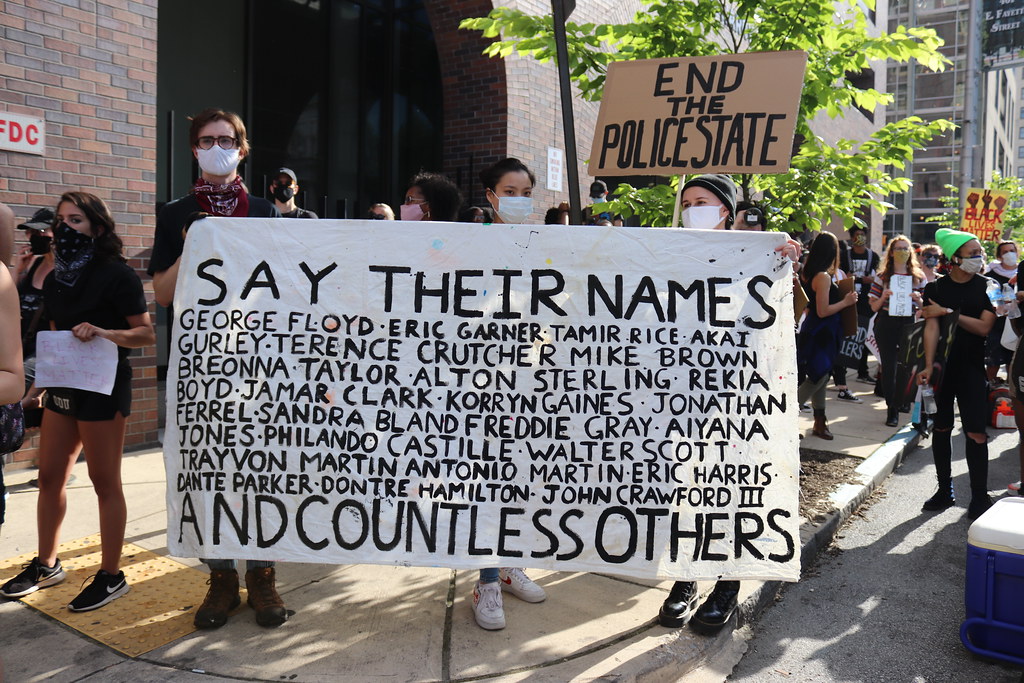

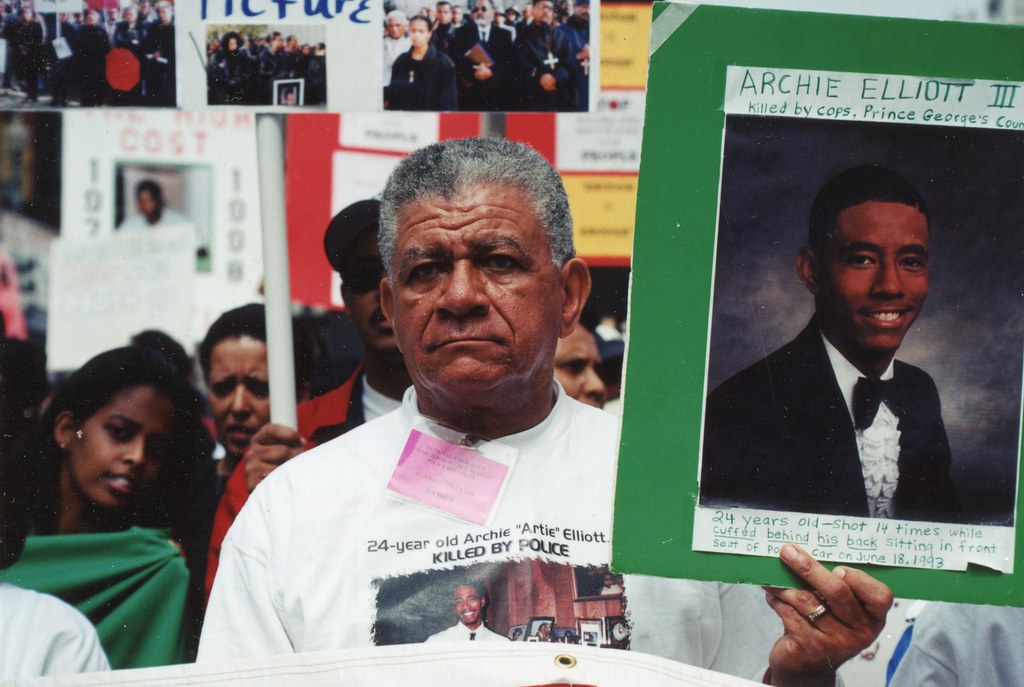

Speakers, performers, organizers, people interacting with each other, protest signs, law enforcement. Various shots from different locations and perspectives that depict the size of the crowd. Candid shots.

In your view, how can photography contribute to social justice?

Photography can play a major role in contributing to social justice as protest images often inspire others to participate in effecting change. Images from the 60’s civil rights, 70’s anti-war, and 70’s gay rights movements not only inspired generations of future activists but actually resulted in cultural and legislative changes.

Can you choose two of your Flickr photos and describe why they’re important to you?

This first image was taken over Easter weekend during the April 1999 National Emergency March for Justice Against Police Brutality in Washington DC. If not the third, certainly the second year in a row, I documented an anti-police brutality protest over Easter weekend, depicting a father carrying a sign of his dead son. It still haunts me that so many Black families march against racism and memorialize their murdered sons not only over Easter weekend but also on other holidays. Every Easter since then, almost like a flashback, this picture comes to mind.

This second is of two friends, partners Mark and Ken, who have been together since 1979 and who I’ve known since 1982. Taken at the 1994 Washington DC Gay Pride Festival, I’ve always admired and respected the relationship that these two men have. This picture and similar ones that I’ve taken over the years are part of my ongoing Without Apologies project.

Is there anything else we should know about you or your work? Are you working on any projects?

As a documentary photographer, I have many ongoing projects. However, since moving to Baltimore in August 2016 and in connection with my Ride-by Shooting docu-project, which documents my travels from car, train, bus and airplane windows, my MARC Commuter Train series has been a focus. And, of course, COVID-19.

Be sure to visit Elvert’s Flickr photostream to see more of his work.

I'm in ur virtual machines, managing them

I’ve been working on an application recently which lets you create, configure, and run VMware virtual machines (it just forks out to VMware Player for the running part). It’s nearing usefulness now, so I thought I’d post some screenshots and stuff.

The main window

The configuration window

You need either qemu or vmware-vdiskmanager installed in order to create new hard disks, and it probably fails pretty badly currently if you don’t. If I get a chance, I may write my own stuff to create the hard disks (the format is a public spec, woo!). Anyway, you can get it from the vmx-manager module in GNOME CVS.

OpenBSD on the Microsoft Surface Go 2

I used OpenBSD on the original Surface Go back in 2018 and many things worked with the big exception of the internal Atheros WiFi. This meant I had to keep it tethered to a USB-C dock for Ethernet or use a small USB-A WiFi dongle plugged into a less-than-small USB-A-to-USB-C adapter.

Microsoft has switched to Intel WiFi chips on their recent Surface devices, making the Surface Go 2 slightly more compatible with OpenBSD.

Hardware

As with the original Surface Go, I opted for the WiFi-only model with 8GB of RAM and a 128GB NVMe SSD. The processor is now an 8th generation dual-core Intel Core m3-8100Y.

The tablet still measures 9.65” across, 6.9” tall, and 0.3” thick, but the screen bezel has been reduced a half inch to enlarge the screen to 10.5” diagonal with a resolution of 1920x1280, up from 1800x1200.

The removable USB-attached Surface Go Type Cover contains the backlit keyboard and touchpad. I opted for the “ice blue” alcantara color, as the darker “cobalt blue” version I purchased last time is no longer available. The Type Cover attaches magnetically along the bottom edge and can be folded up against the front of the screen magnetically, issuing an ACPI sleep signal, or against the back, automatically disabling the keyboard and touchpad in expectation of being touched while holding the Surface Go.

One unfortunate side-effect of the smaller screen bezel is that the top of the Type Cover keyboard now rests very close to the bottom of the screen when the keyboard is in its raised position. With one’s fingers on the keyboard, the text at the very bottom of the screen such as the status bar in a web browser can be covered up by the left hand.

The Type Cover’s keyboard is quiet but has a very satisfying tactile bounce. The keys are small considering the entire keyboard is only 9.75” across, but it works for my small hands. The touchpad has a slightly less hollow-sounding click than I remember on the previous model which is a plus.

The touchscreen is an Elantech model connected by HID-over-I2C and supports pen input. The Surface Pen is available separately and requires a AAAA battery, though it works without any pairing necessary with the exception of the top eraser button which requires Bluetooth for some reason. The pen attaches magnetically to the left side of the screen when not in use.

A set of stereo speakers face forward in the top left and right of the screen bezel but they lack bass. Along the top of the unit are a power button and physical volume rocker buttons. Along the right side are a 3.5mm headphone jack, USB-C port, Surface dock/power port, and microSD card slot located behind the kickstand.

Wireless connectivity is now provided by an Intel Wi-Fi 6 AX200 802.11ax chip which also provides Bluetooth connectivity.

Firmware

The Surface Go’s BIOS/firmware menu can be entered by holding down the Volume Up button, then pressing and releasing the Power button, and releasing Volume Up when the menu appears. Secure Boot as well as various hardware components can be disabled in this menu. Boot order can also be adjusted.

When powered on, holding down the power button will eventually force a power-off of the device like other PCs, but the button must be held down for what seems like forever.

Installing OpenBSD

Due to my previous OpenBSD work on the original Surface Go, most components work as expected during installation and first boot.

To boot the OpenBSD installer, dd the install67.fs image to a USB disk, enter the BIOS as noted above and disable Secure Boot, then set the USB device as the highest boot priority.

When partitioning the 128GB SSD, one can safely delete the Windows Recovery partition which takes up 1GB, as it can’t repair a totally deleted Windows partition anyway and a full recovery image can be downloaded from Microsoft’s website and copied to a USB disk.

After installing OpenBSD but before rebooting, mount the EFI partition (sd0i) and delete the /EFI/Microsoft directory. Without that, it may try to boot the Windows Recovery loader. OpenBSD’s EFI bootloader at /EFI/Boot/BOOTX64.EFI will otherwise load by default.

One annoyance remains that I noted in my previous review: if the touchpad is touched or F1-F7 keys are pressed, the Type Cover will detach all of its USB devices and then reattach them. I’m not sure if this is by design or some Type Cover firmware problem, but once OpenBSD is booted into X, it will open the keyboard and touchpad USB data pipes and they work as expected.

OpenBSD Support Log

2020-05-12: Upon unboxing, I booted directly into the firmware screen to disable Secure Boot and then installed OpenBSD.

Upon first full boot of OpenBSD, the kernel panicked due to acpivout not being able to initialize its index of BCL elements. I disabled acpivout for the moment.

The Intel AX200 chip is detected by iwx, the firmware loads (installed via fw_update), and the device can do an active network scan, but when trying to authenticate to a network, the device firmware reports a fatal error.

2020-05-13: I created a new umstc driver that attaches to the uhidev device of the Type Cover and responds to the volume and brightness keys without having to use usbhidaction. More importantly, it holds the data pipe open so the Type Cover doesn’t reboot when the F1-F7 keys are pressed at the console. A solution will still be needed to prevent the Type Cover from rebooting when the touchpad is accidentally touched when not in X.

I tried S3 suspend and resume, but when pressing the power button to wake it up, it immediately displays the initial firmware logo and boots as if it were restarted. S4 hibernation works fine. S3 suspend works in Ubuntu, so I’ll need to do some debugging to figure out where OpenBSD is failing.

2020-05-31: I imported my umstc driver into OpenBSD.

2020-06-02: I imported my acpihid driver into OpenBSD.

2020-07-05: Stefan Sperling (stsp@) has done a lot of work on iwx over the past couple months, so WiFi is much more stable and performant now.

2020-07-31: While looking into why S3 suspend doesn’t work, I realized that Linux and Windows don’t even do S3 on the Surface Go (or any Surface products). Windows puts the device into “Modern Standby” which is just S0 (normal operating state) but with most devices powered down, allowing the CPU to go into its deepest idle state. Linux was changed in 2017 to prefer its “s2idle” on these devices, which does the same thing. This puts the onus on the operating system to put the correct devices into the correct low power state rather than the firmware doing it and stopping the CPU. OpenBSD doesn’t support this idle state, so it looks like the Surface Go will be limited to S4 hibernation.

Current OpenBSD Support Summary

Status is relative to my OpenBSD-current tree as of 2020-07-31.

| Component | Works? | Notes |
|---|---|---|
| AC adapter | Yes | Supported via acpiac and status available via apm and hw.sensors, also supports charging via USB-C. |
| Ambient light sensor | No | Connected behind a PCI Intel Sensor Hub device which requires a new driver. |
| Audio | Yes | HDA audio with a Realtek 298 codec supported by azalia. |
| Battery status | Yes | Supported via acpibat and status available via apm and hw.sensors. |
| Bluetooth | No | Intel device, shows up as a ugen device but OpenBSD does not support Bluetooth. Can be disabled in the BIOS. |
| Cameras | No | There are apparently front, rear, and IR cameras, none of which are supported. Can be disabled in the BIOS. |
| Gyroscope | No | Connected behind a PCI Intel Sensor Hub device which requires a new driver which could feed our sensor framework and then tell xrandr to rotate the screen. |
| Hibernation | Yes | Works via the ZZZ command. |
| MicroSD slot | Yes | Realtek RTS522A, supported by rtsx. |
| SSD | Yes | SK hynix NVMe device accessible via nvme. |
| Surface Pen | Yes | Works on the touchscreen via ims. The button on the top of the pen requires Bluetooth support so it is not supported. |
| Suspend/resume | No | The Surface Go firmware does not properly support S3 (though it claims it does). OpenBSD does not support “suspend to idle” which is used on Windows and Linux. S4 Hibernation works, though. |
| Touchscreen | Yes | HID-over-I2C, supported by ims. |
| Type Cover Keyboard | Yes | USB, supported by ukbd and my umstc. 3 levels of backlight control are adjustable by the keyboard itself with F1. |
| Type Cover Touchpad | Yes | USB, supported by the umt driver for 5-finger multitouch, two-finger scrolling, hysteresis, and to be able to disable tap-to-click which is otherwise enabled by default in normal mouse mode. |
| USB | Yes | The USB-C port works fine for data and charging. |
| Video | Yes | inteldrm has Kaby Lake support adding accelerated video, DPMS, gamma control, integrated backlight control, and proper S3 resume. |
| Volume buttons | Yes | Supported by my acpihid driver. |
| Wireless | Yes | Intel AX200 802.11ax wireless chip supported by iwx. |

Twitter Favorites: [uncleweed] I know 2020 is really hard for lot of folks, but, tomorrow my son turns 1 month old and I’m so unbelievably happy t… https://t.co/ePVdX84cA8


Twitter Favorites: [dylan_reid] Interesting: "with regular cleaning, mask use, good ventilation and minimal talking, transit is possibly lower risk… https://t.co/wXYR0w9dTJ


Apple’s Thirty Percent Cut

Developers will often tell you that Apple’s 30% cut isn’t the worst thing about the App Store, and that it’s actually far down the list.

True. They’re right.

But it’s worth remembering that money really does matter. Say you’re making $70K per year as a salary, and someone asks if you’d like a raise to $90K. You say yes! Because that extra $20K makes a real difference to you and your family.

To an app on the App Store it might mean being able to lower prices — or hire a designer or a couple junior developers. It might be the difference between abandoning an app and getting into a virtuous circle where the app thrives.

Quality costs money, and profitability is just simple arithmetic: anything that affects income — such as Apple’s cut — goes into that equation.

To put it in concrete terms: the difference between 30% and something reasonable like 10% would probably have meant some of my friends would still have their jobs at Omni, and Omni would have more resources to devote to making, testing, and supporting their apps.

But Apple, this immensely rich company, needs 30% of Omni’s and every single other developer’s paycheck?
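The "simple arithmetic" can be spelled out in a few lines. This is a minimal Python sketch with made-up function names; the only inputs are the figures already used above (a 30% cut, a hypothetical 10% cut, and $70K of take-home income). It ignores taxes, refunds and every other real-world cost, so it is an illustration of the proportions only.

```python
def gross_from_net(net_income, cut_percent):
    """Customer spending needed to leave the developer with net_income."""
    return net_income * 100 / (100 - cut_percent)


def net_from_gross(gross_sales, cut_percent):
    """What the developer keeps after the store takes cut_percent of sales."""
    return gross_sales * (100 - cut_percent) / 100


# A developer who takes home $70K under a 30% cut...
gross_sales = gross_from_net(70_000, cut_percent=30)

# ...would keep this much of the same sales under a 10% cut.
net_at_10_percent = net_from_gross(gross_sales, cut_percent=10)

print(f"Gross sales: ${gross_sales:,.0f}")
print(f"Net at 10%:  ${net_at_10_percent:,.0f}")
```

With these inputs the same customer spending that nets $70K at a 30% cut nets $90K at a 10% cut, which is exactly the raise in the salary analogy.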

MAKE AN APPLE TART NOW

Put on a good album and MAKE AN APPLE TART RIGHT NOW. Stop waiting for a special dinner or lunch with friends. Just use those amazing organic apples you just bought and make a tart. Here, to help you hurry up, is the recipe. For more pictures on how we ended up enjoying the thing, check out our first guest post on the Lululab blog.

Apple Tart

Yields 1 8-inch tart

Sweet Tart Dough – (from the Tartine cookbook)

1 cup + 2 tablespoons unsalted butter, room temperature

1 cup granulated sugar

¼ tsp kosher salt

2 large eggs, room temperature

3 ½ cups all-purpose flour

Method:

Combine the butter and sugar in a stand mixer and mix on a medium speed until completely smooth, approximately 3-4 minutes. Stop the mixer and scrape down the sides. Return mixer to medium speed and add the eggs one at a time until completely combined. Stop the mixer and add the flour. Mix on low speed until a loose dough is formed. On a lightly floured surface, divide the dough into 4 equal balls and wrap in plastic wrap. Chill for at least 2 hours or overnight. This recipe makes extra tart dough, which can be frozen for up to 2 months. For one 8-inch tart shell, you will need one quarter of the dough.

The Tart

5-6 medium-sized apples (we used both Granny Smith and Gala)

1 ball sweet tart dough, recipe above

2 tablespoons granulated sugar

¼ cup unsalted butter, diced small

1 tablespoon Beta 5 yuzu marmalade, lightly warmed

Method:

Pre-heat the oven to 325 F. Bring the dough to room temperature and roll on a lightly floured work surface until approximately 1/8th of an inch thick. Line an 8-inch tart pan with the dough and gently press the dough into the mold. Use a knife to trim the excess dough around the edge of the pan. Peel the apples, quarter and remove the core. Thinly slice the apples to 1/8th of an inch thick. Begin to arrange the apples from the outside in. Sprinkle sugar on each layer, as well as a few small pieces of butter. Continue until the shell is full and bake the tart in the oven for 45-60 minutes, or until the apples are tender and the tart shell is golden brown. Using a pastry brush, glaze the top of the tart with the warmed marmalade. Cool to room temperature and enjoy.

Developing A Great Community Strategy

“I’ve written a great community strategy, but can’t get the organisation to adopt it”

Then it’s not a great strategy, is it?

It doesn’t matter what’s in the strategy if your organisation doesn’t embrace it. You can’t write a strategy in isolation for an unsuspecting audience and expect them to swallow it whole when it’s published.

That’s simply not how it works.

A great community strategy isn’t written, it’s facilitated. You set up collaborative processes to educate your stakeholders and solicit their opinions. You bring everyone on the journey with you. You get people into the same room (or Zoom call) to discuss competing priorities and trade-offs, and to build a consensus about where and how to move forward.

This also means you won’t get everything you want. That’s how collaboration works. The theoretical best strategy for the community and the best strategy with the resources, permission, understanding, and attitudes within the organisation are often very different.

The final strategy document should never be at risk of rejection. It’s simply the outcome of the discussions and decisions you’ve guided your colleagues through so far.

This is why it might be best to hire an outside consultant. This is a time-intensive process and is a very different skillset from simply managing a community (it also helps to have someone independent from the existing web of relationships within your organisation and with experience working at many other organisations).

A great strategy should feel like a breakthrough. All the critical decisions have been made. There shouldn’t be any more hold-ups. Everyone should be aligned on the community’s value, everyone should know what they need to do to support the community, and everyone should be excited to make it happen.

Some resources:

Your Brand’s Online Community Strategy

The Online Community Strategy Guide

Online Community Strategy Course

The Online Community Lifecycle

The Airport Lounge

I tweeted today in response to a Tweet from YVR Airport who are celebrating their 89th birthday.

“Every time I travel through YVR airport I compare it to all the other airports I have used. YVR sets a standard which most fail to equal, and none exceeds. We recently had a complimentary pass for a lounge. In YVR we didn’t need it. Where we had a layover it wasn’t open!”

The tweet length limit means it does not really tell the whole of what I think is a story worth expanding. Elsewhere I have written about our trip in January to New Orleans. We did not manage to get a direct flight but had to switch from WestJet to Delta at Los Angeles.

VanCity had recently persuaded me to upgrade my VISA card and one of the sweeteners offered was airport lounge access. It turned out to be complimentary for the first occasion only. Since you have to get to the airport early for the lengthy (and completely unnecessary) “security” check there is usually at least an hour to waste once through the interrogations and interference. At YVR the US departures area is spacious, well laid out and actually quite interesting. I usually enjoy just wandering around and taking pictures. Since we had an early morning departure we would have stopped at Starbucks for coffee and either bran muffins or oatmeal or something equally “healthy”.

But since we had this voucher we went to the lounge. The breakfast on offer looked very much like the standard complimentary breakfast in the lobby of a US hotel, and the atmosphere was very similar. The wifi is free all over YVR anyway, so the lounge is not any different. You are just a bit further away from the gates, with only a view into the terminal and a rather intrusive TV showing the morning news, again just like the gate area. The fee I would now have to pay for subsequent visits would not be much different to what I would pay at Starbucks, or at the restaurant at other times of day.

https://www.flickr.com/photos/stephen_rees/49461276967/

The connection at LAX to the flight to MSY (as NOLA is rendered in airport code) meant changing terminals by bus across the apron. Delta’s terminal is way too small and too darn crowded, but there was a lunch counter right next to our gate and they served craft beer. We had just enough time to eat lunch before our flight was called to start boarding.

On the way back, the story was different. The lounge we might have used was in the wrong terminal, i.e. the one we arrived in, not the one we left from. And anyway it was closed to people with my kind of pass except in the early morning. The terminal used by WestJet was even nastier than that for Delta – and the choice of eating places was fast food or nothing. The noise level was atrocious. I was feeling a little under the weather – it turned out to be the ‘flu (not COVID19) – and I really wanted somewhere peaceful. At Amsterdam they have an art gallery and a library, both with comfy chairs. LAX was more like McDonald’s on a midterm break. And we were there for three hours.

Worst of all, there was no working free wifi, and even the departure screens were hidden away behind a staircase. The well placed screens were only for commercials – the same ones for hours on a short rotation.

To be fair the Delta terminal is being enlarged and physically connected so that the need for a bus ride will be eliminated. I do not know what they plan for lounges and right now it seems to be unlikely we will be needing them in any foreseeable future. But at that time I would have been very much happier if my imposed wait could have been in YVR or an airport built to that standard than LAX. It was somewhat better than Kansas City, where Frontier had once required me to spend all day. But that isn’t saying much.

On the whole I have not felt especially constrained by the current travel restrictions and I am in no hurry to go very far again.

Liquid society?

According to a recent survey, only 12% of people want to go back to how things were before lockdown:

“I hate it when people talk about the ‘new normal’ – it just makes me want to scream. But actually, people don’t want the ‘old normal’. They really, really don’t,” said BritainThinks founding partner Deborah Mattinson. “They want to support and value essential workers and social services more. They want to see more funding for the NHS. There’s a massive valuing of those services and austerity is totally off the agenda.”

Donna Ferguson (The Observer)

I can’t imagine that this is an original thought, but while walking with the family yesterday it struck me that conservative tendencies within society want to ‘freeze’ things as they are. Why? Because the status quo suits them and their place in society.

Meanwhile, revolutionaries want to ‘boil away’ what currently exists to create room for what comes next. Why? Because the status quo does not suit them, either directly because of their place in society, or because it does not fit with their values.

These two tendencies are usually in tension. This means we end up with a free-flowing ‘liquid’ society. That is to say that, usually, we experience neither the ‘ice’ of reactionary times nor the ‘steam’ of revolutionary times.

For a society that suits the majority rather than the few, we need to keep things liquid, which is going to be particularly difficult given the current economic situation.

To stretch the metaphor, we may end up with a period of sublimation where ‘ice’ turns to ‘steam’. In this situation, people who have previously been reactionary (because the status quo has served them) become revolutionary (because the status quo no longer works for them).

I think I need to go and re-read Zygmunt Bauman on liquid modernity…

This post is Day 13 of my #100DaysToOffload challenge. Want to get involved? Find out more at 100daystooffload.com

Apple’s 2022 iPhone will reportedly feature telephoto cameras

While rumours surrounding Apple’s iPhone in July and August typically relate to the tech giant’s next iteration of the smartphone, that isn’t the case when it comes to this report.

According to often-reliable TF International Securities analyst Ming-Chi Kuo, as first reported by MacRumors, Semco, the same South Korean company that is supplying the autofocus motors for the 2020 iPhone, will also provide half of the periscope lenses required for the 2022 version of the smartphone.

Over the last few years, periscope lens technology has been featured in smartphones from Chinese smartphone manufacturers like Oppo and Huawei. Samsung also featured a periscope lens in its S20 Ultra released earlier this year.

Periscope lenses use prisms and mirrors to reflect and then focus light onto a camera sensor. With a traditional camera, the sensor is typically placed directly behind the lens.

With Android manufacturers pushing the envelope when it comes to optical zoom, it makes sense for Apple to have plans to implement the technology in the iPhone eventually. It’s unclear exactly how far these lenses will be capable of zooming optically, but it’ll likely be somewhere in the 5x to 10x range.

The iPhone 11 Pro and Pro Max are currently only capable of 2x zoom. Apple first brought optical zoom to its smartphone line with the iPhone 7 Plus back in 2016.

Source: MacRumors

The post Apple’s 2022 iPhone will reportedly feature telephoto cameras appeared first on MobileSyrup.

Apple launches security research program with dedicated iPhones for hackers

Apple has launched a new security research device program that will provide special iPhones to bug hunters and professional hackers.

“This program is designed to help improve security for all iOS users, bring more researchers to iPhone, and improve efficiency for those who already work on iOS security,” Apple notes.

The program features iPhones dedicated exclusively to security research, with unique code execution and containment policies. The iPhones will allow researchers to run custom commands and also include debugging tools that will allow them to run their code.

Apple told TechCrunch that these devices aren’t new, but that this is the first time they are being provided to researchers directly.

The tech giant notes that it hopes this program will be a collaboration, and that researchers will have access to a forum where they can communicate with Apple engineers.

Apple hopes that by giving security researchers a pre-jailbroken iPhone, it can make it easier for them to discover vulnerabilities within the software that have yet to be found.

With this new program, Apple is asking the researchers to privately disclose the vulnerabilities that they have found so that its engineers can address and fix them. The researchers will then be paid accordingly depending on the severity of the bug.

Source: Apple, TechCrunch

The post Apple launches security research program with dedicated iPhones for hackers appeared first on MobileSyrup.

Telus now offering 20GB data deal for $80

Following the other two big Canadian carriers, Telus is now offering an unlimited data plan with 20GB of full-speed data for $80 CAD per month.

The Telus plan is just like Bell’s since users can’t share the 20GB data bucket with their other connected devices. Beyond that, it comes with unlimited Canada-wide calling and unlimited nationwide texting and picture messages.

The plan also includes voicemail, call display, call waiting and conference calling.

Like all the other big carriers with unlimited plans, Telus throttles subscriber data down to 512Kbps after the 20GBs of full-speed data is used up.

You can get the deal from Telus’ website. To compare this plan with Bell and Rogers, check out our prior reporting.

Source: Telus

The post Telus now offering 20GB data deal for $80 appeared first on MobileSyrup.

Tesla Q2 2020 earnings: The company isn’t backing down from being profitable

Tesla slightly upped its total revenue in its second quarter of 2020 compared to last quarter, with revenue of $6.036 billion USD (roughly $8.09 billion CAD).

This small growth amid the uncertainty of the coronavirus pandemic was bolstered by growth in the company’s automotive sector, which grew one percent this quarter to $5.179 billion USD ($6.946 billion CAD). While this is growth compared to Q1 2020, it’s a four percent drop compared to last year’s Q2.

While these results aren’t groundbreaking, they do show a much more stable company than we’ve seen from Tesla in other years.

Tesla says that “the positive impact of higher vehicle deliveries, higher regulatory credit revenue and higher energy generation and storage revenue was somewhat offset by lower vehicle average selling price (ASP) and lower services and other revenue.”

However, the fact that it’s able to make more revenue off its side projects like solar energy generation and storage is likely a reassuring fallback for the automaker.

Beyond the finances, Tesla also rehashed its delivery and production numbers from early July. However, looking at both of these stats compared to the same quarter in 2019, the automaker is making and selling fewer cars. It’s hard to lay blame on Tesla for this though, due to the circumstances the world has been through this year.

Last year in Q2, Tesla built a total of 87,048 cars. This quarter it only built 82,272. It also delivered roughly 5,000 more cars in Q2 last year than it did this quarter.

Regarding the company’s solar and energy storage businesses, the press release mentions that this is the first quarter where Powerwall and Megapack, the company’s two storage solutions, started to be profitable. The release goes on to say that solar installations have tripled compared to last quarter, but there’s no mention of that division making a profit.

At the end of the release, Tesla mentions that its vehicle production is back to normal and that it can exceed 500,000 deliveries this year, a number that remains the company’s goal regardless of the COVID-19 slowdowns.

You can find the whole investor breakdown here.

Source: Tesla

The post Tesla Q2 2020 earnings: The company isn’t backing down from being profitable appeared first on MobileSyrup.

Copy, paste catastrophe: how Apple’s iOS 14 disrupted clipboard espionage

Over the last few weeks, you’ve likely seen many stories — both from MobileSyrup and others — about apps accessing the iOS clipboard. Some probably wonder what the big deal is. After all, apps access your clipboard for copy and paste, a tool many of us use regularly.

Unfortunately, not all apps use the clipboard as they should. Most of the recent iOS clipboard coverage traces back to two things: iOS 14 and app developer Mysk. In February 2020, Germany-based developer Tommy Mysk and Toronto-based developer Talal Haj Bakry shared a blog post explaining how iOS and iPadOS apps have unrestricted access to the clipboard.

The duo highlighted how this access could lead to security vulnerabilities, such as exposing users’ precise location. For example, if someone copied a picture they took to their iPhone’s clipboard, any app that accessed the clipboard could obtain the image and the GPS coordinates embedded in the photo when it was taken.

Further, based on how people often use their smartphone, other essential data like passwords, addresses or other information copied to the clipboard could be vacuumed up by apps without user consent.

The blog post includes a disclaimer that Mysk submitted the details to Apple in January, but the company told the developers it didn’t see an issue with the vulnerability.

However, with the release of iOS 14 betas to developers and later the public, it became clear that Apple did see a problem with clipboard access. iOS 14 reworks the clipboard and notifies users when apps copy data from it.

The story quickly spiralled from there as beta testers and developers stumbled across multiple apps hoovering data from the clipboard at every opportunity.

Name and shame

When Apple made iOS 14 available for developers, the software instigated what I like to call a ‘name and shame’ campaign. Apple’s latest mobile operating system ushered in two significant changes for the clipboard: a notification to tell users when apps accessed clipboard data and a new API that makes the clipboard more secure.

That first change was the catalyst for all the recent stories naming apps that misused the clipboard. Thanks to the coverage, it also led to the ‘shame’ aspect, with many developers walking back clipboard features.

It’s important, however, to note that many apps do use the clipboard properly. Further, many apps use the clipboard with good intentions. For example, some browser apps on iOS check the clipboard for URLs and offer a quick ‘paste-and-go’ shortcut. Users can tap a button and navigate to the copied URL instead of needing to open a new tab, tap the address bar and press-and-hold to paste the URL.

Still, for all the apps doing this properly, many arguably don’t. Since late June, people have caught over 50 apps abusing clipboard access. This can come in many forms, from some apps accessing the clipboard without user interaction to others that constantly checked the clipboard for no good reason. Some developers pushed updates to stop accessing the clipboard, claiming the issues were bugs. We’ve compiled a list of these apps, which you can view at the bottom of this story.

Offering a better way

Along with naming and shaming the apps that aren’t using the clipboard properly, Apple has updated its clipboard APIs in iOS 14 to protect user privacy better.

When iOS 14 officially arrives later this year, it will allow apps to query the clipboard without seeing its data. Going back to the browser example used above, apps can use the new API to ask iOS what’s in the clipboard.

iOS can then tell the browser whether it has a URL, text, a picture, or something else. Plus, the software can do this without revealing what’s in the clipboard.

If iOS says a URL is available, the browser can paste it from the clipboard, triggering the notification and letting the user know what transpired. If there isn’t a URL, the app doesn’t access the clipboard data, the user’s information remains secure and iOS doesn’t notify the user.
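The query-then-paste flow described above can be modelled with a toy sketch. This is not Apple's API: `ToyClipboard`, `detect_kind`, `paste` and `browser_paste_and_go` are hypothetical names invented for illustration, and the URL check is a deliberately crude assumption.

```python
import re


class ToyClipboard:
    """Toy model: apps may ask what KIND of data is held without seeing it."""

    def __init__(self):
        self._content = ""
        self.notifications = []  # what the user would be shown

    def copy(self, text):
        self._content = text

    def detect_kind(self):
        # Returns only a category. The content stays private and
        # no notification is raised.
        if re.match(r"https?://\S+$", self._content):
            return "url"
        return "text" if self._content else "empty"

    def paste(self, app_name):
        # Reading the actual data always notifies the user.
        self.notifications.append(f"{app_name} pasted from the clipboard")
        return self._content


def browser_paste_and_go(clipboard):
    # Hypothetical browser feature: only read the data if it is a URL.
    if clipboard.detect_kind() == "url":
        return clipboard.paste("Browser")
    return None


clipboard = ToyClipboard()
clipboard.copy("my-secret-password")
print(browser_paste_and_go(clipboard), clipboard.notifications)

clipboard.copy("https://example.com/page")
print(browser_paste_and_go(clipboard), clipboard.notifications)
```

In the first call the browser never sees the password and the user is not notified; in the second it reads the URL and a notification is recorded.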

While on the surface it’s a simple change that will hopefully prevent apps from snooping on users’ clipboard, it may also take time for developers to implement proper support in their apps.

How can I protect my clipboard now?

Unfortunately, for many users, apps will still have free rein for the next few months. iOS 13 doesn’t offer the same clipboard protections as iOS 14 will and it also doesn’t notify users when apps access the clipboard.

Thankfully, there are a few steps people can take to protect themselves. First up, keep an eye out for the apps that have been caught accessing the clipboard. If possible, stop using apps caught snooping on clipboard data. Alternatively, access them through a trusted web browser instead of the native iOS app, as native apps have full clipboard access.

Those running the iOS 14 beta likely haven’t caught every app engaged in clipboard espionage yet. Plus, some people will have apps they need to use that still snoop on the clipboard. So, the other active step you can take is avoiding copying any sensitive data to your clipboard. If you do have to copy something, take steps to replace it with other information after. Hopefully this will prevent leaking sensitive data to apps that misuse the clipboard.

It’s also worth noting that Apple offers a cloud clipboard feature that enables users to copy and paste across iOS, iPadOS and Mac devices. Apps snooping on the clipboard can get data from your laptop or tablet too. If you don’t use this feature, you can turn it off by going to ‘System Preferences’ > ‘General’ > ‘Allow Handoff between this Mac and your iCloud devices’ and deselecting that option. On your iPhone or iPad, you can turn it off under ‘Settings’ > ‘General’ > ‘Handoff.’

Finally, if you use a password manager app, avoid copying and pasting your passwords when possible. Many support iOS’ autofill settings, which should mean you don’t need to copy and paste passwords manually. Some password managers offer the ability to clear your clipboard after a short time, so turn on that setting.

None of those solutions are ideal, but until iOS 14 arrives with the new clipboard API, they’re all we have.

What about Android users?

After reading all this, you may wonder if the clipboard on your Android phone or Windows PC is safe. In short, probably not.

How-To Geek offers a great rundown on clipboard access. On smartphones, any app you install can access the clipboard. In fact, Mysk told Ars Technica that Android is more lenient with the clipboard than iOS. Hopefully, Google follows Apple and implements a similar system to iOS 14 on Android.

Laptops running Windows 10 or macOS operate a little differently. Many apps you install can still access the clipboard whenever they want — unfortunately, that part is still the same. However, both desktop operating systems also offer some kind of cloud clipboard feature. As mentioned above, macOS has ‘Universal Clipboard,’ which shares copied data across macOS, iOS and iPadOS. That means anything you copy will pass through Apple’s servers.

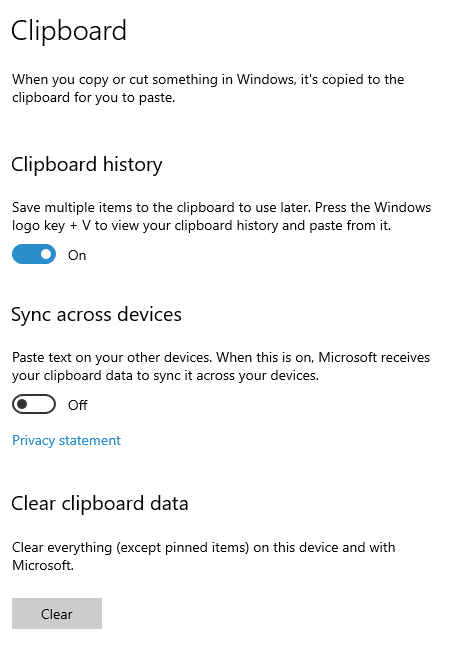

Windows 10 has a ‘Clipboard history’ setting that saves a record of everything you copy and paste. You can access this by tapping Windows+V. Windows 10 will sync your clipboard history across devices as well if you enable that setting.

The one saving grace here is websites. Web apps can’t automatically access your clipboard. Users have to paste content manually for a website to access it.

Regardless of whether you use iOS, Android or something else, you should be cautious with what you copy to your clipboard and how. When possible, avoid copying any sensitive data and use available tools to clear that data out when you’re done with it.

It could be a good idea to get in the habit of copying non-sensitive data to your clipboard to replace any sensitive data since most clipboards only store the last thing you copied. Anyone who wants to be really cheeky should copy “Stop looking at my clipboard” and let apps see that whenever they snoop.

Apps that access the clipboard without user consent

The below list of apps was compiled from a combination of previous reporting and MobileSyrup’s own testing. The list primarily contains apps that copy clipboard data without user interaction, or repeatedly access the clipboard while in use. While not every app accessing the clipboard is doing something wrong, by accessing the data without user consent, those apps are potentially seeing sensitive data.

The list below is not an exhaustive account of apps using the clipboard. It also includes anything developers have said in response. Sources include The Telegraph, Ars Technica, MSPoweruser and Mysk.

- Firefox

- Google Chrome

- Discord

- TikTok – said it would update its app

- Fox News

- The New York Times

- Wall Street Journal

- Bejeweled

- Fruit Ninja

- PUBG Mobile – stopped clipboard snooping

- Viber – told MobileSyrup it “blocked” the option to save clipboard data

- Zoosk

- AccuWeather

- DAZN – stopped clipboard snooping

- Overstock

- CBC News – stopped clipboard snooping

- CBS News – stopped clipboard snooping

- ABC News – stopped clipboard snooping

- Al Jazeera English – stopped clipboard snooping

- CNBC

- News Break

- NPR

- Reuters

- ntv Nachrichten – stopped clipboard snooping

- Russia Today

- Stern Nachrichten

- Huffington Post

- The Economist

- Vice News

- 8 Ball Pool – stopped clipboard snooping

- Amaze – stopped clipboard snooping

- ToTalk

- Tok

- Truecaller – stopped clipboard snooping

- Block Puzzle

- Classic Bejeweled – stopped clipboard snooping

- Classic Bejeweled HD – stopped clipboard snooping

- Watermarbling

- Total Party Kill

- Tomb of the Mask – stopped clipboard snooping

- Tomb of the Mask: Color – stopped clipboard snooping

- FlipTheGun

- Golfmasters

- Letter Soup – stopped clipboard snooping

- Love Nikki

- My Emma

- Plants vs. Zombies Heroes

- Pooking – Billiards City

- 10% Happier: Meditation – promised to stop the behaviour and followed through

- AliExpress Shopping App

- Bed Bath & Beyond

- Hotels.com – stopped clipboard snooping

- 5-0 Radio Police Scanner – stopped clipboard snooping

- Hotel Tonight – promised to stop and did so

- The Weather Network – removed a “diagnostic functionality” that was accessing the clipboard

- Pigment – Adult Coloring Book

- Recolor Coloring Book to Color – stopped clipboard snooping

- Sky Ticket

- Microsoft Teams

- Call of Duty Mobile

- Google News

- LinkedIn – said the clipboard access was a bug, updated its app

- Reddit – released a fix to remove the clipboard access code

- McDonald’s – working to fix the issue

- Starbucks – issue to be fixed in upcoming update

- Wendy’s – a fix is underway

The post Copy, paste catastrophe: how Apple’s iOS 14 disrupted clipboard espionage appeared first on MobileSyrup.

Slither.io, the Two Sigmas, and Customer Support

I had another good conversation with Mike Hoye this morning, during which he pointed out that we’re living in a golden age of independent game development. The reason, he believes, is that there’s no longer a minimum viable size for a game: one person can create and deploy a simple gem like slither.io or 2048, which gives radically new ideas a chance to become real.

Is teaching about to experience a similar Cambrian Explosion, and if so, will it be good for learners? I think the answer to the first is “yes”. More online learning has taken place in the last six months than in the whole of human history prior to COVID. Literally millions of teachers have suddenly had to figure out how to do it without the months and money it takes to produce a MOOC. They have had to find ways to do things fast and cheap, and some of their crazy ideas are bound to be better than anything we’ve done before.

To answer the second question we need to know how much better teaching and learning could be. A partial answer comes from work done by Benjamin Bloom and others in the 1980s:

…the average student tutored one-to-one using mastery learning techniques performed two standard deviations better than students who learn via conventional instructional methods—that is, “the average tutored student was above 98% of the students in the control class”. Additionally, the variation of the students’ achievement changed: “about 90% of the tutored students…attained the level…reached by only the highest 20%” of the control class.
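The "above 98%" figure follows from the two-standard-deviation claim if scores in the control class are assumed to be normally distributed (an assumption made here for illustration, not something stated in the quote). A quick check:

```python
from statistics import NormalDist

# Assumption: control-class scores follow a standard normal distribution.
control_class = NormalDist(mu=0.0, sigma=1.0)

# Share of the control class scoring below a student who sits
# two standard deviations above the control-class mean.
share_below = control_class.cdf(2.0)

print(f"{share_below:.1%}")  # about 97.7%, which rounds to the quoted 98%
```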

Fifteen years ago, the MOOC model of recorded video and autograded exercises was sometimes touted as a way to scale the benefits of one-to-one tutoring, but that has proven to be an evolutionary dead end. What intrigues me now is the way good customer support teams teach every day:

- Customer: “I have a problem.”

- Support: “Let me ask a couple of questions. Hm. OK, it’s not a purely factual matter like a missing license key. Instead, it looks like I’m going to have to explain X in order for you to understand how to solve it.”

Every customer has a different background, so X varies from call to call, but some X’s come up so often that support staff get a chance to figure out how to explain them well. The challenge is how to transfer those good explanations to their colleagues. “Write it down” is the obvious answer, but:

- There are half a dozen other customers with issues that need to be solved right now. And yes, a little time now might save other people a lot of time in the future, but only if this topic actually does come up frequently.

- The details of the explanation vary significantly from one telling to the next based on the teller’s impressions of their listener.

- “Wikis are where knowledge goes to die.” Unless someone works continuously to organize the collected explanations, what accumulates will be unfindable, contradictory, or out of date. Unfortunately, the “someones” who can do this best are the experienced support staff whose phones are metaphorically ringing off the hook (see item #1).

There are echoes here of the Reusability Paradox, which states that, “The pedagogical effectiveness of a learning object and its potential for reuse are completely at odds with one another”. It also reminds me of a long-ago colleague’s explanation of why he only wrote software documentation on demand: he could never tell in advance which parts people would actually have questions about.

I don’t have any conclusions yet, much less any advice, but I predict that we’re going to see a lot of teachers shifting to a “you call me any time” model with late-teen and adult learners, and that support for the choral explanations exemplified by sites like Stack Overflow and Quora is going to become much more common. What I need to find now is a good introduction to any research that has looked at the on-demand teaching done by customer support teams and how members of those teams share their greatest hits with each other. If you know of any, I’d be grateful for pointers.

Fritz Lang pic.twitter.com/bHWq7burZX

CCPA for nerds, part 2

Here's a quick update on CCPA opt out, nerd edition, which describes how I can send so many CCPA opt-outs so quickly. As you may recall, I made a simple CCPA opt-out tool, using...

- A /code/ccpa shell script

- An opt-out letter. This is my opt-out for Facebook advertisers

The script generates signed CCPA opt-out requests, which do work.

But how do I get my PII into the outgoing mail, without putting it in the CCPA opt-out letters (this is the one for Facebook advertisers) that I share on this site?

Use templates. Since I already know Mustache templates from web development, the final tool in the CCPA opt out stack is...

- mo is a tool to replace simple {{ STUFF }} template variables with values taken from environment variables.

So now I just set CCPA_ADDRESS and CCPA_PHONE in my .bashrc and they get substituted into the letter.
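To make the substitution step concrete, here is a minimal Python sketch of the same idea. It is not mo itself, only an illustration of replacing {{ NAME }} placeholders from the environment; the `render` function, the sample letter text and the sample values are all made up, and rendering a missing variable as an empty string is an assumption of this sketch.

```python
import os
import re

# Matches {{ NAME }} with optional spaces around the variable name.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render(template, env=None):
    """Replace {{ NAME }} placeholders with values from env (default: os.environ)."""
    values = os.environ if env is None else env

    def substitute(match):
        # Assumption: a variable that is not set renders as an empty string.
        return values.get(match.group(1), "")

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical letter fragment; the real letter lives on the author's site.
letter = "Address: {{ CCPA_ADDRESS }}\nPhone: {{CCPA_PHONE}}\n"
sample_env = {"CCPA_ADDRESS": "123 Example St", "CCPA_PHONE": "555-0100"}
print(render(letter, sample_env))
```

The personal details stay in the environment (for example in .bashrc), so the shared template never contains them.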

Bonus links

(enough of the surveillance marketing links for now, here are some off-topic good reads)

Kinky Labor Supply and the Attention Tax

We tagged Andean condors to find out how huge birds fly without flapping

How a Long-Lost Perfume Got a Second Life After 150 Years Underwater

Cory Doctorow: Full Employment

It’s Time to Abolish Single-Family Zoning

A Message from Your University’s Vice President for Magical Thinking

The Confederacy Was an Antidemocratic, Centralized State

Why a small town in Washington is printing its own currency during the pandemic

A look at password security, Part III: More secure login protocols

In part II, we looked at the problem of Web authentication and covered the twin problems of phishing and password database compromise. In this post, I’ll be covering some of the technologies that have been developed to address these issues.

This is mostly a story of failure, though with a sort of hopeful note at the end. The ironic thing here is that we’ve known for decades how to build authentication technologies which are much more secure than the kind of passwords we use on the Web. In fact, we use one of these technologies (public key authentication via digital certificates) to authenticate the server side of every HTTPS transaction before you send your password over. HTTPS supports certificate-based client authentication as well, and while it’s commonly used in other settings, such as SSH, it’s rarely used on the Web. Even if we restrict ourselves to passwords, we have long had technologies for password authentication which completely resist phishing, but they are not integrated into the Web technology stack at all. The problem, unfortunately, is less about cryptography than about deployability, as we’ll see below.

Two Factor Authentication and One-Time Passwords

The most widely deployed technology for improving password security goes by the name one-time passwords (OTP) or (more recently) two-factor authentication (2FA). OTP actually predates the widespread use of encrypted communications, and even the Web, going back to the days when people would log in to servers in the clear using Telnet. It was of course well known that Telnet was insecure and that anyone who shared the network with you could just sniff your password off the wire[1] and then log in with it. [Technical note: this is called a replay attack.] One partial fix for this attack was to supplement the user password with another secret which wasn’t static but rather changed every time you logged in (hence a “one-time” password).

OTP systems came in a variety of forms but the most common was a token about the size of a car key fob but with an LCD display, like this:

The token would produce a new pseudorandom numeric code every 30 seconds or so and when you went to log in to the server you would provide both your password and the current code. That way, even if the attacker got the code they still couldn’t log in as you for more than a brief period[2] unless they also stole your token. If all of this looks familiar, it’s because this is more or less the same as modern OTP systems such as Google Authenticator, except that instead of a hardware token, these systems tend to use an app on your phone and have you log into some Web form rather than over Telnet. The reason this is called “two-factor authentication” is that authenticating requires both a value you know (the password) and something you have (the device). Some other systems use a code that is sent over SMS but the basic idea is the same.
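As a rough illustration of how such time-based codes can be produced, here is a minimal Python sketch of the "truncated HMAC(Secret, time)" construction from RFC 6238, which a footnote to this post cites. The function name `totp` and its parameters are chosen for illustration; the secret is the published RFC test value, not anything a real deployment would use.

```python
import hashlib
import hmac
import struct
import time


def totp(secret, now=None, step=30, digits=6):
    """Time-based one-time code: truncated HMAC-SHA1(secret, time counter)."""
    if now is None:
        now = time.time()
    counter = int(now) // step  # number of whole time steps since the epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks a 4-byte window
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


secret = b"12345678901234567890"  # test secret from RFC 6238, Appendix B
print(totp(secret, now=59, digits=8))  # 94287082, matching the RFC test vector
print(totp(secret))  # six-digit code for the current 30-second window
```

The code depends only on the shared secret and the current time step, so the token and the server can compute it independently, and a code captured in one window says nothing useful about the next.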

OTP systems don’t provide perfect security, but they do significantly improve the security of a password-only system in two respects:

- They guarantee a strong, non-reused secret. Even if you reuse passwords and your password on site A is compromised, the attacker still won’t have the right code for site B.[3]

- They mitigate the effect of phishing. If you are successfully phished the attacker will get the current code for the site and can log in as you, but they won’t be able to log in later because knowing the current code doesn’t let them predict a future code. This isn’t great but it’s better than nothing.

The nice thing about a 2FA system is that it’s comparatively easy to deploy: it’s a phone app you download plus another code that the site prompts you for. As a result, phone-based 2FA systems are very popular (and if that’s all you have, I advise you to use it, but really you want WebAuthn, which I’ll be describing in my next post).

Password Authenticated Key Agreement

One of the nice properties of 2FA systems is that they do not require modifying the client at all, which is obviously convenient for deployment. That way you don’t care if users are running Firefox or Safari or Chrome, you just tell them to get the second factor app and you’re good to go. However, if you can modify the client you can protect your password rather than just limiting the impact of having it stolen. The technology to do this is called a Password Authenticated Key Agreement (PAKE) protocol.

The way a PAKE would work on the Web is that it would be integrated into the TLS connection that already secures your data on its way to the Web server. On the client side when you enter your password the browser feeds it into TLS and on the other side, the server feeds in a verifier (effectively a password hash). If the password matches the verifier, then the connection succeeds, otherwise it fails. PAKEs aren’t easy to design — the tricky part is ensuring that the attacker has to reconnect to the server for each guess at the password — but it’s a reasonably well understood problem at this point and there are several PAKEs which can be integrated with TLS.

What a PAKE gets you is security against phishing: even if you connect to the wrong server, it doesn’t learn anything about your password that it doesn’t already know because you just get a cryptographic failure. PAKEs don’t help against password file compromise because the server still has to store the verifier, so the attacker can perform a password cracking attack on the verifier just as they would on the password hash. But phishing is a big deal, so why doesn’t everyone use PAKEs? The answer here seems to be surprisingly mundane but also critically important: user interface.
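To see why a stolen verifier is still a problem, consider this small Python sketch of an offline guessing attack. It makes a loud simplifying assumption: the verifier here is just a salted PBKDF2 hash standing in for a real PAKE verifier (SRP, for example, uses a different construction), and the names `make_verifier` and `crack` and the candidate list are invented for the example.

```python
import hashlib
import hmac
import os


def make_verifier(password, salt):
    """Stand-in verifier: a salted, iterated hash of the password (not a real PAKE verifier)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 10_000)


def crack(verifier, salt, candidates):
    """Offline guessing: once the verifier leaks, no server contact is needed per guess."""
    for guess in candidates:
        if hmac.compare_digest(make_verifier(guess, salt), verifier):
            return guess
    return None


salt = os.urandom(16)
stolen = make_verifier("hunter2", salt)  # what an attacker would find in the database
print(crack(stolen, salt, ["123456", "password", "hunter2"]))
```

The contrast with phishing is that a fake server never obtains the verifier in the first place, so it has nothing to run this loop against.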

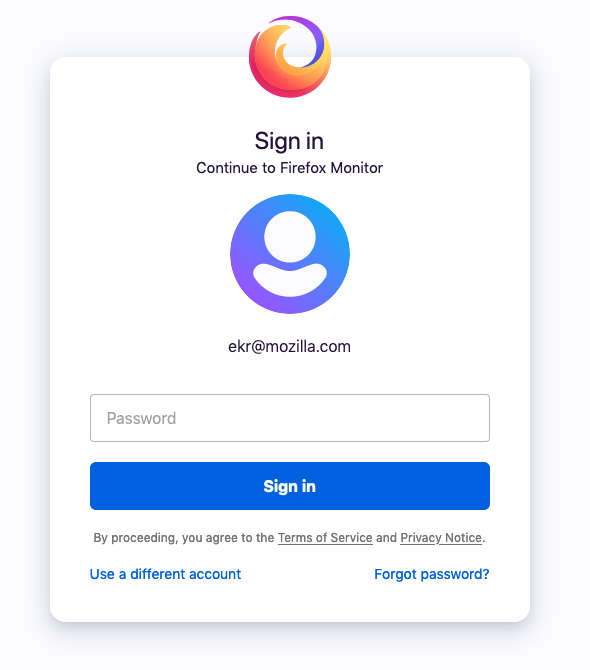

The way that most Web sites authenticate is by showing you a Web page with a field where you can enter your password, as shown below:

When you click the “Sign In” button, your password gets sent to the server which checks it against the hash as described in part I. The browser doesn’t have to do anything special here (though often the password field will be specially labelled so that the browser can automatically mask out your password when you type); it just sends the contents of the field to the server.

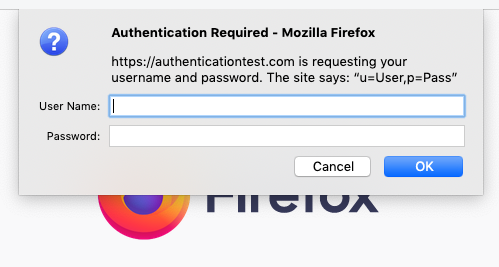

In order to use a PAKE, you would need to replace this with a mechanism where you gave the browser your password directly. Browsers actually have something for this, dating back to the earliest days of the Web. On Firefox it looks like this:

Hideous, right? And I haven’t even mentioned the part where it’s a modal dialog that takes over your experience. In principle, of course, this might be fixable, but it would take a lot of work and would still leave the site with a lot less control over their login experience than they have now; understandably they’re not that excited about that. Additionally, while a PAKE is secure from phishing if you use it, it’s not secure if you don’t, and nothing stops the phishing site from skipping the PAKE step and just giving you an ordinary login page, hoping you’ll type in your password as usual.

None of this is to say that PAKEs aren’t cool tech, and they make a lot of sense in systems that have less flexible authentication experiences; for instance, your email client probably already requires you to enter your authentication credentials into a dialog box, and so that could use a PAKE. They’re also useful for things like device pairing or account access where you want to start with a small secret and bootstrap into a secure connection. Apple is known to use SRP, a particular PAKE, for exactly this reason. But because the Web already offers a flexible experience, it’s hard to ask sites to take a step backwards and PAKEs have never really taken off for the Web.

Public Key Authentication

From a security perspective, the strongest thing would be to have the user authenticate with a public/private key pair, just like the Web server does. As I said above, this is a feature of TLS that browsers actually have supported (sort of) for a really long time but the user experience is even more appalling than for built-in passwords.[4] In principle, some of these technical issues could have been fixed, but even if the interface had been better, many sites would probably still have wanted to control the experience themselves. In any case, public key authentication saw very little usage.

It’s worth mentioning that public key authentication actually is reasonably common in dedicated applications, especially in software development settings. For instance, the popular SSH remote login tool (replacing the unencrypted Telnet) is commonly used with public key authentication. In the consumer setting, Apple AirDrop uses iCloud-issued certificates with TLS to authenticate your contacts.
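The core idea behind public key authentication is challenge-response: the server sends a fresh random challenge, the client signs it with its private key, and the server checks the signature with the public key. The Python sketch below shows only that shape. It uses a deliberately tiny textbook RSA key (an assumption made so the example runs with the standard library alone), and it is not how TLS or SSH actually encode or sign anything.

```python
import hashlib
import secrets

# Toy textbook RSA key built from p=61, q=53. Far too small for real use.
N, E, D = 3233, 17, 2753


def digest(challenge):
    """Reduce a SHA-256 hash of the challenge into the toy modulus."""
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % N


def sign(challenge, private_exponent=D):
    """Client side: prove possession of the private key by signing the challenge."""
    return pow(digest(challenge), private_exponent, N)


def verify(challenge, signature, public_exponent=E):
    """Server side: check the signature using only the public key."""
    return pow(signature, public_exponent, N) == digest(challenge)


challenge = secrets.token_bytes(16)  # fresh per login, so old signatures cannot be replayed
signature = sign(challenge)
print(verify(challenge, signature))
```

Nothing the server stores or sees in this exchange lets it, or a phishing site, impersonate the user elsewhere, which is the property passwords lack.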

Up Next: FIDO/WebAuthn

This was the situation for about 20 years: in theory public key authentication was great, but in practice it was nearly unusable on the Web. Everyone used passwords, some with 2FA and some without, and nobody was really happy. There had been a few attempts to try to fix things but nothing really stuck. However, in the past few years a new technology called WebAuthn has been developed. At heart, WebAuthn is just public key authentication but it’s integrated into the Web in a novel way which seems to be a lot more deployable than what has come before. I’ll be covering WebAuthn in the next post.

[1] And by “wire” I mean a literal wire, though such sniffing attacks are prevalent in wireless networks such as those protected by WPA2.

[2] Note that to really make this work well, you also need to require a new code in order to change your password, otherwise the attacker can change your password for you in that window.

[3] Interestingly, OTP systems are still subject to server-side compromise attacks. The way that most of the common systems work is to have a per-user secret which is then used to generate a series of codes, e.g., truncated HMAC(Secret, time) (see RFC 6238). If an attacker compromises the secret, then they can generate the codes themselves. One might ask whether it’s possible to design a system which didn’t store a secret on the server but rather some public verifier (e.g., a public key) but this does not appear to be secure if you also want to have short (e.g., six digits) codes. The reason is that if the information that is used to verify is public, the attacker can just iterate through every possible 6 digit code and try to verify it themselves. This is easily possible during the 30 second or so lifetime of the codes. Thanks to Dan Boneh for this insight.

[4] The details are kind of complicated here, but just some of the problems: (1) TLS client authentication is mostly tied to certificates and the process of getting a certificate into the browser was just terrible; (2) the certificate selection interface is clunky; (3) until TLS 1.3, the certificate was actually sent in the clear unless you did TLS renegotiation, which had its own problems, particularly around privacy.

Update: 2020-07-21: Fixed up a sentence.

The post A look at password security, Part III: More secure login protocols appeared first on The Mozilla Blog.