Nothing could be more basic than language and math, right? And these are built on solid foundations that form the basis for literacy and numeracy, right? Well - no. Today I have two cases in point. The lead item points to "three different foundational ideas can be identified in recent syntactic theory: structure from substitution classes, structure from dependencies among heads, and structure as the result of optimizing preferences" (my italics). In another post, Daniel Lemire notes that in most programming languages, 0.2+0.1 does not equal 0.3. Why? "The computer does not represent 0.1 or 0.2 exactly. Instead, it tries to find the closest possible value. For the number 0.1, the best match is 7205759403792794 times 2^-56." Wait - what? Lemire explains that a computer could perform the operation the way humans do, but it's a lot slower. Now, note: none of this shows that math and grammar are wrong per se, only that they are much more complex phenomena than are generally supposed, and that there is more than one way of looking at them, and the way we actually do look at them might not be the best or most efficient.
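The quoted figure can be checked directly. The sketch below assumes Python floats are IEEE 754 doubles (true of CPython on common platforms) and uses the standard library's `fractions.Fraction` as a stand-in for arithmetic "the way humans do"; the function names are illustrative only and do not come from Lemire's post.

```python
from fractions import Fraction


def exact_value(x: float) -> Fraction:
    """Return the exact rational number stored in the float x."""
    return Fraction(x)


def binary_sum_matches() -> bool:
    """Add 0.1 and 0.2 as binary floats and compare with 0.3."""
    return 0.1 + 0.2 == 0.3


def exact_sum_matches() -> bool:
    """Add 1/10 and 2/10 as exact fractions, the slower 'human' way."""
    return Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10)


if __name__ == "__main__":
    # The stored value of 0.1 is the quoted integer times 2**-56.
    print(exact_value(0.1) == Fraction(7205759403792794, 2**56))
    print(binary_sum_matches())
    print(exact_sum_matches())
```

The exact version gives the expected answer because it never rounds, at the cost of arbitrary-precision integer arithmetic on every operation.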


Shared posts

Liked Danke OpenStreetMap | Nur ein Blog Denn...

Because on this small island, someone took the trouble to map even the smallest paths. For that, I am always very grateful to all the volunteers of OpenStreetMap.

Robert Lender thanks Open Street Map (OSM) and its volunteers for drawing in the small (foot)paths other maps usually miss (as they’re more car oriented), and recounts how he used it on a small Greek island he visited that most tourists don’t know about, where he could wander around all the smallest paths with the OSM app. “Therefore, thank you once again. Because I am not adding geodata to OSM, I at least donated something to the OpenStreetMap Foundation.” Great idea. [I tried to follow that example, by signing up as a member, but there’s a glitch with Paypal.]

Experts, Expertise, and 20% of Questions

Nick writes about the role of experts in communities of practice (although the post is appropriate for any kind of community).

He also shares this table:

In most situations, expert predictions perform worse than both AI and the wisdom of the crowd. Nick terms this ‘the expert squeeze’.

In many support communities, deep subject matter expertise isn’t worth much more than someone who solved the problem yesterday. Once someone has given you the answer, you can share the answer with the next person.

In fact, the majority of questions asked are so far below their level that a real expert would be bored to tears answering them. It’s like asking a scientist to answer questions on Twitter.

Hence, many superusers aren’t top experts, but simply people who like helping out and have solved the 20% of problems which account for 80% of the problems people face.

For some projects – especially big, internal, collaboration projects – real experts are important, as Nick notes, for guiding the debate and identifying what’s needed to move the discussion forward. But in many other communities, someone who solved the problem yesterday is as good as anyone else.

The Best DSLR for Beginners

If you have a set of Canon or Nikon DSLR lenses or know that you prefer an optical viewfinder and don’t want to spend big on a camera, a beginner DSLR could be just the ticket. The Nikon D3500 is our pick for the best DSLR camera for novice photographers because it offers outstanding image quality for its price and a truly useful Guide Mode that helps you learn along the way. It boasts excellent battery life, easy smartphone connectivity, 1080/60p video with silent autofocus, and intuitive controls in a highly portable and lightweight body.

As we explain here, if you don’t already have some DSLR lenses and don’t dislike electronic viewfinders, or plan to frame your photos on a screen the same way you do with your phone, you should look for a mirrorless camera.

5 Levels of Communication

Level 1: Ritual

Level 2: Extended Ritual

Level 3: Content (or Surface)

Level 4: Feelings About Content

Level 5: Feelings About Each Other

Interesting read: Richard Francisco’s Five Levels of Communication maps out a series of “levels” that represent increasing degrees of difficulty, risk, and potential learning in our interactions.

Twitter Favorites: [MrSteveTweedale] "We should recognise Herbert for exploring Islam and religion without essentialising them, without reducing them to… https://t.co/3iO4SyPgDi

Circular Imports

I am struggling to find a readable explanation of how circular imports are handled in Python and JavaScript (more specifically, by the require function used for CommonJS modules).

Here’s a short example in Python:

a.py:

    import b

    def P():
        print("P")
        b.Q()

    def R():
        print("R")

b.py:

    import a

    def Q():
        print("Q")
        a.R()

If we run this in the interpreter, the functions call each other as desired:

    >>> import a
    >>> a.P()
    P
    Q
    R

And if we change a.py to call P() as the file is being loaded, it still works:

a.py:

    import b

    def P():
        print("P")
        b.Q()

    def R():
        print("R")

    P() # ADDED

b.py:

    import a

    def Q():
        print("Q")
        a.R()

Loading it in the interpreter:

    >>> import a
    P
    Q
    R

But if we run a.py directly from the command line, it fails:

    $ python a.py
    P
    Traceback (most recent call last):
      File "a.py", line 1, in <module>
        import b
      File "/home/gvwilson/example/b.py", line 1, in <module>
        import a
      File "/home/gvwilson/example/a.py", line 10, in <module>
        P()
      File "/home/gvwilson/example/a.py", line 5, in P
        b.Q()
    AttributeError: module 'b' has no attribute 'Q'

Update: see this thread from EM Bray.
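One likely explanation for the difference, offered as a sketch and not a definitive account: when a file is run as a script, Python registers it under the name `__main__`, not `a`. The `import a` in b.py therefore loads a.py a second time as a fresh module named `a`; that copy finds `b` already in `sys.modules` but only partly initialized, and calls `P()` before `Q` has been defined. The script below reproduces both runs in a temporary directory. The helper name `run_both_ways` and the use of `PYTHONPATH` to locate the files are assumptions made for this illustration.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path

# The two files from the example, with P() called while a.py loads.
A_SOURCE = '''import b

def P():
    print("P")
    b.Q()

def R():
    print("R")

P()
'''

B_SOURCE = '''import a

def Q():
    print("Q")
    a.R()
'''


def run_both_ways():
    """Run the example once via `import a` and once as `python a.py`."""
    with tempfile.TemporaryDirectory() as workdir:
        Path(workdir, "a.py").write_text(A_SOURCE)
        Path(workdir, "b.py").write_text(B_SOURCE)
        # Make sure both child processes can find a.py and b.py.
        env = dict(os.environ, PYTHONPATH=workdir)
        imported = subprocess.run(
            [sys.executable, "-c", "import a"],
            cwd=workdir, env=env, capture_output=True, text=True)
        as_script = subprocess.run(
            [sys.executable, "a.py"],
            cwd=workdir, env=env, capture_output=True, text=True)
    return imported, as_script


if __name__ == "__main__":
    imported, as_script = run_both_ways()
    print("import a      ->", imported.stdout.split(), imported.returncode)
    print("python a.py   ->", as_script.stdout.split(), as_script.returncode)
    print(as_script.stderr.strip().splitlines()[-1])
```

In the imported case there is only one copy of a.py, and by the time `P()` runs, b.py has finished loading, so `b.Q` exists.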

Node is more consistent.

Suppose I have two JavaScript files a.js and b.js:

a.js:

    const {Q} = require('./b')

    const P = () => {
      console.log('P')
      Q()
    }

    const R = () => {
      console.log('R')
    }

    module.exports = {P, R}

b.js:

    const {R} = require('./a')

    const Q = () => {
      console.log('Q')
      R()
    }

    module.exports = {Q}

Running inside the interpreter fails during loading:

    > const {P} = require('./a')
    undefined
    > (node:1756) Warning: Accessing non-existent property 'R' of module exports inside circular dependency
    (Use `node --trace-warnings ...` to show where the warning was created)
    > P()
    P
    Q
    Uncaught TypeError: R is not a function
        at Q (/home/gvwilson/example/b.js:5:3)
        at P (/home/gvwilson/example/a.js:5:3)

and we get a similar error if we modify a.js to call P() at the top level as the file is loading.
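The warning above hints at the mechanism: a module caught in a cycle hands back whatever it has exported so far. The following is a toy Python model of that caching behaviour, not Node's implementation; `Module`, `make_require`, and the four module bodies are hypothetical names invented for this sketch. It contrasts copying a name out of the exports at load time (as the files above do) with keeping a reference to the exports object, filling it in place, and looking names up at call time.

```python
class Module:
    """Stand-in for a CommonJS module record with an `exports` object."""

    def __init__(self):
        self.exports = {}


def make_require(bodies):
    """Build a toy `require` over a dict of name -> module body function."""
    cache = {}

    def require(name):
        if name in cache:
            # A module that is still loading returns whatever it has
            # exported so far, which may be nothing at all.
            return cache[name].exports
        module = Module()
        cache[name] = module  # registered before the body runs
        bodies[name](require, module)
        return module.exports

    return require


# Mirrors the files above: b copies R out of a's exports at load time.
def a_eager(require, module):
    Q = require("b")["Q"]

    def P():
        return ["P"] + Q()

    def R():
        return ["R"]

    module.exports = {"P": P, "R": R}  # replaces the object b already saw


def b_eager(require, module):
    R = require("a").get("R")  # a is mid-load, so this is None

    def Q():
        return ["Q"] + R()

    module.exports = {"Q": Q}


# Variant: keep the exports object, fill it in place, look names up late.
def a_lazy(require, module):
    b = require("b")

    def P():
        return ["P"] + b["Q"]()

    def R():
        return ["R"]

    module.exports.update(P=P, R=R)


def b_lazy(require, module):
    a = require("a")

    def Q():
        return ["Q"] + a["R"]()

    module.exports.update(Q=Q)


if __name__ == "__main__":
    lazy = make_require({"a": a_lazy, "b": b_lazy})
    print(lazy("a")["P"]())
    eager = make_require({"a": a_eager, "b": b_eager})
    try:
        eager("a")["P"]()
    except TypeError as err:
        print("eager variant failed:", err)
```

In this model the eager variant fails for the same reason as the Node session: `R` was read before module a had exported anything. The lazy variant is one form of the "extra developer effort" asked about in the questions that follow.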

It also fails if we use a bundler like Parcel.

Our HTML file is:

    <html>
      <head>
        <script src="./a.js"></script>
      </head>
      <body>
        <p>Hello</p>
      </body>
    </html>

and we run with:

    $ parcel test.html
    Server running at http://localhost:234
    Build in 0.124s.

When we look in the browser console, we see an uncaught error because R is not a function.

Does this mean that circular imports simply don’t work? That seems to be the case for JavaScript, but not necessarily for Python. My questions now are:

- Why do they work sometimes for Python but not always?

- Do they really not work in JavaScript without extra developer effort (e.g., creating a module initialization function so that loading and initializing are separated)?

- Most importantly, where can I find tutorials that explain how things like this work—not just for these two languages but in general? Where are the compare-and-contrasts for up-and-coming software engineers (and older ones like myself who know a lot less than we think we do)? Why are books like Levine’s Linkers and Loaders and Pearson’s Software Build Systems so rare?

These Weeks in Firefox: Issue 81

Highlights

- Tab-to-search has been enabled in Nightly! When the URL bar autofills an origin having an installed search engine, users can press tab or down to pick a special shortcut result and enter Search Mode.

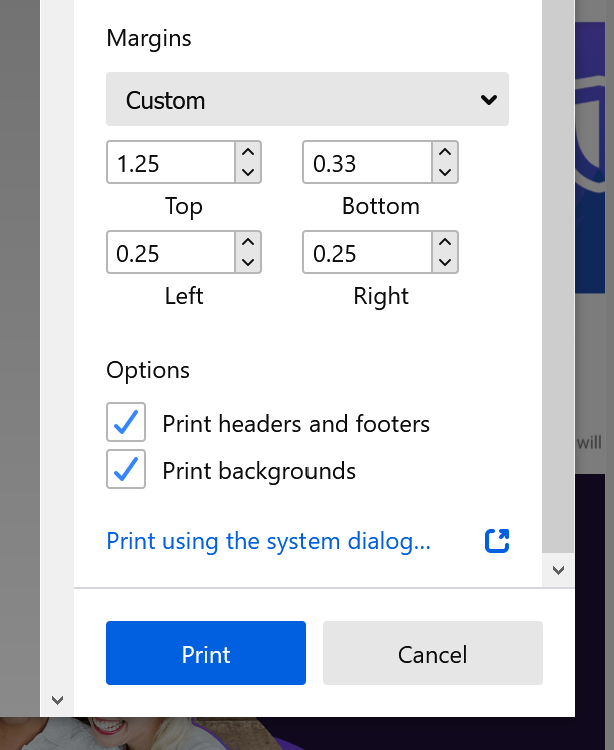

- Emma Malysz added support for setting custom print margins from the new print preview UI (bug 1664570)

- Windows users on the default Firefox theme can now enable a new Skeleton UI which will display immediately during Firefox startup (set the pref browser.startup.preXulSkeletonUI to true).

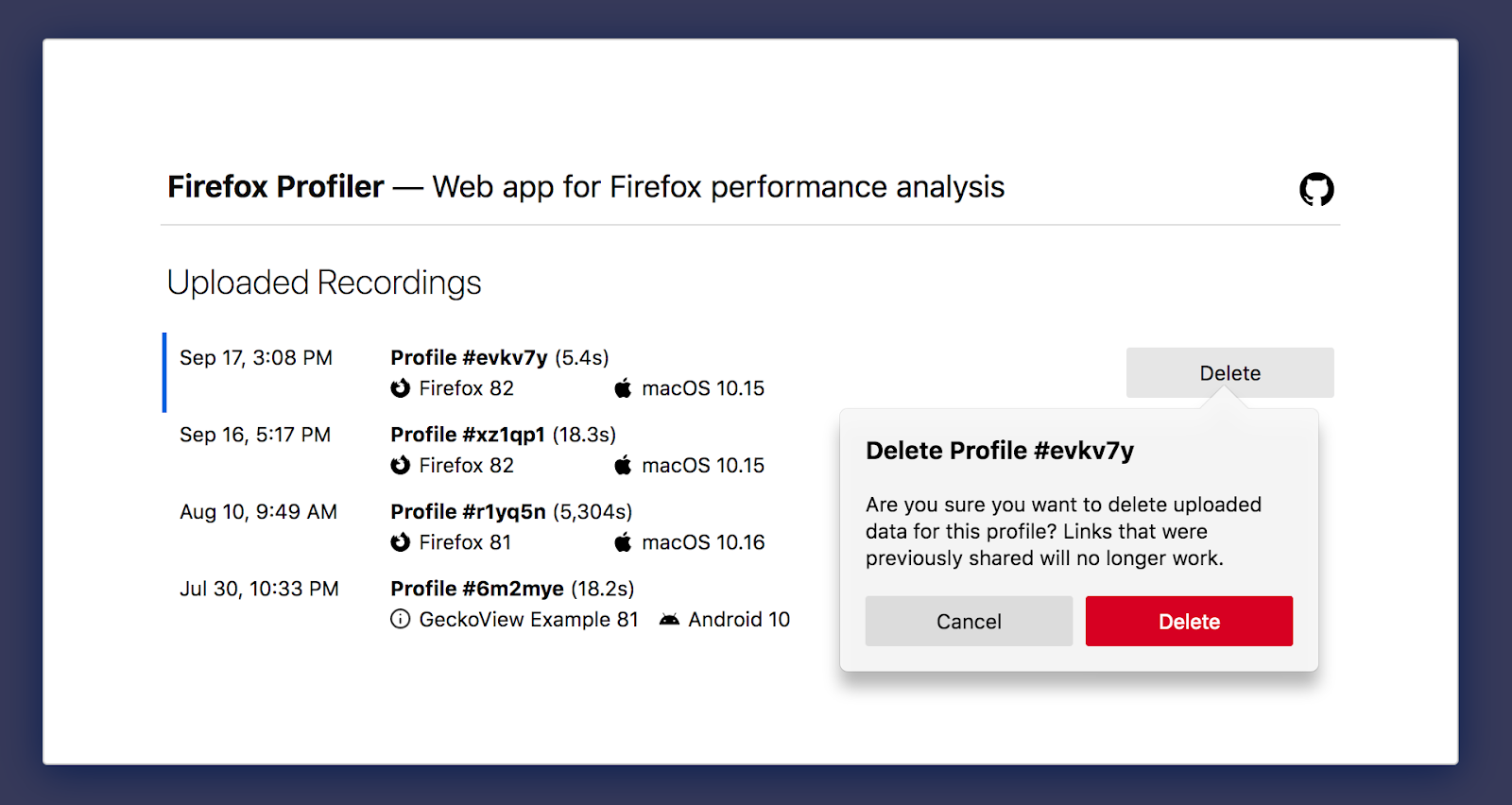

- It’s now possible to delete the profiles you’ve uploaded. Go to https://profiler.firefox.com/uploaded-recordings/ and click on the delete button for the profiles you want to delete.

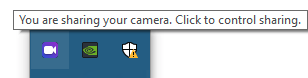

- Device sharing state indicator icons have been added to the system tray on Windows

- Micah landed a new keyboard shortcut to show/hide the Bookmarks Toolbar (Ctrl+Shift+B on Windows/Linux, Cmd+Shift+B on macOS). The Library will now use Ctrl/Cmd+Shift+O

Friends of the Firefox team

Resolved bugs (excluding employees)

Fixed more than one bug

- Itiel

- Niklas Baumgardner

New contributors (🌟 = first patch)

- 🌟 Niklas Baumgardner fixed the Picture-in-Picture tab indicator icon so that it no longer acts as the mute toggle. We show the mute toggle over the favicon instead.

- Chris Jackson added a test to ensure that the Picture-in-Picture keyboard shortcut chooses the right video.

Project Updates

Add-ons / Web Extensions

Addon Manager & about:addons

- AddonManager.maybeInstallBuiltinAddon has been fixed to return a promise, as it was actually already documented in its jsdoc inline comment (Bug 1665150)

WebExtensions Framework

- kmag landed some Fission-related changes to make some additional parts of the WebExtensions internals Fission-aware (in particular related to the “activeTab permission’s window matching” and “checking parent frames on content script injection”) – Bug 1646573

Developer Tools

- DevTools Fission status page (wiki)

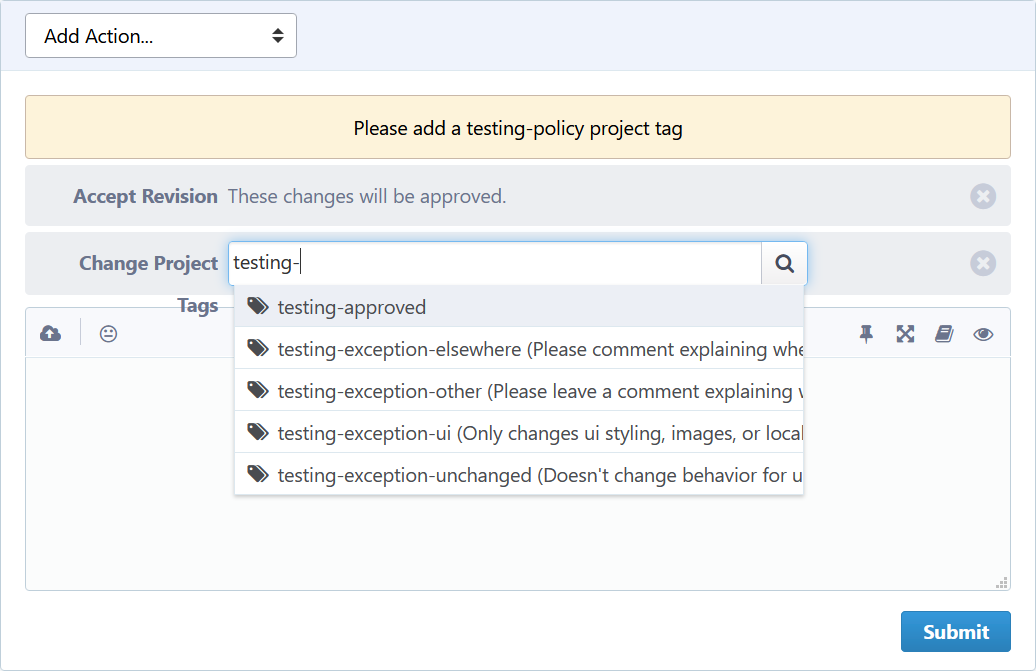

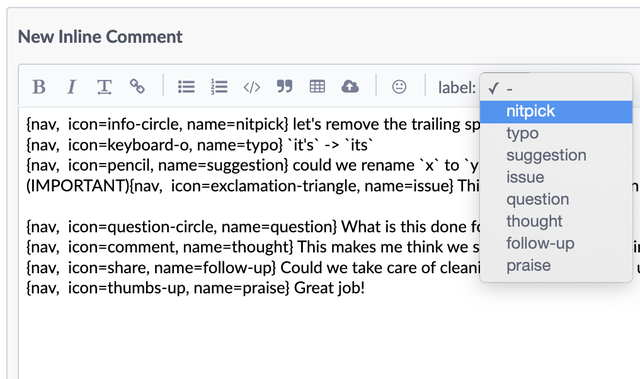

- New add-on phab-test-policy by Nicolas Chevobbe

- File bugs on github

- New Add-on phab-conventional-comments by Nicolas Chevobbe

- File bugs on github

Fission

- Nightly experiment to roll out hopefully by next week. 7 blocking bugs remain.

Lint

- Gijs updated us to newer ESLint and eslint-plugin-no-unsanitized versions so we can now use `??=` and other logical assignment operators.

Mobile

- We wrote a script that, when building Fenix with a local android-components or GeckoView checkout, will tell you which commits to check out to ensure your Fenix + local ac/GV builds will build cleanly. Without this, frequently you’d check out master on both repositories and hope there were no breaking changes that would cause a build with no local changes to fail. Example run:

      $ ./tools/list_compatible_dependency_versions.py ../fenix
      Building fenix with a local ac?
      The last known ac nightly version that cleanly builds with your fenix checkout is...
      62.0.20201002143132
      To build with this version, checkout ac commit...
      d96b2318847691afee0dfd2dd1cf8d25c258792a

Password Manager

- Bug 1134852 – Update password manager recipes from a server/kinto/Remote Settings. Thanks tgiles, this was a complex task spanning multiple products and components

- Bug 1626764 – The “Sign in to Firefox” button should not have the label wrap to two lines. Thanks to contributor kenrick95

- Bug 1647934 – Clean up a user’s login backup when it may no longer be useful

- Bug 1660231 – Enable MASTER_PASSWORD_ENABLED telemetry probe on release

PDFs & Printing

- Sam fixed a bug where printers reporting no available paper sizes would see errors (bug 1663503)

- Mark has a patch to support submitting the print form immediately after opening with Enter (bug 1666776)

- We’ve been ramping up our test coverage

Performance

- Skeleton UI

- Doug has patches up for review for animating it and is continuing to work on fixing bugs and oddities with it. Eg. ensuring it correctly handles maximized windows, which is hairier than expected.

- Emma has been looking at getting the bounds of the urlbar rect for the early blank window paint

- (Reminder: set the pref browser.startup.preXulSkeletonUI to dogfood this. Windows-only.)

- As a team we’ve started discussing problems and paths forward for BHR (Background Hang Reporter).

- Gijs has a patch for slow script telemetry from the parent process. It reports through telemetry the cases where we would previously have shown a slow script dialog, which isn’t useful for the parent process.

- Gijs landed more IO-off-the-main-thread work for downloads

Performance Tools

- You can name the profiles by clicking on the top left profile title now.

- Florian added a GetService marker that shows when an xpcom service gets first instantiated. The marker text only shows the cid; the contract id, or service name is shown in a label frame in the stack. Example profile: https://share.firefox.dev/2SutTKn

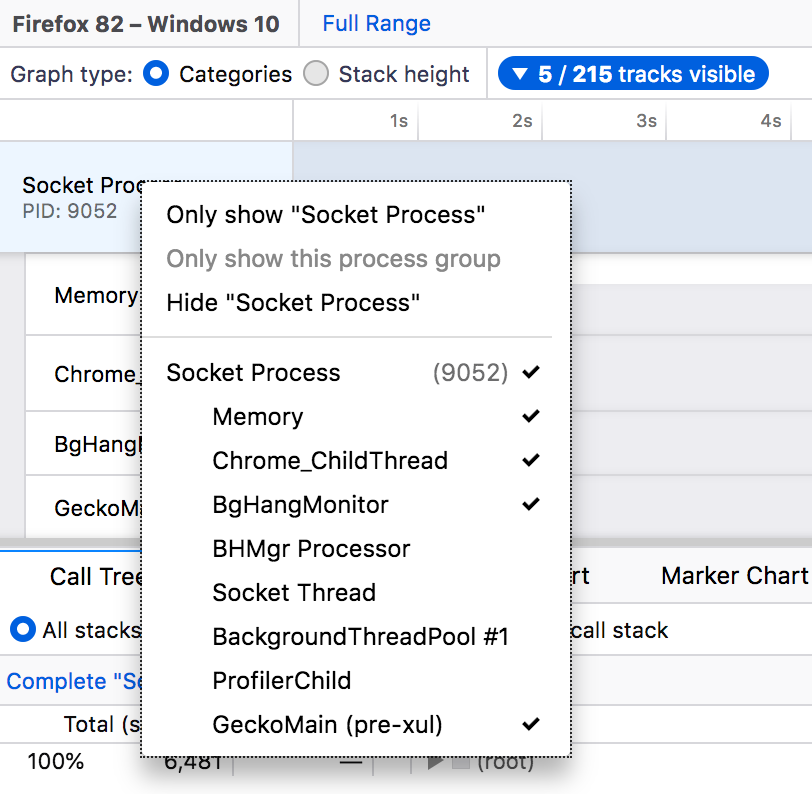

- Our contributors made some improvements on the track context menu:

- Track context menu now shows only the right clicked process instead of showing everything. (Thanks adityachirania!)

- Added a Hide “Track Name” to the track context menu. (Thanks CipherGirl!)

Picture-in-Picture

- Recently fixed:

- Students are currently working on:

- Bug 1604247 – Provide an easy way to snap a PiP window back to a corner after moving it elsewhere

- Bug 1578985 – Picture-in-Picture does not remember location and size of the popout windows

- Bug 1653496 – Picture-in-Picture option not shown for VideoHTMLElement with VideoTrack from Twilio

- Bug 1589680 – Make it possible to have more than one Picture-in-Picture window

Search and Navigation

- The team is focusing on new opportunities related to vertical search; experiments will follow.

- Consolidation of aliases and search keywords – Bug 1650874

- UX working on better layout of search preferences

- Development is temporarily on pause to concentrate on higher priorities

- Urlbar Update 2

- Polishing the feature to release in Firefox 83.

- Clicking on the urlbar opens Top Sites also in Private Browsing windows, unless they have been disabled in urlbar preferences. Bug 1659752

- Search Mode will always show search suggestions at the top. Bug 1664760

WebRTC UI

- The global sharing indicator now only displays if the user is sharing their screen, and the microphone and camera mute toggles have been hidden by default.

- You can turn these back on by going to about:preferences#experimental, and checking “WebRTC Global Mute Toggles”.

- These are experimental because many sites don’t pay attention to the “muted” event, so they don’t update their UI when mute state is set.

- The global sharing indicator is currently slated to ride out in Firefox 83.

Hand Drawn 2D Animation with PureOS and Librem Laptops

Professional animation is not just possible but ideal with free software; this story shares what is possible running PureOS, Librem laptops, and accessories. I have been using free software for 6 years, and each year the freedom-respecting professional tools I use seem to improve faster than their commercial proprietary counterparts.

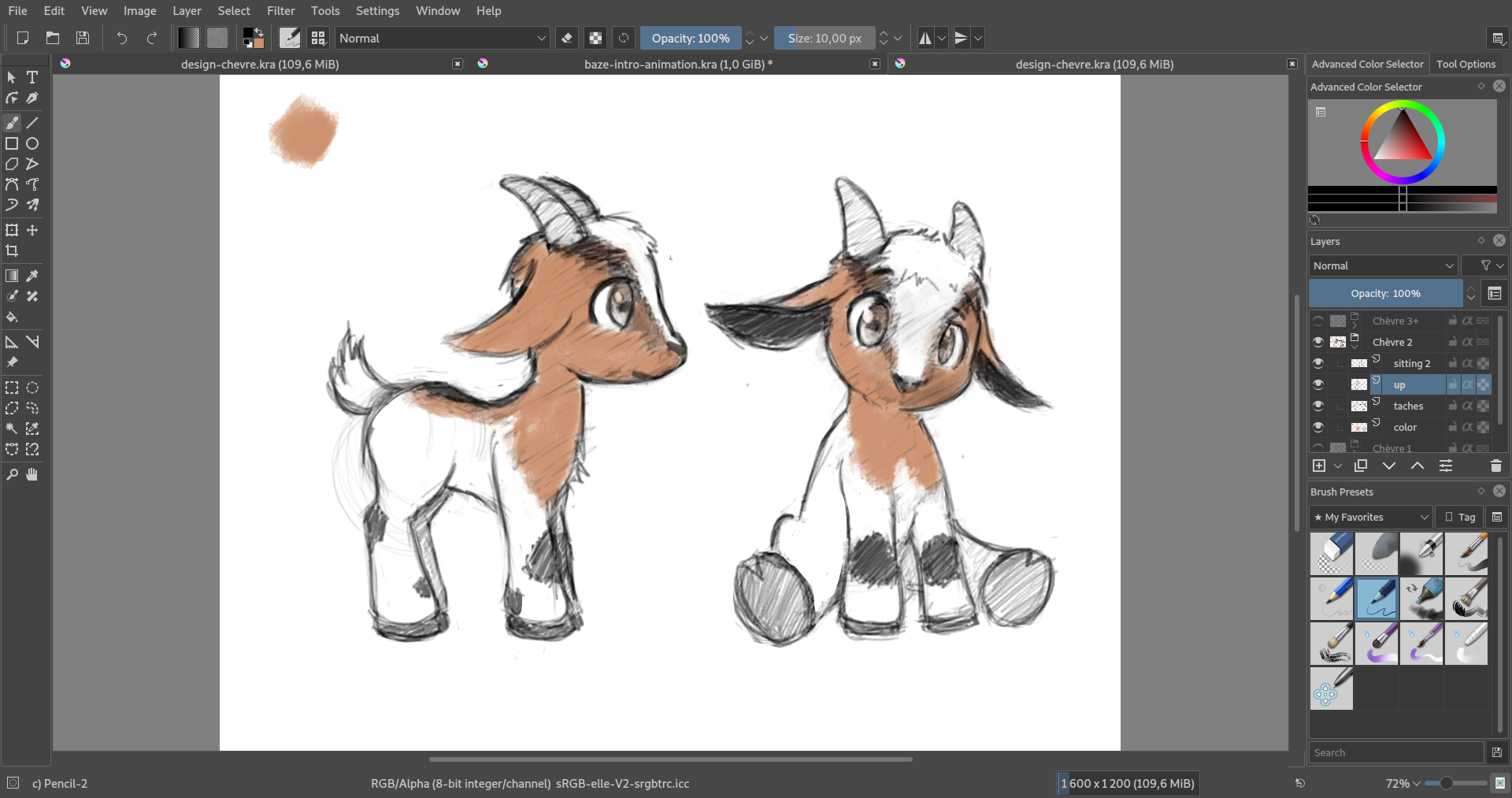

Krita, as an example, released an animation feature that made it the perfect tool for making rough animations. That same year, the software Toonz, which was used by the legendary Studio Ghibli for clean-up and coloring purposes, was released as free software under the name of OpenToonz. Nice: with just these two features and tools released, I had everything I needed to do traditional animations again with my Librem-based digital studio. Below I will go through the workflow of making a simple hand-made 2D animation.

This particular animation was commissioned from me during the summer by a young French film production company called Baze Production. The goal of this project was to make a cute production identity intro in the same style as Pixar or Illumination Studios, but with hand-made animations instead of 3D computer graphics. For this, I used 2 Librem laptops and 2 Wacom tablets.

Designing the character

The first step in this project was to design the character. The requirements I was given were pretty straightforward: the character has to be a goat and it has to be cute.

Based on that, I made a few character designs on Krita and the following one was selected.

Drawing the storyboard

Animating is a lot about observing and understanding how to decompose a movement. Therefore, before diving into the animation, I watched many “cute goats” videos online. I was impressed by how popular those videos are on the internet!

After a few hours of watching cute baby goat videos, I had a rough idea about how they move but I didn’t really know what our goat would do on those “BAZE” letters. The first requirement was that the goat enters the screen from the left, jumps on the letter “B” and sits on it. Then, I put myself in the head of a goat and thought that the “E” was flatter and wider than the “B” so it would be more comfortable to sit there. I could have made the goat appear from the right side of the screen but I thought it would be fun to see it jump across the different letters, especially as the “A” is a tricky one to stand on top of.

As this small animation is a single shot, instead of making a proper storyboard, I ended up drawing a few key frames that would give a first impression of what the animation would be.

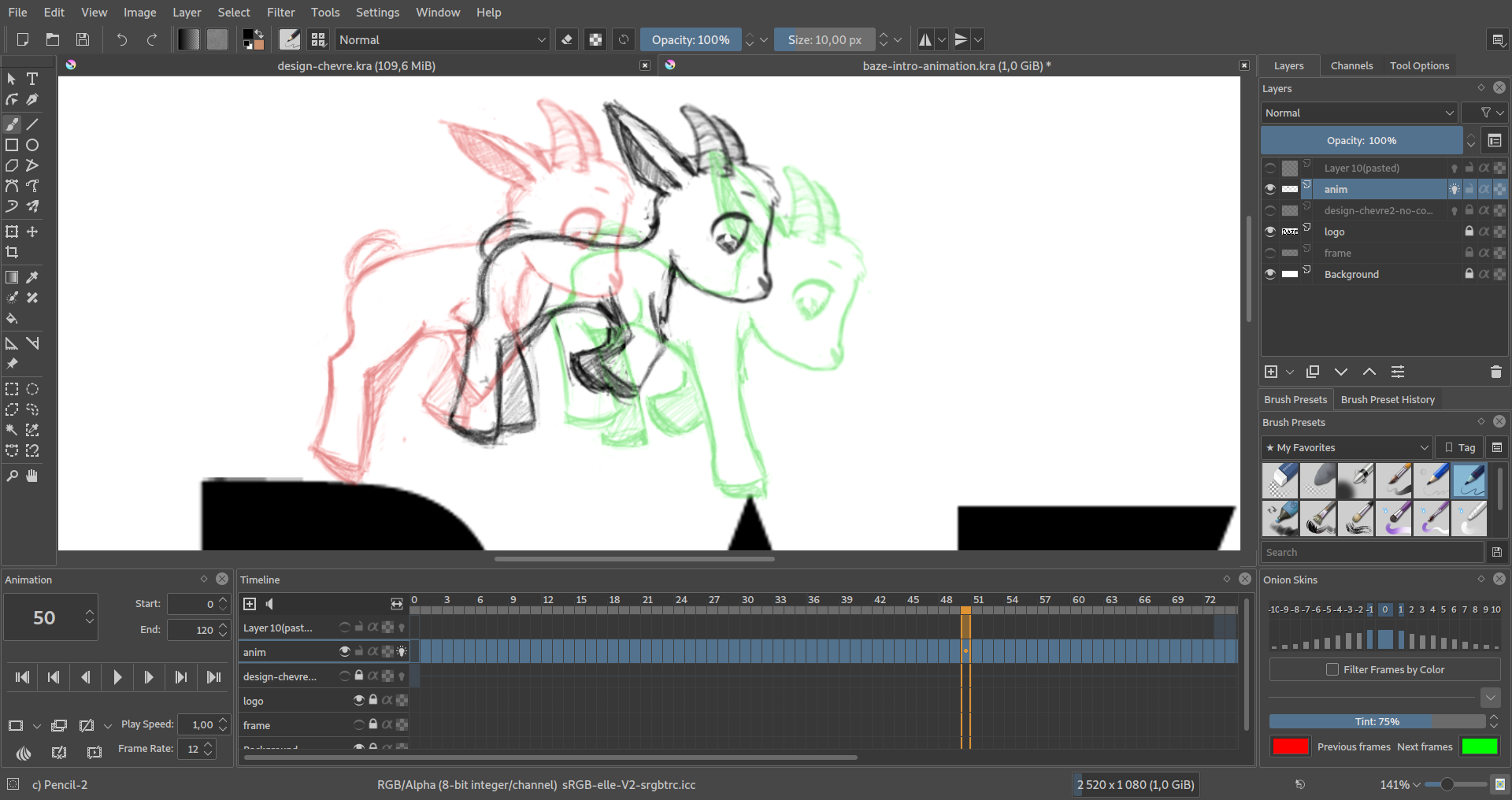

Doing the rough animation

Based on those few key frames, I made a 12 fps rough animation on Krita. This is a pretty long process but it is the one I prefer doing as it feels like giving life to this cute animal. I always think that there is something magical with animations.

When I do sketches or rough animations, I don’t need to be extremely precise with my lines and I prefer using a classic graphics tablet that is standing on my table and where I do not have my hand over the screen. This way, it lets me keep my eye on the entire canvas while drawing.

For making this rough animation, I used my Librem 13 with a simple Wacom Bamboo tablet.

The technique I use for animating is to draw some key frames, dispatch them across the timeline in order to get an idea of the rhythm of the overall movement, then I draw the in-between frames until I get to a smooth result.

I usually animate at 12 frames per second and if I want to do a full speed 24 fps animation, I do a second pass of in-between drawings. For this particular video, I stayed at 12 fps.

Here is what the rough animation looked like:

Clean up and coloring

I personally love the style of hand made rough animations and I would often end an animation project at this point. However, for this one, I was asked to do a clean and colored animation.

For this kind of work, I need to be a lot more precise with my lines, so I used a Wacom Cintiq tablet connected to my second Librem laptop. Both laptops’ data is constantly synchronized using Unison, and they share a single mouse thanks to Barrier. This way, it is easy for me to move from one computer to another. I can even copy and paste from one computer to another. It feels just as if I had 4 screens on a single computer.

OpenToonz is a beautiful and powerful piece of software. I am pretty new to it and I still have a lot to learn and to practice in order to use it correctly. For this project, I made the line art and coloring on the same vector layer, whereas the best practice seems to be to do the line art on a vector layer, in order to have smooth editable lines, and the color on a raster layer, so that it can be applied well and detailed with a brush. I will experiment more with that on a future project.

Here is a video of the final animation.

The post Hand Drawn 2D Animation with PureOS and Librem Laptops appeared first on Purism.

Disassembly Required

HitchBot, a friendly-looking talking robot with a bucket for a body and pool-noodle limbs, first arrived on American soil back in 2015. This “hitchhiking” robot was an experiment by a pair of Canadian researchers who wanted to investigate people’s trust in, and attitude towards, technology. The researchers wanted to see “whether a robot could hitchhike across the country, relying only on the goodwill and help of strangers.” With rudimentary computer vision and a limited vocabulary but no independent means of locomotion, HitchBot was fully dependent on the participation of willing passers-by to get from place to place. Fresh off its successful journey across Canada, where it also picked up a fervent social media following, HitchBot was dropped off in Massachusetts and struck out towards California. But HitchBot never made it to the Golden State. Less than two weeks later, in the good city of Philadelphia, HitchBot was found maimed and battered beyond repair.


The destruction of HitchBot at the hands of unseen Philly assailants was met in some quarters by alarm or hilarity. “The hitchhiking robot @hitchBOT has been destroyed by scumbags in Philly” tweeted Gizmodo, along with a photograph of the dismembered robot. “This is why we can’t have nice hitchhiking robots,” wrote CNN. The creators of the robot were more circumspect, saying “we see this as kind of a random act and one that could have occurred anywhere, on any one of HitchBot’s journeys.” The HitchBot project was only one small part of their robot-human interaction research, which looks at how workplaces might optimally integrate human and robotic labor. “Robots entered our workplace a long time ago,” they write, “as co-workers on manufacturing assembly lines or as robotic workers in hazardous situations.” In size and sophistication, HitchBot was less “co-worker” and more oversized toddler, designed to be “appealing to human behaviors associated with empathy and care.” With its cute haplessness and apparently benign intentions, the “murder” of HitchBot by savage Philadelphians seemed all the more appalling.

The bot-destroyers were onto something. It feels natural to empathize with HitchBot, the innocent bucket-boy whose final Instagram post read “Oh dear, my body was damaged…. I guess sometimes bad things happen to good robots!” We’re told by technologists and consultants that we’ll need to learn to live with robots, accept them as colleagues in the workplace, and welcome them into our homes, and sometimes this future vision comes with a sense of promise: labor-saving robots and android friends. But I’m not so sure we do need to accept the robots, at least not without question. Certainly we needn’t be compelled by the cuteness of the HitchBot or the malevolent gait of the Boston Dynamics biped. Instead, we should learn to see the robot for what it is — someone else’s property, someone else’s tool. And sometimes, it needs to be destroyed.

Those Philly HitchBot-killers have more guts than I do: It feels wrong to beat up a robot. I’ve seen enough Boston Dynamics videos to get the heebie jeebies just thinking about it. Watching the engineer prod Boston Dynamics’ bipedal humanoid robot Atlas with a hockey stick to try and set it off balance sets off all kinds of alarm bells. Dude stop… you’ll piss him off! Sure, the robot gets up again (calmly, implacably), but he might be filing the indignity away in his hard drive brainbox, full of hatred for the human race before he’s even left the workshop. Even verbal abuse seems risky, even if the risk is mostly to my own character. I can’t imagine yelling at Alexa or Siri and calling her a stupid bitch — although I’m sure many do — out of fear that I’ll enjoy it too much, or that I won’t be able to stop. Despite everything I know, it’s difficult to internalize the fact that robots with anthropomorphic qualities or humanlike interactive capabilities don’t have consciousness. Or, if they don’t yet, one imagines that they might soon.

Some of the world’s most influential tech talkers have gone beyond imagining machine consciousness. They’re worried about it, planning for it, or actively courting it. “Hope we’re not just the biological boot loader for digital superintelligence” Elon Musk tweeted back in 2014. “Unfortunately, that is increasingly probable.” Famously, Musk has repeatedly identified general artificial intelligence — that is, AI with the capacity to understand or perform any intellectual task a human can — as a threat to the future of humanity. “We’re headed toward a situation where AI is vastly smarter than humans and I think that time frame is less than five years from now,” he told Maureen Dowd this July. Even Stephen Hawking, a man far less prone to histrionics, warned that general AI could spell the end of the human race. And while AI and robots aren’t synonymous, decades of cinema history, from Metropolis to The Matrix, have conjured a powerful sense that artificially intelligent robots would pose a potent threat. Harass too many robots with a hockey stick and you’ll be spending the rest of your existence in a vat of pink goo, your vital fluids sucked out by wet cables to provide juice for the evil robot overlords. Back in our own pre-general AI reality, we’re left with the sense that it’s prudent to treat the robots in our lives with respect, because they’ll soon be our peers, and we better get used to it.

As robots and digital assistants become more prevalent in workplaces and homes, they ask more of us. In Amazon’s fulfillment center warehouses, pickers and packers interact carefully with robotic shelving units, separated by thin fences or floor markings but still in danger of injury from the moving parts and demanding pace of work. As small delivery robots begin to patrol the streets of college towns and suburbs, humans are required to share the sidewalk, stepping out of their way or occasionally aiding the robots’ passage during the last yards of the delivery process. In order to interact with us more “naturally” and efficiently, many of these robots are equipped with human characteristics, made relatable with big decal eyes or bipedal movement or a velvety voice that encourages those who encounter them to empathize with them as fellow beings. In other instances, robots are given anthropomorphic characteristics so they can survive on our turf. The spider-dog appearance and motion of Boston Dynamics’ Spot ostensibly helps it navigate complex terrain and traverse uneven ground, so it can be useful on building sites or in theaters of war. When I jogged through Golden Gate Park last week and saw a box-fresh Spot out for a walk with its wealthy owners, I was immediately compelled and somewhat creeped out by its uncanny gait. Its demon-dog movement, and the sense that it’s very much alive, is undoubtedly central to its appeal. In either case, the anthropomorphic design of this kind of robot provides a framework for interaction, even prompting us to treat them with empathy, as fellow beings with shared goals.

Marty at Giant grocery stores

Our almost inescapable tendency to anthropomorphize robots is helpful to the companies and technologists invested in increasing automation and introducing more robots into homes, workplaces, and public spaces. Robotics companies encourage this framing, describing their robots as potential colleagues or friends rather than machines or tools. Moxi, the “socially intelligent” hospital assistant robot which is essentially an articulated arm on wheels, is pitched as a “valuable team member” with “social intelligence” and an expressive “face.” Marty, the surveillance robot that patrols Giant Food supermarket aisles, was given absolutely enormous googly eyes to make it look “a bit more like a human” despite being more or less just a massive rolling rectangle. Reports and white papers on the future of work eagerly discuss the rise of “co-bots,” robots that work alongside humans, often in service roles different than the industrial applications we’re used to. The anthropomorphic cues (googly eyes, humanoid forms) assist us in learning how to relate to these new robot buddies — useful training in human-robot cooperation for when the robots do gain autonomy or even consciousness.

For now, the robots aren’t anywhere near sentient, and the promise of “general AI” is just a placeholder term for an as-yet unrealized (and quite possibly unrealizable) concept. For something that doesn’t really exist, however, it holds a lot of power as an imaginative framework for reorganizing and reconceptualizing labor — and not for the benefit of the laborer, as if that needed to be said. Of course, narrow AI applications, like machine learning and the infrastructures that support it, are widespread and increasingly enmeshed in our economy. This “actually-existing AI-capitalism,” as Nick Dyer-Witheford and his co-authors Atle Mikkola Kjøsen and James Steinhoff call it in their book Inhuman Power, continues to extend its reach into more and more spheres of work and life. While these systems still require plentiful human labor, “AI” is the magic phrase that lets us accept or ignore the hidden labor of thousands of poorly paid and precarious global workers — it is the mystifying curtain behind which all manner of non-automated horrors can be hidden. The idea of the “robot teammate” functions in a similar way, putting a friendly surface between the customer or worker or user and the underlying function of the technology. The robot’s friendliness or cuteness is something of a Trojan horse — an appealing exterior that convinces us to open the castle gates, while a phalanx of other extractive or coercive functions hides inside.

AI advocates and technology evangelists sometimes frame concern or distrust of automation and robotics as a fear based in ignorance. Speaking about a Chapman University study which found that Americans rate their fear of robots higher than their fear of death, co-author Dr. Christopher Bader stated that “people tend to express the highest level of fear for things they’re dependent on but that they don’t have any control over,” especially when, as with complex technology, people “don’t have any idea how these things actually work.” Other researchers cite the human-like qualities of some robots as the thing that provokes fear, with their almost-humanness slipping into the uncanny valley where recognition and repulsion collide. Of course, not all fear is due to ignorance, and the Cassandras that sound the alarm call around robot labor and autonomous machines are often more clear-eyed than the professional forecasters and tech evangelists.


The Luddites, currently enjoying a moment of renewed attention after a century of derision and misunderstanding, were clear-eyed about the role of industrial machinery, its potential to undermine worker livelihoods and, indeed, a way of life. In his book Progress Without People, historian David F Noble emphasizes that the Luddites didn’t hate machines out of hatred or ignorance, writing that “they had nothing against machinery, but they had no undue respect for it either.” Plenty of the era’s machine breaking was, as Eric Hobsbawm famously described it, an act of “collective action by riot” — destruction intended to pressure employers into granting labor or wage concessions. Other workers wrecked looms and stocking frames because the new automated textile equipment was poised to dismantle their craft-based trade or undermine labor practices. In any case, the machine breakers recognized the machinery as an expression of the exploitative relation between them and their bosses, a threat to be dealt with by any means necessary.

Unlike so many of today’s technologists who are bewitched by the Manifest Destiny of abstract technological progress, the Luddites and their fellow saboteurs were able to, as Noble writes, “perceive the changes in the present tense for what they were, not some inevitable unfolding of destiny but rather the political creation of a system of domination that entailed their undoing.” Luddism predates the kind of technological determinism we’re drowning in today, from both the liberal technologists and the “fully-automated luxury communism” leftists. Looms and spinning jennies weren’t viewed as a necessary gateway to a potential future of helpful androids and smart objects. A similar present-tense analysis can be applied to the technologies of today. When we relate to a robot as an animate peer to be loved or feared, we’re letting ourselves be compelled by a vision ginned up for us by goofy futurists. For now, if robots have a consciousness or an agency, it’s the consciousness of the company that owns them or created them.

The fact is, robots aren’t your friends. They’re patrolling supermarket aisles to watch for shoplifting and mis-shelved items, or they’re talking out of both sides of their digital mouths, responding to your barked requests for the weather report or the population of Mongolia and then turning around and sharing your data and preferences and vocal affect with their masters at Google or Amazon. By putting anthropomorphic robots — too cute to harm, or too scary to mess with — between us and themselves, bosses and corporations are doing what they’ve always done: protecting their property, creating fealty and compliance through the use of proxies that attract loyalty and deflect critique. This is how we reach a moment where armed civilians stand sentry outside a Target to protect it from vandalism and looting, and why some people react to a smashed-up Whole Foods as though it were an attack on their own best friend — duped into defending someone else’s property over human lives.

In the zombie film, there comes a moment in the middle of the inevitable slaughter where the protagonist finds themself face to face with what appears to be a family member or lover, but is most likely infected, and thus a zombie. The moment is agonizing. As the figure inexorably approaches, our hero — armed with a gun, a bat, or some improvised weapon — has only a few seconds to ascertain whether the lurching body is friend or foe, and what to do about it. It’s almost always a zombie. This doesn’t make the choice any easier. To kill something that appears as your husband, your child, seems impossible. Against nature. Still, it is the job of our hero to recognize that what appears as human is in fact only a skin-suit for the virus or parasite or alien agency that now animates the body. Not your friend: An object to be destroyed.

This is the act of recognition now required of us all. The robot that enters our workplace or strolls our streets with big eyes and a humanlike gait appears as a friend, or at least as friendly. But beneath the anthropomorphic wrapper, and behind the technofuturist narratives of sentient AI and singularities, the robot is more zombie than peer. As the filmic zombie is animated by the parasite or virus (or by any number of metaphors), the robot-zombie is animated by the impulses of its creator — that is, by the imperatives of capital. Sure, we could one day have a lovely communist robot. But as long as our current economic and social arrangements prevail, the robots around us will mostly exist not to ease our burden as workers, but to increase the profits of our bosses. For our own sake, we need to inure ourselves to the robot’s cuteness or relatability. Like the zombie, we need to recognize it, diagnose it, and — if necessary, if it poses a threat — be prepared to deal with it in the same way the protagonist must dispatch the automaton that approaches in the skin of her friend.

Even as I write this, the nagging feeling remains that I myself might be a monster, or at least subconsciously genocidal. Can I say that the robot that appears as my uncanny simulacrum should be pegged as zombie-like, othered, destroyed? Denying the personhood of another, especially when that other appears different or unfamiliar, is generally the domain of the racist, the xenophobe, and the fascist state. Indeed, in many robot narratives, the robot is either literally or metaphorically a slave, and the film or story proceeds as some kind of liberation narrative, in which the robot is eventually freed, or frees itself, from enslavement. Karel Čapek’s 1920 play R.U.R. (Rossum’s Universal Robots), which introduced the word “robot” (from the Czech word “robota” meaning “forced labor”) to science fiction literature and the English language in general, also provided the archetype for robot-liberation stories. In the play, synthetic humanoids toil to produce goods and services for their human masters, but eventually become so advanced and dissatisfied that they revolt, burning the factories and leading to the extinction of the human race. Versions of this rebellion story play out time and again, from Westworld to Blade Runner to Ex Machina, giving us a lens through which to imagine finding freedom from toil, and a framework for allegorizing liberation struggles, from slave rebellions and Black freedom struggles to the women’s lib movement.

Empathy for robotkind is further encouraged by the musings of tech visionaries, and the pop-science opinion writers that posit the need to consider “robot rights” as a corollary for legal human rights. “Once our machines acquire a base set of human-like capacities,” writes George Dvorsky for Gizmodo, “it will be incumbent upon us to look upon them as social equals, and not just pieces of property.” His phrasing invokes previous arguments for the abolition of slavery or the enshrinement of universal human rights, and brings the “robot rights” question into the same frame of reference as discussions of the “rights” of other human or near-human beings. If we are to argue that it’s cruel to confine Tilikum the orca (let alone a human) to a too-small pen and lifelong enslavement, then on what grounds can we argue that an AI or robot with demonstrable cognitive “abilities” should be denied the same freedoms?

This logic must eventually be rejected. I appreciate a robot liberation narrative insofar as it provides a format for thinking through emancipatory potential. But as a political project, “robot rights” have more utility for the oppressors than for the oppressed. The robot is not conscious, and does not preexist its creation as a tool (the zombie was never a friend). The robot we encounter today is a machine. Its anthropomorphic qualities are a wrapper placed around it in order to guide our behavior towards it, or to enable it to interact with the human world. Any sense that the robot could be a dehumanized other is based on a speculative understanding of not-yet-extant general artificial intelligence, and unlike Elon I prefer to base my ethics on current material conditions.

Instead, what would it look like to relate to today’s machines as the 19th century weavers did, and make decisions about technology in the present? To look past the false promise of the future, and straight at what the robot embodies now, who it serves, and how it works for or against us.

If there is any empathy to be had for the robot, it’s not for the robot as a fellow consciousness, but as precious matter. Robots don’t have memories — at least, not the kind that would help them pass the Voight-Kampff test — but they do have a past: the biological past of ancient algae turning to sediment and then to petroleum and into plastic. The geological histories of the iron ore mined and smelted and used for moving parts. The labor poured into the physical components and the programs that run the robot’s operations. These histories are not to be taken lightly.

The robot’s materiality also offers us a crux point around which to identify fellow workers, from those mining the minerals that become the robot to those working “with” the robot on the factory floor. Throughout the supply chain, through the robot’s lifespan, a bevy of humans are required to shepherd, assist, and maintain it. Instead of throwing our empathic lot in with the robot, what would it look like to find each other on the factory floor, to choose solidarity and build empathy and engagement and care for our collective selves? In this version of robot-human interaction, we might learn to look past the friendly veneer, and identify the robot as what it is — a tool — asking whether its existence serves us, and what we might do with it. To decide together what is needed, and respond to technology “in the present tense,” as David Noble says, “not in order to abandon the future but to make it possible.” Instead of emancipating the “living” robot, perhaps the robot could be repurposed in order to emancipate us, the living.

Tracing COVID-19 Data: Open Science and Open Data Standards in Canada

Article written by: Amanda Hunter & Tracey P. Lauriault

Introduction

Since early June, the Tracing COVID-19 Data project team has been examining intersectional approaches to the collection, interpretation, and reuse of COVID-19 data. Our most recent post about Open Science innovation during the pandemic highlighted the critical role Open Science (OS) plays in the rapid response to COVID-19, ensuring that data and research outputs are more widely shared, accessible, and reusable for all. That post also chronicled the importance of the principles and standards that support OS such as FAIR principles, open-by-default, and the open data charter. We also emphasized the significance of Indigenous data sovereignty and the value of integrating CARE and OCAP principles into data management and governance.

As a continuation, this post analyzes Canada’s ongoing commitment to adopting OS standards and principles. Canada has a government directive for implementing open science as stated in Canada’s 2018-2020 National Action Plan on Open Government, Roadmap for Open Science, Directive on Open Government, and the Model Policy on Scientific Integrity. These are commitments and guidelines for the adoption of open science standards and part of open data and open government at the federal level.

Here we assess whether or not official provincial, territorial and federal public health reporting adheres to open science and open data standards when reporting COVID-19 data. We will address the following questions:

- Are COVID-19 data open in Canada?

- Under what licenses are COVID-19 data made available?

- Are there active open data initiatives at all levels of government? And are they publishing COVID-19 Data? (Federal, Provincial, Territorial)

We draw conclusions from our observations of the current state of open data in Canada, particularly as it relates to COVID-19 data. We will identify areas of opportunity and make concrete recommendations to facilitate open data and open science during the pandemic. It should be noted that we are citing federal mandates: provincial and territorial governments that do not have open data and open government mandates are not obliged to adhere to federal open data/open government directives. We would argue, however, that it would be largely beneficial if these levels of government considered adopting an open data framework, similar to the directives aimed at the federal level, especially during the COVID-19 pandemic. Some jurisdictions have their own frameworks and we will discuss those as well.

Methodology

To support this analysis we have developed a framework which incorporates FAIR principles, OCAP principles, CARE principles, and the open data charter. Using this framework, we will assess Canada’s reporting process to determine which standards are being used and which – if any – should be considered.

To collect data we visited Canada’s official COVID-19 reporting sites (found here) and used the walkthrough method to assess existing data dissemination practices. We located license information for each webpage/dashboard and recorded this information, as well as supplementary information including disclaimers, terms of use, and copyright information (see the observations here). Importantly, we made note of which province/territory has open data/open government portals, checking to see if the COVID-19 data were made available via these portals. This approach informed the determination of the following:

- Whether or not the data were open

- The License under which the data are available (which determines how one is allowed to access/reuse the data)

- Whether or not the respective province or territory has an open data mandate

- Whether or not the respective province or territory has an open data portal

For the purposes of this blog post we focused on the license under which the COVID-19 data are disseminated, whether or not there is a copyright statement, and, whether or not the data are open. Future posts will assess other aspects of Canada’s official reporting sites using this same framework.
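As a rough illustration of how the four determinations above could be recorded per reporting site, here is a minimal sketch in Python. The `SiteObservation` class, its field names, and the placeholder row are assumptions made for illustration only; they are not the project's actual recording format and the row is not one of its observations.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SiteObservation:
    """One row of walkthrough notes for an official reporting site (hypothetical schema)."""
    jurisdiction: str
    open_licence: bool          # data published under an open data / open government licence
    copyright_statement: bool   # site carries an explicit copyright statement
    has_open_data_mandate: bool
    has_open_data_portal: bool

    @property
    def is_open(self) -> bool:
        # In this sketch, data count as open only when an open licence applies.
        return self.open_licence


def not_open(observations: List[SiteObservation]) -> List[str]:
    """Return the jurisdictions whose reporting-site data are not openly licensed."""
    return [o.jurisdiction for o in observations if not o.is_open]


# Placeholder row, invented for illustration (not a real observation).
example = SiteObservation(
    jurisdiction="Example Province",
    open_licence=False,
    copyright_statement=True,
    has_open_data_mandate=True,
    has_open_data_portal=True,
)
print(not_open([example]))
```

Keeping the licence determination separate from the portal and mandate fields mirrors the distinction drawn in this post: a jurisdiction can run an open data portal while its public health site still publishes under copyright.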

The Framework

There are a number of key standards which inform our assessment.

Open Science

Open Science (OS) is a movement, practice and policy toward transparent, accessible, reliable, trusted and reproducible science. This is achieved largely by sharing the processes of research and data collection, and often the data, to make research results accessible, standardized, and reusable for everyone – and of course reproducible. Here we are discussing the scientific data disseminated by official public health reporting agencies.

The Federal government outlines Canada’s commitment to open science with Canada’s 2018-2020 National Action Plan on Open Government, Roadmap for Open Science, Directive on Open Government, and the Model Policy on Scientific Integrity.

Our assessment will consider how well these commitments are reflected in the COVID-19 data shared by federal and provincial public health sources. We are looking for consistent adherence to open government/open data commitments.

Open Data Charter

The Open Data Charter (ODC) principles were jointly established by governments, civil society, and experts around the world to develop a globally agreed-upon set of standards for publishing data. The ODC principles include:

- open by default

- timely and comprehensive

- accessible and usable

- comparable and interoperable

- for improved governance & citizen engagement

- for inclusive development and innovation

Here, we are primarily looking for data to be open by default (1) and accessible and usable (3). This is in line with the commitment by the Government of Canada to the application of open by default specifications whenever possible; namely that data should be open-by-default and free of charge.

FAIR principles

FAIR principles are a standards approach which support the application of open science by making data Findable, Accessible, Interoperable, and Reusable. The goal of the FAIR principles is to maximize the scientific value of research outputs (Wilkinson et al., 2016).

For the purposes of our current analysis we are focused on the reusability of the data available from Canada’s official COVID-19 reporting sites. As per the RDA standards, reusable data should “have clear usage licenses and provide accurate information on provenance”. Thus, we are looking for Canada’s official COVID-19 data to provide clear usage licenses which allow unrestricted reuse for all.

CARE & OCAP Principles

While the FAIR principles specify guidelines for general data sharing practices, they do not address specific issues of colonial power dynamics and the Indigenous right to data governance. The CARE principles of Indigenous Data Governance do so by extending the FAIR principles. The principles are:

- collective benefit,

- authority to control,

- responsibility, and

- ethics.

Together these principles suggest that the best Indigenous data practices should be grounded in Indigenous worldviews and recognize the power of data to advance Indigenous rights and interests, and that these interests will be specific to each community but are general enough to be universal.

Similarly, the OCAP principles are a set of standards that govern best practices for Indigenous data collection, protection, use, and sharing. Developed by the First Nations Information Governance Centre, the OCAP principles assert the right of Indigenous people to exercise Ownership, Control, Access, and Possession of their own data. Taken together, these principles help maximize benefit to the community and minimize harm.

Though the federal government does not mandate adherence to CARE or OCAP principles, Canada has some commitment to fostering Indigenous data governance. Therefore we are hoping that federal and provincial institutions that produce and share data encourage Indigenous self-governance and collaboration in data collection and handling strategies.

Findings

Each of the existing open data sites was searched on Oct. 9 to assess whether it disseminates COVID-19 data. Detailed results, along with a list of official COVID-19 provincial, territorial, and federal websites (including links to their data and information copyright, terms of use and disclaimers) can be found in our Official COVID-19 websites post. Links to the respective open government and open data initiatives – including policies, directives, and open data licences – can also be found there.

We made four main observations, which will be interpreted in the next section:

- All provincial and territorial governments, as well as the federal government, publicly publish up-to-date COVID-19 data.

- None of the official public provincial, territorial, or federal governments’ health sites publish COVID-19 data under an open data licence. Each claims copyright with the exception of Nunavut, which has no statements. None are open by default.

- ALL BUT Saskatchewan, Nunavut and the Northwest Territories HAVE open government and open data initiatives. Manitoba has an open government initiative but not with an open data licence.

- ONLY British Columbia and Ontario, as well as the Federal Government, include COVID-19 data in their open data portals / catalogues. Quebec republishes 4 COVID-19 related datasets submitted by the cities of Montreal and Sherbrooke, and Ontario has 7 open COVID-19 datasets (plus an additional 22 supporting datasets in the COVID-19 group on the catalogue). We have not counted those in the BC portal.

Discussion

The following discusses our findings by returning to the research questions stated above:

Are COVID-19 data open in Canada?

In Canada, a work is protected by copyright when it is created and all data produced by the Federal Government falls under crown copyright; this is also the case for provincial and territorial governments (Government of Canada, 2020). Data created by these governments are considered to be open data if they are published with an open data or open government license. Under an open license the user is free “to copy, modify, publish, translate, adapt, distribute or otherwise use the Information in any medium, mode or format for any lawful purpose” (Government of Canada, 2020). Data disseminated without an open licence are governed by Crown Copyright or other types of copyright as listed here, which has specific conditions and limitations under which the information can be used, modified, published, or distributed.

With this in mind, it appears that none of the official public health sites of the provincial, territorial, or federal governments publish COVID-19 data under an open data licence, even though the data are often accessible, public, machine readable and can be downloaded.

All of the provinces and territories – with the exception of Northwest Territories, Saskatchewan, and Nunavut – have open data portals. Manitoba has an open government portal, but there is no open data license.

Where there are open data portals, only British Columbia, Ontario, and the Federal Government re-publish and disseminate the COVID-19 data via these portals (see images below). Quebec republishes 4 COVID-19 related datasets submitted by the cities of Montreal and Sherbrooke, and Ontario has 7 open COVID-19 datasets (plus an additional 22 supporting datasets in the COVID-19 group on the catalogue). We have not counted those in the BC portal.

The Public Health Agency of Canada (PHAC) dashboard and website with COVID-19 data are not published under an open licence and would therefore fall under the Copyright Act – which is not open.

A screenshot of the British Columbia open data portal which republishes COVID-19 data. (Government of British Columbia, Data Catalogue). Captured October 12th, 2020.

A screenshot of the Ontario open data catalogue which republishes COVID-19 data. (Government of Ontario, Data Catalogue). Captured October 12th, 2020.

Under what licenses are the data made available?

All of the reporting sites analyzed above (with two possible exceptions, stated below) are subject to Crown Copyright, which means that a user must obtain permission from the copyright holder (the Crown) to adapt, revise, reproduce, or translate the data made available on its website.

Therefore users should assume that COVID-19 data published by all provinces and territories are protected by Copyright. All provinces and territories, with the exception of Nunavut and Saskatchewan, explicitly state their Crown Copyright protection.

Are there active open data initiatives at all levels of government? (Federal, Provincial, Territorial)

All provinces and territories – with the exception of Saskatchewan, Northwest Territories, and Nunavut – have open data and/or open government initiatives. Alberta, British Columbia, Manitoba, New Brunswick, Newfoundland and Labrador, Nova Scotia, Ontario, PEI, and Quebec have open data portals. As mentioned previously, Manitoba has an open government and open data portal, but no open data license.

The governments of Alberta, Northwest Territories, Nova Scotia, Ontario, PEI and Quebec have active open data policies. All provincial and territorial governments (with the exception of Northwest Territories, Saskatchewan, Manitoba and Nunavut) make their open data available under an open government license.

At the federal level, the Government of Canada has an Open Data portal and adheres to an open government license, and some COVID-19 data are re-disseminated.

In conclusion, there are various active open data initiatives across Canada; however, they are in different stages of development across provinces and territories.

Final Remarks & Recommendations

Final Remarks

Open science, open government, and open data are initiatives increasingly adopted by the Government of Canada, but not necessarily evenly across all departments and agencies. For this reason we decided to look at the COVID-19 reporting agencies at provincial, territorial, and federal levels to determine where initiatives of openness are being adopted and where there could be improvement during the pandemic. We found that in most cases the licensing information is easy to locate, though not reflective of open data standards and licensing. COVID-19 data publicly disseminated by the official reporting agencies discussed here are not open by default or considered adequately reusable according to FAIR principles (and “reusable” standards). COVID-19 data dissemination in Canada, at the time of analysis, is incongruous with Canada’s Open Science directives.

We did not address OCAP or CARE principles in this analysis because the data we analyzed do not include categorizations of Aboriginal identity, race or ethnicity, so none of the public health reporting sites display data explicitly about Indigenous peoples. This precludes our ability to assess the collection and handling of data about or created by Indigenous peoples, or to analyze if CARE and OCAP principles were followed. That said, there are likely other sources of Indigenous data that were not assessed here.

Recommendations

Based on these findings we have developed four primary recommendations:

- Provincial and territorial public health reporting agencies can adopt open science initiatives in their respective jurisdictions. This can be done by adopting and/or modifying the open government and open science standards which are applicable to the federal government.

- Many of the provinces and territories publish their data and information on their official websites under Crown Copyright, including COVID-19 data, even when several of these institutions also have Open Data portals and/or programs. The COVID-19 data should also be re-disseminated via provincial, territorial, and federal governments’ open data portals to maximize the benefit of the data for scientific innovation.

- More broadly, COVID-19 data (at every level of government) should be open by default and made reusable under open data licensing. This information should be clearly indicated so that it is clear who can use the data and under what conditions. Doing so may also facilitate greater accessibility, transparency, and reuse of the data.

- Finally, there are some interoperability issues which make it difficult for the user to ascertain whether or not the data are reusable. Each public health organization seems to have different terms of use associated with their data, and tracking down the information across websites is difficult. Further action to ensure interoperability among partners and platforms would help support the adoption of open science standards at all levels of government. This is something we will look at in a future post when we assess the interoperability of Canada’s official COVID-19 reporting sites and data.

13

Enjoying a cuppa earlier today outside the Barn at Hackescher Markt in Berlin

⏱ 60 Minutes goes inside the Lincoln Project’s campaign against Trump. A fascinating look into the group of long-time Republican political operatives that used to support John McCain and George W. Bush who now are campaigning to defeat Trump at any cost.

⛅ Did you know that you can run a carbon aware Kubernetes cluster? “Carbon intensity data for electrical grids around the world is available through APIs like WattTime. They provide a Marginal Operating Emissions Rate (MOER) value that represents the pounds of carbon emitted to create a megawatt of energy — the lower the MOER, the cleaner the energy.”

🏴☠️ No. Microsoft is not rebasing Windows to Linux, says Hayden Barnes. “Neither Windows nor Ubuntu are going anywhere. They are just going to keep getting better through open source. Each will play to their relative strengths. Just now with more open source collaboration than imaginable before.” After all, if two kernels can run so well together on the same system, why on Earth would you go through the hell of trying to port all the APIs in order to just run one?

📆 This morning, Katerina let me know that Tuesday the 13th in Greece is like Friday the 13th for Americans and Brits. Tuesday in general is thought to be dominated by Ares, the god of war, and the fall of Constantinople on Tuesday April 13th, 1204 was considered a blow to Hellenism. Topping things off for Tuesday, Constantinople fell to the Ottomans on Tuesday May 29th, 1453. Yes, it’s the 29th, but if you add 1+4+5+3, you get 13. Anyway, now you know. You’re welcome.

📲 Oh hello iPhone 12 Pro Max. Better cameras with bigger sensors, LIDAR, and magnetic snap on accessories. Yes, please.
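For the carbon-aware Kubernetes item above, the core decision is simple enough to sketch: given a MOER reading per candidate region, prefer the lowest one. This is only a rough Python sketch. The region names and readings are made up, and nothing here calls the WattTime API or Kubernetes; how the readings are fetched and how workloads are actually moved is left out.

```python
from typing import Mapping


def cleanest_region(moer_by_region: Mapping[str, float]) -> str:
    """Return the region with the lowest MOER (lower means cleaner energy)."""
    if not moer_by_region:
        raise ValueError("no MOER readings supplied")
    return min(moer_by_region, key=lambda region: moer_by_region[region])


# Hypothetical readings, invented for illustration only.
readings = {"region-a": 950.0, "region-b": 420.0, "region-c": 610.0}
print(cleanest_region(readings))
```

With these made-up numbers the sketch picks `region-b`, the entry with the smallest reading.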

Wittgenstein’s Revenge

This article is really terse, which is too bad, because it obscures the richness of the thinking here. Here's the gist: Wittgenstein's Tractatus was embraced by logical positivists because it described a system of knowledge constructed logically from sense data, the semantics of which was assured by a 'picture theory' of cognition. Though Quine is widely credited with dismantling that edifice, it was Wittgenstein's own thinking (in the posthumous Philosophical Investigations and elsewhere) that laid out the core objections, many of which are restated in this post. Context, trust, persuasion - all these are quite rightly brought to bear against a theory based on 'facts'. So much the worse for advocates of blockchain, web of data, and distributed consensus, right? "Even universal agreement on the facts often achieves nothing for public discourse."

The Best Smart Home Devices for Apple HomeKit and Siri

If you want your smart home to work as an integrated system rather than as a bunch of separate gadgets and separate apps, and your primary mobile device is an iPhone, you might want to try Apple HomeKit. It’s an easy-to-use system for setting up and controlling a smart home. All Apple-branded devices with a screen and HomePod speakers support HomeKit, as do a wide range of popular smart devices.

Apple’s October 13, 2020 Keynote: By the Numbers

As usual, Apple sprinkled facts, figures, and statistics throughout the keynote today. Here are highlights of some of those metrics from the event, which was held online from the Steve Jobs Theater in Cupertino, California.

HomePod mini

- The HomePod mini is just 3.3 inches tall, 3.8 inches wide, and weighs .76 pounds (345 grams)

- The mini analyzes your music to adjust playback dynamics 180 times/second

- The tiny smart speaker features 360-degree sound using a custom acoustic waveguide

- HomePod mini uses 99% recycled rare earth elements

- The neodymium magnet in the speaker driver is made of 100% recycled rare earth elements

- The mesh fabric of the mini is made of 90% recycled plastic

iPhone 12 Family of Smartphones

The A14 Bionic

- The new iPhone 12 lineup is powered by the A14 Bionic and features 5G wireless connectivity

- The A14 Bionic’s CPU and GPU are built on a 5 nanometer process and are up to 50% faster than competing smartphone chips

- The A14 also features a 16-core Neural Engine that increases its performance 80% over the previous generation so it can process up to 11 trillion operations per second

Weight and Storage

- The mini weighs 4.76 ounces (135 grams) and the 12, 5.78 ounces (164 grams)

- The iPhone 12 Pro weighs 6.66 ounces (189 grams) and the Pro Max, 8.06 ounces (228 grams)

- The iPhone 12 and 12 mini come in 64, 128, and 256GB models

- The Pros are available with 128, 256, and 512GB of storage

The iPhone 12 Screens

- The iPhone 12 mini has a 5.4” screen, the iPhone 12 and 12 Pro, 6.1” screens, and the iPhone 12 Pro Max a 6.7” screen

- The new iPhones’ screens use a Ceramic Shield front cover that performs up to 4x better when dropped

- The iPhone 12 family all have a 2 million to 1 contrast ratio and a water resistance rating of IP68, meaning they can withstand submersion for up to 30 minutes in 6 meters of water

- Screen resolution and density:

- iPhone 12 mini: 2340‑by‑1080-pixel resolution at 476 ppi

- iPhone 12: 2532‑by‑1170-pixel resolution at 460 ppi

- iPhone 12 Pro: 2532‑by‑1170-pixel resolution at 460 ppi

- iPhone 12 Pro Max: 2778‑by‑1284-pixel resolution at 458 ppi

- The iPhone 12 Pro Max has nearly 3.6 million pixels

- Screen brightness

- iPhone 12 and 12 mini: 625 nits max brightness in typical conditions; 1200 nits max brightness when playing HDR video

- iPhone 12 Pro and Pro Max: 800 nits max brightness in typical conditions; 1200 nits max brightness when playing HDR video
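The resolution and density figures above can be cross-checked with a little arithmetic: total pixels are width times height, and the diagonal in inches is the pixel diagonal divided by ppi. Here is a small Python sketch using only the numbers listed above. The helper names are mine, and the rounding to one decimal place is an assumption about how the screen sizes are quoted.

```python
import math

# (width_px, height_px, ppi) as listed in the screen specs above
SCREENS = {
    "iPhone 12 mini": (2340, 1080, 476),
    "iPhone 12": (2532, 1170, 460),
    "iPhone 12 Pro": (2532, 1170, 460),
    "iPhone 12 Pro Max": (2778, 1284, 458),
}


def pixel_count(width_px: int, height_px: int) -> int:
    """Total number of pixels on the panel."""
    return width_px * height_px


def diagonal_inches(width_px: int, height_px: int, ppi: int) -> float:
    """Screen diagonal in inches: pixel diagonal divided by pixels per inch."""
    return math.hypot(width_px, height_px) / ppi


for name, (w, h, ppi) in SCREENS.items():
    print(f"{name}: {pixel_count(w, h):,} pixels, "
          f"{diagonal_inches(w, h, ppi):.1f} inch diagonal")
```

The computed diagonals round to the quoted 5.4, 6.1, and 6.7 inch screen sizes, and the Pro Max works out to 3,566,952 pixels.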

Cameras

- All models feature a 12MP dual camera system but the Pros add a telephoto lens

- The iPhone 12 and 12 mini feature a Wide camera lens with an ƒ/1.6 aperture and a 5-element lens

- The iPhone 12 Pro has a Wide camera with an ƒ/1.6 aperture and 7-element lens; the Pro Max adds a 47% larger sensor with 1.7μm pixels, which means an 87% improvement in low-light performance

- The iPhone 12 and 12 mini camera system supports 2x optical zoom out and up to 5x digital zoom

- The iPhone 12 Pro has a 2x optical zoom in and 2x optical zoom out for a total 4x optical zoom range and a digital zoom of up to 10x

- On the Pro Max the optical zoom in is 2.5x, optical zoom out is 2x for a total optical zoom range of 5x. The Pro Max has a digital zoom of up to 12x

- The iPhone 12 and 12 mini’s Ultra-Wide camera features a 7-element lens with a 120-degree field of view and an ƒ/2.4 aperture providing 27% more light for the camera’s sensor, resulting in better low-light photos

- The Pro and Pro Max feature a Telephoto camera, with an ƒ/2.0 aperture on the iPhone 12 Pro and an ƒ/2.2 aperture and 65mm focal length on the iPhone 12 Pro Max

- The LiDAR scanner in the Pro models improves autofocus 6x in low light conditions

- The TrueDepth camera on the iPhone 12 and 12 mini has a 12MP sensor with an ƒ/2.2 aperture and can take HDR video with Dolby Vision at up to 30 fps while 4K video is available in 24, 30, and 60 fps, 1080p at 30 and 60 fps, and 1080p slow-mo video at 120 fps

- iPhone 12 and 12 mini video specs:

- HDR video with Dolby Vision can be recorded at up to 30 fps

- 4K video can be recorded at 24, 30, and 60 fps

- 1080p HD video can be recorded at 30 and 60 fps

- 720p video can be recorded at 30 fps

- Video supports 2x optical and 5x digital zoom

- Slo-mo video is available at 120 fps or 240 fps

- The iPhone 12 Pro models offer the same video capabilities except HDR video with Dolby Vision is available at up to 60 fps

- With a Pro model iPhone you can take 8MP photos while recording 4K video
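
The optical zoom ranges quoted in the camera list are consistent with multiplying the zoom-in factor by the zoom-out factor. Here is a minimal sketch of that arithmetic, assuming that is how the range is derived; the `optical_zoom_range` helper is hypothetical and exists only for this example.

```python
def optical_zoom_range(zoom_in: float, zoom_out: float) -> float:
    """Total optical zoom range, assumed to be zoom-in times zoom-out."""
    return zoom_in * zoom_out


if __name__ == "__main__":
    # Zoom factors taken from the camera list above.
    print("iPhone 12 Pro:", optical_zoom_range(2, 2))
    print("iPhone 12 Pro Max:", optical_zoom_range(2.5, 2))
```

With the factors from the list, the Pro works out to a 4x range and the Pro Max to a 5x range, matching the figures given.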

You can follow all of our October event coverage through our October 2020 event hub, or subscribe to the dedicated RSS feed.

Support MacStories Directly

Club MacStories offers exclusive access to extra MacStories content, delivered every week; it’s also a way to support us directly.

Club MacStories will help you discover the best apps for your devices and get the most out of your iPhone, iPad, and Mac. Plus, it’s made in Italy.

Join Now

“Who are you talking to, Oliver?”

Oliver is over on the couch, with his laptop on his lap.

I assume he’s surfing the net, or watching YouTube, or doing any of the myriad other things he does online.

Except he’s talking to someone.

“Who are you talking to, Oliver?”

He doesn’t answer.

I stand up, walk over, and take a look at his laptop screen.

He’s on a Zoom call with the Green Party candidate for District 10.

Of course he is.

Apple’s HomePod mini: The MacStories Overview

I have two HomePods: one in our living room and another in my office. They sound terrific, and I’ve grown to depend on the convenience of controlling HomeKit devices, adding groceries to my shopping list, checking the weather, and being able to ask Siri to pick something to play when I can’t think of anything myself. My office isn’t very big, though, and when rumors of a smaller HomePod surfaced, I was curious to see what Apple was planning.

Today, those plans were revealed during the event the company held remotely from the Steve Jobs Theater in Cupertino. Apple introduced the HomePod mini, a diminutive $99 smart speaker that’s just 3.3 inches tall and 3.8 inches wide. In comparison, the original HomePod is 6.8 inches tall and 5.6 inches wide. At just 0.76 pounds, the mini is also considerably lighter than the 5.5-pound original HomePod.

Despite its size, Apple has packed a lot of technology into the HomePod mini. The company used the term computational audio during the keynote to describe the way the HomePod mini’s hardware and software are tuned to work together in a way that Apple said squeezes every ounce of performance possible out of the tiny speaker.

Surprisingly, the brains controlling the whole process is an Apple-designed S5 SoC, the same chip that powers the Apple Watch Series 5. To maximize performance, the mini’s software analyzes your music as it plays, adjusting the dynamic range and loudness as it controls the speaker’s neodymium magnet-powered driver and force-canceling passive radiators.

One difference between the HomePod mini and the original model is that the mini produces 360-degree sound that is directed downward by an acoustic waveguide. The original HomePod uses a beam-forming technology to direct sound instead. Also, the mini includes Apple’s U1 Ultra Wideband chip, and the original model does not, which means the mini will be able to handle proximity-related tasks that the original can’t.

Inside the HomePod mini.

The mini also includes three microphones that listen for Siri commands, plus one inward-facing microphone to cancel out what it is playing, so it doesn’t mask your commands. Like the original HomePod, two minis can be set up in a stereo pair. Also, they work with the Apple TV and synchronize playback with other existing HomePods and HomePod minis throughout your home.

Interestingly, the HomePod mini’s tech specs say that it also includes Thread radios, which are used to network Internet-of-Things devices. The footnotes of the tech specs say that the radios aren’t compatible with non-HomeKit Thread devices, but I wouldn’t be surprised if they could be someday in light of the Connected Home over IP project among Apple, Google, Amazon, and the Zigbee Alliance that was announced late last year.

The HomePod mini includes Siri support.

Along with the HomePod mini’s hardware, Apple highlighted some new and upcoming features, several of them previously announced, that the speaker can take advantage of. As Apple noted at WWDC, Siri is now faster, more accurate, and smarter, having gained 20 times more knowledge compared to three years ago.

Siri is also gaining the ability to respond to ‘What’s my update?’ Siri will respond to the query with a personalized response that includes information like the weather, your commute time, upcoming reminders and events, and the news. The feature is designed to be personalized to each speaker in a household and changes based on the time of day to provide appropriate responses. Siri will pass directions you request to your iPhone, so they’re available in Maps when you leave too.

Music and other audio can be transferred to and from a HomePod mini using Handoff.

Later this year, Apple says it is adding visual, audible, and haptic feedback when you use Handoff to transfer audio between an iPhone and HomePod. Third-party streaming music services like Pandora and Amazon Music are coming too in what Apple described at WWDC as an open program for streaming music services. There was no mention at today’s event regarding whether Spotify plans to join the program.

Home is gaining a new feature called Intercom.

As with the original model, the HomePod mini can also act as a HomeKit hub, allowing you to control individual HomeKit devices or set scenes. The Home app is also gaining an Intercom feature that lets you communicate with other members of your household using HomePods and HomePod minis. The feature also works with the iPhone, iPad, Apple Watch, AirPods, and CarPlay, but not the Mac.

On devices like the iPhone and Watch, users will need to press a play button to hear messages, so that Intercom messages don’t interrupt them when they shouldn’t. On the HomePods, though, the messages will be played automatically. There will also be an accessibility setting that can be turned on to transcribe incoming Intercom messages. Apple noted today that the Home app will gain a new Discover tab that will recommend smart home products to users too.

Of course, the most important question about the HomePod mini that cannot be answered yet is whether it sounds good. That remains to be seen, but I look forward to testing it and reporting back. I like the idea of having music, Siri, and HomeKit device access in more parts of my home, and the price and size of the HomePod mini make it a good candidate for my office and other rooms in our home.

Fines Increased for Dooring Cyclists: Where’s the Drivers’ Education on the “Dutch Reach”?

Everyone knows someone who has been “doored”. That’s the awful mishap that happens when you are riding a bike along a line of parked cars, someone opens a driver’s door, and you and your bike hit it. Many serious injuries and fatalities have resulted from this awful and very avoidable experience. Drivers are simply not trained to look behind before opening the driver’s door when exiting a vehicle.

Last month the Province of British Columbia increased the fine for opening the door of a parked car when it is not safe to do so to $368, more than four times the previous fine of $81. But the second part, teaching drivers a reliable method for checking behind their parked cars before exiting, was not addressed.

Of course the Dutch have already thought about this and have developed the “Dutch Reach”.

This has been used in the Netherlands for five decades and is taught in school, in drivers’ education and by parents. Instead of opening the driver’s door with the near (left) hand, the driver reaches across with the far hand, which forces them to swivel and scan behind for cyclists or traffic approaching. Using the Dutch Reach is standard practice there, and it is being advocated in North American municipalities as well.

The YouTube video below shows how the Dutch Reach is used in the Netherlands. But there, as the driver says in the video, it is not called the “Dutch Reach”. It is called “common sense”.

Why "deep learning" (sort of) works

This short post vividly explains why I think about knowledge the way I do. "I still more or less believed what I'd learned at M.I.T. in the 1970s era of classical AI, which saw pattern recognition as applied logic. The basic idea was to recognize all the relevant traits, and then apply an Aristotelian taxonomy to make the classification. But I'd found, along with everyone else, that this didn't really work, among other things because it's at least as hard to recognize the relevant traits as to recognize the final category." Exactly! That's why I'm critical of taxonomies in education research, critical of things like competency definitions, critical of learning design. We're not teaching people what to know, we're teaching them how to see, which is a very different enterprise.

Out of Season Chairlift Structures

A collection of out of season chairlift structures, by Daniel Bushaway.

Sensitive to Pleasure

“We cannot be more sensitive to pleasure without being more sensitive to pain.”

— Alan Watts

Making a convincing deepfake

For MIT Technology Review, Karen Hao looks into the process artists Francesca Panetta and Halsey Burgund used to produce a deepfake of Richard Nixon reading an alternate history of the moon landing:

This is how Lewis D. Wheeler, a Boston-based white male actor, found himself holed up in a studio for days listening to and repeating snippets of Nixon’s audio. There were hundreds of snippets, each only a few seconds long, “some of which weren’t even complete words,” he says.

The snippets had been taken from various Nixon speeches, much of it from his resignation. Given the grave nature of the moon disaster speech, Respeecher needed training materials that captured the same somber tone.

Wheeler’s job was to re-record each snippet in his own voice, matching the exact rhythm and intonation. These little bits were then fed into Respeecher’s algorithm to map his voice to Nixon’s. “It was pretty exhausting and pretty painstaking,” he says, “but really interesting, too, building it brick by brick.”

Sounds like a lot of work, luckily.

Here’s what to expect from Apple’s October 13th iPhone 12 event

In the wake of Apple’s somewhat disappointing ‘Time Flies’ fall hardware event where the company showed off the iPad (2020), Apple Watch Series 6 and Apple Watch SE, another keynote is set for October 13th at 10am PT/1pm ET.

During the tech giant’s ‘Hi, Speed’ streamed keynote, we’re almost certainly going to catch a glimpse of the iPhone 12 series, with devices like the over-ear AirPods Studio and AirTags also possibly making an appearance.

iPhone 12 and iPhone 12 Pro

Regarding the iPhone 12 Pro and iPhone 12 Pro Max, rumours indicate the phones will feature 6.1-inch and 6.7-inch displays, respectively.

However, the display featured in Apple’s high-end smartphone won’t be capable of the same 120Hz ProMotion technology included in more recent iPad Pro models, according to often-reliable analyst Ming-Chi Kuo. Since most high-end Android devices now feature 120Hz displays, this is definitely a disappointing move by Apple.

The smartphone is expected to feature a triple rear-facing camera array similar to the iPhone 11 Pro’s, including 12-megapixel wide, 12-megapixel ultra-wide and 12-megapixel telephoto lenses. New this year is the same LiDAR sensor that came to the iPad Pro (2020), giving the device better depth tracking functionality that could improve both augmented reality (AR) app capabilities and photography. Since Apple’s event invite featured an AR component, these rumours definitely seem to track.

Both XDA Developers’ Max Weinbach and DigiTimes have also reported Apple is ditching the excellent-looking ‘Midnight Green’ Pro colour in favour of a new ‘Dark Blue’ variant (seen above in a render).