For some reason, many or most of the images in this blog don’t load in some browsers. Same goes for the ProjectVRM blog as well. This is new, and I don’t know exactly why it’s happening.

So far, I gather it happens only when the URL is https and not http.

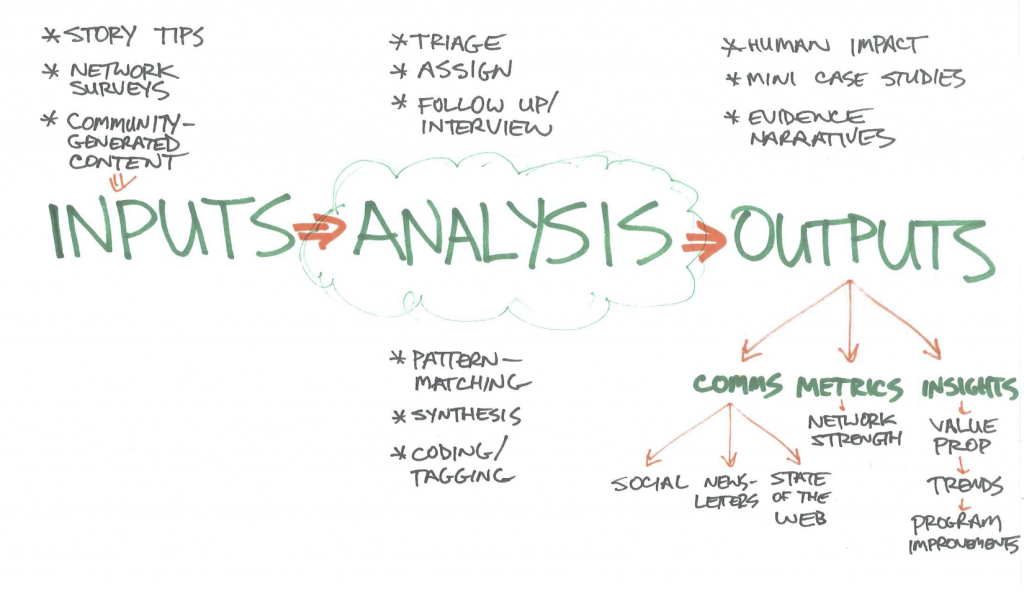

Okay, here’s an experiment. I’ll add an image here in the WordPress (4.4.2) composing window, and center it in the process, all in Visual mode. Here goes:

Now I’ll hit “Publish,” and see what we get.

When the URL starts with https, it fails to show in—

- Firefox (46.0.1)

- Chrome (50.0.2661.102)

- Brave (0.9.6)

But it does show in—

- Opera (12.16)

- Safari (9.1).

Now I’ll go back and edit the HTML for the image in Text mode, taking out class=”aligncenter size-full wp-image-10370” from between the img and src attributes, and bracket the whole image with the <center> and </center> tags. Here goes:

Hmm… The <center> tags don’t work, and I see why when I look at the HTML in Text mode: WordPress removes them. That’s new. Thus another old-school HTML tag gets sidelined.

Okay, I’ll try again to center it, this time by taking out class=”aligncenter size-full wp-image-10370” in Text mode, and clicking on the centering icon in Visual mode. When I check back in Text mode, I see WordPress has put class=”aligncenter” between img and src. I suppose that attribute is understood by WordPress’ (or the theme’s) CSS while the old <center> tags are not. Am I wrong about that?

Now I’ll hit the update button, rendering this—

—and check back with the browsers.

Okay, it works with all of them now, whether the URL starts with https or http.

So the apparent culprit, at least by this experiment, is centering with anything other than class=”aligncenter”, which seems to require inserting a centered image in Visual mode, editing out size-full wp-image-whatever (note: whatever is a number that’s different for every image I put in a post) in Text mode, and then going back and centering it in Visual mode, which puts class=”aligncenter” in place of what I edited out in Text mode. Fun.

Here’s another interesting (and annoying) thing. When I’m editing in the composing window, the url is https. But when I “view post” after publishing or updating, I get the http version of the blog, where I can’t see what doesn’t load in the https version. But when anybody comes to the blog by way of an external link, such as a search engine or Twitter, they see the https version, where the graphics won’t load if I haven’t fixed them manually in the manner described above.

So https is clearly breaking old things, but I’m not sure if it’s https doing it, something in the way WordPress works, or something in the theme I’m using. (In WordPress it’s hard — at least for me — to know where WordPress ends and the theme begins.)

Dave Winer has written about how https breaks old sites, and here we can see it happening on a new one as well. WordPress, or at least the version provided for https://blogs.harvard.edu bloggers, may be buggy, or behind the times with the way it marks up images. But that’s a guess.

I sure hope there is some way to gang-edit all my posts going back to 2007. If not, I’ll just have to hope readers will know to take the s out of https and re-load the page. Which, of course, nearly all of them won’t.

It doesn’t help that all the browser makers now obscure the protocol, so you can’t see whether a site is http or https, unless you copy and paste it. They only show what comes after the // in the URL. This is a very unhelpful dumbing-down “feature.”

Brave is different. The location bar isn’t there unless you mouse over it. Then you see the whole URL, including the protocol to the left of the //. But if you don’t do that, you just see a little padlock (meaning https, I gather), then (with this post) “blogs.harvard.edu | Doc Searls Weblog • Help: why don’t images load in https?” I can see why they do that, but it’s confusing.

By the way, I probably give the impression of being a highly technical guy. I’m not. The only code I know is Morse. The only HTML I know is vintage. I’m lost with <span> and <div> and wp-image-whatever, don’t hack CSS or PHP, and don’t understand why <em> is now preferable to <i> if you want to italicize something. (Fill me in if you like.)

So, technical folks, please tell us wtf is going on here (or give us your best guess), and point to simple and global ways of fixing it.

Thanks.

[Later…]

Some answer links, mostly from the comments below:

That last one, which is cited in two comments, says this:

Chris Cree who experienced the same problem discovered that the WP_SITEURL and WP_HOME constants in the wp-config.php file were configured to structure URLs with http instead of https. Cree suggests users check their settings to see which URL type is configured. If both the WordPress address and Site URLs don’t show https, it’s likely causing issues with responsive images in WordPress 4.4.

Two things here:

- I can’t see where in Settings the URL type is mentioned, much less configurable. But Settings has a load of categories and choices within categories, so I may be missing it.

- I wonder what will happen to old posts I edited to make images responsive. (Some background on that. “Responsive design,” an idea that seems to have started here in 2010, has since led to many permutations of complications in code that’s mostly hidden from people like me, who just want to write something on a blog or a Web page. We all seem to have forgotten that it was us for whom Tim Berners-Lee designed HTML in the first place.) My “responsive” hack went like this: a) I would place the image in Visual mode; b) go into Text mode; and c) carve out the stuff between img and src and add new attributes for width and height. Those would usually be something like width=”50%” and height=”image”. This was an orthodox thing to do in HTML 4.01, but not in HTML 5. Browsers seem tolerant of this approach, so far, at least for pages viewed with the http protocol. I’ve checked old posts that have images marked up that way, and it’s not a problem. Yet. (Newer browser versions may not be so tolerant.) Nearly all images, however, fail to load in Firefox, Chrome and Brave when viewed through https.
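
For anyone who wants to test the http-versus-https guess in the quoted explanation above, here is a minimal Python sketch. It is not anything WordPress provides. It assumes the trouble comes from image URLs that begin with http:// inside a page served over https, which is only one reading of that quote. The names `InsecureImageFinder` and `find_insecure_images`, and the example.com URLs, are made up for illustration.

```python
from html.parser import HTMLParser


class InsecureImageFinder(HTMLParser):
    """Collect image URLs starting with http:// from src and srcset attributes."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        for name, value in attrs:
            if value is None:
                continue
            if name == "src":
                candidates = [value]
            elif name == "srcset":
                # srcset is a comma-separated list of "URL descriptor" pairs;
                # this simple split assumes the URLs themselves contain no commas.
                candidates = [part.split()[0] for part in value.split(",") if part.strip()]
            else:
                continue
            self.insecure.extend(url for url in candidates if url.startswith("http://"))


def find_insecure_images(html):
    """Return every http:// image URL found in the given HTML string."""
    finder = InsecureImageFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical markup, similar in shape to what a post might contain.
sample = (
    '<img class="aligncenter" src="http://example.com/a.png" '
    'srcset="http://example.com/a-300.png 300w, https://example.com/a-600.png 600w">'
)
print(find_insecure_images(sample))
```

If a saved copy of a post's HTML yields a non-empty list here, that would be consistent with the guess, though it would not prove it.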

So the main questions remaining are:

- Is this something I can correct globally with a hack in my own blogs?

- If so, is the hack within the theme, the CSS, the PHP, or what?

- If not, is it something the übergeeks at Harvard blogs can fix?

- If it’s not something they can fix, is my only choice to go back and change every image from the blogs’ beginnings (or just live with the breakage)?

- If that’s required, what’s to keep some new change in HTML 5, or WordPress, or the next “best practice” from breaking everything that came before all over again?
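
On the first of those questions, one possible shape for a gang-edit is sketched below in Python. It is a guess at an approach, not a tested fix and not a WordPress feature. It assumes the posts can be had as HTML strings, that the broken images are the ones whose URLs start with http:// on the blog's own host, and that the same images are also served over https. The function name `upgrade_image_urls` and the host blogs.example.edu are hypothetical.

```python
import re


def upgrade_image_urls(html, host):
    """Rewrite http://host to https://host, but only inside <img> tags."""
    # The lookahead keeps the match from firing on a longer host name
    # that merely begins with the same characters.
    insecure_prefix = re.compile(
        r"http://" + re.escape(host) + r"""(?=[/"'\s>]|$)"""
    )

    def fix_tag(match):
        # Replace every insecure URL for this host within one <img ...> tag,
        # which covers both src and srcset.
        return insecure_prefix.sub("https://" + host, match.group(0))

    return re.sub(r"<img\b[^>]*>", fix_tag, html, flags=re.IGNORECASE)


# Hypothetical post body: the link is left alone, the image URLs are upgraded.
post = (
    '<p><a href="http://blogs.example.edu/x">link</a>'
    '<img class="aligncenter" src="http://blogs.example.edu/files/a.png" '
    'srcset="http://blogs.example.edu/files/a-300.png 300w"></p>'
)
print(upgrade_image_urls(post, "blogs.example.edu"))
```

Limiting the rewrite to img tags and to a single host is deliberate: it leaves ordinary links and images hosted elsewhere untouched, since there is no way to know from here whether those other servers offer https at all.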

Thanks again for all your help, folks. Much appreciated. (And please keep it coming. I’m sure I’m not alone with this problem.)