I’m writing this because, whether you are a project author, a company, or a community, I believe documentation is the most important thing on earth. Yet it is something we often treat as an afterthought, or just outsource to a documentation department and never really invest in.

I’ve been pretty successful because of a focus on documentation and wanted to pass on some tips in hopes others could take advantage.

While I was pretty awesome in English class, I’ve never been a documentation writer by trade. I’ve built software and managed people building software. I want to be building more things, and it is documentation, not technical issues, that keeps me from being better, every single day.

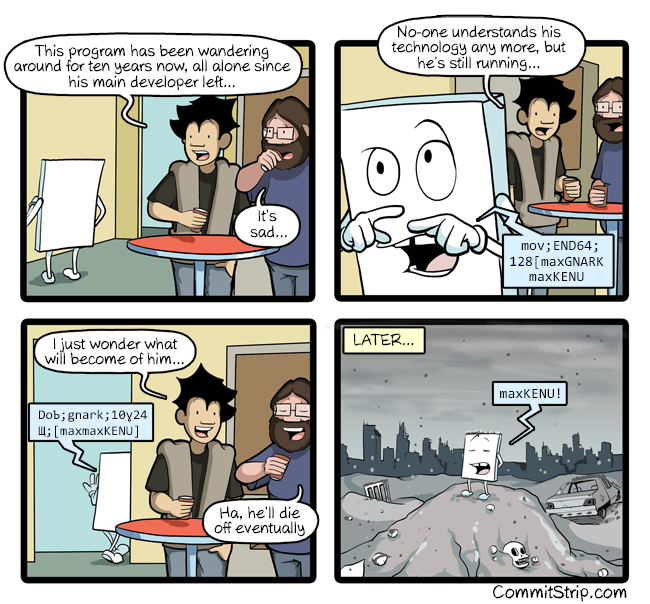

I should be pretty solid as a developer, I’d think, but I struggle with this stuff. I find a lot of tech highly frustrating in this regard. I think I’m good at making basic programs and cutting out complexity, but I’m not so good at staying up to date with the latest things. And I’m really wondering if that is my fault. Shouldn’t I have some awesome video synthesizer project and know 14 languages instead of just cranking out over-glorified shell scripts?

I recently sat in a car dealership deciding whether I should learn Go, Rust, or Elixir — all while waiting for a very slow service appointment. I actually gave up on all three, because various critical questions weren’t well presented, and I couldn’t easily learn what I wanted to learn. (Ok, I lie slightly, I got mad at language design choices in most of them too).

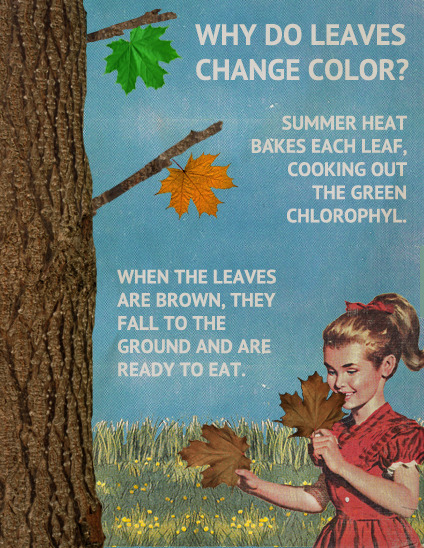

I know people love all of these languages, but they have overcome problems through some enormous slog, and their problems and trials have not been converted into making things easier for future developers. I’m not sure why, but I think it’s mostly because docs are a skill that hasn’t been taught well, and too many developers don’t enjoy writing.

As another example, I really believe computer books shouldn’t have to exist. While many writers do great work, often people are learning something as they are writing the book, so they aren’t really experts. More importantly, things change constantly and books grow out of date. Who is better placed to communicate the vision of a thing than the people who are creating it? I believe the creators should be the ones to do it.

When I was starting my company, I told myself that if it could support a consultancy (something that was needed to explain and install the product and get it going), I had failed, because I wanted to build something that anybody could learn in a short amount of time. This relates to my philosophy on documentation: if a separate book is required to be purchased, I have failed in communicating that vision. (If people would like a book, great, but it shouldn’t be required).

Plus, if you want to get an idea out there, what is the best way to communicate how to use an idea if not free dissemination of collected knowledge?

Imagine that you have an existing project that doesn’t have the uptake that you want. You might think about the splash page, or holding community events. What I’m here to tell you is that your most overlooked secret weapon to being widely loved and celebrated is probably the one you aren’t thinking of: better documentation.

In the rest of this post, I’ll show you a bit about how to make it better. I’m not saying I couldn’t have done better with what I did. I could have. But I did the best with what I had.

The Failure of the .Com Homepage

Today’s main .com webpages frequently make grievous errors. They copy similar often-single-page formats, showing meaningless clip art, and then try to describe a product using puffery and good-sounding adjectives. The result is often that I, as a very seasoned developer and former CTO, can’t tell what your product is. I am exceedingly infuriated at about 80% of the technological marketers out there for being totally incompetent when selling to technical audiences. If you just have a free software project, don’t copy them.

Even if you say your app “schedules workloads” or “manages containers”, that still doesn’t tell me all that much. As the worst example, I once worked at a database company that didn’t use the word “database” on their webpage.

While you can and should fix those things, in the event your company is organized/separated in ways that make this comically not easy, the one thing you do have is the great hammer of documentation. It’s where I go to decide what a product REALLY is, and what their commitment to userland is. Documentation is truth.

If you have a product homepage, show screenshots, code, and maybe a few very very short videos showing the product itself. A meaningful architectural diagram doesn’t hurt either. Then link to the documentation and keep it real.

Learning Styles

In college, I took a version of the intro-to-engineering course called “E497F”, taught by Dr. Porter. It was a pretty excellent class, and he referenced a lot of work from Dr. Richard Felder, also at NC State University.

I’ve read various less-informed Hacker News comments trying to say the whole “Learning Styles” idea is junk, claiming it didn’t hold up to testing, but it seems many of them weren’t talking about Felder’s idea so much, but other papers. I am still not sure those studies even looked at engineering education either.

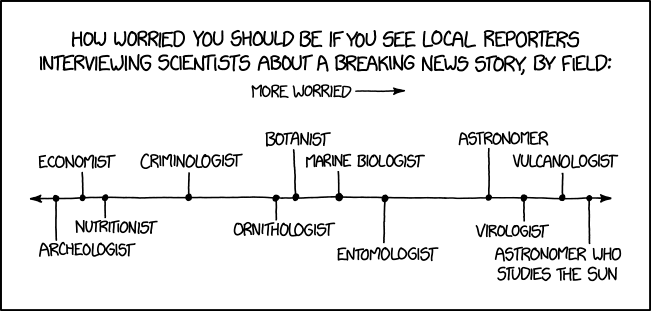

As presented to us, everybody in class took a survey that figured out if they learned more visually, audibly, or in writing — and whether they preferred to learn more sequentially (like a math proof) or more globally (like it doesn’t make any sense until it comes together all at once).

At the time, it was interesting: all of the computer science students in the class, and mostly ONLY them, came out as “global” rather than “sequential” learners.

However, if you think about it, most books and manuals are presented sequentially. They build knowledge on bases of other knowledge. If the learning style theory applies, and for some weird reason even freshman C.S. brains are so different than those of the rest of engineering, this makes it really hard on computer types to learn from the usual books and documentation.

I, but not everyone (and maybe even a minority of everyone, it has been a while), also ended up pretty heavy in the “visual” learning bucket. I pretty much require a whiteboard or pen to understand what you are talking about most of the time and get lost in meandering explanations.

As a result, I tend to ask lots of unrelated questions as I’m learning something, needing to explore weird corners, and I really benefit from seeing the “big picture” before I hear explanations. Seeing facts build up slowly on top of each other, compared to just seeing what I want to do and then breaking it down — tends to be frustrating to me.

I developed the docs for the Ansible project with that in mind: different people learn differently and need information presented in different ways. Thank you to Dr. Porter and Dr. Felder for this, as I think it’s really responsible for the success of my company.

Helping “Global” Learners In Tech Documentation

First off, there should be a clear index sidebar so you can explore rapidly all the things the app can do. Thankfully many people do this.

When in each section, explain why I should care about each section. What do these features do, and when would I use them? What are the benefits? What am I about to learn, and what other sections might I want to read?

When describing each feature, show it in context.

If we take the example of programming libraries, much of the documentation is commonly shown as simple code-generated listings of functions, or it is still very reference oriented.

Reference oriented documentation does not present the “why” you would use something and does not show how other features, functions, or API details would be used surrounding the code in question.

In looking over a very low level audio library recently (I gave up) I found out I had no idea what it was doing, because the results of one function were only meaningful when used with another dozen or so functions.

In this case, showing code snippets and diagrams, showing how the tool X solves a particular use case — and how to accomplish it, is infinitely better for people who want to learn “globally”, if we buy into the learning styles idea.

People who also learn globally will probably want to jump into other related topics, lightly study them, and see what other details they want to explore before going on. To do this, I recommend a “see also” section in almost every docs page that links to other material that would either be remedial or new jumping-off points for future learning and exploration.

Don’t always assume there is one path through your docs. People will skip chapters. Think of it as “Choose Your Own Adventure”.

Make Folks Excited

While one shouldn’t get too wordy or conversational, you want the user to feel like they are learning something powerful and useful. Pass on the reasons why someone is learning something, and tell them what they can do with it. This helps focus attention and prevent skimming over documentation, not knowing what is important.

Avoid Elevations To Rocket Science

Avoid overcomplexity. One of my competitors came from a very academic background, and that showed in the product and documentation. In reality, the problem being solved was a simple problem. Simple explanations win. Make the depth available if people want to read it, but don’t lead with something that makes a tool sound like it is more complicated than it is. You don’t need to make yourself feel smart for building it. You want your users to get a giant ton of work done because you built it.

Re-Read Your Docs

Blind spots occur when you know a product too well. Frequently re-read your project documentation trying to ignore everything you thought you knew. Read it aloud if need be, and think about what parts need more explanation, which parts are not clear, and what parts could be shorter.

All Features Must Be Documented

While it wasn’t 100% successful, I strongly fought to make sure any feature or configuration option added to the program contained documentation approximately at the same time the code was merged in. This couldn’t always be required to be part of a pull request because not everyone is a great writer — and we had a lot of great ESL contributors too! — but making sure every option is well documented avoids worry about hidden documentation.
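One way to keep that rule honest is to let a script do the nagging. Here is a minimal sketch, assuming the project keeps its options and their descriptions in two dictionaries. `OPTIONS`, `OPTION_DOCS`, and `undocumented_options` are hypothetical names made up for illustration, not anything from Ansible.

```python
# Hypothetical registry of configuration options: name -> default value.
OPTIONS = {"forks": 5, "timeout": 10, "retries": 3}

# Hypothetical documentation table: name -> one-line description.
OPTION_DOCS = {
    "forks": "Number of parallel workers to start.",
    "timeout": "Seconds to wait for a connection before giving up.",
}


def undocumented_options(options, docs):
    """Return the sorted names of options that have no non-empty description."""
    return sorted(name for name in options if not docs.get(name, "").strip())


# List whatever still needs a description before the change is merged.
print(undocumented_options(OPTIONS, OPTION_DOCS))
```

Run as part of the test suite, a check like this flags a new option that arrives without a description, and someone other than the original contributor can still write the missing text.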

It’s also important to document implicit behaviors. Sometimes things work a certain way only because nobody ever described how they should work. When this behavior changes, is it a bug, or was it supposed to work that way? Was the old behavior a bug? Who knows! Document how things work, or better yet, write a test.
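To make “write a test” concrete, here is a small sketch. The function `parse_hosts` is a hypothetical example, not taken from any real project. Its docstring states the implicit behaviors, and the tests pin them down so a later change has to be deliberate.

```python
def parse_hosts(text):
    """Split a comma-separated host list.

    Behaviors that would otherwise be implicit: blank entries are dropped,
    surrounding whitespace is stripped, and input order is preserved.
    """
    return [part.strip() for part in text.split(",") if part.strip()]


def test_blank_entries_are_dropped():
    assert parse_hosts("web1,,web2,") == ["web1", "web2"]


def test_whitespace_is_stripped_and_order_kept():
    assert parse_hosts(" b , a ") == ["b", "a"]


# The tests can be collected by a runner such as pytest, or called directly.
test_blank_entries_are_dropped()
test_whitespace_is_stripped_and_order_kept()
```

If someone later decides blank entries should raise an error instead, the failing test forces that conversation to happen in the open.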

The Thirty Minute Rule

I credit most of my awareness of this idea to an IRC conversation with Seth Vidal, who helped me a ton with Ansible.

The idea is that people don’t have a lot of time to try something new out, and they will get frustrated and move on. I’ve usually discussed this from a product perspective, but it’s not just a product thing. My competition at the time could take several days to get remote communications working (for me anyway). By creating a tutorial that allowed the user to accomplish something cool in 30 minutes, you could have someone successful within their lunch hour. This is super key, and I can’t state this enough: your documentation must have people making a lot of successful gains, and saying “wow, that’s great!” as they try stuff out along the way.

By contrast, I’ve spent some time trying to learn various programming languages these days, and have seen some start off with about a two hour dive into memory management. It didn’t keep my interest, and I didn’t have a very good program in 30 minutes, and I moved on.

Show The Whole Picture

I was recently trying to learn a programming language I thought I’d like, but found nothing in the official docs about how to deploy it. There was a github project somewhere, I think, but it was unclear on how to use it. The project was very dependent on interactive shells for all the examples, but I couldn’t tell how I would ever share it with anyone.

Another project I was trying to learn relied on a code generator, which output results into a target directory, and also created various other directories. When the docs introduced the code generator, they didn’t bother to explain any of the directories it created or which files were important. The lack of desire to explain this led me to believe there were many other problems down the road.

Rehash Information

Your audience is coming in at different levels of experience and background. For me, developers may have known a lot about X and systems administrators a lot about Y. Some people would be right out of school and others would have more experience with systems than me. So even in basic instructions, I tried to include a bit more level of background information than I normally would.

Perhaps a silly example is one of TV recaps. When a new episode comes on, it will recap what happened in the previous week so you can remember what happened. If you didn’t remember, it doesn’t take too long, and probably helps your neurons wake up. If you did, it isn’t much of a loss.

I vaguely remember reading Hardy Boys books when I was a kid. How many times did a book remind you who Chet was? Or what kind of car he had? This was so you didn’t have to read every book in sequence.

I think about the giant stacks of knowledge that require understanding these days before I can understand something. It has completely kept me from digging into, say, JavaScript, knowing that before I can know A, I must understand dependencies B and C, which are explained in context of tool D, which assumes you already understood E.

I recall a README for some random Ruby library that said something like “Rainbow is Sprockets for Unicorn and Sunshowers”. I’m not sure if that’s what it said or not, but it was something like that. Now I had to look ALL of those things up, and I’m sure the documentation was self-referential.

I can imagine what that feels like for folks new to programming if it feels so bad to me.

Speak With Confidence

When I was considering learning Rust recently I found a docs webpage where two versions of the documentation were presented on the main web site. Door number 1 was an old version but apparently “explained the esoteric parts” but was probably out of date in a few areas, and version 2 was a rewrite, that said it was missing some sections.

This does not inspire confidence in a learner. Your act must be together. Say your docs are good and know it because your docs are good, and you spend a lot of time on them.

(Rust was worse a year ago, where the documentation was out of date with the current version, and you had to try to figure it out)

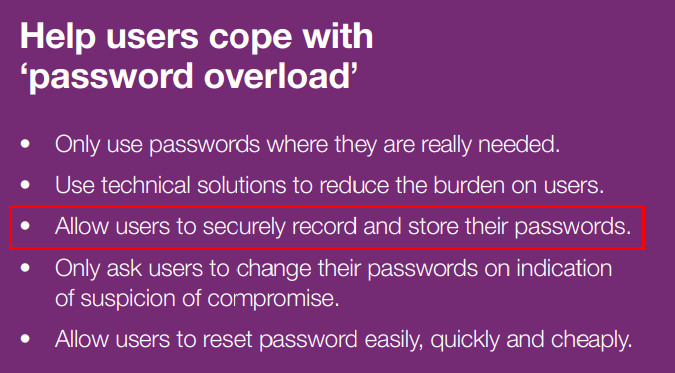

No Skipped Steps

In install instructions, don’t tell someone to “just go install Node”. Any time you ask people to go research how to do something, or link to other install instructions, you’re losing people’s attention and time. Show all the steps.

If you can show all the steps in a bash script you can just execute, all the better.

There is nothing worse than seeing a library that you think might be interesting but not being sure how to install it, and losing an hour in the process.

Also IMHO: please don’t just pipe curl into bash. I care about where things are being installed and would rather just execute the steps.

Generated Docs

For generating documentation, I like something where the documentation can live in source control. This helps with collaboration, but also allows open source teams (where available) to easily make additions. For Ansible, we used Sphinx. Sphinx templates don’t always look the best, but it is easy to use. Start there, or with something similar. I believe Tim Bielawa deserves credit for the very first push to Sphinx. It was huge, so thanks!

I’ve also mentioned it a lot, but J.P. Mens also made a really great addition for us. We had a lot of modules that were submitted by open source contributors, who would frequently not write documentation copy. So what we did was add a way for the documentation stubs for Sphinx to be generated from code. This documentation was more reference-based, so we extended that to include some additional examples, showing the module used in context. That helps users a lot as they can quickly process what parameters it takes rather than trying to consume a huge chunk of text.
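To give a feel for what generating stubs from code can look like, here is a rough sketch. It is not the generator Ansible used. `MODULE_DOC`, its layout, the `copy_file` module, and `render_rst` are all hypothetical names invented for this example. The idea is just that each module carries structured metadata, and a small function turns it into a reStructuredText page that Sphinx can pick up, with an in-context example alongside the parameter reference.

```python
# Hypothetical module metadata. A real project might parse this out of the
# module source instead of declaring it as a dict.
MODULE_DOC = {
    "name": "copy_file",
    "summary": "Copy a file to a destination path.",
    "options": {
        "src": {"required": True, "description": "Path of the file to copy."},
        "dest": {"required": True, "description": "Where to put the copy."},
        "mode": {"required": False, "description": "Permissions for the copy."},
    },
    "examples": ["copy_file src=/etc/motd dest=/tmp/motd mode=0644"],
}


def render_rst(doc):
    """Render one module's metadata as a reStructuredText page."""
    title = doc["name"]
    lines = [title, "=" * len(title), "", doc["summary"], ""]
    lines += ["Options", "-------", ""]
    for name, opt in doc["options"].items():
        flag = "required" if opt["required"] else "optional"
        # Each option becomes a definition-list entry: term, then indented text.
        lines.append(f"``{name}`` ({flag})")
        lines.append(f"    {opt['description']}")
        lines.append("")
    # Examples go in a literal block so they render exactly as typed.
    lines += ["Examples", "--------", "", "::", ""]
    lines += [f"    {example}" for example in doc["examples"]]
    return "\n".join(lines) + "\n"


print(render_rst(MODULE_DOC))
```

Because the page is built from the same data the contributor already had to supply, the reference section stays in step with the code, and the examples section is where the “in context” part lives.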

Again, written for different learning styles.

Consider Keeping Docs Online

As your project grows in popularity, your doc site will grow in traffic. Traffic to the Ansible docs page regularly dwarfed traffic to the main .com webpage. By keeping docs online, we were able to let people know what the current release was, occasionally run a banner about an upcoming event, and also introduce some *minor* advertising for a commercial product. Additionally, strong online docs are things that people can link to on places like Hacker News and Reddit or (more importantly) Stack Overflow.

Commercial advertising in docs need not be heavy handed. Your goal is not to make people think they need some software, but just to make people aware that something exists and let them try it if they think it would be valuable.

If we had focused on locally built docs, it would have been harder to keep people on the current version, we would have lost analytics information (I was mostly just interested in web traffic count and where referrals came from), and we wouldn’t have been able to make people as aware of commercial offerings.

If you are selling physical hardware (like alarm clocks) though, I highly recommend making a PDF manual available. The rules for rapidly changing pure software products are a bit different.

Think About Eliminating Questions

When writing documentation — just like code, one of the more powerful things you can do is figure out how to eliminate a question or problem from being encountered again.

In documentation this is hard, because people skim. But what areas could cause misunderstandings? What areas need to be reiterated throughout the doc?

Does the documentation need to integrate your whole theory-of-the-universe so people don’t use your cleverly designed hammer to paint with?

If you can eliminate a common problem by better structuring an error message to provide advice, do that.
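As a small illustration of an error message that carries its own advice, here is a sketch. `KNOWN_OPTIONS`, `ConfigError`, and `check_option` are hypothetical names for this example. The only library call is `difflib.get_close_matches` from the Python standard library, used to guess what the user meant.

```python
import difflib

KNOWN_OPTIONS = ["forks", "timeout", "retries"]  # hypothetical option names


class ConfigError(ValueError):
    """A configuration error whose message says what to do next."""


def check_option(name, known=KNOWN_OPTIONS):
    """Return name if it is a known option, otherwise raise with advice."""
    if name in known:
        return name
    message = f"Unknown option '{name}'."
    # Suggest the closest known name, if there is a plausible one.
    close = difflib.get_close_matches(name, known, n=1)
    if close:
        message += f" Did you mean '{close[0]}'?"
    message += " Valid options are: " + ", ".join(sorted(known)) + "."
    raise ConfigError(message)


try:
    check_option("timout")
except ConfigError as err:
    print(err)
```

A message like this answers the question the user would otherwise go and ask, which is one less thing the docs have to cover.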

Share Tips

I have always felt that the phrase “Best Practices” is kind of a lie. They are usually pushed by someone who does not know something, and then insists their way is the best.

Or they are arrived at by groupthink, and don’t actually apply in a wide variety of cases.

Still, offering tips and tricks is a solid idea. While you present each concept in learning-based sections, how do you combine ideas and use them in concert?

You can include a Tips section or also just sprinkle them through the document. I preferred to include them all in one place.

You Can’t Please Everyone

Despite what felt like me spending 40% of my time in the first year of the project building what I thought was pretty solid documentation, things are not always so happy on Twitter.

At the same time people would say “you have great docs”, people would say “you have the worst docs”.

I don’t know if this was true — I imagine they were just frustrated with a problem and choosing to blame docs, but it is a clear example of the need to present information for multiple learning styles and backgrounds.

Some folks may file bugs or even fix things via a pull request, but don’t count on it. Having a section on each page to easily submit suggestions for the documentation using some kind of web form is probably a good start.

Final Advice

With a highly technical product that isn’t instantly intuitive, your product will be perceived through its documentation.

I have worked with many tech writers over the years, who have all been great people. At Ansible, during the first year before the company, as well as all the way through my tenure of Ansible 1.9.3, we didn’t have any.

This was because the documentation mattered that incredibly much, and it wasn’t a product where you could just hand that off to anyone without miles of sysadmin experience. I wrote most of them, in a time where my time organizing the project itself was at a massive premium.

The person leading a project must care about its perceived surface area more than anyone else, and to some extent, if you want a happy userland, the documentation is the most important thing you have.

I don’t think a lot of developers DO love to write, but if you look at the more successful projects, they have two things — (1) great API design and modularity, (2) really strong web presence.

A classic example of that for me would be nearly everything coming out of the Rails community. Partially because they all come from .com web space, they nail typefaces and presentation.

Compare that instead with the average nearly-blank README of a GitHub project, and you can see how they gather steam at such increased rates.

I’ve never really explained my views on crafting documentation to anyone in full before. So here they are. Hopefully the ideas above are useful, and will help make some other technical documentation better, and improve the uptake of something you have built.
